windows入门Elasticsearch7.10(es)之3:本地集群搭建

安装里面启动的单机,很简单集群肯定是多个

1:首先复制你下载下来的压缩包:在相同位置解压,多启动几个,

例如下图,原始名就是之前第一次安装的因为本人电脑配置比较差

所以启动三个做一个演示就可以了

2:这里说说修改配置,建议本地演示。首先修改每个config文件夹下面jvm.options改为512M,1g默认是1g

-Xms512m

-Xmx512m3:修改elasticsearch.yml

注意:不一样位置3.1:首先在三个配置文件cluster.name命名一样的

3.2:node.name配置不一样的,

3.3:因为同一服务器端口号使用相同端口,会冲突所以http.port不一样

3.4:这两个参数

node.master: true

# 时候进行数据存贮

node.data: true关系到

gateway.recover_after_nodes: 2

gateway.expected_nodes: 3

node.max_local_storage_nodes: 3这三个参数的设置,如果设置几个为master: true,那么gateway.recover_after_nodes 的值就是几

gateway.expected_nodes是节点数量,目前只有三个所以写3

node.max_local_storage_nodes的值有几个node.data设置为true值就是几

3.5:discovery.seed_hosts写入所有节点访问

3.6:cluster.initial_master_nodes 所有主机节点名字

4:其他根据这个参考自己决定设置配置,直接进入每个目录bin下面运行elasticsearch回车就ok了

# ======================== Elasticsearch Configuration =========================

#

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you set out to tweak and tune the configuration, make sure you

# understand what are you trying to accomplish and the consequences.

#

# The primary way of configuring a node is via this file. This template lists

# the most important settings you may want to configure for a production cluster.

#

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

#

# ---------------------------------- Cluster -----------------------------------

#

# Use a descriptive name for your cluster:

#集群名字 同一个集群的节点要设置在同一个集群名称

cluster.name: csdemo

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

#节点名字 同一集群的节点名称不能相同

node.name: node-2

#

# Add custom attributes to the node:

#指定节点的部落属性,这是一个比集群更大的范围。

#node.attr.rack: r1

#

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

# 数据存放目录

path.data: D:/elasticsearch-7.10.0-windows-x86_64/elasticsearch-7.10.0-1/path/to/data

#

# Path to log files:

#日志存放目录

path.logs: D:/elasticsearch-7.10.0-windows-x86_64/elasticsearch-7.10.0-1/path/to/logs

#

# ----------------------------------- Memory -----------------------------------

#

# Lock the memory on startup:

#锁定物理内存地址,防止elasticsearch内存被交换出去,也就是避免es使用swap交换分区

bootstrap.memory_lock: true

#

# Make sure that the heap size is set to about half the memory available

# on the system and that the owner of the process is allowed to use this

# limit.

#当系统进行内存交换的时候,es的性能很差

# Elasticsearch performs poorly when the system is swapping the memory.

#

# ---------------------------------- Network -----------------------------------

#

# Set the bind address to a specific IP (IPv4 or IPv6):

#设置ip绑定

network.host: 127.0.0.1

#

# Set a custom port for HTTP:

#自定义端口号

http.port: 9202

transport.tcp.port: 9302

# 是否启用TCP保持活动状态,默认为true

network.tcp.keep_alive: true

#是否启用tcp无延迟,true为启用tcp不延迟,默认为false启用tcp延迟

network.tcp.no_delay: true

#设置是否压缩tcp传输时的数据,默认为false,不压缩。

transport.tcp.compress: true

#

# For more information, consult the network module documentation.

#

# --------------------------------- Discovery ----------------------------------

#设置成主服务

node.master: false

# 时候进行数据存贮

node.data: true

#集群节点列表

# Pass an initial list of hosts to perform discovery when this node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

discovery.seed_hosts: ["127.0.0.1:9301", "127.0.0.1:9302", "127.0.0.1:9303"]

#

# Bootstrap the cluster using an initial set of master-eligible nodes:

#j

cluster.initial_master_nodes: ["node-1", "node-2", "node-3"]

#每个节点在选中的主节点的检查之间等待的时间。默认为1秒

cluster.fault_detection.leader_check.interval: 15s

#设置主节点等待每个集群状态完全更新后发布到所有节点的时间,默认为30秒

cluster.publish.timeout: 90s

#集群内同时启动的数据任务个数,默认是2个

#cluster.routing.allocation.cluster_concurrent_rebalance: 2

#

#添加或删除节点及负载均衡时并发恢复的线程个数,默认4个

#cluster.routing.allocation.node_concurrent_recoveries: 4

#

# For more information, consult the discovery and cluster formation module documentation.

# ---------------------------------- Gateway -----------------------------------

#

# Block initial recovery after a full cluster restart until N nodes are started:

#集群中的N个节点启动后,才允许进行数据恢复处理

gateway.recover_after_nodes: 3

gateway.expected_nodes: 3

#配置限制了单节点上可以开启的ES存储实例的个数

node.max_local_storage_nodes: 3

gateway.auto_import_dangling_indices: true

#

# For more information, consult the gateway module documentation.

#

# ---------------------------------- Various -----------------------------------

#

# Require explicit names when deleting indices:

#删除索引必须要索引名称

action.destructive_requires_name: true

#是否允许跨域

http.cors.enabled: true

#允许跨域访问 *代表所有

http.cors.allow-origin: "*"

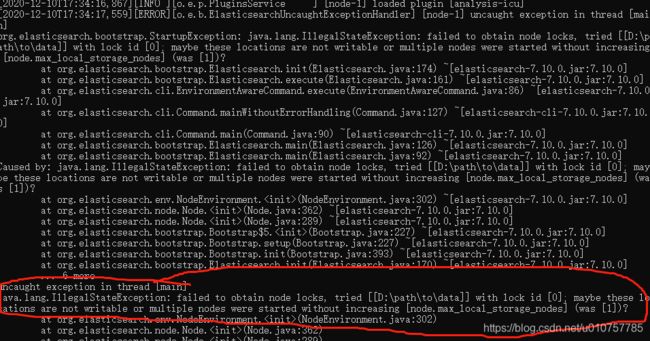

说说中间遇到的错误:

1:就是上面内存问题

2:提示需要设置node.max_local_storage_nodes

这个配置限制了单节点上可以开启的ES存储实例的个数,我们需要开多个实例,因此需要把这个配置写到配置文件中,并为这个配置赋值为2或者更高。

3:子节点没有找到错误,这个错误是因为discovery.seed_hosts: ["127.0.0.1:9301", "127.0.0.1:9302", "127.0.0.1:9303"]我中间的:是中文的换成英文的就好了

4:master not discovered yet也是因为上面tcp没有通导致主机没有可分配的

5:下面这个错误在配置里面加上 gateway.auto_import_dangling_indices: true就是说索引无法自动检测需要手动

[2020-12-11T10:07:25,053][WARN ][o.e.g.DanglingIndicesState] [node-1]

gateway.auto_import_dangling_indices is disabled,

dangling indices will not be automatically detected or imported and must be managed manually最后来几张启动监听图片: