Introduction to the SentenceTransformers Library

Sentence Transformers is a Python framework for computing sentence, text, and image embeddings.

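The framework can compute sentence and text embeddings for more than 100 languages. These embeddings can then be compared, for example with cosine similarity, to find sentences with similar meanings, which is useful for semantic textual similarity, semantic search, and paraphrase mining.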

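The framework is built on PyTorch and Transformers and ships with a large collection of pretrained models for a wide range of tasks. It also makes it easy to fine-tune your own models.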

Sentence Transformers official website

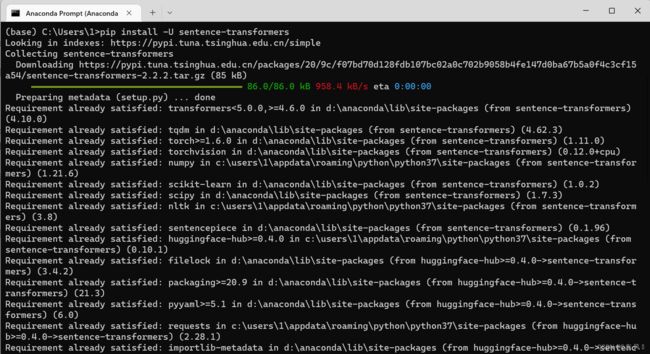

1️⃣ Installation

Install it with pip:

```shell
pip install -U sentence-transformers
```

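2️⃣ Generating text embeddings

In many NLP tasks, text has to be converted into numeric vectors before it can be fed into a model. The simplest approach is one-hot encoding, but one-hot vectors are sparse and high-dimensional and carry no semantic information: any two different words are equally far apart. Embeddings address this by representing text as dense, continuous vectors. The embedding model is first trained on a large corpus, and what it learns is then used to map our own text to semantic vectors in which texts with similar meanings lie close together.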
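To make the contrast concrete, here is a minimal NumPy sketch with a toy three-word vocabulary. The dense vectors are made-up numbers chosen for illustration (a real model learns them from data); the point is that one-hot vectors of different words are always orthogonal, so their cosine similarity is 0 regardless of meaning, while dense vectors can place related words closer together.

```python
import numpy as np

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity: dot product divided by the product of the L2 norms."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

vocab = ["cat", "kitten", "guitar"]

# One-hot encoding: each word gets its own axis (a row of the identity matrix)
one_hot = {word: row for word, row in zip(vocab, np.eye(len(vocab)))}

# Hypothetical dense embeddings (made-up values, not produced by any real model)
dense = {
    "cat":    np.array([0.9, 0.8, 0.1]),
    "kitten": np.array([0.85, 0.75, 0.2]),
    "guitar": np.array([0.1, 0.2, 0.9]),
}

one_hot_cat_kitten = cosine(one_hot["cat"], one_hot["kitten"])
one_hot_cat_guitar = cosine(one_hot["cat"], one_hot["guitar"])
dense_cat_kitten = cosine(dense["cat"], dense["kitten"])
dense_cat_guitar = cosine(dense["cat"], dense["guitar"])

print(f"one-hot: cat/kitten={one_hot_cat_kitten:.2f}, cat/guitar={one_hot_cat_guitar:.2f}")
print(f"dense:   cat/kitten={dense_cat_kitten:.2f}, cat/guitar={dense_cat_guitar:.2f}")
```

With one-hot vectors, "cat" is exactly as unrelated to "kitten" as it is to "guitar"; the dense vectors can express that the first pair is closer.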

The following example shows how to generate text embeddings with Sentence Transformers:

```python
from sentence_transformers import SentenceTransformer

# Load a pretrained model
model = SentenceTransformer('all-MiniLM-L6-v2')

# Input sentences
sentences = ['This framework generates embeddings for each input sentence',
             'Sentences are passed as a list of string.',
             'The quick brown fox jumps over the lazy dog.']

# Compute the embeddings; convert_to_tensor=True returns a single torch.Tensor
# of shape (len(sentences), embedding_dim) instead of a NumPy array
embeddings = model.encode(sentences, show_progress_bar=True, convert_to_tensor=True)

# Print each sentence together with its embedding
for sentence, embedding in zip(sentences, embeddings):
    print("Sentence:", sentence)
    print("Embedding:", embedding)
    print("")
```

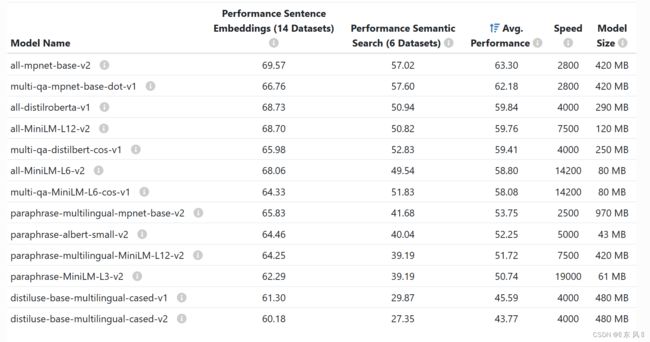

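The first step is to load a pretrained model. The official website lists a set of pretrained models (shown in the figure), and many more can be downloaded from Hugging Face.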

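After loading the model, call its `encode` method to generate an embedding vector for each input text. For `all-MiniLM-L6-v2`, each embedding has 384 dimensions. The output looks like this: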

Batches: 0%| | 0/1 [00:00<?, ?it/s]

Sentence: This framework generates embeddings for each input sentence

Embedding: tensor([-1.3717e-02, -4.2852e-02, -1.5629e-02, 1.4054e-02, 3.9554e-02,

1.2180e-01, 2.9433e-02, -3.1752e-02, 3.5496e-02, -7.9314e-02,

1.7588e-02, -4.0437e-02, 4.9726e-02, 2.5491e-02, -7.1870e-02,

8.1497e-02, 1.4707e-03, 4.7963e-02, -4.5034e-02, -9.9218e-02,

-2.8177e-02, 6.4505e-02, 4.4467e-02, -4.7622e-02, -3.5295e-02,

4.3867e-02, -5.2857e-02, 4.3305e-04, 1.0192e-01, 1.6407e-02,

3.2700e-02, -3.4599e-02, 1.2134e-02, 7.9487e-02, 4.5834e-03,

1.5778e-02, -9.6821e-03, 2.8763e-02, -5.0581e-02, -1.5579e-02,

-2.8791e-02, -9.6228e-03, 3.1556e-02, 2.2735e-02, 8.7145e-02,

-3.8503e-02, -8.8472e-02, -8.7550e-03, -2.1234e-02, 2.0892e-02,

-9.0208e-02, -5.2573e-02, -1.0564e-02, 2.8831e-02, -1.6146e-02,

6.1783e-03, -1.2323e-02, -1.0734e-02, 2.8335e-02, -5.2857e-02,

-3.5862e-02, -5.9799e-02, -1.0906e-02, 2.9157e-02, 7.9798e-02,

-3.2789e-04, 6.8350e-03, 1.3272e-02, -4.2462e-02, 1.8766e-02,

-9.8923e-02, 2.0905e-02, -8.6961e-02, -1.5015e-02, -4.8620e-02,

8.0441e-02, -3.6770e-03, -6.6504e-02, 1.1456e-01, -3.0423e-02,

2.9663e-02, -2.8070e-02, 4.6499e-02, -2.2551e-02, 8.5422e-02,

3.1545e-02, 7.3454e-02, -2.2186e-02, -5.2968e-02, 1.2713e-02,

-5.2734e-02, -1.0619e-01, 7.0473e-02, 2.7674e-02, -8.0553e-02,

2.3965e-02, -2.6512e-02, -2.1733e-02, 4.3528e-02, 4.8471e-02,

-2.3707e-02, 2.8577e-02, 1.1185e-01, -6.3494e-02, -1.5832e-02,

-2.2617e-02, -1.3103e-02, -1.6207e-03, -3.6093e-02, -9.7830e-02,

-4.6773e-02, 1.7627e-02, -3.9749e-02, -1.7641e-04, 3.3963e-02,

-2.0963e-02, 6.3366e-03, -2.5941e-02, 8.1041e-02, 6.1439e-02,

-5.4459e-03, 6.4828e-02, -1.1684e-01, 2.3686e-02, -1.3206e-02,

-1.1248e-01, 1.9005e-02, -1.7466e-34, 5.5895e-02, 1.9424e-02,

4.6544e-02, 5.1865e-02, 3.8939e-02, 3.4054e-02, -4.3211e-02,

7.9064e-02, -9.7953e-02, -1.2744e-02, -2.9187e-02, 1.0205e-02,

1.8812e-02, 1.0894e-01, 6.6347e-02, -5.3529e-02, -3.2923e-02,

4.6983e-02, 2.2888e-02, 2.7411e-02, -2.9198e-02, 3.1271e-02,

-2.2285e-02, -1.0228e-01, -2.7912e-02, 1.1379e-02, 9.0631e-02,

-4.7541e-02, -1.0072e-01, -1.2323e-02, -7.9693e-02, -1.4464e-02,

-7.7640e-02, -7.6692e-03, 9.7395e-03, 2.2420e-02, 7.7727e-02,

-3.1715e-03, 2.1154e-02, -3.3039e-02, 9.5525e-03, -3.7301e-02,

2.6136e-02, -9.7909e-03, -6.3151e-02, 5.7744e-03, -3.8003e-02,

1.2968e-02, -1.8250e-02, -1.5628e-02, -1.2336e-03, 5.5558e-02,

1.1309e-04, -5.6126e-02, 7.4017e-02, 1.8445e-02, -2.6637e-02,

1.3195e-02, 7.5009e-02, -2.4680e-02, -3.2401e-02, -1.5767e-02,

-8.0351e-03, -5.6132e-03, 1.0569e-02, 3.2616e-03, -3.9199e-02,

-9.3868e-02, 1.1423e-01, 6.5730e-02, -4.7263e-02, 1.4509e-02,

-3.5449e-02, -3.3776e-02, -5.1551e-02, -3.8100e-03, -5.1504e-02,

-5.9343e-02, -1.6941e-03, 7.4211e-02, -4.2009e-02, -7.1998e-02,

3.1725e-02, -1.6630e-02, 3.9699e-03, -6.5275e-02, 2.7739e-02,

-7.5165e-02, 2.2746e-02, -3.9137e-02, 1.5432e-02, -5.5491e-02,

1.2332e-02, -2.5952e-02, 6.6642e-02, -6.9126e-34, 3.3163e-02,

8.4793e-02, -6.6558e-02, 3.3354e-02, 4.7161e-03, 1.3536e-02,

-5.3869e-02, 9.2069e-02, -2.9688e-02, 3.1622e-02, -2.3750e-02,

1.9877e-02, 1.0345e-01, -9.0695e-02, 6.3063e-03, 1.4289e-02,

1.1929e-02, 6.4372e-03, 4.2010e-02, 1.2534e-02, 3.9302e-02,

5.3569e-02, -4.3075e-02, 6.1043e-02, -5.4005e-05, 6.9168e-02,

1.0552e-02, 1.2211e-02, -7.2319e-02, 2.5047e-02, -5.1837e-02,

-4.3656e-02, -6.7182e-02, 1.3483e-02, -7.2589e-02, 7.0416e-03,

6.5894e-02, 1.0899e-02, -2.6001e-03, 5.4997e-02, 5.0697e-02,

3.2795e-02, -6.6883e-02, 6.4556e-02, -2.5208e-02, -2.9257e-02,

-1.1670e-01, 3.2406e-02, 5.8586e-02, -3.5176e-02, -7.1524e-02,

2.2494e-02, -1.0079e-01, -4.7455e-02, -7.6196e-02, -5.8717e-02,

4.2114e-02, -7.4721e-02, 1.9847e-02, -3.3650e-03, -5.2974e-02,

2.7473e-02, 3.4574e-02, -6.1185e-02, 1.0636e-01, -9.6412e-02,

-4.5595e-02, 1.5149e-02, -5.1353e-03, -6.6445e-02, 4.3172e-02,

-1.1041e-02, -9.8025e-03, 7.5378e-02, -1.4957e-02, -4.8021e-02,

5.8073e-02, -2.4390e-02, -2.2314e-02, -4.3699e-02, 5.1205e-02,

-3.2863e-02, 1.0876e-01, 6.0893e-02, 3.3079e-03, 5.5382e-02,

8.4320e-02, 1.2709e-02, 3.8447e-02, 6.5233e-02, -2.9468e-02,

5.0801e-02, -2.0935e-02, 1.4614e-01, 2.2556e-02, -1.7723e-08,

-5.0267e-02, -2.7921e-04, -1.0033e-01, 2.4281e-02, -7.5404e-02,

-3.7914e-02, 3.9605e-02, 3.1008e-02, -9.0570e-03, -6.5041e-02,

4.0545e-02, 4.8339e-02, -4.5696e-02, 4.7601e-03, 2.6436e-03,

9.3561e-02, -4.0260e-02, 3.2740e-02, 1.1830e-02, 5.5434e-02,

1.4805e-01, 7.2119e-02, 2.7698e-04, 1.6865e-02, 8.3488e-03,

-8.7616e-03, -1.3365e-02, 6.1424e-02, 1.5717e-02, 6.9496e-02,

1.0862e-02, 6.0802e-02, -5.3342e-02, -3.4792e-02, -3.3627e-02,

6.9391e-02, 1.2299e-02, -1.4524e-01, -2.0697e-03, -4.6113e-02,

3.7275e-03, -5.5936e-03, -1.0066e-01, -4.4595e-02, 5.4092e-02,

4.9889e-03, 1.4953e-02, -8.2606e-02, 6.2663e-02, -5.0191e-03,

-4.8186e-02, -3.5399e-02, 9.0339e-03, -2.4234e-02, 5.6627e-02,

2.5153e-02, -1.7071e-02, -1.2478e-02, 3.1952e-02, 1.3842e-02,

-1.5582e-02, 1.0018e-01, 1.2366e-01, -4.2297e-02])

Sentence: Sentences are passed as a list of string.

Embedding: tensor([ 5.6452e-02, 5.5002e-02, 3.1380e-02, 3.3949e-02, -3.5425e-02,

8.3467e-02, 9.8880e-02, 7.2755e-03, -6.6866e-03, -7.6581e-03,

7.9374e-02, 7.3970e-04, 1.4929e-02, -1.5105e-02, 3.6767e-02,

4.7874e-02, -4.8197e-02, -3.7605e-02, -4.6028e-02, -8.8982e-02,

1.2023e-01, 1.3066e-01, -3.7394e-02, 2.4786e-03, 2.5582e-03,

7.2581e-02, -6.8044e-02, -5.2470e-02, 4.9023e-02, 2.9956e-02,

-5.8443e-02, -2.0226e-02, 2.0882e-02, 9.7669e-02, 3.5239e-02,

3.9114e-02, 1.0567e-02, 1.5623e-03, -1.3082e-02, 8.5290e-03,

-4.8410e-03, -2.0377e-02, -2.7180e-02, 2.8331e-02, 3.6602e-02,

2.5128e-02, -9.9086e-02, 1.1563e-02, -3.6038e-02, -7.2378e-02,

-1.1267e-01, 1.1294e-02, -3.8640e-02, 4.6739e-02, -2.8846e-02,

2.2670e-02, -8.5241e-03, 3.3281e-02, -1.0658e-03, -7.0975e-02,

-6.3117e-02, -5.7219e-02, -6.1603e-02, 5.4715e-02, 1.1832e-02,

-4.6626e-02, 2.5696e-02, -7.0741e-03, -5.7384e-02, 4.1284e-02,

-5.9150e-02, 5.8902e-02, -4.4170e-02, 4.6508e-02, -3.1581e-02,

5.5831e-02, 5.5458e-02, -5.9653e-02, 4.0641e-02, 4.8376e-03,

-4.9677e-02, -1.0094e-01, 3.4008e-02, 4.1327e-03, -2.9353e-03,

2.1184e-02, -3.7396e-02, -2.7907e-02, -4.6177e-02, 5.2614e-02,

-2.7974e-02, -1.6238e-01, 6.6104e-02, 1.7227e-02, -5.4511e-03,

4.7447e-02, -3.8224e-02, -3.9690e-02, 1.3454e-02, 4.4965e-02,

4.5367e-03, 2.8298e-02, 8.3663e-02, -1.0086e-02, -1.1935e-01,

-3.8462e-02, 4.8286e-02, -9.4608e-02, 1.9185e-02, -9.9652e-02,

-6.3060e-02, 3.0270e-02, 1.1740e-02, -4.7837e-02, -6.2026e-03,

-3.3285e-02, -4.0439e-03, 1.2831e-02, 4.0525e-02, 7.5648e-02,

2.9243e-02, 2.8427e-02, -2.7894e-02, 1.6686e-02, -2.4796e-02,

-6.8365e-02, 2.8997e-02, -5.3987e-33, -2.6901e-03, -2.6507e-02,

-6.4792e-04, -8.4619e-03, -7.3515e-02, 4.9408e-03, -5.9784e-02,

1.0344e-02, 2.1290e-03, -2.8822e-03, -3.1708e-02, -9.4236e-02,

3.0302e-02, 7.0023e-02, 4.5069e-02, 3.6944e-02, 1.1359e-02,

3.5303e-02, 5.5045e-03, 1.3442e-03, 3.4612e-03, 7.7505e-02,

5.4511e-02, -7.9206e-02, -9.3170e-02, -4.0340e-02, 3.1067e-02,

-3.8308e-02, -5.8944e-02, 1.9333e-02, -2.6716e-02, -7.9194e-02,

1.0416e-04, 7.7062e-02, 4.1660e-02, 8.9093e-02, 3.5684e-02,

-1.0915e-02, 3.7150e-02, -2.0707e-02, -2.4610e-02, -2.0503e-02,

2.6220e-02, 3.4359e-02, 4.3925e-02, -8.2052e-03, -8.4071e-02,

4.2417e-02, 4.8750e-02, 5.9539e-02, 2.8775e-02, 3.3764e-02,

-4.0744e-02, -1.6637e-03, 7.9193e-02, 3.4109e-02, -5.7284e-04,

1.8775e-02, -1.3696e-02, 7.3833e-02, 5.7451e-04, 8.3351e-02,

5.6081e-02, -1.1371e-02, 4.4261e-02, 2.6958e-02, -4.8054e-02,

-3.1509e-02, 7.7523e-02, 1.8177e-02, -8.8301e-02, -7.8552e-03,

-6.2224e-02, 7.1937e-02, -2.3348e-02, 6.5248e-03, -9.4953e-03,

-9.8831e-02, 4.0131e-02, 3.0740e-02, -2.2161e-02, -9.4591e-02,

1.0237e-02, 1.0219e-01, -4.1296e-02, -3.1578e-02, 4.7475e-02,

-1.1021e-01, 1.6961e-02, -3.7171e-02, -1.0326e-02, -4.7254e-02,

-1.2021e-02, -1.9326e-02, 5.7929e-02, 4.2387e-34, 3.9201e-02,

8.4136e-02, -1.0295e-01, 6.9226e-02, 1.6882e-02, -3.2676e-02,

9.6596e-03, 1.8090e-02, 2.1794e-02, 1.6319e-02, -9.6929e-02,

3.7485e-03, -2.3846e-02, -3.4406e-02, 7.1196e-02, 9.2190e-04,

-6.2385e-03, 3.2375e-02, -8.9037e-04, 5.0191e-03, -4.2454e-02,

9.8908e-02, -4.6032e-02, 4.6971e-02, -1.7528e-02, -7.0252e-03,

1.3274e-02, -5.3015e-02, 2.6641e-03, 1.4582e-02, 7.4335e-03,

-3.0713e-02, -2.0942e-02, 8.2411e-02, -5.1589e-02, -2.7118e-02,

1.1758e-01, 7.7250e-03, -1.8952e-02, 3.9456e-02, 7.1736e-02,

2.5912e-02, 2.7519e-02, 9.5054e-03, -3.0236e-02, -4.0794e-02,

-1.0403e-01, -7.9742e-03, -3.6446e-03, 3.2972e-02, -2.3595e-02,

-7.5052e-03, -5.8223e-02, -3.1791e-02, -4.1805e-02, 2.1745e-02,

-6.6729e-02, -4.8910e-02, 4.5851e-03, -2.6605e-02, -1.1260e-01,

5.1117e-02, 5.4853e-02, -6.6986e-02, 1.2677e-01, -8.5949e-02,

-5.9423e-02, -2.9219e-03, -1.1488e-02, -1.2603e-01, -3.4828e-03,

-9.1200e-02, -1.2293e-01, 1.3378e-02, -4.7577e-02, -6.5793e-02,

-3.3941e-02, -3.0711e-02, -5.2203e-02, -2.3546e-02, 5.9004e-02,

-3.8576e-02, 3.1970e-02, 4.0512e-02, 1.6708e-02, -3.5828e-02,

1.4569e-02, 3.2014e-02, -1.3484e-02, 6.0782e-02, -8.3140e-03,

-1.0811e-02, 4.6941e-02, 7.6613e-02, -4.2340e-02, -2.1196e-08,

-7.2529e-02, -4.2023e-02, -6.1237e-02, 5.2467e-02, -1.4236e-02,

1.1849e-02, -1.4079e-02, -3.6753e-02, -4.4498e-02, -1.1514e-02,

5.2332e-02, 2.9665e-02, -4.6278e-02, -3.7089e-02, 1.8913e-02,

2.0431e-02, -2.2401e-02, -1.4856e-02, -1.7950e-02, 4.2001e-02,

1.4094e-02, -2.8349e-02, -1.1686e-01, 1.4896e-02, -7.3060e-04,

5.6603e-02, -2.6874e-02, 1.0911e-01, 2.9456e-03, 1.1927e-01,

1.1421e-01, 8.9297e-02, -1.7026e-02, -4.9905e-02, -2.1193e-02,

3.1842e-02, 7.0344e-02, -1.0293e-01, 8.2382e-02, 2.8197e-02,

3.2115e-02, 3.7911e-02, -1.0955e-01, 8.1962e-02, 8.7322e-02,

-5.7356e-02, -2.0171e-02, -5.6944e-02, -1.3034e-02, -5.5568e-02,

-1.3297e-02, 8.6401e-03, 5.3001e-02, -4.0685e-02, 2.7171e-02,

-2.5595e-03, 3.0578e-02, -4.6187e-02, 4.6803e-03, -3.6495e-02,

6.8080e-02, 6.6509e-02, 8.4915e-02, -3.3285e-02])

Sentence: The quick brown fox jumps over the lazy dog.

Embedding: tensor([ 4.3934e-02, 5.8934e-02, 4.8178e-02, 7.7548e-02, 2.6744e-02,

-3.7630e-02, -2.6051e-03, -5.9943e-02, -2.4960e-03, 2.2073e-02,

4.8026e-02, 5.5755e-02, -3.8945e-02, -2.6617e-02, 7.6934e-03,

-2.6238e-02, -3.6416e-02, -3.7816e-02, 7.4078e-02, -4.9505e-02,

-5.8522e-02, -6.3620e-02, 3.2435e-02, 2.2009e-02, -7.1064e-02,

-3.3158e-02, -6.9410e-02, -5.0037e-02, 7.4627e-02, -1.1113e-01,

-1.2306e-02, 3.7746e-02, -2.8031e-02, 1.4535e-02, -3.1559e-02,

-8.0584e-02, 5.8353e-02, 2.5901e-03, 3.9280e-02, 2.5770e-02,

4.9851e-02, -1.7563e-03, -4.5530e-02, 2.9261e-02, -1.0202e-01,

5.2229e-02, -7.9090e-02, -1.0286e-02, 9.2025e-03, 1.3073e-02,

-4.0478e-02, -2.7793e-02, 1.2467e-02, 6.7283e-02, 6.8125e-02,

-7.5712e-03, -6.0994e-03, -4.2378e-02, 5.1782e-02, -1.5671e-02,

9.5636e-03, 4.1239e-02, 2.1496e-02, 1.0429e-02, 2.7335e-02,

1.8706e-02, -2.6961e-02, -7.0054e-02, -1.0470e-01, -1.8988e-03,

1.7702e-02, -5.7473e-02, -1.4422e-02, 4.7049e-04, 2.3323e-03,

-2.5192e-02, 4.9300e-02, -5.0961e-02, 6.3198e-02, 1.4917e-02,

-2.7077e-02, -4.5288e-02, -4.9059e-02, 3.7494e-02, 3.8458e-02,

1.5690e-03, 3.0992e-02, 2.0163e-02, -1.2436e-02, -3.0672e-02,

-2.7882e-02, -6.8918e-02, -5.1368e-02, 2.1480e-02, 1.1575e-02,

1.2541e-03, 1.8877e-02, -4.4232e-02, -4.4982e-02, -3.4187e-03,

1.3113e-02, 2.0010e-02, 1.2110e-01, 2.3107e-02, -2.2016e-02,

-3.2885e-02, -3.1552e-03, 1.1785e-04, 9.9150e-02, 1.6524e-02,

-4.6967e-03, -1.4537e-02, -3.7108e-03, 9.6514e-02, 2.8591e-02,

2.1348e-02, -7.1764e-02, -2.4114e-02, -4.4094e-02, -1.0735e-01,

6.7995e-02, 1.3047e-01, -7.9703e-02, 6.7951e-03, -2.3751e-02,

-4.6164e-02, -2.9965e-02, -3.6941e-33, 7.3097e-02, -2.2017e-02,

-8.6146e-02, -7.1438e-02, -6.3674e-02, -7.2186e-02, -5.9304e-03,

-2.3364e-02, -2.8366e-02, 4.7743e-02, -8.0618e-02, -1.5648e-03,

1.3844e-02, -2.8624e-02, -3.3539e-02, -1.1378e-01, -9.1763e-03,

-1.0810e-02, 3.2320e-02, 5.8838e-02, 3.3421e-02, 1.0799e-01,

-3.7271e-02, -2.9677e-02, 5.1719e-02, -2.2534e-02, -6.9609e-02,

-2.1448e-02, -2.3341e-02, 4.8220e-02, -3.5877e-02, -4.6899e-02,

-3.9787e-02, 1.1081e-01, -1.4301e-02, -1.1846e-01, 5.8292e-02,

-6.2589e-02, -2.9404e-02, 6.0324e-02, -2.4441e-03, 1.6012e-02,

2.6723e-02, 2.4953e-02, -6.4932e-02, -1.0680e-02, 2.8147e-02,

1.0356e-02, -6.6362e-04, 1.9819e-02, -3.0429e-02, 6.2842e-03,

5.1527e-02, -4.7538e-02, -6.4442e-02, 9.5503e-02, 7.5586e-02,

-2.8157e-02, -3.4997e-02, 1.0182e-01, 1.9873e-02, -3.6804e-02,

2.9352e-03, -5.0074e-02, 1.5093e-01, -6.1608e-02, -8.5881e-02,

7.1399e-03, -1.3307e-02, 7.8040e-02, 1.7525e-02, 4.2128e-02,

3.5794e-02, -1.3295e-01, 3.5697e-02, -2.0312e-02, 1.2491e-02,

-3.8036e-02, 4.9154e-02, -1.5654e-02, 1.2142e-01, -8.0864e-02,

-4.6878e-02, 4.1084e-02, -1.8432e-02, 6.6969e-02, 4.3360e-03,

2.2732e-02, -1.3643e-02, -4.5324e-02, -3.9283e-02, -6.2989e-03,

5.2961e-02, -3.6906e-02, 7.1168e-02, 2.3334e-33, 1.0523e-01,

-4.8187e-02, 6.9592e-02, 6.5698e-02, -4.6515e-02, 5.1449e-02,

-1.2447e-02, 3.2087e-02, -9.2336e-02, 5.0093e-02, -3.2888e-02,

1.3914e-02, -8.7021e-04, -4.9091e-03, 1.0395e-01, 3.2159e-04,

5.2811e-02, -1.1799e-02, 2.3157e-02, 1.3177e-02, -5.2596e-02,

3.2670e-02, 3.0866e-04, 6.4113e-02, 3.8850e-02, 5.8801e-02,

8.2979e-02, -1.8815e-02, -2.2638e-02, -1.0047e-01, -3.8375e-02,

-5.8808e-02, 1.8242e-03, -4.2700e-02, 2.5020e-02, 6.4006e-02,

-3.7748e-02, -6.8390e-03, -2.5461e-03, -9.7604e-02, 1.8848e-02,

-8.8318e-04, 1.7361e-02, 7.1079e-02, 3.3039e-02, 6.9342e-03,

-5.6052e-02, 5.1463e-02, -4.2954e-02, 4.6008e-02, -8.7883e-03,

3.1729e-02, 4.9397e-02, 2.9519e-02, -5.0519e-02, -5.4319e-02,

1.4996e-04, -2.7661e-02, 3.4688e-02, -2.1089e-02, 1.3806e-02,

2.9989e-02, 1.3974e-02, -4.2647e-03, -1.5034e-02, -8.7610e-02,

-6.8505e-02, -4.2814e-02, 7.7695e-02, -7.1029e-02, -7.3769e-03,

2.1373e-02, 1.3556e-02, -7.9046e-02, 5.4767e-03, 8.3066e-02,

1.1415e-01, 1.8076e-03, 8.7549e-02, -4.1605e-02, 1.5542e-02,

-1.0121e-02, -7.3244e-03, 1.0797e-02, -6.6282e-02, 3.9841e-02,

-1.1671e-01, 6.4299e-02, 4.0292e-02, -6.5474e-02, 1.9505e-02,

8.1000e-02, 5.3646e-02, 7.6797e-02, -1.3485e-02, -1.7692e-08,

-4.4393e-02, 9.2064e-03, -8.7959e-02, 4.2692e-02, 7.3137e-02,

1.6843e-02, -4.0326e-02, 1.8513e-02, 8.4417e-02, -3.7448e-02,

3.0300e-02, 2.9064e-02, 6.3688e-02, 2.8975e-02, -1.4727e-02,

1.7754e-02, -3.3690e-02, 1.7316e-02, 3.3788e-02, 1.7683e-01,

-1.7553e-02, -6.0308e-02, -1.4339e-02, -2.3854e-02, -4.4553e-02,

-2.8985e-02, -8.9678e-02, -1.7594e-03, -2.6149e-02, 5.9400e-03,

-5.1836e-02, 8.5728e-02, -8.1840e-02, 8.3544e-03, 4.0079e-02,

4.1776e-02, 1.0457e-01, -2.8656e-03, 1.9669e-02, 5.8105e-03,

1.3325e-02, 4.5100e-02, -2.1759e-02, -1.3949e-02, -6.8699e-02,

-2.9411e-03, -3.1077e-02, -1.0585e-01, 6.9162e-02, -4.2411e-02,

-4.6768e-02, -3.6475e-02, 4.5040e-02, 6.0982e-02, -6.5656e-02,

-5.4564e-03, -1.8623e-02, -6.3148e-02, -3.8744e-02, 3.4673e-02,

5.5546e-02, 5.2163e-02, 5.6107e-02, 1.0206e-01])
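Why is each embedding a single fixed-length vector even though the sentences contain different numbers of tokens? According to its model card, `all-MiniLM-L6-v2` averages the Transformer's token vectors (mean pooling, skipping padding positions) and then L2-normalizes the result. The NumPy sketch below illustrates only those two steps; the token vectors are random stand-ins rather than real model outputs, and `mean_pool`, `l2_normalize`, and the attention mask are hypothetical names and values chosen for illustration.

```python
import numpy as np

def mean_pool(token_embeddings: np.ndarray, attention_mask: np.ndarray) -> np.ndarray:
    """Average token vectors per sentence, ignoring padded positions (mask == 0).

    token_embeddings: (batch, seq_len, dim); attention_mask: (batch, seq_len)
    """
    mask = attention_mask[:, :, None].astype(token_embeddings.dtype)  # (batch, seq_len, 1)
    summed = (token_embeddings * mask).sum(axis=1)                    # (batch, dim)
    counts = np.clip(mask.sum(axis=1), 1e-9, None)                    # (batch, 1), avoids division by zero
    return summed / counts

def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)

rng = np.random.default_rng(0)
# Stand-in for the Transformer output: 2 sentences, up to 6 tokens, 384 dimensions
token_embeddings = rng.normal(size=(2, 6, 384))
# Hypothetical mask: the second sentence has only 4 real tokens, the rest is padding
attention_mask = np.array([[1, 1, 1, 1, 1, 1],
                           [1, 1, 1, 1, 0, 0]])

sentence_embeddings = l2_normalize(mean_pool(token_embeddings, attention_mask))
print(sentence_embeddings.shape)  # (2, 384): one 384-dimensional vector per sentence
```

Because every resulting vector has unit length, the dot product of two such vectors equals their cosine similarity.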

3️⃣ Computing semantic similarity

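A common NLP task is measuring how similar two texts are. Since each text is represented by an embedding vector that already captures its semantic information, we can compute the similarity between texts directly from their vectors, typically with cosine similarity.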
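Cosine similarity is the dot product of two vectors divided by the product of their lengths, cos(a, b) = a·b / (‖a‖ ‖b‖); it ranges from -1 to 1, and higher means more similar. The NumPy sketch below computes a full pairwise matrix by normalizing each row and taking a matrix product, which, as far as we know, is the same computation `util.cos_sim` performs on tensors. The helper name `cos_sim_matrix` and the toy 3-dimensional vectors are made up for illustration.

```python
import numpy as np

def cos_sim_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity: entry [i, j] compares row i of a with row j of b."""
    a_norm = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_norm = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a_norm @ b_norm.T

# Made-up 3-dimensional "embeddings" for three texts
vectors = np.array([[1.0, 0.0, 0.0],
                    [1.0, 1.0, 0.0],
                    [-1.0, 0.0, 0.0]])

scores = cos_sim_matrix(vectors, vectors)
print(np.round(scores, 4))
```

The diagonal is always 1 (each text compared with itself), the matrix is symmetric, and the first and third vectors point in opposite directions, giving -1.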

Example code:

```python
from sentence_transformers import SentenceTransformer, util

model = SentenceTransformer('all-MiniLM-L6-v2')

# List of sentences
sentences = ['The cat sits outside',
             'A man is playing guitar',
             'I love pasta',
             'The new movie is awesome',
             'The cat plays in the garden']

# Compute the embeddings
embeddings = model.encode(sentences, convert_to_tensor=True)

# Compute the pairwise cosine similarity matrix, shape (5, 5)
cosine_scores = util.cos_sim(embeddings, embeddings)

# Collect every unique pair (i, j) with i < j together with its score
pairs = []
for i in range(len(cosine_scores) - 1):
    for j in range(i + 1, len(cosine_scores)):
        pairs.append({'index': [i, j], 'score': cosine_scores[i][j].item()})

# Sort the pairs by similarity score in descending order and print them
pairs = sorted(pairs, key=lambda x: x['score'], reverse=True)
for pair in pairs:
    i, j = pair['index']
    print("{:<30} \t\t {:<30} \t\t Score: {:.4f}".format(sentences[i], sentences[j], pair['score']))
```

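The code first embeds all the sentences, then uses `util.cos_sim` to compute the cosine similarity between every pair of texts, stores each unique pair together with its score, and sorts the pairs from most to least similar. The output is: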

```text
The cat sits outside        The cat plays in the garden   Score: 0.6788
I love pasta                The new movie is awesome      Score: 0.2440
A man is playing guitar     The cat plays in the garden   Score: 0.2105
The cat sits outside        A man is playing guitar       Score: 0.0363
The new movie is awesome    The cat plays in the garden   Score: 0.0275
I love pasta                The cat plays in the garden   Score: 0.0230
A man is playing guitar     The new movie is awesome      Score: 0.0093
The cat sits outside        I love pasta                  Score: 0.0081
The cat sits outside        The new movie is awesome      Score: -0.0247
A man is playing guitar     I love pasta                  Score: -0.0368
```
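The nested loops in the example visit each unique pair (i, j) with i < j, which is the upper triangle of the similarity matrix without its diagonal. As an alternative sketch, NumPy's `np.triu_indices` with `k=1` yields exactly those index pairs and `np.argsort` ranks them. The helper `rank_pairs` and the small score matrix below are made up for illustration; with the real model you would first convert the tensor, e.g. `cosine_scores.cpu().numpy()`.

```python
import numpy as np

def rank_pairs(scores: np.ndarray) -> list[tuple[int, int, float]]:
    """Return (i, j, score) for every pair i < j, sorted from most to least similar."""
    rows, cols = np.triu_indices(scores.shape[0], k=1)  # upper triangle, diagonal excluded
    pair_scores = scores[rows, cols]
    order = np.argsort(-pair_scores, kind="stable")     # descending by score
    return [(int(rows[k]), int(cols[k]), float(pair_scores[k])) for k in order]

# Made-up symmetric similarity matrix for three texts
scores = np.array([[1.0, 0.2, 0.7],
                   [0.2, 1.0, -0.1],
                   [0.7, -0.1, 1.0]])

for i, j, s in rank_pairs(scores):
    print(f"{i} {j} Score: {s:.4f}")
```

For longer sentence lists, the library also offers `util.paraphrase_mining(model, sentences)`, which, per its documentation, returns `[score, i, j]` entries sorted by decreasing score.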