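Android C/C++ Memory Leak Analysis: unreachable

Background

As our work on client stability has deepened and the key, hard problems have been brought under control one by one, memory quality has become one of the most prominent issues affecting client quality. Taobao therefore launched a systematic memory-governance effort and set up a dedicated memory project.

As the saying goes, "to do a good job, you must first sharpen your tools." This article covers one of the memory project's tools: the memory leak analyzer memunreachable.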

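Memory leaks

A memory leak is heap memory that a program has dynamically allocated but, for whatever reason, has not freed or can no longer free. Leaks waste system memory and can slow the program down or even crash the system.

In C/C++, pointers make it very hard to determine precisely which objects are no longer referenced, and leaks have long been one of the key, hard problems of C/C++ development. Some tools find leaks with an algorithm similar to a garbage collector's mark-sweep; common examples are libmemunreachable, kmemleak, and LLVM LeakSanitizer. Tools of this kind also need to record allocation information.

Android's libmemunreachable is a zero-overhead native memory leak detector. It makes an imprecise mark-and-sweep garbage collector pass over all native memory and reports any unreachable block as a leak. See the libmemunreachable documentation[1] for usage. Android ships libmemunreachable, an excellent open-source C/C++ leak detection tool built into the system, to help locate memory leaks. However, it currently depends on an offline debug configuration and cannot support Taobao's release builds.

Drawing on the libmemunreachable source code, this article walks through how libmemunreachable works and how Taobao modified it to support release builds, which helps Taobao locate and troubleshoot memory leaks in production.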

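libmemunreachable analysis

Basic principle

In Java's GC algorithms, an object that is no longer held directly or indirectly by a GC root is reclaimed when the GC triggers a collection at an appropriate time. The following can serve as GC roots:

- objects referenced from the virtual machine stack (the local variable table in a stack frame);

- objects referenced by static class fields in the method area;

- objects referenced by constants in the method area;

- objects referenced by JNI (that is, native methods) in the native method stack.

One way the JVM decides whether an object can be reclaimed is reachability analysis: starting from the GC roots, it searches downward along references, and an object that no chain of references connects to any GC root is judged reclaimable. The heap is the main area the GC works on, so the heap itself cannot serve as a GC root.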

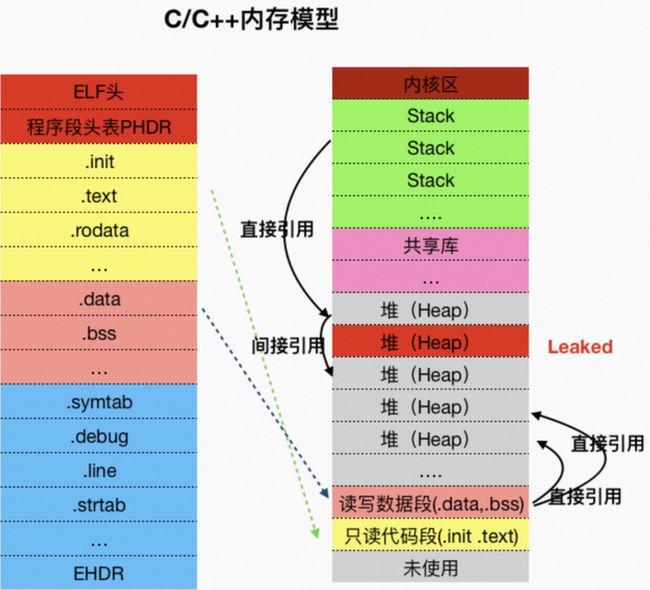

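The C/C++ memory model consists of the heap, the stack, the global/static storage area (the .bss and .data segments), the constant storage area (the .rodata segment), and the code area (the .text segment). libmemunreachable combines this memory model with reachability analysis: it treats the stack and the global/static storage area (.bss and .data) as GC roots and checks whether each heap block is held by a GC root. A block that is held neither directly nor indirectly is judged to be leaked. (This is not meant to be a 100% accurate verdict on C/C++ leaks; the goal is to surface potential ones.)

Figure 1: Reachability analysis on the C/C++ memory model

In short, libmemunreachable makes an imprecise mark-and-sweep pass over all native memory and reports any unreachable block as a leak.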

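libmemunreachable workflow

Figure 2: memunreachable sequence diagram

The memunreachable sequence:

- Create the LeakPipe: used to communicate with the child process; the child sends data and the parent receives it;

- Fork a child process: doing the work in a forked child protects the state of the current process;

- CaptureThreads: uses ptrace so that the target process can be dumped by the child, which lets the child read the parent's information;

- CapturedThreadInfo: reads register contents via PTRACE_GETREGSET. Some heap memory may be held only by a register, and such memory should not be judged leaked;

- ProcessMappings: parses the /proc/self/maps file, which records the heap, the stack, the global/static storage area (.bss and .data), the constant storage area (.rodata), the code area (.text), and other memory regions;

- ReleaseThreads: uses ptrace to restore the ptrace state of the target process; the main process stops waiting and starts receiving data;

- Second fork: a child is forked once more. My understanding is that this may be for performance: the first fork collects the information needed for leak analysis, and the second fork runs the analysis on top of that information. The comments in the code below add that the forked heap walker examines a copy-on-write snapshot of the process memory;

- CollectAllocations: classifies the entries from /proc/pid/maps, registering the stack and the global/static storage area (.bss and .data) as GC roots and the heap as the objects to be checked;

- GetUnreachableMemory: finds the unreachable, leaked memory. This is where the C/C++ memory model plus reachability analysis goes to work to find heap blocks that may have leaked;

- PipeSend: sends the leak information to the main process through the pipe;

- PipeReceiver: the main process receives the leak data.

The core code is as follows: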
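The pipe-plus-fork part of this sequence can be sketched on its own. The following is a minimal illustration, assuming a plain POSIX `pipe()` and `fork()`; `LeakSummary` and `RunAnalysisInChild` are hypothetical names, the values sent are placeholders, and the real LeakPipe is more involved (it transfers several values and a vector).

```cpp
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#include <cstddef>

// Hypothetical summary record; placeholder for the data the real tool sends.
struct LeakSummary {
  size_t num_leaks;
  size_t leak_bytes;
};

// Forks a child that reports through a pipe; the parent only reads.
// Returns true when the child's report was received in full.
bool RunAnalysisInChild(LeakSummary* out) {
  int fds[2];
  if (pipe(fds) != 0) return false;

  pid_t child = fork();
  if (child < 0) {
    close(fds[0]);
    close(fds[1]);
    return false;
  }
  if (child == 0) {
    // Child: sees a copy-on-write snapshot of the parent's memory.
    close(fds[0]);
    LeakSummary summary{2, 96};  // placeholder values, not a real analysis
    ssize_t n = write(fds[1], &summary, sizeof(summary));
    _exit(n == static_cast<ssize_t>(sizeof(summary)) ? 0 : 1);
  }

  // Parent: close the write end so read() sees EOF if the child dies early.
  close(fds[1]);
  ssize_t n = read(fds[0], out, sizeof(*out));
  close(fds[0]);

  int status = 0;
  waitpid(child, &status, 0);
  return n == static_cast<ssize_t>(sizeof(*out)) && WIFEXITED(status) &&
         WEXITSTATUS(status) == 0;
}
```

Because the analysis runs in the child, a crash or a long scan there leaves the parent's state untouched; the parent only learns the outcome from the pipe and the exit status.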

//MemUnreachable.cpp

bool GetUnreachableMemory(UnreachableMemoryInfo &info, size_t limit) {

int parent_pid = getpid();

int parent_tid = gettid();

Heap heap;

Semaphore continue_parent_sem;

LeakPipe pipe;

PtracerThread thread{[&]() -> int {

/////////////////////////////////////////////

// Collection thread

/////////////////////////////////////////////

ALOGE("collecting thread info for process %d...", parent_pid);

ThreadCapture thread_capture(parent_pid, heap);

allocator::vector<ThreadInfo> thread_info(heap);

allocator::vector<Mapping> mappings(heap);

allocator::vector<uintptr_t> refs(heap);

// ptrace all the threads

if (!thread_capture.CaptureThreads()) {

LOGE("CaptureThreads failed");

}

// collect register contents and stacks

if (!thread_capture.CapturedThreadInfo(thread_info)) {

LOGE("CapturedThreadInfo failed");

}

// snapshot /proc/pid/maps

if (!ProcessMappings(parent_pid, mappings)) {

continue_parent_sem.Post();

LOGE("ProcessMappings failed");

return 1;

}

// malloc must be enabled to call fork, at_fork handlers take the same

// locks as ScopedDisableMalloc. All threads are paused in ptrace, so

// memory state is still consistent. Unfreeze the original thread so it

// can drop the malloc locks, it will block until the collection thread

// exits.

thread_capture.ReleaseThread(parent_tid);

continue_parent_sem.Post();

// fork a process to do the heap walking

int ret = fork();

if (ret < 0) {

return 1;

} else if (ret == 0) {

/////////////////////////////////////////////

// Heap walker process

/////////////////////////////////////////////

// Examine memory state in the child using the data collected above and

// the CoW snapshot of the process memory contents.

if (!pipe.OpenSender()) {

_exit(1);

}

MemUnreachable unreachable{parent_pid, heap};

// the C/C++ memory model plus reachability analysis goes to work here

if (!unreachable.CollectAllocations(thread_info, mappings)) {

_exit(2);

}

size_t num_allocations = unreachable.Allocations();

size_t allocation_bytes = unreachable.AllocationBytes();

allocator::vector<Leak> leaks{heap};

size_t num_leaks = 0;

size_t leak_bytes = 0;

bool ok = unreachable.GetUnreachableMemory(leaks, limit, &num_leaks, &leak_bytes);

ok = ok && pipe.Sender().Send(num_allocations);

ok = ok && pipe.Sender().Send(allocation_bytes);

ok = ok && pipe.Sender().Send(num_leaks);

ok = ok && pipe.Sender().Send(leak_bytes);

ok = ok && pipe.Sender().SendVector(leaks);

if (!ok) {

_exit(3);

}

_exit(0);

} else {

// Nothing left to do in the collection thread, return immediately,

// releasing all the captured threads.

ALOGI("collection thread done");

return 0;

}

}};

/////////////////////////////////////////////

// Original thread

/////////////////////////////////////////////

{

// Disable malloc to get a consistent view of memory

ScopedDisableMalloc disable_malloc;

// Start the collection thread

thread.Start();

// Wait for the collection thread to signal that it is ready to fork the

// heap walker process.

continue_parent_sem.Wait(300s);

// Re-enable malloc so the collection thread can fork.

}

// Wait for the collection thread to exit

int ret = thread.Join();

if (ret != 0) {

return false;

}

// Get a pipe from the heap walker process. Transferring a new pipe fd

// ensures no other forked processes can have it open, so when the heap

// walker process dies the remote side of the pipe will close.

if (!pipe.OpenReceiver()) {

return false;

}

bool ok = true;

ok = ok && pipe.Receiver().Receive(&info.num_allocations);

ok = ok && pipe.Receiver().Receive(&info.allocation_bytes);

ok = ok && pipe.Receiver().Receive(&info.num_leaks);

ok = ok && pipe.Receiver().Receive(&info.leak_bytes);

ok = ok && pipe.Receiver().ReceiveVector(info.leaks);

if (!ok) {

return false;

}

LOGD("unreachable memory detection done");

LOGD("%zu bytes in %zu allocation%s unreachable out of %zu bytes in %zu allocation%s",

info.leak_bytes, info.num_leaks, plural(info.num_leaks),

info.allocation_bytes, info.num_allocations, plural(info.num_allocations));

return true;

}

CaptureThreads (core function)

//ThreadCapture.cpp

bool ThreadCaptureImpl::CaptureThreads() {

TidList tids{allocator_};

bool found_new_thread;

do {

// read all thread ids (tids) from /proc/pid/task

if (!ListThreads(tids)) {

LOGE("ListThreads failed");

ReleaseThreads();

return false;

}

found_new_thread = false;

for (auto it = tids.begin(); it != tids.end(); it++) {

auto captured = captured_threads_.find(*it);

if (captured == captured_threads_.end()) {

// ptrace(PTRACE_SEIZE, tid, NULL, NULL) makes thread tid dumpable by the tracer

if (CaptureThread(*it) < 0) {

LOGE("CaptureThread(*it) failed");

ReleaseThreads();

return false;

}

found_new_thread = true;

}

}

} while (found_new_thread);

return true;

}

CaptureThreads relies on two core functions:

- ListThreads: reads all thread ids (tids) from /proc/pid/task;

- CaptureThread: calls ptrace(PTRACE_SEIZE, tid, NULL, NULL) so that thread tid can be dumped.

CapturedThreadInfo (core function)

//ThreadCapture.cpp

bool ThreadCaptureImpl::CapturedThreadInfo(ThreadInfoList &threads) {

threads.clear();

for (auto it = captured_threads_.begin(); it != captured_threads_.end(); it++) {

ThreadInfo t{0, allocator::vector<uintptr_t>(allocator_),

std::pair<uintptr_t, uintptr_t>(0, 0)};

//ptrace(PTRACE_GETREGSET, tid, reinterpret_cast<void*>(NT_PRSTATUS), &iovec)

if (!PtraceThreadInfo(it->first, t)) {

return false;

}

threads.push_back(t);

}

return true;

}

The core function of CapturedThreadInfo:

- PtraceThreadInfo: calls ptrace(PTRACE_GETREGSET, tid...) to read register contents. Some heap memory may be held only by a register, and such memory should not be judged leaked.

ProcessMappings (core function)

//ProcessMappings.cpp

bool ProcessMappings(pid_t pid, allocator::vector<Mapping> &mappings) {

char map_buffer[1024];

snprintf(map_buffer, sizeof(map_buffer), "/proc/%d/maps", pid);

android::base::unique_fd fd(open(map_buffer, O_RDONLY));

if (fd == -1) {

LOGE("ProcessMappings parent pid failed to open %s: %s", map_buffer, strerror(errno));

// fall back to this process's own maps file

// a release build only has permission to read its own process

snprintf(map_buffer, sizeof(map_buffer), "/proc/self/maps");

fd.reset(open(map_buffer, O_RDONLY));

if (fd == -1) {

LOGE("ProcessMappings failed to open %s: %s", map_buffer, strerror(errno));

return false;

}

}

LineBuffer line_buf(fd, map_buffer, sizeof(map_buffer));

char *line;

size_t line_len;

while (line_buf.GetLine(&line, &line_len)) {

int name_pos;

char perms[5];

Mapping mapping{};

if (sscanf(line, "%" SCNxPTR "-%" SCNxPTR " %4s %*x %*x:%*x %*d %n",

&mapping.begin, &mapping.end, perms, &name_pos) == 3) {

if (perms[0] == 'r') {

mapping.read = true;

}

if (perms[1] == 'w') {

mapping.write = true;

}

if (perms[2] == 'x') {

mapping.execute = true;

}

if (perms[3] == 'p') {

mapping.priv = true;

}

if ((size_t) name_pos < line_len) {

strlcpy(mapping.name, line + name_pos, sizeof(mapping.name));

}

mappings.emplace_back(mapping);

}

}

return true;

}

- ProcessMappings: parses the maps file.
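To make the `sscanf` pattern above easier to follow, here is a minimal standalone sketch that parses a single maps line with the same format string. `ParsedMapping` and `ParseMapsLine` are hypothetical names introduced for illustration; the library's own `Mapping` struct and `LineBuffer` are not reproduced here.

```cpp
#include <cinttypes>
#include <cstdint>
#include <cstdio>
#include <cstring>
#include <string>

// Hypothetical, simplified counterpart of the Mapping struct used above.
struct ParsedMapping {
  uintptr_t begin = 0;
  uintptr_t end = 0;
  bool read = false, write = false, execute = false, priv = false;
  std::string name;
};

// Parses one /proc/<pid>/maps line; returns false if the line is malformed.
bool ParseMapsLine(const char* line, ParsedMapping* out) {
  char perms[5] = {0};
  int name_pos = -1;
  // Fields: begin-end perms offset dev inode [name].
  // Suppressed fields (%*x, %*d) and %n do not count toward the return value,
  // so a well-formed line yields exactly 3.
  if (sscanf(line, "%" SCNxPTR "-%" SCNxPTR " %4s %*x %*x:%*x %*d %n",
             &out->begin, &out->end, perms, &name_pos) != 3) {
    return false;
  }
  out->read = perms[0] == 'r';
  out->write = perms[1] == 'w';
  out->execute = perms[2] == 'x';
  out->priv = perms[3] == 'p';
  out->name.clear();
  if (name_pos >= 0 && static_cast<size_t>(name_pos) < strlen(line)) {
    out->name = line + name_pos;  // %n recorded where the name starts
    while (!out->name.empty() && out->name.back() == '\n') out->name.pop_back();
  }
  return true;
}
```

For a line such as `12c00000-12c21000 rw-p 00000000 00:00 0 [anon:libc_malloc]`, the sketch yields the address range, the four permission flags, and the mapping name that the classification step later relies on.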

CollectAllocations (core function)

//MemUnreachable.cpp

bool MemUnreachable::ClassifyMappings(const allocator::vector<Mapping> &mappings,

allocator::vector<Mapping> &heap_mappings,

allocator::vector<Mapping> &anon_mappings,

allocator::vector<Mapping> &globals_mappings,

allocator::vector<Mapping> &stack_mappings) {

heap_mappings.clear();

anon_mappings.clear();

globals_mappings.clear();

stack_mappings.clear();

allocator::string current_lib{allocator_};

for (auto it = mappings.begin(); it != mappings.end(); it++) {

if (it->execute) {

current_lib = it->name;

continue;

}

if (!it->read) {

continue;

}

const allocator::string mapping_name{it->name, allocator_};

if (mapping_name == "[anon:.bss]") {

// named .bss section

globals_mappings.emplace_back(*it);

} else if (mapping_name == current_lib) {

// .rodata or .data section

globals_mappings.emplace_back(*it);

} else if (has_prefix(mapping_name, "[anon:scudo:secondary]")) {

// named malloc mapping

heap_mappings.emplace_back(*it);

} else if (has_prefix(mapping_name, "[anon:scudo:primary]")) {

// named malloc mapping

heap_mappings.emplace_back(*it);

} else if (mapping_name == "[anon:libc_malloc]") {

// named malloc mapping

heap_mappings.emplace_back(*it);

} else if (has_prefix(mapping_name, "/dev/ashmem/dalvik")

|| has_prefix(mapping_name, "[anon:dalvik")) {

// named dalvik heap mapping

globals_mappings.emplace_back(*it);

} else if (has_prefix(mapping_name, "[stack")) {

// named stack mapping

stack_mappings.emplace_back(*it);

} else if (mapping_name.size() == 0 || mapping_name == "") {

globals_mappings.emplace_back(*it);

} else if (has_prefix(mapping_name, "[anon:stack_and_tls")) {

stack_mappings.emplace_back(*it);

} else if (has_prefix(mapping_name, "[anon:") &&

mapping_name != "[anon:leak_detector_malloc]") {

// TODO(ccross): it would be nice to treat named anonymous mappings as

// possible leaks, but naming something in a .bss or .data section makes

// it impossible to distinguish them from mmaped and then named mappings.

globals_mappings.emplace_back(*it);

}

}

return true;

}

bool MemUnreachable::CollectAllocations(const allocator::vector<ThreadInfo> &threads,

const allocator::vector<Mapping> &mappings) {

ALOGI("searching process %d for allocations", pid_);

allocator::vector<Mapping> heap_mappings{mappings};

allocator::vector<Mapping> anon_mappings{mappings};

allocator::vector<Mapping> globals_mappings{mappings};

allocator::vector<Mapping> stack_mappings{mappings};

if (!ClassifyMappings(mappings, heap_mappings, anon_mappings,

globals_mappings, stack_mappings)) {

return false;

}

for (auto it = heap_mappings.begin(); it != heap_mappings.end(); it++) {

HeapIterate(*it, [&](uintptr_t base, size_t size) {

if (!heap_walker_.Allocation(base, base + size)) {

LOGD("Allocation Failed base:%" PRIxPTR " size:%zu name:%s", base, size, it->name);

}

});

}

for (auto it = anon_mappings.begin(); it != anon_mappings.end(); it++) {

if (!heap_walker_.Allocation(it->begin, it->end)) {

LOGD("Allocation Failed base:%" PRIxPTR " end:%" PRIxPTR " name:%s", it->begin, it->end, it->name);

}

}

for (auto it = globals_mappings.begin(); it != globals_mappings.end(); it++) {

heap_walker_.Root(it->begin, it->end);

}

if (threads.size() > 0) {

for (auto thread_it = threads.begin(); thread_it != threads.end(); thread_it++) {

for (auto it = stack_mappings.begin(); it != stack_mappings.end(); it++) {

if (thread_it->stack.first >= it->begin && thread_it->stack.first <= it->end) {

heap_walker_.Root(thread_it->stack.first, it->end);

}

}

// add the register contents as root values

heap_walker_.Root(thread_it->regs);

}

} else {

// fallback: no register info was captured, so treat every stack mapping as a root

for (auto it = stack_mappings.begin(); it != stack_mappings.end(); it++) {

heap_walker_.Root(it->begin, it->end);

}

}

ALOGI("searching done");

return true;

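}

CollectAllocations sorts the maps entries into four groups: heap_mappings holds the heap, stack_mappings holds the thread stacks (GC roots), globals_mappings holds .bss and .data (GC roots), and anon_mappings is meant for other readable anonymous memory. Note that in the ClassifyMappings code above, named anonymous mappings are added to globals_mappings, so they too act as GC roots, which prevents false leak reports:

- ClassifyMappings: files each maps entry into its target group;

- HeapIterate: walks the live allocations. Android's allocator requests large chunks of memory via mmap and then manages them itself. Android 11 and later use the scudo allocator[2], while versions before Android 11 use the jemalloc allocator[3]. With either allocator, Android provides malloc_iterate, a helper for walking live allocations, which makes collecting them much easier. See malloc_debug[4] for details.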
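The name-based part of the classification can be condensed into a small standalone function. This is a simplified sketch that assumes only the mapping name matters; it leaves out the permission checks and the `current_lib` tracking that the ClassifyMappings code above uses to recognise a library's .data section. `Region` and `ClassifyByName` are hypothetical names.

```cpp
#include <string>

// Hypothetical labels for the groups described above.
enum class Region { kHeap, kStack, kRoot, kIgnored };

static bool HasPrefix(const std::string& s, const std::string& prefix) {
  return s.compare(0, prefix.size(), prefix) == 0;
}

// Decides how a mapping is treated, based on its name alone.
Region ClassifyByName(const std::string& name) {
  // Allocator-owned mappings are the objects to be checked.
  if (name == "[anon:libc_malloc]" || HasPrefix(name, "[anon:scudo:primary]") ||
      HasPrefix(name, "[anon:scudo:secondary]")) {
    return Region::kHeap;
  }
  // Thread stacks are roots, scanned from the captured stack pointer.
  if (HasPrefix(name, "[stack") || HasPrefix(name, "[anon:stack_and_tls")) {
    return Region::kStack;
  }
  // The detector's own heap must not be scanned.
  if (name == "[anon:leak_detector_malloc]") return Region::kIgnored;
  // Unnamed and other named anonymous mappings, and the dalvik heap, are roots.
  if (name.empty() || HasPrefix(name, "[anon:") ||
      HasPrefix(name, "/dev/ashmem/dalvik")) {
    return Region::kRoot;
  }
  return Region::kIgnored;
}
```

Treating unknown anonymous mappings as roots is the conservative choice: it may hide a real leak, but it avoids reporting memory that is still referenced from a region the tool cannot interpret.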

GetUnreachableMemory (core function)

//HeapWalker.cpp

void HeapWalker::RecurseRoot(const Range &root) {

allocator::vector<Range> to_do(1, root, allocator_);

while (!to_do.empty()) {

Range range = to_do.back();

to_do.pop_back();

// scan each word of the range as a potential pointer; repeat until the work list is empty

ForEachPtrInRange(range, [&](Range &ref_range, AllocationInfo *ref_info) {

if (!ref_info->referenced_from_root) {

ref_info->referenced_from_root = true;

to_do.push_back(ref_range);

}

});

}

}

bool HeapWalker::DetectLeaks() {

// Recursively walk pointers from roots to mark referenced allocations

for (auto it = roots_.begin(); it != roots_.end(); it++) {

RecurseRoot(*it);

}

Range vals;

vals.begin = reinterpret_cast<uintptr_t>(root_vals_.data());

vals.end = vals.begin + root_vals_.size() * sizeof(uintptr_t);

RecurseRoot(vals);

return true;

}

bool MemUnreachable::GetUnreachableMemory(allocator::vector<Leak> &leaks,

size_t limit, size_t *num_leaks, size_t *leak_bytes) {

ALOGI("sweeping process %d for unreachable memory", pid_);

leaks.clear();

if (!heap_walker_.DetectLeaks()) {

return false;

}

// collect statistics

...

return true;

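}

Core function DetectLeaks:

- ForEachPtrInRange: treats each word in a GC root range as a potential pointer and follows it; this repeats until the work list is empty;

- DetectLeaks: walks the GC roots and marks every heap object that can be reached;

- Statistics: heap objects that were never reached are recorded as leaks.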
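The mark phase can be illustrated on a simulated heap, without touching real memory. The sketch below is a model under stated assumptions, not the HeapWalker implementation: `Block`, `FindBlock`, and `FindLeaks` are hypothetical names, each block's contents are given as a list of words, and any word whose value falls inside a block counts as a reference to it.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// One simulated heap block: its address range plus the words stored in it.
struct Block {
  uintptr_t begin;
  uintptr_t end;                 // one past the last byte
  std::vector<uintptr_t> words;  // contents, scanned as potential pointers
};

// Returns the index of the block containing addr, or -1 if none does.
static int FindBlock(const std::vector<Block>& heap, uintptr_t addr) {
  for (size_t i = 0; i < heap.size(); ++i) {
    if (addr >= heap[i].begin && addr < heap[i].end) return static_cast<int>(i);
  }
  return -1;
}

// Marks every block reachable from root_words and returns the indices of the
// blocks that were never marked, in ascending order.
std::vector<size_t> FindLeaks(const std::vector<Block>& heap,
                              const std::vector<uintptr_t>& root_words) {
  std::vector<bool> marked(heap.size(), false);
  std::vector<uintptr_t> to_do(root_words);  // words still to be examined
  while (!to_do.empty()) {
    uintptr_t word = to_do.back();
    to_do.pop_back();
    int idx = FindBlock(heap, word);
    if (idx < 0 || marked[idx]) continue;  // not a pointer, or already visited
    marked[idx] = true;
    // A newly marked block may itself point at further blocks.
    to_do.insert(to_do.end(), heap[idx].words.begin(), heap[idx].words.end());
  }
  std::vector<size_t> leaks;
  for (size_t i = 0; i < heap.size(); ++i) {
    if (!marked[i]) leaks.push_back(i);
  }
  return leaks;
}
```

In this model a block that only references itself, or that is referenced only by another unreachable block, is still reported, because marking starts exclusively from the roots. The scan is also imprecise in the other direction: an integer that happens to equal a heap address keeps that block marked.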

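Improvements for Taobao release builds

Since Android 10 the system has restricted access to per-process private files such as /proc/pid/maps and /proc/pid/task. A forked child can no longer read the files under its parent's directory; the attempt fails with "Operation not permitted". As a result, when we call into the system libmemunreachable.so via dlsym from a release build, it reports "Failed to get unreachable memory if you are trying to get unreachable memory from a system app (like com.android.systemui), disable selinux first using setenforce 0" (and of course we cannot change users' system settings).

To solve this, Taobao rebuilt the libmemunreachable library and changed the parts that need those permissions. For example, it no longer reads the maps of the parent (target) process, for which it lacks permission, and reads /proc/self/maps instead. Because the child retains the parent's memory layout, this has the same effect as reading /proc/pid/maps.

Fixing the ptrace failure: Google's libmemunreachable works in debug builds but not in release builds. The reason is that the dumpable flag (the value reported by PR_GET_DUMPABLE) defaults to 1 in a debug build, so the process can be attached to directly, but defaults to 0 in a release build, so the attach fails and unreachable detection does not work (not very kind of Google). The fix is to call prctl(PR_SET_DUMPABLE, 1).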
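A minimal sketch of that fix on Linux/Android follows. `EnsureDumpable` is a hypothetical helper name; where Taobao's modified library actually makes the call is not shown in this article, so the placement (before starting the capture) is an assumption.

```cpp
#include <sys/prctl.h>

// Marks the calling process as dumpable so that it can be ptrace-attached.
// Returns true if the dumpable flag reads back as 1.
bool EnsureDumpable() {
  if (prctl(PR_GET_DUMPABLE, 0, 0, 0, 0) == 1) return true;  // already dumpable
  if (prctl(PR_SET_DUMPABLE, 1, 0, 0, 0) != 0) return false;  // could not set it
  return prctl(PR_GET_DUMPABLE, 0, 0, 0, 0) == 1;
}
```

The dumpable flag also affects core dumps and the ownership of the process's /proc entries, so a cautious caller might read the previous value first and restore it once the analysis has finished.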

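Other changes:

- Engineering work: integrated with TBRest so that leak data from production is reported to EMAS;

- Working around non-essential permissions: for example, if reading thread register information through /proc/pid/task fails, the flow is not aborted. (A register may point to memory that is not held by .bss, .data, the stack, and so on, which would cause a false report, but such cases are rare.)

Possible false positives

Analysis fails for memory that is referenced as base + offset. Suppose the allocated block is at address A, but the stack and the globals reference it only as Base + offset = A. Base and offset are stored on the stack and in .bss, but the value A itself appears in neither, so A is judged leaked, which is a false positive.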
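The base + offset case can be reproduced with a few lines of arithmetic. The sketch below assumes the scanner counts a root word as a reference only when its value falls inside the block's address range; `ReferencedByRoots` and the addresses in the usage note are hypothetical.

```cpp
#include <cstdint>
#include <vector>

// Returns true if any root word, read as an address, falls inside [begin, end).
bool ReferencedByRoots(const std::vector<uintptr_t>& root_words,
                       uintptr_t begin, uintptr_t end) {
  for (uintptr_t word : root_words) {
    if (word >= begin && word < end) return true;
  }
  return false;
}
```

With a block at [0x5000, 0x5040) and roots that hold only base = 0x1000 and offset = 0x4000, the function returns false even though base + offset equals 0x5000, so the block would be reported as leaked. A root that holds 0x5008 directly makes it return true.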