[Statistical Learning Methods] Chapter 7: Support Vector Machines

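The support vector machine (SVM) is a binary classification model. Its basic form is the maximum-margin linear classifier defined on the feature space; margin maximization is what distinguishes it from the perceptron. SVMs also include the kernel trick, which makes them effectively nonlinear classifiers. The learning strategy of the SVM is margin maximization, which can be formalized as a convex quadratic programming problem and is equivalent to minimizing a regularized hinge loss. The learning algorithm of the SVM is therefore an optimization algorithm for solving convex quadratic programs.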

1. Linearly Separable Support Vector Machines and Hard-Margin Maximization

Linearly separable support vector machine

Suppose we are given a training data set on a feature space

$$T = \left\{ \left( x_{1}, y_{1} \right), \left( x_{2}, y_{2} \right), \cdots, \left( x_{N}, y_{N} \right) \right\}$$

where $x_{i} \in \mathcal{X} = R^{n}$, $y_{i} \in \mathcal{Y} = \left\{ +1, -1 \right\}$, $i = 1, 2, \cdots, N$. Here $x_{i}$ is the $i$-th feature vector (instance) and $y_{i}$ is the class label of $x_{i}$. When $y_{i} = +1$, $x_{i}$ is called a positive example; when $y_{i} = -1$, $x_{i}$ is called a negative example. The pair $\left( x_{i}, y_{i} \right)$ is called a sample point.

The separating hyperplane corresponds to the equation $w \cdot x + b = 0$. It is determined by the normal vector $w$ and the intercept $b$, and can be denoted by $(w, b)$.

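Linearly separable support vector machine (hard-margin support vector machine): given a linearly separable training data set, the separating hyperplane learned by margin maximization, or equivalently by solving the corresponding convex quadratic programming problem,

$$w^{*} \cdot x + b^{*} = 0$$

together with the corresponding classification decision function

$$f \left( x \right) = \operatorname{sign} \left( w^{*} \cdot x + b^{*} \right)$$

is called a linearly separable support vector machine.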

Functional margin and geometric margin

For a given training data set $T$ and hyperplane $(w, b)$, the functional margin of the hyperplane $(w, b)$ with respect to the sample point $\left( x_{i}, y_{i} \right)$ is defined as

$$\hat \gamma_{i} = y_{i} \left( w \cdot x_{i} + b \right)$$

The functional margin of the hyperplane $(w, b)$ with respect to the training set $T$ is

$$\hat \gamma = \min_{i = 1, 2, \cdots, N} \hat \gamma_{i}$$

that is, the minimum of the functional margins of $(w, b)$ over all sample points $\left( x_{i}, y_{i} \right)$ in $T$.

The geometric margin of the hyperplane $(w, b)$ with respect to the sample point $\left( x_{i}, y_{i} \right)$ is

$$\gamma_{i} = y_{i} \left( \dfrac{w}{\| w \|} \cdot x_{i} + \dfrac{b}{\| w \|} \right)$$

The geometric margin of the hyperplane $(w, b)$ with respect to the training set $T$ is

$$\gamma = \min_{i = 1, 2, \cdots, N} \gamma_{i}$$

that is, the minimum of the geometric margins of $(w, b)$ over all sample points $\left( x_{i}, y_{i} \right)$ in $T$.

The functional margin and the geometric margin are related by

$$\gamma_{i} = \dfrac{\hat \gamma_{i}}{\| w \|}, \qquad \gamma = \dfrac{\hat \gamma}{\| w \|}$$
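The two margins can be checked numerically. The following is a minimal sketch in plain Python; the three-point data set and the hyperplane $w = (0.5, 0.5)$, $b = -2$ are illustrative assumptions, not values taken from the text above. It also shows that rescaling $(w, b)$ changes the functional margin but not the geometric margin.

```python
import math

def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def functional_margins(w, b, X, y):
    """gamma_hat_i = y_i (w . x_i + b) for every sample point."""
    return [yi * (dot(w, xi) + b) for xi, yi in zip(X, y)]

def geometric_margins(w, b, X, y):
    """gamma_i = gamma_hat_i / ||w||."""
    norm_w = math.sqrt(dot(w, w))
    return [m / norm_w for m in functional_margins(w, b, X, y)]

# Assumed toy data: two positive points and one negative point.
X = [(3.0, 3.0), (4.0, 3.0), (1.0, 1.0)]
y = [+1, +1, -1]
w, b = (0.5, 0.5), -2.0

gamma_hat = min(functional_margins(w, b, X, y))
gamma = min(geometric_margins(w, b, X, y))

# Rescaling (w, b) by 2 describes the same hyperplane.
w2, b2 = (1.0, 1.0), -4.0
gamma_hat2 = min(functional_margins(w2, b2, X, y))
gamma2 = min(geometric_margins(w2, b2, X, y))

print(gamma_hat, gamma_hat2)  # functional margin doubles
print(gamma, gamma2)          # geometric margin is unchanged
```

This invariance is why the geometric margin, not the functional margin, is the quantity being maximized.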

Margin maximization

Finding the maximum-margin separating hyperplane is equivalent to solving

$$\begin{aligned} & \max_{w,b} \quad \gamma \\ & s.t. \quad y_{i} \left( \dfrac{w}{\| w \|} \cdot x_{i} + \dfrac{b}{\| w \|} \right) \geq \gamma, \quad i=1,2, \cdots, N \end{aligned}$$

or equivalently, using $\gamma = \hat \gamma / \| w \|$,

$$\begin{aligned} & \max_{w,b} \quad \dfrac{\hat \gamma}{\| w \|} \\ & s.t. \quad y_{i} \left( w \cdot x_{i} + b \right) \geq \hat \gamma, \quad i=1,2, \cdots, N \end{aligned}$$

The value of $\hat \gamma$ does not affect the solution: rescaling $(w, b)$ to $(\lambda w, \lambda b)$ with $\lambda > 0$ rescales $\hat \gamma$ to $\lambda \hat \gamma$ but leaves the hyperplane unchanged. We can therefore take $\hat \gamma = 1$ and substitute it into the problem above. Noting that maximizing $\dfrac{1}{\| w \|}$ is equivalent to minimizing $\dfrac{1}{2} \| w \|^{2}$, we obtain the equivalent problem

$$\begin{aligned} & \min_{w,b} \quad \dfrac{1}{2} \| w \|^{2} \\ & s.t. \quad y_{i} \left( w \cdot x_{i} + b \right) -1 \geq 0, \quad i=1,2, \cdots, N \end{aligned}$$

Learning algorithm for the linearly separable support vector machine (maximum margin method):

- Input: a linearly separable training data set $T = \left\{ \left( x_{1}, y_{1} \right), \left( x_{2}, y_{2} \right), \cdots, \left( x_{N}, y_{N} \right) \right\}$, where $x_{i} \in \mathcal{X} = R^{n}$, $y_{i} \in \mathcal{Y} = \left\{ +1, -1 \right\}$, $i = 1, 2, \cdots, N$

- Output: the maximum-margin separating hyperplane and the classification decision function

- Construct and solve the constrained optimization problem

  $$\begin{aligned} & \min_{w,b} \quad \dfrac{1}{2} \| w \|^{2} \\ & s.t. \quad y_{i} \left( w \cdot x_{i} + b \right) -1 \geq 0, \quad i=1,2, \cdots, N \end{aligned}$$

  to obtain the optimal solution $w^{*}, b^{*}$.

- Obtain the separating hyperplane

  $$w^{*} \cdot x + b^{*} = 0$$

  and the classification decision function

  $$f \left( x \right) = \operatorname{sign} \left( w^{*} \cdot x + b^{*} \right)$$

Support vectors and margin boundaries

(Hard-margin) support vectors: the instances of the training sample points that lie closest to the separating hyperplane, that is, the sample points for which the constraint holds with equality:

$$y_{i} \left( w \cdot x_{i} + b \right) -1 = 0$$

For positive points with $y_{i} = +1$, the support vectors lie on the hyperplane

$$H_{1}: w \cdot x + b = 1$$

For negative points with $y_{i} = -1$, the support vectors lie on the hyperplane

$$H_{2}: w \cdot x + b = -1$$

$H_{1}$ and $H_{2}$ are called the margin boundaries, and the training points lying on $H_{1}$ and $H_{2}$ are the support vectors.

The distance between $H_{1}$ and $H_{2}$ is called the margin, and $|H_{1}H_{2}| = \dfrac{1}{\| w \|} + \dfrac{1}{\| w \|} = \dfrac{2}{\| w \|}$.
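As an illustration, the support vectors and the margin width can be read off directly once a hyperplane is known. The sketch below assumes a toy data set and the hyperplane $w = (0.5, 0.5)$, $b = -2$ (both hypothetical, chosen so that the hard-margin constraints hold), and uses a small tolerance when testing equality.

```python
import math

def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def support_vectors(w, b, X, y, tol=1e-9):
    """Points whose constraint y_i (w . x_i + b) - 1 >= 0 holds with equality."""
    return [xi for xi, yi in zip(X, y)
            if abs(yi * (dot(w, xi) + b) - 1.0) <= tol]

# Assumed toy data and candidate hyperplane.
X = [(3.0, 3.0), (4.0, 3.0), (1.0, 1.0)]
y = [+1, +1, -1]
w, b = (0.5, 0.5), -2.0

# Every hard-margin constraint must be satisfied for (w, b) to be feasible.
feasible = all(yi * (dot(w, xi) + b) - 1.0 >= -1e-9 for xi, yi in zip(X, y))

sv = support_vectors(w, b, X, y)
margin_width = 2.0 / math.sqrt(dot(w, w))  # |H1 H2| = 2 / ||w||

print(feasible, sv, margin_width)
```

Here $(3, 3)$ lies on $H_{1}$ and $(1, 1)$ lies on $H_{2}$, while $(4, 3)$ is strictly outside the margin and plays no role in determining the hyperplane.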

2. Linear Support Vector Machines and Soft-Margin Maximization

Linear support vector machine

Linear support vector machine (soft-margin support vector machine): given a training data set that is not linearly separable, the separating hyperplane learned by solving the convex quadratic programming problem

$$\begin{aligned} & \min_{w,b,\xi} \quad \dfrac{1}{2} \| w \|^{2} + C \sum_{i=1}^{N} \xi_{i} \\ & s.t. \quad y_{i} \left( w \cdot x_{i} + b \right) \geq 1 - \xi_{i} \\ & \quad\quad\; \xi_{i} \geq 0, \quad i=1,2, \cdots, N \end{aligned}$$

where the $\xi_{i}$ are slack variables and $C > 0$ is the penalty parameter, namely

$$w^{*} \cdot x + b^{*} = 0$$

together with the corresponding classification decision function

$$f \left( x \right) = \operatorname{sign} \left( w^{*} \cdot x + b^{*} \right)$$

is called a linear support vector machine.

Solving the optimization problem (hard-margin case, via the Lagrangian dual):

- Introduce Lagrange multipliers $\alpha_{i} \geq 0$, $i = 1, 2, \cdots, N$, and construct the Lagrangian

  $$\begin{aligned} L \left( w, b, \alpha \right) &= \dfrac{1}{2} \| w \|^{2} + \sum_{i=1}^{N} \alpha_{i} \left[- y_{i} \left( w \cdot x_{i} + b \right) + 1 \right] \\ &= \dfrac{1}{2} \| w \|^{2} - \sum_{i=1}^{N} \alpha_{i} y_{i} \left( w \cdot x_{i} + b \right) + \sum_{i=1}^{N} \alpha_{i} \end{aligned}$$

  where $\alpha = \left( \alpha_{1}, \alpha_{2}, \cdots, \alpha_{N} \right)^{T}$ is the vector of Lagrange multipliers.

- Find $\min_{w,b} L \left( w, b, \alpha \right)$ by setting the gradients to zero:

  $$\begin{aligned} & \nabla_{w} L \left( w, b, \alpha \right) = w - \sum_{i=1}^{N} \alpha_{i} y_{i} x_{i} = 0 \\ & \nabla_{b} L \left( w, b, \alpha \right) = -\sum_{i=1}^{N} \alpha_{i} y_{i} = 0 \end{aligned}$$

  which gives

  $$\begin{aligned} & w = \sum_{i=1}^{N} \alpha_{i} y_{i} x_{i} \\ & \sum_{i=1}^{N} \alpha_{i} y_{i} = 0 \end{aligned}$$

  Substituting into the Lagrangian:

  $$\begin{aligned} L \left( w, b, \alpha \right) &= \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) - \sum_{i=1}^{N} \alpha_{i} y_{i} \left[ \left( \sum_{j=1}^{N} \alpha_{j} y_{j} x_{j} \right) \cdot x_{i} + b \right] + \sum_{i=1}^{N} \alpha_{i} \\ &= - \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) - \sum_{i=1}^{N} \alpha_{i} y_{i} b + \sum_{i=1}^{N} \alpha_{i} \\ &= - \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) + \sum_{i=1}^{N} \alpha_{i} \end{aligned}$$

  that is,

  $$\min_{w,b} L \left( w, b, \alpha \right) = - \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) + \sum_{i=1}^{N} \alpha_{i}$$

- Find $\max_{\alpha} \min_{w,b} L \left( w, b, \alpha \right)$:

  $$\begin{aligned} & \max_{\alpha} \quad - \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) + \sum_{i=1}^{N} \alpha_{i} \\ & s.t. \quad \sum_{i=1}^{N} \alpha_{i} y_{i} = 0 \\ & \quad\quad\; \alpha_{i} \geq 0, \quad i=1,2, \cdots, N \end{aligned}$$

  which is equivalent to

  $$\begin{aligned} & \min_{\alpha} \quad \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) - \sum_{i=1}^{N} \alpha_{i} \\ & s.t. \quad \sum_{i=1}^{N} \alpha_{i} y_{i} = 0 \\ & \quad\quad\; \alpha_{i} \geq 0, \quad i=1,2, \cdots, N \end{aligned}$$
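To make the dual objective concrete, the sketch below evaluates its maximization form on a small example and compares it with the primal value $\frac{1}{2} \| w \|^{2}$. The data set and the multiplier vector $\alpha^{*} = (1/4, 0, 1/4)$ are assumptions for illustration; that this $\alpha^{*}$ is optimal for this data can be verified by hand, but the code only checks that the two objective values coincide there.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def dual_objective(alpha, X, y):
    """-1/2 sum_i sum_j a_i a_j y_i y_j (x_i . x_j) + sum_i a_i (max form)."""
    n = len(X)
    quad = sum(alpha[i] * alpha[j] * y[i] * y[j] * dot(X[i], X[j])
               for i in range(n) for j in range(n))
    return -0.5 * quad + sum(alpha)

# Assumed toy data and assumed dual solution.
X = [(3.0, 3.0), (4.0, 3.0), (1.0, 1.0)]
y = [+1, +1, -1]
alpha_star = [0.25, 0.0, 0.25]

# w = sum_i alpha_i y_i x_i, from the stationarity condition in w.
w_star = [sum(a * yi * xi[k] for a, yi, xi in zip(alpha_star, y, X))
          for k in range(2)]

primal_value = 0.5 * dot(w_star, w_star)
dual_value = dual_objective(alpha_star, X, y)

# Another feasible alpha (sum_i alpha_i y_i = 0) gives a smaller dual value.
other_value = dual_objective([0.1, 0.0, 0.1], X, y)

print(primal_value, dual_value, other_value)
```

Equal primal and dual values at a feasible pair are what one expects at the optimum of a convex quadratic program of this kind.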

Learning algorithm for the linearly separable support vector machine (hard-margin support vector machine), dual form:

- Input: a linearly separable training data set $T = \left\{ \left( x_{1}, y_{1} \right), \left( x_{2}, y_{2} \right), \cdots, \left( x_{N}, y_{N} \right) \right\}$, where $x_{i} \in \mathcal{X} = R^{n}$, $y_{i} \in \mathcal{Y} = \left\{ +1, -1 \right\}$, $i = 1, 2, \cdots, N$

- Output: the maximum-margin separating hyperplane and the classification decision function

- Construct and solve the constrained optimization problem

  $$\begin{aligned} & \min_{\alpha} \quad \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) - \sum_{i=1}^{N} \alpha_{i} \\ & s.t. \quad \sum_{i=1}^{N} \alpha_{i} y_{i} = 0 \\ & \quad\quad\; \alpha_{i} \geq 0, \quad i=1,2, \cdots, N \end{aligned}$$

  to obtain the optimal solution $\alpha^{*} = \left( \alpha_{1}^{*}, \alpha_{2}^{*}, \cdots, \alpha_{N}^{*} \right)^{T}$.

- Compute

  $$w^{*} = \sum_{i=1}^{N} \alpha_{i}^{*} y_{i} x_{i}$$

  then select a positive component $\alpha_{j}^{*} > 0$ of $\alpha^{*}$ and compute

  $$b^{*} = y_{j} - \sum_{i=1}^{N} \alpha_{i}^{*} y_{i} \left( x_{i} \cdot x_{j} \right)$$

- Obtain the separating hyperplane

  $$w^{*} \cdot x + b^{*} = 0$$

  and the classification decision function

  $$f \left( x \right) = \operatorname{sign} \left( w^{*} \cdot x + b^{*} \right)$$
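The last two steps of the algorithm above (recovering $w^{*}$ and $b^{*}$ from $\alpha^{*}$, then classifying) can be sketched as follows. The quadratic program itself is not solved here: the dual solution $\alpha^{*} = (1/4, 0, 1/4)$ for the toy data is assumed to be given, and the helper names `recover_w_b` and `predict` are hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def recover_w_b(alpha, X, y):
    """w* = sum_i a_i y_i x_i; b* = y_j - sum_i a_i y_i (x_i . x_j) for some a_j > 0."""
    dim = len(X[0])
    w = [sum(a * yi * xi[k] for a, yi, xi in zip(alpha, y, X))
         for k in range(dim)]
    j = next(i for i, a in enumerate(alpha) if a > 0)  # any positive component
    b = y[j] - sum(alpha[i] * y[i] * dot(X[i], X[j]) for i in range(len(X)))
    return w, b

def predict(w, b, x):
    """sign(w . x + b); a value of exactly 0 is mapped to +1 in this sketch."""
    return 1 if dot(w, x) + b >= 0 else -1

# Assumed toy data and assumed dual solution.
X = [(3.0, 3.0), (4.0, 3.0), (1.0, 1.0)]
y = [+1, +1, -1]
alpha_star = [0.25, 0.0, 0.25]

w_star, b_star = recover_w_b(alpha_star, X, y)
predictions = [predict(w_star, b_star, x) for x in X]
print(w_star, b_star, predictions)
```

Only the points with $\alpha_{i}^{*} > 0$ contribute to $w^{*}$ and $b^{*}$, which matches the description of support vectors given earlier.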

Solving the optimization problem (soft-margin case):

- Introduce Lagrange multipliers $\alpha_{i} \geq 0$, $\mu_{i} \geq 0$, $i = 1, 2, \cdots, N$, and construct the Lagrangian

  $$\begin{aligned} L \left( w, b, \xi, \alpha, \mu \right) &= \dfrac{1}{2} \| w \|^{2} + C \sum_{i=1}^{N} \xi_{i} + \sum_{i=1}^{N} \alpha_{i} \left[- y_{i} \left( w \cdot x_{i} + b \right) + 1 - \xi_{i} \right] + \sum_{i=1}^{N} \mu_{i} \left( -\xi_{i} \right) \\ &= \dfrac{1}{2} \| w \|^{2} + C \sum_{i=1}^{N} \xi_{i} - \sum_{i=1}^{N} \alpha_{i} \left[ y_{i} \left( w \cdot x_{i} + b \right) -1 + \xi_{i} \right] - \sum_{i=1}^{N} \mu_{i} \xi_{i} \end{aligned}$$

  where $\alpha = \left( \alpha_{1}, \alpha_{2}, \cdots, \alpha_{N} \right)^{T}$ and $\mu = \left( \mu_{1}, \mu_{2}, \cdots, \mu_{N} \right)^{T}$ are the vectors of Lagrange multipliers.

- Find $\min_{w,b,\xi} L \left( w, b, \xi, \alpha, \mu \right)$ by setting the gradients to zero:

  $$\begin{aligned} & \nabla_{w} L \left( w, b, \xi, \alpha, \mu \right) = w - \sum_{i=1}^{N} \alpha_{i} y_{i} x_{i} = 0 \\ & \nabla_{b} L \left( w, b, \xi, \alpha, \mu \right) = -\sum_{i=1}^{N} \alpha_{i} y_{i} = 0 \\ & \nabla_{\xi_{i}} L \left( w, b, \xi, \alpha, \mu \right) = C - \alpha_{i} - \mu_{i} = 0 \end{aligned}$$

  which gives

  $$\begin{aligned} & w = \sum_{i=1}^{N} \alpha_{i} y_{i} x_{i} \\ & \sum_{i=1}^{N} \alpha_{i} y_{i} = 0 \\ & C - \alpha_{i} - \mu_{i} = 0 \end{aligned}$$

  Substituting into the Lagrangian:

  $$\begin{aligned} L \left( w, b, \xi, \alpha, \mu \right) &= \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) + C \sum_{i=1}^{N} \xi_{i} - \sum_{i=1}^{N} \alpha_{i} y_{i} \left[ \left( \sum_{j=1}^{N} \alpha_{j} y_{j} x_{j} \right) \cdot x_{i} + b \right] \\ & \quad + \sum_{i=1}^{N} \alpha_{i} - \sum_{i=1}^{N} \alpha_{i} \xi_{i} - \sum_{i=1}^{N} \mu_{i} \xi_{i} \\ &= - \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) - \sum_{i=1}^{N} \alpha_{i} y_{i} b + \sum_{i=1}^{N} \alpha_{i} + \sum_{i=1}^{N} \xi_{i} \left( C - \alpha_{i} - \mu_{i} \right) \\ &= - \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) + \sum_{i=1}^{N} \alpha_{i} \end{aligned}$$

  that is,

  $$\min_{w,b,\xi} L \left( w, b, \xi, \alpha, \mu \right) = - \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) + \sum_{i=1}^{N} \alpha_{i}$$

- Find $\max_{\alpha} \min_{w,b,\xi} L \left( w, b, \xi, \alpha, \mu \right)$:

  $$\begin{aligned} & \max_{\alpha} \quad - \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) + \sum_{i=1}^{N} \alpha_{i} \\ & s.t. \quad \sum_{i=1}^{N} \alpha_{i} y_{i} = 0 \\ & \quad\quad\; C - \alpha_{i} - \mu_{i} = 0 \\ & \quad\quad\; \alpha_{i} \geq 0 \\ & \quad\quad\; \mu_{i} \geq 0, \quad i=1,2, \cdots, N \end{aligned}$$

  Since $\mu_{i} = C - \alpha_{i} \geq 0$, the multipliers $\mu_{i}$ can be eliminated, leaving the box constraint $0 \leq \alpha_{i} \leq C$. The problem is therefore equivalent to

  $$\begin{aligned} & \min_{\alpha} \quad \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) - \sum_{i=1}^{N} \alpha_{i} \\ & s.t. \quad \sum_{i=1}^{N} \alpha_{i} y_{i} = 0 \\ & \quad\quad\; 0 \leq \alpha_{i} \leq C , \quad i=1,2, \cdots, N \end{aligned}$$

Learning algorithm for the linear support vector machine (soft-margin support vector machine):

- Input: a training data set $T = \left\{ \left( x_{1}, y_{1} \right), \left( x_{2}, y_{2} \right), \cdots, \left( x_{N}, y_{N} \right) \right\}$, where $x_{i} \in \mathcal{X} = R^{n}$, $y_{i} \in \mathcal{Y} = \left\{ +1, -1 \right\}$, $i = 1, 2, \cdots, N$

- Output: the separating hyperplane and the classification decision function

- Choose a penalty parameter $C > 0$, then construct and solve the constrained optimization problem

  $$\begin{aligned} & \min_{\alpha} \quad \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} \left( x_{i} \cdot x_{j} \right) - \sum_{i=1}^{N} \alpha_{i} \\ & s.t. \quad \sum_{i=1}^{N} \alpha_{i} y_{i} = 0 \\ & \quad\quad\; 0 \leq \alpha_{i} \leq C , \quad i=1,2, \cdots, N \end{aligned}$$

  to obtain the optimal solution $\alpha^{*} = \left( \alpha_{1}^{*}, \alpha_{2}^{*}, \cdots, \alpha_{N}^{*} \right)^{T}$.

- Compute

  $$w^{*} = \sum_{i=1}^{N} \alpha_{i}^{*} y_{i} x_{i}$$

  then select a component of $\alpha^{*}$ with $0 < \alpha_{j}^{*} < C$ and compute

  $$b^{*} = y_{j} - \sum_{i=1}^{N} \alpha_{i}^{*} y_{i} \left( x_{i} \cdot x_{j} \right)$$

- Obtain the separating hyperplane

  $$w^{*} \cdot x + b^{*} = 0$$

  and the classification decision function

  $$f \left( x \right) = \operatorname{sign} \left( w^{*} \cdot x + b^{*} \right)$$

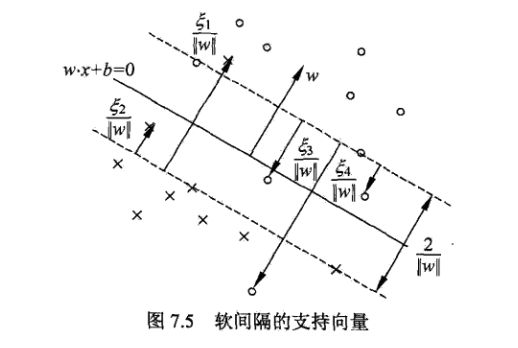

Support vectors

(Soft-margin) support vectors: in the linearly non-separable case, the instances $x_{i}$ of the sample points $\left( x_{i}, y_{i} \right)$ that correspond to $\alpha_{i}^{*} > 0$ in the solution $\alpha^{*} = \left( \alpha_{1}^{*}, \alpha_{2}^{*}, \cdots, \alpha_{N}^{*} \right)^{T}$ of the dual optimization problem.

For a support vector the constraint $y_{i} \left( w \cdot x_{i} + b \right) \geq 1 - \xi_{i}$ holds with equality, so the signed geometric margin of the instance $x_{i}$ is

$$\gamma_{i} = \dfrac{y_{i} \left( w \cdot x_{i} + b \right)}{ \| w \|} = \dfrac{1 - \xi_{i}}{\| w \|}$$

Since $\dfrac{1}{2} | H_{1}H_{2} | = \dfrac{1}{\| w \|}$, the distance from the instance $x_{i}$ to the margin boundary is

$$\left| \gamma_{i} - \dfrac{1}{\| w \|} \right| = \left| \dfrac{1 - \xi_{i}}{\| w \|} - \dfrac{1}{\| w \|} \right| = \dfrac{\xi_{i}}{\| w \|}$$

The value of $\xi_{i} \geq 0$ determines where the support vector $x_{i}$ lies:

$$\xi_{i} \geq 0 \Leftrightarrow \begin{cases} \xi_{i} = 0, & x_{i} \text{ lies on the margin boundary;} \\ 0 < \xi_{i} < 1, & x_{i} \text{ lies between the margin boundary and the separating hyperplane;} \\ \xi_{i} = 1, & x_{i} \text{ lies on the separating hyperplane;} \\ \xi_{i} > 1, & x_{i} \text{ lies on the misclassified side of the separating hyperplane.} \end{cases}$$
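The case analysis can be reproduced numerically. The sketch below computes the smallest slack $\xi_{i} = \max(0, 1 - y_{i}(w \cdot x_{i} + b))$ that satisfies the soft-margin constraints for a fixed hyperplane and maps it to a region. The hyperplane and the four test points are assumptions for illustration. Note that for an arbitrary point (not necessarily a support vector) $\xi_{i} = 0$ also covers points strictly outside the margin on the correct side.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def slack(w, b, x, label):
    """Smallest xi >= 0 with label * (w . x + b) >= 1 - xi."""
    return max(0.0, 1.0 - label * (dot(w, x) + b))

def region(xi, tol=1e-9):
    """Describe where a point with slack xi lies relative to the margin."""
    if xi <= tol:
        return "on the margin boundary or beyond it on the correct side"
    if xi < 1.0 - tol:
        return "between the margin boundary and the separating hyperplane"
    if xi <= 1.0 + tol:
        return "on the separating hyperplane"
    return "on the misclassified side"

# Assumed hyperplane and assumed positive-class test points.
w, b = (0.5, 0.5), -2.0
points = [(3.0, 3.0), (2.5, 2.5), (2.0, 2.0), (1.0, 1.0)]
labels = [+1, +1, +1, +1]

slacks = [slack(w, b, x, lab) for x, lab in zip(points, labels)]
regions = [region(xi) for xi in slacks]
for x, xi, r in zip(points, slacks, regions):
    print(x, xi, r)
```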

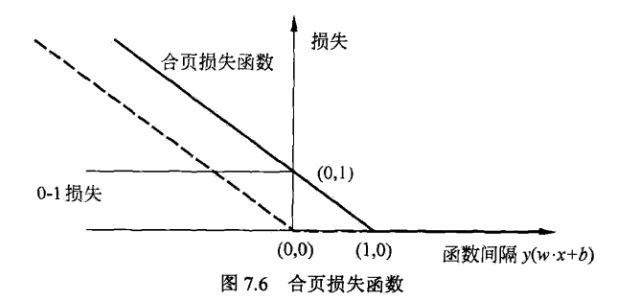

Hinge loss function

The hinge loss function of the linear (soft-margin) support vector machine is

$$L \left( y \left( w \cdot x + b \right) \right) = \left[ 1 - y \left(w \cdot x + b \right) \right]_{+}$$

where the subscript "+" denotes the positive-part function

$$\left[ z \right]_{+} = \begin{cases} z, & z > 0 \\ 0, & z \leq 0 \end{cases}$$

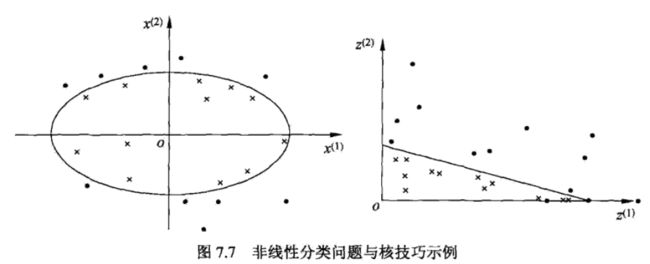
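A minimal sketch of the hinge loss and of its link to the soft-margin primal objective follows. The relation $\lambda = \frac{1}{2C}$ used below is the standard correspondence between the regularized hinge-loss objective $\sum_{i} [1 - y_{i}(w \cdot x_{i} + b)]_{+} + \lambda \| w \|^{2}$ and the soft-margin primal; it is not stated in the text above, and the data, hyperplane and value of $C$ are assumptions for illustration.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def hinge(z):
    """[1 - z]_+ where z = y (w . x + b)."""
    return max(0.0, 1.0 - z)

def regularized_hinge_objective(w, b, X, y, lam):
    """sum_i [1 - y_i (w . x_i + b)]_+ + lam * ||w||^2."""
    losses = [hinge(yi * (dot(w, xi) + b)) for xi, yi in zip(X, y)]
    return sum(losses) + lam * dot(w, w)

def soft_margin_primal_objective(w, b, X, y, C):
    """1/2 ||w||^2 + C * sum_i xi_i with the smallest feasible slacks xi_i."""
    slacks = [hinge(yi * (dot(w, xi) + b)) for xi, yi in zip(X, y)]
    return 0.5 * dot(w, w) + C * sum(slacks)

# Assumed data, hyperplane and penalty parameter.
X = [(3.0, 3.0), (2.5, 2.5), (2.0, 2.0), (1.0, 1.0)]
y = [+1, +1, +1, +1]
w, b, C = (0.5, 0.5), -2.0, 2.0

primal = soft_margin_primal_objective(w, b, X, y, C)
scaled = C * regularized_hinge_objective(w, b, X, y, lam=1.0 / (2.0 * C))
print(primal, scaled)  # the two objectives agree up to the factor C
```

Because the two objectives differ only by the positive factor $C$ at every $(w, b)$, they share the same minimizers.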

3. Nonlinear Support Vector Machines and Kernel Functions

Kernel function

Let $\mathcal{X}$ be the input space (a subset of the Euclidean space $R^{n}$ or a discrete set) and let $\mathcal{H}$ be the feature space (a Hilbert space). If there exists a mapping from $\mathcal{X}$ to $\mathcal{H}$

$$\phi \left( x \right) : \mathcal{X} \to \mathcal{H}$$

such that for all $x, z \in \mathcal{X}$ the function $K \left(x, z \right)$ satisfies

$$K \left(x, z \right) = \phi \left( x \right) \cdot \phi \left( z \right)$$

then $K \left(x, z \right)$ is called a kernel function and $\phi \left( x \right)$ a mapping function, where $\phi \left( x \right) \cdot \phi \left( z \right)$ is the inner product of $\phi \left( x \right)$ and $\phi \left( z \right)$.

Common kernel functions

Polynomial kernel function

$$K \left( x, z \right) = \left( x \cdot z + 1 \right)^{p}$$

Gaussian kernel function

$$K \left( x, z \right) = \exp \left( - \dfrac{\| x - z \|^{2}}{2 \sigma^{2}} \right)$$
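Both kernels are straightforward to compute. As a sketch, the code below also checks the defining property $K(x, z) = \phi(x) \cdot \phi(z)$ for the polynomial kernel with $p = 2$ on $R^{2}$, using one possible explicit feature map `phi_poly2` (a hypothetical helper; the map is not unique, and the test vectors are arbitrary).

```python
import math

def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def polynomial_kernel(x, z, p=2):
    """K(x, z) = (x . z + 1)^p."""
    return (dot(x, z) + 1.0) ** p

def gaussian_kernel(x, z, sigma=1.0):
    """K(x, z) = exp(-||x - z||^2 / (2 sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))

def phi_poly2(x):
    """One explicit feature map for the degree-2 polynomial kernel on R^2."""
    x1, x2 = x
    r2 = math.sqrt(2.0)
    return (1.0, r2 * x1, r2 * x2, x1 * x1, r2 * x1 * x2, x2 * x2)

x, z = (1.0, 2.0), (3.0, -1.0)

k_implicit = polynomial_kernel(x, z, p=2)          # computed in the input space
k_explicit = dot(phi_poly2(x), phi_poly2(z))       # computed in the feature space
print(k_implicit, k_explicit)
print(gaussian_kernel(x, z), gaussian_kernel(x, x))
```

The kernel evaluates the feature-space inner product without ever forming $\phi(x)$, which is the point of the kernel trick; for the Gaussian kernel no finite-dimensional explicit map is available.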

Nonlinear support vector classifier

Nonlinear support vector machine: the classification decision function learned from a nonlinear classification training set by means of a kernel function and soft-margin maximization,

$$f \left( x \right) = \operatorname{sign} \left( \sum_{i=1}^{N} \alpha_{i}^{*} y_{i} K \left(x, x_{i} \right) + b^{*} \right)$$

is called a nonlinear support vector machine, where $K \left( x, z \right)$ is a positive definite kernel function.

Learning algorithm for the nonlinear support vector machine:

- Input: a training data set $T = \left\{ \left( x_{1}, y_{1} \right), \left( x_{2}, y_{2} \right), \cdots, \left( x_{N}, y_{N} \right) \right\}$, where $x_{i} \in \mathcal{X} = R^{n}$, $y_{i} \in \mathcal{Y} = \left\{ +1, -1 \right\}$, $i = 1, 2, \cdots, N$

- Output: the classification decision function

- Choose a suitable kernel function $K \left( x, z \right)$ and a penalty parameter $C > 0$, then construct and solve the constrained optimization problem

  $$\begin{aligned} & \min_{\alpha} \quad \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} K \left( x_{i}, x_{j} \right) - \sum_{i=1}^{N} \alpha_{i} \\ & s.t. \quad \sum_{i=1}^{N} \alpha_{i} y_{i} = 0 \\ & \quad\quad\; 0 \leq \alpha_{i} \leq C , \quad i=1,2, \cdots, N \end{aligned}$$

  to obtain the optimal solution $\alpha^{*} = \left( \alpha_{1}^{*}, \alpha_{2}^{*}, \cdots, \alpha_{N}^{*} \right)^{T}$.

- Select a component of $\alpha^{*}$ with $0 < \alpha_{j}^{*} < C$ and compute

  $$b^{*} = y_{j} - \sum_{i=1}^{N} \alpha_{i}^{*} y_{i} K \left( x_{i}, x_{j} \right)$$

  In the kernel setting the normal vector $w^{*} = \sum_{i=1}^{N} \alpha_{i}^{*} y_{i} \phi \left( x_{i} \right)$ lives in the feature space $\mathcal{H}$ and is not computed explicitly; the separating hyperplane $w^{*} \cdot \phi \left( x \right) + b^{*} = 0$ is expressed only through the kernel.

- Construct the classification decision function

  $$f \left( x \right) = \operatorname{sign} \left( \sum_{i=1}^{N} \alpha_{i}^{*} y_{i} K \left( x, x_{i} \right) + b^{*} \right)$$
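The final two steps of the algorithm above can be sketched with the kernel passed in as a function. The dual problem is not solved here: the multipliers `alpha_star` and the value of `C` are assumed, and a linear kernel is used only so that the result is easy to check by hand; any positive definite kernel could be substituted.

```python
def dot(u, v):
    """Inner product of two equal-length sequences (used as a linear kernel)."""
    return sum(a * b for a, b in zip(u, v))

def intercept(alpha, X, y, kernel, C):
    """b* = y_j - sum_i a_i y_i K(x_i, x_j) for some j with 0 < a_j < C."""
    j = next(i for i, a in enumerate(alpha) if 0.0 < a < C)
    return y[j] - sum(alpha[i] * y[i] * kernel(X[i], X[j])
                      for i in range(len(X)))

def decision_value(x, alpha, b, X, y, kernel):
    """sum_i a_i y_i K(x, x_i) + b; only points with a_i > 0 contribute."""
    return sum(a * yi * kernel(x, xi)
               for a, yi, xi in zip(alpha, y, X) if a > 0) + b

def predict(x, alpha, b, X, y, kernel):
    """sign of the decision value; exactly 0 is mapped to +1 in this sketch."""
    return 1 if decision_value(x, alpha, b, X, y, kernel) >= 0 else -1

# Assumed toy data, assumed dual solution and assumed penalty parameter.
X = [(3.0, 3.0), (4.0, 3.0), (1.0, 1.0)]
y = [+1, +1, -1]
alpha_star = [0.25, 0.0, 0.25]
C = 1.0

b_star = intercept(alpha_star, X, y, dot, C)
predictions = [predict(x, alpha_star, b_star, X, y, dot) for x in X]
print(b_star, predictions)
```

Note that the decision function evaluates the kernel between the new point $x$ and each training point $x_{i}$, so the training points with $\alpha_{i}^{*} > 0$ must be kept at prediction time.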

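4. Sequential Minimal Optimization Algorithm

This section discusses how support vector machine learning is implemented. As shown above, the SVM learning problem can be formalized as a convex quadratic programming problem. Such a problem has a global optimum, and many optimization algorithms can be used to solve it. When the number of training samples is large, however, these algorithms often become so inefficient that they are unusable in practice.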

Solving the two-variable quadratic program

The sequential minimal optimization (SMO) algorithm solves the following dual of the convex quadratic programming problem:

$$\begin{aligned} \min_{\alpha} \quad & \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_{i} \alpha_{j} y_{i} y_{j} K \left( x_{i}, x_{j} \right) - \sum_{i=1}^{N} \alpha_{i} \\ s.t. \quad & \sum_{i=1}^{N} \alpha_{i} y_{i} = 0 \\ & 0 \leq \alpha_{i} \leq C , \quad i=1,2, \cdots, N \end{aligned}$$

Suppose the two variables $\alpha_{1}, \alpha_{2}$ are selected and the other variables $\alpha_{i} \left( i = 3, 4, \cdots, N \right)$ are held fixed. The SMO subproblem is then

$$\begin{aligned} \min_{\alpha_{1}, \alpha_{2}} \quad & W \left( \alpha_{1}, \alpha_{2} \right) = \dfrac{1}{2} K_{11} \alpha_{1}^{2} + \dfrac{1}{2} K_{22} \alpha_{2}^{2} + y_{1} y_{2} K_{12} \alpha_{1} \alpha_{2} \\ & \quad - \left( \alpha_{1} + \alpha_{2} \right) + y_{1} \alpha_{1} \sum_{i=3}^{N} y_{i} \alpha_{i} K_{i1} + y_{2} \alpha_{2} \sum_{i=3}^{N} y_{i} \alpha_{i} K_{i2} \\ s.t. \quad & \alpha_{1} y_{1} + \alpha_{2} y_{2} = -\sum_{i=3}^{N} y_{i} \alpha_{i} = \varsigma \\ & 0 \leq \alpha_{i} \leq C , \quad i=1,2 \end{aligned}$$

where $K_{ij} = K \left( x_{i}, x_{j} \right)$, $\varsigma$ is a constant, and constant terms that do not involve $\alpha_{1}, \alpha_{2}$ are omitted from the objective.
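A two-variable subproblem of this form has a closed-form solution. The sketch below applies the standard SMO pair update, which is not derived in the text above: move one variable of the pair along the equality constraint to its unconstrained minimizer, clip it to the box $[0, C]$, then recover the other variable from the constraint. The function name `smo_step`, the data, the starting point and the value of `C` are assumptions for illustration, and the heuristics for choosing the pair and the update of $b$ are deliberately left out.

```python
def dot(u, v):
    """Inner product of two equal-length sequences (used as a linear kernel)."""
    return sum(a * b for a, b in zip(u, v))

def smo_step(i, j, alpha, X, y, C, kernel=dot):
    """One analytic update of the pair (alpha_i, alpha_j); returns a new list.

    The intercept b is not needed: it cancels in the difference E_i - E_j.
    """
    n = len(X)

    def g(k):
        # Decision value at x_k without the intercept.
        return sum(alpha[m] * y[m] * kernel(X[m], X[k]) for m in range(n))

    e_i, e_j = g(i) - y[i], g(j) - y[j]
    eta = kernel(X[i], X[i]) + kernel(X[j], X[j]) - 2.0 * kernel(X[i], X[j])
    if eta <= 0.0:
        return list(alpha)  # degenerate pair; skipped in this sketch

    # Feasible interval [low, high] for alpha_j on the constraint line.
    if y[i] != y[j]:
        low = max(0.0, alpha[j] - alpha[i])
        high = min(C, C + alpha[j] - alpha[i])
    else:
        low = max(0.0, alpha[i] + alpha[j] - C)
        high = min(C, alpha[i] + alpha[j])

    a_j = alpha[j] + y[j] * (e_i - e_j) / eta   # unconstrained minimizer
    a_j = min(high, max(low, a_j))              # clip to the box
    a_i = alpha[i] + y[i] * y[j] * (alpha[j] - a_j)  # keep sum_k alpha_k y_k fixed

    new_alpha = list(alpha)
    new_alpha[i], new_alpha[j] = a_i, a_j
    return new_alpha

# Assumed toy data; start from alpha = 0 and update the pair (0, 2).
X = [(3.0, 3.0), (4.0, 3.0), (1.0, 1.0)]
y = [+1, +1, -1]
alpha = smo_step(0, 2, [0.0, 0.0, 0.0], X, y, C=10.0)
print(alpha)
```

On this small example a single step from $\alpha = 0$ already reaches $(1/4, 0, 1/4)$; in general SMO repeats such steps over many pairs until the optimality conditions hold within a tolerance.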