VGG Networks and Intermediate-Layer Feature Extraction

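1. Background

VGG is a widely used family of very deep convolutional networks for large-scale image recognition.

This post mainly covers training a VGG network and running prediction with it, with the ImageNet dataset as the reference.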

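2. A brief introduction to the ImageNet dataset

The ImageNet classification dataset contains about 1.45 million three-channel color images divided into 1000 classes: roughly 1.3 million training images, 50,000 validation images, and 100,000 test images. The images vary in resolution and are usually resized or cropped to 224×224 pixels before being fed to VGG.

Data loading and preprocessing for the code in this post are shown in section 3.2, which loads CIFAR-10 as a small stand-in dataset.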
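As a rough illustration of the preprocessing arithmetic, the sketch below follows one common recipe for ImageNet-style inputs: resize so the shorter side is 256 pixels, take a 224×224 center crop, and subtract a per-channel mean. The helper names and the 256-pixel resize target are assumptions made for this example and do not come from the post; the mean values are the BGR ImageNet means used by the Keras VGG16 `preprocess_input`. Only the index arithmetic is shown, so no image library is needed.

```python
# Hypothetical helpers: a sketch of ImageNet-style preprocessing arithmetic.
IMAGENET_MEAN_BGR = (103.939, 116.779, 123.68)


def resized_shape(height, width, short_side=256):
    """Shape after scaling so that the shorter side equals short_side."""
    if height <= width:
        return short_side, round(width * short_side / height)
    return round(height * short_side / width), short_side


def center_crop_box(height, width, size=224):
    """Return (top, left, bottom, right) of a centered size x size crop."""
    top = (height - size) // 2
    left = (width - size) // 2
    return top, left, top + size, left + size


def subtract_mean(pixel_bgr, mean=IMAGENET_MEAN_BGR):
    """Subtract the per-channel mean from one (B, G, R) pixel."""
    return tuple(value - m for value, m in zip(pixel_bgr, mean))


h, w = resized_shape(375, 500)  # a landscape photo, 375 rows by 500 columns
print((h, w), center_crop_box(h, w))
```

With real images, the resize and the crop would be done by an image library such as OpenCV, using the shape and box computed here.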

3. The VGG network

3.1 Network definition
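The network defined in this section can be sanity-checked with a little arithmetic. The sketch below walks the VGG-16 layer plan (3×3 convolutions with 1-pixel zero padding, 2×2 max-pooling with stride 2, then three dense layers) in plain Python and reports the final feature-map size and the parameter count. `VGG16_PLAN` and `vgg16_stats` are names invented for this example.

```python
# Layer plan for VGG-16: integers are conv output channels, "M" is a 2x2 max-pool.
VGG16_PLAN = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_stats(size, in_channels=3, classes=1000, fc_units=4096):
    """Return (final_spatial_size, total_parameter_count) for a square input."""
    params = 0
    channels = in_channels
    for item in VGG16_PLAN:
        if item == "M":
            size //= 2  # 2x2 pooling with stride 2 halves each spatial dimension
        else:
            # A padded 3x3 convolution keeps the spatial size unchanged.
            params += 3 * 3 * channels * item + item  # weights + biases
            channels = item
    flat = size * size * channels  # length of the flattened feature vector
    for units in (fc_units, fc_units, classes):
        params += flat * units + units
        flat = units
    return size, params


print(vgg16_stats(224))  # ImageNet-sized input
print(vgg16_stats(32))   # CIFAR-10-sized input, as used in this post
```

For a 224×224 input the last feature map is 7×7 and the total is 138,357,544 parameters. For the 32×32 input used here the last feature map shrinks to 1×1, so the first dense layer is far smaller.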

from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D, ZeroPadding2D
from keras.layers import Activation, Flatten, Dense, Dropout
from keras.datasets import cifar10
from keras.utils import np_utils  # newer Keras releases expose to_categorical directly in keras.utils
from keras.optimizers import SGD, RMSprop
import cv2
import numpy as np

NB_EPOCH = 5
BATCH_SIZE = 128
VALIDATION_SPLIT = 0.2
# IMG_ROWS, IMG_COLS = 224, 224  # ImageNet-sized input
IMG_ROWS, IMG_COLS = 32, 32  # CIFAR-10 image size
IMG_CHANNELS = 3
INPUT_SHAPE = (IMG_ROWS, IMG_COLS, IMG_CHANNELS)  # note the order: channels last
NB_CLASSES = 1000  # ImageNet class count; CIFAR-10 labels only use indices 0-9

class VGGNet:
    @staticmethod
    def build(input_shape, classes, weights_path=None):
        model = Sequential()

        # Block 1: two 64-channel 3x3 convolutions, then 2x2 max-pooling
        model.add(ZeroPadding2D((1, 1), input_shape=input_shape))
        model.add(Conv2D(64, kernel_size=3, activation='relu'))
        model.add(ZeroPadding2D((1, 1)))
        model.add(Conv2D(64, kernel_size=3, activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))

        # Block 2: two 128-channel convolutions
        model.add(ZeroPadding2D((1, 1)))
        model.add(Conv2D(128, kernel_size=3, activation='relu'))
        model.add(ZeroPadding2D((1, 1)))
        model.add(Conv2D(128, kernel_size=3, activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))

        # Block 3: three 256-channel convolutions
        model.add(ZeroPadding2D((1, 1)))
        model.add(Conv2D(256, kernel_size=3, activation='relu'))
        model.add(ZeroPadding2D((1, 1)))
        model.add(Conv2D(256, kernel_size=3, activation='relu'))
        model.add(ZeroPadding2D((1, 1)))
        model.add(Conv2D(256, kernel_size=3, activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))

        # Block 4: three 512-channel convolutions
        model.add(ZeroPadding2D((1, 1)))
        model.add(Conv2D(512, kernel_size=3, activation='relu'))
        model.add(ZeroPadding2D((1, 1)))
        model.add(Conv2D(512, kernel_size=3, activation='relu'))
        model.add(ZeroPadding2D((1, 1)))
        model.add(Conv2D(512, kernel_size=3, activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))

        # Block 5: three 512-channel convolutions
        model.add(ZeroPadding2D((1, 1)))
        model.add(Conv2D(512, kernel_size=3, activation='relu'))
        model.add(ZeroPadding2D((1, 1)))
        model.add(Conv2D(512, kernel_size=3, activation='relu'))
        model.add(ZeroPadding2D((1, 1)))
        model.add(Conv2D(512, kernel_size=3, activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))

        # Classifier: two 4096-unit dense layers with dropout, then softmax
        model.add(Flatten())
        model.add(Dense(4096, activation='relu'))
        model.add(Dropout(0.5))
        model.add(Dense(4096, activation='relu'))
        model.add(Dropout(0.5))
        model.add(Dense(classes, activation='softmax'))

        model.summary()  # print a summary of the network

        if weights_path:
            model.load_weights(weights_path)
        return model

3.2 Data loading

def load_and_proc_data():
    (X_train, y_train), (X_test, y_test) = cifar10.load_data()
    print('X_train shape', X_train.shape)

    # Scale pixel values to the range [0, 1]
    X_train = X_train.astype('float32')
    X_test = X_test.astype('float32')
    X_train /= 255
    X_test /= 255

    # Convert class vectors to one-hot (binary class) matrices
    y_train = np_utils.to_categorical(y_train, NB_CLASSES)
    y_test = np_utils.to_categorical(y_test, NB_CLASSES)
    return X_train, X_test, y_train, y_test

3.3 Model training and saving

def model_train(X_train, y_train):
    OPTIMIZER = RMSprop()
    model = VGGNet.build(input_shape=INPUT_SHAPE, classes=NB_CLASSES)
    model.compile(loss='categorical_crossentropy', optimizer=OPTIMIZER, metrics=['accuracy'])
    model.fit(X_train, y_train, batch_size=BATCH_SIZE, epochs=NB_EPOCH, verbose=0, validation_split=VALIDATION_SPLIT)
    return model


def model_save(model):
    # Save the network architecture
    model_json = model.to_json()
    with open('cifar10_architecture.json', 'w') as f:
        f.write(model_json)
    # Save the network weights
    model.save_weights('cifar10_weights.h5', overwrite=True)
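`model_train` passes `validation_split=VALIDATION_SPLIT` to `fit`. Keras takes the validation samples from the end of the arrays, before any shuffling, so the split amounts to a plain slice. A small sketch of that arithmetic follows; the function name is made up for this example.

```python
def split_for_validation(n_samples, validation_split):
    """Index ranges for training and validation when fit() gets validation_split."""
    split_at = int(n_samples * (1.0 - validation_split))
    train_idx = range(0, split_at)         # first part is used for training
    val_idx = range(split_at, n_samples)   # last part is held out for validation
    return train_idx, val_idx


# CIFAR-10 has 50,000 training images; the post uses VALIDATION_SPLIT = 0.2.
train_idx, val_idx = split_for_validation(50000, 0.2)
print(len(train_idx), len(val_idx))
```

Because the held-out part is always the tail of the array, data that is sorted by class should be shuffled before it is passed to `fit` with a validation split.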

3.4 Model evaluation and prediction

def model_evaluate(model, X_test, y_test):
    score = model.evaluate(X_test, y_test, batch_size=BATCH_SIZE, verbose=1)
    print('Test score: ', score[0])
    print('Test acc: ', score[1])


def model_predict():
    # Build at 224x224 to match the resized image below; the weights file must
    # come from a model with this same channels-last layout.
    model = VGGNet.build((224, 224, IMG_CHANNELS), NB_CLASSES, 'vgg16_weights.h5')
    sgd = SGD()
    model.compile(loss='categorical_crossentropy', optimizer=sgd)
    img = cv2.imread('tain.png')
    im = cv2.resize(img, (224, 224)).astype(np.float32)
    # No transpose to (channels, rows, cols): the model expects channels last.
    im = np.expand_dims(im, axis=0)
    out = model.predict(im)
    print(np.argmax(out))

3.5 Testing

if __name__ == '__main__':
    X_train, X_test, y_train, y_test = load_and_proc_data()
    model = model_train(X_train, y_train)
    # model_save(model)
    model_evaluate(model, X_test, y_test)

4. Using the VGG16 network bundled with Keras

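The models shipped with Keras (in keras.applications) come with pretrained weights. The weights are cached under ~/.keras/models/ and are downloaded automatically the first time a model is instantiated.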

from keras.optimizers import SGD

from keras.applications.vgg16 import VGG16

import matplotlib.pyplot as plt

import numpy as np

import cv2

model = VGG16(weights='imagenet', include_top=True)

sgd = SGD(lr=0.1, decay=1e-6, momentum=0.9, nesterov=True)

model.compile(loss='categorical_crossentropy', optimizer=sgd)

im = cv2.resize(cv2.imread('tain.png'), (224, 224))

im = np.expand_dims(im, axis=0)

out = model.predict(im)

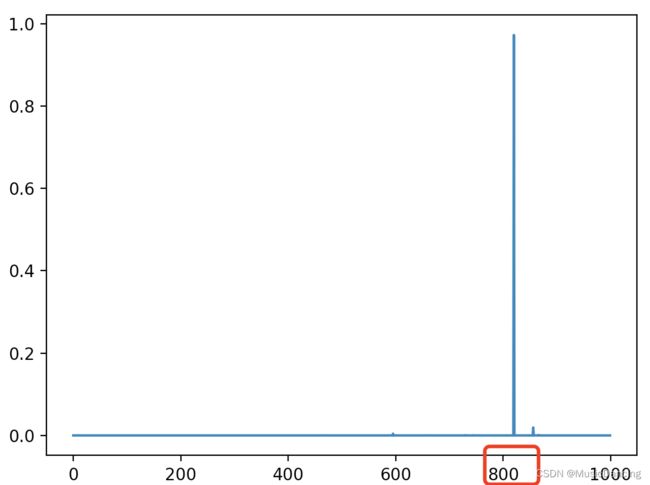

plt.plot(out.ravel())

plt.show()

print(np.argmax(out))

# 820 蒸汽火车5. 从深度学习模型特定网络层中提取特征

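Deep learning models are naturally reusable: a model trained on large-scale data for image classification or speech recognition can be reused on a quite different problem with only limited changes. In computer vision in particular, many pretrained models are now publicly available for download and are reused on other problems to improve performance on small datasets.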

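5.1 Why extract features from the intermediate layers of a DCNN?

1. Each layer of the network learns to recognize features that are needed for the final classification;
2. Lower layers recognize low-order features such as colors and edges;
3. Higher layers combine these low-order features into higher-order features such as shapes or objects.

Intermediate layers can therefore extract important features from an image, and these features may be useful for other classification tasks as well.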
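The idea of reading out an intermediate layer can be shown without any framework. The toy below is only an analogy: three hand-written functions stand in for learned layers, and `forward_until` (a hypothetical helper) stops the forward pass at a named layer and returns its output. In Keras the same idea is usually written as `Model(inputs=base_model.input, outputs=base_model.get_layer(name).output)`.

```python
def edges(signal):
    """Low-order feature: difference between neighbouring values."""
    return [b - a for a, b in zip(signal, signal[1:])]


def relu(values):
    """Keep positive responses and zero out the rest."""
    return [max(0, v) for v in values]


def peak(values):
    """Higher-order summary built from the rectified edge responses."""
    return [max(values)]


# Ordered (name, function) pairs play the role of a sequential network.
TOY_NETWORK = [("edges", edges), ("relu", relu), ("peak", peak)]


def forward_until(network, x, layer_name):
    """Run the network and return the output of the layer called layer_name."""
    for name, layer in network:
        x = layer(x)
        if name == layer_name:
            return x
    raise KeyError(layer_name)


signal = [0, 0, 5, 5, 1]
for name, _ in TOY_NETWORK:
    print(name, forward_until(TOY_NETWORK, signal, name))
```

The output of the middle layer still describes where the edges are, while the last layer has already collapsed that into a single number. That is the sense in which intermediate features can carry over to tasks other than the original one.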

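5.2 What are the benefits of feature extraction?

1. You can rely on large, publicly available training efforts and transfer what was learned to a new domain;
2. You save the time that large-scale training would otherwise take;
3. You can get a reasonable solution even when the new domain lacks enough training examples, because you start from a good initial model.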

5.3 Extracting features from a specific layer of the VGG-16 network

from keras.models import Model

from keras.preprocessing import image

from keras.applications.vgg16 import VGG16, preprocess_input

from keras.optimizers import SGD

import numpy as np

import cv2

# 加载预训练好的VGG16模型

base_model = VGG16(weights='imagenet', include_top=True)

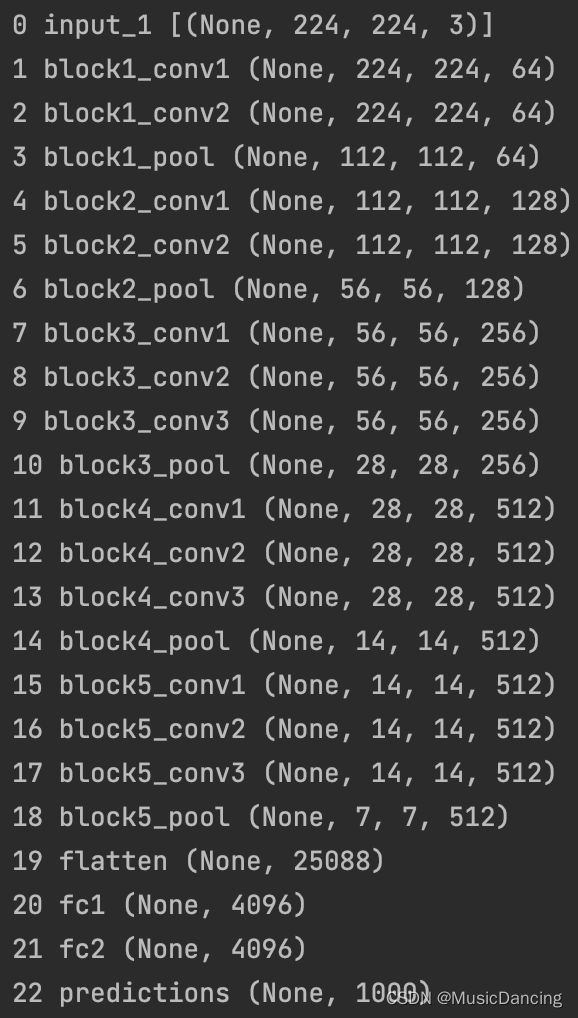

for i, layer in enumerate(base_model.layers):

print(i, layer.name, layer.output_shape)5.4 极深度 inception-v3 网络中间层特征提取

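Transfer learning is a very powerful deep learning technique. When a dataset is not large enough to train an entire CNN from scratch, a common approach is to take a pretrained CNN as the starting point for the new task and then fine-tune it.

Inception-v3 is a very deep network developed by Google.

1. Load the pretrained network model

You can choose whether to include the top (classification) layers.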

from keras.applications.inception_v3 import InceptionV3

from keras.models import Model

from keras.preprocessing import image

from keras.layers import Dense, GlobalAveragePooling2D

# 加载预训练好的InceptionV3模型,不包括顶部的网络层,以便在新数据集上进行微调

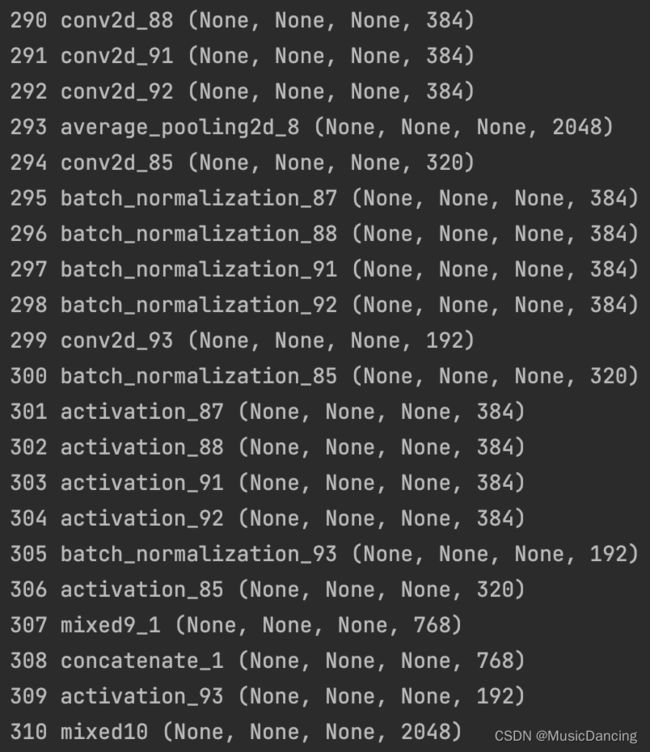

base_model = InceptionV3(weights='imagenet', include_top=False)

for i, layer in enumerate(base_model.layers):

print(i, layer.name, layer.output_shape)2. 冻结特定网络层,修改网络结构

# Freeze all convolutional InceptionV3 layers
for layer in base_model.layers:
    layer.trainable = False

x = base_model.output  # (n_samples, rows, cols, channels)
# shape=(None, None, None, 2048), name='mixed10/concat:0'

# Reduce the output to a shape a Dense layer can handle: (n_samples, channels)
x = GlobalAveragePooling2D()(x)
# shape=(None, 2048), name='global_average_pooling2d/Mean:0'

x = Dense(1024, activation='relu')(x)
# shape=(None, 1024), name='dense/Relu:0'

preds = Dense(200, activation='softmax')(x)
# shape=(None, 200), name='dense_1/Softmax:0'

3. Recompile the model after the adjustments

model = Model(inputs=base_model.input, outputs=preds)  # Keras 2 uses the keyword names inputs/outputs

# Freeze only the first 172 layers
for layer in model.layers[:172]:
    layer.trainable = False
for layer in model.layers[172:]:
    layer.trainable = True

model.compile(loss='categorical_crossentropy', optimizer='rmsprop')

# Train the model on the new dataset
# model.fit_generator(...)
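The commented-out `fit_generator` call expects a Python generator that yields `(inputs, targets)` batches indefinitely. A minimal sketch of such a generator is shown below. `batch_generator` is a hypothetical name, and plain lists stand in for the NumPy arrays that a real pipeline would yield.

```python
import math
import random


def batch_generator(samples, labels, batch_size, seed=0):
    """Yield (inputs, targets) batches forever, reshuffling on every pass."""
    rng = random.Random(seed)
    indices = list(range(len(samples)))
    while True:  # the training loop decides when to stop, not the generator
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            yield [samples[i] for i in batch], [labels[i] for i in batch]


# Toy data: ten "images" (here just integers) with matching labels.
samples = list(range(10))
labels = [s * 10 for s in samples]
steps_per_epoch = math.ceil(len(samples) / 4)  # batches needed to see all data once

gen = batch_generator(samples, labels, batch_size=4)
for _ in range(steps_per_epoch):
    x_batch, y_batch = next(gen)
    print(x_batch, y_batch)
```

The value of `steps_per_epoch` is what tells Keras how many batches make up one epoch, since the generator itself never ends. Newer Keras versions accept such generators directly in `model.fit`.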