I. Pipeline

1. View the supported task types

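```python
from transformers.pipelines import SUPPORTED_TASKS, get_supported_tasks

# SUPPORTED_TASKS maps each task name to its implementation and default model;
# get_supported_tasks() returns a list of the supported task names
print(SUPPORTED_TASKS.items(), get_supported_tasks())
```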
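`SUPPORTED_TASKS` is essentially a lookup table. Each task name points to the class that implements it and to a default checkpoint, which is why `pipeline("text-classification")` works without a `model` argument. `transformers` also registers aliases such as `"sentiment-analysis"` for `"text-classification"`. The sketch below mimics that lookup in plain Python. The class, the dict names and the placeholder model id are hypothetical. The real registry stores more fields, such as separate PyTorch and TensorFlow model classes.

```python
# Hypothetical, simplified mimic of a task registry; NOT transformers' real code.
class ToyTextClassificationPipeline:
    """Stand-in for a pipeline class; it only remembers which model it got."""

    def __init__(self, model_id):
        self.model_id = model_id


# task name -> implementing class and default model id (placeholder id)
TOY_SUPPORTED_TASKS = {
    "text-classification": {
        "impl": ToyTextClassificationPipeline,
        "default": "hypothetical/default-english-model",
    },
}

# alias -> canonical task name
TOY_TASK_ALIASES = {"sentiment-analysis": "text-classification"}


def toy_pipeline(task, model=None):
    """Resolve aliases, look the task up, and fall back to its default model."""
    task = TOY_TASK_ALIASES.get(task, task)
    if task not in TOY_SUPPORTED_TASKS:
        raise KeyError(f"Unknown task {task!r}")
    entry = TOY_SUPPORTED_TASKS[task]
    model_id = model if model is not None else entry["default"]
    return entry["impl"](model_id)


toy = toy_pipeline("sentiment-analysis")
print(type(toy).__name__, toy.model_id)
# prints: ToyTextClassificationPipeline hypothetical/default-english-model
```

Passing `model=...` skips the default. This corresponds to the second creation method below.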

2. Creating and using a Pipeline

1. Create a Pipeline directly from the task type (the default models are all English)

```python
from transformers import pipeline

# No model given: the pipeline falls back to the task's default (English) model
pipe = pipeline("text-classification")
pipe("very good!")
```

2. Specify the task type and a model to create a Pipeline based on that model

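```python
from transformers import pipeline

# A Chinese text-classification model is passed by its Hub id
pipe = pipeline("text-classification", model="uer/roberta-base-finetuned-dianping-chinese")
pipe("我觉得不太行!")  # "I don't think it's very good!"
```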

3. Load the model first, then create the Pipeline

```python
from transformers import AutoModelForSequenceClassification, AutoTokenizer, pipeline

# Load the model and its tokenizer explicitly, then hand both to pipeline()
model = AutoModelForSequenceClassification.from_pretrained("uer/roberta-base-finetuned-dianping-chinese")
tokenizer = AutoTokenizer.from_pretrained("uer/roberta-base-finetuned-dianping-chinese")

pipe = pipeline("text-classification", model=model, tokenizer=tokenizer)
pipe("你真是个人才!")  # "You're a real talent!"
```

3. GPU inference

```python
pipe.model.device  # by default the model runs on the CPU
```

```python
%%time
# Time 100 calls on the CPU
for i in range(100):
    pipe("你真是个人才!")
```

```
CPU times: total: 19.4 s
Wall time: 4.94 s
```

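```python
import time

import torch
from transformers import pipeline

# Recreate the pipeline on the first GPU (device=0)
pipe = pipeline("text-classification", model="uer/roberta-base-finetuned-dianping-chinese", device=0)
pipe.model.device  # should now show cuda:0

# CUDA kernels run asynchronously, so synchronize before reading the clock
times = []
for i in range(100):
    torch.cuda.synchronize()
    start = time.time()
    pipe("我觉得不太行!")
    torch.cuda.synchronize()
    end = time.time()
    times.append(end - start)

# Average seconds per call
print(sum(times) / 100)
```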
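The timing loop above can be wrapped in a reusable helper. This is a minimal sketch and the name `benchmark` is hypothetical. It adds a few untimed warm-up calls, because the first calls often pay one-off costs. It uses `time.perf_counter`, which is meant for measuring short intervals. GPU synchronization is passed in as an optional `sync` callable, so the helper itself needs no `torch`.

```python
import time
from statistics import mean


def benchmark(fn, n_runs=100, warmup=5, sync=None):
    """Return the mean wall-clock seconds per call of fn().

    sync: optional callable run right before starting and right after
    stopping the clock (e.g. torch.cuda.synchronize for GPU work).
    """
    # Warm-up calls are not timed
    for _ in range(warmup):
        fn()
    times = []
    for _ in range(n_runs):
        if sync is not None:
            sync()  # make sure earlier GPU work has finished
        start = time.perf_counter()
        fn()
        if sync is not None:
            sync()  # wait for this call's GPU work before stopping the clock
        times.append(time.perf_counter() - start)
    return mean(times)
```

With the GPU pipeline above, a call would look like `benchmark(lambda: pipe("我觉得不太行!"), sync=torch.cuda.synchronize)`. On the CPU, leave `sync` as `None`.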

4. Determining a Pipeline's parameters

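```python
from transformers import pipeline

qa_pipeline = pipeline("question-answering", model="uer/roberta-base-chinese-extractive-qa")
# question: "Who is Wang Xunzhi?"  context: "Wang Xunzhi is a handsome guy!"
qa_pipeline(question="王训志是谁?", context="王训志是帅哥!")
```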
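An extractive QA model does not generate text. It scores every position in the context as a possible answer start and answer end. The answer is the best-scoring span whose end is not before its start and that is no longer than a length limit, which mirrors the pipeline's `max_answer_len` parameter. The sketch below shows that selection step in plain Python. It is a simplification: the probabilities are made up and assigned per character. The real pipeline scores tokens and maps them back to character offsets in the context.

```python
def best_span(start_probs, end_probs, max_answer_len=15):
    """Return (start, end, score) maximizing start_probs[s] * end_probs[e]
    subject to s <= e and e - s + 1 <= max_answer_len (end is inclusive)."""
    best = (0, 0, -1.0)
    for s, ps in enumerate(start_probs):
        # only ends at or after the start, within the length limit
        for e in range(s, min(s + max_answer_len, len(end_probs))):
            score = ps * end_probs[e]
            if score > best[2]:
                best = (s, e, score)
    return best


context = "王训志是帅哥!"
# hypothetical per-character probabilities, one per character of `context`
start_probs = [0.1, 0.0, 0.0, 0.0, 0.8, 0.1, 0.0]
end_probs = [0.0, 0.0, 0.1, 0.0, 0.1, 0.7, 0.1]

s, e, score = best_span(start_probs, end_probs)
print(context[s:e + 1], round(score, 2))  # prints: 帅哥 0.56
```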

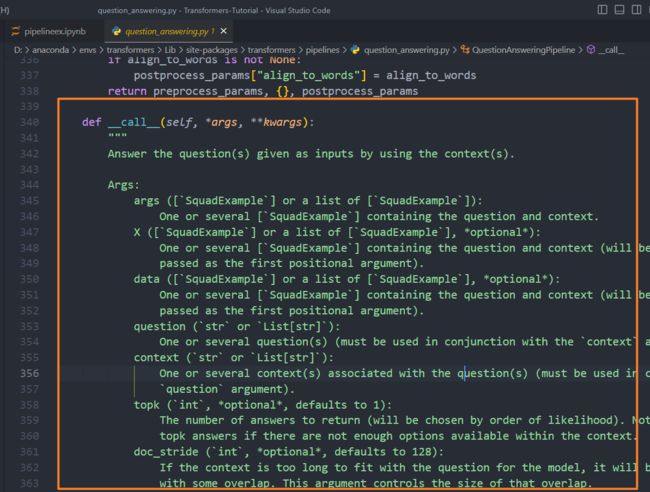

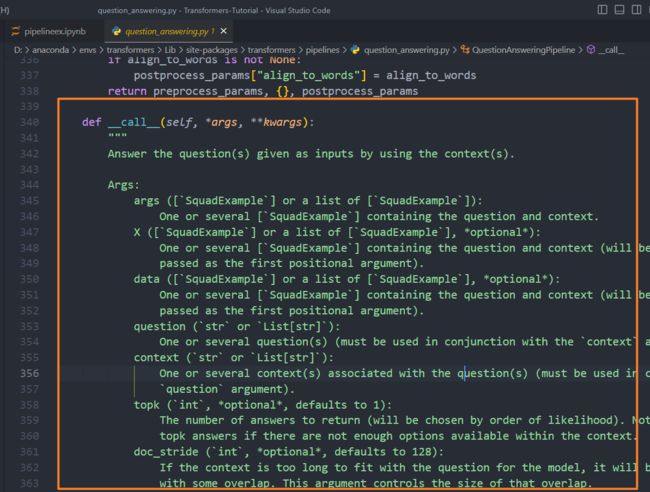

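- To find them, check the class of `qa_pipeline`, which is `QuestionAnsweringPipeline`. Then Ctrl+click it in the IDE and read the source of its `__call__` method, which documents the accepted arguments.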
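Outside an IDE, Python's standard `inspect` module can do the same lookup. The sketch below uses a hypothetical stand-in class instead of the real `QuestionAnsweringPipeline`, so it runs without `transformers`. For the real object you would pass `qa_pipeline`. Its `__call__` may be declared with only `*args, **kwargs`, depending on the `transformers` version. In that case, `help(qa_pipeline.__call__)` or `inspect.getsource(type(qa_pipeline).__call__)` is the more informative place to look.

```python
import inspect


class ToyQAPipeline:
    """Hypothetical stand-in; the real QuestionAnsweringPipeline is not used here."""

    def __call__(self, question, context, top_k=1, max_answer_len=15):
        """Answer `question` from `context` (toy: just echoes the inputs)."""
        return {"question": question, "context": context}


def call_parameters(obj):
    """Return the parameter names of type(obj).__call__, excluding `self`."""
    sig = inspect.signature(type(obj).__call__)
    return [name for name in sig.parameters if name != "self"]


print(call_parameters(ToyQAPipeline()))
# prints: ['question', 'context', 'top_k', 'max_answer_len']
```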

5. Other Pipeline examples

```python
from transformers import pipeline

checkpoint = "google/owlvit-base-patch32"
detector = pipeline(model=checkpoint, task="zero-shot-object-detection")
```

```python
import requests
from PIL import Image

url = "https://unsplash.com/photos/oj0zeY2Ltk4/download?ixid=MnwxMjA3fDB8MXxzZWFyY2h8MTR8fHBpY25pY3xlbnwwfHx8fDE2Nzc0OTE1NDk&force=true&w=640"
im = Image.open(requests.get(url, stream=True).raw)
im  # display the image in the notebook
```

```python
# Zero-shot: the labels to look for are given at call time
predictions = detector(im, candidate_labels=["hat", "book"])
```

```python
from PIL import ImageDraw

draw = ImageDraw.Draw(im)

for prediction in predictions:
    box = prediction["box"]
    label = prediction["label"]
    score = prediction["score"]
    # Read the coordinates by key instead of relying on dict order
    xmin, ymin, xmax, ymax = box["xmin"], box["ymin"], box["xmax"], box["ymax"]
    draw.rectangle((xmin, ymin, xmax, ymax), outline="red", width=1)
    draw.text((xmin, ymin), f"{label}: {round(score, 2)}", fill="red")

im
```
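Each prediction above is a dict with `score`, `label` and a `box` holding `xmin`, `ymin`, `xmax` and `ymax`. With many candidate labels, low-confidence boxes clutter the image, so you may want to filter them before drawing. The sketch below does this in plain Python. The function name, the 0.1 threshold and the sample predictions are hypothetical.

```python
def filter_detections(predictions, threshold=0.1):
    """Keep predictions with score >= threshold, highest score first, as
    (label, rounded score, (xmin, ymin, xmax, ymax)) tuples."""
    kept = [p for p in predictions if p["score"] >= threshold]
    kept.sort(key=lambda p: p["score"], reverse=True)
    result = []
    for p in kept:
        b = p["box"]
        result.append((p["label"], round(p["score"], 2), (b["xmin"], b["ymin"], b["xmax"], b["ymax"])))
    return result


# hypothetical predictions in the same shape as the pipeline's output
sample = [
    {"score": 0.05, "label": "hat", "box": {"xmin": 1, "ymin": 2, "xmax": 3, "ymax": 4}},
    {"score": 0.91, "label": "book", "box": {"xmin": 10, "ymin": 20, "xmax": 30, "ymax": 40}},
    {"score": 0.34, "label": "hat", "box": {"xmin": 5, "ymin": 6, "xmax": 7, "ymax": 8}},
]
for label, score, box in filter_detections(sample):
    print(label, score, box)
```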

6. How a Pipeline works under the hood

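A Pipeline performs three steps:

1. Process the input (tokenize the text into tensors)
2. Run the model to get its output (logits)
3. Map the predicted class id to a label with model.config.id2label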

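```python
import torch
from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("uer/roberta-base-finetuned-dianping-chinese")
tokenizer = AutoTokenizer.from_pretrained("uer/roberta-base-finetuned-dianping-chinese")

text = "我觉得不行!"  # "I don't think it's good!"

# 1. Process the input: text -> input_ids / attention_mask tensors
inputs = tokenizer(text, return_tensors="pt")

# 2. Model output: one logit per class (no gradients needed for inference)
with torch.no_grad():
    output = model(**inputs)

# 3. Softmax -> probabilities, argmax -> class id, id2label -> label name
probs = torch.softmax(output.logits, dim=-1)
pred_id = torch.argmax(probs).item()

# id2label already comes with the model's config, so no reassignment is needed
print(text, "\n", model.config.id2label.get(pred_id))
```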
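The same three steps can be mirrored in a dependency-free toy. This loosely follows the `preprocess` / `_forward` / `postprocess` split that `transformers`' `Pipeline` class uses internally. Everything here is hypothetical and for illustration only: the word-level vocabulary, the counting "model" and the two-label `ID2LABEL`. Only the post-processing (softmax, argmax, id-to-label lookup) matches what the real code above does.

```python
import math

# Hypothetical toy pieces standing in for the real tokenizer, model and config
TOY_VOCAB = {"[UNK]": 0, "good": 1, "bad": 2}
ID2LABEL = {0: "negative", 1: "positive"}


def preprocess(text):
    """Step 1: map whitespace-separated words to ids (toy tokenizer)."""
    return [TOY_VOCAB.get(w, TOY_VOCAB["[UNK]"]) for w in text.lower().split()]


def forward(ids):
    """Step 2: toy 'model' returning two logits, [negative, positive]."""
    neg = sum(1 for i in ids if i == TOY_VOCAB["bad"])
    pos = sum(1 for i in ids if i == TOY_VOCAB["good"])
    return [float(neg), float(pos)]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [x / total for x in exps]


def postprocess(logits):
    """Step 3: softmax, argmax, then map the id to a label via ID2LABEL."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": ID2LABEL[best], "score": probs[best]}


def toy_classify(text):
    return postprocess(forward(preprocess(text)))


print(toy_classify("good good bad")["label"])  # prints: positive
```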