Notes on Fourteen Lectures on Visual SLAM: 7-1

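Contents

- Notes on Fourteen Lectures on Visual SLAM: 7-1
  - Visual Odometry: The Feature-Point Method
    - 7.1 The Feature-Point Method
      - 7.1.1 Feature Points
      - 7.1.2 ORB Features
        - **FAST keypoints**
        - **BRIEF descriptors**
      - 7.1.3 Feature Matching
    - 7.2 Practice: Feature Extraction and Matching
      - 7.2.1 ORB Features in OpenCV
      - 7.2.2 Hand-Written ORB Features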
Visual Odometry: The Feature-Point Method

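Main goals:

1. Understand what image feature points are, and learn how to extract them from a single image and match them across multiple images;

2. Understand epipolar geometry, and use the epipolar constraint to recover the 3D camera motion between images;

3. Understand the PnP problem, and use known 3D structure together with its image correspondences to solve for the 3D camera motion;

4. Understand the ICP problem, and use point-cloud correspondences to solve for the 3D camera motion;

5. Understand how triangulation recovers the 3D structure of corresponding points in 2D images.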

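The preceding lectures introduced the concrete forms of the motion and observation equations and explained solution methods centered on nonlinear optimization. Starting with this lecture we get to the main subject and cover four modules in turn: visual odometry, back-end optimization, loop closure detection, and mapping.

This lecture introduces a method commonly used in visual odometry: the feature-point method.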

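7.1 The Feature-Point Method

A SLAM system is divided into a front end and a back end; the front end is also called visual odometry (VO). VO estimates a rough camera motion from adjacent images and gives the back end a reasonably good initial value. VO algorithms fall into two main classes: feature-point methods and direct methods.

This lecture covers how to extract and match image feature points and then estimate the camera motion and scene structure between two frames, which yields a two-frame visual odometry. This class of algorithms is also called two-view geometry.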

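7.1.1 Feature Points

The core problem of visual odometry is how to estimate camera motion from images. An image, however, is a matrix of brightness and color values, and reasoning about motion directly at the matrix level is very difficult. A more convenient approach is to first select representative points in the image, points that stay the same after a small change in camera viewpoint, so that the same points can be found in each image. The camera pose estimation problem, and the localization of the points themselves, are then discussed on the basis of these points. In classical SLAM these points are called landmarks; in visual SLAM, the landmarks are image features.

A feature is another digital representation of image information. A good set of features is crucial to the final performance on a given task. In visual odometry we want feature points to remain stable after the camera moves. Feature points are distinctive places in an image, such as corners (corner detectors include Harris, FAST, and GFTT). In most applications, however, plain corners are not enough. Over years of research, the computer vision community has therefore designed more stable local image features, such as the well-known SIFT, SURF, and ORB. Compared with plain corners, these hand-designed feature points have the following properties:

1. Repeatability: the same feature can be found in different images; 2. Distinctiveness: different features have different representations; 3. Efficiency: an image contains far fewer feature points than pixels; 4. Locality: a feature depends only on a small image region.

A feature point consists of two parts: a keypoint and a descriptor. Saying that we compute SIFT features in an image means doing two things: extracting SIFT keypoints and computing their descriptors. The keypoint is the position of the feature in the image; some feature types also carry orientation and scale information. The descriptor is usually a vector that describes, in some hand-designed way, the pixels around the keypoint. Descriptors are designed on the principle that features with similar appearance should have similar descriptors. So if the descriptors of two feature points are close in vector space, the two are considered the same feature.

Among current SLAM solutions, ORB offers a good trade-off between quality and performance, so ORB is used here to illustrate the whole feature-extraction process.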

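7.1.2 ORB Features

An ORB feature consists of a keypoint and a descriptor. Its keypoint, called "Oriented FAST", is an improved FAST corner. Its descriptor is called BRIEF. Extracting ORB features therefore takes two steps:

1. FAST corner extraction: find the "corners" in the image. Unlike the original FAST, ORB computes the dominant orientation of each feature point, which gives the subsequent BRIEF descriptor rotation invariance.

2. BRIEF descriptor: describe the image region around each feature point extracted in the previous step. ORB makes some improvements to BRIEF, chiefly by using the previously computed orientation.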

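FAST keypoints

FAST is a corner detector that looks for places where the local pixel intensity changes sharply, and it is known for its speed. Its core idea is that a pixel that differs greatly from its neighbors (much brighter or much darker) is more likely to be a corner. Compared with other corner detectors, FAST only compares pixel brightness, which makes it very fast.

The detection procedure is as follows: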

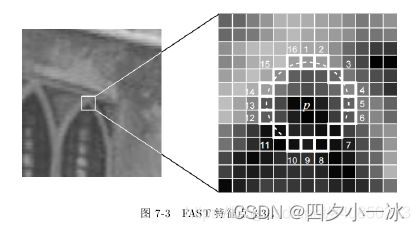

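1. Pick a pixel $p$ in the image and let its brightness be $I_p$.

2. Set a threshold $T$ (for example, 20% of $I_p$).

3. Take the 16 pixels on a circle of radius 3 centered on $p$.

4. If the circle contains $N$ contiguous pixels that are all brighter than $I_p + T$ or all darker than $I_p - T$, then $p$ can be considered a feature point ($N$ is usually 12, which gives FAST-12; other common values are 9 and 11, which give FAST-9 and FAST-11).

Repeat these four steps for every pixel.

Image source: link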
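The four steps above can be sketched in plain C++ as follows. This is only an illustrative sketch, not the OpenCV implementation: the `GrayImage` struct and the `isFastCorner` function are hypothetical names introduced here, and the threshold is passed as an absolute brightness value rather than as a percentage of $I_p$.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Offsets (dx, dy) of the 16 pixels on a radius-3 circle, listed clockwise
// starting from the pixel directly above the center.
static const int kCircle[16][2] = {
    {0, -3}, {1, -3}, {2, -2}, {3, -1}, {3, 0}, {3, 1}, {2, 2}, {1, 3},
    {0, 3}, {-1, 3}, {-2, 2}, {-3, 1}, {-3, 0}, {-3, -1}, {-2, -2}, {-1, -3}};

// Hypothetical minimal grayscale image: row-major, 8 bits per pixel.
struct GrayImage {
    int width;
    int height;
    std::vector<std::uint8_t> data;
    int at(int x, int y) const { return data[y * width + x]; }
};

// Returns true if at least n contiguous circle pixels are all brighter than
// I_p + T or all darker than I_p - T. The pixel (x, y) must lie at least
// 3 pixels away from the image border.
bool isFastCorner(const GrayImage& img, int x, int y, int threshold, int n = 12) {
    assert(x >= 3 && y >= 3 && x < img.width - 3 && y < img.height - 3);
    const int ip = img.at(x, y);
    int state[16];  // +1 brighter, -1 darker, 0 similar
    for (int i = 0; i < 16; ++i) {
        const int v = img.at(x + kCircle[i][0], y + kCircle[i][1]);
        state[i] = (v > ip + threshold) ? 1 : (v < ip - threshold) ? -1 : 0;
    }
    // Walk the circle twice so that runs wrapping past index 15 are counted.
    int run = 0;
    int prev = 0;
    for (int i = 0; i < 32; ++i) {
        const int s = state[i % 16];
        run = (s != 0 && s == prev) ? run + 1 : (s != 0 ? 1 : 0);
        prev = s;
        if (run >= n) return true;
    }
    return false;
}
```

A full detector would call `isFastCorner` for every pixel away from the border and collect the positions that pass.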

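In FAST-12, a pre-test can be added for efficiency to quickly reject the vast majority of pixels that are not corners. For each pixel, check only the brightness of pixels 1, 5, 9, and 13 on the circle. The pixel can be a corner only if at least 3 of these 4 are all greater than $I_p + T$ or all less than $I_p - T$; otherwise it is rejected immediately. This pre-test speeds up corner detection considerably. In addition, raw FAST corners tend to cluster. After the first detection pass, non-maximal suppression is therefore applied to keep only the corner with the maximal response within a given region, which avoids clustered corners.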
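The pre-test works because any 12 contiguous pixels on a 16-pixel circle must include at least 3 of the 4 pixels numbered 1, 5, 9, and 13. A minimal sketch, assuming a row-major 8-bit image stored in a `std::vector` (the function name `passesFast12PreTest` is made up for this illustration):

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Quick rejection test for FAST-12: look only at circle pixels 1, 5, 9 and 13
// (top, right, bottom, left at distance 3 from the center).
// img is a row-major 8-bit grayscale image with the given width.
bool passesFast12PreTest(const std::vector<std::uint8_t>& img, int width,
                         int x, int y, int threshold) {
    assert(x >= 3 && y >= 3 && x + 3 < width);
    static const int kOffsets[4][2] = {{0, -3}, {3, 0}, {0, 3}, {-3, 0}};
    const int ip = img[y * width + x];
    int brighter = 0;
    int darker = 0;
    for (const auto& o : kOffsets) {
        const int v = img[(y + o[1]) * width + (x + o[0])];
        if (v > ip + threshold) {
            ++brighter;
        } else if (v < ip - threshold) {
            ++darker;
        }
    }
    // A FAST-12 corner needs at least 3 of the 4 on the same side.
    return brighter >= 3 || darker >= 3;
}
```

Pixels that fail this check can be skipped; only the survivors need the full 16-pixel test.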

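Because FAST only compares brightness differences between pixels, it is very fast, but it suffers from weak repeatability and uneven distribution. FAST corners also carry no orientation information, and because the circle radius is fixed at 3 they have a scale problem: something that looks like a corner from far away may no longer look like one up close. To address the lack of orientation and scale, ORB adds descriptions of scale and rotation. Scale invariance is achieved by building an image pyramid and detecting corners on every level of the pyramid; rotation is handled by the intensity centroid method.

Pyramids are a common tool in computer vision. The bottom level is the original image; each level up scales the image by a fixed factor, which produces images at different resolutions. A smaller image can be regarded as the scene viewed from farther away. A feature-matching algorithm can match across images on different levels, which achieves scale invariance.

Image source: link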
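To make the "fixed factor per level" idea concrete, the sketch below only computes the image size of each pyramid level; actual resampling is left out. The function name `pyramidSizes` is hypothetical, and the scale factor and level count are free parameters (OpenCV's ORB, for reference, defaults to a factor of 1.2 and 8 levels).

```cpp
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

// Returns the (width, height) of each pyramid level. Level 0 is the original
// image; each higher level is shrunk by the fixed factor `scale` (> 1).
std::vector<std::pair<int, int>> pyramidSizes(int width, int height,
                                              int levels, double scale) {
    assert(levels >= 1 && scale > 1.0);
    std::vector<std::pair<int, int>> sizes;
    double factor = 1.0;
    for (int i = 0; i < levels; ++i) {
        sizes.emplace_back(static_cast<int>(std::lround(width / factor)),
                           static_cast<int>(std::lround(height / factor)));
        factor *= scale;
    }
    return sizes;
}
```

For example, `pyramidSizes(640, 480, 3, 2.0)` yields the sizes 640x480, 320x240, and 160x120.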

For rotation, we compute the intensity centroid of the image patch around the feature point. The centroid is the center of the patch weighted by pixel intensity. The steps are:

1. In a small image patch $B$, define the moments of the patch as:

$$m_{pq}=\sum_{x, y \in B} x^{p} y^{q} I(x, y), \quad p, q=\{0,1\}$$

2. The centroid of the patch can be found from the moments:

$$C=\left(\frac{m_{10}}{m_{00}}, \frac{m_{01}}{m_{00}}\right)=\left(\frac{\sum x I(x, y)}{\sum I(x, y)}, \frac{\sum y I(x, y)}{\sum I(x, y)}\right)$$

3. Connect the geometric center $O$ of the patch to the centroid $C$ to obtain a direction vector $\overrightarrow{OC}$. The orientation of the feature point is then defined as:

$$\theta=\arctan \left(\frac{m_{01}}{m_{10}}\right)=\arctan \left(\frac{\sum y I(x, y)}{\sum x I(x, y)}\right)$$

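With these steps, FAST corners gain a description of scale and rotation, which greatly improves the robustness of their representation across different images. In ORB, this improved FAST is called Oriented FAST.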
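The intensity centroid computation can be sketched as below. Two assumptions are made for illustration: the patch is a square of side `2 * half + 1` rather than whatever shape a real ORB implementation uses, and `std::atan2` replaces the plain arctangent so that the angle covers the full range and $m_{10} = 0$ causes no division by zero. The function name `patchOrientation` is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Orientation (in radians) of a square patch centered at (cx, cy).
// Coordinates in the moments are measured relative to the patch center O,
// so the returned angle is the direction of the vector from O to C.
double patchOrientation(const std::vector<std::uint8_t>& img, int width,
                        int cx, int cy, int half) {
    assert(cx - half >= 0 && cy - half >= 0 && cx + half < width);
    double m10 = 0.0;  // sum of x * I(x, y)
    double m01 = 0.0;  // sum of y * I(x, y)
    for (int dy = -half; dy <= half; ++dy) {
        for (int dx = -half; dx <= half; ++dx) {
            const double intensity = img[(cy + dy) * width + (cx + dx)];
            m10 += dx * intensity;
            m01 += dy * intensity;
        }
    }
    // m00 cancels out of the ratio m01 / m10, so it is not needed here.
    return std::atan2(m01, m10);
}
```

With image coordinates (y pointing down), a patch whose only bright pixel lies to the right of the center gets an angle of 0, and one whose only bright pixel lies below the center gets $\pi/2$.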

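BRIEF descriptors

After extracting the Oriented FAST keypoints, we compute a descriptor for each one. ORB uses an improved BRIEF descriptor.

BRIEF is a binary descriptor: its vector consists of 0s and 1s, each encoding the intensity relationship between two random pixels near the keypoint (say $p$ and $q$): 1 if $p$ is greater than $q$, and 0 otherwise. Taking 128 such pairs of $p$ and $q$ yields a 128-dimensional vector of 0s and 1s. Because BRIEF compares randomly chosen points, it is very fast, and its binary form is compact to store, which suits real-time image matching. The original BRIEF descriptor is not rotation invariant, so features are easily lost when the image rotates. ORB, however, computes the keypoint orientation during the FAST extraction stage, so it can use that orientation to compute the rotated "Steered BRIEF" feature, which gives ORB descriptors good rotation invariance.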
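A minimal sketch of the steered comparison, under stated assumptions: the sampling pattern is supplied by the caller (real implementations use a fixed, pre-selected pattern), rotated offsets are rounded to the nearest pixel, and the names `PointPair` and `steeredBrief` are invented for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// One BRIEF test: a pair of pixel offsets (p, q) relative to the keypoint.
struct PointPair {
    int px, py, qx, qy;
};

// Computes a binary descriptor with one bit per pair. Each offset is rotated
// by `angle` (the keypoint orientation, in radians) before sampling.
// The caller must ensure every rotated offset stays inside the image.
std::vector<bool> steeredBrief(const std::vector<std::uint8_t>& img, int width,
                               int kx, int ky, double angle,
                               const std::vector<PointPair>& pattern) {
    const double c = std::cos(angle);
    const double s = std::sin(angle);
    auto sample = [&](int ox, int oy) {
        const int x = kx + static_cast<int>(std::lround(c * ox - s * oy));
        const int y = ky + static_cast<int>(std::lround(s * ox + c * oy));
        return img[y * width + x];
    };
    std::vector<bool> desc;
    desc.reserve(pattern.size());
    for (const PointPair& t : pattern) {
        // Bit is 1 if I(p) > I(q), otherwise 0.
        desc.push_back(sample(t.px, t.py) > sample(t.qx, t.qy));
    }
    return desc;
}
```

Passing `angle = 0` reproduces the unsteered BRIEF comparison, which makes it easy to see how rotating the pattern changes individual bits.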

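Because rotation and scale are taken into account, ORB still performs well under translation, rotation, and scaling. The combination of FAST and BRIEF is also very efficient, which makes ORB features popular in real-time SLAM. The figure below shows the result of extracting ORB features with OpenCV:

Image source: link

Next we look at how to match features between different images.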

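7.1.3 Feature Matching

Feature matching is a critical step in visual SLAM. It solves the data association problem in SLAM, that is, determining the correspondence between the landmarks seen now and the landmarks seen earlier. Accurately matching descriptors between two images, or between an image and the map, greatly reduces the burden on later pose estimation and optimization. However, because image features are local, mismatches are widespread and have long lacked an effective solution; they have become a major bottleneck for visual SLAM performance. The main reason is that scenes often contain large amounts of repetitive texture, which makes feature descriptors very similar.

Consider images at two moments. Suppose feature points $x_t^m,\ m=1,2,3,\dots,M$ are extracted from image $I_t$, and feature points $x_{t+1}^n,\ n=1,2,3,\dots,N$ are extracted from image $I_{t+1}$. How do we find the correspondence between the elements of these two sets? The simplest approach is brute-force matching: for each feature point $x_t^m$, measure the descriptor distance to every $x_{t+1}^n$, sort, and take the nearest one as the match. The descriptor distance expresses how similar two features are, and in practice different distance norms can be used. For floating-point descriptors, the Euclidean distance is used; for binary descriptors (such as BRIEF), the Hamming distance is the usual choice. The Hamming distance between two binary strings is the number of bit positions in which they differ.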
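Brute-force matching with the Hamming distance can be sketched with the standard library alone. The 256-bit descriptor length and the `Descriptor`, `Match`, `hammingDistance`, and `bruteForceMatch` names are assumptions made for this illustration; the sketch also tracks the minimum directly instead of sorting, which gives the same nearest neighbor.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <vector>

using Descriptor = std::bitset<256>;  // assumed descriptor length

struct Match {
    std::size_t query;     // index m into the first set
    std::size_t train;     // index n into the second set
    std::size_t distance;  // Hamming distance between the two descriptors
};

// Hamming distance: the number of bit positions where a and b differ.
std::size_t hammingDistance(const Descriptor& a, const Descriptor& b) {
    return (a ^ b).count();
}

// For each descriptor in `query`, finds the nearest descriptor in `train`.
// Cost is O(M * N) distance evaluations.
std::vector<Match> bruteForceMatch(const std::vector<Descriptor>& query,
                                   const std::vector<Descriptor>& train) {
    std::vector<Match> matches;
    if (train.empty()) return matches;
    for (std::size_t m = 0; m < query.size(); ++m) {
        Match best{m, 0, hammingDistance(query[m], train[0])};
        for (std::size_t n = 1; n < train.size(); ++n) {
            const std::size_t d = hammingDistance(query[m], train[n]);
            if (d < best.distance) best = Match{m, n, d};
        }
        matches.push_back(best);
    }
    return matches;
}
```

Note that every query descriptor receives a match, however poor, which is why a filtering step on the distance is normally applied afterwards.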

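When the number of feature points is large, brute-force matching becomes expensive, especially when matching a frame against a map, and it no longer meets the real-time requirement of SLAM. In that case the fast approximate nearest neighbor (FLANN) algorithm is better suited to very large numbers of matches. These matching algorithms are mature and already integrated into OpenCV.

7.2 Practice: Feature Extraction and Matching

OpenCV already integrates most mainstream image features.

7.2.1 ORB Features in OpenCV

Create a new folder and open VS Code in it.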

mkdir orb_cv

cd orb_cv

code .

//launch.json
{
    // Use IntelliSense to learn about possible attributes.
    // Hover to view descriptions of existing attributes.
    // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387
    "version": "0.2.0",
    "configurations": [
        {
            "name": "g++ - Build and debug active file",
            "type": "cppdbg",
            "request": "launch",
            "program": "${workspaceFolder}/build/orb_cv",
            "args": [],
            "stopAtEntry": false,
            "cwd": "${workspaceFolder}",
            "environment": [],
            "externalConsole": false,
            "MIMode": "gdb",
            "setupCommands": [
                {
                    "description": "Enable pretty-printing for gdb",
                    "text": "-enable-pretty-printing",
                    "ignoreFailures": true
                }
            ],
            "preLaunchTask": "Build",
            "miDebuggerPath": "/usr/bin/gdb"
        }
    ]
}

//tasks.json
{
    "version": "2.0.0",
    "options": {
        "cwd": "${workspaceFolder}/build" // folder in which the commands below are run
    },
    "tasks": [
        {
            "type": "shell",
            "label": "cmake", // the label is the task's name; this task is called cmake
            "command": "cmake", // the command to run; this task runs cmake
            "args": [
                ".."
            ]
        },
        {
            "label": "make", // this task is called make
            "group": {
                "kind": "build",
                "isDefault": true
            },
            "command": "make", // this task runs make
            "args": []
        },
        {
            "label": "Build",
            "dependsOrder": "sequence", // run the dependencies in the order listed
            "dependsOn": [ // this task depends on the two tasks above
                "cmake",
                "make"
            ]
        }
    ]
}

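#CMakeLists.txt
cmake_minimum_required(VERSION 3.0)
project(ORBCV)

# Extra g++ compile flags; -Wall, for example, prints additional warnings
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall")
# Append the standard flag rather than overwriting, so that -Wall is kept
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11")

# Build in Debug mode so the executable can be debugged; without this line,
# or with Release, the generated executable cannot be debugged
set(CMAKE_BUILD_TYPE Debug)

# This project uses OpenCV, so its headers and libraries must be added
# Find the OpenCV library
find_package(OpenCV REQUIRED)
# Add the header directories
include_directories(${OpenCV_INCLUDE_DIRS})

add_executable(orb_cv orb_cv.cpp)
# Link against OpenCV
target_link_libraries(orb_cv ${OpenCV_LIBS})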

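#include

Results:

The image showing all matches contains a large number of mismatches. After one round of filtering, far fewer matches remain, but most of them are correct.

The timings show that ORB extraction takes far longer than matching, so most of the time is spent on feature extraction.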
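The notes do not state which rule was used for the filtering step. One commonly used empirical rule, assumed here purely for illustration, keeps a match only if its descriptor distance is at most twice the smallest distance found, with a lower bound (30 in this sketch) so that a very small minimum does not reject everything. The `Match` struct and `filterMatches` function are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Match {
    int query;
    int train;
    double distance;
};

// Keeps matches whose distance is at most max(2 * minDistance, lowerBound).
// lowerBound is an empirical value (assumption) that guards against the case
// where the minimum distance is very small.
std::vector<Match> filterMatches(const std::vector<Match>& matches,
                                 double lowerBound = 30.0) {
    std::vector<Match> good;
    if (matches.empty()) return good;
    const double minDist =
        std::min_element(matches.begin(), matches.end(),
                         [](const Match& a, const Match& b) {
                             return a.distance < b.distance;
                         })
            ->distance;
    const double limit = std::max(2.0 * minDist, lowerBound);
    for (const Match& m : matches) {
        if (m.distance <= limit) good.push_back(m);
    }
    return good;
}
```

With distances 10, 25, and 80, the limit is max(20, 30) = 30, so the first two matches are kept and the third is discarded.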

7.2.2 Hand-Written ORB Features

//launch.json
{
    // Use IntelliSense to learn about possible attributes.
    // Hover to view descriptions of existing attributes.
    // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387
    "version": "0.2.0",
    "configurations": [
        {
            "name": "g++ - Build and debug active file",
            "type": "cppdbg",
            "request": "launch",
            "program": "${workspaceFolder}/build/orb_self",
            "args": [],
            "stopAtEntry": false,
            "cwd": "${workspaceFolder}",
            "environment": [],
            "externalConsole": false,
            "MIMode": "gdb",
            "setupCommands": [
                {
                    "description": "Enable pretty-printing for gdb",
                    "text": "-enable-pretty-printing",
                    "ignoreFailures": true
                }
            ],
            "preLaunchTask": "Build",
            "miDebuggerPath": "/usr/bin/gdb"
        }
    ]
}

//tasks.json
{
    "version": "2.0.0",
    "options": {
        "cwd": "${workspaceFolder}/build" // folder in which the commands below are run
    },
    "tasks": [
        {
            "type": "shell",
            "label": "cmake", // the label is the task's name; this task is called cmake
            "command": "cmake", // the command to run; this task runs cmake
            "args": [
                ".."
            ]
        },
        {
            "label": "make", // this task is called make
            "group": {
                "kind": "build",
                "isDefault": true
            },
            "command": "make", // this task runs make
            "args": []
        },
        {
            "label": "Build",
            "dependsOrder": "sequence", // run the dependencies in the order listed
            "dependsOn": [ // this task depends on the two tasks above
                "cmake",
                "make"
            ]
        }
    ]
}

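#CMakeLists.txt
cmake_minimum_required(VERSION 3.0)
project(ORBCV)

# Extra g++ compile flags; -Wall, for example, prints additional warnings
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall")
# Append the standard and FMA flags rather than overwriting, so that -Wall is kept
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++14 -mfma")

# Build in Debug mode so the executable can be debugged; without this line,
# or with Release, the generated executable cannot be debugged
set(CMAKE_BUILD_TYPE Debug)

# This project uses OpenCV, so its headers and libraries must be added
# Find the OpenCV library
find_package(OpenCV REQUIRED)
# Add the header directories
include_directories(${OpenCV_INCLUDE_DIRS})

add_executable(orb_self orb_self.cpp)
# Link against OpenCV
target_link_libraries(orb_self ${OpenCV_LIBS})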

#include