C++ Lock-Free Programming: Lock-Free Stack


贺志国

2023.6.28

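A lock-free data structure allows multiple threads to access it concurrently without errors. For example, a lock-free stack lets one thread push data while another thread pops data at the same time. Moreover, if the scheduler suspends one of the accessing threads partway through an operation, the other threads must still be able to complete their own work without waiting for the suspended thread.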

A major difficulty with a lock-free stack is allocating and freeing node memory correctly without locks, while avoiding both logic errors and crashes.

1. Implementation with the smart pointer std::shared_ptr

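The most naive idea is to manage nodes with smart pointers. If the platform's std::atomic_is_lock_free(&some_shared_ptr) returns true, all the memory reclamation problems simply disappear. (I tested on x86 and ARM platforms, and it returned false on both.) The example code (file name lock_free_stack.h) is as follows: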

#pragma once

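#include

The code above relies on std::shared_ptr<> to allocate and reclaim node memory, because std::shared_ptr<> has a built-in reference-counting mechanism. Unfortunately, std::shared_ptr<> can be used with atomic operations, but on most platforms those operations are not lock-free. The C++ standard library implements them with internal locks. This adds significant performance overhead and does not meet the needs of highly concurrent access.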
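Below is a minimal sketch of this shared_ptr-based approach. It assumes the C++11 atomic free functions for std::shared_ptr (std::atomic_load, std::atomic_compare_exchange_weak, std::atomic_store), which are deprecated in C++20. It follows the shape used in C++ Concurrency in Action, 2nd ed. The class name SharedPtrStack and its member names are hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <memory>

// Sketch: the head is a plain std::shared_ptr that is only ever touched
// through the C++11 atomic free functions for shared_ptr. The reference
// count inside shared_ptr frees nodes, so no manual reclamation is needed.
template <typename T>
class SharedPtrStack {
 public:
  void Push(const T& value) {
    auto new_node = std::make_shared<Node>(value);
    new_node->next = std::atomic_load(&head_);
    // On failure, new_node->next is refreshed with the current head_.
    while (!std::atomic_compare_exchange_weak(&head_, &new_node->next,
                                              new_node)) {
    }
  }

  std::shared_ptr<T> Pop() {
    std::shared_ptr<Node> old_head = std::atomic_load(&head_);
    // old_head keeps the node alive; next is read atomically because the
    // popping thread may reset it concurrently (see below).
    while (old_head &&
           !std::atomic_compare_exchange_weak(
               &head_, &old_head, std::atomic_load(&old_head->next))) {
    }
    if (!old_head) return nullptr;
    // Drop the link so the popped node no longer keeps the rest alive.
    std::atomic_store(&old_head->next, std::shared_ptr<Node>());
    return old_head->data;
  }

  // Whether the library implements these shared_ptr atomics without locks.
  bool IsLockFree() const { return std::atomic_is_lock_free(&head_); }

 private:
  struct Node {
    explicit Node(const T& value) : data(std::make_shared<T>(value)) {}
    std::shared_ptr<T> data;
    std::shared_ptr<Node> next;
  };

  std::shared_ptr<Node> head_;
};
```

Any thread still holding a shared_ptr to a popped node keeps that node alive, so deletion is always safe. Whether the stack is actually lock-free is exactly what IsLockFree() reports.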

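If the compiler supports C++20, std::atomic<std::shared_ptr<T>> lets you manipulate a std::shared_ptr atomically, handling the reference count correctly while guaranteeing atomicity. As with other atomic types, whether the implementation is lock-free is unspecified. The following example implements a lock-free stack with std::atomic<std::shared_ptr<T>> (file name lock_free_stack.h). It is lock-free on the surface; whether it is actually lock-free depends on whether std::atomic's is_lock_free() returns true: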

#pragma once

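#include

My compiler currently supports only C++17, so the code above fails to compile with the following error. The /usr/include/c++/9 paths show GCC 9's libstdc++. There, std::atomic has no specialization for std::shared_ptr and still requires a trivially copyable type: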

In file included from /home/zhiguohe/code/excercise/lock_freee/lock_free_stack_with_shared_ptr_cpp/lock_free_stack_with_shared_ptr.h:3,
from /home/zhiguohe/code/excercise/lock_freee/lock_free_stack_with_shared_ptr_cpp/lock_free_stack_with_shared_ptr.cpp:1:
/usr/include/c++/9/atomic: In instantiation of ‘struct std::atomic<std::shared_ptr<LockFreeStack<int>::Node> >’:
/home/zhiguohe/code/excercise/lock_freee/lock_free_stack_with_shared_ptr_cpp/lock_free_stack_with_shared_ptr.h:61:38: required from ‘class LockFreeStack<int>’
/home/zhiguohe/code/excercise/lock_freee/lock_free_stack_with_shared_ptr_cpp/lock_free_stack_with_shared_ptr.cpp:16:22: required from here
/usr/include/c++/9/atomic:191:21: error: static assertion failed: std::atomic requires a trivially copyable type
191 | static_assert(__is_trivially_copyable(_Tp),
| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~
make[2]: *** [CMakeFiles/lock_free_stack_with_shared_ptr_cpp.dir/build.make:63: CMakeFiles/lock_free_stack_with_shared_ptr_cpp.dir/lock_free_stack_with_shared_ptr.cpp.o] Error 1
make[1]: *** [CMakeFiles/Makefile2:644: CMakeFiles/lock_free_stack_with_shared_ptr_cpp.dir/all] Error 2
make: *** [Makefile:117: all] Error 2
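A small probe can check, on a given toolchain, whether either form is actually lock-free without tripping the static_assert above on older libraries. It selects the C++20 specialization only when the library advertises it through the standard feature-test macro __cpp_lib_atomic_shared_ptr; otherwise it queries the C++11 free function. Below is a sketch; the function name is hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <memory>

// Sketch: reports whether atomic operations on std::shared_ptr are lock-free
// with the current compiler and standard library.
bool SharedPtrAtomicsAreLockFree() {
#if defined(__cpp_lib_atomic_shared_ptr)
  // C++20 library support: std::atomic<std::shared_ptr<T>> specialization.
  std::atomic<std::shared_ptr<int>> head;
  return head.is_lock_free();
#else
  // Older libraries: the C++11 free-function interface (deprecated in C++20).
  std::shared_ptr<int> head;
  return std::atomic_is_lock_free(&head);
#endif
}
```

The result is implementation-specific. The author observed false on both x86 and ARM, so code should not assume true.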

2. Manual memory management: using a simple counter to tell whether any thread is calling Pop

2.1 Without relaxing memory orders

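If the compiler does not support C++20, we have to manage node allocation and reclamation by hand. One simple approach is to check whether any thread is currently executing Pop:

- If none is, all popped nodes are deleted.
- Otherwise, the popped node is added to a pending-deletion list, to_be_deleted_, which is freed later once no thread is in Pop.

The implementation is shown below (file name lock_free_stack.h). The example is adapted from C++ Concurrency in Action, 2nd ed., 2019, with bugs fixed: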

#pragma once

#include ();

    std::shared_ptr<T> res;
    if (old_head != nullptr) {
      // Caution: C++ Concurrency in Action increments threads_in_pop_ as the
      // very first statement of Pop(), before head_ is loaded. If the counter
      // is first incremented only here, the old_head->next read in the
      // compare-exchange loop is unprotected: another thread may already
      // have deleted old_head.
      ++threads_in_pop_;
      res.swap(old_head->data);
      // Reclaim deleted nodes.
      TryReclaim(old_head);
    }
    return res;
  }

  ~LockFreeStack() {
    while (Pop()) {
      // Do nothing; keep popping until the stack is empty.
    }
  }

 private:
  // Node must be defined before the private member functions below. Names
  // used in a member function declaration (such as the parameter type of
  // DeleteNodes) are looked up at that point; only member function bodies
  // see the complete class. Defining Node next to 'head_' therefore causes:
  //
  //   error: 'Node' has not been declared ...
  //
  // This is standard C++ name lookup, not a compiler bug.
  struct Node {
    // std::make_shared may throw (e.g., std::bad_alloc); if it does, the node
    // is never linked into the stack.
    Node(const T& input_data)
        : data(std::make_shared<T>(input_data)), next(nullptr) {}

    std::shared_ptr<T> data;
    Node* next;
  };

 private:
  static void DeleteNodes(Node* nodes) {
    while (nodes != nullptr) {
      Node* next = nodes->next;
      delete nodes;
      nodes = next;
    }
  }

  void ChainPendingNodes(Node* first, Node* last) {
    last->next = to_be_deleted_;
    // If last->next equals to_be_deleted_, set to_be_deleted_ to first and
    // return true. Otherwise, load the current to_be_deleted_ into
    // last->next and return false, so the loop retries.
    while (!to_be_deleted_.compare_exchange_weak(last->next, first)) {
      // Nothing to do: retry until to_be_deleted_ is updated to first.
    }
  }

  void ChainPendingNodes(Node* nodes) {
    Node* last = nodes;
    while (Node* next = last->next) {
      last = next;
    }
    ChainPendingNodes(nodes, last);
  }

  void ChainPendingNode(Node* n) { ChainPendingNodes(n, n); }

  void TryReclaim(Node* old_head) {
    if (old_head == nullptr) {
      return;
    }

    if (threads_in_pop_ == 1) {
      Node* nodes_to_delete = to_be_deleted_.exchange(nullptr);
      if (!--threads_in_pop_) {
        DeleteNodes(nodes_to_delete);
      } else if (nodes_to_delete) {
        ChainPendingNodes(nodes_to_delete);
      }
      delete old_head;
    } else {
      ChainPendingNode(old_head);
      --threads_in_pop_;
    }
  }

 private:
  std::atomic<Node*> head_;
  std::atomic<Node*> to_be_deleted_;
  std::atomic<unsigned> threads_in_pop_;
};

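The code above reclaims node memory by counting the threads inside Pop(). This works well while the stack is lightly loaded. However, deleting nodes is time-consuming, and we want other threads to modify the list as little as possible. A long time may pass between first seeing threads_in_pop_ == 1 and actually deleting the nodes. That gives other threads a chance to call Pop(), making threads_in_pop_ nonzero and preventing the deletion. Under high load there may never be a moment when no thread is inside Pop(). The pending list to_be_deleted_ can then grow without bound, and memory leaks again.

An alternative is to determine that no thread is accessing a particular node, so that node can be reclaimed. The simplest mechanisms for this are hazard pointers and reference counting, covered in the following sections.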
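Below is a self-contained sketch of the counter-based scheme described in this section, after C++ Concurrency in Action, 2nd ed. The class name CountingStack is hypothetical. One detail differs from the tail of the listing above: threads_in_pop_ is incremented as the first statement of Pop(), before head_ is loaded. This matters because the compare-exchange loop dereferences old_head->next, and a node must not be deleted while a thread may still be doing so.

```cpp
#include <atomic>
#include <cassert>
#include <memory>

template <typename T>
class CountingStack {
 public:
  ~CountingStack() {
    while (Pop()) {
    }
  }

  void Push(const T& value) {
    Node* node = new Node(value);
    node->next = head_.load();
    while (!head_.compare_exchange_weak(node->next, node)) {
    }
  }

  std::shared_ptr<T> Pop() {
    ++threads_in_pop_;  // Announce ourselves before touching any node.
    Node* old_head = head_.load();
    while (old_head &&
           !head_.compare_exchange_weak(old_head, old_head->next)) {
    }
    std::shared_ptr<T> res;
    if (old_head) res.swap(old_head->data);
    TryReclaim(old_head);  // Also decrements threads_in_pop_.
    return res;
  }

 private:
  struct Node {
    explicit Node(const T& value) : data(std::make_shared<T>(value)) {}
    std::shared_ptr<T> data;
    Node* next = nullptr;
  };

  static void DeleteNodes(Node* nodes) {
    while (nodes) {
      Node* next = nodes->next;
      delete nodes;
      nodes = next;
    }
  }

  // Prepends the chain first..last to the pending-deletion list.
  void ChainPendingNodes(Node* first, Node* last) {
    last->next = to_be_deleted_.load();
    while (!to_be_deleted_.compare_exchange_weak(last->next, first)) {
    }
  }

  void TryReclaim(Node* old_head) {
    if (threads_in_pop_ == 1) {
      // We were the only thread in Pop(): claim the whole pending list.
      Node* pending = to_be_deleted_.exchange(nullptr);
      if (--threads_in_pop_ == 0) {
        DeleteNodes(pending);  // Nobody else can still reference these.
      } else if (pending) {
        Node* last = pending;
        while (last->next) last = last->next;
        ChainPendingNodes(pending, last);  // Another thread arrived: put back.
      }
      delete old_head;  // Already unlinked, so later Pop() calls cannot reach it.
    } else {
      if (old_head) ChainPendingNodes(old_head, old_head);
      --threads_in_pop_;
    }
  }

  std::atomic<Node*> head_{nullptr};
  std::atomic<Node*> to_be_deleted_{nullptr};
  std::atomic<unsigned> threads_in_pop_{0};
};
```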

2.2 Relaxing memory orders

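None of the atomic operations in the code above specify a memory order, so they all use the default std::memory_order_seq_cst (sequential consistency). Sequential consistency is much easier to reason about than the other orders. All seq_cst operations appear in a single total order consistent with each thread's program order, which matches ordinary intuition, but this can cost more.

Any lock-free data structure should start out with std::memory_order_seq_cst. Consider relaxing orders only once the basic operations work correctly. Relaxed orderings are hard to get right on every platform, so unless performance really is the bottleneck there is no need to relax them. Only when chasing maximum performance should you relax some of them.

In practice the choice matters mostly on weakly ordered platforms such as ARM, which are common in embedded systems. On x86, ordinary loads and stores already have acquire and release semantics. Only seq_cst stores need an extra fence (or an xchg instruction), so relaxing orders gains little there.

The basic rules are:

- If an atomic operation need not synchronize with other operations, use std::memory_order_relaxed.
- Stores (writes) generally use std::memory_order_release.
- Loads (reads) generally use std::memory_order_acquire.

Relaxed orderings require rigorous testing, especially on embedded platforms. If you are unsure and the performance bottleneck is not severe, omit all memory-order arguments and use the default std::memory_order_seq_cst. The following reference code with relaxed memory orders (file name lock_free_stack.h) is not guaranteed to be correct on every platform: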

#pragma once

#include ();

    std::shared_ptr<T> res;
    if (old_head != nullptr) {
      threads_in_pop_.fetch_add(1, std::memory_order_relaxed);
      res.swap(old_head->data);
      // Reclaim deleted nodes.
      TryReclaim(old_head);
    }
    return res;
  }

 private:
  // Node must be defined before the private member functions below. Names
  // used in a member function declaration (such as the parameter type of
  // DeleteNodes) are looked up at that point; only member function bodies
  // see the complete class. Defining Node next to 'head_' therefore causes:
  //
  //   error: 'Node' has not been declared ...
  //
  // This is standard C++ name lookup, not a compiler bug.
  struct Node {
    // std::make_shared may throw (e.g., std::bad_alloc); if it does, the node
    // is never linked into the stack.
    Node(const T& input_data)
        : data(std::make_shared<T>(input_data)), next(nullptr) {}

    std::shared_ptr<T> data;
    Node* next;
  };

 private:
  static void DeleteNodes(Node* nodes) {
    while (nodes != nullptr) {
      Node* next = nodes->next;
      delete nodes;
      nodes = next;
    }
  }

  void ChainPendingNodes(Node* first, Node* last) {
    last->next = to_be_deleted_.load(std::memory_order_relaxed);
    // If last->next equals to_be_deleted_, set to_be_deleted_ to first and
    // return true (release: publishes the chain, including last->next).
    // Otherwise, load the current to_be_deleted_ into last->next and return
    // false, so the loop retries.
    while (!to_be_deleted_.compare_exchange_weak(last->next, first,
                                                 std::memory_order_release,
                                                 std::memory_order_relaxed)) {
      // Nothing to do: retry until to_be_deleted_ is updated to first.
    }
  }

  void ChainPendingNodes(Node* nodes) {
    Node* last = nodes;
    while (Node* next = last->next) {
      last = next;
    }
    ChainPendingNodes(nodes, last);
  }

  void ChainPendingNode(Node* n) { ChainPendingNodes(n, n); }

  void TryReclaim(Node* old_head) {
    if (old_head == nullptr) {
      return;
    }

    if (threads_in_pop_ == 1) {
      // Acquire (not relaxed): pairs with the release CAS in
      // ChainPendingNodes(); otherwise reading the next pointers in
      // DeleteNodes() would be a data race.
      Node* nodes_to_delete =
          to_be_deleted_.exchange(nullptr, std::memory_order_acquire);
      if (!--threads_in_pop_) {
        DeleteNodes(nodes_to_delete);
      } else if (nodes_to_delete) {
        ChainPendingNodes(nodes_to_delete);
      }
      delete old_head;
    } else {
      ChainPendingNode(old_head);
      threads_in_pop_.fetch_sub(1, std::memory_order_relaxed);
    }
  }

 private:
  std::atomic<Node*> head_;
  std::atomic<Node*> to_be_deleted_;
  std::atomic<unsigned> threads_in_pop_;
};
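The release/acquire rule of thumb above is what the pending list relies on. Producers publish nodes with a release compare-exchange, and the reclaiming thread takes the whole list with an acquire exchange before walking its next pointers. Below is a standalone sketch of that pairing; the names PendingNode, PendingList and SumAfterConcurrentAdds are hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

struct PendingNode {
  int value;
  PendingNode* next;
};

class PendingList {
 public:
  void Add(PendingNode* node) {
    node->next = head_.load(std::memory_order_relaxed);
    // Release on success publishes node->value and node->next.
    while (!head_.compare_exchange_weak(node->next, node,
                                        std::memory_order_release,
                                        std::memory_order_relaxed)) {
    }
  }

  // Each release CAS in Add() heads a release sequence that later
  // read-modify-writes on head_ continue, so this acquire exchange
  // synchronizes with every Add() whose node it takes.
  PendingNode* TakeAll() {
    return head_.exchange(nullptr, std::memory_order_acquire);
  }

 private:
  std::atomic<PendingNode*> head_{nullptr};
};

// Adds values 1..per_thread from each of `threads` threads, then takes the
// whole list, frees it, and returns the sum of the values found.
long SumAfterConcurrentAdds(int threads, int per_thread) {
  PendingList list;
  std::vector<std::thread> workers;
  for (int t = 0; t < threads; ++t) {
    workers.emplace_back([&list, per_thread] {
      for (int i = 1; i <= per_thread; ++i) list.Add(new PendingNode{i, nullptr});
    });
  }
  for (auto& w : workers) w.join();
  long sum = 0;
  PendingNode* node = list.TakeAll();
  while (node) {
    PendingNode* next = node->next;
    sum += node->value;
    delete node;
    node = next;
  }
  return sum;
}
```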

3. Manual memory management: using hazard pointers to mark objects in use

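Hazard pointers get their name because deleting a node that other threads may still reference puts those threads in danger. If another thread dereferences a pointer to a deleted node, the behavior is undefined.

The scheme works as follows:

- Before accessing an object that another thread might delete, a thread sets a hazard pointer to reference it. This tells other threads that deleting the object would be dangerous.
- When the object is no longer needed, the thread clears the hazard pointer.
- A thread that wants to delete an object must first check whether any other thread holds a hazard pointer to it. If none does, the object can be deleted safely; otherwise deletion must wait until those hazard pointers are cleared.

Threads therefore need to check periodically whether the objects awaiting deletion can now be deleted. The implementation is shown below (file name lock_free_stack.h). The example is adapted from C++ Concurrency in Action, 2nd ed., 2019, with bugs fixed: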

#pragma once

#include

Note that the compare-exchange operations we used earlier were compare_exchange_weak, whereas the following code uses compare_exchange_strong:

do {
  Node* temp = nullptr;
  do {
    temp = old_head;
    hp.store(old_head);
    old_head = head_.load();
  } while (old_head != temp);
} while (old_head != nullptr &&
         !head_.compare_exchange_strong(old_head, old_head->next));

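compare_exchange_weak is cheap per attempt, but it may fail spuriously: it can report failure even though the stored value equals the expected one. This happens on load-linked/store-conditional architectures such as ARM, for example when an interrupt or context switch occurs between the two instructions. compare_exchange_strong never fails spuriously. On such architectures, however, it is implemented with an internal retry loop, so each call can cost more. (On x86 the two are identical.)

The rule of thumb is:

- If the loop body does nothing, or very little, use compare_exchange_weak.
- If the loop body is expensive, use compare_exchange_strong.

In the code above, the outer loop contains an inner loop of three statements that stores the hazard pointer and reloads head_. That work is relatively expensive. Redoing it after a spurious failure of compare_exchange_weak would cost more than the extra comparison in compare_exchange_strong, so compare_exchange_strong is used. If this is still hard to judge, measure both versions repeatedly under heavy load and pick the better one.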
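Here is a small illustration of the "cheap loop body" case, using a hypothetical helper FetchMax. The loop only recomputes a comparison, so a spurious failure of compare_exchange_weak costs one more trivial iteration.

```cpp
#include <atomic>
#include <cassert>

// Raises `target` to at least `value` and returns the value `target` held
// just before this call took effect. On failure, compare_exchange_weak loads
// the value actually stored into `current`, so the loop re-checks it.
int FetchMax(std::atomic<int>& target, int value) {
  int current = target.load();
  while (current < value && !target.compare_exchange_weak(current, value)) {
  }
  return current;
}
```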

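The following code:

template <typename T>
typename LockFreeStack<T>::HazardPointer
    LockFreeStack<T>::hazard_pointers_[kMaxHazardPointerNum];

defines the static member array hazard_pointers_[kMaxHazardPointerNum], i.e., it is the usual out-of-class initialization of a static member array. The syntax is rather ugly, but before C++17 it is the only way to write it. Since C++17, a static data member can instead be declared inline and defined inside the class.

Reclaiming memory with hazard pointers is simple and does reclaim removed nodes safely, but it adds considerable overhead:

- Scanning the hazard pointer array means checking kMaxHazardPointerNum atomic variables, and this happens on every Pop() call.
- Atomic operations are relatively expensive, so Pop() becomes a performance bottleneck. It must scan the hazard pointers for the node it has just removed and also for every node on the pending reclamation list.
- With kMaxHazardPointerNum nodes on that list, each must be checked against kMaxHazardPointerNum stored hazard pointers, i.e., up to kMaxHazardPointerNum × kMaxHazardPointerNum atomic loads.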

4. Manual memory management: using reference counts to tell whether a node is no longer accessed

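Another way to decide whether a popped node can be deleted is to check whether any thread is still accessing it: if none is, delete it; otherwise wait. This is the same idea as a smart pointer's reference count.

Concretely, each node carries two reference counts, an internal count and an external count. Their sum is the total number of references to the node.

- The external count is stored together with the node pointer and is incremented every time a thread reads that pointer.
- When a thread finishes accessing the node, it decrements the internal count by 1.
- When the node (the pointer plus its external count) is removed from the stack so other threads can no longer reach it, the popping thread adds the external count minus 2 to the internal count and discards the external count. The 2 covers one reference because the stack no longer points to the node and one because this thread is done with it.
- Once the internal count reaches 0, no thread is accessing the node, and it can be deleted safely.

The implementation is shown below (file name lock_free_stack.h):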

4.1 Without considering memory order

#pragma once

#include

One point worth highlighting in the code above is that the reference-counted node pointer struct CountedNodePtr uses bit-fields:

struct CountedNodePtr {
  CountedNodePtr() : external_count(0), ptr(0) {}

  // The platform has spare bits in a pointer (for example, the address space
  // is only 48 bits while a pointer is 64 bits), so we can store the count in
  // those spare bits and fit everything back into a single machine word.
  uint16_t external_count : 16;
  uint64_t ptr : 48;
};

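The real type of ptr is Node*, yet here it is declared as a 48-bit bit-field of type uint64_t. Why? With mainstream compilers and platforms, atomic operations are lock-free in practice only for types of at most 8 bytes. (x86-64 does have a 16-byte compare-and-swap instruction, cmpxchg16b, but GCC, for example, does not report 16-byte std::atomic types as lock-free and routes them through libatomic.) So std::atomic's is_lock_free() returns true only when sizeof(CountedNodePtr) <= 8, and CountedNodePtr must fit in 8 bytes. That is where bit-fields come in.

On mainstream 64-bit platforms, user-space addresses fit in 48 bits. If they could be wider, for example with 5-level paging on x86-64 or 52-bit virtual addresses on AArch64, the bit-field widths must be redesigned; check your platform's documentation. So external_count gets 16 bits (maximum 65535) and ptr gets 48 bits, 64 bits (8 bytes) in total. GCC and Clang place both bit-fields in one 64-bit unit. std::atomic's is_lock_free() then returns true on mainstream platforms, making head_ a genuinely lock-free atomic variable.

To accommodate this change, reinterpret_cast is used in both directions: to convert ptr from uint64_t back to Node*, and to convert a Node* to uint64_t so it can be stored in ptr.

Two further notes:

- external_count is only ever incremented, never decremented. When no thread accesses the node anymore, external_count is simply discarded.
- internal_count is only ever decremented, never incremented, except that external_count - 2 is added to it when the two counts are merged.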
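Below is a sketch of the packing itself, with hypothetical helper names MakeCounted and GetNode and a placeholder Node type. It assumes user-space addresses fit in 48 bits. It also assumes the compiler places both bit-fields in one 64-bit unit, which GCC and Clang do. MSVC does not share storage between bit-fields of different underlying types, so the static_assert would fail there.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

struct Node {
  int value;  // Placeholder payload for this sketch.
};

struct CountedNodePtr {
  CountedNodePtr() : external_count(0), ptr(0) {}
  uint16_t external_count : 16;  // Up to 65535 outstanding references.
  uint64_t ptr : 48;             // Low 48 bits of a Node* (assumption).
};

static_assert(sizeof(CountedNodePtr) == 8, "must fit in one 64-bit word");

CountedNodePtr MakeCounted(Node* node, uint16_t count) {
  CountedNodePtr counted;
  counted.external_count = count;
  // Keeps only the low 48 bits; assumes the upper 16 bits are zero.
  counted.ptr = static_cast<uint64_t>(reinterpret_cast<std::uintptr_t>(node));
  return counted;
}

Node* GetNode(const CountedNodePtr& counted) {
  return reinterpret_cast<Node*>(static_cast<std::uintptr_t>(counted.ptr));
}
```

std::atomic<CountedNodePtr>::is_always_lock_free (C++17) can then confirm at compile time that no lock, and no libatomic, is involved.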

4.2 Taking memory order into account

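Before changing memory orders, we need to examine the dependencies between operations and then choose the best memory order for each relationship. To make sure this approach works, we have to look at it from the threads' point of view. The simplest view is one thread pushing a data item onto the stack and another thread then popping it. Three important pieces of data are involved: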

- The CountedNodePtr used to transfer the data: head_.
- The Node that head_ refers to.
- The data item that the node points to.

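The thread executing Push() first constructs the data item and the node, then sets head_. The thread executing Pop() first loads head_, then runs a compare-exchange loop to increment the reference count, and then reads the Node to obtain its next value.

next is a non-atomic object. To read it safely, the store (in the pushing thread) must happen before the load (in the popping thread). The only atomic operation in Push() is its compare_exchange_weak(), so that operation must establish the happens-before relationship between the two threads. It must therefore use std::memory_order_release or stronger on success. When compare_exchange_weak() fails, nothing changes and the loop simply continues, so std::memory_order_relaxed is sufficient on failure.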

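What about Pop()? To obtain the happens-before relationship, an operation with std::memory_order_acquire or stronger must occur before next is accessed. The atomic operation that yields the head_ value we dereference to read next is the compare_exchange_strong() in IncreaseHeadCount(), so it needs std::memory_order_acquire on success. As in Push(), if the exchange fails the loop simply continues, so std::memory_order_relaxed can be used on failure.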

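When compare_exchange_strong() succeeds, the value it read (including ptr) is held in old_counter. The store in Push() is a release operation and this compare_exchange_strong() is an acquire operation, so the store synchronizes with the load and a happens-before relationship is established. Consequently, the store of ptr in Push() happens before the access to ptr->next in Pop(), and these operations are entirely safe.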

The memory order of the initial head_.load() does not affect this analysis, so it can safely use std::memory_order_relaxed.

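Next comes the compare_exchange_strong() that sets head_ to old_head.ptr->next. Is anything needed here to guarantee data integrity in this thread? If the exchange succeeds, we access ptr->data. So the store to ptr->data in the Push() thread must happen before this load, and that is already guaranteed:

- The acquire operation in IncreaseHeadCount() synchronizes with the compare-exchange in the Push() thread.
- In the Push() thread, storing the data happens before storing head_.
- Calling IncreaseHeadCount() happens before loading ptr->data.

Therefore, even if the compare-exchange in Pop() uses std::memory_order_relaxed, these operations still work correctly. The only other change to ptr->data is the call to swap(), and no other thread can operate on the same node at that point; that is exactly what the compare-exchange guarantees.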

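When compare_exchange_strong() fails, the current value of head_ is written into old_head and the loop runs again. The std::memory_order_acquire in IncreaseHeadCount(), executed again in the next iteration, already provides the necessary ordering, so std::memory_order_relaxed is fine here as well.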

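What about other threads? Do we need stronger memory orders to keep them safe? No. head_ is modified only by compare-exchange operations, which are read-modify-write operations. They therefore form part of the release sequence headed by the compare-exchange in Push(). Even if many threads modify head_ at the same time, the compare_exchange_weak() in Push() still synchronizes with the compare_exchange_strong() in IncreaseHeadCount() that reads the stored value.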

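What remains are the fetch_add() operations that change the reference count.

- The thread that returns data from the node can proceed, because no other thread can have modified the node's data.
- Any thread that did not retrieve the data knows that another thread did modify it: the successful thread used swap() to extract the data item.
- To avoid a data race, the swap() must therefore happen before the delete.

A simple solution is to use std::memory_order_release for the fetch_add() in the successful-return branch and std::memory_order_acquire for the fetch_add() in the loop-again branch. That is overkill, though. Only one thread performs the delete (the one that brings the count to zero), so only that thread needs an acquire operation. Because fetch_add() is a read-modify-write operation and part of the release sequence, an extra load() can supply the acquire. When the loop-again branch decrements the count to zero, it reloads the count with std::memory_order_acquire to establish the required synchronization. The fetch_add() itself can then use std::memory_order_relaxed.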
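Below is a compact sketch of how these orderings fit together in a split-reference-count stack. It follows the approach in C++ Concurrency in Action, 2nd ed. The class name SplitCountStack and the helpers Pack and GetNode are hypothetical. It uses the 8-byte bit-field CountedNodePtr so that std::atomic can be lock-free without libatomic. It assumes 48-bit user-space addresses and that a node's external count never exceeds 65535 while the node is at the head.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <memory>

template <typename T>
class SplitCountStack {
 public:
  ~SplitCountStack() {
    while (Pop()) {
    }
  }

  void Push(const T& value) {
    CountedNodePtr new_node = Pack(new Node(value), 1);  // 1 = the list's ref.
    Node* node = GetNode(new_node);
    node->next = head_.load(std::memory_order_relaxed);
    // Release on success publishes *node (data and next) to Pop().
    while (!head_.compare_exchange_weak(node->next, new_node,
                                        std::memory_order_release,
                                        std::memory_order_relaxed)) {
    }
  }

  std::shared_ptr<T> Pop() {
    CountedNodePtr old_head = head_.load(std::memory_order_relaxed);
    for (;;) {
      IncreaseHeadCount(old_head);  // Acquire: node->next is now safe to read.
      Node* const node = GetNode(old_head);
      if (node == nullptr) return std::shared_ptr<T>();
      if (head_.compare_exchange_strong(old_head, node->next,
                                        std::memory_order_relaxed)) {
        std::shared_ptr<T> res;
        res.swap(node->data);
        // -1 for the list's reference, -1 for this thread's own reference.
        const int count_increase = old_head.external_count - 2;
        if (node->internal_count.fetch_add(count_increase,
                                           std::memory_order_release) ==
            -count_increase) {
          delete node;
        }
        return res;
      }
      if (node->internal_count.fetch_add(-1, std::memory_order_relaxed) == 1) {
        // Last reference: this acquire load synchronizes with the winner's
        // release fetch_add, so its swap() happens before the delete.
        node->internal_count.load(std::memory_order_acquire);
        delete node;
      }
    }
  }

 private:
  struct CountedNodePtr {
    CountedNodePtr() : external_count(0), ptr(0) {}
    uint16_t external_count : 16;
    uint64_t ptr : 48;
  };

  struct Node {
    explicit Node(const T& value) : data(std::make_shared<T>(value)) {}
    std::shared_ptr<T> data;
    std::atomic<int> internal_count{0};
    CountedNodePtr next;
  };

  static CountedNodePtr Pack(Node* node, uint16_t count) {
    CountedNodePtr counted;
    counted.external_count = count;
    counted.ptr = static_cast<uint64_t>(reinterpret_cast<std::uintptr_t>(node));
    return counted;
  }

  static Node* GetNode(const CountedNodePtr& counted) {
    return reinterpret_cast<Node*>(static_cast<std::uintptr_t>(counted.ptr));
  }

  void IncreaseHeadCount(CountedNodePtr& old_counter) {
    CountedNodePtr new_counter;
    do {
      new_counter = old_counter;
      ++new_counter.external_count;
    } while (!head_.compare_exchange_strong(old_counter, new_counter,
                                            std::memory_order_acquire,
                                            std::memory_order_relaxed));
    old_counter.external_count = new_counter.external_count;
  }

  std::atomic<CountedNodePtr> head_{CountedNodePtr()};
};
```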

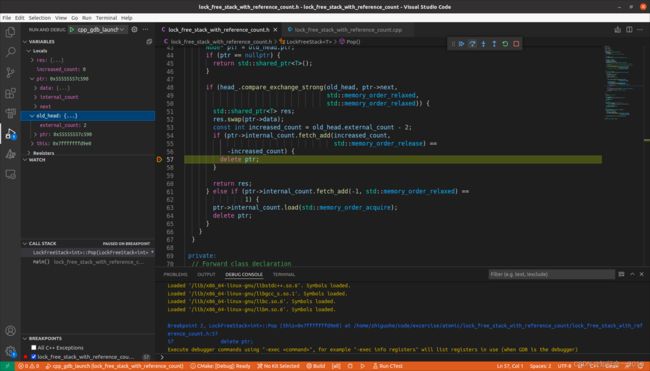

The complete code with relaxed memory orders (file name lock_free_stack.h) is shown below; it is not guaranteed to be correct on every platform:

#pragma once

#include

5. Test code

The following simple test code checks whether the lock-free stack works correctly (file name lock_free_stack.cpp):

#include "lock_free_stack.h"

#include

The CMake build configuration file CMakeLists.txt:

cmake_minimum_required(VERSION 3.0.0)
project(lock_free_stack VERSION 0.1.0)

set(CMAKE_CXX_STANDARD 17)
# Without the Debug build type, the program has no debugging information.
SET(CMAKE_BUILD_TYPE "Debug")

add_executable(${PROJECT_NAME} ${PROJECT_NAME}.cpp)

find_package(Threads REQUIRED)
# Link libatomic (uncomment the line below) when std::atomic is used with
# 16-byte types; otherwise link errors like the following occur:
# /usr/include/c++/9/atomic:254: undefined reference to `__atomic_load_16'
# /usr/include/c++/9/atomic:292: undefined reference to `__atomic_compare_exchange_16'
# target_link_libraries(${PROJECT_NAME} ${CMAKE_THREAD_LIBS_INIT} atomic)
target_link_libraries(${PROJECT_NAME} ${CMAKE_THREAD_LIBS_INIT})

include(CTest)
enable_testing()

set(CPACK_PROJECT_NAME ${PROJECT_NAME})
set(CPACK_PROJECT_VERSION ${PROJECT_VERSION})
include(CPack)

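The configuration above keeps a link to the atomic library libatomic, commented out. The first version of the implementation needed it. In that version, the reference-counted struct CountedNodePtr had two plain data members, int external_count; and Node* ptr;, occupying 16 bytes (4 bytes, 4 bytes of padding, and 8 bytes). Atomic operations on a 16-byte structure require linking libatomic; otherwise the following link errors occur. The current version uses bit-fields, so the link is no longer needed: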

/usr/include/c++/9/atomic:254: undefined reference to `__atomic_load_16'
/usr/include/c++/9/atomic:292: undefined reference to `__atomic_compare_exchange_16'

VSCode debug launch configuration file .vscode/launch.json:

{
  "version": "0.2.0",
  "configurations": [
    {
      "name": "cpp_gdb_launch",
      "type": "cppdbg",
      "request": "launch",
      "program": "${workspaceFolder}/build/${workspaceFolderBasename}",
      "args": [],
      "stopAtEntry": false,
      "cwd": "${fileDirname}",
      "environment": [],
      "externalConsole": false,
      "MIMode": "gdb",
      "setupCommands": [
        {
          "description": "Enable neat printing for gdb",
          "text": "-enable-pretty-printing",
          "ignoreFailures": true
        }
      ],
      // "preLaunchTask": "cpp_build_task",
      "miDebuggerPath": "/usr/bin/gdb"
    }
  ]
}

Build commands using CMake:

cd lock_free_stack
# Only needed the first time
mkdir build
cd build
cmake .. && make

The output is as follows:

./lock_free_stack
The data 0 is pushed in the stack.
The data 1 is pushed in the stack.
The data 2 is pushed in the stack.
The data 3 is pushed in the stack.
The data 4 is pushed in the stack.
The data 5 is pushed in the stack.
The data 6 is pushed in the stack.
The data 7 is pushed in the stack.
The data 8 is pushed in the stack.
The data 9 is pushed in the stack.
stack.IsEmpty() == false
Current data is : 9
Current data is : 8
Current data is : 7
Current data is : 6
Current data is : 5
Current data is : 4
Current data is : 3
Current data is : 2
Current data is : 1
Current data is : 0