[Kubernetes series] An analysis of flannel's three common backend modes

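Overview

Most of us first meet flannel through Kubernetes. It is simple to use, so few people dig into how it works at first. Sooner or later, though, strange network problems appear, and that is when a deeper understanding of flannel pays off.

Flannel is an open-source overlay CNI network plugin from CoreOS. It runs a flanneld agent daemon on every cluster node and assigns each node (host) a subnet. It gives the pods on that node IP addresses from that subnet, which builds a virtual network across all nodes so that pods can communicate across hosts. The backend decides how packets are forwarded between nodes. The common backends are UDP, VXLAN and host-gw; there are a few others that you can explore on your own.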
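The per-node subnet idea can be illustrated by carving /24 node subnets out of the 10.244.0.0/16 cluster network with Python's `ipaddress` module. This is a minimal sketch. It assumes flannel's default `SubnetLen` of 24 and hands subnets out in order. Real flanneld lease handling, or the Kubernetes `podCIDR` allocation used with `--kube-subnet-mgr`, is more involved, so `allocate_node_subnets` is a hypothetical helper. The node order is chosen only so the result matches the subnets seen later in this article.

```python
import ipaddress
from itertools import islice


def allocate_node_subnets(network: str, nodes: list[str], subnet_len: int = 24) -> dict:
    """Hypothetical helper: give each node its own /subnet_len slice of the cluster network."""
    net = ipaddress.ip_network(network)
    # subnets() yields the blocks in ascending order: 10.244.0.0/24, 10.244.1.0/24, ...
    blocks = net.subnets(new_prefix=subnet_len)
    return dict(zip(nodes, islice(blocks, len(nodes))))


leases = allocate_node_subnets("10.244.0.0/16", ["k8s-m2", "k8s-m3", "k8s-m1"])
for node, subnet in leases.items():
    print(node, subnet)
```

A /16 network split into /24s gives at most 256 node subnets. The cluster network therefore bounds how many nodes flannel can serve.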

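Comparison of the three common modes

UDP mode: flanneld does encapsulation and decapsulation in user space through the flannel0 device. The kernel has no native support for this, the context-switch cost is high, and performance is very poor.

VXLAN mode: the flannel.1 device does encapsulation and decapsulation, and the kernel supports this natively. Performance is good, and the cluster can be made of hosts on different subnets.

host-gw mode: no intermediate device such as flannel.1 is needed. The host's IP is used directly as the next hop for each pod subnet. Performance is the best of the three.

Roughly speaking, host-gw loses about 10% of performance. VXLAN and other tunnel-based network solutions lose about 20% to 30%.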
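The MTU values in the transcripts below differ by mode: flannel0 at 1472 and flannel.1 at 1450, versus 1500 on the physical NIC. These values follow from the bytes each backend adds in front of the pod's packet. The arithmetic below assumes a 1500-byte underlay MTU, IPv4 headers without options and untagged Ethernet.

```python
# Header sizes in bytes (IPv4 without options, untagged Ethernet)
IPV4_HEADER = 20
UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET = 14

# Extra bytes each backend puts in front of the pod's original IP packet
OVERHEAD = {
    "udp": IPV4_HEADER + UDP_HEADER,  # flanneld wraps the raw IP packet in UDP
    "vxlan": IPV4_HEADER + UDP_HEADER + VXLAN_HEADER + INNER_ETHERNET,  # an L2 frame inside UDP
    "host-gw": 0,  # plain routing, no encapsulation
}


def pod_mtu(backend: str, underlay_mtu: int = 1500) -> int:
    """MTU left for pod traffic after the backend's encapsulation overhead."""
    return underlay_mtu - OVERHEAD[backend]


for backend in OVERHEAD:
    print(f"{backend:8s} overhead={OVERHEAD[backend]:2d} pod MTU={pod_mtu(backend)}")
```

These header bytes explain the MTUs, but not most of the performance gap. That gap comes mainly from where the work is done, in user space or in the kernel.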

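Testing the three common modes

UDP mode

The flannel documentation says: Use UDP only for debugging if your network and kernel prevent you from using VXLAN or host-gw.

A node using the udp backend creates a TUN (tunnel) device named flannel0. In Linux, a TUN device is a virtual network device that works at layer 3 (the network layer). Its job is simple: it passes IP packets between the operating system kernel and a user-space application. Every packet goes through the flannel0 TUN device, so sending a single IP packet already takes three crossings between user space and kernel space. Context switches on Linux are fairly expensive, so performance is very poor. VXLAN mode also carries traffic over UDP, but it processes packets entirely in the kernel and performs far better than UDP mode.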

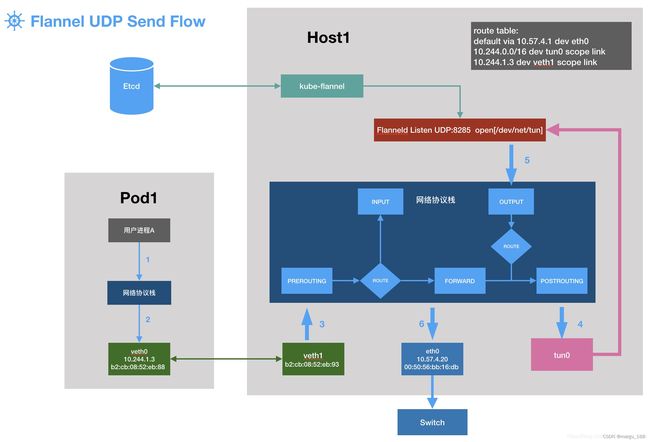

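In short, in UDP mode flanneld wraps each packet in an extra UDP layer before it goes onto the physical network, and sends the original packet to the peer as the payload. When the peer receives the UDP datagram, its flanneld unwraps it to recover the original packet and forwards it to the final destination, as shown in the figure above.

At the core of UDP mode is the TUN/TAP virtual network device. One end is attached to the kernel protocol stack and the other to a user-space program, which can then send and receive packets as if reading and writing a file. TUN and TAP work the same way. The difference is that TUN emulates a layer-3 device and carries IP packets, while TAP emulates a layer-2 device and carries Ethernet frames.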
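The per-packet decision flanneld makes in UDP mode can be sketched as pure data handling. It reads the destination IP of the raw IPv4 packet that came out of flannel0 and finds which node owns that /24. It then sends the whole packet as the payload of a UDP datagram to that node's port 8285. The lease table and the function names are hypothetical. Real flanneld also handles the receive direction and lease updates, and does the socket and TUN I/O that this sketch leaves out.

```python
import ipaddress
import struct

FLANNEL_UDP_PORT = 8285  # default port of flannel's udp backend

# Hypothetical lease table: pod subnet -> IP of the node that owns it
LEASES = {
    ipaddress.ip_network("10.244.0.0/24"): "192.168.2.141",
    ipaddress.ip_network("10.244.1.0/24"): "192.168.2.142",
}


def ipv4_destination(packet: bytes) -> ipaddress.IPv4Address:
    """Read the destination address (bytes 16-19) of a raw IPv4 packet, as a TUN device delivers it."""
    if len(packet) < 20 or packet[0] >> 4 != 4:
        raise ValueError("not an IPv4 packet")
    return ipaddress.IPv4Address(packet[16:20])


def encapsulate(packet: bytes) -> tuple[str, int, bytes]:
    """Return (peer host, UDP port, UDP payload); the payload is the untouched pod packet."""
    dst = ipv4_destination(packet)
    for subnet, host_ip in LEASES.items():
        if dst in subnet:
            return host_ip, FLANNEL_UDP_PORT, packet
    raise LookupError(f"no lease covers {dst}")


def make_ipv4_header(src: str, dst: str) -> bytes:
    """Minimal 20-byte IPv4 header (checksum left at 0), only used to exercise the sketch."""
    return struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 20, 0, 0, 64, 1, 0,  # version/IHL, TOS, total length, id, flags/frag, TTL, proto=ICMP, checksum
        ipaddress.IPv4Address(src).packed,
        ipaddress.IPv4Address(dst).packed,
    )


pkt = make_ipv4_header("10.244.2.2", "10.244.0.2")
host, port, payload = encapsulate(pkt)
print(host, port, len(payload))  # the datagram would then leave through the host NIC
```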

Deploying UDP mode

[root@k8s-m1 k8s-total]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

#The main changes are the Type and securityContext.privileged set to true. A Port can be given after Type (default 8285). If securityContext.privileged is not changed, flanneld fails with the error: open /dev/net/tun: no such file or directory

[root@k8s-m1 k8s-total]# cat kube-flannel.yml

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "udp"

}

}

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN", "NET_RAW"]
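The backend is chosen only through the `Backend` object in `net-conf.json`. A small generator therefore shows clearly how the modes differ. This is a sketch. The option names `Port` (udp and vxlan) and `VNI` (vxlan) are taken from flannel's backend documentation, while `build_net_conf` itself is hypothetical. Check the documentation for your flannel version before relying on any other keys.

```python
import json

# Options accepted per backend in this sketch (a subset of flannel's documented keys)
ALLOWED_OPTIONS = {
    "udp": {"Port"},           # defaults to 8285 when omitted
    "vxlan": {"VNI", "Port"},  # VNI defaults to 1; Port defaults to the kernel's 8472 on Linux
    "host-gw": set(),          # plain routes, nothing to tune here
}


def build_net_conf(network: str, backend: str, **options: int) -> str:
    """Render the net-conf.json text that goes into the kube-flannel-cfg ConfigMap."""
    if backend not in ALLOWED_OPTIONS:
        raise ValueError(f"unknown backend {backend!r}")
    unknown = set(options) - ALLOWED_OPTIONS[backend]
    if unknown:
        raise ValueError(f"{backend} does not take {sorted(unknown)}")
    return json.dumps({"Network": network, "Backend": {"Type": backend, **options}}, indent=2)


print(build_net_conf("10.244.0.0/16", "udp", Port=8285))
```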

#In udp mode a flannel0 interface is created

[root@k8s-m1 k8s-total]# ip a

33: flannel0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1472 qdisc pfifo_fast state UNKNOWN group default qlen 500

link/none

inet 10.244.2.0/32 scope global flannel0

valid_lft forever preferred_lft forever

[root@k8s-m1 k8s-total]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.2.254 0.0.0.0 UG 0 0 0 ens32

10.244.0.0 0.0.0.0 255.255.0.0 U 0 0 0 flannel0

10.244.2.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 ens32

172.16.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.2.0 0.0.0.0 255.255.255.0 U 0 0 0 ens32

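#Traffic to the 10.244.0.0/16 network goes through flannel0

The table shows that traffic to the local pod subnet 10.244.2.0/24 goes through cni0. Pods on the same host reach each other from the container network through the cni0 bridge, which forwards the packet to the target container. Traffic to the rest of the pod network 10.244.0.0/16, that is, the other nodes' subnets, goes to the flannel0 device. The flanneld process encapsulates it there and forwards it to the UDP port on the target host.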
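Local pods stay on cni0 even though 10.244.0.0/16 also covers them. This is because the kernel picks the most specific (longest-prefix) matching route. The sketch below replays that lookup over the UDP-mode `route -n` table above using Python's `ipaddress`. It ignores metrics and only illustrates the longest-prefix rule.

```python
import ipaddress

# Routes copied from the `route -n` output above: (destination, gateway, interface)
ROUTES = [
    ("0.0.0.0/0", "192.168.2.254", "ens32"),
    ("10.244.0.0/16", "0.0.0.0", "flannel0"),
    ("10.244.2.0/24", "0.0.0.0", "cni0"),
    ("169.254.0.0/16", "0.0.0.0", "ens32"),
    ("172.16.0.0/16", "0.0.0.0", "docker0"),
    ("192.168.2.0/24", "0.0.0.0", "ens32"),
]


def lookup(dst: str) -> tuple[str, str]:
    """Return (gateway, interface) of the longest-prefix route that contains dst."""
    addr = ipaddress.ip_address(dst)
    candidates = [
        (ipaddress.ip_network(prefix), gw, dev)
        for prefix, gw, dev in ROUTES
        if addr in ipaddress.ip_network(prefix)
    ]
    _, gw, dev = max(candidates, key=lambda c: c[0].prefixlen)
    return gw, dev


print(lookup("10.244.2.3"))  # local pod  -> ('0.0.0.0', 'cni0')
print(lookup("10.244.0.2"))  # remote pod -> ('0.0.0.0', 'flannel0')
print(lookup("8.8.8.8"))     # elsewhere  -> ('192.168.2.254', 'ens32')
```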

Packet capture analysis in UDP mode

#Pick pods that are on different nodes

[root@k8s-m1 k8s-total]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

liveness-exec-pod 1/1 Running 0 18m 10.244.2.2 k8s-m1 <none> <none>

my-nginx-7ff446c4f4-6zk5c 1/1 Running 0 19m 10.244.0.2 k8s-m2 <none> <none>

my-nginx-7ff446c4f4-bslht 1/1 Running 0 17m 10.244.2.3 k8s-m1 <none> <none>

#Find the corresponding veth interface

[root@k8s-m1 k8s-total]# kubectl exec -it liveness-exec-pod -- /bin/sh

/ # cat /sys/class/net/eth0/iflink

28

#Ping a pod on another node

/ # ping 10.244.0.2

PING 10.244.0.2 (10.244.0.2): 56 data bytes

64 bytes from 10.244.0.2: seq=0 ttl=60 time=0.704 ms

64 bytes from 10.244.0.2: seq=1 ttl=60 time=0.464 ms

64 bytes from 10.244.0.2: seq=2 ttl=60 time=0.477 ms

64 bytes from 10.244.0.2: seq=3 ttl=60 time=0.490 ms

#Look at veth interface 28

[root@k8s-m1 k8s-total]# ip a

.....

28: vethfabdae20@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1472 qdisc noqueue master cni0 state UP group default

link/ether e2:c9:c1:f5:bf:59 brd ff:ff:ff:ff:ff:ff link-netnsid 5

.....
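This lookup works because the pod's eth0 reports, in `/sys/class/net/eth0/iflink`, the ifindex of its veth peer on the host. The manual `ip a` search can be sketched as a scan of each host interface's `ifindex` file. `find_iface_by_ifindex` is a hypothetical helper, and its `sys_net` parameter exists only so it can be pointed at a test directory.

```python
from pathlib import Path
from typing import Optional


def find_iface_by_ifindex(ifindex: int, sys_net: str = "/sys/class/net") -> Optional[str]:
    """Return the name of the host interface whose ifindex equals the pod's iflink value."""
    root = Path(sys_net)
    if not root.is_dir():
        return None  # not a Linux host, or a wrong path
    for iface_dir in root.iterdir():
        index_file = iface_dir / "ifindex"
        if index_file.is_file() and int(index_file.read_text().strip()) == ifindex:
            return iface_dir.name
    return None


# Run on the host: 28 is the value read from the pod's /sys/class/net/eth0/iflink
print(find_iface_by_ifindex(28))
```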

#Capture packets on interface 28

[root@k8s-m1 k8s-total]# tcpdump -i vethfabdae20 -p icmp -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on vethfabdae20, link-type EN10MB (Ethernet), capture size 262144 bytes

15:08:01.142468 IP 10.244.2.2 > 10.244.0.2: ICMP echo request, id 2091, seq 0, length 64

15:08:01.143012 IP 10.244.0.2 > 10.244.2.2: ICMP echo reply, id 2091, seq 0, length 64

15:08:02.142590 IP 10.244.2.2 > 10.244.0.2: ICMP echo request, id 2091, seq 1, length 64

15:08:02.142972 IP 10.244.0.2 > 10.244.2.2: ICMP echo reply, id 2091, seq 1, length 64

15:08:03.142709 IP 10.244.2.2 > 10.244.0.2: ICMP echo request, id 2091, seq 2, length 64

15:08:03.143117 IP 10.244.0.2 > 10.244.2.2: ICMP echo reply, id 2091, seq 2, length 64

15:08:04.142824 IP 10.244.2.2 > 10.244.0.2: ICMP echo request, id 2091, seq 3, length 64

15:08:04.143247 IP 10.244.0.2 > 10.244.2.2: ICMP echo reply, id 2091, seq 3, length 64

^C

8 packets captured

8 packets received by filter

0 packets dropped by kernel

#Capture packets on flannel0

[root@k8s-m1 k8s-total]# tcpdump -i flannel0 -p icmp -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on flannel0, link-type RAW (Raw IP), capture size 262144 bytes

15:08:14.144355 IP 10.244.2.2 > 10.244.0.2: ICMP echo request, id 2091, seq 13, length 64

15:08:14.144825 IP 10.244.0.2 > 10.244.2.2: ICMP echo reply, id 2091, seq 13, length 64

15:08:15.144557 IP 10.244.2.2 > 10.244.0.2: ICMP echo request, id 2091, seq 14, length 64

15:08:15.144951 IP 10.244.0.2 > 10.244.2.2: ICMP echo reply, id 2091, seq 14, length 64

15:08:15.621785 IP 10.244.0.0 > 10.244.2.0: ICMP host 10.244.0.12 unreachable, length 68

15:08:15.621889 IP 10.244.0.0 > 10.244.2.0: ICMP host 10.244.0.12 unreachable, length 68

15:08:15.621924 IP 10.244.0.0 > 10.244.2.0: ICMP host 10.244.0.12 unreachable, length 68

^C

7 packets captured

7 packets received by filter

0 packets dropped by kernel

#Packets on the egress interface ens32

[root@k8s-m1 k8s-total]# tcpdump -i ens32 -p icmp -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on ens32, link-type EN10MB (Ethernet), capture size 262144 bytes

15:08:20.878531 IP 192.168.2.141 > 192.168.2.140: ICMP 192.168.2.141 protocol 112 unreachable, length 48

15:08:20.941755 IP 192.168.2.140 > 103.39.231.155: ICMP echo request, id 1, seq 42132, length 64

15:08:20.978561 IP 103.39.231.155 > 192.168.2.140: ICMP echo reply, id 1, seq 42132, length 64

15:08:21.679384 IP 192.168.2.141 > 192.168.2.140: ICMP host 192.168.2.141 unreachable, length 68

15:08:21.679429 IP 192.168.2.141 > 192.168.2.140: ICMP host 192.168.2.141 unreachable, length 68

^C

5 packets captured

5 packets received by filter

0 packets dropped by kernel

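Summary: in flannel's udp mode, a ping to a pod on another node takes this path: pod (container) -> cni0 -> flannel0 -> flanneld wraps the data in a UDP packet -> local host NIC -> peer host NIC -> flanneld recovers the original packet -> flannel0 -> cni0 -> target pod (container). The ICMP filter on ens32 shows no pod traffic at all, only unrelated host ICMP. This is because the pod packets leave the host inside UDP datagrams, and a filter such as `udp port 8285` would show them. Traffic on the peer host can be captured the same way.

Compared with two hosts talking directly, flannel's UDP mode adds processing by the flanneld process. This processing moves the data between user space and kernel space several times, as shown in the figure below:

First, the IP packet sent by the container process in user space enters kernel space through the cni0 bridge;

Second, the routing table sends the IP packet into the TUN device (flannel0), which hands it back to the flanneld process in user space;

Third, flanneld performs UDP encapsulation and the packet re-enters kernel space, where the UDP packet is sent out through the host's eth0 (ens32 in this cluster).

On Linux, these context switches and user-space operations are fairly expensive. This is the main reason flannel's UDP mode performs poorly.

Flannel's VXLAN backend uses VXLAN network virtualization, which the Linux kernel supports natively, so the encapsulation and decapsulation above can be done entirely in kernel space. This noticeably improves network performance. It is also why flannel's VXLAN backend has become a mainstream container networking choice.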

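VXLAN mode

VXLAN is a tunneling protocol designed to get around the VLAN ID limit (4096) of IEEE 802.1Q. With VXLAN the identifier is extended to 24 bits (16777216).

VXLAN encapsulates layer-2 Ethernet frames in UDP packets (L2 over L4) and carries them over a layer-3 network. This builds a logical layer-2 network on top of a layer-3 network.

VXLAN is essentially a tunneling technique. It builds a logical tunnel over the IP network between the source and destination network devices, and user traffic is encapsulated in a specific way and forwarded through that tunnel.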
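The sketch below makes the 24-bit identifier concrete. It packs the 8-byte VXLAN header laid out in RFC 7348: a flags byte with the I bit 0x08, 24 reserved bits, the 24-bit VNI and 8 reserved bits. It also checks the ID-space arithmetic. VNI 1 is used because it is flannel's default. The outer UDP/IP headers and the inner Ethernet frame are left out.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Pack an 8-byte VXLAN header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # First 32-bit word: flags in the top byte, reserved bits zero.
    # Second 32-bit word: VNI shifted left past the trailing reserved byte.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Recover the VNI from a packed header."""
    _, word = struct.unpack("!II", header[:8])
    return word >> 8


print(2**12, 2**24)           # 4096 VLAN IDs vs 16777216 VXLAN network identifiers
print(vxlan_header(1).hex())  # 0800000000000100
```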

Deploying VXLAN mode

The official flannel deployment YAML uses vxlan mode by default.

#First clean up the environment; do the same whenever you switch modes later

[root@k8s-m1 k8s-total]# kubectl delete -f kube-flannel.yml

[root@k8s-m1 k8s-total]# rm -rf /var/lib/cni/;rm -rf /etc/cni/;ifconfig cni0 down;ifconfig flannel.1 down;ip link delete cni0;ip link delete flannel.1 #run this step on every node

#Modify the configuration

[root@k8s-m1 k8s-total]# cat kube-flannel.yml

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

[root@k8s-m1 k8s-total]# ip a

......

16: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether ce:ce:74:1d:a6:f2 brd ff:ff:ff:ff:ff:ff

inet 10.244.2.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

23: veth60f8f73d@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master cni0 state UP group default

link/ether 26:13:a3:00:b5:75 brd ff:ff:ff:ff:ff:ff link-netnsid 5

[root@k8s-m1 k8s-total]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.2.254 0.0.0.0 UG 0 0 0 ens32

10.244.0.0 10.244.0.0 255.255.255.0 UG 0 0 0 flannel.1

10.244.1.0 10.244.1.0 255.255.255.0 UG 0 0 0 flannel.1

10.244.2.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 ens32

172.16.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.2.0 0.0.0.0 255.255.255.0 U 0 0 0 ens32

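Routes to the other nodes' subnets (here 10.244.0.0/24 and 10.244.1.0/24) go out through flannel.1. Their gateway is the flannel.1 address of the corresponding node.

Packet capture analysis in VXLAN mode

#Note: after changing the network mode, the pods must be redeployed; the same applies to the host-gw test later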

[root@k8s-m1 k8s-total]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

liveness-exec-pod 1/1 Running 0 17h 10.244.2.10 k8s-m1 <none> <none>

my-nginx-5b8555d6b8-9jns5 1/1 Running 0 17h 10.244.0.15 k8s-m2 <none> <none>

my-nginx-5b8555d6b8-h5bws 1/1 Running 0 17h 10.244.1.6 k8s-m3 <none> <none>

#This matches interface 23 seen above

[root@k8s-m1 k8s-total]# kubectl exec -it liveness-exec-pod -- /bin/sh

/ # cat /sys/class/net/eth0/iflink

23

#Ping a pod on another node

/ # ping 10.244.0.15

PING 10.244.0.15 (10.244.0.15): 56 data bytes

64 bytes from 10.244.0.15: seq=0 ttl=62 time=0.477 ms

64 bytes from 10.244.0.15: seq=1 ttl=62 time=0.373 ms

64 bytes from 10.244.0.15: seq=2 ttl=62 time=0.351 ms

^C

--- 10.244.0.15 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.351/0.400/0.477 ms

#Capture packets on the corresponding interface

[root@k8s-m1 k8s-total]# tcpdump -i veth60f8f73d -p icmp -nn

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on veth60f8f73d, link-type EN10MB (Ethernet), capture size 262144 bytes

09:48:02.442543 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 8039, seq 477, length 64

09:48:02.442773 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 8039, seq 477, length 64

09:48:03.442674 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 8039, seq 478, length 64

09:48:03.442915 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 8039, seq 478, length 64

^C

4 packets captured

4 packets received by filter

0 packets dropped by kernel

[root@k8s-m1 k8s-total]# tcpdump -i cni0 -p icmp -nn

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on cni0, link-type EN10MB (Ethernet), capture size 262144 bytes

09:48:14.444007 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 8039, seq 489, length 64

09:48:14.444264 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 8039, seq 489, length 64

09:48:15.444132 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 8039, seq 490, length 64

09:48:15.444364 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 8039, seq 490, length 64

^C

4 packets captured

4 packets received by filter

0 packets dropped by kernel

[root@k8s-m1 k8s-total]# tcpdump -i flannel.1 -p icmp -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on flannel.1, link-type EN10MB (Ethernet), capture size 262144 bytes

09:48:33.447459 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 8039, seq 508, length 64

09:48:33.447695 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 8039, seq 508, length 64

09:48:34.447606 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 8039, seq 509, length 64

09:48:34.447857 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 8039, seq 509, length 64

^C

4 packets captured

4 packets received by filter

0 packets dropped by kernel

[root@k8s-m1 k8s-total]# tcpdump -i ens32 -p icmp -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on ens32, link-type EN10MB (Ethernet), capture size 262144 bytes

09:48:39.944790 IP 192.168.2.140 > 103.39.231.155: ICMP echo request, id 1, seq 22955, length 64

09:48:39.981623 IP 103.39.231.155 > 192.168.2.140: ICMP echo reply, id 1, seq 22955, length 64

09:48:40.944945 IP 192.168.2.140 > 103.39.231.155: ICMP echo request, id 1, seq 22956, length 64

09:48:40.981931 IP 103.39.231.155 > 192.168.2.140: ICMP echo reply, id 1, seq 22956, length 64

09:48:41.059271 IP 192.168.2.141 > 192.168.2.140: ICMP 192.168.2.141 protocol 112 unreachable, length 48

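Summary: in flannel's vxlan mode, a ping to a pod on another node takes this path: pod (container) -> cni0 -> flannel.1 -> local host IP -> peer host IP -> flannel.1 -> cni0 -> target pod (container). As in UDP mode, the ICMP filter on ens32 sees none of the pod traffic, because that traffic is encapsulated in UDP. Flannel's VXLAN backend uses port 8472 by default on Linux, so a filter such as `udp port 8472` would show it. Traffic on the peer host can be captured the same way.

The figure below shows how a packet travels with vxlan:

Suppose pod1 (container1) on node k8s-m2 needs to reach pod2 (container2) on node k8s-m3.

- Container routing: the container's routing table sends the packet out through the container's eth0.

- Host routing: the packet reaches the host's cni0 device, and the host routing table sends it to the flannel.1 device, which is the tunnel entrance.

- VXLAN encapsulation: the inner frame gets the remote flannel.1 MAC as its destination, taken from the ARP entry flanneld installed. A VXLAN header is then added.

- Wrapping in UDP and forwarding to the target host: the FDB entry for that MAC gives the IP of the host that owns it. The frame is wrapped in a UDP packet addressed to that host and sent out.

- Arrival at the target host: the packet reaches k8s-m3, and the kernel sees that it is a VXLAN packet. The kernel decapsulates it and hands it to the flannel.1 device, which extracts the original IP packet (source and destination container IPs). The packet is then forwarded to the container through the cni0 bridge.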
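For each remote node, the vxlan backend programs three kernel entries so that the encapsulation steps above can happen without user-space involvement:

- a route for the remote pod subnet via that node's flannel.1 address,
- a permanent ARP (neighbor) entry that maps that address to the remote flannel.1 MAC,
- an FDB entry that maps that MAC to the remote host IP.

flanneld does this over netlink. The sketch below only renders roughly equivalent iproute2 commands for inspection. The MAC address and the `remote_node_entries` helper are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class RemoteLease:
    """What flanneld knows about another node (values here are illustrative)."""
    subnet: str     # pod subnet owned by that node
    public_ip: str  # node IP on the underlay network
    vtep_mac: str   # MAC address of that node's flannel.1


def remote_node_entries(lease: RemoteLease, dev: str = "flannel.1") -> list[str]:
    """Render iproute2 commands equivalent to the route, ARP and FDB entries for one remote node."""
    net = ipaddress.ip_network(lease.subnet)
    vtep_ip = net.network_address  # each node's flannel.1 carries the .0 address of its subnet
    return [
        # L3: send the remote pod subnet to flannel.1, next hop = remote flannel.1 address
        f"ip route replace {net} via {vtep_ip} dev {dev} onlink",
        # ARP: remote flannel.1 address -> remote flannel.1 MAC (inner destination MAC)
        f"ip neigh replace {vtep_ip} lladdr {lease.vtep_mac} dev {dev} nud permanent",
        # FDB: remote flannel.1 MAC -> remote host IP (outer destination of the UDP packet)
        f"bridge fdb replace {lease.vtep_mac} dev {dev} dst {lease.public_ip} self permanent",
    ]


for cmd in remote_node_entries(RemoteLease("10.244.0.0/24", "192.168.2.141", "aa:bb:cc:00:00:01")):
    print(cmd)
```

On a real node, `ip neigh show dev flannel.1` and `bridge fdb show dev flannel.1` list the entries flanneld actually installed.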

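host-gw mode

From the official flannel documentation: Use host-gw to create IP routes to subnets via remote machine IPs. Requires direct layer2 connectivity between hosts running flannel.

host-gw sets the next hop of each flannel subnet to the IP address of the host that owns that subnet. In other words, the host acts as the "gateway" on the container communication path, which is exactly what host-gw means. All subnet and host information is stored in etcd. With the `--kube-subnet-mgr` setup used by kube-flannel.yml, it is stored on the Kubernetes Node objects, which are themselves backed by etcd. flanneld therefore only needs to watch for changes and update the routing table in real time. When the IP packet is put into a frame, the destination MAC is that of the next hop in the routing table, so the frame can cross the layer-2 network to the destination host. This requires all cluster hosts to share the same layer-2 network (the same subnet).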
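host-gw replaces the VXLAN route, ARP and FDB entries with a single route per remote node, whose gateway is the remote host's own IP. That gateway must be reachable directly on the link. The sketch below, using the hypothetical helper `host_gw_routes` and the addresses of this article's cluster, renders such routes. It rejects gateways outside the local network. Being in the same IP subnet is only a rough stand-in for the layer-2 adjacency that host-gw actually requires.

```python
import ipaddress


def host_gw_routes(local_net: str, remote_leases: dict, dev: str = "ens32") -> list[str]:
    """Render one 'ip route' per remote pod subnet, with the owning node's host IP as next hop.

    Same-IP-subnet is used here as an approximation of "directly reachable at layer 2".
    """
    network = ipaddress.ip_network(local_net)
    routes = []
    for subnet, host_ip in remote_leases.items():
        if ipaddress.ip_address(host_ip) not in network:
            raise ValueError(f"{host_ip} is not on {network}; host-gw needs a directly reachable next hop")
        routes.append(f"ip route replace {ipaddress.ip_network(subnet)} via {host_ip} dev {dev}")
    return routes


for r in host_gw_routes("192.168.2.0/24", {"10.244.0.0/24": "192.168.2.141", "10.244.1.0/24": "192.168.2.142"}):
    print(r)
```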

To switch from vxlan to host-gw, just change the Type.

Deploying host-gw

[root@k8s-m1 k8s-total]# cat kube-flannel.yml

......

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "host-gw"

}

}

#Then deploy

[root@k8s-m1 k8s-total]# kubectl apply -f kube-flannel.yml

[root@k8s-m1 k8s-total]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.2.254 0.0.0.0 UG 0 0 0 ens32

10.244.0.0 192.168.2.141 255.255.255.0 UG 0 0 0 ens32

10.244.1.0 192.168.2.142 255.255.255.0 UG 0 0 0 ens32

10.244.2.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 ens32

172.16.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.2.0 0.0.0.0 255.255.255.0 U 0 0 0 ens32

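Compare this with the routes installed in vxlan mode. In host-gw mode, the gateway for the other 10.244 subnets is now the IP address of the corresponding host's ens32. In vxlan mode, the next hop was the IP address of the flannel.1 interface on each host.

Packet capture analysis in host-gw mode

Create test pods that include the ping command and run on different nodes.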

[root@k8s-m1 k8s-total]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

liveness-exec-pod 1/1 Running 0 4h29m 10.244.2.10 k8s-m1 <none> <none>

my-nginx-5b8555d6b8-9jns5 1/1 Running 0 4h32m 10.244.0.15 k8s-m2 <none> <none>

my-nginx-5b8555d6b8-h5bws 1/1 Running 0 4h33m 10.244.1.6 k8s-m3 <none> <none>

[root@k8s-m1 k8s-total]# kubectl exec -it liveness-exec-pod -- /bin/sh

/ # cat /sys/class/net/eth0/iflink

23

/ # ^C

/ # ping 10.244.0.15

PING 10.244.0.15 (10.244.0.15): 56 data bytes

64 bytes from 10.244.0.15: seq=0 ttl=62 time=0.452 ms

64 bytes from 10.244.0.15: seq=1 ttl=62 time=0.336 ms

64 bytes from 10.244.0.15: seq=2 ttl=62 time=0.387 ms

^C

--- 10.244.0.15 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.336/0.391/0.452 ms

Capture packets on the relevant node, starting with k8s-m1 as above.

#Look up the name of the corresponding veth interface

[root@k8s-m1 k8s-total]# ip a

......

22: veth6250cd19@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 46:95:62:b1:34:cb brd ff:ff:ff:ff:ff:ff link-netnsid 7

23: veth60f8f73d@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master cni0 state UP group default

link/ether 26:13:a3:00:b5:75 brd ff:ff:ff:ff:ff:ff link-netnsid 5

[root@k8s-m1 k8s-total]# tcpdump -i veth60f8f73d -p icmp -nn

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on veth60f8f73d, link-type EN10MB (Ethernet), capture size 262144 bytes

20:49:40.746935 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 27224, seq 0, length 64

20:49:40.747213 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 27224, seq 0, length 64

20:49:41.747140 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 27224, seq 1, length 64

20:49:41.747424 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 27224, seq 1, length 64

20:49:42.747341 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 27224, seq 2, length 64

20:49:42.747545 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 27224, seq 2, length 64

20:49:43.747487 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 27224, seq 3, length 64

20:49:43.747703 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 27224, seq 3, length 64

^C

8 packets captured

8 packets received by filter

0 packets dropped by kernel

[root@k8s-m1 k8s-total]# tcpdump -i cni0 -p icmp -nn

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on cni0, link-type EN10MB (Ethernet), capture size 262144 bytes

20:49:55.749255 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 27224, seq 15, length 64

20:49:55.749460 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 27224, seq 15, length 64

20:49:56.749400 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 27224, seq 16, length 64

20:49:56.750816 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 27224, seq 16, length 64

^C

4 packets captured

4 packets received by filter

0 packets dropped by kernel

#vxlan mode was deployed earlier and its flannel.1 interface was never cleaned up, but capturing on flannel.1 no longer shows any ICMP traffic

[root@k8s-m1 k8s-total]# tcpdump -i flannel.1 -p icmp -nn

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on flannel.1, link-type EN10MB (Ethernet), capture size 262144 bytes

^C

0 packets captured

0 packets received by filter

0 packets dropped by kernel

[root@k8s-m1 k8s-total]# tcpdump -i ens32 -p icmp -nn

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on ens32, link-type EN10MB (Ethernet), capture size 262144 bytes

20:50:12.770374 IP 103.39.231.155 > 192.168.2.140: ICMP echo reply, id 1, seq 41794, length 64

20:50:13.733641 IP 192.168.2.140 > 103.39.231.155: ICMP echo request, id 1, seq 41795, length 64

20:50:13.751660 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 27224, seq 33, length 64

20:50:13.751826 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 27224, seq 33, length 64

20:50:13.770154 IP 103.39.231.155 > 192.168.2.140: ICMP echo reply, id 1, seq 41795, length 64

20:50:14.733778 IP 192.168.2.140 > 103.39.231.155: ICMP echo request, id 1, seq 41796, length 64

20:50:14.751769 IP 10.244.2.10 > 10.244.0.15: ICMP echo request, id 27224, seq 34, length 64

20:50:14.751933 IP 10.244.0.15 > 10.244.2.10: ICMP echo reply, id 27224, seq 34, length 64

20:50:14.770229 IP 103.39.231.155 > 192.168.2.140: ICMP echo reply, id 1, seq 41796, length 64

20:50:15.430190 IP 192.168.2.141 > 192.168.2.140: ICMP 192.168.2.141 protocol 112 unreachable, length 48

^C

10 packets captured

18 packets received by filter

0 packets dropped by kernel

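**Summary: in flannel's host-gw mode, traffic between pods on different nodes follows this path:**

**pod (container) -> cni0 -> local host IP -> peer host IP -> cni0 -> target pod (container)**, without passing through the flannel.1 interface, which normally should not exist in this mode. The ens32 capture shows the pod's ICMP packets unchanged, which confirms that no encapsulation takes place. Traffic on the peer host can be captured the same way.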

host-gw works by setting the "next hop" of each flannel subnet (for example 10.244.1.0/24) to the IP address of the host that owns that subnet. That host then acts as the gateway for container networking.

How to check which flannel mode a cluster uses

Via the ConfigMap

[root@k8s-m1 k8s-total]# kubectl get cm -n kube-system kube-flannel-cfg -o yaml|grep Type

"Type": "udp"

Check the Type value.

Directly from the network interfaces

[root@k8s-m1 k8s-total]# ip a

.....

25: flannel0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1472 qdisc pfifo_fast state UNKNOWN group default qlen 500

link/none

inet 10.244.1.0/32 scope global flannel0

valid_lft forever preferred_lft forever

3: docker_gwbridge: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:df:5a:5c:5e brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global docker_gwbridge

valid_lft forever preferred_lft forever

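Look at the interfaces. flannel0 means udp mode and flannel.1 means vxlan mode. host-gw creates no flannel-specific device, so if neither flannel0 nor flannel.1 is present, the mode is most likely host-gw. A device left over from an earlier mode, as seen above, can make this check misleading. docker_gwbridge in the output above is a bridge created by Docker, not by flannel, so it says nothing about the flannel mode.

Via the routing table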

[root@k8s-m1 k8s-total]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.2.254 0.0.0.0 UG 0 0 0 ens32

10.244.0.0 192.168.2.141 255.255.255.0 UG 0 0 0 ens32

10.244.1.0 192.168.2.142 255.255.255.0 UG 0 0 0 ens32

10.244.2.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 ens32

172.16.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.2.0 0.0.0.0 255.255.255.0 U 0 0 0 ens32

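Look at the routes to the pod network (10.244.x here; use the pod CIDR of your own environment). If they go out through flannel0, the cluster uses UDP mode. If they go through flannel.1 with the remote node's flannel.1 address as gateway, it uses vxlan mode. If the gateway is the remote host's IP on the physical NIC, as in the table above, it uses host-gw mode.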
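The route-based check can be automated. The sketch parses `route -n` text and classifies the backend from routes that point into the pod network but not at cni0. An interface named flannel0 means udp, flannel.1 means vxlan, and a real gateway on another interface means host-gw. The pod CIDR 10.244.0.0/16 and the `detect_backend` name are assumptions, so adjust them for your cluster.

```python
import ipaddress


def detect_backend(route_output: str, pod_cidr: str = "10.244.0.0/16") -> str:
    """Guess the flannel backend from `route -n` text, using the routes to other nodes' pod subnets."""
    pod_net = ipaddress.ip_network(pod_cidr)
    for line in route_output.splitlines():
        fields = line.split()
        # Data rows have 8 columns: Destination Gateway Genmask Flags Metric Ref Use Iface
        if len(fields) != 8:
            continue
        try:
            dest = ipaddress.ip_address(fields[0])
        except ValueError:
            continue  # the column header row
        gateway, iface = fields[1], fields[7]
        if dest not in pod_net or iface == "cni0":
            continue  # only routes towards other nodes' pods matter
        if iface == "flannel0":
            return "udp"
        if iface == "flannel.1":
            return "vxlan"
        if gateway != "0.0.0.0":
            return "host-gw"
    return "unknown"


SAMPLE = """Kernel IP routing table
Destination Gateway Genmask Flags Metric Ref Use Iface
0.0.0.0 192.168.2.254 0.0.0.0 UG 0 0 0 ens32
10.244.0.0 192.168.2.141 255.255.255.0 UG 0 0 0 ens32
10.244.2.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0
"""
print(detect_backend(SAMPLE))  # host-gw
```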

For more Kubernetes articles, see the blog homepage. Mistakes are hard to avoid when writing, so corrections are welcome.