A Look at Several Cluster Modes for WebSocket Channels

I. Background

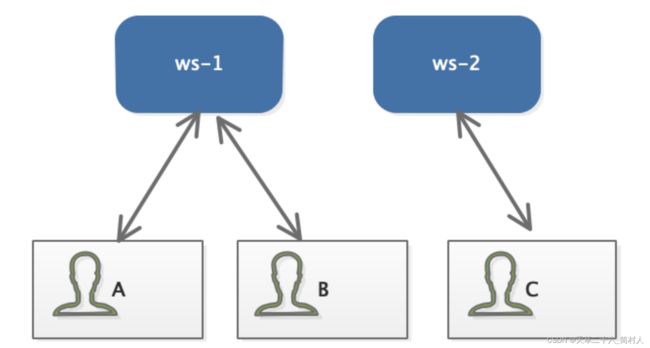

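In a WebSocket cluster, clients in the same room may be connected to different server nodes, as shown in the figure above:

- Clients A and B are connected to node 1, and client C is connected to node 2.

- The requirement is that C sends a message to A and B.

A Netty channel cannot be persisted to Redis; it lives only in the memory of the JVM that accepted the connection. To deliver a message across nodes as described above, two things are therefore needed:

- 1. Knowing which node holds the connections of the receivers A and B. This mapping can be maintained in Redis, or shared through a map in a distributed cache.

- 2. Getting the message body to the other nodes. Several schemes are listed later; each of them brings in a third-party middleware component, and they differ only in the mechanism used to relay the message.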
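The first point can be pictured as a lookup table from (room, user) to node. Below is a minimal sketch of that idea, assuming an in-memory ConcurrentHashMap as a stand-in for the Redis mapping or distributed map; the class name UserNodeRegistry, its methods, and the node ids are hypothetical and not part of the original project.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical registry: which ws node holds the channel of each user in a room.
// A ConcurrentHashMap stands in for Redis or a distributed map in this sketch.
class UserNodeRegistry {

    // key: roomId + ":" + userId, value: id of the node holding the channel
    private final Map<String, String> userToNode = new ConcurrentHashMap<>();

    private static String key(String roomId, String userId) {
        return roomId + ":" + userId;
    }

    // Called when a client connects to a node
    void register(String roomId, String userId, String nodeId) {
        userToNode.put(key(roomId, userId), nodeId);
    }

    // Called when a client disconnects
    void unregister(String roomId, String userId) {
        userToNode.remove(key(roomId, userId));
    }

    // Returns the node id, or null if the user is not connected anywhere
    String nodeOf(String roomId, String userId) {
        return userToNode.get(key(roomId, userId));
    }

    // Distinct nodes that must receive a message addressed to the given users
    Set<String> nodesFor(String roomId, Iterable<String> userIds) {
        Set<String> nodes = new TreeSet<>();
        for (String userId : userIds) {
            String node = nodeOf(roomId, userId);
            if (node != null) {
                nodes.add(node);
            }
        }
        return nodes;
    }
}
```

With A and B registered on node 1 and C on node 2, a message from C to A and B resolves to a single target node, so it only has to be relayed once.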

This article focuses on the detailed implementation of scheme 1.

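II. Architecture

We did not add a separate access layer or a WebSocket gateway; the ws cluster is exposed directly through an SLB. Clients connect from the public network and do not need to know which ws node they end up on.

Scheme 1: ws server nodes act as clients of one another

Scheme 2: Hazelcast broadcast mode

Scheme 3: Redis publish/subscribe

The same publish/subscribe idea also works with an MQ in place of Redis, so it is not covered separately here.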
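The idea shared by the broadcast and publish/subscribe schemes is that every node receives every message and delivers it only if the receiver's channel is local. The following is a rough sketch of that pattern, assuming an in-memory topic in place of a Redis channel, Hazelcast topic, or MQ; InMemoryTopic and BroadcastNode are hypothetical names used only for illustration.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.BiConsumer;

// Hypothetical in-memory stand-in for a Redis channel / Hazelcast topic / MQ topic
class InMemoryTopic {
    private final List<BiConsumer<String, String>> subscribers = new CopyOnWriteArrayList<>();

    void subscribe(BiConsumer<String, String> subscriber) {
        subscribers.add(subscriber);
    }

    // Every subscriber (every ws node) sees every published message
    void publish(String toUserId, String text) {
        for (BiConsumer<String, String> subscriber : subscribers) {
            subscriber.accept(toUserId, text);
        }
    }
}

// Hypothetical ws node that only delivers to users connected to itself
class BroadcastNode {
    // userId -> messages delivered to that user's local channel
    final Map<String, List<String>> localInbox = new ConcurrentHashMap<>();

    BroadcastNode(InMemoryTopic topic) {
        topic.subscribe(this::deliverIfLocal);
    }

    // Simulates a client connecting to this node
    void connect(String userId) {
        localInbox.put(userId, new CopyOnWriteArrayList<>());
    }

    private void deliverIfLocal(String toUserId, String text) {
        List<String> inbox = localInbox.get(toUserId);
        if (inbox != null) {
            inbox.add(text); // receiver is local: deliver
        }
        // otherwise the receiver lives on another node: ignore
    }
}
```

Compared with scheme 1, the nodes never talk to each other directly here; the trade-off is that each message reaches every node, including nodes that have no receiver for it.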

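III. Detailed Design

This section describes scheme 1 above. After a server node starts successfully, it registers itself in zk (ZooKeeper). The zk listener on every server node in the cluster is notified of the new node, and each of those nodes then acts as a ws client: it opens a long-lived connection to the new node and keeps it alive with heartbeats.

When is a long-lived connection dropped? When a node restarts, it goes offline in zk automatically, because it was registered as an ephemeral sequential node. The zk listeners then learn that the node was deleted, remove it from their local collection, and stop sending heartbeats to it.

Back to the opening question: for C to send a message to A and B, the ws node that C is connected to has to forward the message to the ws node that A and B are connected to. The medium for forwarding is the ws long-lived connection created above.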
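The lifecycle described above can be condensed into a small model: each node keeps a table of outbound links, adds one when a peer appears, drops it when the peer disappears, and forwards over whatever links it currently holds. This is only a sketch under simplifying assumptions: MeshNode is a hypothetical class, the zk notifications are replaced by direct method calls, and a "link" is just an object reference rather than a real ws connection.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical model of one ws node in the "nodes as mutual clients" scheme
class MeshNode {
    final String id;
    // Outbound links to the other nodes, keyed by peer id
    final Map<String, MeshNode> peers = new ConcurrentHashMap<>();
    // Messages that other nodes forwarded to this node
    final List<String> received = Collections.synchronizedList(new ArrayList<>());

    MeshNode(String id) {
        this.id = id;
    }

    // Stand-in for the zk "child added" notification
    void onNodeAdded(MeshNode node) {
        if (node.id.equals(id)) {
            return; // the node itself also shows up in the list; skip it
        }
        peers.put(node.id, node);
    }

    // Stand-in for the zk "child removed" notification
    void onNodeRemoved(String nodeId) {
        peers.remove(nodeId);
    }

    // Forward a message over every outbound link
    void forward(String message) {
        for (MeshNode peer : peers.values()) {
            peer.received.add(message);
        }
    }
}
```

In a cluster of N nodes this gives every node N-1 outbound links, which is the cost of not relaying messages through a shared broker.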

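IV. Source Code Examples

Integrating zk was covered in an earlier article; this one focuses only on message forwarding.

Besides the netty package, the WebSocket client package is also required (Maven coordinates):

groupId: org.java-websocket

artifactId: Java-WebSocket

version: 1.3.8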

1. After the ws node starts successfully, register it in ZooKeeper

    ChannelFuture channelFuture = bootstrap.bind(port).sync();
    channelFuture.addListener(new GenericFutureListener<Future<? super Void>>() {
        @Override
        public void operationComplete(Future<? super Void> future) throws Exception {
            if (future.isSuccess()) {
                log.info("Server started successfully");
                // Register this node in ZooKeeper
                zkService.registry(ServerNode.builder()
                        // IP address of the current node (hard-coded in this example)
                        .host("192.168.8.18")
                        .port(port)
                        // MAC address of the current node
                        .mac(HostUtils.getMac())
                        .build());
            } else {
                log.error("Server failed to start", future.cause());
            }
        }
    });
    log.info("socket port :{} ,heartBeatTime:{}", port, heartBeatTime);
    // Block until the server channel is closed
    ChannelFuture closeFuture = channelFuture.channel().closeFuture();
    closeFuture.sync();

2. The zk listener

    /**
     * A zk node was added.
     *
     * @param data the child node data reported by zk
     */
    private void processAdd(ChildData data) {
        ServerNode serverNode = JSONObject.parseObject(data.getData(), ServerNode.class);
        String localMac = HostUtils.getMac();
        if (localMac.equalsIgnoreCase(serverNode.getMac())) {
            log.info("Listener: this node itself joined the online node list");
            return;
        }
        // Use the current node as a ws client and connect to the newly added ws node
        ServerPeerSender serverPeerSender = new ServerPeerSender(serverNode, localMac);
        serverPeerSender.start();
        log.info("Listener: new node joined: {}", serverNode);
        // Keep the mapping in memory
        ServerPeerSenderHolder.addWorker(localMac + "_" + serverNode.getHost(), serverPeerSender);
    }

    /**
     * A zk node was removed.
     *
     * @param data the child node data reported by zk
     */
    private void processRemove(ChildData data) {
        ServerNode serverNode = JSONObject.parseObject(data.getData(), ServerNode.class);
        if (HostUtils.getMac().equalsIgnoreCase(serverNode.getMac())) {
            log.info("Listener: this node itself was removed from the online node list");
            return;
        }
        ServerPeerSenderHolder.removeWorker(HostUtils.getMac() + "_" + serverNode.getHost());
        log.info("Listener: node removed: {}", serverNode);
    }

3. The holder that manages the set of ws clients: ServerPeerSenderHolder.java

    import java.util.concurrent.ConcurrentHashMap;

    public class ServerPeerSenderHolder {

        // key: local MAC + "_" + peer host, value: the ws client connected to that peer
        private static final ConcurrentHashMap<String, ServerPeerSender> serverSenders =
                new ConcurrentHashMap<>();

        public static void addWorker(String localMacAndPeerHost, ServerPeerSender serverPeerSender) {
            serverSenders.put(localMacAndPeerHost, serverPeerSender);
        }

        public static ServerPeerSender getWorker(String localMacAndPeerHost) {
            return serverSenders.get(localMacAndPeerHost);
        }

        public static void removeWorker(String localMacAndPeerHost) {
            serverSenders.remove(localMacAndPeerHost);
        }

        public static ConcurrentHashMap<String, ServerPeerSender> getAll() {
            return serverSenders;
        }
    }

4. The ws client: ServerPeerSender.java

    import com.alibaba.fastjson.JSON;
    import com.xx.ws.common.beans.OutMessage;
    import com.xx.ws.common.constant.UserType;
    import com.xx.ws.common.mq.HandleMessageService;
    import com.xx.ws.common.utils.SpringUtil;
    import com.xx.ws.common.utils.WebsocketUtil;
    import lombok.extern.slf4j.Slf4j;
    import org.java_websocket.client.WebSocketClient;
    import org.java_websocket.handshake.ServerHandshake;

    import java.net.URI;
    import java.nio.ByteBuffer;
    import java.util.HashMap;
    import java.util.Map;

    import static com.xx.ws.common.constant.Constants.CLUSTER_BUSINESS_NAME;

    @Slf4j
    public class ServerPeerSender {

        private static final String CLUSTER_ROOM_ID = "peer2peer";

        private ServerNode serverNode;
        private String userId;
        private String roomId;
        private WebSocketClient client;

        public ServerPeerSender(ServerNode serverNode, String userId) {
            this.serverNode = serverNode;
            this.userId = userId;
            this.roomId = CLUSTER_ROOM_ID;
        }

        public WebSocketClient getClient() {
            return this.client;
        }

        public String getUserId() {
            return this.userId;
        }

        public WebSocketClient start() {
            try {
                Map<String, String> headers = new HashMap<>();
                // Fill in the actual header values here
                client = new WebSocketClient(new URI(serverNode.getWsAddr()), headers) {
                    @Override
                    public void onOpen(ServerHandshake handshake) {
                        log.info("userId:{}, socket connect {} success", userId, serverNode.getWsAddr());
                    }

                    @Override
                    public void onMessage(String message) {
                        handleMessage(message);
                    }

                    @Override
                    public void onClose(int code, String reason, boolean remote) {
                        log.info("userId:{}, socket close connect {}", userId, serverNode.getWsAddr());
                    }

                    @Override
                    public void onError(Exception ex) {
                        log.error("userId:{}, socket error", userId, ex);
                    }

                    @Override
                    public void onMessage(ByteBuffer bytes) {
                        // Binary frames are not used between peers
                    }
                };
                client.setConnectionLostTimeout(20);
                client.connect();
                // while (!WebSocket.READYSTATE.OPEN.equals(client.getReadyState())) {
                //     log.info("userId:{} connecting, please wait", userId);
                // }
                // Wait for the ws connection to be established
                try {
                    Thread.sleep(2 * 1000L);
                } catch (InterruptedException e) {
                    // Restore the interrupt flag and carry on
                    Thread.currentThread().interrupt();
                }
            } catch (Exception e) {
                log.error("client start error...", e);
            }
            return client;
        }

        private void handleMessage(String message) {
            // We only send ws messages asynchronously, so this code path is not used
            log.info("Received ws message: {}", message);
        }
    }
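The fixed two-second sleep in start() either waits too long or not long enough. One alternative is a latch that the onOpen callback releases, so the caller waits only as long as the handshake actually takes, up to a timeout. The sketch below uses only the JDK; ConnectionWaiter is a hypothetical helper that is not part of the original code, and wiring it into ServerPeerSender is left as an assumption. (Java-WebSocket's WebSocketClient also has a connectBlocking() method that serves a similar purpose.)

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Hypothetical helper: lets one thread wait until another thread reports "connection open"
class ConnectionWaiter {

    private final CountDownLatch opened = new CountDownLatch(1);

    // Intended to be called from the client's onOpen callback
    void markOpen() {
        opened.countDown();
    }

    // Returns true if the connection opened within the timeout, false on timeout or interrupt
    boolean awaitOpen(long timeout, TimeUnit unit) {
        try {
            return opened.await(timeout, unit);
        } catch (InterruptedException e) {
            // Restore the interrupt flag so callers can still observe it
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

In start(), the idea would be to call markOpen() inside onOpen and replace the sleep with awaitOpen(2, TimeUnit.SECONDS), logging a warning when it returns false.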

5. The heartbeat thread between ws client and server: HeartbeatThread.java

    import lombok.extern.slf4j.Slf4j;
    import org.java_websocket.client.WebSocketClient;
    import org.springframework.stereotype.Service;

    import javax.annotation.PostConstruct;
    import java.util.concurrent.ConcurrentHashMap;
    import java.util.concurrent.ScheduledThreadPoolExecutor;
    import java.util.concurrent.TimeUnit;

    @Slf4j
    @Service
    public class HeartbeatThread {

        private final ScheduledThreadPoolExecutor scheduled = new ScheduledThreadPoolExecutor(1);

        @PostConstruct
        public void init() {
            // Check every peer connection every 3 seconds
            scheduled.scheduleAtFixedRate(new CheckClientRunnable(), 0, 3, TimeUnit.SECONDS);
        }

        class CheckClientRunnable implements Runnable {
            @Override
            public void run() {
                try {
                    // Get all peer server nodes that are currently online
                    ConcurrentHashMap<String, ServerPeerSender> remotePeerMap = ServerPeerSenderHolder.getAll();
                    for (ServerPeerSender serverPeerSender : remotePeerMap.values()) {
                        WebSocketClient client = serverPeerSender.getClient();
                        if (null == client) {
                            serverPeerSender.start();
                            // Move on to the next peer instead of aborting the whole check
                            continue;
                        }
                        // Keep sending ping frames while the connection is open
                        if (client.isOpen()) {
                            client.sendPing();
                        } else {
                            // Connection is gone: close it and reconnect with a fresh client
                            client.close();
                            serverPeerSender.start();
                        }
                    }
                } catch (Exception e) {
                    log.error("checkClientAlive error", e);
                }
            }
        }
    }

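6. Forwarding and receiving messages

    // Let the current node deliver the message to its own local channels
    handleMessageService.dealMessage(message);
    // Notify the other ws nodes, i.e. forward the message to them. This is the key code.
    ConcurrentHashMap<String, ServerPeerSender> allRemote = ServerPeerSenderHolder.getAll();
    for (ServerPeerSender sender : allRemote.values()) {
        WebSocketClient client = sender.getClient();
        // Skip peers whose connection is not established yet; the heartbeat thread reconnects them
        if (client != null && client.isOpen()) {
            client.send(message);
        }
    }

- Handle the message in the channelRead0() method.

    protected void channelRead0(ChannelHandlerContext ctx, Object message) throws Exception {
        // (How appId and the text payload are extracted from the incoming frame is omitted in this excerpt)
        // Message sent by another node of the cluster acting as a ws client
        if (Constants.CLUSTER_BUSINESS_NAME.equals(appId)) {
            // Deliver locally only; do not forward again
            handleMessageService.dealMessage(message);
            return;
        }
    }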

7. Handling the message: HandleMessageService.java

    package com.xuehai.ws.common.mq;

    import com.alibaba.fastjson.JSONObject;
    import com.google.common.collect.Sets;
    import com.xuehai.ws.common.beans.OutMessage;
    import com.xuehai.ws.common.constant.CMD;
    import com.xuehai.ws.common.netty.ChannelHolder;
    import com.xuehai.ws.common.netty.service.DirectSender;
    import io.netty.channel.Channel;
    import io.netty.channel.ChannelId;
    import io.netty.channel.group.ChannelGroup;
    import lombok.extern.slf4j.Slf4j;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Service;

    import java.util.Set;

    @Slf4j
    @Service
    public class HandleMessageService {

        @Autowired
        private ChannelHolder channelHolder;

        public void dealMessage(String message) {
            // Parse the roomId and toId fields out of the message
            // (assumption: the message is a JSON object carrying fields with exactly these names)
            JSONObject json = JSONObject.parseObject(message);
            String roomId = json.getString("roomId");
            String toId = json.getString("toId");
            unicast(roomId, toId, message);
        }

        /**
         * Unicast through the receiver's channel.
         *
         * @param roomId  room the receiver belongs to
         * @param toId    id of the receiver
         * @param message message text to deliver
         */
        private void unicast(String roomId, String toId, String message) {
            Channel channel = channelHolder.getSingleChannel(roomId, toId);
            if (channel != null) {
                log.debug(" uniCast channel find roomId:{},toId:{}, message:{}", roomId, toId, message);
                // wrapperMessage(...) is defined elsewhere in the project and is not shown in this excerpt
                channel.writeAndFlush(wrapperMessage(message)).addListener(s -> {
                    if (!s.isSuccess()) {
                        log.warn("message send fail message:{}", message, s.cause());
                    }
                });
            } else {
                // The receiver is not connected to this node; nothing to do here
                log.debug(" uniCast channel not find roomId :{},toId:{}", roomId, toId);
            }
        }
    }