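Web Crawler, Part 4: The Producer-Consumer Model (Python)

Preface

I've been doing crawler work for a long time but never had time to write any of it down. Last year I finally settled down to write something, and even that got cut short by all sorts of chores. Looking through my old crawler notes: Part 3, using Postman; Part 4, making full use of proxy IPs; Part 5, the prototype chain; Part 6, simple encryption; Part 7, assorted obfuscation, and so on. Every one of them is still a draft... The plan was to write drafts, fill them in bit by bit and publish them gradually. In the end nothing got published. Sigh! When will I ever shake off this on-again, off-again habit of mine?

Enough talk, on to today's topic: the producer-consumer model. Ask about the producer-consumer model in the abstract and most people can list a few points, yet outside proper large-scale projects it is rarely used. Or maybe I'm just not very good: my rule is that if copy-pasted code runs, I never optimize it, and I don't check what pattern or design it follows either. As a result, whenever the producer-consumer model came up again a while later, I had forgotten what exactly it does; I would review it and forget it all over again.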

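Case 1

The business team had just sent me an archive of product models, 20 million of them. My job was to look up each product's parameters and add them to the database (I won't share the data; this is for learning purposes only). One look at that volume tells you it won't be easy. Looking up one product takes these steps: 1) search for the product to get a product list; 2) check whether the model appears in the list: if it does, open its detail page, otherwise record it as "no data"; 3) read the parameters from the detail page; 4) if there is an image, download it. So each product needs about 4 page requests, and 20 million products mean up to 80 million requests, which is already project-scale traffic. With a conventional framework, 200,000 lookups a day would already be a lot, and even at that rate the job would take over 3 months.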
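A quick back-of-the-envelope check of those numbers, using only the figures quoted in the paragraph above (20 million products, about 4 requests each, roughly 200,000 products per day for a conventional crawler). Nothing here is measured; the constants are simply the article's estimates:

```python
# All constants are the article's own estimates, not measurements.
PRODUCTS = 20_000_000        # product models to look up
REQUESTS_PER_PRODUCT = 4     # upper bound quoted in the text
PRODUCTS_PER_DAY = 200_000   # rough daily throughput of a conventional crawler

total_requests = PRODUCTS * REQUESTS_PER_PRODUCT
days_needed = PRODUCTS / PRODUCTS_PER_DAY

print(f"total requests: {total_requests:,}")  # total requests: 80,000,000
print(f"days needed: {days_needed:.0f}")      # days needed: 100 (a bit over 3 months)
```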

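0- Analysis

On top of that, the project had to be finished quickly, so it had to be distributed. Normally you would use scrapy-redis, but I was limited by the machines available, so I split the data into 3 parts by hand: two to process at home and one at the office. That is where today's content comes from. Let's improve the code step by step.

1- Program v1: single machine, single process

Split 20 million records into 3 parts and each part holds roughly 7 million. How would we handle it if it were just 70,000 records?

That is how the data was split. Below is the first version of the code. The site has no anti-crawling measures, but since IPs can get banned, proxy IPs are still needed. Don't learn from the proxy logic here; it is written purely for convenience, and if you copy it and get scolded by your boss, that's on you.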

```python
import os, time, requests, cchardet, traceback, redis, shutil, json
import random
import pandas as pd
from lxml import etree


# Read the product models that need to be crawled
def read_file(path):
    redis_pool = redis.ConnectionPool(host='*.*.*.*', port=6379, password='spider..', db=6)
    redis_conn = redis.Redis(connection_pool=redis_pool)
    key = 'key'  # the Redis key and the site's domain are masked on purpose, please bear with me
    for filepath, dirnames, filenames in os.walk(path):
        for filename in filenames:
            # Only Excel files hold product models; skip the progress .txt file
            if not filename.endswith(('.xlsx', '.xls')):
                continue
            filename_num = filename.split('.')[0]
            print(filename_num)
            # Read the product models from the Excel file
            file_path = os.path.join(filepath, filename)
            res_list = read_excel(file_path)
            # Models already queried, so a crashed run can resume where it stopped
            write_path = os.path.join(filepath, '已查询数据.txt')
            done_list = []
            if os.path.exists(write_path):
                with open(write_path, mode='r', encoding='utf-8') as f:
                    done_list = f.read().split(';')
            for pro_name in res_list:
                if str(pro_name) in done_list:
                    print('Already queried: ' + str(pro_name))
                    continue
                # Start crawling
                result_dict = crawl_info(str(pro_name))
                print('Received data: ')
                print(result_dict)
                print('-' * 64)
                redis_dict = {}
                if result_dict:
                    redis_dict[str(result_dict)] = 0
                else:
                    redis_dict[str(filename_num)] = 0
                redis_conn.zadd(key, redis_dict)
                # Record the processed model (skip empty names)
                if str(pro_name) != '':
                    with open(write_path, mode='a', encoding='utf-8') as f:
                        f.write(str(pro_name))
                        f.write(';')
            # The whole file has been processed: copy it to the "done" directory
            mycopyfile(file_path, r'D:\work_done\local_data')


# Main crawling logic
def crawl_info(pro_name):
    result_dict = {}
    # Build the search URL (strip first so trailing spaces don't end up in the URL)
    pro_name_str = pro_name.strip()
    pro_name_param = pro_name_str.replace(' ', '%')
    url = f"https://www.key.com/keywords/{pro_name_param}"
    print('URL to crawl: ' + url)
    max_tries = 8  # attempts before giving up on this product
    for t in range(max_tries):
        # ==================== list page ====================
        status, html, redirected_url = downloader(url, debug=True)
        if status != 200:
            print('{} list page request failed =========='.format(url))
            if t == max_tries - 1:
                return {}
            continue
        # Parse the list page to find the detail URL
        html_page = etree.HTML(html)
        if html_page is None:
            return {}
        hrefs = html_page.xpath("//div[@class='bot']//a[@title='{}']/@href".format(pro_name_str))
        if not hrefs:
            print('Detail page link not found')
            return result_dict
        detail_url = 'https://www.keys.com' + hrefs[0]
        # ==================== detail page ====================
        detial_status, detial_html, detial_redirected_url = downloader(detail_url)
        if detial_status != 200:
            print('{} detail page request failed =========='.format(detail_url))
            if t == max_tries - 1:
                return {}
            continue
        # Parse the detail page
        page = etree.HTML(detial_html)
        if page is None or not page.xpath("//h2/text()"):
            print('No data found!!!')
            return {}
        try:
            crumbs = page.xpath("//div[@class='crumbs w']/a/text()")
            cot = "//div[@class='cot']/div[@attr='{}']"
            parsed = {
                'pro_id': page.xpath("//h2/text()")[0],
                'pro_img': page.xpath("//div[@class='imgBox']/img/@src")[0],
                'pro_title_1': crumbs[0],
                'pro_title_2': crumbs[1],
                'pro_title_3': crumbs[2],
                'pro_title_4': crumbs[3],
                'pro_Mfr_No': page.xpath(cot.format('Mfr No:') + "/text()")[0],
                'pro_USHTS': page.xpath(cot.format('USHTS:') + "/text()")[0],
                'pro_Manufacturer': page.xpath(cot.format('Manufacturer:') + "/a/@href")[0],
                'pro_Package': page.xpath(cot.format('Package:') + "/text()")[0],
                'pro_Datasheet': page.xpath(cot.format('Datasheet:') + "/a/@href")[0],
                'pro_Description': page.xpath(cot.format('Description:') + "/text()")[0],
            }
            # Remove newlines and surrounding whitespace from every field
            parsed = {k: str(v).replace('\n', '').strip() for k, v in parsed.items()}
            parsed['pro_Manufacturer'] = 'https://www.keys.com' + parsed['pro_Manufacturer']
            result_dict.update(parsed)
            break
        except IndexError:
            # A field is missing on the page: retry the whole lookup
            print('Malformed data!!!')
    print('Data parsed: ' + str(result_dict))
    print('-=' * 18)
    return result_dict


# Downloader
def downloader(url, timeout=10, headers=None, debug=False, binary=False):
    _headers = {'User-Agent': ('Mozilla/5.0 (compatible; MSIE 9.0; '
                               'Windows NT 6.1; Win64; x64; Trident/5.0)')}
    redirected_url = url
    if headers:
        _headers = headers
    try:
        # Pick a proxy from the local pool
        proxies = get_local_proxy()
        r = requests.get(url, headers=_headers, timeout=timeout, proxies=proxies)
        if binary:
            html = r.content
        else:
            # cchardet returns None when it cannot detect an encoding: fall back to UTF-8
            encoding = cchardet.detect(r.content)['encoding'] or 'utf-8'
            html = r.content.decode(encoding, errors='replace')
        status = r.status_code
        redirected_url = r.url
    except Exception:
        if debug:
            traceback.print_exc()
        print('failed download: {}'.format(url))
        html = b'' if binary else ''
        status = 0
    return status, html, redirected_url


# Read the product models from an Excel file
def read_excel(file_path):
    execl_df = pd.read_excel(file_path)
    return list(execl_df['Product'].values)


# Fetch a paid proxy
def get_proxy_from_url():
    # Provider: 太阳代理 (Sun Proxy); the query parameters are deliberately not shown
    proxy_url = 'http://http.tiqu.alibabaapi.com/getip?...'
    print("Fetched a paid proxy...")
    res_json = requests.get(proxy_url).json()
    print(res_json)
    proxies = {'https': ''}
    if res_json['code'] == 0:
        ip = res_json['data'][0]['ip']
        port = res_json['data'][0]['port']
        proxies = {"https": f"{ip}:{port}"}
    # get_local_proxy() expects a JSON list of proxy dicts
    with open(r'./代理池.txt', mode='w', encoding='utf-8') as f:
        f.write(json.dumps([proxies]))
    return proxies


# Pick a random proxy from the local pool file
def get_local_proxy():
    # The proxy handling is only for convenience, don't learn from it;
    # a later post will cover using proxy IPs efficiently
    with open('./代理池.txt', mode='r', encoding='utf-8') as f:
        res_str = f.read()
    # The file may have been written with str(): normalize quotes before parsing as JSON
    proxies_list = json.loads(res_str.replace("'", '"'))
    proxies = random.choice(proxies_list)
    return {"https": proxies['https']}


# Overwrite the proxy pool file
def str_2_txt(proxy_ip):
    with open(r'./代理池.txt', mode='w', encoding='utf-8') as f:
        f.write(str(proxy_ip))
    return 'ok'


# After a file in the folder is processed, copy it to the target directory
def mycopyfile(srcfile, dstpath):
    if not os.path.isfile(srcfile):
        print("%s not exist!" % srcfile)
        return
    fpath, fname = os.path.split(srcfile)  # split directory and file name
    if not os.path.exists(dstpath):
        print('Target directory does not exist, creating it')
        os.makedirs(dstpath)
    dst = os.path.join(dstpath, fname)
    shutil.copy(srcfile, dst)
    print("copy %s -> %s" % (srcfile, dst))


if __name__ == '__main__':
    path = r"D:\data_space\path_1"
    read_file(path)
```

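I hammered this out in 2 hours and thought the project was done. Then I ran it: 10 seconds per record. Even with no errors, that is at most 8,640 records a day (86,400 seconds / 10), call it 10,000. Inner monologue: "Son, I've found you a job for life; this project will keep you busy until you retire."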
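Hand-counted retry loops, such as `for t in range(9)` combined with an `if t > 6` check, make it easy for the real number of attempts to drift away from what the comment says. A small helper that makes the attempt budget explicit is sketched below. `fetch_with_retry` is a hypothetical name, and `fetch` stands for any function shaped like the article's `downloader`, returning `(status, html, final_url)`:

```python
import time
from typing import Callable, Tuple

# (status, html, final_url): the same shape the article's downloader() returns
Response = Tuple[int, str, str]


def fetch_with_retry(fetch: Callable[[str], Response], url: str,
                     max_tries: int = 5, delay: float = 0.0) -> Response:
    """Call fetch(url) at most max_tries times; return the first 200 or the last failure."""
    if max_tries < 1:
        raise ValueError("max_tries must be at least 1")
    last: Response = (0, "", url)
    for attempt in range(1, max_tries + 1):
        last = fetch(url)
        if last[0] == 200:
            return last
        if delay and attempt < max_tries:
            time.sleep(delay)  # optional pause between attempts, skipped after the last one
    return last
```

With this shape, "retry 5 times" is a single argument, and the give-up path is reached exactly when the budget runs out.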

2- Program v2: multiple machines, multiple processes

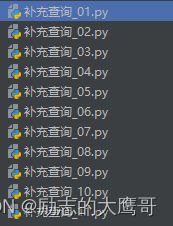

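10,000 records a day is still far too slow. If the program is slow, it must be the computer's fault, certainly not my code. So I brought in two more machines and opened more PyCharm windows: the 20 million records were split by hand across 3 machines, about 7 million per machine, with 11 PyCharm instances on each, so each process only has to handle about 640,000 records (7 million / 11). Great! The project will be done in roughly 70 days. Another 3 months of slacking off!!!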

One machine, split into 11 folders

Launch 11 programs
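The manual split (3 machines, then 11 folders per machine) is just chunking a list into nearly equal parts. A minimal sketch of that step, assuming the product models are already loaded into a Python list; `split_evenly` is a hypothetical helper, not part of the author's code:

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def split_evenly(items: Sequence[T], parts: int) -> List[List[T]]:
    """Split items into `parts` chunks whose sizes differ by at most one, preserving order."""
    if parts <= 0:
        raise ValueError("parts must be positive")
    base, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks each take one additional item
        size = base + (1 if i < extra else 0)
        chunks.append(list(items[start:start + size]))
        start += size
    return chunks


if __name__ == "__main__":
    models = [f"MODEL-{i}" for i in range(100)]
    per_machine = split_evenly(models, 3)            # 3 machines
    per_process = split_evenly(per_machine[0], 11)   # 11 programs on the first machine
    print([len(c) for c in per_machine])   # [34, 33, 33]
    print([len(c) for c in per_process])   # [4, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3]
```

Each chunk could then be written to its own folder or file, which is what the v2 approach does by hand.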

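Summary

If you only have a few hundred thousand to a million records, this simple approach may be worth a try; it can finish the lookups within a week. With 20 million records, though, splitting the data again and again is error-prone, and the manpower it eats is a big drawback. Better a short pain than a long one: this time copy-paste won't cut it, and we have to improve the program. So let's get into today's real topic.

3- Program v3: producer-consumer

1- First, analyze the requirements

20 million records, and results needed quickly. We already have the product models, which means we already have the starting URLs: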

```python
url = f"https://www.key.com/keywords/{pro_name_param}"
```

That makes it even simpler: there are 20 million URLs like this one.
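Building those URLs is a pure function of the product model. A small sketch that mirrors the article's own rule (strip the model, then replace spaces with `%`); `build_search_url`, `iter_search_urls` and the base URL are placeholders, since the real domain is masked. If the site expected standard percent-encoding instead, `urllib.parse.quote` would be the usual tool; which form the site accepts is not stated in the article.

```python
from typing import Iterable, Iterator

# Placeholder: the article masks the real domain
BASE_URL = "https://www.key.com/keywords/"


def build_search_url(model: str, base: str = BASE_URL) -> str:
    """Same rule as the article: strip the model, then replace spaces with '%'."""
    return base + model.strip().replace(" ", "%")


def iter_search_urls(models: Iterable[str]) -> Iterator[str]:
    """Lazily yield one search URL per non-blank model, so 20 million URLs never sit in memory at once."""
    for model in models:
        if model and model.strip():
            yield build_search_url(model)
```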

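Producer:

Read the product models from the file, build the URLs, and push them onto the queue.

Consumer:

Take URLs from the queue, then crawl, clean and store the data.

Queue:

Keep the queue as full as possible.

We don't need to worry about blocking here: the producer obviously produces far faster than the consumers consume, so we just make the queue as large as possible (an unbounded `queue.Queue()`, whose `put()` never blocks) and wait for all the data to be crawled.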
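The roles above fit in a small, self-contained sketch using only the standard library. `work` stands in for the real crawl step (the article's `crawl_info`); the function names and the stop-marker shutdown (one `None` per consumer) are illustrative choices, not the author's code. Because the queue is unbounded, the producer never blocks, matching the reasoning above:

```python
import queue
import threading
from typing import Callable, Iterable, List, Optional


def run_pipeline(items: Iterable[str], work: Callable[[str], str],
                 num_consumers: int = 3) -> List[str]:
    """Feed items through one producer thread and several consumer threads."""
    q: "queue.Queue[Optional[str]]" = queue.Queue()  # maxsize=0: unbounded, put() never blocks
    results: List[str] = []
    results_lock = threading.Lock()

    def producer() -> None:
        for item in items:
            q.put(item)
        for _ in range(num_consumers):   # one stop marker per consumer
            q.put(None)

    def consumer() -> None:
        while True:
            item = q.get()
            if item is None:             # stop marker: no more work
                break
            result = work(item)
            with results_lock:
                results.append(result)

    threads = [threading.Thread(target=producer)]
    threads += [threading.Thread(target=consumer) for _ in range(num_consumers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    # str.lower stands in for the real crawl step
    print(sorted(run_pipeline(["ZXMP6A17G", "ZXRE1004FF", "ABC123"], str.lower)))
```

Results arrive in whatever order the consumers finish, which is why the usage example sorts them.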

```python
import threading, os, queue, shutil, requests, cchardet, traceback, random, json, pymysql, redis
import time
import pandas as pd
from lxml import etree

NUM_CONSUMERS = 3  # number of consumer threads started in __main__


def produce():
    '''
    1- Read the product models from MySQL.
    2- Read the data already stored in Redis.
    3- If a model is already in Redis, use the stored data directly:
       3.1- write it to the second MySQL table and move on to the next one.
    4- Otherwise build the URL and push it onto the queue.
    (This version implements steps 1 and 4 only.)
    '''
    # Read the product models from MySQL
    mysql_pro_info = read_mysql()
    print('Product models in the database: ')
    # e.g. (('ZXMP6A17G ',), ('ZXRE1004FF ',))
    print(mysql_pro_info)
    for item in mysql_pro_info:
        q.put(item[0])
    # One stop marker per consumer so that every consume() loop can exit
    for _ in range(NUM_CONSUMERS):
        q.put(None)


def read_mysql():
    mydb = pymysql.connect(
        host="*.*.*.*",        # database host
        port=3306,
        user="root",           # user name
        password="*..",        # MySQL password
        database='chipsmall',  # database name
        charset='utf8'         # character set
    )
    mycursor = mydb.cursor()
    sql = "select p_id from filter_pro"
    product_info = ()
    try:
        mycursor.execute(sql)
        print('MySQL query succeeded...')
        product_info = mycursor.fetchall()
    except Exception as e:
        print('Query failed...')
        print(e)
    finally:
        mydb.close()
    return product_info


def read_redis():
    redis_pool = redis.ConnectionPool(host='*.*.*.*', port=6379, password='*..', db=6)
    redis_conn = redis.Redis(connection_pool=redis_pool)
    filter_end_index = redis_conn.zcard('key')
    res_list = redis_conn.zrange('key', 0, filter_end_index)
    return [res.decode('utf-8') for res in res_list]


def consume():
    '''
    1- Connect to Redis
    2- Crawl the product
    3- Write the result to Redis
    '''
    # Connect to Redis
    redis_pool = redis.ConnectionPool(host='*.*.*.*', port=6379, password='*..', db=6)
    redis_conn = redis.Redis(connection_pool=redis_pool)
    key = 'filter_product'
    write_path = './已查询数据.txt'
    while True:
        item = q.get()
        if item is None:  # stop marker from produce(): no more work
            break
        if not str(item).strip():
            continue      # skip empty models
        print(' consume %s' % item)
        result_dict = crawl_info(str(item))
        print('Received data: ')
        print(result_dict)
        print('-' * 64)
        # Write the crawled data to Redis
        redis_conn.zadd(key, {str(result_dict): 0})
        # Record the processed model
        with open(write_path, mode='a', encoding='utf-8') as f:
            f.write(str(item))
            f.write('&;&')


# Main crawling logic
def crawl_info(pro_name):
    result_dict = {}
    # Build the search URL (strip first so trailing spaces don't end up in the URL)
    pro_name_str = pro_name.strip()
    pro_name_param = pro_name_str.replace(' ', '%')
    url = f"https://www.keys.com/keywords/{pro_name_param}"
    print('URL to crawl: ' + url)
    max_tries = 8  # attempts before giving up on this product
    for t in range(max_tries):
        # ==================== list page ====================
        status, html, redirected_url = downloader(url, debug=True)
        if status != 200:
            print('{} list page request failed =========='.format(url))
            if t == max_tries - 1:
                return {}
            continue
        # Parse the list page to find the detail URL
        html_page = etree.HTML(html)
        if html_page is None:
            return {}
        hrefs = html_page.xpath("//div[@class='bot']//a[@title='{}']/@href".format(pro_name_str))
        if not hrefs:
            print('Detail page link not found')
            return result_dict
        detail_url = 'https://www.keys.com' + hrefs[0]
        # ==================== detail page ====================
        detial_status, detial_html, detial_redirected_url = downloader(detail_url)
        if detial_status != 200:
            print('{} detail page request failed =========='.format(detail_url))
            if t == max_tries - 1:
                return {}
            continue
        # Parse the detail page
        page = etree.HTML(detial_html)
        if page is None or not page.xpath("//h2/text()"):
            print('No data found!!!')
            return {}
        try:
            crumbs = page.xpath("//div[@class='crumbs w']/a/text()")
            cot = "//div[@class='cot']/div[@attr='{}']"
            parsed = {
                'pro_id': page.xpath("//h2/text()")[0],
                'pro_img': page.xpath("//div[@class='imgBox']/img/@src")[0],
                'pro_title_1': crumbs[0],
                'pro_title_2': crumbs[1],
                'pro_title_3': crumbs[2],
                'pro_title_4': crumbs[3],
                'pro_Mfr_No': page.xpath(cot.format('Mfr No:') + "/text()")[0],
                'pro_USHTS': page.xpath(cot.format('USHTS:') + "/text()")[0],
                'pro_Manufacturer': page.xpath(cot.format('Manufacturer:') + "/a/@href")[0],
                'pro_Package': page.xpath(cot.format('Package:') + "/text()")[0],
                'pro_Datasheet': page.xpath(cot.format('Datasheet:') + "/a/@href")[0],
                'pro_Description': page.xpath(cot.format('Description:') + "/text()")[0],
            }
            # Remove newlines and surrounding whitespace from every field
            parsed = {k: str(v).replace('\n', '').strip() for k, v in parsed.items()}
            parsed['pro_Manufacturer'] = 'https://www.keys.com' + parsed['pro_Manufacturer']
            result_dict.update(parsed)
            break
        except IndexError:
            # A field is missing on the page: retry the whole lookup
            print('Malformed data!!!')
    print('Data parsed: ' + str(result_dict))
    print('-=' * 18)
    return result_dict


# Downloader
def downloader(url, timeout=10, headers=None, debug=False, binary=False):
    _headers = {'User-Agent': ('Mozilla/5.0 (compatible; MSIE 9.0; '
                               'Windows NT 6.1; Win64; x64; Trident/5.0)')}
    redirected_url = url
    if headers:
        _headers = headers
    try:
        # Pick a proxy from the local pool
        proxies = get_local_proxy()
        r = requests.get(url, headers=_headers, timeout=timeout, proxies=proxies)
        if binary:
            html = r.content
        else:
            # cchardet returns None when it cannot detect an encoding: fall back to UTF-8
            encoding = cchardet.detect(r.content)['encoding'] or 'utf-8'
            html = r.content.decode(encoding, errors='replace')
        status = r.status_code
        redirected_url = r.url
    except Exception:
        if debug:
            traceback.print_exc()
        print('failed download: {}'.format(url))
        html = b'' if binary else ''
        status = 0
    return status, html, redirected_url


# Fetch a paid proxy
def get_proxy_from_url():
    # The query parameters are deliberately not shown
    proxy_url = 'http://http.tiqu.alibabaapi.com/getip?...'
    print("Fetched a paid proxy...")
    res_json = requests.get(proxy_url).json()
    print(res_json)
    proxies = {'https': ''}
    if res_json['code'] == 0:
        ip = res_json['data'][0]['ip']
        port = res_json['data'][0]['port']
        proxies = {"https": f"{ip}:{port}"}
    # get_local_proxy() expects a JSON list of proxy dicts
    with open(r'../代理池.txt', mode='w', encoding='utf-8') as f:
        f.write(json.dumps([proxies]))
    return proxies


# Pick a random proxy from the local pool file
def get_local_proxy():
    with open('../代理池.txt', mode='r', encoding='utf-8') as f:
        res_str = f.read()
    # The file may have been written with str(): normalize quotes before parsing as JSON
    proxies_list = json.loads(res_str.replace("'", '"'))
    proxies = random.choice(proxies_list)
    return {"https": proxies['https']}


# Overwrite the proxy pool file
def str_2_txt(proxy_ip):
    with open(r'../代理池.txt', mode='w', encoding='utf-8') as f:
        f.write(str(proxy_ip))
    return 'ok'


# After a file in the folder is processed, copy it to the target directory
def mycopyfile(srcfile, dstpath):
    if not os.path.isfile(srcfile):
        print("%s not exist!" % srcfile)
        return
    fpath, fname = os.path.split(srcfile)  # split directory and file name
    if not os.path.exists(dstpath):
        print('Target directory does not exist, creating it')
        os.makedirs(dstpath)
    dst = os.path.join(dstpath, fname)
    shutil.copy(srcfile, dst)
    print("copy %s -> %s" % (srcfile, dst))


if __name__ == '__main__':
    q = queue.Queue()  # unbounded: the producer never blocks
    producer = threading.Thread(target=produce)
    consumers = [threading.Thread(target=consume) for _ in range(NUM_CONSUMERS)]
    producer.start()
    for consumer in consumers:
        consumer.start()
    producer.join()
    for consumer in consumers:
        consumer.join()
```

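That is the idea of the producer-consumer model: the producer reads the data from the database into the queue, and the consumers keep taking items and crawling them until the queue is exhausted.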
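In the v3 code above, each consumer leaves its loop only when it dequeues a stop marker (a falsy item), so the program ends only if the producer enqueues one marker per consumer. An alternative that needs no markers is `Queue.task_done()` plus `Queue.join()`; with millions of rows, giving the queue a `maxsize` also caps memory, because `put()` then blocks until the consumers catch up. A minimal sketch under those assumptions; `run_with_join` and `work` are illustrative names, not the author's code:

```python
import queue
import threading
from typing import Callable, Iterable, List


def run_with_join(items: Iterable[str], work: Callable[[str], str],
                  num_consumers: int = 3, maxsize: int = 1000) -> List[str]:
    """Bounded queue + task_done()/join(): no stop markers needed."""
    q: "queue.Queue[str]" = queue.Queue(maxsize=maxsize)  # put() blocks while the queue is full
    results: List[str] = []
    lock = threading.Lock()

    def consumer() -> None:
        while True:
            item = q.get()
            try:
                outcome = work(item)
            except Exception as exc:   # keep the consumer alive on a bad item
                outcome = f"error: {exc}"
            with lock:
                results.append(outcome)
            q.task_done()              # exactly one task_done() per get()

    for _ in range(num_consumers):
        # daemon=True: idle consumers blocked in get() won't keep the process alive
        threading.Thread(target=consumer, daemon=True).start()

    for item in items:                 # the calling thread acts as the producer
        q.put(item)
    q.join()                           # returns once every item has been marked done
    return results
```

The trade-off: daemon consumers are simply abandoned when the program exits, which is fine for stateless work but not for threads that must flush or close resources.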

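Case 2

The database holds 360,000 valid records, and for each model we need to download its image and PDF from another website.

0- Analysis

After Case 1 this should be clear: the starting URLs already exist. 1- Producer: just read the data from Redis, extract the image URL and the PDF URL, and push them onto the queue. 2- Consumer: take the data from the queue and download the image and the PDF. Once the queue is empty, the process ends.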
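The records in Redis were written with `str(result_dict)`, i.e. Python literal syntax rather than JSON, which is why the code in this case swaps quote characters before calling `json.loads`. That trick breaks as soon as a value itself contains a quote. A more robust sketch, assuming each Redis member is the `str()` of a flat dict, uses `ast.literal_eval`; `extract_download_urls` is a hypothetical helper that pulls out the two fields the producer needs (`pro_img` and `pro_Datasheet`, the keys used in Case 1):

```python
import ast
from typing import Optional, Tuple


def extract_download_urls(record: str) -> Optional[Tuple[str, str]]:
    """Return (image_url, pdf_url) from a str(dict) record, or None if there is nothing to download."""
    try:
        data = ast.literal_eval(record)   # parses Python literals only, never executes code
    except (ValueError, SyntaxError, TypeError):
        return None                       # e.g. a bare model name stored for "no data"
    if not isinstance(data, dict):
        return None
    img = str(data.get("pro_img", ""))
    pdf = str(data.get("pro_Datasheet", ""))
    # Keep only the file types the consumers actually download
    img = img if ".jpg" in img else ""
    pdf = pdf if ".pdf" in pdf else ""
    if not img and not pdf:
        return None
    return img, pdf
```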

Straight to the code:

```python
import time
import os, redis, json, pymysql, requests, random, queue, threading

'''
1- Read the data from Redis
2- Check whether there is an image
3- Fill in the PDF
'''


def produce(result_list):
    # result_list was read from Redis before this thread started
    print('Finished reading data from Redis...')
    print(result_list)
    for item in result_list:
        print(item)
        # Records were stored with str(dict): protect existing double quotes,
        # then turn the single quotes into JSON double quotes
        res = item.replace('"', "`")
        res = res.replace("'", '"')
        if '{' not in res:
            continue  # not a dict record (e.g. a product without data)
        q.put(res)
        print('{} pushed to the queue'.format(res))
    print('Producer finished')


def redis_opt(key, filter_start_index=0, filter_end_index=0):
    redis_pool = redis.ConnectionPool(host='*.*.*.*', port=6379, password='*..', db=6)
    redis_conn = redis.Redis(connection_pool=redis_pool)
    filter_end_index = redis_conn.zcard(key)
    print(filter_end_index)
    res_list = redis_conn.zrange(key, 0, filter_end_index)
    # res_list = redis_conn.zrange(key, 0, 50)  # small sample for testing
    return [res.decode('utf-8') for res in res_list]


def check_sql(data_list):
    print(data_list)
    mydb = pymysql.connect(
        host="*.*.*.*",        # database host
        port=3306,
        user="root",           # user name
        password="*..",        # MySQL password
        database='chipsmall',  # database name
        charset='utf8'         # character set
    )
    mycursor = mydb.cursor()
    sql = ("INSERT IGNORE INTO into_web (p_id, pro_img, pro_title_2, pro_title_3, pro_Mfr_No, pro_Manufacturer, "
           "pro_Package, pro_Datasheet, pro_Description, img_status) VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s)")
    try:
        mycursor.executemany(sql, data_list)
        mydb.commit()
        print('MySQL insert succeeded...')
    except Exception as e:
        print('Insert failed...')
        print(e)
        mydb.rollback()
    finally:
        mydb.close()


def request_download(ind, IMAGE_URL, headers=None):
    r = requests.get(IMAGE_URL, headers=headers, timeout=30)
    # One file per product, so concurrent consumers don't overwrite each other's images
    os.makedirs('./image', exist_ok=True)
    safe_name = str(ind).replace('/', '_').replace('\\', '_')
    with open('./image/img_{}.jpg'.format(safe_name), 'wb') as f:
        f.write(r.content)
    return r


def into_list():
    user_agent_list = [
        "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36",
        "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36",
        "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.62 Safari/537.36",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)",
        "Mozilla/5.0 (Macintosh; U; PPC Mac OS X 10.5; en-US; rv:1.9.2.15) Gecko/20110303 Firefox/3.6.15",
    ]
    headers = {
        'Connection': 'close',                        # short-lived connection
        'User-Agent': random.choice(user_agent_list), # random UA per consumer
    }
    json_list = []
    index = 0
    while True:
        try:
            # get_nowait() avoids the race between q.empty() and q.get()
            res = q.get_nowait()
        except queue.Empty:
            break  # queue is empty: this consumer is done
        index += 1
        print(index)
        print('Got a record')
        if '{' not in res:
            continue
        try:
            json_res = json.loads(res.replace("'", '"'))
            if not str(json_res["pro_img"]):
                continue
            img_status = 'False'
            img_code = b''
            if '.jpg' in str(json_res["pro_img"]):
                # Download the image, switching to the 400px version
                print('Downloading the image for {}'.format(res))
                pic_url = str(json_res["pro_img"]).replace('200dimg', '400dimg')
                img_code = request_download(json_res["pro_id"], pic_url, headers).content
                img_status = 'True'
            pdf_code = b''
            if '.pdf' in json_res["pro_Datasheet"]:
                pdf_code = requests.get(json_res["pro_Datasheet"], headers=headers, timeout=30).content
                print('PDF downloaded')
            json_list.append([json_res["pro_id"], img_code, json_res["pro_title_2"], json_res["pro_title_3"],
                              json_res["pro_Mfr_No"], json_res["pro_Manufacturer"], json_res["pro_Package"],
                              pdf_code, json_res["pro_Description"], img_status])
        except Exception as e:
            print(e)
            # Append (not overwrite) the failed record so it can be retried later
            with open('错误数据.txt', mode='a', encoding='utf-8') as f:
                f.write(res or '')
                f.write(';\n')
    check_sql(json_list)
    print('Wrote {} records to the database'.format(len(json_list)))
    return []


if __name__ == '__main__':
    key = 'appelectronic'
    q = queue.Queue()
    result_list = redis_opt(key)
    producer = threading.Thread(target=produce, args=(result_list,))
    consumers = [threading.Thread(target=into_list) for _ in range(11)]
    producer.start()
    # Important: 1 producer thread, 11 consumer threads. Consumers exit as soon as the
    # queue is empty, so if they started together with the producer they could wrongly
    # conclude that there is no work left.
    time.sleep(10)
    for consumer in consumers:
        consumer.start()
    producer.join()
    for consumer in consumers:
        consumer.join()
```

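A few things to watch out for: the number of open connections exceeded the limit, so switch to short-lived connections (`Connection: close`) and put a random UA in the headers. No proxy IP is needed here.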
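About the `time.sleep(10)` in the code above: these consumers stop as soon as the queue looks empty, so they must not start before the producer has filled it. Separately, checking `q.empty()` and then calling `q.get()` is not atomic: with 11 consumers, two threads can both see the last remaining item, one takes it, and the other blocks in `get()` forever. A sketch of a consumer loop without that race, assuming (as in this case) that the whole work list is known up front; `drain` and `run_drainers` are hypothetical names:

```python
import queue
import threading
from typing import Callable, List


def drain(q: "queue.Queue[str]", handle: Callable[[str], None]) -> None:
    """Consume until the queue is empty; get_nowait() avoids the empty()/get() race."""
    while True:
        try:
            item = q.get_nowait()
        except queue.Empty:
            break              # nothing left: this consumer is done
        handle(item)


def run_drainers(items: List[str], num_consumers: int = 11) -> List[str]:
    q: "queue.Queue[str]" = queue.Queue()
    for item in items:         # fill the queue completely first, so no sleep() is needed
        q.put(item)
    done: List[str] = []
    lock = threading.Lock()

    def handle(item: str) -> None:
        with lock:
            done.append(item)  # stand-in for the real download/insert work

    threads = [threading.Thread(target=drain, args=(q, handle)) for _ in range(num_consumers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return done
```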

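Case 3

0- Analysis

Our final product data is ready, but the images need a watermark before they are uploaded to the company website. With hundreds of thousands of images, a single thread isn't enough either, so we reuse the same approach.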
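For a fixed list of files, the standard library's `concurrent.futures.ThreadPoolExecutor` can replace the hand-written queue and thread list; it is the same producer-consumer idea with the queue managed internally. This is a swapped-in technique, not the author's code: `process_all` is an illustrative name, `add_watermark_to` is a hypothetical stand-in for the real Pillow work, and how much threads speed up image processing depends on how much of that work releases the GIL.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, List


def process_all(paths: List[str], task: Callable[[str], str], workers: int = 10) -> Dict[str, str]:
    """Run task(path) for every path on a thread pool; map each path to its result or error."""
    results: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {pool.submit(task, p): p for p in paths}
        for fut in as_completed(futures):
            path = futures[fut]
            try:
                results[path] = fut.result()
            except Exception as exc:  # one bad image should not stop the batch
                results[path] = f"error: {exc}"
    return results


def add_watermark_to(path: str) -> str:
    """Hypothetical placeholder for the real watermarking step."""
    if not path.endswith(".jpg"):
        raise ValueError("not a jpg")
    return path.replace(".jpg", "_wm.jpg")
```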

1- Straight to the code

```python
import os, queue, threading, time
from PIL import Image

DONE_FILE = '已添加水印.txt'  # names of images that already have a watermark
OUTPUT_DIR = r"D:\Scriptspace\本地数据补充\数据筛选\加水印"
done_lock = threading.Lock()


def loop_dir():
    file_path = r"./image"
    # Read the list of finished images once, not once per file
    done_names = set()
    if os.path.exists(DONE_FILE):
        with open(DONE_FILE, mode='r', encoding='utf-8') as f:
            done_names = set(f.read().split(';'))
    result_list = []
    for filepath, dirnames, filenames in os.walk(file_path):
        for filename in filenames:
            if filename in done_names:
                continue
            full_path = os.path.join(filepath, filename)
            print(full_path)
            result_list.append(full_path)
    return result_list


def into_q(result_list):
    for item in result_list:
        q.put(item)
    print('Producer finished')


def add_watermark():
    # Load the watermark once per thread; RGBA so it can serve as its own paste mask
    watermark = Image.open(r"水印.png").convert('RGBA')
    # watermark = watermark.resize((new_width, new_height))  # scale the watermark here if needed
    x, y = 1, 1  # top-left position of the watermark
    while True:
        try:
            # get_nowait() avoids the race between q.empty() and q.get()
            pic_path = q.get_nowait()
        except queue.Empty:
            print('Queue is empty')
            break
        file_name = os.path.basename(pic_path)
        print('Got file_name {}'.format(file_name))
        img = Image.open(pic_path)
        img.paste(watermark, (x, y), watermark)
        img.save(os.path.join(OUTPUT_DIR, file_name))
        exist_folder(file_name)  # record progress so a rerun skips this image


def exist_folder(pro_id):
    # The lock keeps concurrent appends from interleaving
    with done_lock:
        with open(DONE_FILE, mode='a', encoding='utf-8') as f:
            f.write(pro_id)
            f.write(';')
    return ''


if __name__ == '__main__':
    '''
    1- Read all images in the directory
    2- Add the watermark
    '''
    os.makedirs(OUTPUT_DIR, exist_ok=True)
    q = queue.Queue()
    result_list = loop_dir()
    producer = threading.Thread(target=into_q, args=(result_list,))
    consumers = [threading.Thread(target=add_watermark) for _ in range(10)]
    producer.start()
    time.sleep(10)  # head start for the producer: consumers exit when the queue is empty
    for consumer in consumers:
        consumer.start()
    producer.join()
    for consumer in consumers:
        consumer.join()
```

All 360,000 images were watermarked in just 5 minutes.

Update: here is the full code

import threading, os, queue, shutil, requests, cchardet, traceback, random, json, pymysql, redis, time

from lxml import etree

# 创建一个任务队列

task_queue = queue.Queue()

class MysqlClass:

def __init__(self, host="*.*.*.*", port=3306, user="*", password="*", database='*'):

self.host = host

self.port = port

self.user = user

self.password = password

self.database = database

self.mydb = pymysql.connect(

host=self.host, # 默认用主机名

port=self.port,

user=self.user, # 默认用户名

password=self.password, # mysql密码

database=self.database, # 库名

charset='utf8' # 编码方式

)

self.mycursor = self.mydb.cursor()

def read_mysql(self, sql):

# 'select * from filter_pro'

data = []

try:

self.mycursor.execute(sql)

data = self.mycursor.fetchall()

print('mysql读取执行成功。。。')

except Exception as e:

print('读取执行失败。。。')

print(e)

self.mydb.rollback()

self.mydb.commit()

self.mydb.close()

return data

def insert_mysql(self, data_list, sql):

flag = False

lock = threading.Lock()

try:

with lock:

self.mycursor.executemany(sql, data_list)

print('mysql插入执行成功。。。')

flag = True

except Exception as e:

print('插入执行失败。。。{}{}'.format(sql, str(data_list)))

print(e)

self.mydb.rollback()

finally:

# 关闭游标和数据库连接

self.mydb.commit()

self.mydb.close()

return flag

class RedisClass:

def __init__(self, db_key, db_index, db_host='*.*.*.*', db_port=6379, db_password='*', filter_start_index=0, filter_end_index=0):

# 传入DB表名,和DB序号

self.db_key = db_key

self.db_index = db_index

self.db_host = db_host

self.db_port = db_port

self.db_password = db_password

self.filter_start_index = filter_start_index

self.filter_end_index = filter_end_index

self.redis_pool = redis.ConnectionPool(host=self.db_host, port=self.db_port, password=self.db_password,

db=self.db_index)

self.redis_conn = redis.Redis(connection_pool=self.redis_pool)

def count_redis_data(self):

# 计数: 获取redis中数据数量

return self.redis_conn.zcard(self.db_key)

def read_redis(self):

# 读取redis中全部数据

if self.filter_start_index == 0 and self.filter_end_index == 0:

# 如果无输入查询数量, 则全表查询

self.filter_end_index = self.redis_conn.zcard(self.db_key)

print('查询到的数量为: {}'.format(self.filter_end_index))

res_list = self.redis_conn.zrange(self.db_key, self.filter_start_index, self.filter_end_index)

return [res.decode('utf-8') for res in res_list]

def insert_redis(self, redis_dict):

flag = False

self.redis_conn.zadd(self.db_key, redis_dict)

return flag

# 生产者线程类

class ProducerThread(threading.Thread):

def __init__(self, mysql_pro_info):

super().__init__()

self.mysql_pro_info = mysql_pro_info

def run(self):

for item in self.mysql_pro_info:

task_queue.put(item)

print(f"Produced by {self.name}: {item}")

# 消费者线程类

class ConsumerThread(threading.Thread):

def run(self):

'''

1- 连接 redis

2- 查询到结果

3- 结果写入redis

:return:

'''

redis_obj_retry = RedisClass('WeeklyRetry', 9)

redis_obj_done = RedisClass('WeeklyDone', 9)

while True:

# 从队列获取任务

item = task_queue.get()

item = str(item).replace('! ', '').strip()

if len(str(item)) < 3:

print('{}小于3'.format(str(item)))

continue

# 如果产品已经爬取, 则跳过

redis_done = redis_obj_done.read_redis()

if str(item) in redis_done:

print("数据{}已查询, 跳过".format(item))

continue

print(f"Consumed by {self.name}: {item}")

print(' consume %s' % item)

result_dict = crawl_info(str(item))

# 获取到的数据写入 redis

redis_dict = {}

if result_dict:

print('我们看一下result_dict: {}'.format(str(result_dict)))

if result_dict.get('retry'):

# 如果数据异常, 则重试

redis_dict[str(item)] = 0

redis_obj_retry.insert_redis(redis_dict)

result_tup = (result_dict['pro_id'], result_dict['pro_data_attr'], result_dict['pro_img'],

result_dict['pro_title_1'], result_dict['pro_title_2'], result_dict['pro_title_3'],

result_dict['pro_title_4'], result_dict['pro_Mfr_No'], result_dict['pro_USHTS'],

result_dict['pro_Manufacturer'], result_dict['pro_Package'], result_dict['pro_Datasheet'],

result_dict['pro_Description'])

mysql_obj_insert = MysqlClass()

mysql_sql = "INSERT IGNORE INTO weekly_update (pro_id, pro_data_attr, pro_img, pro_title_1, pro_title_2, pro_title_3, " \

"pro_title_4, pro_mfr_no, pro_ushts, pro_manufacturer, pro_package_url, pro_datasheet_url, pro_description)" \

" VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)"

mysql_obj_insert.insert_mysql([result_tup], mysql_sql)

redis_obj_done.insert_redis({str(item): 0})

print('这里正常接受了数据: {}'.format(str(result_dict)))

# 标记任务完成

task_queue.task_done()

print('----------------------------------------------------------------')

# 爬虫主逻辑

def crawl_info(pro_name):

# ============================================== 列表页数据抓取 ==============================================

result_dict = {}

# 格式化url

pro_name_str = pro_name.strip()

# 构建url用指定方法

pro_name_param = pro_name_str.replace(' ', '%')

url = f"https://www.*.com/keywords/{pro_name_param}"

print('要爬取的url: ' + url)

# 爬取列表页数据, 重试7次

url_flag = False

detail_url = ''

for t in range(7):

status, html, redirected_url = downloader(url, debug=True)

# 数据解析, 获取详情url

if status !=200:

if t > 6:

print('============={}查询页面状态码不为200============='.format(url))

# 6次请求失败, 则返回异常

return {'retry': 1}

continue

html_page = etree.HTML(html)

if not html_page:

return {}

# 如果该页查询无结果, 直接返回

if html_page.xpath("//b[contains(text(), 'Sorry, no results.')]"):

# 数据不存在

print('Sorry, no results.')

return {}

# 如果查询到进入了详情页, 则直接解析数据返回

pro_name_str = pro_name_str.replace("'", "") # xpath语法中不能包含单引号,或者其他特殊字符

if html_page.xpath("//h2[contains(text(), '{}')]/text()".format(pro_name_str)):

print('已经重定向到详情页: {}'.format(redirected_url))

detail_url = redirected_url

detial_html_page = html_page

if not detail_url:

# 未进入到详情页, 又可以查询到数据, 则解析列表

if not html_page.xpath("//div[@class='bot']//a[@title='{}']/@href".format(pro_name_str)):

# 没有详情页地址

return {}

# 在列表中查询到指定数据

detail_url = html_page.xpath("//div[@class='bot']//a[@title='{}']/@href".format(pro_name_str))[0]

detail_url = 'https://www.*.com' + detail_url

# ============================================== 详情页数据抓取 ==============================================

detial_status, detial_html, detial_redirected_url = downloader(detail_url)

if detial_status != 200:

print('{}详情页面查询失败============================'.format(detail_url))

if t > 6:

return {'retry': 1}

return result_dict

if type(html_page) == 'NoneType':

return {}

# 数据解析, 获取详情数据

detial_html_page = etree.HTML(detial_html)

if not detial_html_page.xpath("//h2/text()"):

print('未查询到数据!!!')

return {}

print('开始解析数据。。。')

try:

pro_id = ''

if detial_html_page.xpath("//h2/text()"):

pro_id = detial_html_page.xpath("//h2/text()")[0]

pro_img = ''

if detial_html_page.xpath("//div[@class='imgBox']/img/@src"):

pro_img = detial_html_page.xpath("//div[@class='imgBox']/img/@src")[0]

pro_title_1 = ''

if detial_html_page.xpath("//div[@class='crumbs w']/a/text()"):

pro_title_1 = detial_html_page.xpath("//div[@class='crumbs w']/a/text()")[0]

pro_title_2 = ''

if detial_html_page.xpath("//div[@class='crumbs w']/a/text()"):

pro_title_2 = detial_html_page.xpath("//div[@class='crumbs w']/a/text()")[1]

pro_title_3 = ''

if detial_html_page.xpath("//div[@class='crumbs w']/a/text()"):

pro_title_3 = detial_html_page.xpath("//div[@class='crumbs w']/a/text()")[2]

pro_title_4 = ''

if detial_html_page.xpath("//div[@class='crumbs w']/a/text()"):

pro_title_4 = detial_html_page.xpath("//div[@class='crumbs w']/a/text()")[3]

pro_Mfr_No = ''

if detial_html_page.xpath("//div[@class='cot']/div[@attr='Mfr No:']/text()"):

pro_Mfr_No = detial_html_page.xpath("//div[@class='cot']/div[@attr='Mfr No:']/text()")[0]

pro_USHTS = ''

if detial_html_page.xpath("//div[@class='cot']/div[@attr='USHTS:']/text()"):

pro_USHTS = detial_html_page.xpath("//div[@class='cot']/div[@attr='USHTS:']/text()")[0]

pro_Manufacturer = ''

if detial_html_page.xpath("//div[@class='cot']/div[@attr='Manufacturer:']/a/text()"):

pro_Manufacturer = detial_html_page.xpath("//div[@class='cot']/div[@attr='Manufacturer:']/a/text()")[0]

pro_Package = ''

if detial_html_page.xpath("//div[@class='cot']/div[@attr='Package:']/text()"):

pro_Package = detial_html_page.xpath("//div[@class='cot']/div[@attr='Package:']/text()")[0]

pro_Datasheet = ''

if detial_html_page.xpath("//div[@class='cot']/div[@attr='Datasheet:']/a/@href"):

pro_Datasheet = detial_html_page.xpath("//div[@class='cot']/div[@attr='Datasheet:']/a/@href")[0]

pro_Description = ''

if detial_html_page.xpath("//div[@class='cot']/div[@attr='Description:']/text()"):

pro_Description = detial_html_page.xpath("//div[@class='cot']/div[@attr='Description:']/text()")[0]

pro_data_attr = {}

attr_list = detial_html_page.xpath("//div[@class='specifications']//ul/li")

for attr in attr_list:

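attr_key = attr.xpath(".//span/text()")

attr_value = attr.xpath(".//p/text()")

# Skip spec rows that lack a label or a value instead of aborting the whole page

if attr_key and attr_value:

pro_data_attr[attr_key[0]] = attr_value[0]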

result_dict['pro_data_attr'] = str(pro_data_attr).replace('\n', '').strip()

result_dict['pro_id'] = pro_id.replace('\n', '').strip()

result_dict['pro_img'] = pro_img.replace('\n', '').strip()

result_dict['pro_title_1'] = pro_title_1.replace('\n', '').strip()

result_dict['pro_title_2'] = pro_title_2.replace('\n', '').strip()

result_dict['pro_title_3'] = pro_title_3.replace('\n', '').strip()

result_dict['pro_title_4'] = pro_title_4.replace('\n', '').strip()

result_dict['pro_Mfr_No'] = pro_Mfr_No.replace('\n', '').strip()

result_dict['pro_USHTS'] = pro_USHTS.replace('\n', '').strip()

result_dict['pro_Manufacturer'] = pro_Manufacturer.replace('\n', '').strip()

result_dict['pro_Package'] = pro_Package.replace('\n', '').strip()

result_dict['pro_Datasheet'] = pro_Datasheet.replace('\n', '').strip()

result_dict['pro_Description'] = pro_Description.replace('\n', '').strip()

break

except Exception as e:

print('detail_url {}: XPath parsing failed: {}'.format(detail_url, e))

print('-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=')

return result_dict
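Most fields above are extracted with the pattern `if page.xpath(x): v = page.xpath(x)[0]`, which evaluates every XPath twice and still breaks if the index is out of range. A small helper can turn each field into a single call. This is a minimal sketch: `first_text` is a hypothetical name, and it only assumes that lxml's `xpath()` returns a list. The demo therefore feeds it plain lists instead of a parsed page.

```python
def first_text(results, index=0, default=''):
    """Return results[index] with newlines removed and surrounding whitespace
    stripped, or `default` when that entry does not exist.

    `results` is assumed to be the list an lxml xpath() call returns,
    e.g. page.xpath("//h2/text()").
    """
    if 0 <= index < len(results):
        value = results[index]
        if isinstance(value, str):
            return value.replace('\n', '').strip()
        return value  # e.g. an element node: hand it back untouched
    return default


# Demo: plain lists stand in for xpath() results
crumbs = ['Home', '\n  Semiconductors  ']  # a page with only two breadcrumb links
pro_title_1 = first_text(crumbs, 0)
pro_title_2 = first_text(crumbs, 1)
pro_title_3 = first_text(crumbs, 2)  # crumbs[2] would raise IndexError here
print(pro_title_1, pro_title_2, repr(pro_title_3))
```

On a real page this would read `pro_id = first_text(detial_html_page.xpath("//h2/text()"))`. That means one XPath evaluation per field, and the separate `.replace('\n', '').strip()` step is no longer needed.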

# Downloader
def downloader(url, timeout=10, headers=None, debug=False, binary=False):
    # Copy caller-supplied headers instead of silently discarding them
    headers = dict(headers or {})
    headers.setdefault('Connection', 'close')
    user_agent_list = [
        "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36",
        "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36",
        "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.62 Safari/537.36",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)",
        "Mozilla/5.0 (Macintosh; U; PPC Mac OS X 10.5; en-US; rv:1.9.2.15) Gecko/20110303 Firefox/3.6.15",
    ]
    # Rotate the User-Agent on every request
    headers['User-Agent'] = random.choice(user_agent_list)
    redirected_url = url
    try:
        # Get a proxy IP from the local pool
        proxies = get_local_proxy()
        print('Got proxy IP: {}'.format(proxies))
        r = requests.get(url, headers=headers, timeout=timeout, proxies=proxies, allow_redirects=True)
        if binary:
            html = r.content
        else:
            # cchardet may return None; fall back to UTF-8 and replace undecodable bytes
            encoding = cchardet.detect(r.content)['encoding'] or 'utf-8'
            html = r.content.decode(encoding, errors='replace')
        status = r.status_code
        redirected_url = r.url
    except Exception:
        print('Error fetching URL: {}'.format(url))
        if debug:
            traceback.print_exc()
        msg = 'failed download: {}'.format(url)
        print(msg)
        html = b'' if binary else ''
        status = 0
    return status, html, redirected_url
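If `cchardet.detect()` returns `None` as the encoding, `r.content.decode(None)` raises a `TypeError`. That can happen, for example, with very short or empty bodies. The page is then reported as a failed download even though the request itself succeeded. Below is a minimal sketch of a fallback chain, assuming you pass in whatever encoding the detector returned. `decode_html` is a hypothetical helper, and the candidate list (`utf-8`, then `gb18030`) is an assumption aimed at Chinese/English sites. It is not part of the original code.

```python
def decode_html(raw, detected_encoding=None, fallbacks=('utf-8', 'gb18030')):
    """Decode a response body, trying the detected encoding first.

    `detected_encoding` is assumed to be cchardet.detect(raw)['encoding'],
    which can be None; the fallback list is an assumption, adjust per site.
    """
    candidates = [detected_encoding] if detected_encoding else []
    candidates.extend(fallbacks)
    for encoding in candidates:
        try:
            return raw.decode(encoding)
        except (LookupError, UnicodeDecodeError):
            # Unknown codec name, or bytes that are invalid in this codec: try the next one
            continue
    # Last resort: never fail the whole page over a few bad bytes
    return raw.decode('utf-8', errors='replace')


body = '型号参数'.encode('gb18030')  # a GB-encoded page body
print(decode_html(body, None) == '型号参数')  # detector gave no answer
print(decode_html(b'<h2>ABC</h2>', 'no-such-codec'))  # bogus codec name is skipped
```

Trying strict decodes first and only falling back to `errors='replace'` at the end keeps correctly encoded pages byte-exact. Mis-detected pages still yield something parseable.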

def get_local_proxy():
    # Read the local proxy pool file (代理池.txt, "proxy pool"): a list of dicts written with single quotes
    with open('代理池.txt', mode='r', encoding='utf-8') as f:
        res_str = f.read()
    # Turn single quotes into double quotes so json can parse it
    res_str = res_str.replace("'", '"')
    proxies_list = json.loads(res_str)
    proxies = random.choice(proxies_list)
    proxy = {"https": proxies['https']}
    return proxy
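`get_local_proxy()` re-reads and re-parses the proxy file on every request. With 100 consumer threads, that adds up to a lot of repeated file I/O. Judging by the quote swapping, the file holds a Python-style list of single-quoted dicts. Below is a minimal sketch that parses it once with `ast.literal_eval` (which accepts single quotes directly) and then picks at random from memory. The `ProxyPool` class is hypothetical, and the file contents in the demo are made-up placeholders in the assumed format.

```python
import ast
import os
import random
import tempfile


class ProxyPool:
    """Load the proxy list once and hand out random entries (hypothetical helper)."""

    def __init__(self, path):
        with open(path, mode='r', encoding='utf-8') as f:
            # literal_eval parses Python literals, so single-quoted dicts need no quote swapping
            entries = ast.literal_eval(f.read())
        # Keep only entries that actually carry an https proxy
        self._proxies = [{'https': e['https']} for e in entries if e.get('https')]
        if not self._proxies:
            raise ValueError('no https proxies found in {}'.format(path))

    def get(self):
        # Same shape as get_local_proxy() returns: usable as requests' `proxies=` argument
        return random.choice(self._proxies)


# Demo with a throwaway file in the assumed format
with tempfile.NamedTemporaryFile(mode='w', suffix='.txt', delete=False, encoding='utf-8') as tmp:
    tmp.write("[{'https': 'http://10.0.0.1:8888'}, {'https': 'http://10.0.0.2:8888'}]")
pool = ProxyPool(tmp.name)
print(pool.get())
os.remove(tmp.name)
```

The pool is only read after construction, so sharing one instance across the consumer threads needs no lock.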

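if __name__ == '__main__':
    redis_obj = RedisClass('new_products', 15)
    # redis_obj = RedisClass('WeeklyRetry', 9)
    mysql_pro_info = redis_obj.read_redis()
    print(mysql_pro_info)
    # Create the producer thread
    producer_thread = ProducerThread(mysql_pro_info)
    producer_thread.start()
    # Create the consumer threads
    consumer_threads = []
    for i in range(100):  # 100 consumer threads
        consumer_thread = ConsumerThread()
        consumer_threads.append(consumer_thread)
        consumer_thread.start()
    # Wait until the producer has queued everything, then until every queued task is processed
    producer_thread.join()
    task_queue.join()
    # Wait for the consumers to exit. join() does not stop a thread: this only returns
    # once each ConsumerThread leaves its loop on its own (for example after a stop signal)
    for thread in consumer_threads:
        thread.join()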
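The main block relies on `ProducerThread`, `ConsumerThread` and `task_queue`, which are defined elsewhere in the script. `Thread.join()` does not stop a thread. If each consumer loops on `task_queue.get()` forever, the final `thread.join()` calls never return. Below is a self-contained sketch of the same shape using the standard `queue.Queue`, with one sentinel (`None`) per consumer as the shutdown signal. The `crawl` stand-in, the worker count, the queue size and the sentinel approach are all assumptions. It does not reproduce the original classes.

```python
import queue
import threading

NUM_CONSUMERS = 4  # the original starts 100; kept small for the demo
STOP = None        # sentinel value: each consumer exits when it receives one


def crawl(item):
    # Hypothetical stand-in for crawl_info(): pretend every lookup succeeds
    return {'pro_name': item, 'ok': True}


def producer(items, task_queue):
    for item in items:
        task_queue.put(item)  # blocks while the bounded queue is full (backpressure)
    for _ in range(NUM_CONSUMERS):
        task_queue.put(STOP)  # one sentinel per consumer


def consumer(task_queue, results, lock):
    while True:
        item = task_queue.get()
        try:
            if item is STOP:
                return
            try:
                result = crawl(item)
            except Exception as exc:  # keep the worker alive; record the failure instead
                result = {'pro_name': item, 'error': repr(exc)}
            with lock:
                results.append(result)
        finally:
            task_queue.task_done()  # runs for every get(), sentinel included


def run(items):
    task_queue = queue.Queue(maxsize=1000)
    results, lock = [], threading.Lock()
    consumers = [threading.Thread(target=consumer, args=(task_queue, results, lock))
                 for _ in range(NUM_CONSUMERS)]
    for t in consumers:
        t.start()
    producer_thread = threading.Thread(target=producer, args=(items, task_queue))
    producer_thread.start()
    producer_thread.join()  # everything, sentinels included, is now in the queue
    task_queue.join()       # ...and every queued item has been marked done
    for t in consumers:
        t.join()            # returns because each consumer got a sentinel
    return results


if __name__ == '__main__':
    out = run(['model-{}'.format(i) for i in range(50)])
    print(len(out))
```

The order of the joins matters. `Queue.join()` only waits for items that have already been `put()`, so if it runs before the producer has queued anything it can return immediately. Catching exceptions inside the consumer also matters here. A consumer that dies would leave its sentinel unconsumed, and `task_queue.join()` would then block forever.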

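Summary:

The three cases above are all problems I actually ran into at work. Once you start thinking in these terms, you won't forget the producer-consumer model.

If you keep forgetting a concept, it usually means you haven't used it in a real project yet. Practice it on a few more projects and you won't have to worry about forgetting the producer-consumer model again.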