slam十四讲第二版 pdf_视觉SLAM十四讲 第九章 实践:设计前端 局部地图 代码解析...

总体思路

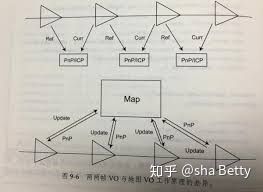

增加了地图点模块,并且增加了对地图点的优化模块,维护地图规模。整个程序的流程如下:

- 输入:color,depth以及二者对应的时间戳

- 初始帧

- 当前帧和参考帧都设置为输入帧

- 提取特征点

- 计算描述子

- 增加关键帧

- 其他帧

- 将当前帧设置为输入帧

- 当前帧的位姿设置为参考帧的位姿

- 提取特征点

- 计算描述子

- 特征匹配

- 地图中的点都是三维坐标

- 首先检查点是否在视野范围内(先预设当前帧的位姿为参考帧的位姿)

- 匹配的时候,地图点的描述子进行匹配,

- 地图中的点都是三维坐标

- PnP估计位姿

- 检查位姿是否OK,位姿的R,t不超过阈值量属OK。

- 如果ok

- 将当前帧的位姿更新为估计的位姿。

- 优化地图点

- 用更新的位姿计算地图点是否在当前帧可见,删除地图点

- 由匹配次数除以可见次数,得到一个匹配比例。如果匹配比例小于设定的匹配比例,则删除地图点。

- 获取当前帧和地图点的视角,向量为3D坐标减去相机中心坐标作为法向量n,

乘以点的法向量,求反余弦,即为点与平面的视角。如果视角大于

,去掉该地图点。

- 如果地图点数量小于100,增加地图点。

- 如果地图点数量大于1000,将去掉的比例增加0.05。

- 否则擦除比例为0.1。

- 检查关键帧是否ok,如果Ok

- 增加关键帧

- 否则

-

- 对丢帧计数

- 如果计数超过阈值将状态更新为丢失。

-

- 如果ok

visual_odometry.cpp

/*

*

* Copyright (C) 2016

*

* This program is free software: you can redistribute it and/or modify

* it under the terms of the GNU General Public License as published by

* the Free Software Foundation, either version 3 of the License, or

* (at your option) any later version.

*

* This program is distributed in the hope that it will be useful,

* but WITHOUT ANY WARRANTY; without even the implied warranty of

* MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

* GNU General Public License for more details.

*

* You should have received a copy of the GNU General Public License

* along with this program. If not, see

#include

#include

#include

#include

#include "myslam/config.h"

#include "myslam/visual_odometry.h"

#include "myslam/g2o_types.h"

namespace myslam

{

VisualOdometry::VisualOdometry() :

state_ ( INITIALIZING ), ref_ ( nullptr ), curr_ ( nullptr ), map_ ( new Map ), num_lost_ ( 0 ), num_inliers_ ( 0 ), matcher_flann_ ( new cv::flann::LshIndexParams ( 5,10,2 ) )

{

num_of_features_ = Config::get ( "number_of_features" );

scale_factor_ = Config::get ( "scale_factor" );

level_pyramid_ = Config::get ( "level_pyramid" );

match_ratio_ = Config::get ( "match_ratio" );

max_num_lost_ = Config::get ( "max_num_lost" );

min_inliers_ = Config::get ( "min_inliers" );

key_frame_min_rot = Config::get ( "keyframe_rotation" );

key_frame_min_trans = Config::get ( "keyframe_translation" );

map_point_erase_ratio_ = Config::get ( "map_point_erase_ratio" );

orb_ = cv::ORB::create ( num_of_features_, scale_factor_, level_pyramid_ );

}

VisualOdometry::~VisualOdometry()

{

}

bool VisualOdometry::addFrame ( Frame::Ptr frame )

{

switch ( state_ )

{

case INITIALIZING:

{

state_ = OK;

curr_ = ref_ = frame;

// extract features from first frame and add them into map

extractKeyPoints();

computeDescriptors();

addKeyFrame(); // the first frame is a key-frame

break;

}

case OK:

{

curr_ = frame;

curr_->T_c_w_ = ref_->T_c_w_;

extractKeyPoints();

computeDescriptors();

featureMatching();

poseEstimationPnP();

if ( checkEstimatedPose() == true ) // a good estimation

{

curr_->T_c_w_ = T_c_w_estimated_;

optimizeMap();

num_lost_ = 0;

if ( checkKeyFrame() == true ) // is a key-frame

{

addKeyFrame();

}

}

else // bad estimation due to various reasons

{

num_lost_++;

if ( num_lost_ > max_num_lost_ )

{

state_ = LOST;

}

return false;

}

break;

}

case LOST:

{

cout<<"vo has lost."<detect ( curr_->color_, keypoints_curr_ );

cout<<"extract keypoints cost time: "<compute ( curr_->color_, keypoints_curr_, descriptors_curr_ );

cout<<"descriptor computation cost time: "< matches;

// select the candidates in map

Mat desp_map;

vector candidate;

for ( auto& allpoints: map_->map_points_ )

{

MapPoint::Ptr& p = allpoints.second;

// check if p in curr frame image

if ( curr_->isInFrame(p->pos_) )

{

// add to candidate

p->visible_times_++;

candidate.push_back( p );

desp_map.push_back( p->descriptor_ );

}

}

matcher_flann_.match ( desp_map, descriptors_curr_, matches );

// select the best matches

float min_dis = std::min_element (

matches.begin(), matches.end(),

[] ( const cv::DMatch& m1, const cv::DMatch& m2 )

{

return m1.distance < m2.distance;

} )->distance;

match_3dpts_.clear();

match_2dkp_index_.clear();

for ( cv::DMatch& m : matches )

{

if ( m.distance < max ( min_dis*match_ratio_, 30.0 ) )

{

match_3dpts_.push_back( candidate[m.queryIdx] );

match_2dkp_index_.push_back( m.trainIdx );

}

}

cout<<"good matches: "< pts3d;

vector pts2d;

for ( int index:match_2dkp_index_ )

{

pts2d.push_back ( keypoints_curr_[index].pt );

}

for ( MapPoint::Ptr pt:match_3dpts_ )

{

pts3d.push_back( pt->getPositionCV() );

}

Mat K = ( cv::Mat_ ( 3,3 ) <<

ref_->camera_->fx_, 0, ref_->camera_->cx_,

0, ref_->camera_->fy_, ref_->camera_->cy_,

0,0,1

);

Mat rvec, tvec, inliers;

cv::solvePnPRansac ( pts3d, pts2d, K, Mat(), rvec, tvec, false, 100, 4.0, 0.99, inliers );

num_inliers_ = inliers.rows;

cout<<"pnp inliers: "< ( 0,0 ), rvec.at ( 1,0 ), rvec.at ( 2,0 ) ),

Vector3d ( tvec.at ( 0,0 ), tvec.at ( 1,0 ), tvec.at ( 2,0 ) )

);

// using bundle adjustment to optimize the pose

typedef g2o::BlockSolver> Block;

Block::LinearSolverType* linearSolver = new g2o::LinearSolverDense();

Block* solver_ptr = new Block ( linearSolver );

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg ( solver_ptr );

g2o::SparseOptimizer optimizer;

optimizer.setAlgorithm ( solver );

g2o::VertexSE3Expmap* pose = new g2o::VertexSE3Expmap();

pose->setId ( 0 );

pose->setEstimate ( g2o::SE3Quat (

T_c_w_estimated_.rotation_matrix(), T_c_w_estimated_.translation()

));

optimizer.addVertex ( pose );

// edges

for ( int i=0; i ( i,0 );

// 3D -> 2D projection

EdgeProjectXYZ2UVPoseOnly* edge = new EdgeProjectXYZ2UVPoseOnly();

edge->setId ( i );

edge->setVertex ( 0, pose );

edge->camera_ = curr_->camera_.get();

edge->point_ = Vector3d ( pts3d[index].x, pts3d[index].y, pts3d[index].z );

edge->setMeasurement ( Vector2d ( pts2d[index].x, pts2d[index].y ) );

edge->setInformation ( Eigen::Matrix2d::Identity() );

optimizer.addEdge ( edge );

// set the inlier map points

match_3dpts_[index]->matched_times_++;

}

optimizer.initializeOptimization();

optimizer.optimize ( 10 );

T_c_w_estimated_ = SE3 (

pose->estimate().rotation(),

pose->estimate().translation()

);

cout<<"T_c_w_estimated_: "<T_c_w_ * T_c_w_estimated_.inverse();

Sophus::Vector6d d = T_r_c.log();

if ( d.norm() > 5.0 )

{

cout<<"reject because motion is too large: "<T_c_w_ * T_c_w_estimated_.inverse();

Sophus::Vector6d d = T_r_c.log();

Vector3d trans = d.head<3>();

Vector3d rot = d.tail<3>();

if ( rot.norm() >key_frame_min_rot || trans.norm() >key_frame_min_trans )

return true;

return false;

}

void VisualOdometry::addKeyFrame()

{

if ( map_->keyframes_.empty() )

{

// first key-frame, add all 3d points into map

for ( size_t i=0; ifindDepth ( keypoints_curr_[i] );

if ( d < 0 )

continue;

Vector3d p_world = ref_->camera_->pixel2world (

Vector2d ( keypoints_curr_[i].pt.x, keypoints_curr_[i].pt.y ), curr_->T_c_w_, d

);

Vector3d n = p_world - ref_->getCamCenter();

n.normalize();

MapPoint::Ptr map_point = MapPoint::createMapPoint(

p_world, n, descriptors_curr_.row(i).clone(), curr_.get()

);

map_->insertMapPoint( map_point );

}

}

map_->insertKeyFrame ( curr_ );

ref_ = curr_;

}

void VisualOdometry::addMapPoints()

{

// add the new map points into map

vector matched(keypoints_curr_.size(), false);

for ( int index:match_2dkp_index_ )

matched[index] = true;

for ( int i=0; ifindDepth ( keypoints_curr_[i] );

if ( d<0 )

continue;

Vector3d p_world = ref_->camera_->pixel2world (

Vector2d ( keypoints_curr_[i].pt.x, keypoints_curr_[i].pt.y ),

curr_->T_c_w_, d

);

Vector3d n = p_world - ref_->getCamCenter();

n.normalize();

MapPoint::Ptr map_point = MapPoint::createMapPoint(

p_world, n, descriptors_curr_.row(i).clone(), curr_.get()

);

map_->insertMapPoint( map_point );

}

}

void VisualOdometry::optimizeMap()

{

// remove the hardly seen and no visible points

for ( auto iter = map_->map_points_.begin(); iter != map_->map_points_.end(); )

{

if ( !curr_->isInFrame(iter->second->pos_) )

{

iter = map_->map_points_.erase(iter);

continue;

}

float match_ratio = float(iter->second->matched_times_)/iter->second->visible_times_;

if ( match_ratio < map_point_erase_ratio_ )

{

iter = map_->map_points_.erase(iter);

continue;

}

double angle = getViewAngle( curr_, iter->second );

if ( angle > M_PI/6. )

{

iter = map_->map_points_.erase(iter);

continue;

}

if ( iter->second->good_ == false )

{

// TODO try triangulate this map point

}

iter++;

}

if ( match_2dkp_index_.size()<100 )

addMapPoints();

if ( map_->map_points_.size() > 1000 )

{

// TODO map is too large, remove some one

map_point_erase_ratio_ += 0.05;

}

else

map_point_erase_ratio_ = 0.1;

cout<<"map points: "<map_points_.size()<pos_ - frame->getCamCenter();

n.normalize();

return acos( n.transpose()*point->norm_ );

}

}

《视觉SLAM十四讲》中对VO中加入地图的阐述:

改进地图:将VO匹配到的特征点放到地图中,并将当前帧与地图点进行匹配,计算位姿。这里的地图是把各帧特征点缓存到一个地方构成的特征点集合。

优势:能够维护一个不断更新的地图。只要地图是正确的,即使中间某帧出了差错,仍有希望求出之后那些帧的正确位置。

两两VO与地图VO工作原理的差异局部地图:描述了附近的特征点信息,只保留离相机当前位置较近的特征点,把远的或视野外的特征点丢掉。这些特征点用来和当前帧匹配来求相机位置,所以希望它能够做得比较快。

全局地图:记录了从SLAM运行以来的所有特征点。规模要大一些,主要用来表达整个环境。但直接在全局地图上定位,对计算机的负担太大。主要用于回环检测和地图表达。

视觉里程计中,直接用于定位的局部地图,随着相机运动,往地图里添加新的特征点。是否使用地图取决于对精度-效率这个矛盾的把握。可以出于效率的考虑,使用两两无结构式的VO,也可以为了更好的精度,构建局部地图乃至考虑地图的优化。

局部地图的考验:维护它的规模。为保证实时性,需要保证地图规模不至于太大(否则匹配会消耗大量的时间)。单个帧与地图的特征匹配存在一些加速手段。

地图优化:

删除不在视野内的点,在匹配数量减少时添加新点,等等。

使用三角化来更新特征点的世界坐标,或考虑更好地动态管理地图规模的策略。

特征匹配。匹配之前,从地图中拿出一些候选点(出现在视野内的点),然后将它们与当前帧的特征描述子进行匹配。

关键帧:后端优化的主要对象。每当相机运动经过一定间隔,就取一个新的关键帧并保存起来。这些关键帧的位姿将被优化,而位于两个关键帧之间的那些东西,除了对地图贡献一些地图点外,就被理所当然地忽略掉了。

将局部地图的点投影到图像平面并显示除了。如果位姿估计正确,它们看起来应该像是固定在空间中一样。如果感觉到某个特征点不自然地运动,那可能是相机位姿估计不够准确,或特征点位置不够准确。

地图的优化:用到的原理主要是最小二乘和三角化。

局限:

视觉里程计能够估算局部时间内的相机运动及特征点的位置,但这种局部方式有明显缺点

- 容易丢失。一旦丢失,要么“等待相机转回来”(保存参考帧并与新的帧比较),要么重置整个VO以跟踪新的图像数据。

- 轨迹漂移。主要原因是每次估计的误差会累积至下一次估计,导致长时间轨迹不准确。大一点的局部地图可以缓解这种现象,但它始终是存在的。

如果只关心短时间内的运动,或者VO的精度已经满足应用需求,有时候可能需要的仅仅就是一个视觉里程计,而不用完全的SLAM。

参考文献

高翔《视觉SLAM十四讲:从理论到实践》

参考代码

gaoxiang12/slambook2

gaoxiang12/slambook