Google Cloud Pub/Sub in Depth (Part 2): Using Subscriptions

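To receive messages published to a topic, you must create a subscription to that topic. Only messages published to the topic after the subscription is created are available to subscriber applications. The subscription connects the topic to a subscriber application, which receives and processes the messages published to the topic. A topic can have multiple subscriptions, but a given subscription belongs to exactly one topic.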

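Pub/Sub delivers each published message at least once for every subscription. After a message is sent to a subscriber, the subscriber must acknowledge it within a limited time (the ack deadline); only then does Pub/Sub stop redelivering it, so subscribers should expect occasional duplicates. A subscription can deliver messages with either a pull or a push mechanism, and you can change or reconfigure the mechanism at any time.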
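Because delivery is at least once, a handler may see the same message twice, so it helps to make processing idempotent. The following is a minimal sketch of one way to do that, assuming each message carries a unique ID. `Delivery` and `IdempotentHandler` are hypothetical names used only for illustration; they are not part of the Pub/Sub client library, and the in-memory set is unbounded, so a real service would likely need a bounded or persistent store instead.

```kotlin
// Hypothetical stand-in for a delivered message: a unique ID plus a payload.
data class Delivery(val messageId: String, val payload: String)

// Remembers which message IDs were already handled so that a redelivered
// duplicate is skipped instead of being processed a second time.
class IdempotentHandler(private val process: (String) -> Unit) {
    private val seen = HashSet<String>()

    // Returns true if the payload was processed, false if it was a duplicate.
    fun handle(delivery: Delivery): Boolean {
        if (!seen.add(delivery.messageId)) return false
        process(delivery.payload)
        return true
    }
}
```

Handling `Delivery("1", "a")` twice runs the processing function once; the second call returns `false`, and the caller would still acknowledge the duplicate so that it is not delivered again.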

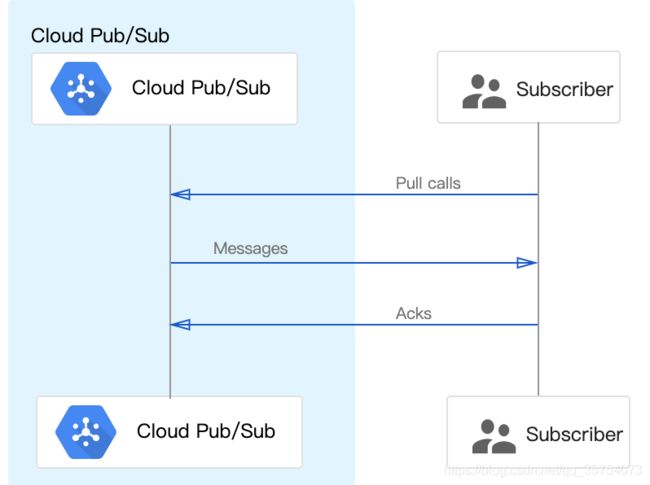

I. Receiving messages with pull

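The subscriber application initiates requests to the Pub/Sub server to retrieve messages:

- The subscriber application calls the pull method to request messages for delivery.

- The Pub/Sub server responds with the message (or an error if the queue is empty) and an ack ID.

- The subscriber calls the acknowledge method with the returned ack ID to confirm receipt.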
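The three steps above can be pictured with a toy in-memory model. This is only a sketch for building intuition, not how the service is implemented: `ToySubscription` and its methods are hypothetical, time is passed in explicitly as a number, and unlike the real service the ack ID here stays the same across redeliveries.

```kotlin
// Toy in-memory model of a single subscription; every name here is hypothetical.
class ToySubscription(private val ackDeadlineMillis: Long) {
    private class Pending(val payload: String, var visibleAt: Long)

    private val pending = LinkedHashMap<String, Pending>() // ack ID -> message
    private var nextId = 0

    fun publish(payload: String) {
        pending["ack-${nextId++}"] = Pending(payload, 0L)
    }

    // Steps 1 and 2: return up to maxMessages deliverable messages as
    // (ack ID, payload) pairs and hide them until the ack deadline passes.
    fun pull(maxMessages: Int, now: Long): List<Pair<String, String>> =
        pending.entries
            .filter { it.value.visibleAt <= now }
            .take(maxMessages)
            .map { entry ->
                entry.value.visibleAt = now + ackDeadlineMillis
                entry.key to entry.value.payload
            }

    // Step 3: an acknowledged message is removed and never delivered again.
    fun acknowledge(ackIds: List<String>) {
        ackIds.forEach { pending.remove(it) }
    }
}
```

In this model, a message that is pulled but never acknowledged becomes deliverable again once the deadline has elapsed, which is the behaviour that leads to duplicates in a real subscriber.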

Asynchronous pull

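With asynchronous pull, the application does not have to block while waiting for new messages, which allows higher throughput. You can receive messages through a long-running message listener and acknowledge them one at a time. The sample code here is in Kotlin; for a Java version, see the official Pub/Sub documentation.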

    package app

    import com.google.api.gax.batching.FlowControlSettings
    import com.google.api.gax.core.ExecutorProvider
    import com.google.api.gax.core.InstantiatingExecutorProvider
    import com.google.cloud.pubsub.v1.MessageReceiver
    import com.google.cloud.pubsub.v1.Subscriber
    import com.google.pubsub.v1.ProjectSubscriptionName

    class PubsubConsumer {

        private val subscriptionName = ProjectSubscriptionName.of("projectId", "subscriptionId")

        // Provides the executor service that runs the message callbacks.
        private val executorProvider: ExecutorProvider =
            InstantiatingExecutorProvider.newBuilder().setExecutorThreadCount(5).build()

        // Flow control: pause the message stream once 1000 messages are outstanding.
        private val flowControl =
            FlowControlSettings.newBuilder().setMaxOutstandingElementCount(1000).build()

        private val subscriber = createSubscriber()

        private fun createSubscriber() =
            Subscriber.newBuilder(subscriptionName, receiveMessage())
                .setParallelPullCount(2) // number of pullers used to pull messages from the subscription
                .setFlowControlSettings(flowControl)
                .setExecutorProvider(executorProvider)
                .build()

        private fun receiveMessage() =
            MessageReceiver { message, consumer ->
                val value = message.data.toStringUtf8()
                /*
                 * The code that uses value is omitted, for example:
                 * println(value)
                 */
                // Acknowledge the message; without an ack before the deadline, it is delivered again.
                consumer.ack()
            }

        // startAsync() has to be called first; awaitRunning() only waits for the running state.
        fun subscriberStart() = subscriber.startAsync().awaitRunning()

        fun close() {
            subscriber.stopAsync()
        }
    }

Synchronous pull

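Asynchronous pull provides error listeners, flow control, and concurrency control. However, if you want to retrieve messages by polling, or need a precise limit (a rate limiter) on the number of messages the client retrieves at any given time, synchronous pull is the better fit. Below is an example of my own: it polls Pub/Sub for data, runs reading and processing asynchronously from each other, and applies rate limiting when processing: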

Gradle:

    // resilience4j is pulled in for the rate limiter
    def versions = [
        resilience4j: '1.5.0'
    ]

    dependencies {
        implementation "io.github.resilience4j:resilience4j-kotlin:${versions.resilience4j}"
        implementation "io.github.resilience4j:resilience4j-ratelimiter:${versions.resilience4j}"
        implementation "io.github.resilience4j:resilience4j-timelimiter:${versions.resilience4j}"
    }

Code:

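    // Helper extension; keep it in its own file, in the same package as the consumer.
    import io.github.resilience4j.kotlin.ratelimiter.rateLimiter
    import io.github.resilience4j.ratelimiter.RateLimiter
    import io.github.resilience4j.ratelimiter.RateLimiterConfig
    import kotlinx.coroutines.channels.ReceiveChannel
    import kotlinx.coroutines.flow.Flow
    import kotlinx.coroutines.flow.receiveAsFlow
    import java.time.Duration

    fun <T> ReceiveChannel<T>.receiveAsFlowRateLimiter(): Flow<T> =
        receiveAsFlow().rateLimiter(RateLimiter.of(
            "fetchData",
            RateLimiterConfig.custom()
                .timeoutDuration(Duration.ofSeconds(1)) // wait at most 1 second for a permit
                .limitRefreshPeriod(Duration.ofSeconds(1)) // the limit is counted per 1-second period
                .limitForPeriod(1000) // permits per period; combined, 1000 elements per second
                .writableStackTraceEnabled(true)
                .build()
        ))

    // Consumer class (separate file):
    package app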

import com.google.cloud.pubsub.v1.stub.GrpcSubscriberStub

import com.google.cloud.pubsub.v1.stub.SubscriberStubSettings

import com.google.pubsub.v1.ModifyAckDeadlineRequest

import com.google.pubsub.v1.ProjectSubscriptionName

import com.google.pubsub.v1.PullRequest

import com.google.pubsub.v1.ReceivedMessage

import com.google.pubsub.v1.AcknowledgeRequest

import kotlinx.coroutines.CoroutineScope

import kotlinx.coroutines.Dispatchers

import kotlinx.coroutines.channels.Channel

import kotlinx.coroutines.coroutineScope

import kotlinx.coroutines.flow.collectIndexed

import kotlinx.coroutines.launch

import java.lang.RuntimeException

class PubsubConsumer() {

// set project id and subscription id

private val subscriptionName: String =

ProjectSubscriptionName.format("projectId", "subscriptionId")

// 设置pull Request,主要属性为每次pull拉去1000条数据

private val pullRequest: PullRequest = PullRequest.newBuilder()

.setMaxMessages(1000)

.setSubscription(subscriptionName)

.build()

// Sets the TransportProvider to use for getting the transport-specific context to make calls with.

private val subscriberStubSettings: SubscriberStubSettings = SubscriberStubSettings.newBuilder()

.setTransportChannelProvider(SubscriberStubSettings.defaultGrpcTransportProviderBuilder()

.setMaxInboundMessageSize(20 shl 20) // 20MB (maximum message size).

.build())

.build()

private val subscriber = GrpcSubscriberStub.create(subscriberStubSettings)

// 此处使用一个Channel来分离从pub/sub pull数据和程序处理数据,使程序从同步变为异步

private val recordsChannel = Channel(1000)

private val ackIds = mutableListOf()

suspend fun subscriberStart() {

subscriber.use {

coroutineScope {

// 从pub/sub pull数据的job

forwardRecords(it, recordsChannel).start()

// 从channel中读取数据做业务处理的job

consumeRecords()

}

}

}

// This function needs to be an extension of the coroutine scope since we're launching a background job.

private fun CoroutineScope.forwardRecords(from: GrpcSubscriberStub, to: Channel) =

launch(Dispatchers.IO) {

while (true) {

val pullResponse = from.pullCallable().call(pullRequest)

pullResponse.receivedMessagesList.forEach {

from.modifyAckDeadlineCallable().call(it.modifyAckDeadline())

to.send(it)

}

}

}

// 这个job用来从channel中读取数据然后做程序内部的逻辑处理

private fun CoroutineScope.consumeRecords() {

launch(Dispatchers.IO) {

recordsChannel.receiveAsFlowRateLimiter().collectIndexed { index, record ->

val data = record.message.data.toStringUtf8()

/*

此处省略data的使用逻辑

*/

ackIds.add(record.ackId)

// ack 此处demo为每次ack,当然为了提高程序性能建议批量ack

tryCommit()

}

}

}

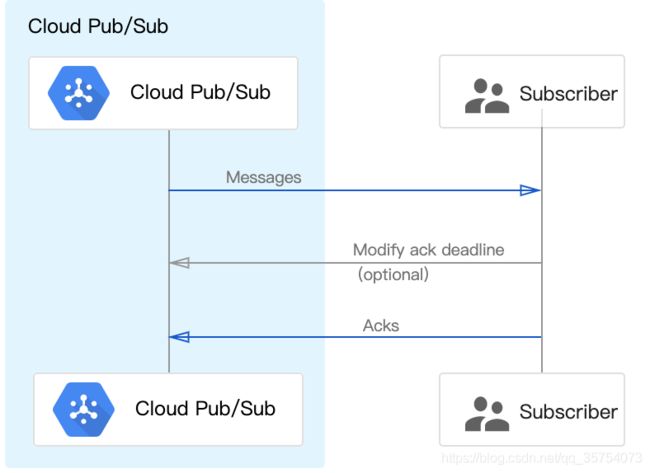

// 修改每条消息的ack的时间

private fun ReceivedMessage.modifyAckDeadline() = ModifyAckDeadlineRequest.newBuilder()

.setSubscription(subscriptionName)

.addAckIds(this.ackId)

.setAckDeadlineSeconds(600)

.build()

// ack数据

private fun tryCommit() {

val acknowledgeRequest = AcknowledgeRequest.newBuilder()

.setSubscription(subscriptionName)

.addAllAckIds(ackIds)

.build()

ackIds.clear()

try {

subscriber.acknowledgeCallable().call(acknowledgeRequest)

true

} catch (e: RuntimeException) {

false

}

}

fun close() {

logger.info("Closing pubsub consumer.")

recordsChannel.close()

subscriber.close()

}

companion object {

private val logger = //自己使用的log的框架的实现

}

}
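The comment in the synchronous pull consumer recommends batching acknowledgements instead of acking after every message. One possible shape for that is sketched below, assuming a fixed batch size. `AckBatcher` and its `flush` callback are hypothetical names; in the consumer, the callback would wrap the acknowledge call. The class is not thread-safe and is meant to be used from a single coroutine.

```kotlin
// Collects ack IDs and hands them to `flush` once `batchSize` of them are buffered.
// `flush` is a hypothetical callback that would perform the actual acknowledge request.
class AckBatcher(
    private val batchSize: Int,
    private val flush: (List<String>) -> Unit
) {
    private val buffer = ArrayList<String>(batchSize)

    fun add(ackId: String) {
        buffer.add(ackId)
        if (buffer.size >= batchSize) flushNow()
    }

    // Call this on shutdown (or periodically) so a partial batch is not left unacknowledged.
    fun flushNow() {
        if (buffer.isEmpty()) return
        flush(buffer.toList()) // pass a copy; if flush throws, the buffer is kept for a retry
        buffer.clear()
    }
}
```

Buffered IDs still have to be acknowledged before their ack deadline expires, so a small batch size or a periodic `flushNow()` is probably needed when traffic is low.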

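II. Delivering messages with push

In push delivery, Pub/Sub initiates requests to our subscriber application to deliver messages.

- The Pub/Sub server sends each message as an HTTPS request to the subscriber application at a preconfigured endpoint.

- The endpoint acknowledges the message by returning an HTTP success status code; a failure response indicates that the message should be sent again.

When Pub/Sub delivers a message to a push endpoint, it sends the message in the body of a POST request. The body is a JSON object, and the message data is in the message.data field. The message data is base64-encoded, for example: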

    {
      "message": {
        "attributes": {
          "key": "value"
        },
        "data": "SGVsbG8gQ2xvdWQgUHViL1N1YiEgSGVyZSBpcyBteSBtZXNzYWdlIQ==",
        "messageId": "136969346945"
      },
      "subscription": "projects/myproject/subscriptions/mysubscription"
    }

To receive messages from a push subscription, use a webhook and handle the POST requests that Pub/Sub sends to the push endpoint. The webhook support comes from a Google Cloud library; for details, see Google Cloud's GitHub repositories.
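The base64 step on its own is small. As a sketch, the `data` string from the JSON body above can be decoded with the JDK's `java.util.Base64`; `decodePushData` is a hypothetical helper name, and the sketch assumes the payload is UTF-8 text.

```kotlin
import java.util.Base64

// Decodes the base64 text found in the message.data field of a push request body.
// Assumes the publisher sent UTF-8 text; binary payloads should stay as bytes.
fun decodePushData(data: String): String =
    String(Base64.getDecoder().decode(data), Charsets.UTF_8)
```

Applied to the sample value above, `decodePushData` returns `Hello Cloud Pub/Sub! Here is my message!`.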

    import com.google.cloud.functions.BackgroundFunction
    import com.google.cloud.functions.Context
    import functions.eventpojos.PubSubMessage
    import java.nio.charset.StandardCharsets
    import java.util.Base64
    import java.util.logging.Logger

    // PubSubMessage is an application-supplied POJO (package functions.eventpojos),
    // not a class from com.google.cloud.functions.
    class HelloPubSub : BackgroundFunction<PubSubMessage> {

        override fun accept(message: PubSubMessage, context: Context) {
            // data is base64-encoded and may be absent.
            message.data?.let { data ->
                val value = String(Base64.getDecoder().decode(data), StandardCharsets.UTF_8)
                logger.info("Hello $value!")
            }
        }

        companion object {
            private val logger = Logger.getLogger(HelloPubSub::class.java.name)
        }
    }

In real workloads the data volume is usually too large for push to be a good fit, so I have not studied it in depth, and it is generally not recommended in production. If you are interested, see the official Google Cloud Pub/Sub documentation.