Flink Real-Time Data Warehouse

Sample data:

Startup log:

{"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_7","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"start":{"entry":"icon","loading_time":13312,"open_ad_id":13,"open_ad_ms":9203,"open_ad_skip_ms":8503},"ts":1690869978000}

Page log / display log:

{"actions":[{"action_id":"get_coupon","item":"3","item_type":"coupon_id","ts":1690869987153}],"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_7","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"displays":[{"display_type":"recommend","item":"2","item_type":"sku_id","order":1,"pos_id":1},{"display_type":"promotion","item":"9","item_type":"sku_id","order":2,"pos_id":5},{"display_type":"promotion","item":"6","item_type":"sku_id","order":3,"pos_id":5},{"display_type":"promotion","item":"10","item_type":"sku_id","order":4,"pos_id":5},{"display_type":"query","item":"9","item_type":"sku_id","order":5,"pos_id":4}],"page":{"during_time":18307,"item":"10","item_type":"sku_id","last_page_id":"good_list","page_id":"good_detail","source_type":"activity"},"ts":1690869978000}

{"actions":[{"action_id":"cart_minus_num","item":"2","item_type":"sku_id","ts":1690869984446}],"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_7","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"page":{"during_time":12892,"last_page_id":"good_detail","page_id":"cart"},"ts":1690869978000}

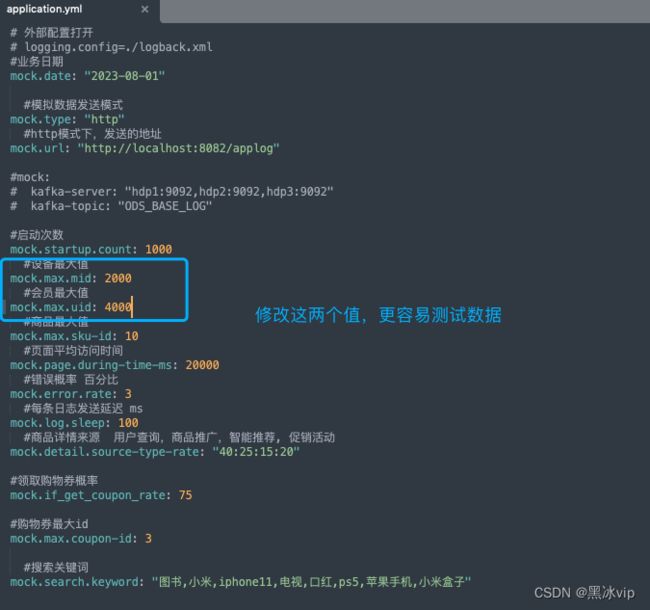

{"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_7","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"page":{"during_time":10393,"item":"1,9","item_type":"sku_ids","last_page_id":"cart","page_id":"trade"},"ts":1690869978000}

017 - Collection module - Log data collection: creating the SpringBoot project & testing with parameters

019 - Collection module - Log data collection: writing to disk & Kafka, local test

Start ZooKeeper:

```shell
bin/zkServer.sh start
```

Start Kafka:

```shell
bin/kafka-server-start.sh config/server9092.properties
```

Start a console consumer on the "ods_base_log" topic:

```shell
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic ods_base_log
```

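Start GmallLoggerApplication.java.

Start the mock log generator:

```shell
java -jar gmall2020-mock-log-2020-12-18.jar
```

In logback.xml, add a logger that prints the logs of one specific package separately; the console then does not print the startup logs.

Key values in logback.xml: pattern %msg%n, log file ${LOG_HOME}/app.log, rolling file name pattern ${LOG_HOME}/app.%d{yyyy-MM-dd}.log, pattern %msg%n.

```shell
java -jar gmall2020-mock-log-2020-12-18.jar
```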

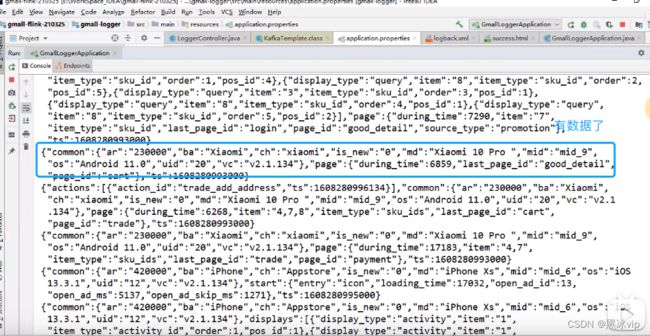

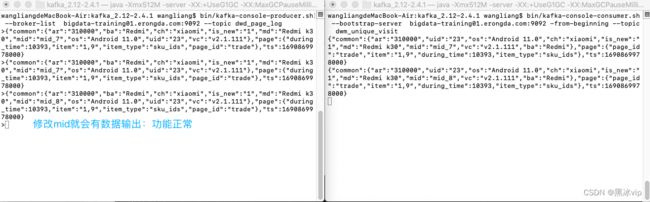

Data now shows up in the console:

Data now shows up in the Kafka topic ods_base_log:

020 - Collection module - Log data collection: writing to disk & Kafka, single-node test

Start ZooKeeper:

```shell
bin/zkServer.sh start
```

Start Kafka:

```shell
bin/kafka-server-start.sh config/server9092.properties
```

Start a console consumer on the "ods_base_log" topic:

```shell
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic ods_base_log
```

Start:

```shell
java -jar gmall2020-mock-log-2020-12-18.jar
```

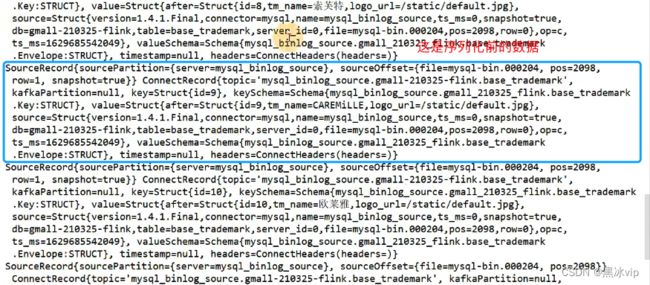

```shell
java -jar gmall-logger.jar
```

029 - Collection module - Business data collection: FlinkCDC DataStream API test

```java
package com.atguigu;

import com.alibaba.ververica.cdc.connectors.mysql.MySQLSource;
import com.alibaba.ververica.cdc.connectors.mysql.table.StartupOptions;
import com.alibaba.ververica.cdc.debezium.DebeziumSourceFunction;
import com.alibaba.ververica.cdc.debezium.StringDebeziumDeserializationSchema;
import org.apache.flink.runtime.state.filesystem.FsStateBackend;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class FlinkCDCWithCustomerDeserialization {

    public static void main(String[] args) throws Exception {
        // 1. Get the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // 2. Build the SourceFunction with FlinkCDC and read the data
        DebeziumSourceFunction<String> sourceFunction = MySQLSource.<String>builder()
                .hostname("127.0.0.1")
                .port(3306)
                .username("root")
                .password("123456")
                .databaseList("gmall-210325-flink")
                // Without tableList, every table of the listed databases is consumed;
                // when it is set, each entry must be written as db.table
                .tableList("gmall-210325-flink.base_trademark")
                .deserializer(new StringDebeziumDeserializationSchema())
                .startupOptions(StartupOptions.initial())
                .build();

        DataStreamSource<String> streamSource = env.addSource(sourceFunction);

        // 3. Print the data
        streamSource.print();

        // 4. Start the job
        env.execute("FlinkCDCWithCustomerDeserialization");
    }
}
```

030 - Collection module - FlinkCDC DataStream API: setting checkpoints, packaging & starting the cluster

032 - Collection module - Business data collection: FlinkCDC Flink SQL API test

```java
package com.atguigu;

import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.Table;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;
import org.apache.flink.types.Row;

public class FlinkCDCWithSQL {

    public static void main(String[] args) throws Exception {
        // 1. Get the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);
        StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

        // 2. Create the table with DDL
        tableEnv.executeSql("CREATE TABLE mysql_binlog ( " +
                " id STRING NOT NULL, " +
                " tm_name STRING, " +
                " logo_url STRING " +
                ") WITH ( " +
                " 'connector' = 'mysql-cdc', " +
                " 'hostname' = '127.0.0.1', " +
                " 'port' = '3306', " +
                " 'username' = 'root', " +
                " 'password' = '123456', " +
                " 'database-name' = 'gmall-210325-flink', " +
                " 'table-name' = 'base_trademark' " +
                ")");

        // 3. Query the data
        Table table = tableEnv.sqlQuery("select * from mysql_binlog");

        // 4. Convert the dynamic table into a retract stream (true = add, false = retract)
        DataStream<Tuple2<Boolean, Row>> retractStream = tableEnv.toRetractStream(table, Row.class);
        retractStream.print();

        // 5. Start the job
        env.execute("FlinkCDCWithSQL");
    }
}
```
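`toRetractStream` emits `Tuple2<Boolean, Row>` pairs. `true` adds a row to the result and `false` retracts a row emitted earlier. An UPDATE on base_trademark therefore typically arrives as a retract of the old row followed by an add of the new one. The sketch below replays such pairs into an in-memory view keyed by id. It uses plain Java in place of Flink's `Tuple2` and `Row`; the `Change` class and the sample values are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class RetractStreamSketch {

    // Hypothetical stand-in for Tuple2<Boolean, Row>: the flag plus two columns of base_trademark
    static final class Change {
        final boolean add;   // true = add, false = retract
        final String id;
        final String tmName;

        Change(boolean add, String id, String tmName) {
            this.add = add;
            this.id = id;
            this.tmName = tmName;
        }
    }

    // Replays retract-stream messages into a view keyed by id
    static Map<String, String> apply(List<Change> changes) {
        Map<String, String> view = new LinkedHashMap<>();
        for (Change c : changes) {
            if (c.add) {
                view.put(c.id, c.tmName);     // add: insert or overwrite the row
            } else {
                view.remove(c.id, c.tmName);  // retract: remove the exact row emitted earlier
            }
        }
        return view;
    }

    public static void main(String[] args) {
        List<Change> changes = List.of(
                new Change(true, "1", "Redmi"),    // snapshot row
                new Change(false, "1", "Redmi"),   // UPDATE, part 1: retract the old row
                new Change(true, "1", "Xiaomi"),   // UPDATE, part 2: add the new row
                new Change(true, "2", "Huawei"),
                new Change(false, "2", "Huawei")); // DELETE: retract only
        System.out.println(apply(changes));        // prints {1=Xiaomi}
    }
}
```

A DELETE produces only a retract, so the row disappears from the view. An UPDATE nets out to the new value.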

Setting up checkpoints:

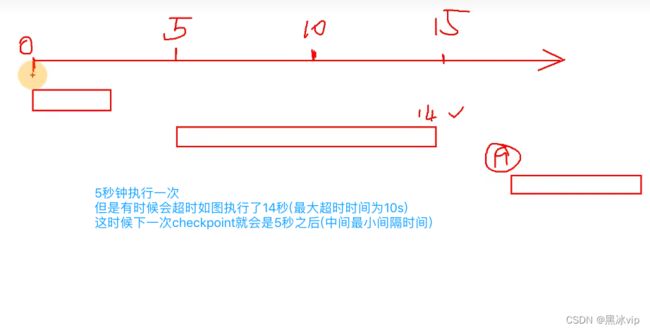

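enableCheckpointing: the interval between the start of one checkpoint and the start of the next; 5 min in production.

setCheckpointTimeout: the checkpoint timeout, 10000 ms (10 s) here. Size it by how long saving state takes in production: if saving state takes about 5 s, set the timeout to 10 s.

setMaxConcurrentCheckpoints: 2, the maximum number of checkpoints that may be in progress at the same time.

setMinPauseBetweenCheckpoints: 3000 ms, the minimum pause between the end of one checkpoint and the start of the next.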
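A small calculation shows how the interval and the minimum pause interact. The sketch uses a 5000 ms interval and a 3000 ms minimum pause, the values in the commented-out configuration later in these notes.

Flink's checkpointing documentation notes that a minimum pause implies only one concurrent checkpoint. The sketch therefore models one checkpoint at a time. Under that model, the next checkpoint starts no earlier than the previous start plus the interval, and no earlier than the previous end plus the minimum pause.

Starting the first checkpoint at t = 0 and the per-checkpoint durations are simplifications made up for illustration.

```java
import java.util.Arrays;

class CheckpointScheduleSketch {

    /**
     * Start times (ms) of successive checkpoints under a simplified model:
     * the first checkpoint starts at t = 0, one checkpoint runs at a time, and
     * checkpoint i takes durationsMs[i] to complete.
     */
    static long[] startTimes(long intervalMs, long minPauseMs, long[] durationsMs) {
        long[] starts = new long[durationsMs.length];
        for (int i = 1; i < durationsMs.length; i++) {
            long byInterval = starts[i - 1] + intervalMs;                      // start-to-start gap
            long byMinPause = starts[i - 1] + durationsMs[i - 1] + minPauseMs; // end-to-start gap
            starts[i] = Math.max(byInterval, byMinPause);
        }
        return starts;
    }

    public static void main(String[] args) {
        // interval 5000 ms, min pause 3000 ms; the second checkpoint is slow (4000 ms)
        long[] starts = startTimes(5000L, 3000L, new long[]{1000L, 4000L, 1000L});
        System.out.println(Arrays.toString(starts)); // prints [0, 5000, 12000]
    }
}
```

The third checkpoint is pushed from 10000 ms to 12000 ms. The slow second checkpoint ended at 9000 ms, and the 3000 ms pause has to elapse after that.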

setRestartStrategy:(3,5)如果无法重启最多可以重启3次,每次间隔5s 注意:老版本需要设置,新版本不需要(新版本设置比较合理) 重启策越

1.10 默认重启int的最大值,所以需要配置(不然一直会重启) 生产环境默认就可以 如果三次都重启失败,任务就失败修改序列化:

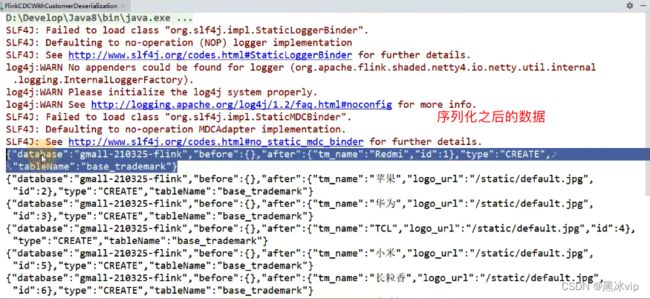

```java
package com.atguigu;

import com.alibaba.ververica.cdc.connectors.mysql.MySQLSource;
import com.alibaba.ververica.cdc.connectors.mysql.table.StartupOptions;
import com.alibaba.ververica.cdc.debezium.DebeziumSourceFunction;
import com.alibaba.ververica.cdc.debezium.StringDebeziumDeserializationSchema;
import org.apache.flink.runtime.state.filesystem.FsStateBackend;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class FlinkCDCWithCustomerDeserialization {

    public static void main(String[] args) throws Exception {
        // 1. Get the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // 2. Build the SourceFunction with FlinkCDC and read the data
        DebeziumSourceFunction<String> sourceFunction = MySQLSource.<String>builder()
                .hostname("127.0.0.1")
                .port(3306)
                .username("root")
                .password("123456")
                .databaseList("gmall-210325-flink")
                // Without tableList, every table of the listed databases is consumed;
                // when it is set, each entry must be written as db.table
                .tableList("gmall-210325-flink.base_trademark")
                .deserializer(new CustomerDeserialization())                 // custom deserializer
                //.deserializer(new StringDebeziumDeserializationSchema())   // default deserializer
                .startupOptions(StartupOptions.initial())
                .build();

        DataStreamSource<String> streamSource = env.addSource(sourceFunction);

        // 3. Print the data
        streamSource.print();

        // 4. Start the job
        env.execute("FlinkCDCWithCustomerDeserialization");
    }
}
```

package com.atguigu;

import com.alibaba.fastjson.JSONObject;

import com.alibaba.ververica.cdc.debezium.DebeziumDeserializationSchema;

import io.debezium.data.Envelope;

import org.apache.flink.api.common.typeinfo.BasicTypeInfo;

import org.apache.flink.api.common.typeinfo.TypeInformation;

import org.apache.flink.util.Collector;

import org.apache.kafka.connect.data.Field;

import org.apache.kafka.connect.data.Schema;

import org.apache.kafka.connect.data.Struct;

import org.apache.kafka.connect.source.SourceRecord;

import java.util.List;

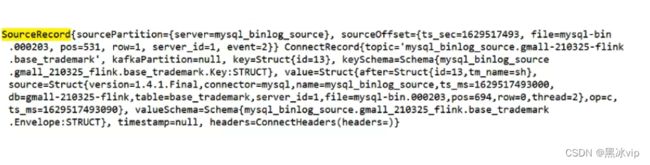

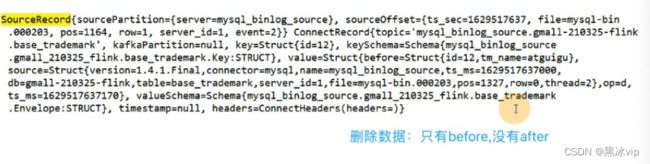

public class CustomerDeserialization implements DebeziumDeserializationSchema {

/**

* 封装的数据格式

* {

* "database":"",

* "tableName":"",

* "before":{"id":"","tm_name":""....},

* "after":{"id":"","tm_name":""....},

* "type":"c u d",

* //"ts":156456135615

* }

*/

@Override

public void deserialize(SourceRecord sourceRecord, Collector collector) throws Exception {

//1.创建JSON对象用于存储最终数据

JSONObject result = new JSONObject();

//2.获取库名&表名

String topic = sourceRecord.topic();

String[] fields = topic.split("\\.");

String database = fields[1];

String tableName = fields[2];

Struct value = (Struct) sourceRecord.value();

//3.获取"before"数据

Struct before = value.getStruct("before");

JSONObject beforeJson = new JSONObject();

if (before != null) {

Schema beforeSchema = before.schema();

List beforeFields = beforeSchema.fields();

for (Field field : beforeFields) {

Object beforeValue = before.get(field);

beforeJson.put(field.name(), beforeValue);

}

}

//4.获取"after"数据

Struct after = value.getStruct("after");

JSONObject afterJson = new JSONObject();

if (after != null) {

Schema afterSchema = after.schema();

List afterFields = afterSchema.fields();

for (Field field : afterFields) {

Object afterValue = after.get(field);

afterJson.put(field.name(), afterValue);

}

}

//5.获取操作类型 CREATE UPDATE DELETE

Envelope.Operation operation = Envelope.operationFor(sourceRecord);

String type = operation.toString().toLowerCase();

if ("create".equals(type)) {

type = "insert";

}

//6.将字段写入JSON对象

result.put("database", database);

result.put("tableName", tableName);

result.put("before", beforeJson);

result.put("after", afterJson);

result.put("type", type);

//7.输出数据

collector.collect(result.toJSONString());

}

@Override

public TypeInformation getProducedType() {

return BasicTypeInfo.STRING_TYPE_INFO;

}

}
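The deserializer relies on two conventions:

- The record topic has the form `<server-name>.<database>.<table>`.
- Debezium operation names are lower-cased, with `create` renamed to `insert`.

The stand-alone sketch below isolates that logic in plain Java, so it can be checked without a MySQL instance. The server-name prefix `mysql_binlog_source` is only an example value, and the operation-name strings stand in for `Envelope.Operation`.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

class CdcRecordSketch {

    // Splits "<server>.<database>.<table>" the same way as topic.split("\\.") above
    static String[] databaseAndTable(String topic) {
        String[] fields = topic.split("\\.");
        if (fields.length < 3) {
            throw new IllegalArgumentException("unexpected topic: " + topic);
        }
        return new String[]{fields[1], fields[2]};
    }

    // Mirrors the mapping in CustomerDeserialization: CREATE -> insert, everything else lower-cased
    static String normalizeType(String operationName) {
        String type = operationName.toLowerCase(Locale.ROOT);
        return "create".equals(type) ? "insert" : type;
    }

    public static void main(String[] args) {
        String[] dt = databaseAndTable("mysql_binlog_source.gmall-210325-flink.base_trademark");
        Map<String, String> result = new LinkedHashMap<>();
        result.put("database", dt[0]);
        result.put("tableName", dt[1]);
        result.put("type", normalizeType("CREATE"));
        System.out.println(result);
        // prints {database=gmall-210325-flink, tableName=base_trademark, type=insert}
    }
}
```

The split works here because the database name contains hyphens but no dots. A database or table name containing a dot would break this parsing.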

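Comparing the two FlinkCDC approaches:

DataStream API:

Pros: can capture multiple databases and tables

Cons: requires a custom deserializer (which is also what makes it flexible)

Flink SQL API:

Pros: no custom deserializer needed

Cons: one table per query (with FlinkCDC, the result can be passed to a bean through a parameter)

041 - Collection module - Business data collection: reading MySQL data and writing it to Kafka, test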

Start ZooKeeper:

```shell
bin/zkServer.sh start
```

Check its status:

```shell
bin/zkServer.sh status
```

Start Kafka:

```shell
bin/kafka-server-start.sh config/server9092.properties
```

Create the topic:

```shell
bin/kafka-topics.sh --create --zookeeper bigdata-training01.erongda.com:2181/kafka --replication-factor 2 --partitions 3 --topic ods_base_db
```

Start a console consumer:

```shell
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic ods_base_db
```

```java
package com.atguigu.app.ods;

import com.alibaba.ververica.cdc.connectors.mysql.MySQLSource;
import com.alibaba.ververica.cdc.connectors.mysql.table.StartupOptions;
import com.alibaba.ververica.cdc.debezium.DebeziumSourceFunction;
import com.atguigu.app.function.CustomerDeserialization;
import com.atguigu.utils.MyKafkaUtil;
import org.apache.flink.api.common.restartstrategy.RestartStrategies;
import org.apache.flink.runtime.state.filesystem.FsStateBackend;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class FlinkCDC {

    public static void main(String[] args) throws Exception {
        // 1. Get the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // 1.1 Configure checkpointing & the state backend
        //env.setStateBackend(new FsStateBackend("hdfs://hadoop102:8020/gmall-flink-210325/ck"));
        //env.enableCheckpointing(5000L);
        //env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);
        //env.getCheckpointConfig().setCheckpointTimeout(10000L);
        //env.getCheckpointConfig().setMaxConcurrentCheckpoints(2);
        //env.getCheckpointConfig().setMinPauseBetweenCheckpoints(3000);
        //env.setRestartStrategy(RestartStrategies.fixedDelayRestart(3, 5000L));

        // 2. Build the SourceFunction with FlinkCDC and read the data
        //    No tableList: every table of gmall-210325-flink is captured;
        //    latest(): only changes made after the job starts are read
        DebeziumSourceFunction<String> sourceFunction = MySQLSource.<String>builder()
                .hostname("127.0.0.1")
                .port(3306)
                .username("root")
                .password("123456")
                .databaseList("gmall-210325-flink")
                .deserializer(new CustomerDeserialization())
                .startupOptions(StartupOptions.latest())
                .build();

        DataStreamSource<String> streamSource = env.addSource(sourceFunction);

        // 3. Print the data and write it to Kafka
        streamSource.print();
        String sinkTopic = "ods_base_db";
        streamSource.addSink(MyKafkaUtil.getKafkaProducer(sinkTopic));

        // 4. Start the job
        env.execute("FlinkCDC");
    }
}
```

045 - DWD & DIM - Behavior data: converting the data to JSON objects


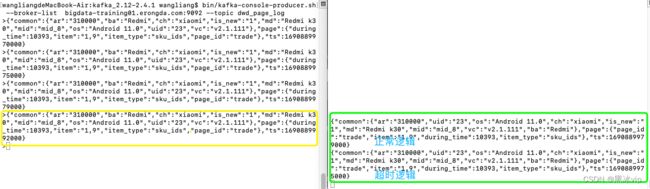

048 - DWD & DIM - Behavior data

Start Kafka:

```shell
bin/kafka-server-start.sh config/server9092.properties
```

Start console consumers (one per terminal):

```shell
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic dwd_start_log
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic dwd_page_log
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic dwd_display_log
```

Start a console producer:

```shell
bin/kafka-console-producer.sh --broker-list bigdata-training01.erongda.com:9092 --topic ods_base_log
```

Sample data:

Startup log:

{"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_7","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"start":{"entry":"icon","loading_time":13312,"open_ad_id":13,"open_ad_ms":9203,"open_ad_skip_ms":8503},"ts":1690869978000}

Page log / display log:

{"actions":[{"action_id":"get_coupon","item":"3","item_type":"coupon_id","ts":1690869987153}],"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_7","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"displays":[{"display_type":"recommend","item":"2","item_type":"sku_id","order":1,"pos_id":1},{"display_type":"promotion","item":"9","item_type":"sku_id","order":2,"pos_id":5},{"display_type":"promotion","item":"6","item_type":"sku_id","order":3,"pos_id":5},{"display_type":"promotion","item":"10","item_type":"sku_id","order":4,"pos_id":5},{"display_type":"query","item":"9","item_type":"sku_id","order":5,"pos_id":4}],"page":{"during_time":18307,"item":"10","item_type":"sku_id","last_page_id":"good_list","page_id":"good_detail","source_type":"activity"},"ts":1690869978000}

{"actions":[{"action_id":"cart_minus_num","item":"2","item_type":"sku_id","ts":1690869984446}],"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_7","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"page":{"during_time":12892,"last_page_id":"good_detail","page_id":"cart"},"ts":1690869978000}

{"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_7","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"page":{"during_time":10393,"item":"1,9","item_type":"sku_ids","last_page_id":"cart","page_id":"trade"},"ts":1690869978000}

Data flow: web/app -> Nginx -> SpringBoot -> Kafka(ods) -> FlinkApp -> Kafka(dwd)

Programs: mockLog -> Nginx -> Logger.sh -> Kafka(ZK) -> BaseLogApp -> kafka

public class BaseLogApp {

public static void main(String[] args) throws Exception {

//TODO 1.获取执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

//1.1 设置CK&状态后端

//env.setStateBackend(new FsStateBackend("hdfs://hadoop102:8020/gmall-flink-210325/ck"));

//env.enableCheckpointing(5000L);

//env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);

//env.getCheckpointConfig().setCheckpointTimeout(10000L);

//env.getCheckpointConfig().setMaxConcurrentCheckpoints(2);

//env.getCheckpointConfig().setMinPauseBetweenCheckpoints(3000);

//env.setRestartStrategy(RestartStrategies.fixedDelayRestart());

//TODO 2.消费 ods_base_log 主题数据创建流

String sourceTopic = "ods_base_log";

String groupId = "base_log_app_210325";

DataStreamSource kafkaDS = env.addSource(MyKafkaUtil.getKafkaConsumer(sourceTopic, groupId));

//TODO 3.将每行数据转换为JSON对象

OutputTag outputTag = new OutputTag("Dirty") {

};

SingleOutputStreamOperator jsonObjDS = kafkaDS.process(new ProcessFunction() {

@Override

public void processElement(String value, Context ctx, Collector out) throws Exception {

try {

JSONObject jsonObject = JSON.parseObject(value);

out.collect(jsonObject);

} catch (Exception e) {

//发生异常,将数据写入侧输出流

ctx.output(outputTag, value);

}

}

});

//打印脏数据

jsonObjDS.getSideOutput(outputTag).print("Dirty>>>>>>>>>>>");

//TODO 4.新老用户校验 状态编程

SingleOutputStreamOperator jsonObjWithNewFlagDS = jsonObjDS.keyBy(jsonObj -> jsonObj.getJSONObject("common").getString("mid"))

.map(new RichMapFunction() {

private ValueState valueState;

@Override

public void open(Configuration parameters) throws Exception {

valueState = getRuntimeContext().getState(new ValueStateDescriptor("value-state", String.class));

}

@Override

public JSONObject map(JSONObject value) throws Exception {

//获取数据中的"is_new"标记

String isNew = value.getJSONObject("common").getString("is_new");

//判断isNew标记是否为"1"

if ("1".equals(isNew)) {

//获取状态数据

String state = valueState.value();

if (state != null) {

//修改isNew标记

value.getJSONObject("common").put("is_new", "0");

} else {

valueState.update("1");

}

}

return value;

}

});

//TODO 5.分流 侧输出流 页面:主流 启动:侧输出流 曝光:侧输出流

OutputTag startTag = new OutputTag("start") {

};

OutputTag displayTag = new OutputTag("display") {

};

SingleOutputStreamOperator pageDS = jsonObjWithNewFlagDS.process(new ProcessFunction() {

@Override

public void processElement(JSONObject value, Context ctx, Collector out) throws Exception {

//获取启动日志字段

String start = value.getString("start");

if (start != null && start.length() > 0) {

//将数据写入启动日志侧输出流

ctx.output(startTag, value.toJSONString());

} else {

//将数据写入页面日志主流

out.collect(value.toJSONString());

//取出数据中的曝光数据

JSONArray displays = value.getJSONArray("displays");

if (displays != null && displays.size() > 0) {

//获取页面ID

String pageId = value.getJSONObject("page").getString("page_id");

for (int i = 0; i < displays.size(); i++) {

JSONObject display = displays.getJSONObject(i);

//添加页面id

display.put("page_id", pageId);

//将输出写出到曝光侧输出流

ctx.output(displayTag, display.toJSONString());

}

}

}

}

});

//TODO 6.提取侧输出流

DataStream startDS = pageDS.getSideOutput(startTag);

DataStream displayDS = pageDS.getSideOutput(displayTag);

//TODO 7.将三个流进行打印并输出到对应的Kafka主题中

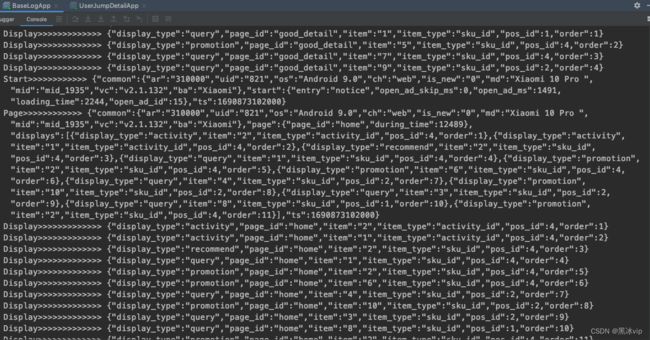

startDS.print("Start>>>>>>>>>>>");

pageDS.print("Page>>>>>>>>>>>");

displayDS.print("Display>>>>>>>>>>>>");

startDS.addSink(MyKafkaUtil.getKafkaProducer("dwd_start_log"));

pageDS.addSink(MyKafkaUtil.getKafkaProducer("dwd_page_log"));

displayDS.addSink(MyKafkaUtil.getKafkaProducer("dwd_display_log"));

//TODO 8.启动任务

env.execute("BaseLogApp");

}

}
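Step 4 of BaseLogApp corrects the `is_new` flag with keyed state. The first record seen for a `mid` keeps `is_new = "1"`. Any later record for the same `mid` that still claims to be new is rewritten to `"0"`.

The sketch below reproduces that rule, with a `HashMap` standing in for the per-key `ValueState`. It ignores fault tolerance and parallelism, which the Flink job gets from keyed state and checkpoints.

```java
import java.util.HashMap;
import java.util.Map;

class NewUserFlagSketch {

    // mid -> marker, standing in for the keyed ValueState<String> in BaseLogApp
    private final Map<String, String> stateByMid = new HashMap<>();

    /** Returns the corrected is_new flag for one record. */
    String correct(String mid, String isNew) {
        if ("1".equals(isNew)) {
            if (stateByMid.get(mid) != null) {
                return "0";               // this mid was already seen: not a new user after all
            }
            stateByMid.put(mid, "1");     // first record for this mid: remember it
        }
        return isNew;
    }

    public static void main(String[] args) {
        NewUserFlagSketch sketch = new NewUserFlagSketch();
        System.out.println(sketch.correct("mid_7", "1")); // prints 1
        System.out.println(sketch.correct("mid_7", "1")); // prints 0
        System.out.println(sketch.correct("mid_8", "1")); // prints 1
        System.out.println(sketch.correct("mid_8", "0")); // prints 0
    }
}
```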

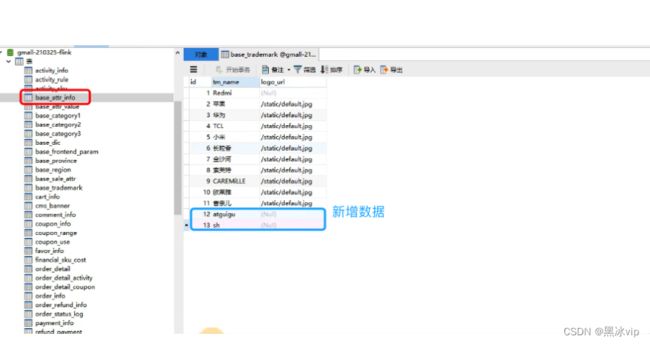

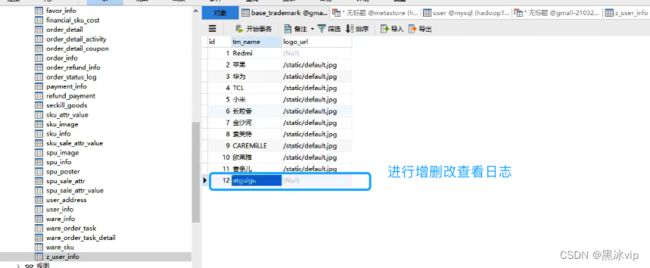

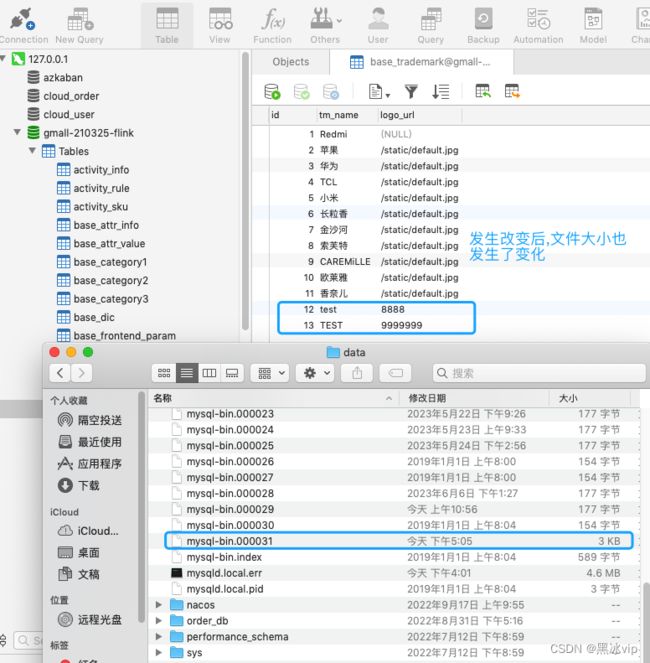

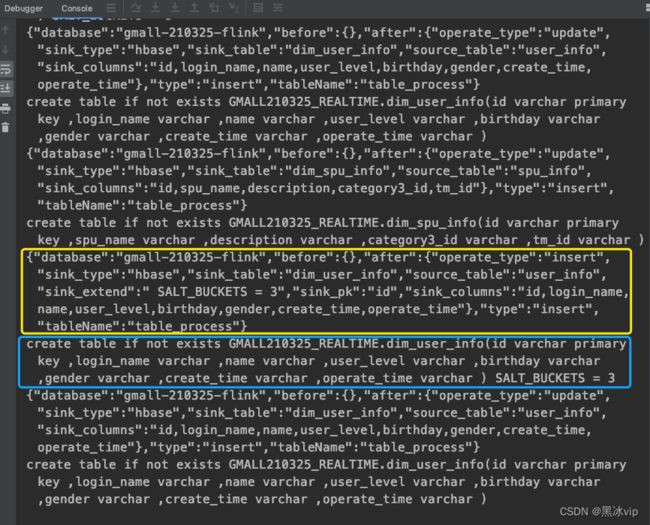

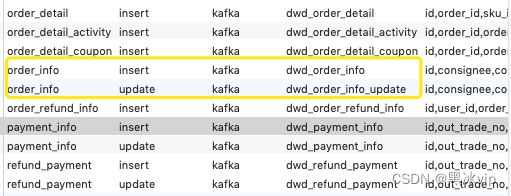
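Step 5 of BaseLogApp routes each record by its shape:

- A record with a non-empty `start` field goes to the start-log side output.
- Every other record goes to the page-log main stream.
- Each element of a page record's `displays` array is emitted separately, with the page's `page_id` attached.

The sketch models a log record as a small Java class instead of a fastjson `JSONObject`, so the routing can be tested without Flink or Kafka. The `LogRecord` class and the list-based outputs are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class LogRouterSketch {

    // Hypothetical, simplified view of one log line
    static final class LogRecord {
        final String start;                       // null or empty for page logs
        final String pageId;                      // page.page_id
        final List<Map<String, String>> displays; // may be null

        LogRecord(String start, String pageId, List<Map<String, String>> displays) {
            this.start = start;
            this.pageId = pageId;
            this.displays = displays;
        }
    }

    // Collected outputs of the three "streams"
    final List<LogRecord> startOut = new ArrayList<>();
    final List<LogRecord> pageOut = new ArrayList<>();
    final List<Map<String, String>> displayOut = new ArrayList<>();

    void route(LogRecord r) {
        if (r.start != null && !r.start.isEmpty()) {
            startOut.add(r);                      // start log -> side output
            return;
        }
        pageOut.add(r);                           // page log -> main stream
        if (r.displays != null) {
            for (Map<String, String> d : r.displays) {
                // copy because Map.of(...) is immutable; BaseLogApp mutates the JSONObject instead
                Map<String, String> display = new HashMap<>(d);
                display.put("page_id", r.pageId); // attach the page id
                displayOut.add(display);          // display log -> side output
            }
        }
    }

    public static void main(String[] args) {
        LogRouterSketch router = new LogRouterSketch();
        router.route(new LogRecord("{\"entry\":\"icon\"}", null, null));
        router.route(new LogRecord(null, "good_detail", List.of(
                Map.of("item", "2", "display_type", "recommend"),
                Map.of("item", "9", "display_type", "promotion"))));
        router.route(new LogRecord(null, "cart", null));
        System.out.println(router.startOut.size() + " " + router.pageOut.size() + " " + router.displayOut.size());
        // prints 1 2 2
    }
}
```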

054 - DWD & DIM - Business data code: enabling binlog for the configuration table and testing

Enable binlog:

Edit the MySQL configuration file /etc/my.cnf:

```ini
[mysqld]
# log_bin
log-bin = mysql-bin
binlog-format = ROW
server_id = 1
## Monitor multiple databases
binlog-do-db=gmall-210325-flink
binlog-do-db=gmall-210325-realtime
```

063 - DWD & DIM - Business data: code test

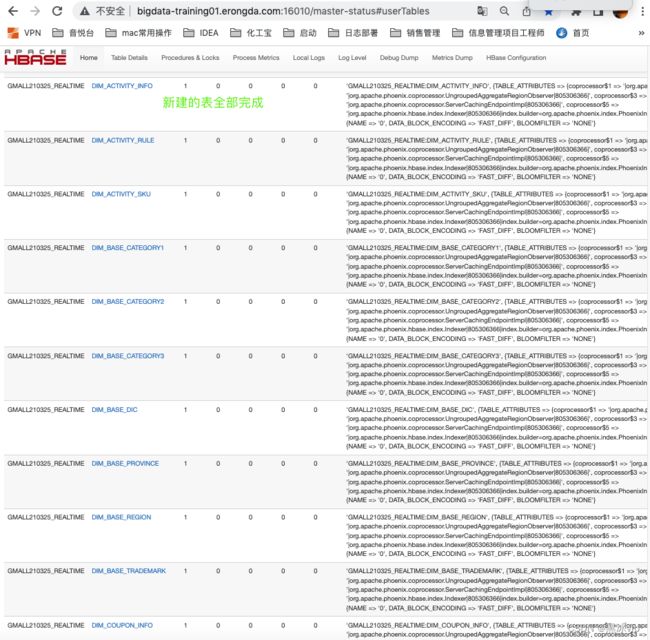

067 - DWD & DIM - Business data: end-to-end test (test complete)

Start ZooKeeper:

```shell
bin/zkServer.sh start
```

Start HDFS:

```shell
sbin/hadoop-daemon.sh start namenode
sbin/hadoop-daemon.sh start datanode
```

Start HBase:

```shell
bin/hbase-daemon.sh start master
bin/hbase-daemon.sh start regionserver
```

Start Kafka:

```shell
bin/kafka-server-start.sh config/server9092.properties
```

Start a console consumer on "ods_base_db":

```shell
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic ods_base_db
```

Start the programs:

BaseDBApp.java

ods/FlinkCDC.java

Data flow: web/app -> nginx -> SpringBoot -> Mysql -> FlinkApp -> Kafka(ods) -> FlinkApp -> Kafka(dwd)/Phoenix(dim)

Programs: mockDb -> Mysql -> FlinkCDC -> Kafka(ZK) -> BaseDBApp -> Kafka/Phoenix(hbase,zk,hdfs)

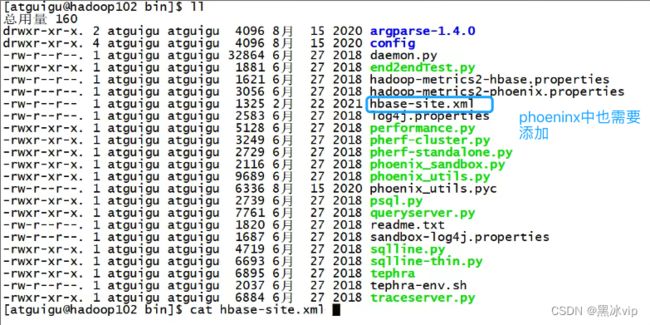

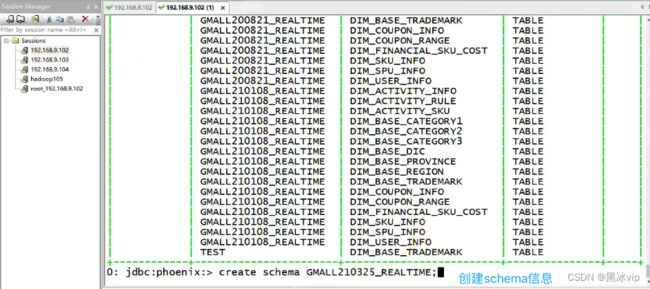

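Note: to enable the mapping between HBase namespaces and Phoenix schemas, the program also needs this configuration file. On the Linux servers, add the following two settings to the hbase-site.xml files of both HBase and Phoenix as well, and sync them to all nodes with xsync.

```xml
<property>
    <name>phoenix.schema.isNamespaceMappingEnabled</name>
    <value>true</value>
</property>
<property>
    <name>phoenix.schema.mapSystemTablesToNamespace</name>
    <value>true</value>
</property>
```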
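The mapping changes how Phoenix table names map onto HBase:

- With `phoenix.schema.isNamespaceMappingEnabled=true`, a Phoenix schema is backed by an HBase namespace of the same name. A table `SCHEMA.TABLE` is stored as the HBase table `SCHEMA:TABLE`.
- Without it, the table sits in HBase's default namespace under the literal name `SCHEMA.TABLE`.

The helper below is a hypothetical illustration of that naming rule, not a Phoenix API. The schema and table names follow the `GMALL210325_REALTIME.DIM_USER_INFO` query used later in these notes.

```java
import java.util.Locale;

class PhoenixNameMappingSketch {

    /**
     * Name of the HBase table behind the Phoenix table SCHEMA.TABLE.
     * Unquoted Phoenix identifiers are upper-cased, so the inputs are normalized first.
     */
    static String hbaseTableName(String schema, String table, boolean namespaceMappingEnabled) {
        String s = schema.toUpperCase(Locale.ROOT);
        String t = table.toUpperCase(Locale.ROOT);
        // mapping on: the schema becomes an HBase namespace ("namespace:table");
        // mapping off: the table lives in the default namespace as "SCHEMA.TABLE"
        return namespaceMappingEnabled ? s + ":" + t : s + "." + t;
    }

    public static void main(String[] args) {
        System.out.println(hbaseTableName("GMALL210325_REALTIME", "dim_user_info", true));
        // prints GMALL210325_REALTIME:DIM_USER_INFO
        System.out.println(hbaseTableName("GMALL210325_REALTIME", "dim_user_info", false));
        // prints GMALL210325_REALTIME.DIM_USER_INFO
    }
}
```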

After starting Phoenix, create the schema:

```sql
create schema GMALL210325_REALTIME;
```

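Tests:

1. Whether the Phoenix table is created at startup or after the configuration is modified

1.2 Inserting, updating, or deleting rows in gmall-210325-flink.table_process also triggers table creation

2. Whether records of type hbase are output correctly

3. Whether records of type kafka are output correctly:

```shell
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic dwd_order_info
```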
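Test 1 checks that the Phoenix dimension table gets created from the `table_process` configuration. The BaseDBApp code is not included in these notes. Below is only a minimal sketch of how a `create table if not exists` statement could be assembled from a sink table name, a comma-separated column list, a primary key, and an optional extension clause.

The method name, the `id` default key, and declaring every column as `varchar` are assumptions of this sketch.

```java
class PhoenixDdlSketch {

    /**
     * Builds a Phoenix "create table if not exists" statement.
     * Assumptions: every column is varchar, the primary key defaults to "id",
     * and "extend" carries optional table options appended verbatim.
     */
    static String createTableSql(String schema, String sinkTable, String columns, String pk, String extend) {
        String primaryKey = (pk == null || pk.isEmpty()) ? "id" : pk;
        String suffix = (extend == null) ? "" : extend;

        StringBuilder sql = new StringBuilder("create table if not exists ")
                .append(schema).append('.').append(sinkTable).append('(');
        String[] cols = columns.split(",");
        for (int i = 0; i < cols.length; i++) {
            String col = cols[i].trim();
            sql.append(col).append(" varchar");
            if (col.equals(primaryKey)) {
                sql.append(" primary key");
            }
            if (i < cols.length - 1) {
                sql.append(',');
            }
        }
        return sql.append(')').append(suffix).toString();
    }

    public static void main(String[] args) {
        // Hypothetical table_process row: sink table dim_base_trademark with columns id,tm_name
        System.out.println(createTableSql("GMALL210325_REALTIME", "dim_base_trademark", "id,tm_name", null, null));
        // prints create table if not exists GMALL210325_REALTIME.dim_base_trademark(id varchar primary key,tm_name varchar)
    }
}
```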

078 - DWM layer - Unique visitors (UV): code test

Start ZooKeeper:

```shell
bin/zkServer.sh start
```

Start Kafka:

```shell
bin/kafka-server-start.sh config/server9092.properties
```

Start a console producer:

```shell
bin/kafka-console-producer.sh --broker-list bigdata-training01.erongda.com:9092 --topic dwd_page_log
```

Start a console consumer:

```shell
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic dwm_unique_visit
```

Start:

```shell
java -jar gmall2020-mock-log-2020-12-18.jar
java -jar gmall-logger.jar
```

Start the programs:

BaseLogApp.java

UniqueVisitApp.java

Test data:

With last_page_id removed:

{"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid7","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"page":{"during_time":10393,"item":"1,9","item_type":"sku_ids","page_id":"trade"},"ts":1690869978000}

With a different mid:

{"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_8","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"page":{"during_time":10393,"item":"1,9","item_type":"sku_ids","page_id":"trade"},"ts":1690869978000}

Data flow: web/app -> Nginx -> SpringBoot -> Kafka(ods) -> FlinkApp -> Kafka(dwd)

Programs: mockLog -> Nginx -> Logger.sh -> Kafka(ZK) -> BaseLogApp -> kafka

Self-test:

Generate behavior data for testing:

```shell
java -jar gmall2020-mock-log-2020-12-18.jar
```
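The UniqueVisitApp source is not included in these notes. The test data above (records without `last_page_id`, then a second `mid`) is consistent with a filter that keeps only the first visit-starting page log per `mid` per day.

Below is a minimal sketch of such a filter, under these assumptions:

- A per-mid "last visit date" map stands in for keyed state.
- Dates are derived in UTC+8.

```java
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;
import java.util.HashMap;
import java.util.Map;

class UniqueVisitSketch {

    static final ZoneOffset ZONE = ZoneOffset.ofHours(8); // assumption: days are counted in UTC+8

    // mid -> date of the last counted visit, standing in for keyed state
    private final Map<String, LocalDate> lastVisitDateByMid = new HashMap<>();

    /** Returns true if this page log counts as a new unique visit. */
    boolean isUniqueVisit(String mid, String lastPageId, long ts) {
        if (lastPageId != null && !lastPageId.isEmpty()) {
            return false;                      // not the first page of a visit
        }
        LocalDate date = Instant.ofEpochMilli(ts).atOffset(ZONE).toLocalDate();
        if (date.equals(lastVisitDateByMid.get(mid))) {
            return false;                      // this mid was already counted today
        }
        lastVisitDateByMid.put(mid, date);
        return true;
    }

    public static void main(String[] args) {
        UniqueVisitSketch uv = new UniqueVisitSketch();
        System.out.println(uv.isUniqueVisit("mid7", null, 1690869978000L));    // prints true
        System.out.println(uv.isUniqueVisit("mid7", null, 1690869978000L));    // prints false
        System.out.println(uv.isUniqueVisit("mid_8", null, 1690869978000L));   // prints true
        System.out.println(uv.isUniqueVisit("mid_8", "cart", 1690869978000L)); // prints false
    }
}
```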

081 - DWM layer - User jump details: code test

Start ZooKeeper:

```shell
bin/zkServer.sh start
```

Start Kafka:

```shell
bin/kafka-server-start.sh config/server9092.properties
```

Start a console producer:

```shell
bin/kafka-console-producer.sh --broker-list bigdata-training01.erongda.com:9092 --topic dwd_page_log
```

Start a console consumer:

```shell
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic dwm_user_jump_detail
```

Start:

UserJumpDetailApp.java

Test data:

With last_page_id removed:

{"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_8","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"page":{"during_time":10393,"item":"1,9","item_type":"sku_ids","page_id":"trade"},"ts":1690889970000}

{"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_8","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"page":{"during_time":10393,"item":"1,9","item_type":"sku_ids","page_id":"trade"},"ts":1690889975000}

{"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_8","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"page":{"during_time":10393,"item":"1,9","item_type":"sku_ids","page_id":"trade"},"ts":1690889979000}

{"common":{"ar":"310000","ba":"Redmi","ch":"xiaomi","is_new":"1","md":"Redmi k30","mid":"mid_8","os":"Android 11.0","uid":"23","vc":"v2.1.111"},"page":{"during_time":10393,"item":"1,9","item_type":"sku_ids","page_id":"trade"},"ts":1690889992000}
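The four test records share `mid_8` and have no `last_page_id`. They are 5 s, 4 s, and 13 s apart, so together they exercise both ways a visit can count as a jump.

The sketch below encodes the rule for one `mid`'s events sorted by time. A visit-starting page is a jump if the next event starts another visit within the window. It is also a jump if the window closes with no follow-up event (the timeout case).

The 10-second window is an assumed value, not one taken from these notes. This batch version also replaces the stateful, event-time logic (for example a Flink CEP pattern) that the streaming job needs.

```java
import java.util.ArrayList;
import java.util.List;

class UserJumpSketch {

    // One page log of a single mid (hypothetical, simplified)
    static final class PageLog {
        final long ts;
        final String lastPageId;

        PageLog(long ts, String lastPageId) {
            this.ts = ts;
            this.lastPageId = lastPageId;
        }

        // A page without last_page_id starts a new visit
        boolean startsVisit() {
            return lastPageId == null || lastPageId.isEmpty();
        }
    }

    /**
     * Returns the timestamps of visit-starting pages that count as jumps.
     * logs: one mid's page logs sorted by ts; watermarkMs: how far event time has progressed.
     */
    static List<Long> jumps(List<PageLog> logs, long windowMs, long watermarkMs) {
        List<Long> result = new ArrayList<>();
        for (int i = 0; i < logs.size(); i++) {
            PageLog cur = logs.get(i);
            if (!cur.startsVisit()) {
                continue;
            }
            PageLog next = (i + 1 < logs.size()) ? logs.get(i + 1) : null;
            if (next != null && next.ts - cur.ts <= windowMs) {
                // something followed within the window: a jump only if it starts another visit
                if (next.startsVisit()) {
                    result.add(cur.ts);
                }
            } else if (watermarkMs - cur.ts > windowMs) {
                // the window closed without any follow-up page: timeout, also a jump
                result.add(cur.ts);
            }
            // otherwise the window is still open and the page is undecided
        }
        return result;
    }

    public static void main(String[] args) {
        // The four mid_8 test records above (none has last_page_id)
        List<PageLog> logs = List.of(
                new PageLog(1690889970000L, null),
                new PageLog(1690889975000L, null),
                new PageLog(1690889979000L, null),
                new PageLog(1690889992000L, null));
        // Assumed 10 s window; event time has reached the last record
        System.out.println(jumps(logs, 10_000L, 1690889992000L));
        // prints [1690889970000, 1690889975000, 1690889979000]
    }
}
```

The last record stays undecided until event time moves more than 10 s past it. This is why a fifth record, or a watermark advance, is needed before it can be reported.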

Data flow: web/app -> Nginx -> SpringBoot -> Kafka(ods) -> FlinkApp -> Kafka(dwd) -> FlinkApp -> Kafka(dwm)

Programs: mockLog -> Nginx -> Logger.sh -> Kafka(ZK) -> BaseLogApp -> kafka -> UserJumpDetailApp -> Kafka

082 - DWM layer - User jump details: test

Start ZooKeeper:

```shell
bin/zkServer.sh start
```

Start Kafka:

```shell
bin/kafka-server-start.sh config/server9092.properties
```

Start a console consumer:

```shell
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic dwm_user_jump_detail
```

Start:

```shell
java -jar gmall2020-mock-log-2020-12-18.jar
java -jar gmall-logger.jar
```

Start the programs:

BaseLogApp.java

UserJumpDetailApp.java

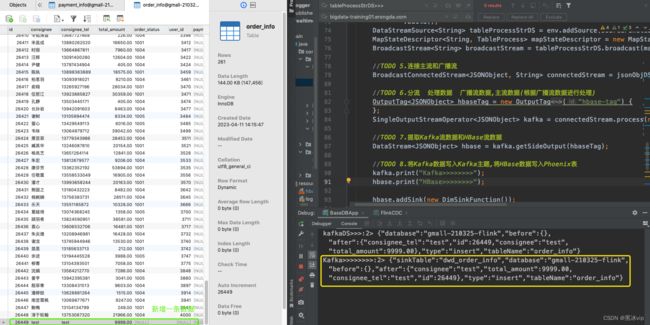

091 - DWM layer - Order wide table: code test (test complete)

Start ZooKeeper:

```shell
bin/zkServer.sh start
```

Start HDFS:

```shell
sbin/hadoop-daemon.sh start namenode
sbin/hadoop-daemon.sh start datanode
```

Start HBase:

```shell
bin/hbase-daemon.sh start master
bin/hbase-daemon.sh start regionserver
```

Start Kafka:

```shell
bin/kafka-server-start.sh config/server9092.properties
```

Start console consumers on "ods_base_db" and the downstream topics (one per terminal):

```shell
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic ods_base_db
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic dwd_order_detail
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic dwd_order_info
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic dwm_order_wide
```

Start the programs:

BaseDBApp.java

ods/FlinkCDC.java

OrderWideApp.java

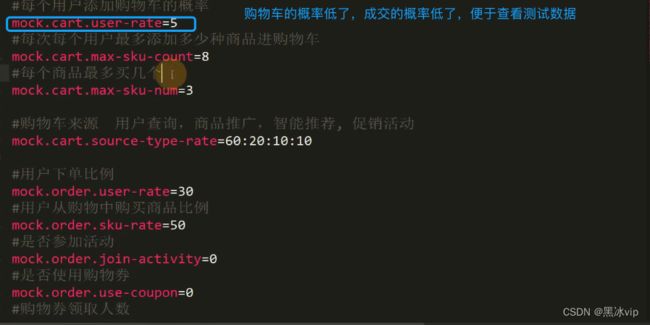

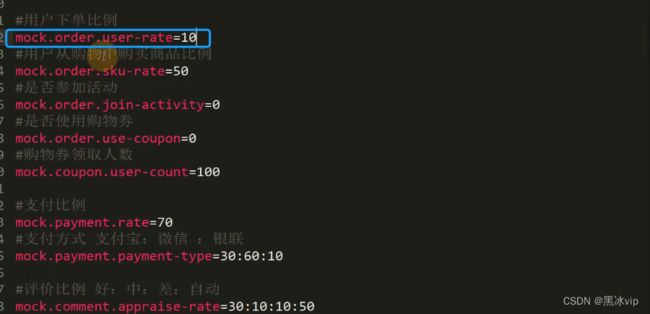

Note: with mock.clear=1 the database is emptied on every run, which makes testing easier.

Data flow: web/app -> nginx -> SpringBoot -> Mysql -> FlinkApp -> Kafka(ods) -> FlinkApp -> Kafka/Phoenix(dwd-dim) -> FlinkApp(redis) -> Kafka(dwm)

Programs: MockDb -> Mysql -> FlinkCDC -> Kafka(ZK) -> BaseDbApp -> Kafka/Phoenix(zk/hdfs/hbase) -> OrderWideApp(Redis) -> Kafka

Test:

1. Create order data manually

BaseDBApp:

Kafka>>>>>>>>:2> {"sinkTable":"dwd_order_detail","database":"gmall-210325-flink","before":{},"after":{"sku_num":"2","create_time":"2023-06-12 16:33:42","sku_id":20,"order_price":2899.00,"source_type":"2401","sku_name":"小米电视E65X 65英寸 全面屏 4K超高清HDR 蓝牙遥控内置小爱 2+8GB AI人工智能液晶网络平板电视 L65M5-EA","id":79949,"order_id":26689,"split_total_amount":5798.00},"type":"insert","tableName":"order_detail"}

FlinkCDC:

{"database":"gmall-210325-flink","before":{},"after":{"sku_num":"2","create_time":"2023-06-12 16:33:42","sku_id":20,"order_price":2899.00,"source_type":"2401","img_url":"http://47.93.148.192:8080/group1/M00/00/02/rBHu8l-0kIGAWtMyAAGxs6Q350k510.jpg","sku_name":"小米电视E65X 65英寸 全面屏 4K超高清HDR 蓝牙遥控内置小爱 2+8GB AI人工智能液晶网络平板电视 L65M5-EA","id":79949,"order_id":26689,"split_total_amount":5798.00},"type":"insert","tableName":"order_detail"}

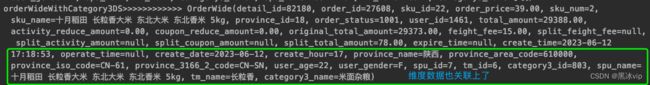

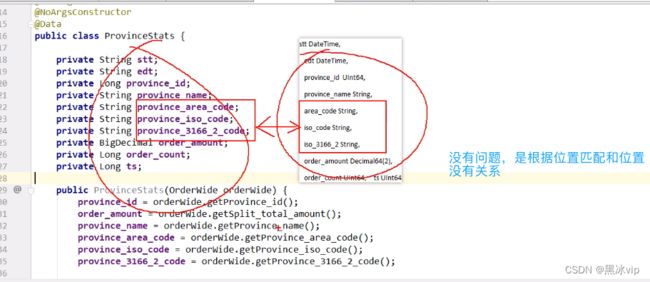

OrderWideApp:

orderWideWithNoDimDS>>>>>>>>>> OrderWide(detail_id=79949, order_id=26689, sku_id=20, order_price=2899.00, sku_num=2, sku_name=小米电视E65X 65英寸 全面屏 4K超高清HDR 蓝牙遥控内置小爱 2+8GB AI人工智能液晶网络平板电视 L65M5-EA, province_id=19, order_status=1001, user_id=316, total_amount=10490.00, activity_reduce_amount=0.00, coupon_reduce_amount=0.00, original_total_amount=10484.00, feight_fee=6.00, split_feight_fee=null, split_activity_amount=null, split_coupon_amount=null, split_total_amount=5798.00, expire_time=null, create_time=2023-06-12 16:33:42, operate_time=null, create_date=2023-06-12, create_hour=16, province_name=null, province_area_code=null, province_iso_code=null, province_3166_2_code=null, user_age=null, user_gender=null, spu_id=null, tm_id=null, category3_id=null, spu_name=null, tm_name=null, category3_name=null)

orderWideWithCategory3DS>>>>>>>>>>>> OrderWide(detail_id=79949, order_id=26689, sku_id=20, order_price=2899.00, sku_num=2, sku_name=小米电视E65X 65英寸 全面屏 4K超高清HDR 蓝牙遥控内置小爱 2+8GB AI人工智能液晶网络平板电视 L65M5-EA, province_id=19, order_status=1001, user_id=316, total_amount=10490.00, activity_reduce_amount=0.00, coupon_reduce_amount=0.00, original_total_amount=10484.00, feight_fee=6.00, split_feight_fee=null, split_activity_amount=null, split_coupon_amount=null, split_total_amount=5798.00, expire_time=null, create_time=2023-06-12 16:33:42, operate_time=null, create_date=2023-06-12, create_hour=16, province_name=甘肃, province_area_code=620000, province_iso_code=CN-62, province_3166_2_code=CN-GS, user_age=50, user_gender=F, spu_id=6, tm_id=5, category3_id=86, spu_name=小米电视 内置小爱 智能网络液晶平板教育电视, tm_name=小米, category3_name=平板电视)

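095 - DWM layer - Order wide table: dimension join, JDBCUtil test

Run JdbcUtil:

```java
// Test entry point inside JdbcUtil; queryList(...) and GmallConfig are defined elsewhere in the project
public static void main(String[] args) throws Exception {
    Class.forName(GmallConfig.PHOENIX_DRIVER);
    Connection connection = DriverManager.getConnection(GmallConfig.PHOENIX_SERVER);

    // Query a dimension table and map each row to a JSONObject
    List<JSONObject> queryList = queryList(connection,
            "select * from GMALL210325_REALTIME.DIM_USER_INFO",
            JSONObject.class,
            true);

    for (JSONObject jsonObject : queryList) {
        System.out.println(jsonObject);
    }

    connection.close();
}
```

Check whether the MySQL, ClickHouse, and log data are consistent:

107 - DWM layer - Order wide table: dimension join, optimization 2, async I/O code (test complete)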

Start ZooKeeper:

```shell
bin/zkServer.sh start
```

Start HDFS:

```shell
sbin/hadoop-daemon.sh start namenode
sbin/hadoop-daemon.sh start datanode
```

Start HBase:

```shell
bin/hbase-daemon.sh start master
bin/hbase-daemon.sh start regionserver
```

Start the programs:

BaseDBApp.java

ods/FlinkCDC.java

OrderWideApp.java

Start:

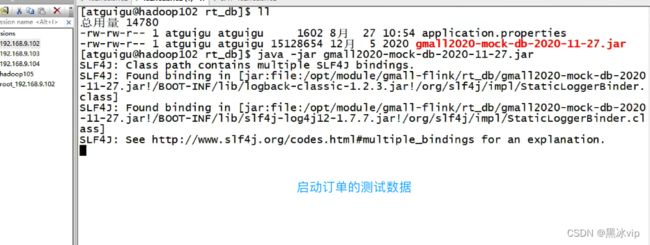

gmall2020-mock-db-2020-11-27.jar

select count(*) from `gmall-210325-flink`.order_detail
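The OrderWideApp code is not shown here, so the following only sketches the general idea behind "optimization 2". Dimension lookups are issued asynchronously, so many are in flight at once, and a cache is consulted first (Redis appears in the data flow of section 091).

Plain Java types stand in for the real components:

- `CompletableFuture` with a thread pool stands in for Flink's async I/O operator (`AsyncDataStream` with a `RichAsyncFunction`).
- A `ConcurrentHashMap` stands in for Redis.
- A hypothetical `slowLookup` function stands in for Phoenix.

The sample IDs are also hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;
import java.util.stream.Collectors;

class AsyncDimLookupSketch {

    private final Map<String, String> cache = new ConcurrentHashMap<>(); // stands in for Redis
    private final Function<String, String> slowLookup;                   // stands in for Phoenix
    private final ExecutorService pool;
    final AtomicInteger slowCalls = new AtomicInteger();                 // counts store queries

    AsyncDimLookupSketch(Function<String, String> slowLookup, ExecutorService pool) {
        this.slowLookup = slowLookup;
        this.pool = pool;
    }

    CompletableFuture<String> lookup(String key) {
        String cached = cache.get(key);
        if (cached != null) {
            return CompletableFuture.completedFuture(cached);     // cache hit: no I/O
        }
        return CompletableFuture.supplyAsync(() -> {
            slowCalls.incrementAndGet();
            String value = slowLookup.apply(key);                 // cache miss: query the store
            cache.put(key, value);
            return value;
        }, pool);
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        AsyncDimLookupSketch dims = new AsyncDimLookupSketch(id -> "province-" + id, pool);
        List<String> provinceIds = List.of("16", "32", "16");
        // Fire all lookups first, then wait: requests overlap instead of running one by one
        List<CompletableFuture<String>> futures = provinceIds.stream()
                .map(dims::lookup)
                .collect(Collectors.toList());
        List<String> names = futures.stream().map(CompletableFuture::join).collect(Collectors.toList());
        System.out.println(names); // prints [province-16, province-32, province-16]
        pool.shutdown();
    }
}
```

The cache is filled only when a lookup completes. Two concurrent misses on the same key can therefore both reach the store. A production version would also need timeouts, and cache invalidation when a dimension row changes.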

109 - DWM layer - Order wide table: final test

```shell
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic dwm_order_wide
```

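113 - Atguigu - Flink real-time data warehouse - DWM layer - Payment wide table: code test

Start a Kafka console consumer:

```shell
bin/kafka-console-consumer.sh --bootstrap-server bigdata-training01.erongda.com:9092 --from-beginning --topic dwm_payment_wide
```

Start the programs: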

BaseDBApp.java

ods/FlinkCDC.java

OrderWideApp.java

PaymentWideApp.java

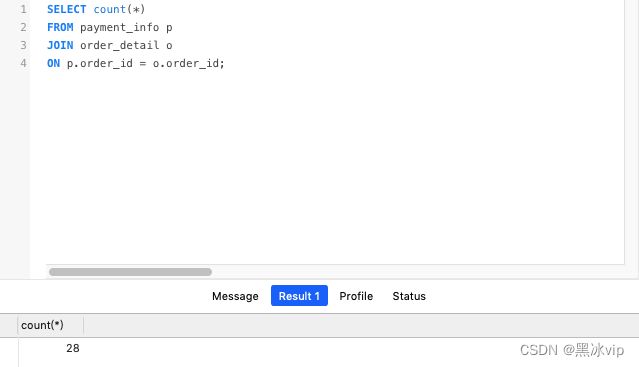

```sql
SELECT
    count(*)
FROM
    payment_info p
    JOIN order_detail o ON p.order_id = o.order_id;
```

Check whether the data is consistent:

Example records:

Note: the records were joined, and the corresponding dimension data was found as well.

OrderWideApp:

orderWideWithCategory3DS>>>>>>>>>>>> OrderWide(detail_id=80399, order_id=26873, sku_id=16, order_price=4488.00, sku_num=1, sku_name=华为 HUAWEI P40 麒麟990 5G SoC芯片 5000万超感知徕卡三摄 30倍数字变焦 8GB+128GB亮黑色全网通5G手机, province_id=16, order_status=1001, user_id=3209, total_amount=13959.00, activity_reduce_amount=0.00, coupon_reduce_amount=0.00, original_total_amount=13945.00, feight_fee=14.00, split_feight_fee=null, split_activity_amount=null, split_coupon_amount=null, split_total_amount=4488.00, expire_time=null, create_time=2023-06-12 19:59:53, operate_time=null, create_date=2023-06-12, create_hour=19, province_name=吉林, province_area_code=220000, province_iso_code=CN-22, province_3166_2_code=CN-JL, user_age=18, user_gender=F, spu_id=4, tm_id=3, category3_id=61, spu_name=HUAWEI P40, tm_name=华为, category3_name=手机)

orderWideWithCategory3DS>>>>>>>>>>>> OrderWide(detail_id=80404, order_id=26874, sku_id=31, order_price=69.00, sku_num=1, sku_name=CAREMiLLE珂曼奶油小方口红 雾面滋润保湿持久丝缎唇膏 M03赤茶, province_id=32, order_status=1001, user_id=3539, total_amount=20366.00, activity_reduce_amount=0.00, coupon_reduce_amount=0.00, original_total_amount=20361.00, feight_fee=5.00, split_feight_fee=null, split_activity_amount=null, split_coupon_amount=null, split_total_amount=69.00, expire_time=null, create_time=2023-06-12 19:59:53, operate_time=null, create_date=2023-06-12, create_hour=19, province_name=贵州, province_area_code=520000, province_iso_code=CN-52, province_3166_2_code=CN-GZ, user_age=24, user_gender=M, spu_id=10, tm_id=9, category3_id=477, spu_name=CAREMiLLE珂曼奶油小方口红 雾面滋润保湿持久丝缎唇膏, tm_name=CAREMiLLE, category3_name=唇部)

PaymentWideApp:

>>>>>>>>>> PaymentWide(payment_id=18002, subject=华为 HUAWEI P40 麒麟990 5G SoC芯片 5000万超感知徕卡三摄 30倍数字变焦 8GB+128GB亮黑色全网通5G手机等4件商品, payment_type=1101, payment_create_time=2023-06-12 19:59:53, callback_time=2023-06-12 20:00:13, detail_id=80399, order_id=26873, sku_id=16, order_price=4488.00, sku_num=1, sku_name=华为 HUAWEI P40 麒麟990 5G SoC芯片 5000万超感知徕卡三摄 30倍数字变焦 8GB+128GB亮黑色全网通5G手机, province_id=16, order_status=1001, user_id=3209, total_amount=13959.00, activity_reduce_amount=0.00, coupon_reduce_amount=0.00, original_total_amount=13945.00, feight_fee=14.00, split_feight_fee=null, split_activity_amount=null, split_coupon_amount=null, split_total_amount=4488.00, order_create_time=2023-06-12 19:59:53, province_name=吉林, province_area_code=220000, province_iso_code=CN-22, province_3166_2_code=CN-JL, user_age=18, user_gender=F, spu_id=4, tm_id=3, category3_id=61, spu_name=HUAWEI P40, tm_name=华为, category3_name=手机)

>>>>>>>>>> PaymentWide(payment_id=17996, subject=TCL 85Q6 85英寸 巨幕私人影院电视 4K超高清 AI智慧屏 全景全面屏 MEMC运动防抖 2+16GB 液晶平板电视机等8件商品, payment_type=1102, payment_create_time=2023-06-12 19:59:53, callback_time=2023-06-12 20:00:13, detail_id=80382, order_id=26864, sku_id=16, order_price=4488.00, sku_num=2, sku_name=华为 HUAWEI P40 麒麟990 5G SoC芯片 5000万超感知徕卡三摄 30倍数字变焦 8GB+128GB亮黑色全网通5G手机, province_id=8, order_status=1001, user_id=1008, total_amount=45106.00, activity_reduce_amount=0.00, coupon_reduce_amount=0.00, original_total_amount=45090.00, feight_fee=16.00, split_feight_fee=null, split_activity_amount=null, split_coupon_amount=null, split_total_amount=8976.00, order_create_time=2023-06-12 19:59:53, province_name=浙江, province_area_code=330000, province_iso_code=CN-33, province_3166_2_code=CN-ZJ, user_age=56, user_gender=F, spu_id=4, tm_id=3, category3_id=61, spu_name=HUAWEI P40, tm_name=华为, category3_name=手机)

143-DWS Layer-Visitor Topic: ClickHouseUtil Testing Complete

Connect to ClickHouse:

docker exec -it clickhouse-server /bin/bash

clickhouse-client

Create the ClickHouse table:

create table visitor_stats_210325 (

stt DateTime,

edt DateTime,

vc String,

ch String,

ar String,

is_new String,

uv_ct UInt64,

pv_ct UInt64,

sv_ct UInt64,

uj_ct UInt64,

dur_sum UInt64,

ts UInt64

) engine = ReplacingMergeTree(ts)

partition by toYYYYMMDD(stt)

order by (stt,edt,is_new,vc,ch,ar);
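The section title refers to a `ClickHouseUtil` helper whose code is not shown in these notes. One common way to write such a helper is to derive the `insert into ... values(?,?,...)` statement from the fields of the bean being written, so the bean must carry one field per column of the table above. The sketch below is a minimal illustration under that assumption, not the course's actual `ClickHouseUtil`; `ClickHouseSqlSketch`, `VisitorStatsRow`, `buildInsertSql` and `fieldValues` are hypothetical names.

```java
import java.lang.reflect.Field;
import java.util.ArrayList;
import java.util.List;
import java.util.StringJoiner;

// Hypothetical sketch of how a ClickHouseUtil-style helper could derive its INSERT
// statement and bind values from a bean; not the course's actual ClickHouseUtil.
class ClickHouseSqlSketch {

    // Hypothetical bean mirroring the columns of visitor_stats_210325.
    static class VisitorStatsRow {
        String stt; String edt; String vc; String ch; String ar; String is_new;
        long uv_ct; long pv_ct; long sv_ct; long uj_ct; long dur_sum; long ts;
    }

    // Builds "insert into <table>(col1,...) values(?,...)" from the given fields,
    // naming the columns explicitly so the SQL does not rely on field order.
    static String buildInsertSql(String table, Field[] fields) {
        StringJoiner columns = new StringJoiner(",", "(", ")");
        StringJoiner marks = new StringJoiner(",", "(", ")");
        for (Field f : fields) {
            columns.add(f.getName());
            marks.add("?");
        }
        return "insert into " + table + columns + " values" + marks;
    }

    // Reads the bean's values in the same field order, ready for
    // PreparedStatement.setObject(i + 1, value).
    static List<Object> fieldValues(Object bean, Field[] fields) {
        List<Object> values = new ArrayList<>();
        for (Field f : fields) {
            try {
                f.setAccessible(true);
                values.add(f.get(bean));
            } catch (IllegalAccessException e) {
                throw new IllegalStateException("Cannot read field " + f.getName(), e);
            }
        }
        return values;
    }

    public static void main(String[] args) {
        Field[] fields = VisitorStatsRow.class.getDeclaredFields();
        System.out.println(buildInsertSql("visitor_stats_210325", fields));
        System.out.println(fieldValues(new VisitorStatsRow(), fields).size()); // 12
    }
}
```

Naming the columns explicitly is a deliberate choice: the Javadoc of `Class.getDeclaredFields()` makes no ordering guarantee, even though HotSpot usually returns declaration order.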

Start the programs:

BaseLogApp.java

UniqueVisitApp.java

UserJumpDetailApp.java

VisitorStatsApp.java

Generate mock log data (run in /opt/modules/gmall-flink/rt_applog):

sudo java -jar gmall-logger.jar

sudo java -jar gmall2020-mock-log-2020-12-18.jar

select count(*) from visitor_stats_210325;

Note: rows are inserted in batches of 5, so the row count is always a multiple of 5 (55 here).
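The count of 55 follows from batching. Assuming the ClickHouse sink is a Flink JDBC sink built with `JdbcExecutionOptions.builder().withBatchSize(5)`, it only executes an INSERT once 5 rows have accumulated (or on a checkpoint, or on an optional flush interval), so rows that have not yet filled a batch are not yet visible in `select count(*)`. The sketch below simulates only the size trigger; `BatchBufferSketch` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical simulation of a size-triggered batch sink (only the batch-size rule).
class BatchBufferSketch {
    private final int batchSize;
    private final List<String> pending = new ArrayList<>();
    private int flushedRows = 0;

    BatchBufferSketch(int batchSize) {
        this.batchSize = batchSize;
    }

    // Buffers one row and writes the whole batch once it reaches batchSize.
    void add(String row) {
        pending.add(row);
        if (pending.size() >= batchSize) {
            flush();
        }
    }

    // A real sink would execute the batched INSERT here; this one only counts rows.
    void flush() {
        flushedRows += pending.size();
        pending.clear();
    }

    int flushedRows() { return flushedRows; }

    int pendingRows() { return pending.size(); }

    public static void main(String[] args) {
        BatchBufferSketch sink = new BatchBufferSketch(5);
        for (int i = 0; i < 57; i++) {
            sink.add("row-" + i);
        }
        // prints "55 written, 2 still buffered"
        System.out.println(sink.flushedRows() + " written, " + sink.pendingRows() + " still buffered");
    }
}
```

With 57 hypothetical input rows, 55 reach the table and 2 wait for the next batch, which is why the observed count lands on a multiple of 5.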

Related data:

UniqueVisitApp:

{"common":{"ar":"310000","uid":"42","os":"Android 10.0","ch":"web","is_new":"0","md":"Oneplus 7","mid":"mid_6","vc":"v2.1.134","ba":"Oneplus"},"page":{"page_id":"home","during_time":14678},"displays":[{"display_type":"activity","item":"2","item_type":"activity_id","pos_id":5,"order":1},{"display_type":"activity","item":"1","item_type":"activity_id","pos_id":5,"order":2},{"display_type":"query","item":"3","item_type":"sku_id","pos_id":1,"order":3},{"display_type":"query","item":"1","item_type":"sku_id","pos_id":4,"order":4},{"display_type":"query","item":"1","item_type":"sku_id","pos_id":4,"order":5},{"display_type":"recommend","item":"9","item_type":"sku_id","pos_id":3,"order":6},{"display_type":"query","item":"9","item_type":"sku_id","pos_id":3,"order":7}],"ts":1690880847000}

{"common":{"ar":"110000","uid":"5","os":"Android 11.0","ch":"oppo","is_new":"0","md":"Sumsung Galaxy S20","mid":"mid_15","vc":"v2.1.134","ba":"Sumsung"},"page":{"page_id":"home","during_time":2184},"displays":[{"display_type":"activity","item":"1","item_type":"activity_id","pos_id":1,"order":1},{"display_type":"activity","item":"1","item_type":"activity_id","pos_id":1,"order":2},{"display_type":"promotion","item":"6","item_type":"sku_id","pos_id":5,"order":3},{"display_type":"promotion","item":"9","item_type":"sku_id","pos_id":1,"order":4},{"display_type":"query","item":"7","item_type":"sku_id","pos_id":2,"order":5},{"display_type":"promotion","item":"2","item_type":"sku_id","pos_id":5,"order":6},{"display_type":"promotion","item":"5","item_type":"sku_id","pos_id":5,"order":7},{"display_type":"recommend","item":"2","item_type":"sku_id","pos_id":5,"order":8},{"display_type":"query","item":"10","item_type":"sku_id","pos_id":4,"order":9},{"display_type":"promotion","item":"9","item_type":"sku_id","pos_id":3,"order":10},{"display_type":"query","item":"3","item_type":"sku_id","pos_id":1,"order":11},{"display_type":"query","item":"8","item_type":"sku_id","pos_id":1,"order":12}],"ts":1690880874000}
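Both UniqueVisitApp samples are entry pages (`page_id` is `home` and there is no `last_page_id`). A minimal sketch of the kind of filter that yields such records, assuming a visitor is counted once per `mid` per day and only on a page record without `last_page_id`. In a Flink job the per-`mid` date would typically live in keyed `ValueState` with a one-day TTL; a `HashMap` stands in for it here. The Asia/Shanghai time zone and the names `UniqueVisitSketch` and `isFirstVisitToday` are assumptions.

```java
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneId;
import java.util.HashMap;
import java.util.Map;

// Hypothetical unique-visitor filter: first entry page per mid per day.
class UniqueVisitSketch {
    private static final ZoneId ZONE = ZoneId.of("Asia/Shanghai"); // assumed local time zone

    // Last date on which each mid was counted (stand-in for keyed ValueState).
    private final Map<String, LocalDate> lastVisitDate = new HashMap<>();

    // Returns true if this page record should be kept as a unique visit.
    boolean isFirstVisitToday(String mid, String lastPageId, long ts) {
        if (lastPageId != null && !lastPageId.isEmpty()) {
            return false; // not an entry page: the user came from another page
        }
        LocalDate day = Instant.ofEpochMilli(ts).atZone(ZONE).toLocalDate();
        if (day.equals(lastVisitDate.get(mid))) {
            return false; // this mid was already counted today
        }
        lastVisitDate.put(mid, day);
        return true;
    }

    public static void main(String[] args) {
        UniqueVisitSketch uv = new UniqueVisitSketch();
        System.out.println(uv.isFirstVisitToday("mid_6", null, 1690880847000L));    // true
        System.out.println(uv.isFirstVisitToday("mid_6", null, 1690880874000L));    // false, same day
        System.out.println(uv.isFirstVisitToday("mid_15", "home", 1690880874000L)); // false, not an entry page
    }
}
```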

UserJumpDetailApp:

{"common":{"ar":"370000","uid":"20","os":"Android 11.0","ch":"web","is_new":"0","md":"Xiaomi Mix2 ","mid":"mid_16","vc":"v2.1.134","ba":"Xiaomi"},"page":{"page_id":"home","during_time":9663},"displays":[{"display_type":"activity","item":"2","item_type":"activity_id","pos_id":4,"order":1},{"display_type":"recommend","item":"10","item_type":"sku_id","pos_id":2,"order":2},{"display_type":"promotion","item":"7","item_type":"sku_id","pos_id":3,"order":3},{"display_type":"query","item":"3","item_type":"sku_id","pos_id":5,"order":4},{"display_type":"query","item":"3","item_type":"sku_id","pos_id":1,"order":5},{"display_type":"query","item":"10","item_type":"sku_id","pos_id":5,"order":6},{"display_type":"recommend","item":"8","item_type":"sku_id","pos_id":2,"order":7}],"ts":1690880883000}

{"common":{"ar":"110000","uid":"1","os":"iOS 13.3.1","ch":"Appstore","is_new":"0","md":"iPhone 8","mid":"mid_9","vc":"v2.1.111","ba":"iPhone"},"page":{"page_id":"home","during_time":16573},"displays":[{"display_type":"activity","item":"2","item_type":"activity_id","pos_id":4,"order":1},{"display_type":"query","item":"9","item_type":"sku_id","pos_id":4,"order":2},{"display_type":"promotion","item":"7","item_type":"sku_id","pos_id":1,"order":3},{"display_type":"promotion","item":"8","item_type":"sku_id","pos_id":3,"order":4},{"display_type":"promotion","item":"8","item_type":"sku_id","pos_id":5,"order":5},{"display_type":"query","item":"3","item_type":"sku_id","pos_id":3,"order":6},{"display_type":"query","item":"1","item_type":"sku_id","pos_id":1,"order":7},{"display_type":"query","item":"8","item_type":"sku_id","pos_id":3,"order":8},{"display_type":"recommend","item":"6","item_type":"sku_id","pos_id":2,"order":9},{"display_type":"query","item":"1","item_type":"sku_id","pos_id":1,"order":10},{"display_type":"query","item":"9","item_type":"sku_id","pos_id":4,"order":11}],"ts":1690880894000}

{"common":{"ar":"110000","uid":"33","os":"Android 11.0","ch":"oppo","is_new":"0","md":"vivo iqoo3","mid":"mid_8","vc":"v2.1.132","ba":"vivo"},"page":{"page_id":"home","during_time":2347},"displays":[{"display_type":"activity","item":"1","item_type":"activity_id","pos_id":1,"order":1},{"display_type":"activity","item":"2","item_type":"activity_id","pos_id":1,"order":2},{"display_type":"query","item":"6","item_type":"sku_id","pos_id":2,"order":3},{"display_type":"query","item":"5","item_type":"sku_id","pos_id":3,"order":4},{"display_type":"promotion","item":"7","item_type":"sku_id","pos_id":1,"order":5},{"display_type":"recommend","item":"2","item_type":"sku_id","pos_id":5,"order":6},{"display_type":"query","item":"8","item_type":"sku_id","pos_id":2,"order":7},{"display_type":"query","item":"1","item_type":"sku_id","pos_id":3,"order":8},{"display_type":"query","item":"4","item_type":"sku_id","pos_id":4,"order":9},{"display_type":"query","item":"9","item_type":"sku_id","pos_id":4,"order":10},{"display_type":"query","item":"5","item_type":"sku_id","pos_id":4,"order":11},{"display_type":"query","item":"10","item_type":"sku_id","pos_id":1,"order":12}],"ts":1690880914000}
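The UserJumpDetailApp samples are again entry pages. A user jump (bounce) is commonly defined as an entry page that is not followed by a further page of the same visit within a timeout; such jobs are often written with Flink CEP (an entry page, strictly followed by another entry page, within the timeout, plus timed-out partial matches). The sketch below expresses that rule with a plain loop over one `mid`'s events sorted by time, assuming a 10-second timeout: an entry page counts as a jump if the next event is another entry page, arrives more than 10 s later, or never arrives. `UserJumpSketch`, `PageEvent` and `TIMEOUT_MS` are hypothetical.

```java
import java.util.List;

// Hypothetical bounce counter for the events of a single mid, sorted by ts.
class UserJumpSketch {
    static final long TIMEOUT_MS = 10_000L; // assumed timeout

    // Minimal page event: only last_page_id and ts matter for this rule.
    static final class PageEvent {
        final String lastPageId;
        final long ts;

        PageEvent(String lastPageId, long ts) {
            this.lastPageId = lastPageId;
            this.ts = ts;
        }

        boolean isEntry() {
            return lastPageId == null || lastPageId.isEmpty();
        }
    }

    // An entry page is a jump unless the next event follows within TIMEOUT_MS
    // and is a non-entry page (the user navigated on).
    static int countJumps(List<PageEvent> events) {
        int jumps = 0;
        for (int i = 0; i < events.size(); i++) {
            PageEvent current = events.get(i);
            if (!current.isEntry()) {
                continue;
            }
            if (i + 1 == events.size()) {
                jumps++; // nothing followed: treated like a timeout
                continue;
            }
            PageEvent next = events.get(i + 1);
            if (next.isEntry() || next.ts - current.ts > TIMEOUT_MS) {
                jumps++;
            }
        }
        return jumps;
    }

    public static void main(String[] args) {
        List<PageEvent> mid16 = List.of(
                new PageEvent(null, 1690880883000L),     // entry page (home)
                new PageEvent("home", 1690880888000L));  // hypothetical follow-up 5 s later
        System.out.println(countJumps(mid16)); // 0
    }
}
```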

>>>>>>>>>>>> VisitorStats(stt=2023-08-01 16:57:10, edt=2023-08-01 16:57:20, vc=v2.1.132, ch=vivo, ar=310000, is_new=0, uv_ct=1, pv_ct=0, sv_ct=0, uj_ct=0, dur_sum=0, ts=1690880232000)

>>>>>>>>>>>> VisitorStats(stt=2023-08-01 16:57:10, edt=2023-08-01 16:57:20, vc=v2.1.134, ch=oppo, ar=530000, is_new=0, uv_ct=1, pv_ct=0, sv_ct=0, uj_ct=0, dur_sum=0, ts=1690880233000)

>>>>>>>>>>>> VisitorStats(stt=2023-08-01 17:07:20, edt=2023-08-01 17:07:30, vc=v2.1.134, ch=web, ar=310000, is_new=0, uv_ct=1, pv_ct=0, sv_ct=0, uj_ct=0, dur_sum=0, ts=1690880847000)
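In the VisitorStats output each `stt`/`edt` pair spans exactly 10 seconds and contains the record's `ts`, which is what a 10-second tumbling event-time window produces. With no offset, Flink aligns tumbling windows to the epoch, so for non-negative timestamps the window start is `ts - ts % 10000`. The sketch reproduces the window of the third record above; the Asia/Shanghai (UTC+8) zone is an assumption inferred from the printed times, and `TumblingWindowSketch` is a hypothetical name.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

// Hypothetical reproduction of epoch-aligned 10-second tumbling windows.
class TumblingWindowSketch {
    static final long WINDOW_MS = 10_000L;
    static final DateTimeFormatter FMT = DateTimeFormatter
            .ofPattern("yyyy-MM-dd HH:mm:ss")
            .withZone(ZoneId.of("Asia/Shanghai")); // assumed zone of the printed stt/edt

    // Window start for a non-negative timestamp with offset 0.
    static long windowStart(long ts) {
        return ts - ts % WINDOW_MS;
    }

    static String format(long epochMillis) {
        return FMT.format(Instant.ofEpochMilli(epochMillis));
    }

    public static void main(String[] args) {
        long ts = 1690880847000L;
        long start = windowStart(ts);
        // prints "stt=2023-08-01 17:07:20, edt=2023-08-01 17:07:30"
        System.out.println("stt=" + format(start) + ", edt=" + format(start + WINDOW_MS));
    }
}
```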

157-DWS Layer-Product Topic: Writing the Code, Writing Data to ClickHouse & Testing

Start Kafka:

bin/kafka-server-start.sh config/server9092.properties

Connect to ClickHouse:

docker exec -it clickhouse-server /bin/bash

clickhouse-client

Connect to Redis:

docker exec -it redis redis-cli
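Redis is presumably needed here because OrderWideApp enriches orders with dimension data (the province, user, SKU, SPU, trademark and category3 fields seen in the `OrderWide` output above) and caches those lookups in front of the dimension store. A minimal cache-aside sketch of that pattern, with `HashMap`s standing in for both Redis and the dimension table; the key format `DIM:<table>:<id>`, the table name `DIM_BASE_CATEGORY3` and all class and method names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical cache-aside dimension lookup; maps stand in for Redis and the dimension store.
class DimCacheSketch {
    // Stand-in for the dimension store, keyed by "<table>:<id>".
    static final Map<String, Map<String, String>> STORE = new HashMap<>();
    // Stand-in for Redis, keyed by "DIM:<table>:<id>".
    static final Map<String, Map<String, String>> CACHE = new HashMap<>();
    static int storeQueries = 0;

    static Map<String, String> getDimInfo(String table, String id) {
        String key = "DIM:" + table + ":" + id;
        Map<String, String> cached = CACHE.get(key);
        if (cached != null) {
            return cached; // cache hit: no query against the store
        }
        storeQueries++; // cache miss: query the store
        Map<String, String> row = STORE.get(table + ":" + id);
        if (row != null) {
            CACHE.put(key, row); // write back so the next lookup is a hit
        }
        return row;
    }

    // When a dimension row changes upstream, drop its cached copy.
    static void invalidate(String table, String id) {
        CACHE.remove("DIM:" + table + ":" + id);
    }

    public static void main(String[] args) {
        STORE.put("DIM_BASE_CATEGORY3:61", Map.of("NAME", "phone"));
        getDimInfo("DIM_BASE_CATEGORY3", "61");
        getDimInfo("DIM_BASE_CATEGORY3", "61");
        System.out.println("store queries: " + storeQueries); // store queries: 1
    }
}
```

Invalidating on update (rather than updating the cache in place) keeps the sketch simple: the next lookup simply reloads the fresh row from the store.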

Start the programs:

BaseDBApp.java

ods/FlinkCDC.java

OrderWideApp.java

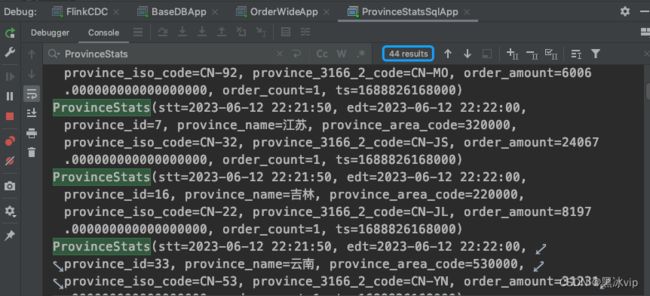

ProvinceStatsSqlApp.java

create table province_stats_210325 (

stt DateTime,

edt DateTime,

province_id UInt64,

province_name String,

area_code String,

iso_code String,

iso_3166_2 String,

order_amount Decimal64(2),

order_count UInt64,

ts UInt64

) engine = ReplacingMergeTree(ts)

partition by toYYYYMMDD(stt)

order by (stt,edt,province_id);
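The query inside ProvinceStatsSqlApp is not reproduced in these notes. As an assumption about what fills the table above, the sketch groups order-wide rows by 10-second window and `province_id`, sums `split_total_amount` into `order_amount`, and counts distinct `order_id`s into `order_count`. It uses plain Java collections instead of Flink SQL; `ProvinceStatsSketch`, `OrderRow`, `Stats` and `aggregate` are hypothetical names.

```java
import java.math.BigDecimal;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical per-window, per-province aggregation of order-wide rows.
class ProvinceStatsSketch {
    static final long WINDOW_MS = 10_000L; // assumed 10-second tumbling window

    // Hypothetical subset of an OrderWide record.
    static final class OrderRow {
        final long orderId;
        final long provinceId;
        final BigDecimal splitTotalAmount;
        final long ts;

        OrderRow(long orderId, long provinceId, String splitTotalAmount, long ts) {
            this.orderId = orderId;
            this.provinceId = provinceId;
            this.splitTotalAmount = new BigDecimal(splitTotalAmount);
            this.ts = ts;
        }
    }

    // Running aggregate for one (window, province) pair.
    static final class Stats {
        BigDecimal orderAmount = BigDecimal.ZERO;
        final Set<Long> orderIds = new HashSet<>();

        long orderCount() {
            return orderIds.size();
        }
    }

    // Groups rows by "windowStart|provinceId"; sums amounts and counts distinct orders.
    static Map<String, Stats> aggregate(List<OrderRow> rows) {
        Map<String, Stats> result = new TreeMap<>();
        for (OrderRow r : rows) {
            long stt = r.ts - r.ts % WINDOW_MS;
            Stats s = result.computeIfAbsent(stt + "|" + r.provinceId, k -> new Stats());
            s.orderAmount = s.orderAmount.add(r.splitTotalAmount);
            s.orderIds.add(r.orderId);
        }
        return result;
    }

    public static void main(String[] args) {
        // Two detail rows of order 26873 (the second one hypothetical) and one of order 26864.
        List<OrderRow> rows = List.of(
                new OrderRow(26873, 16, "4488.00", 1686571193000L),
                new OrderRow(26873, 16, "100.00", 1686571193000L),
                new OrderRow(26864, 8, "8976.00", 1686571193000L));
        aggregate(rows).forEach((key, s) -> System.out.println(
                key + " order_amount=" + s.orderAmount + " order_count=" + s.orderCount()));
    }
}
```

Counting distinct order ids matters because one order can span several detail rows, as order 26873 with its "4 items" subject does in the PaymentWide output.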


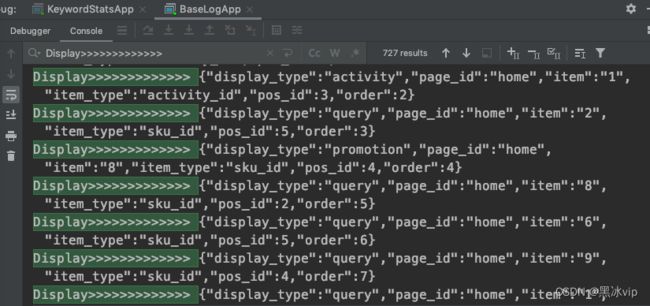

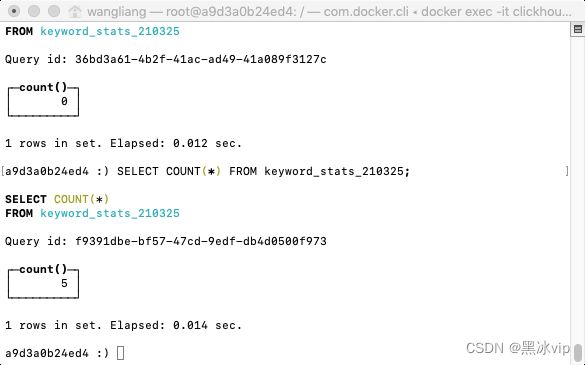

164-DWS Layer-Keyword Topic: Code Testing

Start Kafka:

bin/kafka-server-start.sh config/server9092.properties

Connect to ClickHouse:

docker exec -it clickhouse-server /bin/bash

clickhouse-client

Start the programs:

KeywordStatsApp.java

BaseLogApp.java

create table keyword_stats_210325 (

stt DateTime,

edt DateTime,

keyword String,

source String,

ct UInt64,

ts UInt64

) engine = ReplacingMergeTree(ts)

partition by toYYYYMMDD(stt)

order by (stt,edt,keyword,source);
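All the ClickHouse tables in these notes use `ReplacingMergeTree(ts)`. During background merges ClickHouse keeps, for each set of rows sharing the same `ORDER BY` key within a partition, only the row with the largest version column (`ts` here), and on equal versions the most recently inserted one. Merges run at an unspecified time, so duplicates (for example a window re-written after a job restart) can stay visible until then; `SELECT ... FINAL` or `OPTIMIZE TABLE ... FINAL` force the deduplication. The sketch simulates only this keep-max-version rule for rows of the keyword table; it is not ClickHouse code, and `ReplacingMergeSketch`, `KeywordRow` and the sample values are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical simulation of ReplacingMergeTree(ts) deduplication on merge.
class ReplacingMergeSketch {
    // Hypothetical row of keyword_stats_210325 (times kept as strings for brevity).
    static final class KeywordRow {
        final String stt, edt, keyword, source;
        final long ct, ts;

        KeywordRow(String stt, String edt, String keyword, String source, long ct, long ts) {
            this.stt = stt;
            this.edt = edt;
            this.keyword = keyword;
            this.source = source;
            this.ct = ct;
            this.ts = ts;
        }

        // Mirrors ORDER BY (stt, edt, keyword, source).
        String sortingKey() {
            return stt + "|" + edt + "|" + keyword + "|" + source;
        }
    }

    // Keeps one row per sorting key: the largest ts wins; on equal ts the later row wins.
    static List<KeywordRow> merge(List<KeywordRow> rows) {
        Map<String, KeywordRow> kept = new LinkedHashMap<>();
        for (KeywordRow r : rows) {
            KeywordRow prev = kept.get(r.sortingKey());
            if (prev == null || r.ts >= prev.ts) {
                kept.put(r.sortingKey(), r);
            }
        }
        return new ArrayList<>(kept.values());
    }

    public static void main(String[] args) {
        List<KeywordRow> rows = List.of(
                new KeywordRow("2020-12-18 23:12:00", "2020-12-18 23:12:10", "phone", "SEARCH", 3, 1L),
                new KeywordRow("2020-12-18 23:12:00", "2020-12-18 23:12:10", "phone", "SEARCH", 5, 2L));
        System.out.println(merge(rows).size() + " row(s), ct=" + merge(rows).get(0).ct); // 1 row(s), ct=5
    }
}
```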

Start the user behavior log generators:

/opt/modules/gmall-flink/rt_applog

sudo java -jar gmall-logger.jar

sudo java -jar gmall2020-mock-log-2020-12-18.jar

gmall2020-mock-log-2020-12-18.jar generates log data continuously.

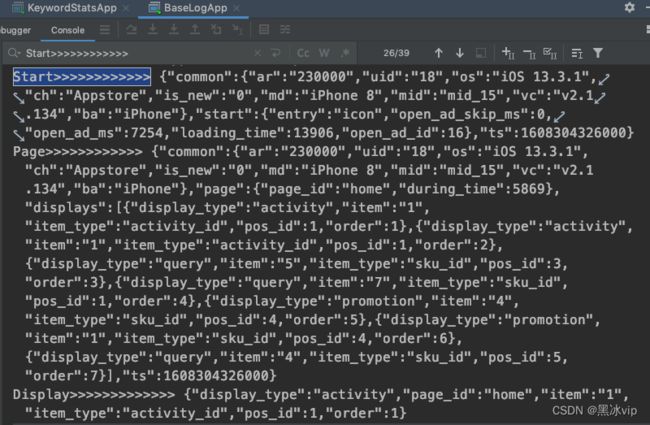

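Check the output every 10 seconds (the window size is 10 seconds):

Note: the ClickHouse write batch size is set to 5, so rows are inserted in multiples of 5.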

Start>>>>>>>>>>>> {"common":{"ar":"230000","uid":"18","os":"iOS 13.3.1","ch":"Appstore","is_new":"0","md":"iPhone 8","mid":"mid_15","vc":"v2.1.134","ba":"iPhone"},"start":{"entry":"icon","open_ad_skip_ms":0,"open_ad_ms":7254,"loading_time":13906,"open_ad_id":16},"ts":1608304326000}

Page>>>>>>>>>>>> {"common":{"ar":"230000","uid":"18","os":"iOS 13.3.1","ch":"Appstore","is_new":"0","md":"iPhone 8","mid":"mid_15","vc":"v2.1.134","ba":"iPhone"},"page":{"page_id":"home","during_time":5869},"displays":[{"display_type":"activity","item":"1","item_type":"activity_id","pos_id":1,"order":1},{"display_type":"activity","item":"1","item_type":"activity_id","pos_id":1,"order":2},{"display_type":"query","item":"5","item_type":"sku_id","pos_id":3,"order":3},{"display_type":"query","item":"7","item_type":"sku_id","pos_id":1,"order":4},{"display_type":"promotion","item":"4","item_type":"sku_id","pos_id":4,"order":5},{"display_type":"promotion","item":"1","item_type":"sku_id","pos_id":4,"order":6},{"display_type":"query","item":"4","item_type":"sku_id","pos_id":5,"order":7}],"ts":1608304326000}

Display>>>>>>>>>>>>> {"display_type":"activity","page_id":"home","item":"1","item_type":"activity_id","pos_id":1,"order":1}
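The Start/Page/Display lines above come from BaseLogApp splitting the log stream: a record with a `start` object goes to the start stream, every other record goes to the page stream, and each element of its `displays` array is emitted on its own with the page's `page_id` added, which is why the Display line carries `"page_id":"home"`. The sketch shows that routing on already-parsed maps (no JSON library, to stay self-contained); in the Flink job this is typically done with side outputs. `LogSplitSketch` and `route` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical routing of parsed log records into start, page and display outputs.
class LogSplitSketch {
    final List<Map<String, Object>> start = new ArrayList<>();
    final List<Map<String, Object>> page = new ArrayList<>();
    final List<Map<String, Object>> display = new ArrayList<>();

    @SuppressWarnings("unchecked")
    void route(Map<String, Object> log) {
        if (log.containsKey("start")) {
            start.add(log); // start logs carry no page or displays
            return;
        }
        page.add(log);
        Object displays = log.get("displays");
        if (displays instanceof List) {
            Map<String, Object> pageInfo = (Map<String, Object>) log.get("page");
            Object pageId = pageInfo == null ? null : pageInfo.get("page_id");
            for (Object d : (List<Object>) displays) {
                // Copy each display and tag it with the page it was shown on.
                Map<String, Object> copy = new LinkedHashMap<>((Map<String, Object>) d);
                copy.put("page_id", pageId);
                display.add(copy);
            }
        }
    }

    public static void main(String[] args) {
        LogSplitSketch splitter = new LogSplitSketch();
        splitter.route(Map.of("start", Map.of("entry", "icon")));
        splitter.route(Map.of(
                "page", Map.of("page_id", "home"),
                "displays", List.of(Map.of("item", "1"), Map.of("item", "5"))));
        System.out.println(splitter.start.size() + " start, " + splitter.page.size()
                + " page, " + splitter.display.size() + " display"); // 1 start, 1 page, 2 display
    }
}
```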