PySpark对电影和用户进行聚类分析

之前的博文使用pyspark.mllib.recommendation做推荐案例,代码实现了如何为用户做电影推荐和为电影找到潜在的感兴趣用户。本篇博文介绍如何利用因子分解出的用户特征、电影特征做用户和电影的聚类分析,以看能否找到不同于已知的、有趣的新信息。

第一步:获取用户评分数据显式因式分解后的movieFactors、userFactors。

from pyspark.mllib.recommendation import ALS,Rating

#用户评分数据

rawData = sc.textFile("/Users/gao/data/ml-100k/u.data")

rawRatings = rawData.map(lambda line:line.split("\t")[:3])

#构造user-item-rating 数据

ratings = rawRatings.map(lambda line:Rating(user=int(line[0]),product=int(line[1]),rating=float(line[2])))

#评分数据放入缓存中

ratings.cache()

#模型训练

alsModel = ALS.train(ratings, rank=50, iterations=10, lambda_=0.1)

#根据因式分解出的 productFeatures\userFeatures

from pyspark.mllib.linalg import Vectors

movieFactors = alsModel.productFeatures().map(lambda product_features:(product_features[0],Vectors.dense(product_features[1])))

movieVectors = movieFactors.map(lambda line:line[1])

userFactors = alsModel.userFeatures().map(lambda user_features:(user_features[0],Vectors.dense(user_features[1])))

userVectors = userFactors.map(lambda line:line[1])

第二步,读入电影题材数据

#电影题材数据

genres = sc.textFile("/Users/gao/Desktop/Toby/5Spark-JDK/data/ml-100k/u.genre")

#电影题材数据按照 | 分割

genreMap = genres.filter(lambda line:line!='' and len(line)>4).map(lambda line:line.split('|')).map(lambda arr:(arr[1],arr[0])).collectAsMap()

print(genreMap)

#电影名称和题材标注数据

movies = sc.textFile("/Users/gao/data/ml-100k/u.item")

#获取电影名称和题材名对应数据

import numpy as np

def titles_and_genres(line,genreMap):

arr = line.split('|')

idx = int(arr[0])

title = str(arr[1])

genre_list = np.array([int(i) for i in arr[5:]])

index_list = [index for index in np.where(genre_list==1)[0]]

genresAssigned = [genreMap[str(index)] for index in index_list]

print(genresAssigned)

return (idx,(title,genresAssigned))

genreMap_bcast = sc.broadcast(genreMap)

titlesAndGenres = movies.map(lambda line:titles_and_genres(line,genreMap_bcast.value))第三步,查看特征数据是否需要规范化

#查看特征数据是否需要规范化

from pyspark.mllib.linalg.distributed import RowMatrix

movieMatrix = RowMatrix(movieVectors)

movieMatrixSummary =movieMatrix.computeColumnSummaryStatistics()

userMatrix = RowMatrix(userVectors)

userMatrixSummary =userMatrix.computeColumnSummaryStatistics()

print("Movie factors mean: {}".format(movieMatrixSummary.mean()))

print("Movie factors variance: {}".format(movieMatrixSummary.variance()))

print("User factors mean: {}".format(userMatrixSummary.mean()))

print("User factors variance: {}".format(userMatrixSummary.variance()))第四步,训练模型-KMeans聚类

from pyspark.mllib.clustering import KMeans

numClusters = 5

numIterations = 10

#训练产品特征数据的聚类 ,查看下聚类中心

movieClusterModel = KMeans.train(rdd=movieVectors, k=numClusters,maxIterations=numIterations)

movieClusterModel.clusterCenters

#预测聚类中心

predictions = movieClusterModel.predict(movieVectors)第五步,解释聚类含义

#解释聚类的含义

import math

def computeDistance(v1,v2):

v = v1-v2

return v.dot(v)

titlesWithFactors = titlesAndGenres.join(movieFactors)

#查看电影的聚类情况

def movieAssignedCluster(line):

(id,((title,genres), vector)) = line

pred = movieClusterModel.predict(vector)

clusterCentre = movieClusterModel.clusterCenters[pred]

dist = computeDistance(Vectors.dense(clusterCentre),Vectors.dense(vector))

return (id, title, genres, pred, dist)

moviesAssigned = titlesWithFactors.map(lambda line:movieAssignedCluster(line))

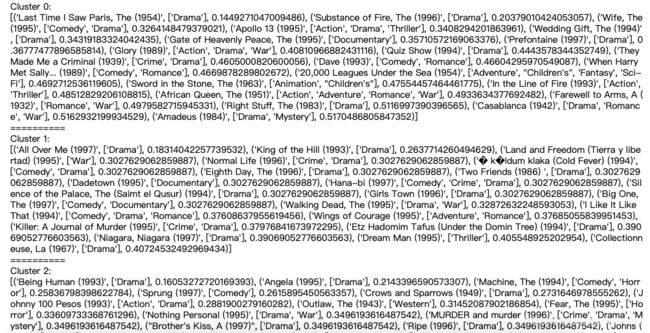

clusterAssignments = moviesAssigned.groupBy(lambda x:x[3]).collectAsMap()

#输出每个聚类中,距离聚类中心最近的Top电影

for (k,v) in clusterAssignments.items():

print('Cluster %d:'%k)

m = sorted([(x[1],x[2],x[4]) for x in v], key=lambda x:x[-1], reverse=False)

print([x for x in m[:20]])

print("==========")聚类0中的电影基本是Drama类型的,偏向爱情、浪漫的题材。

第六步,拆分训练集、测试集,调聚类中心参数K,并评价聚类模型

#拆分训练集、测试集

trainTestSplitMovies = movieVectors.randomSplit(np.array([0.6, 0.4]),123)

trainMovies = trainTestSplitMovies[0]

testMovies = trainTestSplitMovies[1]

print('Movie clustering cross-validation:')

#调参-聚类中心K

for k in [2, 3, 4, 5, 10, 20,30,40,50]:

model = KMeans.train(rdd=trainMovies, k=k,maxIterations=numIterations)

cost = model.computeCost(testMovies)

print('WCSS for k={} is {:.4f}'.format(k,cost))

随着K的增大,WCSS减小,但到K=20后,WCSS的减小趋势变缓。所以,结合需求的同时,K取在20以内比较好。

Done