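Analysis of Netty's ReplayingDecoder

Overview

- io.netty.handler.codec.ReplayingDecoder is a subclass of io.netty.handler.codec.ByteToMessageDecoder. It is an abstract class that decodes a byte stream into other messages.

- Subclasses of ReplayingDecoder must implement decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out), which does the actual decoding.

- The biggest difference between ReplayingDecoder and ByteToMessageDecoder is that ReplayingDecoder lets a subclass implement decode() and decodeLast() as if all the required bytes had already been received, without explicitly checking whether enough bytes are available.

For example, if you extend ByteToMessageDecoder directly, decode() looks roughly like this: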

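```java
public class ServerBytesFramerDecoder extends ByteToMessageDecoder {

    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {
        // Sketch modeled on the ReplayingDecoder Javadoc example (length-prefixed frame):
        // before every read, check explicitly that enough bytes have arrived.
        if (in.readableBytes() < 4) {
            return;                      // length field not complete yet
        }
        in.markReaderIndex();
        int length = in.readInt();
        if (in.readableBytes() < length) {
            in.resetReaderIndex();       // body not complete yet: undo the read and wait
            return;
        }
        out.add(in.readBytes(length));
    }
}
```

By contrast, if you extend ReplayingDecoder directly, decode() looks roughly like this: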

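```java
public class ServerBytesFramerDecoder extends ReplayingDecoder<Void> {

    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {
        // Same frame format, written as if every byte had already arrived
        out.add(in.readBytes(in.readInt()));
    }
}
```

- ReplayingDecoder can do this because it passes decode() a special ByteBuf implementation, ReplayingDecoderByteBuf: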

```java
// io/netty/handler/codec/ReplayingDecoder.java (excerpt)
public abstract class ReplayingDecoder<S> extends ByteToMessageDecoder {

    static final Signal REPLAY = Signal.valueOf(ReplayingDecoder.class, "REPLAY");

    private final ReplayingDecoderByteBuf replayable = new ReplayingDecoderByteBuf();
    private S state;
    private int checkpoint = -1;
    // ...
}

// io/netty/handler/codec/ReplayingDecoderByteBuf.java (excerpt)
final class ReplayingDecoderByteBuf extends ByteBuf {

    private static final Signal REPLAY = ReplayingDecoder.REPLAY;

    private ByteBuf buffer;
    private boolean terminated;
    private SwappedByteBuf swapped;
    // ...
}
```

- When there are not enough bytes, ReplayingDecoderByteBuf throws an Error and control returns to ReplayingDecoder. The Error type is Signal:

```java
// io.netty.util.Signal (excerpt)
public final class Signal extends Error implements Constant<Signal> {

    private static final long serialVersionUID = -221145131122459977L;
    // ...
}
```

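- When ReplayingDecoder catches this Error, it resets the buffer's readerIndex back to its initial position (the beginning of the buffer, or the last checkpoint if checkpoint() has been called). Once new data has been received into the buffer, decode() is called again.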

- The convenience of ReplayingDecoder comes at a cost. Because ReplayingDecoderByteBuf overrides ByteBuf's methods, some buffer operations are prohibited, for example:

```java
// ReplayingDecoderByteBuf (excerpt)
@Override
public byte[] array() {
    throw new UnsupportedOperationException();
}

@Override
public int arrayOffset() {
    throw new UnsupportedOperationException();
}
```

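- Performance may be somewhat worse. If the message format is complex, the subclass's decode() may have to parse the same part of the message over and over: every time new bytes arrive it tries to parse again, and if there are still not enough bytes, parsing fails and is retried on the next read.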
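To make the throw/rewind/retry mechanism and its cost concrete, here is a minimal plain-Java sketch that imitates it without Netty. It is not Netty's implementation: ReplaySketch, ReplayBuf, feed() and the 1-byte length prefix are hypothetical names and formats chosen for illustration. decode() reads as if all bytes were present; the driver catches the Error, rewinds readerIndex to where the attempt started, and retries when the next chunk arrives.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java imitation of ReplayingDecoder's replay idea; NOT Netty's code.
// ReplaySketch, ReplayBuf and feed() are hypothetical names for illustration.
final class ReplaySketch {

    // Stand-in for io.netty.util.Signal: an Error used only for control flow.
    static final class ReplaySignal extends Error {
        ReplaySignal() {
            super(null, null, false, false); // no stack trace, so throwing is cheap
        }
    }

    static final ReplaySignal REPLAY = new ReplaySignal();
    static int decodeCalls;

    // Cumulative buffer whose reads throw REPLAY instead of failing when data runs out.
    static final class ReplayBuf {
        private byte[] data = new byte[0];
        int readerIndex;

        void append(byte[] more) {
            int old = data.length;
            data = Arrays.copyOf(data, old + more.length);
            System.arraycopy(more, 0, data, old, more.length);
        }

        boolean isReadable() {
            return readerIndex < data.length;
        }

        byte readByte() {
            if (readerIndex >= data.length) {
                throw REPLAY; // not enough bytes yet
            }
            return data[readerIndex++];
        }
    }

    // Frame = 1 length byte + payload. Written as if every byte had already arrived.
    static void decode(ReplayBuf in, List<byte[]> out) {
        decodeCalls++;
        int length = in.readByte();
        byte[] payload = new byte[length];
        for (int i = 0; i < length; i++) {
            payload[i] = in.readByte();
        }
        out.add(payload);
    }

    // Driver: append new bytes, decode while data remains, rewind on REPLAY.
    static void feed(ReplayBuf buf, byte[] chunk, List<byte[]> out) {
        buf.append(chunk);
        while (buf.isReadable()) {
            int start = buf.readerIndex;
            try {
                decode(buf, out);
            } catch (ReplaySignal signal) {
                buf.readerIndex = start; // rewind, then wait for the next chunk
                return;
            }
        }
    }

    public static void main(String[] args) {
        ReplayBuf buf = new ReplayBuf();
        List<byte[]> out = new ArrayList<>();
        feed(buf, new byte[] {3, 'a'}, out); // length byte + 1 of 3 payload bytes
        feed(buf, new byte[] {'b'}, out);    // still one byte short
        feed(buf, new byte[] {'c'}, out);    // frame complete
        // prints: 1 frame(s), decode() called 3 times
        System.out.println(out.size() + " frame(s), decode() called " + decodeCalls + " times");
    }
}
```

Feeding a 3-byte payload in three chunks, decode() runs three times and only the last attempt produces a frame; the length byte is parsed again on every attempt, which is the repeated-parsing cost mentioned above.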

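Examples

A simple successful example

Scenario verified by this example:

In this example, the client sends 12 bytes of data. The server's ServerBytesFramerDecoder decodes them successfully and passes them on to the downstream ServerRegisterRequestHandler.

Server-side code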

```java
package com.thb.power.server;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.logging.LogLevel;
import io.netty.handler.logging.LoggingHandler;

/**
 * Server main class.
 * @author thb
 */
public class MainStation {

    static final int PORT = Integer.parseInt(System.getProperty("port", "22335"));

    public static void main(String[] args) throws Exception {
        // Configure the server
        EventLoopGroup bossGroup = new NioEventLoopGroup();
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        try {
            ServerBootstrap b = new ServerBootstrap();
            b.group(bossGroup, workerGroup)
             .channel(NioServerSocketChannel.class)
             .option(ChannelOption.SO_BACKLOG, 100)
             .handler(new LoggingHandler(LogLevel.INFO))
             .childHandler(new MainStationInitializer());

            // Start the server
            ChannelFuture f = b.bind(PORT).sync();

            // Wait until the server socket is closed
            f.channel().closeFuture().sync();
        } finally {
            // Shut down all event loops to terminate all threads
            bossGroup.shutdownGracefully();
            workerGroup.shutdownGracefully();
        }
    }
}
```

```java
package com.thb.power.server;

import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.socket.SocketChannel;
import io.netty.handler.logging.LogLevel;
import io.netty.handler.logging.LoggingHandler;

public class MainStationInitializer extends ChannelInitializer<SocketChannel> {

    @Override
    public void initChannel(SocketChannel ch) throws Exception {
        ChannelPipeline p = ch.pipeline();
        p.addLast(new LoggingHandler(LogLevel.INFO));
        p.addLast(new ServerBytesFramerDecoder());
        p.addLast(new ServerRegisterRequestHandler());
    }
}
```

```java
package com.thb.power.server;

import java.util.List;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.ReplayingDecoder;

public class ServerBytesFramerDecoder extends ReplayingDecoder<Void> {

    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {
        // Sketch consistent with the scenario: read 12 bytes as if they had all
        // arrived, and pass them downstream as one frame
        out.add(in.readBytes(12));
    }
}
```

```java
package com.thb.power.server;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

public class ServerRegisterRequestHandler extends ChannelInboundHandlerAdapter {

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) {
        ByteBuf m = (ByteBuf) msg;
        try {
            System.out.println("ServerRegisterRequestHandler: readableBytes: " + m.readableBytes());
        } finally {
            m.release(); // last handler to use the message, so release it to avoid a leak
        }
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        cause.printStackTrace();
        ctx.close();
    }
}
```

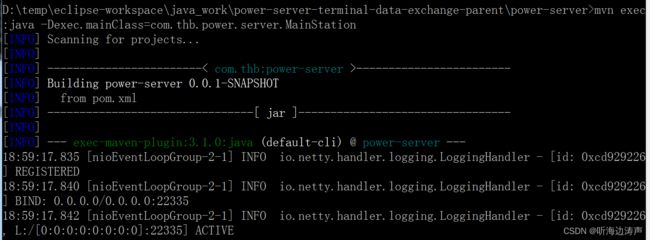

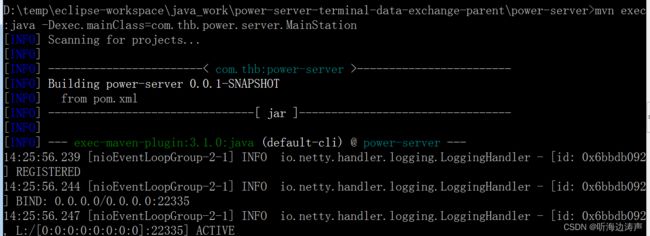

启动服务端

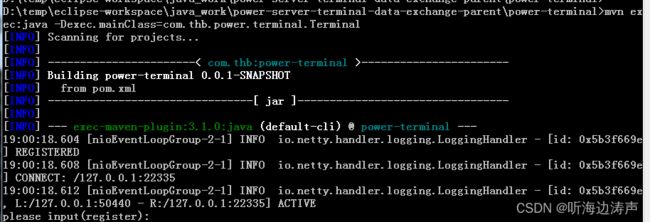

启动客户端

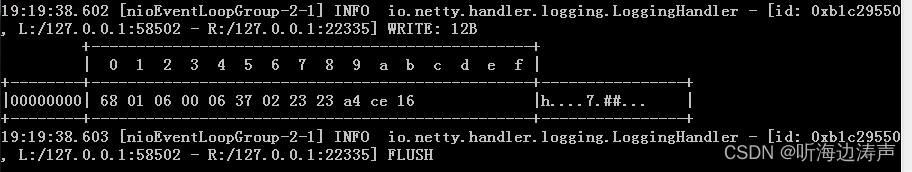

从客户端发送12个字节的业务数据

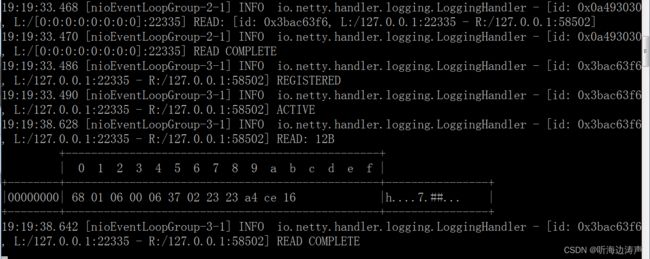

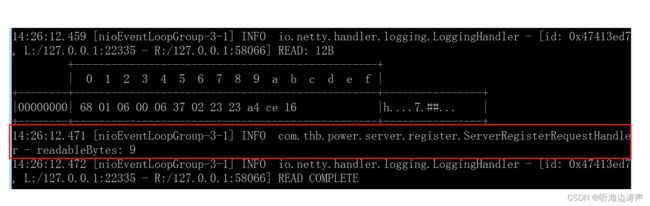

观察服务端的输出

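The server output above shows that ServerRegisterRequestHandler received 12 bytes. Because ServerRegisterRequestHandler comes after ServerBytesFramerDecoder in the pipeline, these 12 bytes were decoded by ServerBytesFramerDecoder and passed on to it. The ChannelHandlers were added to the ChannelPipeline in this order: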

```java
@Override
public void initChannel(SocketChannel ch) throws Exception {
    ChannelPipeline p = ch.pipeline();
    p.addLast(new LoggingHandler(LogLevel.INFO));
    p.addLast(new ServerBytesFramerDecoder());
    p.addLast(new ServerRegisterRequestHandler());
}
```

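The client sends fewer bytes than the server-side ReplayingDecoder implementation requires

Scenario verified by this example:

In this example, the client sends 12 bytes of data, but the server's ServerBytesFramerDecoder requires 100 bytes. Because not enough data arrives, the downstream ServerRegisterRequestHandler receives nothing.

Server-side code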

```java
package com.thb.power.server;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.logging.LogLevel;
import io.netty.handler.logging.LoggingHandler;

/**
 * Server main class.
 * @author thb
 */
public class MainStation {

    static final int PORT = Integer.parseInt(System.getProperty("port", "22335"));

    public static void main(String[] args) throws Exception {
        // Configure the server
        EventLoopGroup bossGroup = new NioEventLoopGroup();
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        try {
            ServerBootstrap b = new ServerBootstrap();
            b.group(bossGroup, workerGroup)
             .channel(NioServerSocketChannel.class)
             .option(ChannelOption.SO_BACKLOG, 100)
             .handler(new LoggingHandler(LogLevel.INFO))
             .childHandler(new MainStationInitializer());

            // Start the server
            ChannelFuture f = b.bind(PORT).sync();

            // Wait until the server socket is closed
            f.channel().closeFuture().sync();
        } finally {
            // Shut down all event loops to terminate all threads
            bossGroup.shutdownGracefully();
            workerGroup.shutdownGracefully();
        }
    }
}
```

```java
package com.thb.power.server;

import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.socket.SocketChannel;
import io.netty.handler.logging.LogLevel;
import io.netty.handler.logging.LoggingHandler;

public class MainStationInitializer extends ChannelInitializer<SocketChannel> {

    @Override
    public void initChannel(SocketChannel ch) throws Exception {
        ChannelPipeline p = ch.pipeline();
        p.addLast(new LoggingHandler(LogLevel.INFO));
        p.addLast(new ServerBytesFramerDecoder());
        p.addLast(new ServerRegisterRequestHandler());
    }
}
```

```java
package com.thb.power.server;

import java.util.List;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.ReplayingDecoder;

public class ServerBytesFramerDecoder extends ReplayingDecoder<Void> {

    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {
        // Sketch consistent with the scenario: require 100 bytes. With only 12 bytes
        // buffered, readBytes(100) triggers a replay and nothing is passed on.
        out.add(in.readBytes(100));
    }
}
```

```java
package com.thb.power.server;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

public class ServerRegisterRequestHandler extends ChannelInboundHandlerAdapter {

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) {
        ByteBuf m = (ByteBuf) msg;
        try {
            System.out.println("ServerRegisterRequestHandler: readableBytes: " + m.readableBytes());
        } finally {
            m.release(); // last handler to use the message, so release it to avoid a leak
        }
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        cause.printStackTrace();
        ctx.close();
    }
}
```

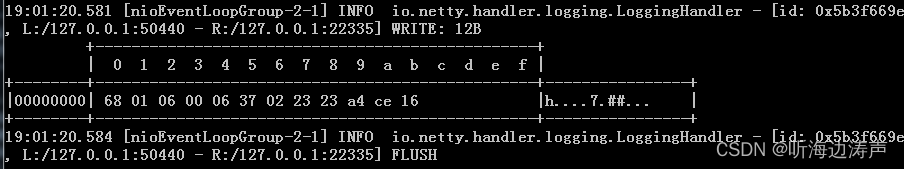

启动服务端

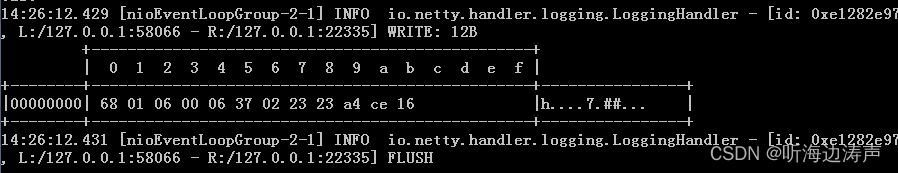

启动客户端,并发送12个字节的数据

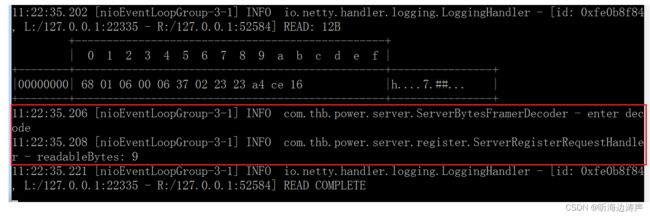

观察服务端的输出

The server output above shows that LoggingHandler did receive the 12 bytes, but ServerRegisterRequestHandler received nothing. ServerBytesFramerDecoder had not yet received enough data (it expects 100 bytes), so it passed nothing on to ServerRegisterRequestHandler.

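Passing state with checkpoint(S state)

Scenario verified by this example:

In this example, the client sends 12 bytes of data. The server-side decoder ServerBytesFramerDecoder calls checkpoint(S state) to update its state as it reads the different parts of the data, and passes the expected content on to the downstream ServerRegisterRequestHandler.

This scenario uses several ReplayingDecoder methods, so let's explain them first:

- checkpoint(S state): saves the current readerIndex of ReplayingDecoder's cumulative buffer and updates the decoder's current state.

- checkpoint(): saves the current readerIndex of ReplayingDecoder's cumulative buffer. (This scenario does not use this method; it is listed for comparison.)

- state(): returns the decoder's current state.

- state(S newState): sets the decoder's current state. (This scenario does not use this method; it is listed for comparison.)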
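The benefit of checkpoint(S state) can be imitated in plain Java. The sketch below is not Netty's implementation: CheckpointSketch, the 12-byte frame (start mark, function code, length 9, 9 content bytes) and the one-byte-per-read arrival pattern are assumptions. On a replay the buffer is rewound only to the last checkpoint, and the saved state makes the switch resume at the interrupted field, so header bytes that were already parsed are not parsed again.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java imitation of checkpoint(S state); NOT Netty's code. The class name,
// the 12-byte frame and the one-byte-per-read arrival pattern are assumptions.
final class CheckpointSketch {

    enum State { READ_START_MARK, READ_FUNCTION_CODE, READ_LENGTH, READ_CONTENT }

    static final class Replay extends Error {
        Replay() {
            super(null, null, false, false); // control-flow only, no stack trace
        }
    }

    static final Replay REPLAY = new Replay();

    private final boolean useCheckpoints;
    private byte[] data = new byte[0];
    private int readerIndex;
    private int checkpoint;                      // position to rewind to on REPLAY
    private State state = State.READ_START_MARK;
    private int length;
    int bytesRead;                               // every byte read, re-reads included
    final List<byte[]> out = new ArrayList<>();

    CheckpointSketch(boolean useCheckpoints) {
        this.useCheckpoints = useCheckpoints;
    }

    private byte readByte() {
        if (readerIndex >= data.length) {
            throw REPLAY;
        }
        bytesRead++;
        return data[readerIndex++];
    }

    // Like checkpoint(S state): remember the readerIndex and move to the next state.
    private void checkpoint(State next) {
        if (useCheckpoints) {
            checkpoint = readerIndex;
            state = next;
        }
    }

    void feed(byte... chunk) {
        int old = data.length;
        data = Arrays.copyOf(data, old + chunk.length);
        System.arraycopy(chunk, 0, data, old, chunk.length);
        while (readerIndex < data.length) {
            checkpoint = readerIndex;            // reset before each decode attempt
            try {
                decode();
            } catch (Replay r) {
                readerIndex = checkpoint;        // rewind only to the last checkpoint
                return;
            }
        }
    }

    private void decode() {
        switch (state) {
            case READ_START_MARK:
                readByte();
                checkpoint(State.READ_FUNCTION_CODE);
                // fall through
            case READ_FUNCTION_CODE:
                readByte();
                checkpoint(State.READ_LENGTH);
                // fall through
            case READ_LENGTH:
                length = readByte() & 0xFF;
                checkpoint(State.READ_CONTENT);
                // fall through
            case READ_CONTENT:
                byte[] content = new byte[length];
                for (int i = 0; i < length; i++) {
                    content[i] = readByte();
                }
                checkpoint(State.READ_START_MARK);
                out.add(content);
        }
    }

    static int bytesReadFor(boolean useCheckpoints, byte[] frame) {
        CheckpointSketch decoder = new CheckpointSketch(useCheckpoints);
        for (byte b : frame) {
            decoder.feed(b);                     // worst case: one byte per read event
        }
        if (decoder.out.size() != 1) {
            throw new IllegalStateException("frame was not decoded");
        }
        return decoder.bytesRead;
    }

    public static void main(String[] args) {
        byte[] frame = new byte[12];
        frame[2] = 9;                            // length field: 9 content bytes follow
        System.out.println("without checkpoint: " + bytesReadFor(false, frame)); // 78
        System.out.println("with checkpoint:    " + bytesReadFor(true, frame));  // 48
    }
}
```

Under this worst-case arrival pattern the sketch reads 78 bytes in total without checkpoints and 48 with them; the exact numbers depend entirely on how the bytes happen to be split across reads.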

Server-side code

```java
package com.thb.power.server;

import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.logging.LogLevel;
import io.netty.handler.logging.LoggingHandler;

/**
 * Server main class.
 * @author thb
 */
public class MainStation {

    private static final Logger logger = LogManager.getLogger();

    static final int PORT = Integer.parseInt(System.getProperty("port", "22335"));

    public static void main(String[] args) throws Exception {
        logger.traceEntry();

        // Configure the server
        EventLoopGroup bossGroup = new NioEventLoopGroup();
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        try {
            ServerBootstrap b = new ServerBootstrap();
            b.group(bossGroup, workerGroup)
             .channel(NioServerSocketChannel.class)
             .option(ChannelOption.SO_BACKLOG, 100)
             .handler(new LoggingHandler(LogLevel.INFO))
             .childHandler(new MainStationInitializer());

            // Start the server
            ChannelFuture f = b.bind(PORT).sync();

            // Wait until the server socket is closed
            f.channel().closeFuture().sync();
        } finally {
            // Shut down all event loops to terminate all threads
            bossGroup.shutdownGracefully();
            workerGroup.shutdownGracefully();
        }

        logger.traceExit();
    }
}
```

```java
package com.thb.power.server;

import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;

import com.thb.power.server.register.ServerRegisterRequestHandler;

import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.socket.SocketChannel;
import io.netty.handler.logging.LogLevel;
import io.netty.handler.logging.LoggingHandler;

public class MainStationInitializer extends ChannelInitializer<SocketChannel> {

    private static final Logger logger = LogManager.getLogger();

    @Override
    public void initChannel(SocketChannel ch) throws Exception {
        logger.traceEntry();

        ChannelPipeline p = ch.pipeline();
        p.addLast(new LoggingHandler(LogLevel.INFO));
        p.addLast(new ServerBytesFramerDecoder());
        p.addLast(new ServerRegisterRequestHandler());

        logger.traceExit();
    }
}
```

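```java
package com.thb.power.server;

import java.util.List;

import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.ReplayingDecoder;

public class ServerBytesFramerDecoder extends ReplayingDecoder<ServerBytesFramerDecoderState> {

    private static final Logger logger = LogManager.getLogger();

    private byte length;

    public ServerBytesFramerDecoder() {
        super(ServerBytesFramerDecoderState.READ_START_MARK);
    }

    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {
        logger.traceEntry();
        // Sketch consistent with the description: read the start mark, function code
        // and length, saving a checkpoint after each, then pass the content on.
        switch (state()) {
        case READ_START_MARK:
            in.readByte();                     // 1-byte start mark
            checkpoint(ServerBytesFramerDecoderState.READ_FUNCTION_CODE);
            // fall through
        case READ_FUNCTION_CODE:
            in.readByte();                     // 1-byte function code
            checkpoint(ServerBytesFramerDecoderState.READ_LENGTH);
            // fall through
        case READ_LENGTH:
            length = in.readByte();            // 1-byte content length
            checkpoint(ServerBytesFramerDecoderState.READ_CONTENT);
            // fall through
        case READ_CONTENT:
            ByteBuf content = in.readBytes(length);
            checkpoint(ServerBytesFramerDecoderState.READ_START_MARK);
            out.add(content);
            break;
        default:
            throw new Error("Shouldn't reach here.");
        }
        logger.traceExit();
    }
}
```

```java
package com.thb.power.server;

public enum ServerBytesFramerDecoderState {
    READ_START_MARK,
    READ_FUNCTION_CODE,
    READ_LENGTH,
    READ_CONTENT;
}
```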

```java
package com.thb.power.server.register;

import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

public class ServerRegisterRequestHandler extends ChannelInboundHandlerAdapter {

    private static final Logger logger = LogManager.getLogger();

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) {
        logger.traceEntry();

        ByteBuf m = (ByteBuf) msg;
        try {
            logger.info("readableBytes: " + m.readableBytes());
        } finally {
            m.release(); // last handler to use the message, so release it to avoid a leak
        }

        logger.traceExit();
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        cause.printStackTrace();
        ctx.close();
    }
}
```

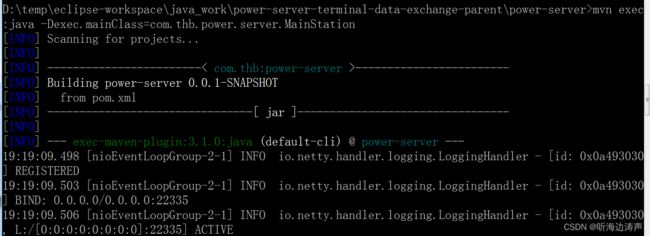

启动服务端

启动客户端,并发送12个字节的数据

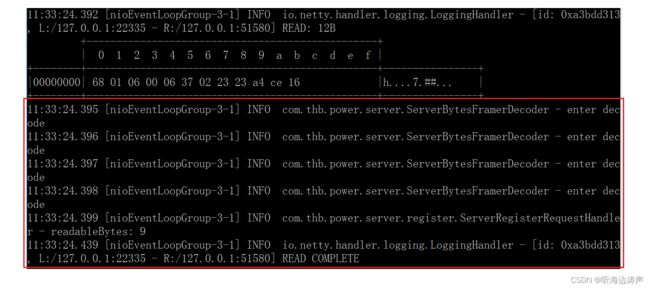

观察服务端的输出

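The server output shows that ServerRegisterRequestHandler successfully received 9 bytes.

Why 9 bytes?

Because decode() in ServerBytesFramerDecoder reads the 1-byte start mark, the 1-byte function code and the 1-byte length field, and these 3 bytes are only consumed, not passed on. Of the 12 bytes received, 12 - 3 = 9 bytes are passed downstream.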
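The 12 - 3 = 9 arithmetic can be checked with a small plain-Java sketch of the frame layout using java.nio.ByteBuffer. The layout (start mark, function code, one-byte length, content) follows the text; the class name FrameLayout and the START_MARK and FUNCTION_CODE values are made up for illustration.

```java
import java.nio.ByteBuffer;

// Hypothetical frame layout matching the description: start mark, function code,
// one-byte length, content. FrameLayout and the two constant values are made up.
final class FrameLayout {

    static final byte START_MARK = 0x68;
    static final byte FUNCTION_CODE = 0x01;

    static byte[] build(byte[] content) {
        if (content.length > 255) {
            throw new IllegalArgumentException("the length field is a single byte");
        }
        return ByteBuffer.allocate(3 + content.length)
                .put(START_MARK)
                .put(FUNCTION_CODE)
                .put((byte) content.length)
                .put(content)
                .array();
    }

    // Consumes the 3 header bytes and returns only the content, like the decoder does.
    static byte[] contentOf(byte[] frame) {
        ByteBuffer buf = ByteBuffer.wrap(frame);
        buf.get();                          // start mark
        buf.get();                          // function code
        int length = buf.get() & 0xFF;      // treat the length byte as unsigned
        byte[] content = new byte[length];
        buf.get(content);
        return content;
    }

    public static void main(String[] args) {
        byte[] frame = build(new byte[9]);
        // prints: 12 bytes in, 9 bytes passed on
        System.out.println(frame.length + " bytes in, " + contentOf(frame).length + " bytes passed on");
    }
}
```

The `& 0xFF` is a design choice worth noting: the decoder in this article stores the length in a `byte` field, so a length above 127 would come out negative unless it is read as unsigned.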

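This is only meant to illustrate checkpoint(S state). In a real application, handle the data according to the actual business requirements, for example by validating the function code.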

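Comparison: handling one state per decode() call vs. several states per call

Setup for this comparison:

- decode() in ServerBytesFramerDecoder uses checkpoint(S state) to update the state and save the buffer's current readerIndex.

- A switch statement dispatches on the state, and its case branches are ordered in the same sequence as the states.

- Since the buffer passed to decode() may already hold enough bytes, there are two options: 1) handle several states in one decode() call: when a case branch finishes, do not break out of the switch but fall through to the next state, which actually performs somewhat better; or 2) handle only one state per decode() call: end every case branch with break and handle the next state in the next call, which performs somewhat worse.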
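The difference in call counts can be reproduced without Netty. This plain-Java sketch is not Netty's code: the class name, the frame bytes and the simplified driver loop are assumptions. Like ReplayingDecoder, the driver keeps calling decode() while bytes remain, and a call that emits nothing must at least consume bytes or change state. It counts the calls when all 12 bytes are already buffered.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java imitation (NOT Netty code) of how often decode() runs when the whole
// 12-byte frame is already buffered. Names and frame content are assumptions.
final class DecodeCallCount {

    enum State { READ_START_MARK, READ_FUNCTION_CODE, READ_LENGTH, READ_CONTENT }

    private final byte[] data;
    private final boolean fallThrough;
    private int readerIndex;
    private State state = State.READ_START_MARK;
    private int length;
    private int decodeCalls;
    private final List<byte[]> out = new ArrayList<>();

    private DecodeCallCount(byte[] data, boolean fallThrough) {
        this.data = data;
        this.fallThrough = fallThrough;
    }

    // Simplified driver: call decode() while bytes remain; a call that emits nothing
    // must consume bytes or change state, otherwise decoding cannot make progress.
    private void run() {
        while (readerIndex < data.length) {
            int indexBefore = readerIndex;
            State stateBefore = state;
            int outBefore = out.size();
            decode();
            if (out.size() == outBefore && readerIndex == indexBefore && state == stateBefore) {
                throw new IllegalStateException("decode() made no progress");
            }
        }
    }

    private void decode() {
        decodeCalls++;
        switch (state) {
            case READ_START_MARK:
                readerIndex++;                          // skip the start mark
                state = State.READ_FUNCTION_CODE;
                if (!fallThrough) break;
            case READ_FUNCTION_CODE:
                readerIndex++;                          // skip the function code
                state = State.READ_LENGTH;
                if (!fallThrough) break;
            case READ_LENGTH:
                length = data[readerIndex++] & 0xFF;
                state = State.READ_CONTENT;
                if (!fallThrough) break;
            case READ_CONTENT:
                byte[] content = new byte[length];
                System.arraycopy(data, readerIndex, content, 0, length);
                readerIndex += length;
                state = State.READ_START_MARK;
                out.add(content);
                break;
        }
    }

    static int decodeCallsFor(boolean fallThrough) {
        byte[] frame = new byte[12];
        frame[2] = 9;                                   // length field: 9 content bytes
        DecodeCallCount decoder = new DecodeCallCount(frame, fallThrough);
        decoder.run();
        if (decoder.out.size() != 1 || decoder.out.get(0).length != 9) {
            throw new IllegalStateException("frame was not decoded");
        }
        return decoder.decodeCalls;
    }

    public static void main(String[] args) {
        System.out.println("approach 1 (fall through): " + decodeCallsFor(true));  // 1
        System.out.println("approach 2 (break):        " + decodeCallsFor(false)); // 4
    }
}
```

With fall-through the whole frame is handled in one call; with a break after every case the driver needs four calls, one per state.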

For the comparison, only ServerBytesFramerDecoder is listed; the other classes are unchanged.

Approach 1: handle several states in one decode() call

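```java
package com.thb.power.server;

import java.util.List;

import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.ReplayingDecoder;

public class ServerBytesFramerDecoder extends ReplayingDecoder<ServerBytesFramerDecoderState> {

    private static final Logger logger = LogManager.getLogger();

    private byte length;

    public ServerBytesFramerDecoder() {
        super(ServerBytesFramerDecoderState.READ_START_MARK);
    }

    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {
        logger.traceEntry();
        // Sketch consistent with approach 1: no break between cases, so one call can
        // run through every state when enough bytes are already buffered.
        switch (state()) {
        case READ_START_MARK:
            in.readByte();                     // 1-byte start mark
            checkpoint(ServerBytesFramerDecoderState.READ_FUNCTION_CODE);
            // fall through
        case READ_FUNCTION_CODE:
            in.readByte();                     // 1-byte function code
            checkpoint(ServerBytesFramerDecoderState.READ_LENGTH);
            // fall through
        case READ_LENGTH:
            length = in.readByte();            // 1-byte content length
            checkpoint(ServerBytesFramerDecoderState.READ_CONTENT);
            // fall through
        case READ_CONTENT:
            ByteBuf content = in.readBytes(length);
            checkpoint(ServerBytesFramerDecoderState.READ_START_MARK);
            out.add(content);
            break;
        default:
            throw new Error("Shouldn't reach here.");
        }
        logger.traceExit();
    }
}
```

Start the server, start the client, send 12 bytes to the server, and observe the server output: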

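The server output shows that decode() was entered only once: all the states of the message were handled in that single call, and the data read was passed on to the downstream ServerRegisterRequestHandler. Why ServerRegisterRequestHandler receives 9 bytes is explained above.

Approach 2: handle only one state per decode() call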

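```java
package com.thb.power.server;

import java.util.List;

import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.ReplayingDecoder;

public class ServerBytesFramerDecoder extends ReplayingDecoder<ServerBytesFramerDecoderState> {

    private static final Logger logger = LogManager.getLogger();

    private byte length;

    public ServerBytesFramerDecoder() {
        super(ServerBytesFramerDecoderState.READ_START_MARK);
    }

    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {
        logger.traceEntry();
        // Sketch consistent with approach 2: every case ends with break, so each call
        // handles one state; ReplayingDecoder calls decode() again because the state changed.
        switch (state()) {
        case READ_START_MARK:
            in.readByte();                     // 1-byte start mark
            checkpoint(ServerBytesFramerDecoderState.READ_FUNCTION_CODE);
            break;
        case READ_FUNCTION_CODE:
            in.readByte();                     // 1-byte function code
            checkpoint(ServerBytesFramerDecoderState.READ_LENGTH);
            break;
        case READ_LENGTH:
            length = in.readByte();            // 1-byte content length
            checkpoint(ServerBytesFramerDecoderState.READ_CONTENT);
            break;
        case READ_CONTENT:
            ByteBuf content = in.readBytes(length);
            checkpoint(ServerBytesFramerDecoderState.READ_START_MARK);
            out.add(content);
            break;
        default:
            throw new Error("Shouldn't reach here.");
        }
        logger.traceExit();
    }
}
```

Start the server, start the client, send 12 bytes to the server, and observe the server output: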

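The server output shows that, because every case in the switch statement breaks after handling one state, decode() is entered 4 times for the 4 states, once per state.

Checking which ByteBuf implementation is passed to ReplayingDecoder's decode()

Only the decode() snippet of ServerBytesFramerDecoder (the ReplayingDecoder implementation) is shown; the rest is omitted: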

package com.thb.power.server;

import java.util.List;

import org.apache.logging.log4j.LogManager;

import org.apache.logging.log4j.Logger;

import io.netty.buffer.ByteBuf;

import io.netty.channel.ChannelHandlerContext;

import io.netty.handler.codec.ReplayingDecoder;

public class ServerBytesFramerDecoder extends ReplayingDecoder {

private static final Logger logger = LogManager.getLogger();

private byte length;

......省略其它代码

@Override

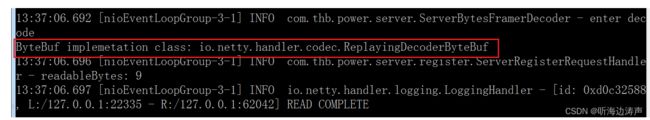

protected void decode(ChannelHandlerContext ctx, ByteBuf in, List 输出:

The output shows that the implementation class is io.netty.handler.codec.ReplayingDecoderByteBuf.