YOLOv5、YOLOv8改进: GSConv+Slim Neck

论文题目:Slim-neck by GSConv: A better design paradigm of detector architectures for autonomous vehicles

论文:https://arxiv.org/abs/2206.02424

代码:https://github.com/AlanLi1997/Slim-neck-by-GSConv

在计算机视觉领域,目标检测是一项复杂而具有挑战性的任务,特别是在车载边缘计算平台上,实时性要求较高,而且传统的大型模型往往难以满足这一需求。另一方面,基于大量深度可分离卷积层构建的轻量级模型可能无法保持足够的准确性。为了解决这些问题,本文提出了一种名为GSConv的新方法,以在降低模型复杂性的同时保持准确性。GSConv不仅可以平衡模型的速度和准确性,还引入了一种名为Slim-Neck的设计范式,以在计算成本方面实现更高的效益。实验结果表明,与原始网络相比,本文方法在性能上取得了显著的提升,例如在Tesla T4上以约100FPS的速度获得了70.9%的mAP0.5,显示了其在目标检测领域的优越性。

目标检测在无人驾驶汽车中具有重要的意义,它是使车辆能够感知周围环境的基本能力。当前,基于深度学习的目标检测算法在这一领域中占据主导地位。这些算法在检测阶段通常可分为两种类型:一阶段检测和两阶段检测。两阶段检测器在小目标的检测方面表现更佳,通过稀疏检测的原理能够实现更高的平均精度(mAP),但其速度较慢。而一阶段检测器虽然在小目标的检测和定位方面不如两阶段检测器准确,但其速度更快,这在工业应用中具有重要意义。

尽管人们常常认为模型的非线性能力与神经元的数量成正比,但这并不是完整的理解。事实上,生物大脑在信息处理方面具有强大的能力和低能耗,远超计算机。因此,简单地不断增加模型参数的数量并不能直接带来强大的性能。在目前阶段,采用轻量级设计可以有效地降低计算成本。这一设计思路主要通过使用深度可分离卷积(DSC)操作来减少参数数量和浮点运算数(FLOPs),从而取得显著效果。

1.

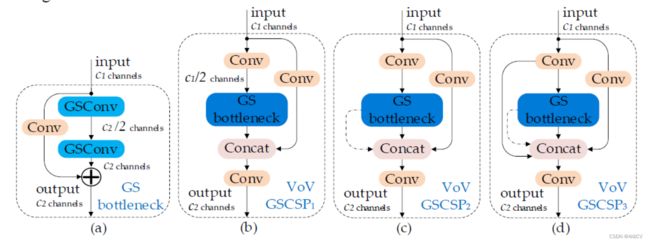

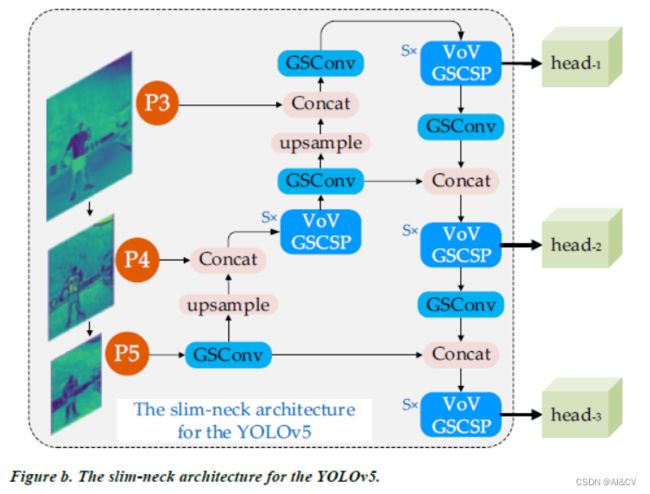

2 Slim-Neck

采用 GSConv 方法的 Slim-Neck 可缓解 DSC 缺陷对模型的负面影响,并充分利用深度可分离卷积 DSC 的优势。

---------------------------------------分割线----------------------------------------------

下面介绍一下如何在自己的网络中改进

GSConv+Slim Neck 加入common.py

###################### slim-neck-by-gsconv #### start ###############################

class GSConv(nn.Module):

# GSConv https://github.com/AlanLi1997/slim-neck-by-gsconv

def __init__(self, c1, c2, k=1, s=1, g=1, act=True):

super().__init__()

c_ = c2 // 2

self.cv1 = Conv(c1, c_, k, s, None, g, 1, act)

self.cv2 = Conv(c_, c_, 5, 1, None, c_, 1 , act)

def forward(self, x):

x1 = self.cv1(x)

x2 = torch.cat((x1, self.cv2(x1)), 1)

# shuffle

# y = x2.reshape(x2.shape[0], 2, x2.shape[1] // 2, x2.shape[2], x2.shape[3])

# y = y.permute(0, 2, 1, 3, 4)

# return y.reshape(y.shape[0], -1, y.shape[3], y.shape[4])

b, n, h, w = x2.data.size()

b_n = b * n // 2

y = x2.reshape(b_n, 2, h * w)

y = y.permute(1, 0, 2)

y = y.reshape(2, -1, n // 2, h, w)

return torch.cat((y[0], y[1]), 1)

class GSConvns(GSConv):

# GSConv with a normative-shuffle https://github.com/AlanLi1997/slim-neck-by-gsconv

def __init__(self, c1, c2, k=1, s=1, g=1, act=True):

super().__init__(c1, c2, k=1, s=1, g=1, act=True)

c_ = c2 // 2

self.shuf = nn.Conv2d(c_ * 2, c2, 1, 1, 0, bias=False)

def forward(self, x):

x1 = self.cv1(x)

x2 = torch.cat((x1, self.cv2(x1)), 1)

# normative-shuffle, TRT supported

return nn.ReLU(self.shuf(x2))

class GSBottleneck(nn.Module):

# GS Bottleneck https://github.com/AlanLi1997/slim-neck-by-gsconv

def __init__(self, c1, c2, k=3, s=1, e=0.5):

super().__init__()

c_ = int(c2*e)

# for lighting

self.conv_lighting = nn.Sequential(

GSConv(c1, c_, 1, 1),

GSConv(c_, c2, 3, 1, act=False))

self.shortcut = Conv(c1, c2, 1, 1, act=False)

def forward(self, x):

return self.conv_lighting(x) + self.shortcut(x)

class DWConv(Conv):

# Depth-wise convolution class

def __init__(self, c1, c2, k=1, s=1, act=True): # ch_in, ch_out, kernel, stride, padding, groups

super().__init__(c1, c2, k, s, g=math.gcd(c1, c2), act=act)

class GSBottleneckC(GSBottleneck):

# cheap GS Bottleneck https://github.com/AlanLi1997/slim-neck-by-gsconv

def __init__(self, c1, c2, k=3, s=1):

super().__init__(c1, c2, k, s)

self.shortcut = DWConv(c1, c2, k, s, act=False)

class VoVGSCSP(nn.Module):

# VoVGSCSP module with GSBottleneck

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c1, c_, 1, 1)

# self.gc1 = GSConv(c_, c_, 1, 1)

# self.gc2 = GSConv(c_, c_, 1, 1)

# self.gsb = GSBottleneck(c_, c_, 1, 1)

self.gsb = nn.Sequential(*(GSBottleneck(c_, c_, e=1.0) for _ in range(n)))

self.res = Conv(c_, c_, 3, 1, act=False)

self.cv3 = Conv(2 * c_, c2, 1) #

def forward(self, x):

x1 = self.gsb(self.cv1(x))

y = self.cv2(x)

return self.cv3(torch.cat((y, x1), dim=1))

class VoVGSCSPC(VoVGSCSP):

# cheap VoVGSCSP module with GSBottleneck

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):

super().__init__(c1, c2)

c_ = int(c2 * 0.5) # hidden channels

self.gsb = GSBottleneckC(c_, c_, 1, 1)

###################### slim-neck-by-gsconv #### end ###############################

GSConv+Slim Neck 加入yolo.py

args = [c1, c2, *args[1:]]

if m in {BottleneckCSP, C3, C3TR, CNeB, C3Ghost, C3x, C2f, VoVGSCSP, VoVGSCSPC}:

args.insert(2, n) # number of repeats

n = 1

if m in {

Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,

BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, CNeB, nn.ConvTranspose2d, DWConvTranspose2d, C3x, C2f,CARAFE, GSConv, VoVGSCSP, VoVGSCSPC}:

配置 yaml文件

GSConv-yolov5s.yaml

# YOLOv5 by Ultralytics, GPL-3.0 license

# Slim-neck by GSConv: A better design paradigm of detector architectures for autonomous vehicle

# Parameters

nc: 2 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

]

# YOLOv5 v6.0 head

head:

[[-1, 1, GSConv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, GSConv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17 (P3/8-small)

[-1, 1, GSConv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 20 (P4/16-medium)

[-1, 1, GSConv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]

slimneck-yolov5s.yaml

# YOLOv5 by Ultralytics, GPL-3.0 license

# Slim-neck by GSConv: A better design paradigm of detector architectures for autonomous vehicle

# Parameters

nc: 2 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

]

# YOLOv5 v6.0 head

head:

[[-1, 1, GSConv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, VoVGSCSP, [512, False]], # 13

[-1, 1, GSConv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, VoVGSCSP, [256, False]], # 17 (P3/8-small)

[-1, 1, GSConv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, VoVGSCSP, [512, False]], # 20 (P4/16-medium)

[-1, 1, GSConv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, VoVGSCSP, [1024, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]

这两种改法在缺陷检测数据集上亲测有效