Data Warehouse 4.0 Notes: User Behavior Data Collection, Part 4

1 Installing the Log-Collection Flume

[zhang@hadoop102 software]$ tar -zxvf apache-flume-1.9.0-bin.tar.gz -C /opt/module/

[zhang@hadoop102 module]$ mv apache-flume-1.9.0-bin/ flume

Delete guava-11.0.2.jar from the lib folder for compatibility with Hadoop 3.1.3:

[zhang@hadoop102 module]$ rm /opt/module/flume/lib/guava-11.0.2.jar

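Make sure Hadoop works on this node, with its environment variables configured. Once Flume's own Guava jar is gone, Flume relies on the newer Guava that Hadoop 3.1.3 puts on the classpath.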

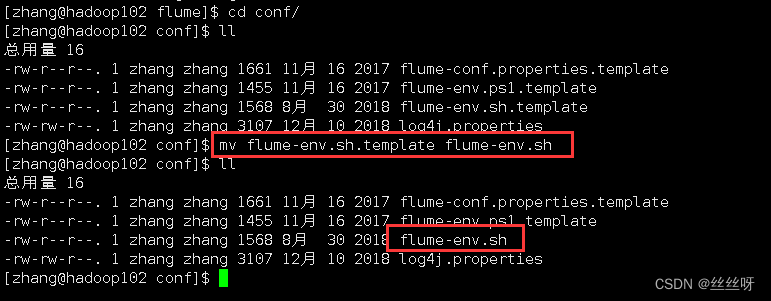

Rename flume-env.sh.template under flume/conf to flume-env.sh, then configure flume-env.sh:

[zhang@hadoop102 conf]$ mv flume-env.sh.template flume-env.sh

[zhang@hadoop102 conf]$ vi flume-env.sh

export JAVA_HOME=/opt/module/jdk1.8.0_212

Distribute it to the other nodes:

[zhang@hadoop102 module]$ xsync flume/


2 Configuring the Log-Collection Flume

The Flume configuration is as follows.

Create the file file-flume-kafka.conf in /opt/module/flume/conf:

[zhang@hadoop102 conf]$ vim file-flume-kafka.conf

Put the following content in the file. Write it down now; the interceptor part is filled in later.

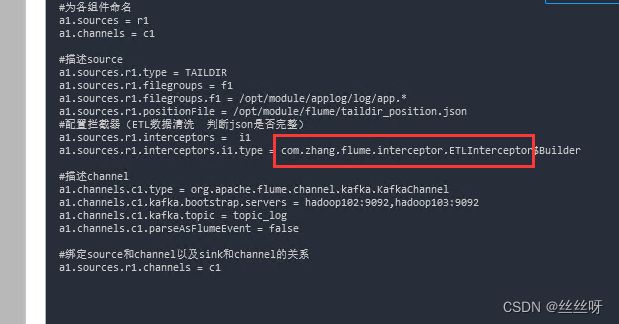

# Name the components

a1.sources = r1

a1.channels = c1

# Describe the source

a1.sources.r1.type = TAILDIR

a1.sources.r1.filegroups = f1

a1.sources.r1.filegroups.f1 = /opt/module/applog/log/app.*

a1.sources.r1.positionFile = /opt/module/flume/taildir_position.json

# Configure the interceptor (ETL cleaning: drop events whose body is not complete JSON)

a1.sources.r1.interceptors = i1

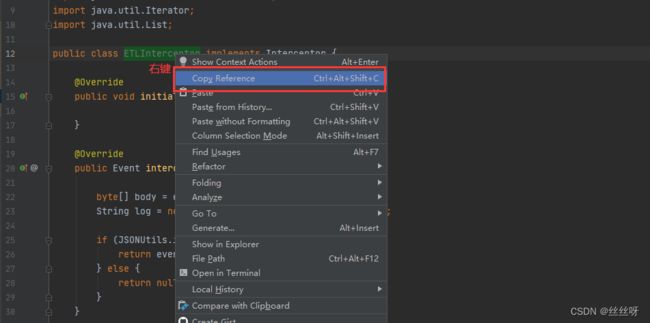

a1.sources.r1.interceptors.i1.type = com.zhang.flume.interceptor.ETLInterceptor$Builder

# Describe the channel

a1.channels.c1.type = org.apache.flume.channel.kafka.KafkaChannel

a1.channels.c1.kafka.bootstrap.servers = hadoop102:9092,hadoop103:9092

a1.channels.c1.kafka.topic = topic_log

a1.channels.c1.parseAsFlumeEvent = false

# Bind the source to the channel (there is no sink: the Kafka channel writes straight to Kafka)

a1.sources.r1.channels = c1
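For each tailed file, the TAILDIR source records the last read position in positionFile (taildir_position.json). This is how a restarted agent continues where it stopped. The Java sketch below is only a simplified model of that resume-from-offset idea, not Flume's TaildirSource code. The class `TailFromOffset` and its methods are hypothetical, and the sketch assumes a single file that is never rotated.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.ArrayList;
import java.util.List;

// Simplified model of "resume from a saved offset"; NOT Flume's TaildirSource code.
class TailFromOffset {

    // One poll: the complete lines read, plus the offset to persist for next time.
    static final class Poll {
        final List<String> lines;
        final long nextOffset;

        Poll(List<String> lines, long nextOffset) {
            this.lines = lines;
            this.nextOffset = nextOffset;
        }
    }

    // Reads the complete lines appended after `offset`. A trailing partial line
    // (no '\n' yet) is left for the next poll, so the saved offset always sits
    // on a line boundary.
    static Poll poll(String path, long offset) throws IOException {
        List<String> lines = new ArrayList<>();
        long committed = offset;
        try (RandomAccessFile raf = new RandomAccessFile(path, "r")) {
            long remaining = Math.max(0, raf.length() - offset);
            byte[] rest = new byte[(int) remaining];
            raf.seek(offset);
            raf.readFully(rest);
            int start = 0;
            for (int i = 0; i < rest.length; i++) {
                if (rest[i] == '\n') {
                    lines.add(new String(rest, start, i - start, StandardCharsets.UTF_8));
                    start = i + 1;
                    committed = offset + start;
                }
            }
        }
        return new Poll(lines, committed);
    }

    public static void main(String[] args) throws IOException {
        Path log = Files.createTempFile("app", ".log");
        Files.write(log, "a\nb\n".getBytes(StandardCharsets.UTF_8));
        Poll first = poll(log.toString(), 0);
        System.out.println(first.lines + " next=" + first.nextOffset);   // [a, b] next=4
        Files.write(log, "c\npart".getBytes(StandardCharsets.UTF_8), StandardOpenOption.APPEND);
        Poll second = poll(log.toString(), first.nextOffset);
        System.out.println(second.lines + " next=" + second.nextOffset); // [c] next=6
    }
}
```

Persisting `nextOffset` after each poll, as positionFile does for Flume, lets a restart skip lines that were already sent. This sketch also holds back a trailing partial line ("part" above) until its newline arrives.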

Create a Maven project named flume-interceptor and add the following to its pom.xml:

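<dependencies>
    <dependency>
        <groupId>org.apache.flume</groupId>
        <artifactId>flume-ng-core</artifactId>
        <version>1.9.0</version>
        <scope>provided</scope>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>1.2.62</version>
    </dependency>
</dependencies>

<build>
    <plugins>
        <plugin>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>2.3.2</version>
            <configuration>
                <source>1.8</source>
                <target>1.8</target>
            </configuration>
        </plugin>
        <plugin>
            <artifactId>maven-assembly-plugin</artifactId>
            <configuration>
                <descriptorRefs>
                    <descriptorRef>jar-with-dependencies</descriptorRef>
                </descriptorRefs>
            </configuration>
            <executions>
                <execution>
                    <id>make-assembly</id>
                    <phase>package</phase>
                    <goals>
                        <goal>single</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>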

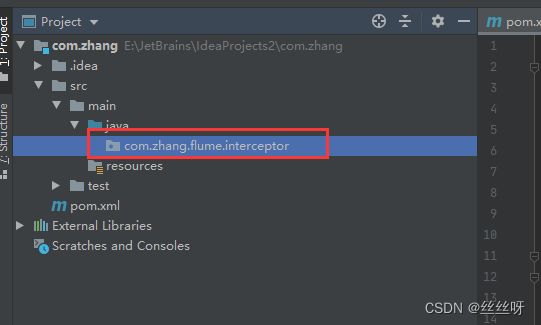

Create the package com.zhang.flume.interceptor.

Create the JSONUtils class in package com.zhang.flume.interceptor:

package com.zhang.flume.interceptor;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONException;

public class JSONUtils {

    // Returns true if fastjson can parse the line, i.e. it is complete, valid JSON
    public static boolean isJSONValidate(String log) {
        try {
            JSON.parse(log);
            return true;
        } catch (JSONException e) {
            return false;
        }
    }
}

Create the ETLInterceptor class in package com.zhang.flume.interceptor:

package com.zhang.flume.interceptor;

import org.apache.flume.Context;
import org.apache.flume.Event;
import org.apache.flume.interceptor.Interceptor;

import java.nio.charset.StandardCharsets;
import java.util.Iterator;
import java.util.List;

public class ETLInterceptor implements Interceptor {

    @Override
    public void initialize() {
    }

    // Single event: keep it if the body is valid JSON, otherwise return null (drop it)
    @Override
    public Event intercept(Event event) {
        byte[] body = event.getBody();
        String log = new String(body, StandardCharsets.UTF_8);
        if (JSONUtils.isJSONValidate(log)) {
            return event;
        } else {
            return null;
        }
    }

    // Batch: remove the rejected events from the list in place
    @Override
    public List<Event> intercept(List<Event> list) {
        Iterator<Event> iterator = list.iterator();
        while (iterator.hasNext()) {
            Event next = iterator.next();
            if (intercept(next) == null) {
                iterator.remove();
            }
        }
        return list;
    }

    // Referenced in the config as com.zhang.flume.interceptor.ETLInterceptor$Builder
    public static class Builder implements Interceptor.Builder {

        @Override
        public Interceptor build() {
            return new ETLInterceptor();
        }

        @Override
        public void configure(Context context) {
        }
    }

    @Override
    public void close() {
    }
}
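The batch method `intercept(List<Event>)` above drops bad events by calling `remove()` on the iterator, which changes the list it was given. The sketch below shows the same pattern with plain strings, so it runs without Flume or fastjson. `BatchFilter` is hypothetical. Its `LOOKS_COMPLETE` is a stand-in for `JSONUtils.isJSONValidate`: it only checks for surrounding braces and does not parse JSON.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;
import java.util.function.Predicate;

class BatchFilter {

    // Hypothetical stand-in for JSONUtils.isJSONValidate: only checks that the
    // line looks like a complete JSON object. It does NOT parse JSON.
    static final Predicate<String> LOOKS_COMPLETE = s -> {
        String t = s.trim();
        return t.startsWith("{") && t.endsWith("}");
    };

    // Same pattern as ETLInterceptor.intercept(List<Event>): remove rejected items
    // in place through the iterator, then return the same list object.
    // The list must support remove().
    static <T> List<T> filterInPlace(List<T> batch, Predicate<T> keep) {
        Iterator<T> it = batch.iterator();
        while (it.hasNext()) {
            if (!keep.test(it.next())) {
                it.remove();
            }
        }
        return batch;
    }

    public static void main(String[] args) {
        List<String> batch = new ArrayList<>(Arrays.asList("{\"ts\":1}", "{\"ts\":", "garbage"));
        System.out.println(filterInPlace(batch, LOOKS_COMPLETE)); // [{"ts":1}]
    }
}
```

Since Java 8, `batch.removeIf(keep.negate())` does the same in-place removal in one call. Either way the list has to be mutable, which is why the demo copies `Arrays.asList(...)` into an `ArrayList`.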

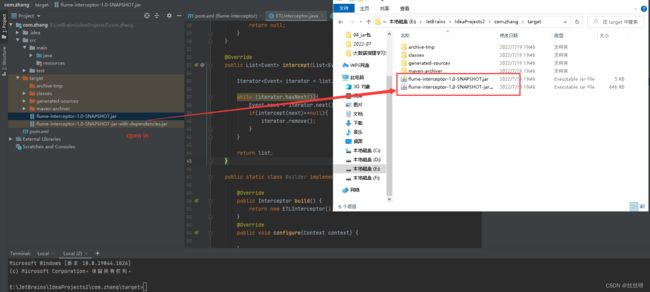

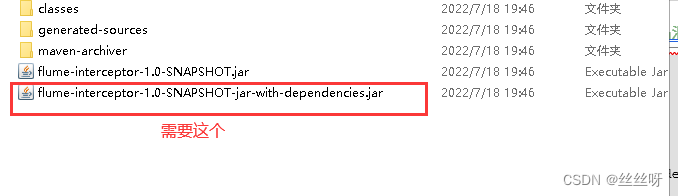

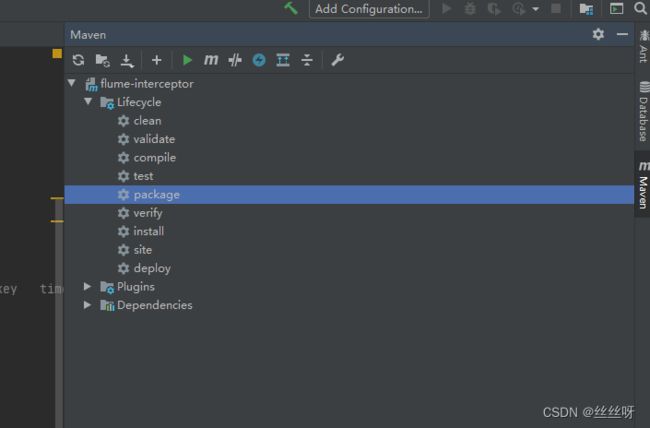

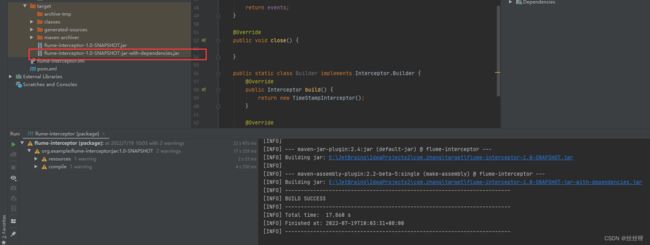

Compile and package the project:

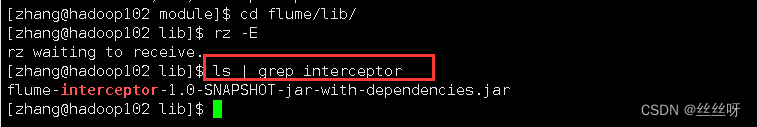

First put the packaged jar into /opt/module/flume/lib on hadoop102.

[zhang@hadoop102 module]$ cd flume/lib/

Upload the file.

Filter the listing to check that it is there:

[zhang@hadoop102 lib]$ ls | grep interceptor

Distribute it:

[zhang@hadoop102 lib]$ xsync flume-interceptor-1.0-SNAPSHOT-jar-with-dependencies.jar

Put the interceptor's class name in this spot of the config file written at the beginning:

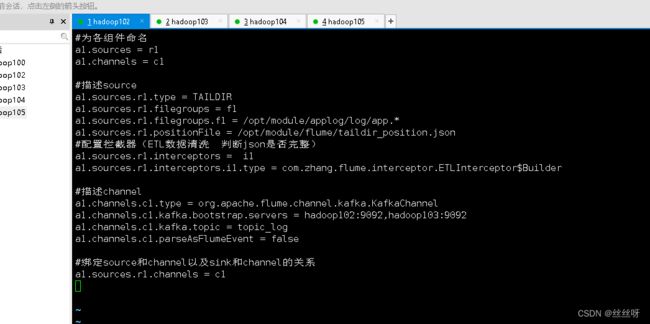

Now put the config file on the cluster:

[zhang@hadoop102 conf]$ vim file-flume-kafka.conf

Distribute it:

[zhang@hadoop102 conf]$ xsync file-flume-kafka.conf

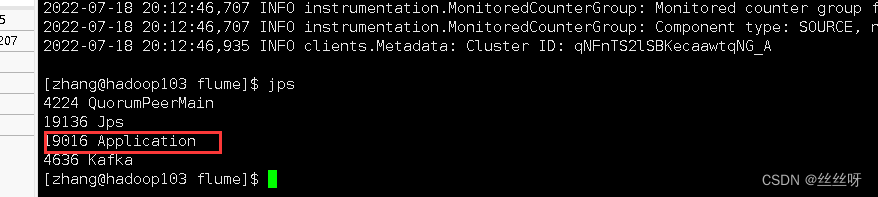

Start Flume on hadoop102 and hadoop103:

[zhang@hadoop102 flume]$ bin/flume-ng agent --name a1 --conf-file conf/file-flume-kafka.conf &

[zhang@hadoop103 flume]$ bin/flume-ng agent --name a1 --conf-file conf/file-flume-kafka.conf &

The agent on hadoop103 starts successfully as well.

3 Testing the Flume-Kafka Pipeline

Generate logs:

[zhang@hadoop102 flume]$ lg.sh

Consume the Kafka data and check whether anything shows up in the console:

[zhang@hadoop102 kafka]$ bin/kafka-console-consumer.sh \
--bootstrap-server hadoop102:9092 --from-beginning --topic topic_log

[zhang@hadoop102 ~]$ cd /opt/module/flume/

Start it in the foreground:

[zhang@hadoop102 flume]$ bin/flume-ng agent --name a1 --conf-file conf/file-flume-kafka.conf

It starts successfully, but closing the client terminal shuts the agent down again.

Add nohup:

[zhang@hadoop102 flume]$ nohup bin/flume-ng agent --name a1 --conf-file conf/file-flume-kafka.conf


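nohup ("no hang up") runs a command that ignores the hangup signal, so the process keeps running after you log out or close the terminal. If standard output is a terminal, nohup appends the output to nohup.out.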

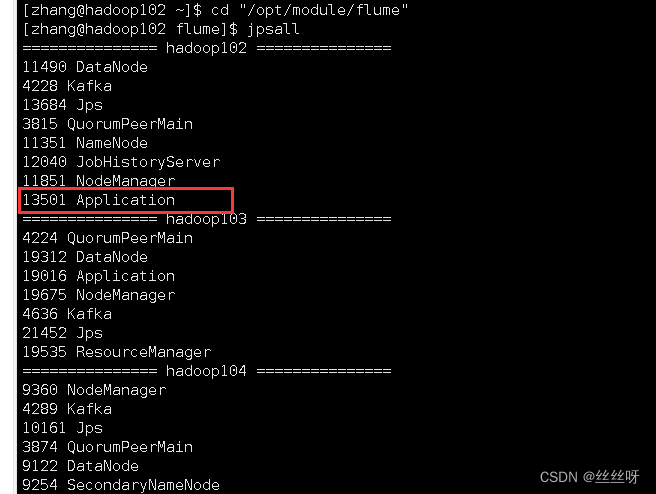

How do we stop it, and how do we find its process ID (13901 here)?

[zhang@hadoop102 kafka]$ ps -ef | grep Application

[zhang@hadoop102 kafka]$ ps -ef | grep Application | grep -v grep

[zhang@hadoop102 kafka]$ ps -ef | grep Application | grep -v grep | awk '{print $2}'

[zhang@hadoop102 kafka]$ ps -ef | grep Application | grep -v grep | awk '{print $2}' | xargs

[zhang@hadoop102 kafka]$ ps -ef | grep Application | grep -v grep | awk '{print $2}' | xargs -n1 kill -9

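"Application" (from Flume's main class, org.apache.flume.node.Application) can also match other processes, so killing by that keyword is unsafe. We need a marker that uniquely identifies this Flume agent.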
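The `ps | grep | grep -v grep | awk | xargs kill` pipeline is a filter on process command lines. On Java 9+, `ProcessHandle` can express the same search. The hypothetical `FindByMarker` sketch below only lists matching PIDs and kills nothing. Like the stop scripts below, it uses the config file name `file-flume-kafka` as the unique marker.

```java
import java.util.List;
import java.util.stream.Collectors;

// Requires Java 9+ (ProcessHandle). Lists PIDs only; it does not kill anything.
class FindByMarker {

    // True if a command line contains the marker (the role of `grep file-flume-kafka`).
    static boolean matches(String commandLine, String marker) {
        return commandLine != null && commandLine.contains(marker);
    }

    // PIDs of processes whose command line contains the marker, excluding this JVM
    // (the role of `grep -v grep`). commandLine() may be empty when the OS does not
    // expose it, for example for processes owned by other users.
    static List<Long> findPids(String marker) {
        long self = ProcessHandle.current().pid();
        return ProcessHandle.allProcesses()
                .filter(ph -> ph.pid() != self)
                .filter(ph -> ph.info().commandLine().map(c -> matches(c, marker)).orElse(false))
                .map(ProcessHandle::pid)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(findPids("file-flume-kafka"));
    }
}
```

`ProcessHandle.of(pid).ifPresent(ProcessHandle::destroy)` would then roughly correspond to `kill <pid>`, and `destroyForcibly()` to `kill -9`.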

4 Start/Stop Script for the Log-Collection Flume

Create the script f1.sh in /home/zhang/bin:

[zhang@hadoop102 bin]$ vim f1.sh

Fill in the script with the following:

#! /bin/bash
case $1 in
"start"){
    for i in hadoop102 hadoop103
    do
        echo " --------Starting collection Flume on $i-------"
        ssh $i "nohup /opt/module/flume/bin/flume-ng agent --conf-file /opt/module/flume/conf/file-flume-kafka.conf --name a1 -Dflume.root.logger=INFO,LOGFILE >/opt/module/flume/log1.txt 2>&1 &"
    done
};;
"stop"){
    for i in hadoop102 hadoop103
    do
        echo " --------Stopping collection Flume on $i-------"
        ssh $i "ps -ef | grep file-flume-kafka | grep -v grep |awk '{print \$2}' | xargs -n1 kill -9 "
    done
};;
esac
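In `f1.sh`, `>/opt/module/flume/log1.txt 2>&1` sends the agent's standard output to log1.txt and merges standard error into the same file. `&` then puts the agent in the background, and `nohup` keeps it alive after the SSH session ends. The Java sketch below covers only the redirection part, using `ProcessBuilder`, and assumes a Unix-like system with `sh`. `RedirectDemo` is a hypothetical name, and unlike `nohup ... &` it does not detach the child.

```java
import java.io.File;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;

// Illustrates `cmd > log 2>&1`: stdout goes to the file (truncated first, like '>'),
// and stderr is merged into the same stream. Assumes a Unix-like system with `sh`.
class RedirectDemo {

    static Process startWithLog(File log, String shellCommand) throws IOException {
        ProcessBuilder pb = new ProcessBuilder("sh", "-c", shellCommand);
        pb.redirectErrorStream(true); // 2>&1
        pb.redirectOutput(log);       // > log
        return pb.start();
    }

    public static void main(String[] args) throws Exception {
        File log = File.createTempFile("log1", ".txt");
        Process p = startWithLog(log, "echo started; echo warning 1>&2");
        p.waitFor();
        // Prints "started", then "warning" on the next line: both streams ended up in the file
        System.out.print(new String(Files.readAllBytes(log.toPath()), StandardCharsets.UTF_8));
    }
}
```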

[zhang@hadoop102 bin]$ chmod 777 f1.sh

Test that both stop and start work correctly.

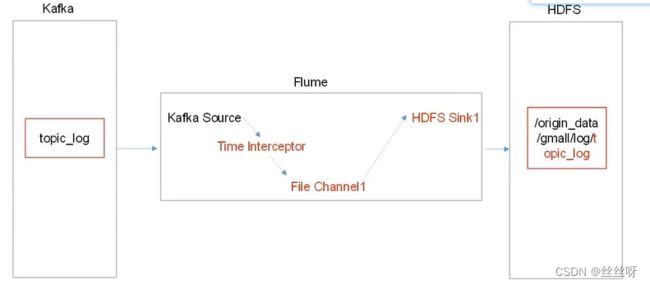

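5 Flume for Consuming Kafka Data

Consumer Flume configuration

On hadoop104, create the file kafka-flume-hdfs.conf in /opt/module/flume/conf.

(Fill in a1.sources.r1.interceptors.i1.type once the TimeStampInterceptor class below has been written and packaged.)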

[zhang@hadoop104 conf]$ vim kafka-flume-hdfs.conf

## Components

a1.sources=r1

a1.channels=c1

a1.sinks=k1

## source1

a1.sources.r1.type = org.apache.flume.source.kafka.KafkaSource

a1.sources.r1.batchSize = 5000

a1.sources.r1.batchDurationMillis = 2000

a1.sources.r1.kafka.bootstrap.servers = hadoop102:9092,hadoop103:9092,hadoop104:9092

a1.sources.r1.kafka.topics=topic_log

## Timestamp interceptor

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = com.zhang.flume.interceptor.TimeStampInterceptor$Builder

## channel1

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /opt/module/flume/checkpoint/behavior1

a1.channels.c1.dataDirs = /opt/module/flume/data/behavior1/

## sink1

a1.sinks.k1.type = hdfs

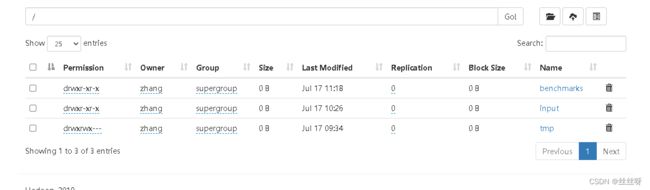

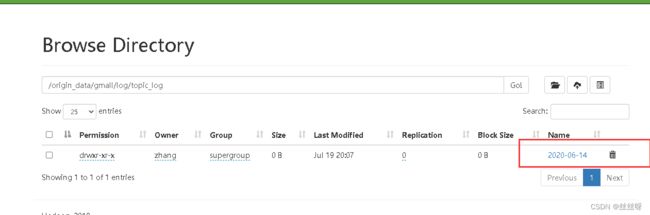

a1.sinks.k1.hdfs.path = /origin_data/gmall/log/topic_log/%Y-%m-%d

a1.sinks.k1.hdfs.filePrefix = log-

a1.sinks.k1.hdfs.round = false

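# Limit small files: roll every 10 s (rollInterval) or at 134217728 bytes = 128 MB (rollSize); rollCount = 0 disables rolling by event count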

a1.sinks.k1.hdfs.rollInterval = 10

a1.sinks.k1.hdfs.rollSize = 134217728

a1.sinks.k1.hdfs.rollCount = 0

## Write output as a plain LZO-compressed stream (lzop) instead of the default SequenceFile

a1.sinks.k1.hdfs.fileType = CompressedStream

a1.sinks.k1.hdfs.codeC = lzop

## Wire the components together

a1.sources.r1.channels = c1

a1.sinks.k1.channel= c1
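The three roll settings are independent triggers. A file is closed after `rollInterval` seconds or once it reaches `rollSize` bytes, and a value of 0 turns a trigger off, so `rollCount = 0` never rolls by event count. 134217728 is 128 * 1024 * 1024 bytes, i.e. 128 MB, the default HDFS block size. The sketch below models that rule with this file's values. It is an illustration only, not the HDFS sink's own code, and `RollPolicy` is hypothetical.

```java
// Simplified model of when the HDFS sink closes the current file and opens a new one,
// using the values from kafka-flume-hdfs.conf. For each trigger, 0 means "never roll
// on this criterion". Illustration only, not Flume's implementation.
class RollPolicy {
    static final long ROLL_INTERVAL_SEC = 10;
    static final long ROLL_SIZE_BYTES = 134_217_728L; // 128 * 1024 * 1024 = 128 MB
    static final long ROLL_COUNT = 0;                 // disabled

    static boolean shouldRoll(long secondsOpen, long bytesWritten, long eventsWritten) {
        boolean byTime = ROLL_INTERVAL_SEC > 0 && secondsOpen >= ROLL_INTERVAL_SEC;
        boolean bySize = ROLL_SIZE_BYTES > 0 && bytesWritten >= ROLL_SIZE_BYTES;
        boolean byCount = ROLL_COUNT > 0 && eventsWritten >= ROLL_COUNT;
        return byTime || bySize || byCount;
    }

    public static void main(String[] args) {
        System.out.println(ROLL_SIZE_BYTES == 128L * 1024 * 1024); // true
        System.out.println(shouldRoll(3, 1_000, 1_000_000));        // false: rollCount is off
        System.out.println(shouldRoll(10, 1_000, 1));               // true: 10 s elapsed
        System.out.println(shouldRoll(3, ROLL_SIZE_BYTES, 1));      // true: 128 MB reached
    }
}
```

A 10 s interval keeps test output visible quickly, but it produces many small files under real traffic. Raising `rollInterval` lets the size trigger do most of the work.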

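Flume timestamp interceptor (fixes the midnight drift problem)

The HDFS sink fills %Y-%m-%d in hdfs.path from each event's timestamp header. By default, the Kafka source stamps that header with the time the event is consumed on hadoop104. A log generated at 23:59:59 but consumed after midnight would therefore land in the next day's directory. The interceptor overwrites the header with the log's own ts field.

Create the TimeStampInterceptor class in package com.zhang.flume.interceptor: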

package com.zhang.flume.interceptor;

import com.alibaba.fastjson.JSONObject;
import org.apache.flume.Context;
import org.apache.flume.Event;
import org.apache.flume.interceptor.Interceptor;

import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

public class TimeStampInterceptor implements Interceptor {

    // Reused output buffer for batch interception
    private ArrayList<Event> events = new ArrayList<>();

    @Override
    public void initialize() {
    }

    // Copy the log's own "ts" field (epoch milliseconds) into the "timestamp" header,
    // which the HDFS sink uses to resolve %Y-%m-%d in hdfs.path
    @Override
    public Event intercept(Event event) {
        Map<String, String> headers = event.getHeaders();
        String log = new String(event.getBody(), StandardCharsets.UTF_8);
        JSONObject jsonObject = JSONObject.parseObject(log);
        String ts = jsonObject.getString("ts");
        headers.put("timestamp", ts);
        return event;
    }

    @Override
    public List<Event> intercept(List<Event> list) {
        events.clear();
        for (Event event : list) {
            events.add(intercept(event));
        }
        return events;
    }

    @Override
    public void close() {
    }

    public static class Builder implements Interceptor.Builder {

        @Override
        public Interceptor build() {
            return new TimeStampInterceptor();
        }

        @Override
        public void configure(Context context) {
        }
    }
}
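The header matters because `%Y-%m-%d` in `hdfs.path` is resolved from the event's `timestamp` header, in the agent's time zone unless `hdfs.timeZone` is set. The sketch below uses a hypothetical `PartitionDate` class, an assumed Asia/Shanghai time zone and made-up times. An event is generated 0.1 s before midnight but processed 0.2 s later. Resolved from the log's own `ts`, it lands in the right day; resolved from the processing time, it drifts into the next one.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.ZonedDateTime;
import java.time.format.DateTimeFormatter;

// Resolves the %Y-%m-%d part of hdfs.path from an epoch-millisecond timestamp.
// Assumption: the agent runs in Asia/Shanghai (UTC+8); the dates are made up.
class PartitionDate {
    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyy-MM-dd");

    static String partition(long epochMillis, ZoneId zone) {
        return Instant.ofEpochMilli(epochMillis).atZone(zone).format(DAY);
    }

    public static void main(String[] args) {
        ZoneId zone = ZoneId.of("Asia/Shanghai");
        // Event generated 0.1 s before midnight (the "ts" field in the log) ...
        long ts = ZonedDateTime.of(2020, 6, 14, 23, 59, 59, 900_000_000, zone)
                .toInstant().toEpochMilli();
        // ... but processed by the consumer agent 0.2 s later, after midnight.
        long processedAt = ts + 200;
        System.out.println("by log ts: " + partition(ts, zone));                   // 2020-06-14
        System.out.println("by processing time: " + partition(processedAt, zone)); // 2020-06-15
    }
}
```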

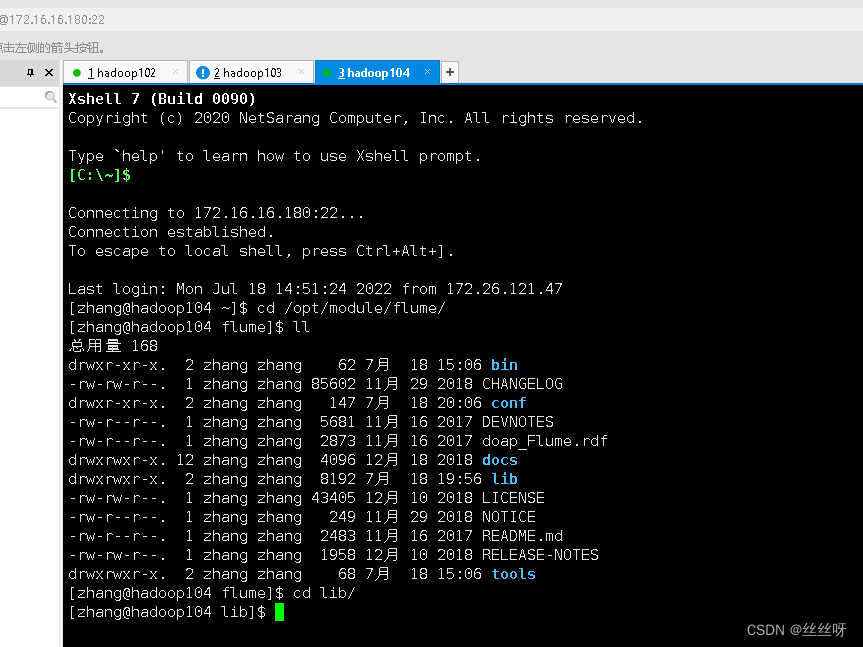

On hadoop104:

Check whether the jar is there:

[zhang@hadoop104 lib]$ ls | grep interceptor

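Delete the old jar and keep the newly uploaded one, checking the modification times with ls -l first. A file with the same name already existed, so the upload was saved with a .0 suffix. Rename it back to .jar, because Flume's lib/* classpath only picks up files ending in .jar:

[zhang@hadoop104 lib]$ rm flume-interceptor-1.0-SNAPSHOT-jar-with-dependencies.jar
[zhang@hadoop104 lib]$ mv flume-interceptor-1.0-SNAPSHOT-jar-with-dependencies.jar.0 flume-interceptor-1.0-SNAPSHOT-jar-with-dependencies.jar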

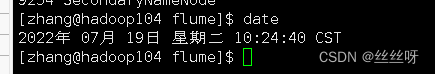

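Note: check the timestamps. Here the .0 file is the newly uploaded one, not the old one.

Fully qualified name of the new class, used with the $Builder suffix as a1.sources.r1.interceptors.i1.type in kafka-flume-hdfs.conf:

com.zhang.flume.interceptor.TimeStampInterceptor

Start Flume: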

[zhang@hadoop104 flume]$ nohup /opt/module/flume/bin/flume-ng agent --conf-file /opt/module/flume/conf/kafka-flume-hdfs.conf --name a1 -Dflume.root.logger=INFO,LOGFILE >/opt/module/flume/log2.txt 2>&1 &

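Check the current date on hadoop104:

Then check the log date configured earlier on hadoop102:

Now generate logs and check whether the date in the HDFS path is the log's own time or hadoop104's system time:

[zhang@hadoop102 applog]$ lg.sh

The directory date matches the log's own time.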

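A side note: at first my origin_data directory never appeared, even though I had followed the video step by step. I finally found the cause.

When I uploaded the jar, flume-interceptor-1.0-SNAPSHOT-jar-with-dependencies.jar was the old file and the .0 file was the new one. The old file should have been deleted and the .0 file kept, renamed to .jar. If this happens, simply go back, delete the jar, and upload it again.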

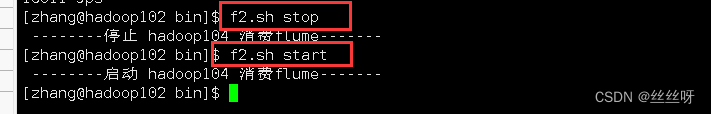

Start/stop script for the consumer Flume

Create the script f2.sh in /home/zhang/bin:

[zhang@hadoop102 bin]$ vim f2.sh

Fill in the script with the following:

#! /bin/bash
case $1 in
"start"){
    for i in hadoop104
    do
        echo " --------Starting consumer Flume on $i-------"
        ssh $i "nohup /opt/module/flume/bin/flume-ng agent --conf-file /opt/module/flume/conf/kafka-flume-hdfs.conf --name a1 -Dflume.root.logger=INFO,LOGFILE >/opt/module/flume/log2.txt 2>&1 &"
    done
};;
"stop"){
    for i in hadoop104
    do
        echo " --------Stopping consumer Flume on $i-------"
        ssh $i "ps -ef | grep kafka-flume-hdfs | grep -v grep |awk '{print \$2}' | xargs -n1 kill"
    done
};;
esac
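Unlike `f1.sh`, this stop branch uses plain `kill` (SIGTERM) rather than `kill -9` (SIGKILL). SIGTERM lets the target JVM run its shutdown hooks, which gives the agent a chance to stop its components in an orderly way; SIGKILL cannot be caught. The sketch below contrasts the two with child `sleep` processes. It assumes Linux and OpenJDK, where `Process.destroy()` sends SIGTERM and `destroyForcibly()` sends SIGKILL, and `StopDemo` is hypothetical.

```java
import java.util.concurrent.TimeUnit;

// Contrast between `kill` (used by f2.sh) and `kill -9` (used by f1.sh) on child
// `sleep` processes. Assumes a Unix-like system where destroy() sends SIGTERM
// and destroyForcibly() sends SIGKILL (true for OpenJDK on Linux).
class StopDemo {

    static boolean stop(Process p, boolean force) throws InterruptedException {
        if (force) {
            p.destroyForcibly(); // like kill -9: cannot be caught, no cleanup
        } else {
            p.destroy();         // like kill: a JVM target would run its shutdown hooks
        }
        return p.waitFor(5, TimeUnit.SECONDS); // true once the process has exited
    }

    public static void main(String[] args) throws Exception {
        Process gentle = new ProcessBuilder("sleep", "30").start();
        Process forced = new ProcessBuilder("sleep", "30").start();
        System.out.println(stop(gentle, false) + " " + stop(forced, true)); // true true
    }
}
```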

[zhang@hadoop102 bin]$ chmod 777 f2.sh

Project experience: Flume memory tuning

Adjust Flume's memory parameters (JAVA_OPTS in conf/flume-env.sh):

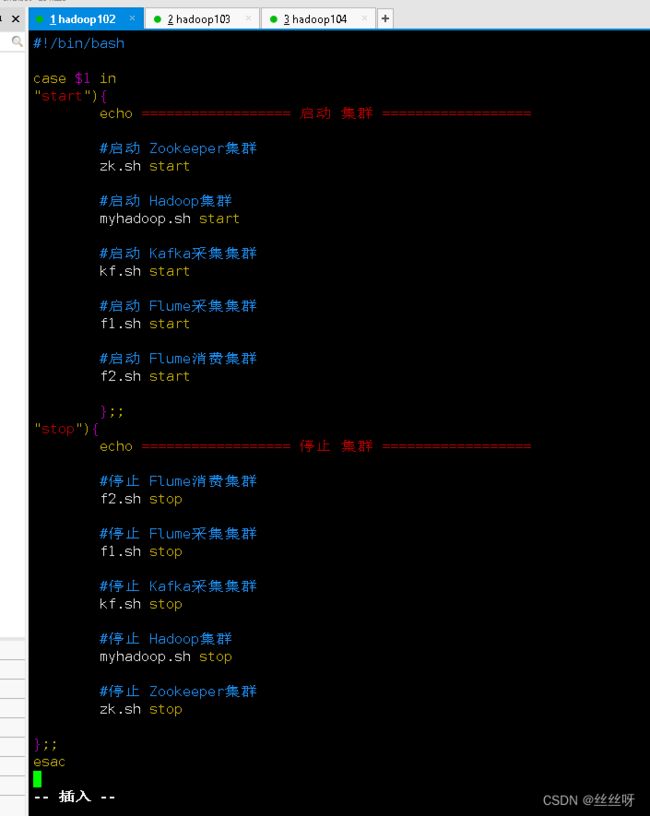

6 Start/Stop Script for the Whole Collection Pipeline

Create the script cluster.sh in /home/zhang/bin:

[zhang@hadoop102 bin]$ vim cluster.sh

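Fill in the script with the following. Mind the shutdown order: Kafka takes a while to stop, and if Zookeeper is stopped immediately after Kafka, the delay can keep Kafka from shutting down properly.

#!/bin/bash
case $1 in
"start"){
    echo ================== Starting cluster ==================
    # Start the Zookeeper cluster
    zk.sh start
    # Start the Hadoop cluster
    myhadoop.sh start
    # Start the Kafka cluster
    kf.sh start
    # Start the collection Flume agents
    f1.sh start
    # Start the consumer Flume agent
    f2.sh start
};;
"stop"){
    echo ================== Stopping cluster ==================
    # Stop the consumer Flume agent
    f2.sh stop
    # Stop the collection Flume agents
    f1.sh stop
    # Stop the Kafka cluster
    kf.sh stop
    # Stop the Hadoop cluster
    myhadoop.sh stop
    # Stop the Zookeeper cluster
    zk.sh stop
};;
esac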
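`cluster.sh` stops the components in exactly the reverse of their start order. The note above also warns that Zookeeper should not be stopped before Kafka has actually exited. The Java sketch below shows both ideas. The polling helper `waitUntil` is an assumption added for illustration, since the original script does not wait, and `ClusterOrder` is hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.function.BooleanSupplier;

// Sketch of the ordering rule in cluster.sh, plus a hypothetical "wait until the
// slow component is really gone" helper that the original script does not have.
class ClusterOrder {

    // Stop order = start order reversed.
    static List<String> stopOrder(List<String> startOrder) {
        List<String> reversed = new ArrayList<>(startOrder);
        Collections.reverse(reversed);
        return reversed;
    }

    // Polls `done` until it returns true or the timeout elapses; returns the final result.
    static boolean waitUntil(BooleanSupplier done, long timeoutMillis, long pollMillis)
            throws InterruptedException {
        long deadline = System.currentTimeMillis() + timeoutMillis;
        while (System.currentTimeMillis() < deadline) {
            if (done.getAsBoolean()) {
                return true;
            }
            Thread.sleep(pollMillis);
        }
        return done.getAsBoolean();
    }

    public static void main(String[] args) throws InterruptedException {
        List<String> start = Arrays.asList("zk.sh", "myhadoop.sh", "kf.sh", "f1.sh", "f2.sh");
        System.out.println(stopOrder(start)); // [f2.sh, f1.sh, kf.sh, myhadoop.sh, zk.sh]
        // Hypothetical check standing in for "no Kafka process is left".
        long t0 = System.currentTimeMillis();
        boolean kafkaGone = waitUntil(() -> System.currentTimeMillis() - t0 > 300, 5_000, 100);
        System.out.println(kafkaGone); // true
    }
}
```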

[zhang@hadoop102 bin]$ chmod 777 cluster.sh

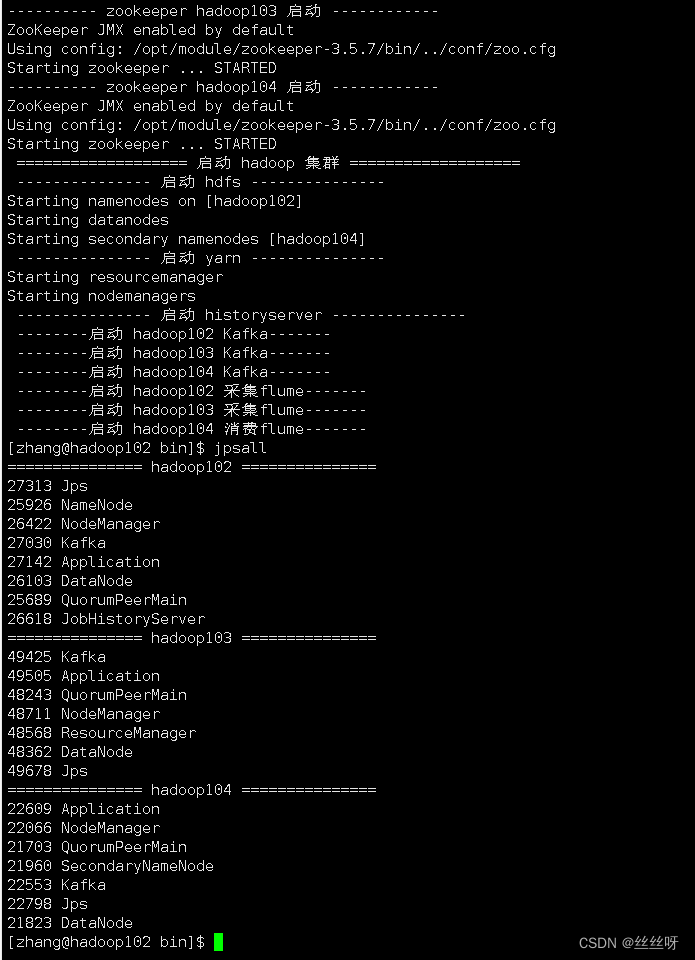

Both start and stop work correctly.

7 Common Problems and Solutions

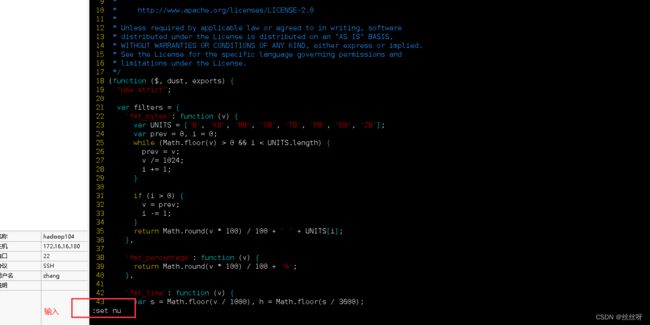

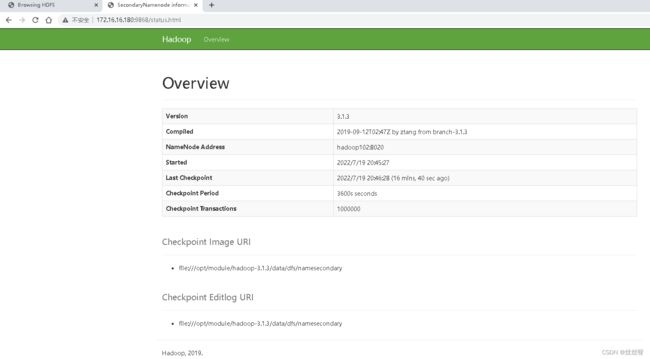

The 2NN (SecondaryNameNode) web page at http://hadoop104:9868 shows no details.

Find the file to modify:

[zhang@hadoop104 ~]$ cd /opt/module/hadoop-3.1.3/share/hadoop/hdfs/webapps/static/

[zhang@hadoop104 static]$ vim dfs-dust.js

Change line 61 to:

return new Date(Number(v)).toLocaleString();