Reproducing the AlexNet Network

AlexNet

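Learning workflow

- Read the original AlexNet paper

- Collect learning resources: video walkthroughs and blog posts

- Get familiar with the AlexNet architecture

- Reproduce the code, making sure every layer-to-layer operation in the network is understood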

AlexNet paper

Original paper: imagenet-classification-with-deep-convolutional-nn

Translation by a CSDN blogger: ImageNet Classification with Deep Convolutional Neural Networks(AlexNet) (the translation is broadly accurate; the few minor slips are easy to spot)

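Learning resources

Blog resources

- CNN经典之AlexNet网络+PyTorch复现 (Classic CNNs: AlexNet and a PyTorch reproduction)

- 一篇文章“简单”认识《卷积神经网络》 (A "simple" one-article introduction to convolutional neural networks)

- 卷积神经网络中各个卷积层的设置及输出大小计算的详细讲解 (Detailed explanation of convolution layer settings and output size calculation in CNNs)

- 对CNN感受野一些理解 (Some notes on the receptive field in CNNs)

- 卷积神经网络输出的计算公式推导证明 (Derivation and proof of the CNN output size formula)

- 感受野-Receptive Field的理解 (Understanding the receptive field)

- 深入理解AlexNet网络 (Understanding AlexNet in depth)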

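Video resources

- 霹雳吧啦Wz's Bilibili channel (search the channel for a network's name to find the corresponding walkthrough)

- AlexNet网络结构详解与花分类数据集下载 (AlexNet architecture in detail, and downloading the flower classification data set)

- 使用pytorch搭建AlexNet并训练花分类数据集 (Building AlexNet with PyTorch and training it on the flower data set)

- 使用tensorflow2搭建Alexnet (Building AlexNet with TensorFlow 2)

- 霹雳吧啦Wz-太阳花的小绿豆 CSDN blog (search the blog for a network's name to find the author's write-up)

- 霹雳吧啦Wz-github (the code can be downloaded from GitHub)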

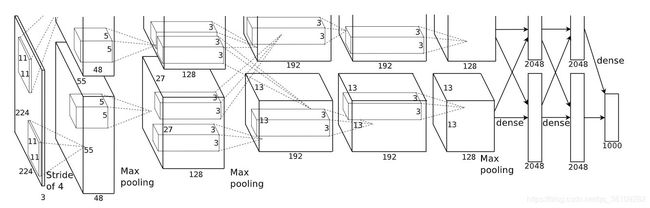

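AlexNet architecture

Two points about the architecture need particular care.

In the video linked above (the figure comes from 霹雳吧啦Wz's Bilibili lecture), the kernels of Conv2 are 5x5x96. Each kernel is three-dimensional, and there are 256 of them, so the weight tensor of the layer has size 5x5x96x256: the first three dimensions are the shape of one kernel and the last is the number of kernels. The code later in these notes uses half of these channel counts (48, 128, ...), so its Conv2 weight tensor is 5x5x48x128.

A three-dimensional kernel does not make the convolution three-dimensional. Whether a convolution is "2D" or "3D" is determined by how the kernel moves: if it slides along two directions only (that is, across a plane) and never moves along the channel axis of the feature map, the operation is a 2D convolution. tf.nn.conv3d is meant for the other case, where the kernel also slides along a third axis.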
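To make the distinction concrete, here is a minimal pure-Python sketch. It is not taken from the videos or the repository; the function name `conv2d_single_kernel` and the toy sizes are made up for illustration. One kernel spans all input channels and slides only along height and width, so a three-dimensional kernel still yields a two-dimensional output map. Stacking one such map per kernel gives the output channels.

```python
def conv2d_single_kernel(image, kernel, stride=1):
    """Slide one C x K x K kernel over a C x H x W image (no padding).

    The kernel covers every input channel at once and moves only along
    height and width, so the result is a single 2-D map.
    """
    channels = len(image)
    height, width = len(image[0]), len(image[0][0])
    k = len(kernel[0])
    out_h = (height - k) // stride + 1
    out_w = (width - k) // stride + 1
    output = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            total = 0.0
            for c in range(channels):  # summed over, never slid along
                for di in range(k):
                    for dj in range(k):
                        total += (image[c][i * stride + di][j * stride + dj]
                                  * kernel[c][di][dj])
            output[i][j] = total
    return output


# Toy input: 3 channels of 4 x 4 ones; toy kernel: 3 x 2 x 2 ones.
image = [[[1.0] * 4 for _ in range(4)] for _ in range(3)]
kernel = [[[1.0] * 2 for _ in range(2)] for _ in range(3)]
feature_map = conv2d_single_kernel(image, kernel)

print(len(feature_map), len(feature_map[0]))  # spatial size of the 2-D map
print(feature_map[0][0])                      # sum of 3 * 2 * 2 products of 1.0

# Weight count of the Conv2 layer described above (biases excluded).
conv2_weights = 5 * 5 * 96 * 256
print(conv2_weights)
```

The channel loop only accumulates into `total`; there is no output index for it, which is exactly what makes the operation a 2D convolution.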

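Code

PyTorch implementation of AlexNet

File layout

AlexNet.pth: the weights saved during training

class_indices.json: the flower class names in the JSON format shown below (the author trained on 5 flower classes, so the file holds only 5 key-value pairs)

{
    "0": "daisy",
    "1": "dandelion",
    "2": "roses",
    "3": "sunflowers",
    "4": "tulips"
}

model.py: the AlexNet model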

import torch.nn as nn
import torch


class AlexNet(nn.Module):
    def __init__(self, num_classes=1000, init_weights=False):
        super(AlexNet, self).__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 48, kernel_size=11, stride=4, padding=2),  # input[3, 224, 224]  output[48, 55, 55]
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),                  # output[48, 27, 27]
            nn.Conv2d(48, 128, kernel_size=5, padding=2),           # output[128, 27, 27]
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),                  # output[128, 13, 13]
            nn.Conv2d(128, 192, kernel_size=3, padding=1),          # output[192, 13, 13]
            nn.ReLU(inplace=True),
            nn.Conv2d(192, 192, kernel_size=3, padding=1),          # output[192, 13, 13]
            nn.ReLU(inplace=True),
            nn.Conv2d(192, 128, kernel_size=3, padding=1),          # output[128, 13, 13]
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),                  # output[128, 6, 6]
        )
        self.classifier = nn.Sequential(
            nn.Dropout(p=0.5),
            nn.Linear(128 * 6 * 6, 2048),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(2048, 2048),
            nn.ReLU(inplace=True),
            nn.Linear(2048, num_classes),
        )
        if init_weights:
            self._initialize_weights()

    def forward(self, x):
        x = self.features(x)
        x = torch.flatten(x, start_dim=1)  # keep the batch dimension, flatten the rest
        x = self.classifier(x)
        return x

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                # Kaiming (He) normal initialization, designed for ReLU layers
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                # normal distribution with mean 0 and standard deviation 0.01
                # (the third argument is the std, not the variance)
                nn.init.normal_(m.weight, 0, 0.01)
                nn.init.constant_(m.bias, 0)
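The shape comments in `features` can be checked by hand with the usual output-size formula, floor((size + 2 * padding - kernel) / stride) + 1. The sketch below is only an arithmetic check written for these notes (the helper name `conv_output_size` is an assumption, not a PyTorch function); it traces the spatial size from 224 down to the 6 x 6 map that feeds the first `nn.Linear` layer.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding) for the layers in `features` that act on the
# spatial size; ReLU layers are omitted because they leave it unchanged.
layers = [
    ("conv1", 11, 4, 2),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool3", 3, 2, 0),
]

size = 224
sizes = []
for name, kernel, stride, padding in layers:
    size = conv_output_size(size, kernel, stride, padding)
    sizes.append(size)
    print(name, size)

flattened = 128 * size * size  # input features of the first Linear layer
print(flattened)
```

For conv1 the division is not exact (217 / 4), and the floor is what produces 55 from a 224 x 224 input with padding 2.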

predict.py: classify a single image

import os
import json

import torch
from PIL import Image
from torchvision import transforms
import matplotlib.pyplot as plt

from model import AlexNet


def main():
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    # Normalize computes image = (image - mean) / std for each channel, so mean and
    # std need one entry per channel. With mean = std = 0.5 the range [0, 1] maps to [-1, 1].
    # ImageNet statistics are a common alternative:
    # transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
    data_transform = transforms.Compose(
        [transforms.Resize((224, 224)),
         transforms.ToTensor(),  # HWC image in [0, 255] -> CHW float tensor in [0, 1]
         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

    # load image
    img_path = "../tulip.jpg"
    assert os.path.exists(img_path), "file: '{}' does not exist.".format(img_path)
    img = Image.open(img_path)
    plt.imshow(img)
    # [C, H, W]
    img = data_transform(img)
    # expand batch dimension: the model expects [batch_size, n_channels, height, width]
    img = torch.unsqueeze(img, dim=0)

    # read class_indict
    json_path = './class_indices.json'
    assert os.path.exists(json_path), "file: '{}' does not exist.".format(json_path)
    with open(json_path, "r") as json_file:
        class_indict = json.load(json_file)

    # create model and move its parameters to the selected device
    model = AlexNet(num_classes=5).to(device)

    # load model weights (map_location lets GPU-trained weights load on a CPU-only machine)
    weights_path = "./AlexNet.pth"
    assert os.path.exists(weights_path), "file: '{}' does not exist.".format(weights_path)
    model.load_state_dict(torch.load(weights_path, map_location=device))

    model.eval()  # switch Dropout to inference behaviour
    with torch.no_grad():  # no gradient tracking is needed for inference
        # predict class: run the model, then squeeze away the batch dimension
        output = torch.squeeze(model(img.to(device))).cpu()
        predict = torch.softmax(output, dim=0)       # logits -> probability distribution over classes
        predict_cla = torch.argmax(predict).numpy()  # index of the most probable class

    print_res = "class: {}   prob: {:.3}".format(class_indict[str(predict_cla)],
                                                 predict[predict_cla].numpy())
    plt.title(print_res)
    print(print_res)
    plt.show()


if __name__ == '__main__':
    main()
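The two numeric steps around the model in predict.py, normalization before and softmax plus argmax after, are easy to check by hand. The sketch below is a stdlib-only illustration written for these notes; the logits are invented values, not output of the trained network.

```python
import math


def normalize(value, mean=0.5, std=0.5):
    """Per-channel normalization: (value - mean) / std."""
    return (value - mean) / std


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# ToTensor yields values in [0, 1]; with mean = std = 0.5 they land in [-1, 1].
print(normalize(0.0), normalize(0.5), normalize(1.0))

# Hypothetical logits for the five flower classes (not real model output).
classes = ["daisy", "dandelion", "roses", "sunflowers", "tulips"]
logits = [0.1, -1.2, 0.3, 0.0, 2.5]
probs = softmax(logits)
best = max(range(len(probs)), key=probs.__getitem__)  # argmax
print(classes[best], round(probs[best], 3))
```

Softmax does not change which class has the largest score; it is applied here so that the printed value can be read as a probability.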

train.py: training

import os
import json

import torch
import torch.nn as nn
from torchvision import transforms, datasets, utils
import matplotlib.pyplot as plt
import numpy as np
import torch.optim as optim
from tqdm import tqdm

from model import AlexNet


def main():
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    print("using {} device.".format(device))

    data_transform = {
        "train": transforms.Compose([transforms.RandomResizedCrop(224),
                                     transforms.RandomHorizontalFlip(),
                                     transforms.ToTensor(),
                                     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]),
        # must be (224, 224): a bare 224 only resizes the shorter side
        "val": transforms.Compose([transforms.Resize((224, 224)),
                                   transforms.ToTensor(),
                                   transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])}

    data_root = os.path.abspath(os.path.join(os.getcwd(), "../.."))  # get data root path
    image_path = os.path.join(data_root, "data_set", "flower_data")  # flower data set path
    assert os.path.exists(image_path), "{} path does not exist.".format(image_path)
    train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),
                                         transform=data_transform["train"])
    train_num = len(train_dataset)

    # {'daisy':0, 'dandelion':1, 'roses':2, 'sunflowers':3, 'tulips':4}
    flower_list = train_dataset.class_to_idx
    # invert the mapping so that predict.py can look up a class name by index
    cla_dict = dict((val, key) for key, val in flower_list.items())
    # write dict into json file
    json_str = json.dumps(cla_dict, indent=4)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

    batch_size = 32
    nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])  # number of workers
    print('Using {} dataloader workers every process'.format(nw))

    train_loader = torch.utils.data.DataLoader(train_dataset,
                                               batch_size=batch_size, shuffle=True,
                                               num_workers=nw)

    validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),
                                            transform=data_transform["val"])
    val_num = len(validate_dataset)
    validate_loader = torch.utils.data.DataLoader(validate_dataset,
                                                  batch_size=4, shuffle=False,
                                                  num_workers=nw)

    print("using {} images for training, {} images for validation.".format(train_num,
                                                                           val_num))

    # Optional: preview one validation batch
    # test_data_iter = iter(validate_loader)
    # test_image, test_label = next(test_data_iter)
    #
    # def imshow(img):
    #     img = img / 2 + 0.5  # unnormalize
    #     npimg = img.numpy()
    #     plt.imshow(np.transpose(npimg, (1, 2, 0)))
    #     plt.show()
    #
    # print(' '.join('%5s' % cla_dict[test_label[j].item()] for j in range(4)))
    # imshow(utils.make_grid(test_image))

    net = AlexNet(num_classes=5, init_weights=True)

    net.to(device)
    loss_function = nn.CrossEntropyLoss()
    # para = list(net.parameters())
    optimizer = optim.Adam(net.parameters(), lr=0.0002)

    epochs = 10
    save_path = './AlexNet.pth'
    best_acc = 0.0
    train_steps = len(train_loader)

    for epoch in range(epochs):
        # train
        net.train()  # enable Dropout
        running_loss = 0.0
        train_bar = tqdm(train_loader)
        for step, data in enumerate(train_bar):
            images, labels = data
            optimizer.zero_grad()
            outputs = net(images.to(device))
            loss = loss_function(outputs, labels.to(device))
            loss.backward()
            optimizer.step()

            # print statistics
            running_loss += loss.item()

            train_bar.desc = "train epoch[{}/{}] loss:{:.3f}".format(epoch + 1,
                                                                     epochs,
                                                                     loss.item())

        # validate
        net.eval()  # disable Dropout
        acc = 0.0  # number of correct predictions in this epoch
        with torch.no_grad():
            val_bar = tqdm(validate_loader)
            for val_data in val_bar:
                val_images, val_labels = val_data
                outputs = net(val_images.to(device))
                predict_y = torch.max(outputs, dim=1)[1]  # index of the largest logit per sample
                acc += torch.eq(predict_y, val_labels.to(device)).sum().item()

        val_accurate = acc / val_num
        print('[epoch %d] train_loss: %.3f  val_accuracy: %.3f' %
              (epoch + 1, running_loss / train_steps, val_accurate))

        # keep only the weights with the best validation accuracy so far
        if val_accurate > best_acc:
            best_acc = val_accurate
            torch.save(net.state_dict(), save_path)

    print('Finished Training')


if __name__ == '__main__':
    main()
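The PyTorch model ends with a plain `nn.Linear` layer and no softmax, because `nn.CrossEntropyLoss` expects raw logits and applies log-softmax internally. The stdlib-only sketch below shows the per-sample quantity involved; the function name and the logits are illustrative assumptions, not part of the training script.

```python
import math


def cross_entropy_from_logits(logits, target):
    """Loss for one sample: -log(softmax(logits)[target]).

    Written as logsumexp(logits) - logits[target] for numerical stability.
    """
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum_exp - logits[target]


# Hypothetical logits for one image (illustrative values only).
logits = [2.0, 0.5, -1.0, 0.0, 0.1]
loss_if_label_0 = cross_entropy_from_logits(logits, target=0)  # label matches the top logit
loss_if_label_2 = cross_entropy_from_logits(logits, target=2)  # label has the lowest logit
print(loss_if_label_0 < loss_if_label_2)

# Uniform logits over 5 classes give a loss of log(5), roughly what an
# untrained 5-class model is expected to produce.
uniform = cross_entropy_from_logits([0.0] * 5, target=3)
print(abs(uniform - math.log(5)) < 1e-12)
```

This is also why the TensorFlow version further down, which ends in a `Softmax` layer, configures its loss with `from_logits=False`.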

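Data preprocessing

File layout

data_set holds the training and validation data

train holds the training set

val holds the validation set

flower_photos holds the original, unsplit data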

split_data.py: split the original data into a training set and a validation set

import os
from shutil import copy, rmtree
import random


def mk_file(file_path: str):
    if os.path.exists(file_path):
        # if the folder already exists, delete it before recreating it
        rmtree(file_path)
    os.makedirs(file_path)


def main():
    # make the random split reproducible
    random.seed(0)

    # put 10% of the data set into the validation set
    split_rate = 0.1

    # point this at the extracted flower_photos folder
    cwd = os.getcwd()
    data_root = os.path.join(cwd, "flower_data")
    origin_flower_path = os.path.join(data_root, "flower_photos")
    assert os.path.exists(origin_flower_path), "path '{}' does not exist.".format(origin_flower_path)

    flower_class = [cla for cla in os.listdir(origin_flower_path)
                    if os.path.isdir(os.path.join(origin_flower_path, cla))]

    # create the folder that holds the training set
    train_root = os.path.join(data_root, "train")
    mk_file(train_root)
    for cla in flower_class:
        # create one folder per class
        mk_file(os.path.join(train_root, cla))

    # create the folder that holds the validation set
    val_root = os.path.join(data_root, "val")
    mk_file(val_root)
    for cla in flower_class:
        # create one folder per class
        mk_file(os.path.join(val_root, cla))

    for cla in flower_class:
        cla_path = os.path.join(origin_flower_path, cla)
        images = os.listdir(cla_path)
        num = len(images)
        # randomly sample the file names that go to the validation set
        eval_index = random.sample(images, k=int(num*split_rate))
        for index, image in enumerate(images):
            if image in eval_index:
                # copy files assigned to the validation set into its class folder
                image_path = os.path.join(cla_path, image)
                new_path = os.path.join(val_root, cla)
                copy(image_path, new_path)
            else:
                # copy files assigned to the training set into its class folder
                image_path = os.path.join(cla_path, image)
                new_path = os.path.join(train_root, cla)
                copy(image_path, new_path)
            print("\r[{}] processing [{}/{}]".format(cla, index+1, num), end="")  # processing bar
        print()

    print("processing done!")


if __name__ == '__main__':
    main()
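The split logic can be tried without touching the file system. The following sketch is a simplified stand-in for the script above: `split_names` and the file names are hypothetical, and it uses a local `random.Random` instance instead of the global `random.seed(0)` so that repeated calls give the same answer.

```python
import random


def split_names(names, split_rate=0.1, seed=0):
    """Return (train, val) lists; val holds int(len(names) * split_rate) items."""
    rng = random.Random(seed)  # local generator, so every call repeats the same split
    val = set(rng.sample(names, k=int(len(names) * split_rate)))
    train = [name for name in names if name not in val]
    return train, sorted(val)


# Hypothetical file names standing in for one class folder.
names = ["img_{:03d}.jpg".format(i) for i in range(25)]
train, val = split_names(names)
print(len(train), len(val))  # int(25 * 0.1) == 2 validation images

# Same seed, same split.
print(split_names(names) == (train, val))
```

Because `int()` truncates, a class folder with fewer than 10 images would contribute nothing to the validation set at a 10% split rate.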

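TensorFlow implementation of AlexNet

File layout

model.py: the AlexNet models (functional-API AlexNet_v1 and subclassed AlexNet_v2)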

from tensorflow.keras import layers, models, Model, Sequential


def AlexNet_v1(im_height=224, im_width=224, num_classes=1000):
    # tensors in TensorFlow are ordered NHWC
    input_image = layers.Input(shape=(im_height, im_width, 3), dtype="float32")  # output(None, 224, 224, 3)
    x = layers.ZeroPadding2D(((1, 2), (1, 2)))(input_image)                      # output(None, 227, 227, 3)
    x = layers.Conv2D(48, kernel_size=11, strides=4, activation="relu")(x)       # output(None, 55, 55, 48)
    x = layers.MaxPool2D(pool_size=3, strides=2)(x)                              # output(None, 27, 27, 48)
    x = layers.Conv2D(128, kernel_size=5, padding="same", activation="relu")(x)  # output(None, 27, 27, 128)
    x = layers.MaxPool2D(pool_size=3, strides=2)(x)                              # output(None, 13, 13, 128)
    x = layers.Conv2D(192, kernel_size=3, padding="same", activation="relu")(x)  # output(None, 13, 13, 192)
    x = layers.Conv2D(192, kernel_size=3, padding="same", activation="relu")(x)  # output(None, 13, 13, 192)
    x = layers.Conv2D(128, kernel_size=3, padding="same", activation="relu")(x)  # output(None, 13, 13, 128)
    x = layers.MaxPool2D(pool_size=3, strides=2)(x)                              # output(None, 6, 6, 128)

    x = layers.Flatten()(x)                         # output(None, 6*6*128)
    x = layers.Dropout(0.2)(x)
    x = layers.Dense(2048, activation="relu")(x)    # output(None, 2048)
    x = layers.Dropout(0.2)(x)
    x = layers.Dense(2048, activation="relu")(x)    # output(None, 2048)
    x = layers.Dense(num_classes)(x)                # output(None, num_classes)
    predict = layers.Softmax()(x)

    model = models.Model(inputs=input_image, outputs=predict)
    return model


class AlexNet_v2(Model):
    def __init__(self, num_classes=1000):
        super(AlexNet_v2, self).__init__()
        self.features = Sequential([
            layers.ZeroPadding2D(((1, 2), (1, 2))),                                 # output(None, 227, 227, 3)
            layers.Conv2D(48, kernel_size=11, strides=4, activation="relu"),        # output(None, 55, 55, 48)
            layers.MaxPool2D(pool_size=3, strides=2),                               # output(None, 27, 27, 48)
            layers.Conv2D(128, kernel_size=5, padding="same", activation="relu"),   # output(None, 27, 27, 128)
            layers.MaxPool2D(pool_size=3, strides=2),                               # output(None, 13, 13, 128)
            layers.Conv2D(192, kernel_size=3, padding="same", activation="relu"),   # output(None, 13, 13, 192)
            layers.Conv2D(192, kernel_size=3, padding="same", activation="relu"),   # output(None, 13, 13, 192)
            layers.Conv2D(128, kernel_size=3, padding="same", activation="relu"),   # output(None, 13, 13, 128)
            layers.MaxPool2D(pool_size=3, strides=2)])                              # output(None, 6, 6, 128)

        self.flatten = layers.Flatten()
        # note: these Dense layers are narrower than the 2048/2048 pair in AlexNet_v1
        self.classifier = Sequential([
            layers.Dropout(0.2),
            layers.Dense(1024, activation="relu"),                                  # output(None, 1024)
            layers.Dropout(0.2),
            layers.Dense(128, activation="relu"),                                   # output(None, 128)
            layers.Dense(num_classes),                                              # output(None, num_classes)
            layers.Softmax()
        ])

    def call(self, inputs, **kwargs):
        x = self.features(inputs)
        x = self.flatten(x)
        x = self.classifier(x)
        return x
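The Keras models pad the input explicitly with `ZeroPadding2D(((1, 2), (1, 2)))` (1 pixel on top and left, 2 on bottom and right) and then run an unpadded convolution, whereas the PyTorch model passes `padding=2` to `nn.Conv2d`. A quick arithmetic check, written for these notes with a hypothetical helper, suggests that both routes give the same 55 x 55 map:

```python
def conv_output_size(size, kernel, stride, pad_before=0, pad_after=0):
    """Output size of an unpadded convolution applied after explicit padding."""
    return (size + pad_before + pad_after - kernel) // stride + 1


# Keras: ZeroPadding2D(((1, 2), (1, 2))), then an 11x11, stride-4 conv without padding.
padded = 224 + 1 + 2
keras_out = conv_output_size(224, kernel=11, stride=4, pad_before=1, pad_after=2)

# PyTorch: Conv2d(..., kernel_size=11, stride=4, padding=2) pads 2 on each side,
# and the floor division drops the last incomplete window.
torch_out = conv_output_size(224, kernel=11, stride=4, pad_before=2, pad_after=2)

print(padded, keras_out, torch_out)
```

With 227 padded pixels the division (227 - 11) / 4 is exact, which is why the asymmetric padding is used on the Keras side.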

trainGPU.py: GPU training script

import matplotlib.pyplot as plt
from model import AlexNet_v1, AlexNet_v2
import tensorflow as tf
import json
import os
import time
import glob
import random

os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"
os.environ["CUDA_VISIBLE_DEVICES"] = "0"


def main():
    gpus = tf.config.experimental.list_physical_devices("GPU")
    if gpus:
        try:
            # allocate GPU memory on demand instead of reserving all of it up front
            for gpu in gpus:
                tf.config.experimental.set_memory_growth(gpu, True)
        except RuntimeError as e:
            print(e)
            exit(-1)

    data_root = os.path.abspath(os.path.join(os.getcwd(), "../.."))  # get data root path
    image_path = os.path.join(data_root, "data_set", "flower_data")  # flower data set path
    train_dir = os.path.join(image_path, "train")
    validation_dir = os.path.join(image_path, "val")
    assert os.path.exists(train_dir), "cannot find {}".format(train_dir)
    assert os.path.exists(validation_dir), "cannot find {}".format(validation_dir)

    # create directory for saving weights
    if not os.path.exists("save_weights"):
        os.makedirs("save_weights")

    im_height = 224
    im_width = 224
    batch_size = 32
    epochs = 10

    # class dict (sorted, because os.listdir returns names in arbitrary order
    # and the class-to-index mapping should be the same on every run)
    data_class = sorted(cla for cla in os.listdir(train_dir)
                        if os.path.isdir(os.path.join(train_dir, cla)))
    class_num = len(data_class)
    class_dict = dict((value, index) for index, value in enumerate(data_class))

    # reverse value and key of dict
    inverse_dict = dict((val, key) for key, val in class_dict.items())
    # write dict into json file
    json_str = json.dumps(inverse_dict, indent=4)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

    # load train images list
    train_image_list = glob.glob(train_dir+"/*/*.jpg")
    random.shuffle(train_image_list)
    train_num = len(train_image_list)
    assert train_num > 0, "cannot find any .jpg file in {}".format(train_dir)
    # the parent folder name of each image is its class name
    train_label_list = [class_dict[path.split(os.path.sep)[-2]] for path in train_image_list]

    # load validation images list
    val_image_list = glob.glob(validation_dir+"/*/*.jpg")
    random.shuffle(val_image_list)
    val_num = len(val_image_list)
    assert val_num > 0, "cannot find any .jpg file in {}".format(validation_dir)
    val_label_list = [class_dict[path.split(os.path.sep)[-2]] for path in val_image_list]

    print("using {} images for training, {} images for validation.".format(train_num,
                                                                           val_num))

    def process_path(img_path, label):
        label = tf.one_hot(label, depth=class_num)
        image = tf.io.read_file(img_path)
        # force 3 channels so that greyscale JPEGs also match the model input
        image = tf.image.decode_jpeg(image, channels=3)
        image = tf.image.convert_image_dtype(image, tf.float32)  # uint8 [0, 255] -> float32 [0, 1]
        image = tf.image.resize(image, [im_height, im_width])
        return image, label

    AUTOTUNE = tf.data.experimental.AUTOTUNE

    # load train dataset
    train_dataset = tf.data.Dataset.from_tensor_slices((train_image_list, train_label_list))
    train_dataset = train_dataset.shuffle(buffer_size=train_num)\
                                 .map(process_path, num_parallel_calls=AUTOTUNE)\
                                 .repeat().batch(batch_size).prefetch(AUTOTUNE)

    # load validation dataset
    val_dataset = tf.data.Dataset.from_tensor_slices((val_image_list, val_label_list))
    val_dataset = val_dataset.map(process_path, num_parallel_calls=AUTOTUNE)\
                             .repeat().batch(batch_size)

    # instantiate the model
    model = AlexNet_v1(im_height=im_height, im_width=im_width, num_classes=5)
    # model = AlexNet_v2(num_classes=5)
    # model.build((batch_size, 224, 224, 3))  # when using subclass model
    model.summary()

    # using keras low level api for training
    # from_logits=False because the model already ends with a Softmax layer
    loss_object = tf.keras.losses.CategoricalCrossentropy(from_logits=False)
    optimizer = tf.keras.optimizers.Adam(learning_rate=0.0005)

    train_loss = tf.keras.metrics.Mean(name='train_loss')
    train_accuracy = tf.keras.metrics.CategoricalAccuracy(name='train_accuracy')

    test_loss = tf.keras.metrics.Mean(name='test_loss')
    test_accuracy = tf.keras.metrics.CategoricalAccuracy(name='test_accuracy')

    @tf.function
    def train_step(images, labels):
        with tf.GradientTape() as tape:
            predictions = model(images, training=True)
            loss = loss_object(labels, predictions)
        gradients = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(gradients, model.trainable_variables))

        train_loss(loss)
        train_accuracy(labels, predictions)

    @tf.function
    def test_step(images, labels):
        predictions = model(images, training=False)
        t_loss = loss_object(labels, predictions)

        test_loss(t_loss)
        test_accuracy(labels, predictions)

    best_test_loss = float('inf')
    train_step_num = train_num // batch_size
    val_step_num = val_num // batch_size
    for epoch in range(1, epochs+1):
        train_loss.reset_states()        # clear history info
        train_accuracy.reset_states()    # clear history info
        test_loss.reset_states()         # clear history info
        test_accuracy.reset_states()     # clear history info

        t1 = time.perf_counter()
        # the datasets repeat forever, so each loop must stop after one epoch's worth of steps
        for index, (images, labels) in enumerate(train_dataset):
            train_step(images, labels)
            if index+1 == train_step_num:
                break
        print(time.perf_counter()-t1)

        for index, (images, labels) in enumerate(val_dataset):
            test_step(images, labels)
            if index+1 == val_step_num:
                break

        template = 'Epoch {}, Loss: {}, Accuracy: {}, Test Loss: {}, Test Accuracy: {}'
        print(template.format(epoch,
                              train_loss.result(),
                              train_accuracy.result() * 100,
                              test_loss.result(),
                              test_accuracy.result() * 100))
        # save only when the validation loss improves, and remember the new best value
        if test_loss.result() < best_test_loss:
            best_test_loss = test_loss.result()
            model.save_weights("./save_weights/myAlex.ckpt", save_format='tf')

    # # using keras high level api for training
    # model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=0.0005),
    #               loss=tf.keras.losses.CategoricalCrossentropy(from_logits=False),
    #               metrics=["accuracy"])
    #
    # callbacks = [tf.keras.callbacks.ModelCheckpoint(filepath='./save_weights/myAlex_{epoch}.h5',
    #                                                 save_best_only=True,
    #                                                 save_weights_only=True,
    #                                                 monitor='val_loss')]
    #
    # # tensorflow2.1 recommends using fit
    # history = model.fit(x=train_dataset,
    #                     steps_per_epoch=train_num // batch_size,
    #                     epochs=epochs,
    #                     validation_data=val_dataset,
    #                     validation_steps=val_num // batch_size,
    #                     callbacks=callbacks)


if __name__ == '__main__':
    main()
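Both datasets in trainGPU.py are built with `.repeat().batch(batch_size)`, so iterating over them never ends on its own; the `break` after `train_num // batch_size` steps is what defines an epoch. The stdlib-only sketch below imitates that behaviour with `itertools.cycle`. The `batches` helper and the toy sizes are assumptions made for illustration, not TensorFlow code.

```python
import itertools


def batches(items, batch_size):
    """Yield full batches forever, cycling over items like repeat().batch()."""
    stream = itertools.cycle(items)
    while True:
        yield [next(stream) for _ in range(batch_size)]


train_num, batch_size = 10, 4  # toy sizes, not the flower data set
train_step_num = train_num // batch_size

seen = []
for index, batch in enumerate(batches(list(range(train_num)), batch_size)):
    seen.append(batch)
    if index + 1 == train_step_num:  # without this the loop never ends
        break

print(train_step_num, seen)
```

With integer division, the last `train_num % batch_size` samples of the toy list are not counted in the epoch; the same holds for the step counts used in the script.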

predict.py: prediction script

import os
import json

from PIL import Image
import numpy as np
import matplotlib.pyplot as plt

from model import AlexNet_v1, AlexNet_v2


def main():
    im_height = 224
    im_width = 224

    # load image
    img_path = "../tulip.jpg"
    assert os.path.exists(img_path), "file: '{}' does not exist.".format(img_path)
    img = Image.open(img_path)

    # resize image to 224x224
    img = img.resize((im_width, im_height))
    plt.imshow(img)

    # scale pixel values to [0, 1], matching the preprocessing used in training
    img = np.array(img) / 255.

    # Add the image to a batch where it's the only member.
    img = (np.expand_dims(img, 0))

    # read class_indict
    json_path = './class_indices.json'
    assert os.path.exists(json_path), "file: '{}' does not exist.".format(json_path)

    with open(json_path, "r") as json_file:
        class_indict = json.load(json_file)

    # create model
    model = AlexNet_v1(num_classes=5)
    # trainGPU.py saves a TF-format checkpoint under the prefix myAlex.ckpt,
    # which is stored on disk as myAlex.ckpt.index plus data shards
    weights_path = "./save_weights/myAlex.ckpt"
    assert os.path.exists(weights_path + ".index"), "file: '{}' does not exist.".format(weights_path)
    model.load_weights(weights_path)

    # prediction (the model already ends with Softmax, so the outputs are probabilities)
    result = np.squeeze(model.predict(img))
    predict_class = np.argmax(result)

    print_res = "class: {}   prob: {:.3}".format(class_indict[str(predict_class)],
                                                 result[predict_class])
    plt.title(print_res)
    print(print_res)
    plt.show()


if __name__ == '__main__':
    main()

Next goal: reproduce the VGG network