Supervised Learning and Unsupervised Learning

To begin with, we should know that machine learning consists primarily of four major domains.

- Supervised learning: An agent or algorithm learns from labeled data.

- Unsupervised learning: An agent or algorithm learns from unlabeled data, i.e., it finds similar patterns in the dataset and groups the data accordingly.

- Semi-supervised learning: A combination of supervised and unsupervised learning, typically using a small amount of labeled data together with a larger amount of unlabeled data.

- Reinforcement learning: An agent learns patterns or behaviors by trial and error, correcting itself over and over again based on reward feedback until it evolves into a better agent.

Now let us look at the methods that fall under the unsupervised learning domain.

Clustering

The goal of clustering is to create groups of data points such that points within a cluster are similar, while points in different clusters are dissimilar.

Clustering also has its own subcategories.

1. K-means clustering

With k-means clustering, we want to partition our data points into k groups, where each point belongs to the group whose center (centroid) is nearest to it. A larger k creates smaller groups with more granularity; a smaller k means larger groups and less granularity. It can be compared to the separate crowds of people surrounding different famous people at a party: each guest gathers around the nearest celebrity, and the size of each crowd depends on how famous that person is.
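The assign-then-update loop behind k-means (Lloyd's algorithm) can be sketched in plain Python as below. This is a minimal illustration, not a production implementation: the helper names `assign` and `kmeans`, the random initialization from data points, and the toy points are all assumptions made for the example.

```python
import math
import random

def assign(points, centroids):
    # Index of the nearest centroid for every point (ties go to the lower index).
    return [min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
            for p in points]

def kmeans(points, k, max_iters=100, seed=0):
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # assumption: start from k randomly chosen data points
    for _ in range(max_iters):
        labels = assign(points, centroids)
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                # Move the centroid to the mean of the points assigned to it.
                new_centroids.append(tuple(sum(xs) / len(members) for xs in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # centroids stopped moving: converged
        centroids = new_centroids
    return centroids, assign(points, centroids)

# Hypothetical toy data: two well-separated groups of three points.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, labels = kmeans(points, k=2)
print(labels)
```

The cluster numbers themselves are arbitrary; what matters is that points sharing a label end up around the same centroid. Rerunning with a larger `k` splits the data into smaller, more granular groups.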

2. Hierarchical clustering

Hierarchical clustering is similar to regular clustering, but it focuses on building a hierarchy of nested clusters. This type of clustering is used on online shopping websites: the homepage displays broad categories for simple navigation, and clicking one of them reveals more specific categories within it. Each level down the hierarchy splits the items into smaller, more distinct clusters.
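One common way to build such a hierarchy is bottom-up (agglomerative) clustering: start with every point in its own cluster and repeatedly merge the two closest clusters. The sketch below assumes single linkage (cluster distance = distance between their closest members); the function name and data are illustrative only.

```python
import math

def agglomerative(points, linkage=min):
    # Every point starts as its own cluster (stored as a list of point indices).
    clusters = [[i] for i in range(len(points))]
    merges = []  # (members of the merged cluster, merge distance), bottom of the tree first
    while len(clusters) > 1:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Single linkage by default; pass linkage=max for complete linkage.
                d = linkage(math.dist(points[i], points[j])
                            for i in clusters[a] for j in clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merged = clusters[a] + clusters[b]
        merges.append((sorted(merged), d))
        clusters = [c for idx, c in enumerate(clusters) if idx not in (a, b)] + [merged]
    return merges

# Hypothetical 1-D items: two tight pairs and one outlier.
points = [(0,), (1,), (5,), (6,), (20,)]
for members, dist in agglomerative(points):
    print(members, dist)
```

Reading the merge list from the top down gives the "broad category, then subcategory" structure: cutting the tree at a large distance yields a few broad groups, and cutting it lower yields more specific ones.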

Dimensionality reduction

1. Principal Component Analysis

PCA is an unsupervised dimensionality-reduction method used to reduce large, high-dimensional datasets to fewer dimensions. Rather than using the original axes, it computes a new set of basis vectors, called principal components: the orthogonal directions along which the data varies the most (the eigenvectors of the data's covariance matrix). PCA remaps the space in which our data exists onto these components to make it more compressible. Keeping only the top few components yields a representation with fewer dimensions than the original.
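A minimal pure-Python sketch of PCA follows: center the data, build the covariance matrix, find its top eigenvectors by power iteration with deflation, and project onto them. Power iteration is one of several ways to get the eigenvectors (libraries typically use an SVD instead), and the function name, start vector, and toy data are assumptions for illustration.

```python
import math

def pca(X, k, iters=500):
    n, d = len(X), len(X[0])
    mean = [sum(row[j] for row in X) / n for j in range(d)]
    Xc = [[row[j] - mean[j] for j in range(d)] for row in X]  # center the data
    # Sample covariance matrix (d x d).
    cov = [[sum(r[a] * r[b] for r in Xc) / (n - 1) for b in range(d)] for a in range(d)]
    components = []
    for _ in range(k):
        # Power iteration: repeatedly multiplying by cov converges to its top eigenvector
        # (assumes the arbitrary start vector is not orthogonal to that eigenvector).
        v = [1.0 + 0.01 * j for j in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:
                break  # no variance left in the remaining directions
            v = [x / norm for x in w]
        lam = sum(v[a] * sum(cov[a][b] * v[b] for b in range(d)) for a in range(d))
        components.append(v)
        # Deflation: remove the found direction so the next pass finds the next component.
        cov = [[cov[a][b] - lam * v[a] * v[b] for b in range(d)] for a in range(d)]
    # Project each centered point onto the k components: the reduced representation.
    scores = [[sum(r[j] * c[j] for j in range(d)) for c in components] for r in Xc]
    return mean, components, scores

X = [[t, 2 * t, 2 * t] for t in range(5)]  # hypothetical 3-D points lying on one line
mean, comps, scores = pca(X, k=1)
print(comps[0], scores)
```

Because these points lie on a single line, one component captures all of their variance, so the 3-D data is compressed to one number per point with no loss.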

2. K-nearest neighbor

How do you determine the price of a house in a particular locality? We would take the average price of the houses in the nearby area and use it as the approximate price of the house we are about to buy. In the same way, k-NN labels a test data point based on the k nearest samples in its neighborhood: it takes the mean of their values if the target variable is continuous, and the mode (majority vote) if it is categorical. Because those neighbors must already carry labels, k-NN is usually classed as a supervised method rather than as a dimensionality-reduction technique.
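The prediction rule described above fits in a few lines. The sketch below is an illustration with a hypothetical `knn_predict` helper and made-up house data; it uses Euclidean distance, which is an assumption (other distance metrics are also common).

```python
import math
from collections import Counter
from statistics import mean

def knn_predict(train, query, k, categorical=False):
    # train: list of (feature_vector, target) pairs; query: a feature vector.
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    targets = [target for _, target in neighbors]
    if categorical:
        return Counter(targets).most_common(1)[0][0]  # mode (majority vote)
    return mean(targets)  # mean for continuous targets

# Hypothetical houses: (map coordinates, price in thousands).
houses = [((0, 0), 100), ((1, 0), 120), ((0, 1), 110), ((10, 10), 300), ((11, 10), 320)]
print(knn_predict(houses, (0.5, 0.5), k=3))  # 110
```

The two distant houses never enter the estimate because only the three nearest neighbors are averaged.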

Applications of k-NN:

- Supporting new methods of fraud detection.

- Estimating house prices or local temperatures from nearby data points.

- Imputing missing values in training data.

3. t-Distributed Stochastic Neighbor Embedding (t-SNE)

t-SNE is an algorithm that reduces a high-dimensional dataset to a low-dimensional map (usually 2-D or 3-D, for plotting) while preserving the neighborhood structure of the original data: points that are close in the original space stay close in the map. It works by computing the similarity between every pair of points.

To do this, t-SNE measures the distance from a point of interest to every other point and places those distances on a normal (Gaussian) distribution curve centered on the point of interest. The height of the curve at each distance gives that point's similarity score, and the scores are normalized so that they sum to 1.

Note: A normal distribution curve is used so that distant points get low similarity values and close points get high similarity values.
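Written as a formula, the normalized similarity of point $x_j$ to the point of interest $x_i$ is the Gaussian height at their distance divided by the sum over all other points $k$. Here $\sigma_i$ is the width of the curve for point $i$; in standard t-SNE it is chosen separately for each point so that the distribution matches a user-set perplexity.

$$
p_{j \mid i} = \frac{\exp\left(-\lVert x_i - x_j \rVert^2 / 2\sigma_i^2\right)}{\sum_{k \neq i} \exp\left(-\lVert x_i - x_k \rVert^2 / 2\sigma_i^2\right)}
$$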

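Next, t-SNE places the data points on a number line (or in a low-dimensional space) at random positions and measures their similarities there using a Student's t-distribution, which gives the method its name. It then moves the points little by little, using gradient descent, so that the low-dimensional similarities match the original high-dimensional ones, until the points are clustered properly in the lower dimension.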
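The whole procedure can be sketched on a number line as follows. This is a deliberately simplified version, not the full algorithm: it assumes one fixed Gaussian width instead of a per-point perplexity search, and it omits the momentum and early exaggeration that real t-SNE implementations use. The function names and the toy dataset are illustrative. The gradient is the standard t-SNE one, $4\sum_j (p_{ij} - q_{ij})(y_i - y_j)(1 + (y_i - y_j)^2)^{-1}$.

```python
import math
import random

def gaussian_affinities(X, sigma=1.0):
    # p_{j|i}: normal curve centered on point i (fixed sigma is a simplifying assumption).
    n = len(X)
    cond = []
    for i in range(n):
        w = [0.0 if j == i else math.exp(-math.dist(X[i], X[j]) ** 2 / (2 * sigma ** 2))
             for j in range(n)]
        total = sum(w)
        cond.append([x / total for x in w])
    # Symmetrize so that P sums to 1 over all pairs.
    return [[(cond[i][j] + cond[j][i]) / (2 * n) for j in range(n)] for i in range(n)]

def student_t_affinities(Y):
    # q_ij on the number line: Student t-distribution with one degree of freedom.
    n = len(Y)
    num = [[0.0 if i == j else 1.0 / (1.0 + (Y[i] - Y[j]) ** 2) for j in range(n)]
           for i in range(n)]
    z = sum(map(sum, num))
    return [[x / z for x in row] for row in num], num

def kl_divergence(P, Q):
    # Mismatch between high- and low-dimensional similarities (the cost t-SNE minimizes).
    return sum(p * math.log(p / q)
               for prow, qrow in zip(P, Q) for p, q in zip(prow, qrow) if p > 0)

def tsne_1d(X, iters=2000, lr=0.2, seed=0):
    P = gaussian_affinities(X)
    rng = random.Random(seed)
    Y = [rng.uniform(-1.0, 1.0) for _ in X]  # random positions on a number line
    history = []
    for _ in range(iters):
        Q, num = student_t_affinities(Y)
        history.append(kl_divergence(P, Q))
        n = len(Y)
        grads = [4 * sum((P[i][j] - Q[i][j]) * (Y[i] - Y[j]) * num[i][j] for j in range(n))
                 for i in range(n)]
        Y = [y - lr * g for y, g in zip(Y, grads)]  # move every point a little
    Q, _ = student_t_affinities(Y)
    history.append(kl_divergence(P, Q))
    return Y, history

# Hypothetical data: two tight groups of three points in 3-D.
X = [(0, 0, 0), (0.5, 0, 0), (0, 0.5, 0), (10, 10, 10), (10.5, 10, 10), (10, 10.5, 10)]
Y, history = tsne_1d(X)
print([round(y, 2) for y in Y])
print(history[0], history[-1])
```

The falling cost in `history` is the "moving points little by little" step in action: each update nudges the 1-D positions so their similarities look more like the 3-D ones.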

Generative modeling

1. Generative adversarial network

A generative adversarial network (GAN) is a deep-learning-based generative model. Generative models learn the distribution of their training data so that they can produce new samples from it, and they are typically trained without labels, i.e., with unsupervised learning. A GAN is a system in which two neural networks compete with each other to generate new variations of the data in a dataset.

It has a generator model and a discriminator model. The generator takes a random noise vector as input and produces a sample of data, learning over the course of training to mimic the distribution of the real data. The discriminator estimates the probability that a given sample came from the real data rather than from the generator, and in doing so learns the boundary between real and generated samples.
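The original GAN formulation (Goodfellow et al., 2014) expresses this competition as a two-player minimax game. Here $p_{\text{data}}$ is the real data distribution, $p_z$ is the noise distribution fed to the generator $G$, and $D(x)$ is the discriminator's estimated probability that $x$ is real:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to maximize $V$ by scoring real samples near 1 and generated ones near 0, while the generator tries to minimize it by producing samples the discriminator scores as real.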

Applications of GANs:

- They are used for image manipulation and generation.

- They can be deployed for tasks related to understanding risk and recovery in healthcare.

- They are used in drug research to produce new chemical structures from existing ones.

- The Google Brain project is an interesting application of GANs.

The main advantage of GANs is that they can generate additional data when little data is available, without any human supervision.

2. Deep Convolutional Generative Adversarial Network (DCGAN)

In a DCGAN, the generator uses convolutional layers (transposed convolutions, which upsample) between the input noise vector and the output image. The discriminator uses a regular convolutional network to classify images as generated or real. The DCGAN architecture follows these guidelines:

- Pooling layers are replaced with strided convolutions in the discriminator and fractionally strided (transposed) convolutions in the generator.

- Batch normalization is used in both the generator and the discriminator.

- Fully connected hidden layers are removed.

- ReLU is used as the activation function for all layers in the generator except the output layer, which uses Tanh.

- Leaky ReLU is used as the activation function for all layers in the discriminator.

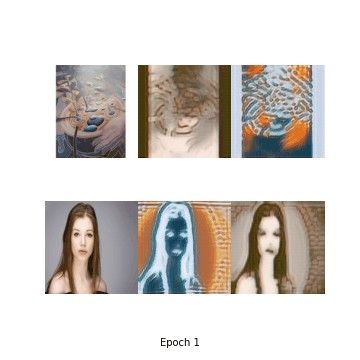
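Replacing pooling with strided and transposed convolutions means the layers themselves change the spatial size. The sketch below uses the standard output-size formulas (dilation 1, as documented for common frameworks' 2-D convolution layers) to trace a generator growing a 4x4 map to 64x64 and a discriminator shrinking 64x64 back to 4x4. The 4x4 kernels, stride 2, padding 1, and the 64x64 image size are assumed settings chosen for illustration.

```python
def conv_out(size, kernel, stride, padding):
    # Output size of a strided convolution: the discriminator downsamples this way.
    return (size + 2 * padding - kernel) // stride + 1

def conv_transpose_out(size, kernel, stride, padding, output_padding=0):
    # Output size of a transposed (fractionally strided) convolution: the generator upsamples.
    return (size - 1) * stride - 2 * padding + kernel + output_padding

# Assumed settings: 4x4 kernels, stride 2, padding 1, four layers each.
gen_sizes = [4]
for _ in range(4):
    gen_sizes.append(conv_transpose_out(gen_sizes[-1], kernel=4, stride=2, padding=1))

disc_sizes = [64]
for _ in range(4):
    disc_sizes.append(conv_out(disc_sizes[-1], kernel=4, stride=2, padding=1))

print(gen_sizes)   # [4, 8, 16, 32, 64]
print(disc_sizes)  # [64, 32, 16, 8, 4]
```

With these settings each layer exactly doubles or halves the resolution, which is why no separate pooling or upsampling layers are needed.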

3. Style Transfer

Style transfer is a method for generating a new image by combining the content of one image with the style of another. For example, an ordinary landscape photo can be made to look far more striking by rendering it in the style of an iconic painting.

The generated image is optimized so that its activations in a neural network match those of the content image (preserving what is depicted), while the correlations between its features match those of the style image (reproducing textures, colors, and brushstrokes). So style transfer can make any photo you took on your trek look as if it had been painted by the famous Japanese artist Hokusai.
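The two matching targets can be made concrete with the losses from the classic neural style transfer formulation (Gatys et al.): a content loss on raw activations and a style loss on Gram matrices, the feature correlations mentioned above. In practice the feature maps come from layers of a pretrained CNN and the image pixels are optimized by gradient descent; here the feature maps are tiny hypothetical lists, and the `alpha`/`beta` weights are arbitrary assumed values.

```python
def gram_matrix(features):
    # features: one flattened list of activations per channel (C lists of length H*W).
    # Entry (i, j) is the inner product of channels i and j: which features fire together.
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features] for fi in features]

def content_loss(gen_feats, content_feats):
    # Half the squared difference between raw activations (position-sensitive).
    return 0.5 * sum((g - c) ** 2
                     for gch, cch in zip(gen_feats, content_feats)
                     for g, c in zip(gch, cch))

def style_loss(gen_feats, style_feats):
    # Squared difference between Gram matrices, normalized by 4 * C^2 * M^2 (M = H*W).
    G, A = gram_matrix(gen_feats), gram_matrix(style_feats)
    C, M = len(gen_feats), len(gen_feats[0])
    return sum((g - a) ** 2 for gr, ar in zip(G, A) for g, a in zip(gr, ar)) / (4 * C**2 * M**2)

def total_loss(gen_feats, content_feats, style_feats, alpha=1.0, beta=1e3):
    # alpha and beta trade content fidelity against style (assumed example values).
    return alpha * content_loss(gen_feats, content_feats) + beta * style_loss(gen_feats, style_feats)

# Toy 2-channel feature maps with two spatial positions each (hypothetical numbers).
a = [[1, 2], [3, 4]]
b = [[2, 1], [4, 3]]  # same values with the spatial positions swapped
print(content_loss(a, b), style_loss(a, b))  # 2.0 0.0
```

Swapping spatial positions changes the content loss but leaves the style loss at zero, which is why Gram matrices capture "style" (textures and color statistics) independently of where things appear in the image.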

Translated from: https://medium.com/perceptronai/a-brief-introduction-to-unsupervised-learning-a18c6f1e32b0
