Kafka: Creating the Most Basic Producer and Consumer

1. Create a Maven project

artifactId: learn-kafka

groupId: com.bfxy

2. Add the POM dependencies

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.1.5.RELEASE</version>
        <relativePath/>
    </parent>
    <groupId>com.bfxy</groupId>
    <artifactId>learn-kafka</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
            <!-- exclude the default Logback logging so that log4j2 (below) is used instead -->
            <exclusions>
                <exclusion>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-starter-logging</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-log4j2</artifactId>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>1.2.58</version>
        </dependency>
        <!-- Kafka (Scala 2.12 build); the version is managed by the Spring Boot parent -->
        <dependency>
            <groupId>org.apache.kafka</groupId>
            <artifactId>kafka_2.12</artifactId>
        </dependency>
    </dependencies>
    <build>
        <finalName>learn-kafka</finalName>
        <resources>
            <resource>
                <directory>src/main/java</directory>
                <includes>
                    <include>**/*.properties</include>
                    <include>**/*.xml</include>
                </includes>
                <filtering>true</filtering>
            </resource>
            <resource>
                <directory>src/main/resources</directory>
            </resource>
        </resources>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <configuration>
                    <mainClass>com.bfxy.kafka.api.Application</mainClass>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>
```

3. Application entry point (Spring Boot main class)

```java
package com.bfxy.kafka.api;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
public class Application {

    public static void main(String[] args) {
        SpringApplication app = new SpringApplication(Application.class);
        app.run(args);
    }
}
```

4. Create the most basic producer

```java
package com.bfxy.kafka.api.quickstart;

import java.util.Properties;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.common.serialization.StringSerializer;

import com.alibaba.fastjson.JSON;
import com.bfxy.kafka.api.Const;
import com.bfxy.kafka.api.User;

public class QuickStartProducer {

    public static void main(String[] args) {
        Properties properties = new Properties();
        // 1. Configure the key properties the producer needs in order to start
        // 1.1 BOOTSTRAP_SERVERS_CONFIG: address list of the Kafka cluster; separate multiple brokers with commas
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "172.16.144.133:9092");
        // 1.2 CLIENT_ID_CONFIG: a logical ID for this Kafka client, so the broker can attribute requests to it in its logs
        properties.put(ProducerConfig.CLIENT_ID_CONFIG, "quickstart-producer");
        // 1.3 KEY_SERIALIZER_CLASS_CONFIG / VALUE_SERIALIZER_CLASS_CONFIG
        // Q: Why do the Kafka key and value have to be serialized?
        // A: The broker receives and stores messages as raw bytes, so the key and value must be converted to byte arrays first.
        // String serializer class: org.apache.kafka.common.serialization.StringSerializer
        // KEY: used by Kafka to decide which partition of the topic a message is delivered to
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
        // VALUE: the actual message content
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

        // 2. Create the Kafka producer from the properties
        KafkaProducer<String, String> producer = new KafkaProducer<>(properties);
        for (int i = 0; i < 10; i++) {
            // 3. Build the message ("张三", Zhang San, is a placeholder name)
            User user = new User("00" + i, "张三");
            // arg1: topic, arg2: the message body (the User serialized to JSON with Alibaba fastjson).
            // No key is set, so the producer chooses the partition itself.
            ProducerRecord<String, String> record =
                    new ProducerRecord<>(Const.TOPIC_QUICKSTART, JSON.toJSONString(user));
            // 4. Send the message (asynchronous: records are buffered and sent in batches)
            producer.send(record);
        }
        // 5. Close the producer; close() blocks until previously sent records have completed
        producer.close();
    }
}
```
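The KEY comment in the producer says the key is what Kafka uses to pick a partition. A minimal sketch of that idea, assuming the murmur2-based scheme of Kafka's default partitioner for keyed records: hash the key bytes, clear the sign bit, and take the result modulo the partition count. The class `KeyPartitionSketch`, its methods and the partition count of 3 are hypothetical, chosen only for illustration. The records in `QuickStartProducer` carry no key, so for them the producer picks partitions without this hashing step.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper: sketches how a keyed record could be mapped to a partition.
// The hash is modeled on Kafka's murmur2; treat it as an illustration, not a drop-in replacement.
public class KeyPartitionSketch {

    // 32-bit murmur2 hash over the key bytes (seed 0x9747b28c).
    static int murmur2(byte[] data) {
        int length = data.length;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = 0x9747b28c ^ length;
        int length4 = length / 4;
        for (int i = 0; i < length4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        // Mix in the 1 to 3 trailing bytes (intentional switch fall-through).
        switch (length % 4) {
            case 3:
                h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2:
                h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1:
                h ^= data[length & ~3] & 0xff;
                h *= m;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    // Clear the sign bit, then take the remainder: same key + same count -> same partition.
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        return (murmur2(keyBytes) & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        int numPartitions = 3; // assumed partition count for the demo
        for (int i = 0; i < 10; i++) {
            String key = "00" + i;
            System.out.println("key=" + key + " -> partition " + partitionFor(key, numPartitions));
        }
    }
}
```

Because the result depends only on the key bytes and the partition count, records with the same key always map to the same partition as long as the topic's partition count does not change.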

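User class (the message payload):

```java
package com.bfxy.kafka.api;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

// The producer converts a User to a JSON string (fastjson) and StringSerializer turns that string into bytes,
// so this class does not need to implement java.io.Serializable.
@Data
@NoArgsConstructor
@AllArgsConstructor
public class User {
    private String id;
    private String name;
}
```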
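The comment on `User` relies on a two-step conversion: the application turns the object into a JSON string, and `StringSerializer` turns that string into bytes (UTF-8 by default), with `StringDeserializer` reversing the second step on the consumer side. The sketch below reproduces only the string/byte step with plain JDK calls. `Utf8RoundTripSketch` is a hypothetical name, and the JSON text is written by hand as a stand-in for `JSON.toJSONString(user)`, so it need not match fastjson's output exactly.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper: sketches the String <-> byte[] step that
// StringSerializer / StringDeserializer perform with their default UTF-8 encoding.
public class Utf8RoundTripSketch {

    static byte[] serialize(String data) {
        // A null value stays null instead of becoming an empty array.
        return data == null ? null : data.getBytes(StandardCharsets.UTF_8);
    }

    static String deserialize(byte[] data) {
        return data == null ? null : new String(data, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // Hand-written stand-in for the JSON of a User with id "001" and the Chinese placeholder name.
        String json = "{\"id\":\"001\",\"name\":\"\u5f20\u4e09\"}";
        byte[] wire = serialize(json);
        System.out.println("chars: " + json.length() + ", bytes on the wire: " + wire.length);
        System.out.println("decoded equals original: " + json.equals(deserialize(wire)));
    }
}
```

The byte count is larger than the character count because each Chinese character takes three bytes in UTF-8; this is also why producer and consumer must agree on the encoding.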

5. Create the most basic consumer

```java
package com.bfxy.kafka.api.quickstart;

import java.time.Duration;
import java.util.Collections;
import java.util.List;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.TopicPartition;
import org.apache.kafka.common.serialization.StringDeserializer;

import com.bfxy.kafka.api.Const;

public class QuickStartConsumer {

    public static void main(String[] args) {
        // 1. Configure the properties
        Properties properties = new Properties();
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "172.16.144.133:9092");
        // Deserialization with org.apache.kafka.common.serialization.StringDeserializer (matches the producer's StringSerializer)
        properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        // Very important: the consumer group this consumer joins; consumers in one group share the topic's partitions
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, "quickstart-group");
        // Common setting: session timeout; if no heartbeat arrives within it, the consumer is considered dead and the group rebalances
        properties.put(ConsumerConfig.SESSION_TIMEOUT_MS_CONFIG, 10000);
        // Offset commits are either automatic or manual; automatic is the default
        properties.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, true);
        // With auto-commit enabled, offsets are committed every 5000 ms
        properties.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG, 5000);

        // 2. Create the consumer
        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(properties);
        // 3. Subscribe to the topic of interest: Const.TOPIC_QUICKSTART
        consumer.subscribe(Collections.singletonList(Const.TOPIC_QUICKSTART));
        System.err.println("quickstart consumer started...");

        try {
            // 4. Consume data by pulling (polling) messages
            while (true) {
                // poll() waits up to 1000 ms for data and returns the next batch of records
                // from TOPIC_QUICKSTART (a batch, not necessarily every message in the topic).
                // Topic to partition is one-to-many: one topic can have several partitions.
                ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(1000));
                // Messages are stored per partition, so iterate over the partitions present in this batch
                for (TopicPartition topicPartition : records.partitions()) {
                    // All records in this batch that came from this TopicPartition
                    List<ConsumerRecord<String, String>> partitionRecords = records.records(topicPartition);
                    // Topic name of this TopicPartition
                    String topic = topicPartition.topic();
                    // Number of records fetched from this partition
                    int size = partitionRecords.size();
                    System.err.println(String.format("--- topic: %s, partition: %s, record count: %s",
                            topic, topicPartition.partition(), size));
                    for (int i = 0; i < size; i++) {
                        ConsumerRecord<String, String> consumerRecord = partitionRecords.get(i);
                        // The actual message content
                        String value = consumerRecord.value();
                        // Offset of the current message
                        long offset = consumerRecord.offset();
                        // When committing, commit offset + 1: the committed offset is
                        // the position the next fetch for this partition starts from
                        long commitOffset = offset + 1;
                        System.err.println(String.format("value: %s, offset: %s, offset to commit: %s",
                                value, offset, commitOffset));
                    }
                }
            }
        } finally {
            consumer.close();
        }
    }
}
```
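The consumer above only prints `offset + 1`; with auto-commit enabled it never commits by hand. A minimal sketch of the bookkeeping a manual commit needs, assuming a hypothetical `Rec` type that stands in for `ConsumerRecord` so the code runs without a broker: group one batch by partition, as `records.partitions()` and `records.records(tp)` do, and take each partition's last offset plus one.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class CommitOffsetSketch {

    // Hypothetical stand-in for ConsumerRecord: (partition, offset, value).
    static final class Rec {
        final int partition;
        final long offset;
        final String value;

        Rec(int partition, long offset, String value) {
            this.partition = partition;
            this.offset = offset;
            this.value = value;
        }
    }

    // Group a batch by partition, keeping each partition's records in arrival (offset) order.
    static Map<Integer, List<Rec>> byPartition(List<Rec> batch) {
        Map<Integer, List<Rec>> grouped = new TreeMap<>();
        for (Rec r : batch) {
            grouped.computeIfAbsent(r.partition, p -> new ArrayList<>()).add(r);
        }
        return grouped;
    }

    // Offset to commit per partition = last processed offset + 1 (the next record to read).
    static Map<Integer, Long> offsetsToCommit(Map<Integer, List<Rec>> grouped) {
        Map<Integer, Long> commits = new TreeMap<>();
        for (Map.Entry<Integer, List<Rec>> e : grouped.entrySet()) {
            List<Rec> recs = e.getValue();
            long lastOffset = recs.get(recs.size() - 1).offset;
            commits.put(e.getKey(), lastOffset + 1);
        }
        return commits;
    }

    public static void main(String[] args) {
        List<Rec> batch = new ArrayList<>();
        batch.add(new Rec(0, 10, "a"));
        batch.add(new Rec(1, 4, "b"));
        batch.add(new Rec(0, 11, "c"));
        batch.add(new Rec(1, 5, "d"));
        batch.add(new Rec(2, 0, "e"));
        System.out.println(offsetsToCommit(byPartition(batch)));
    }
}
```

Against a real broker, the equivalent map would be keyed by `TopicPartition` with `OffsetAndMetadata` values and passed to `consumer.commitSync(...)` after setting `enable.auto.commit` to `false`; committing only after the records are processed gives at-least-once delivery.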

Topic constants (Const):

```java
package com.bfxy.kafka.api;

public interface Const {
    String TOPIC_QUICKSTART = "topic-quickstart";
    String TOPIC_NORMAL = "topic-normal";
    String TOPIC_INTERCEPTOR = "topic-interceptor";
    String TOPIC_SERIAL = "topic-serial";
    String TOPIC_PARTITION = "topic-partition";
    String TOPIC_MODULE = "topic-module";
    String TOPIC_CORE = "topic-core";
    String TOPIC_REBALANCE = "topic-rebalance";
    String TOPIC_MT1 = "topic-mt1";
    String TOPIC_MT2 = "topic-mt2";
}
```

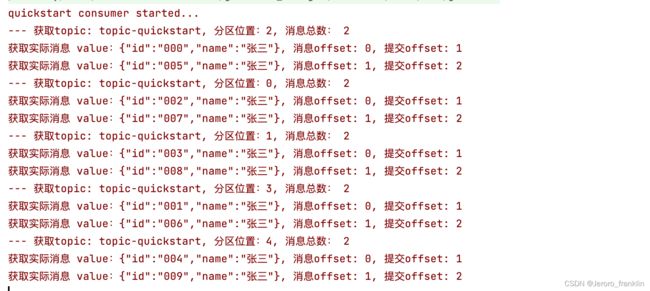

6. Test

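Start the consumer first, then the producer. With the default `auto.offset.reset=latest`, a consumer group that has no committed offsets yet starts reading from the end of the log, so messages sent before it first joins would not be printed.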

Check the topic in the web console at http://172.16.144.133:9000

Consumer log output: