ClickHouse cluster installation and deployment

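The cluster as configured here does not yet solve the problem of a single machine carrying heavy load in a distributed setup; chproxy may be introduced later to address this.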

Installing the ZooKeeper cluster

| Hostname | IP address |
|---|---|
| zk01 | 192.168.124.81 |
| zk02 | 192.168.124.82 |
| zk03 | 192.168.124.83 |

ZooKeeper installation

Apply the same configuration on all three nodes.

Configure hosts

vim /etc/hosts

192.168.124.81 zk01

192.168.124.82 zk02

192.168.124.83 zk03

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

systemctl status firewalld

Install Java

vim /etc/yum.repos.d/mnt.repo

[mnt]

name=mnt

baseurl=file:///mnt/

enabled=1

gpgcheck=0

yum clean all

yum install java -y

Download ZooKeeper

https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/

Extract and install

tar -zxvf apache-zookeeper-3.6.3-bin.tar.gz -C /usr/local/

cd /usr/local

mv apache-zookeeper-3.6.3-bin/ zookeeper

mkdir /zookeeper

mkdir /zookeeper/data

mkdir /zookeeper/logs

Environment variables

vim /etc/profile

export ZOOKEEPER_HOME=/usr/local/zookeeper/

export PATH=$ZOOKEEPER_HOME/bin:$PATH

source /etc/profile

echo $ZOOKEEPER_HOME

Configuration file

cd $ZOOKEEPER_HOME/conf

vim zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/zookeeper/data

dataLogDir=/zookeeper/logs

clientPort=2181

server.1=192.168.124.81:2888:3888

server.2=192.168.124.82:2888:3888

server.3=192.168.124.83:2888:3888

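Common ZooKeeper settings

- tickTime: client-server heartbeat interval, in milliseconds; the default is usually fine.
- initLimit: time limit, in ticks, for a follower's initial connection to the leader.
- syncLimit: time limit, in ticks, for leader-follower synchronization; should not be set too high.
- dataDir: directory for data files.
- dataLogDir: directory for transaction log files.
- clientPort: port that clients connect to.
- server.N: server ID and address (server ID, server address, leader-follower communication port, election port).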
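Because initLimit and syncLimit are counted in ticks rather than milliseconds, the effective time limits follow from multiplying by tickTime. A minimal sketch of that arithmetic for the values used in this guide (`limit_ms` is a hypothetical helper name, not part of ZooKeeper):

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a limit expressed in ticks into milliseconds.
# Arguments: tickTime in milliseconds, number of ticks.
limit_ms() {
    local tick_time_ms="$1" ticks="$2"
    echo $((tick_time_ms * ticks))
}

# Values from the zoo.cfg in this guide: tickTime=2000, initLimit=10, syncLimit=5
echo "initLimit: $(limit_ms 2000 10) ms"   # prints "initLimit: 20000 ms"
echo "syncLimit: $(limit_ms 2000 5) ms"    # prints "syncLimit: 10000 ms"
```

With these settings a follower has about 20 seconds to connect and sync initially, and about 10 seconds to stay in sync afterwards.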

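Advanced ZooKeeper settings

- globalOutstandingLimit: maximum number of queued requests; default 1000.
- preAllocSize: size of the preallocated transaction log space.
- snapCount: a snapshot is triggered after every snapCount transactions written to the transaction log.
- maxClientCnxns: maximum number of concurrent connections from a single client IP.
- forceSync: whether transactions are synced to disk when they are committed.
- leaderServes: whether the leader accepts client connections.
- traceFile: whether to log all requests; not recommended.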

ID configuration

zk01

echo "1" > /zookeeper/data/myid

zk02

echo "2" > /zookeeper/data/myid

zk03

echo "3" > /zookeeper/data/myid
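The value written to myid has to match the N of that node's server.N line in zoo.cfg. As a sketch, assuming the zoo.cfg layout shown earlier, the ID can be derived from the node's IP address instead of being typed by hand; `myid_for_ip` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the N of the "server.N=<ip>:<port>:<port>" line
# in the given zoo.cfg whose address equals the given IP.
# Arguments: path to zoo.cfg, IP address of this node.
myid_for_ip() {
    local cfg="$1" ip="$2"
    # Fields are split on '=' and ':', so $1 is "server.N" and $2 is the address.
    awk -F'[=:]' -v ip="$ip" '
        $1 ~ /^server\.[0-9]+$/ && $2 == ip {
            sub(/^server\./, "", $1)
            print $1
        }' "$cfg"
}

# Usage on zk02 (paths as used in this guide):
#   myid_for_ip /usr/local/zookeeper/conf/zoo.cfg 192.168.124.82 > /zookeeper/data/myid
```

The function prints nothing when no server.N line matches, so an empty myid file is a sign that the IP is missing from zoo.cfg.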

Start

zkServer.sh start

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

Check status

zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

Stop

zkServer.sh stop

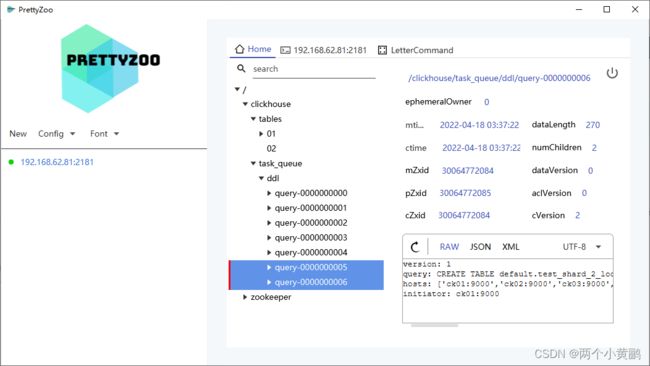

PrettyZoo

PrettyZoo is a client tool that I find quite handy. It can be downloaded from:

https://github.com/vran-dev/PrettyZoo

Four-letter commands

ZooKeeper responds to a small set of commands. Each command is composed of four letters. You issue the commands to ZooKeeper via telnet or nc, at the client port.

- dump: Lists the outstanding sessions and ephemeral nodes. This only works on the leader.
- envi: Print details about serving environment
- kill: Shuts down the server. This must be issued from the machine the ZooKeeper server is running on.
- reqs: List outstanding requests
- ruok: Tests if server is running in a non-error state. The server will respond with imok if it is running. Otherwise it will not respond at all.
- srst: Reset statistics returned by stat command.
- stat: Lists statistics about performance and connected clients.

Four-letter commands can query or operate on the system. They must be whitelisted in zoo.cfg; add the commands you need to the whitelist.

4lw.commands.whitelist=*

Example of using a four-letter command

echo mntr|nc 192.168.124.81 2181
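The mntr output is a list of key/value lines separated by whitespace, so it is easy to script against. The sketch below pulls out zk_server_state to see which node is the leader; it assumes nc is installed and mntr is whitelisted, and the function names `zk_state_from_mntr` and `zk_cluster_states` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read mntr output on stdin and print the value of
# zk_server_state (for example leader or follower).
zk_state_from_mntr() {
    awk '$1 == "zk_server_state" { print $2 }'
}

# Hypothetical helper: print "<host>: <state>" for every host given.
# Needs nc and network access to client port 2181, so it is only defined
# here and not called.
zk_cluster_states() {
    local host
    for host in "$@"; do
        printf '%s: %s\n' "$host" "$(echo mntr | nc "$host" 2181 | zk_state_from_mntr)"
    done
}

# Usage:
#   zk_cluster_states 192.168.124.81 192.168.124.82 192.168.124.83
```

A healthy three-node ensemble should report exactly one leader; an empty state for a host usually means the node is down or the command is not whitelisted.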

Installing the ClickHouse cluster

| Hostname | IP address |
|---|---|
| ck01 | 192.168.124.91 |
| ck02 | 192.168.124.92 |
| ck03 | 192.168.124.93 |
| ck04 | 192.168.124.94 |

ClickHouse installation

Apply the same configuration on all four nodes.

Configure hosts

vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.124.91 ck01

192.168.124.92 ck02

192.168.124.93 ck03

192.168.124.94 ck04

Download the ClickHouse packages

https://mirrors.tuna.tsinghua.edu.cn/clickhouse/rpm/stable/x86_64/

Install

ls -lrt

-rw-r--r-- 1 root root 223610019 Apr 14 01:25 clickhouse-common-static-22.2.2.1-2.x86_64.rpm

-rw-r--r-- 1 root root 56238 Apr 14 01:25 clickhouse-server-22.2.2.1-2.noarch.rpm

-rw-r--r-- 1 root root 30955 Apr 14 01:25 clickhouse-client-22.2.2.1-2.noarch.rpm

-rw-r--r-- 1 root root 728126483 Apr 14 01:25 clickhouse-common-static-dbg-22.2.2.1-2.x86_64.rpm

rpm -ivh clickhouse*.rpm

Data replication

Configure config.xml

<timezone>Asia/Shanghai</timezone>

<listen_host>0.0.0.0</listen_host>

<zookeeper incl="zookeeper-servers" optional="true" />

<include_from>/etc/clickhouse-server/config.d/metrika.xml</include_from>

Configure metrika.xml

Create the file metrika.xml in /etc/clickhouse-server/config.d/

<yandex>
    <zookeeper-servers>
        <node index="1">
            <host>192.168.124.81</host>
            <port>2181</port>
        </node>
        <node index="2">
            <host>192.168.124.82</host>
            <port>2181</port>
        </node>
        <node index="3">
            <host>192.168.124.83</host>
            <port>2181</port>
        </node>
    </zookeeper-servers>
</yandex>

Creating tables

ReplicatedMergeTree is defined as follows:

ENGINE = ReplicatedMergeTree('zk_path', 'replica_name')

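zk_path specifies the path of the table in ZooKeeper. The path name is user-defined and follows no fixed rule, so it can be set to anything you like. Even so, ClickHouse offers some conventional templates for reference, for example: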

/clickhouse/tables/{shard}/table_name

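- /clickhouse/tables/ is the conventional fixed path prefix; it is the root path under which tables are stored.

- {shard} is the shard number, usually replaced by a numeric value such as 01, 02, 03. A table can have multiple shards, and each shard has its own replicas.

- table_name is the name of the table. For ease of maintenance it is usually the same as the physical table name, although ClickHouse does not require the two to match.

replica_name defines the name of the replica created in ZooKeeper; it is the unique identifier that distinguishes one replica instance from another. A common convention is to use the hostname of the server the replica runs on.

For zk_path, different replicas of the same shard of the same table should use the same path; for replica_name, different replicas of the same shard of the same table should use different names.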
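The rule can be made concrete with a small generator: for a fixed shard and table, every replica gets the same zk_path and a different replica_name. This is only a sketch; `replicated_engine` is a hypothetical helper, not a ClickHouse tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the ENGINE clause for one replica of a table.
# Arguments: shard number, table name, replica name.
replicated_engine() {
    local shard="$1" table="$2" replica="$3"
    printf "ENGINE = ReplicatedMergeTree('/clickhouse/tables/%s/%s', '%s')\n" \
        "$shard" "$table" "$replica"
}

# Two replicas of shard 01: identical zk_path, different replica_name.
replicated_engine 01 replicated_sales_1 ck01
replicated_engine 01 replicated_sales_1 ck02
```

The two printed clauses differ only in the last argument, which is the pattern the table definitions for ck01 and ck02 follow.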

ck01

CREATE TABLE replicated_sales_1(

id String,

price Float64,

create_time DateTime

) ENGINE = ReplicatedMergeTree('/clickhouse/tables/01/replicated_sales_1','ck01')

PARTITION BY toYYYYMM(create_time)

ORDER BY id;

ck02

CREATE TABLE replicated_sales_1(

id String,

price Float64,

create_time DateTime

) ENGINE = ReplicatedMergeTree('/clickhouse/tables/01/replicated_sales_1','ck02')

PARTITION BY toYYYYMM(create_time)

ORDER BY id;

Inserting data

ck01

INSERT INTO TABLE replicated_sales_1 VALUES('A001',100,'2019-05-10 00:00:00');

INSERT INTO TABLE replicated_sales_1 VALUES('A002',200,'2019-05-10 00:00:00');

ck02

INSERT INTO TABLE replicated_sales_1 VALUES('A003',300,'2019-05-10 00:00:00');

INSERT INTO TABLE replicated_sales_1 VALUES('A004',400,'2019-05-10 00:00:00');

Querying data

select * from replicated_sales_1

SELECT *

FROM replicated_sales_1

Query id: bc42df9d-c359-4ece-91bd-e1e03b2d69c8

┌─id───┬─price─┬─────────create_time─┐

│ A001 │ 100 │ 2019-05-10 00:00:00 │

│ A002 │ 200 │ 2019-05-10 00:00:00 │

└──────┴───────┴─────────────────────┘

┌─id───┬─price─┬─────────create_time─┐

│ A003 │ 300 │ 2019-05-10 00:00:00 │

│ A004 │ 400 │ 2019-05-10 00:00:00 │

└──────┴───────┴─────────────────────┘

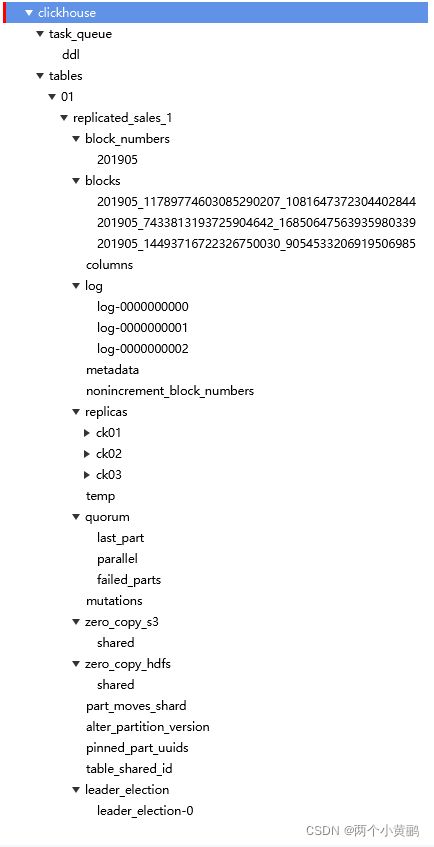

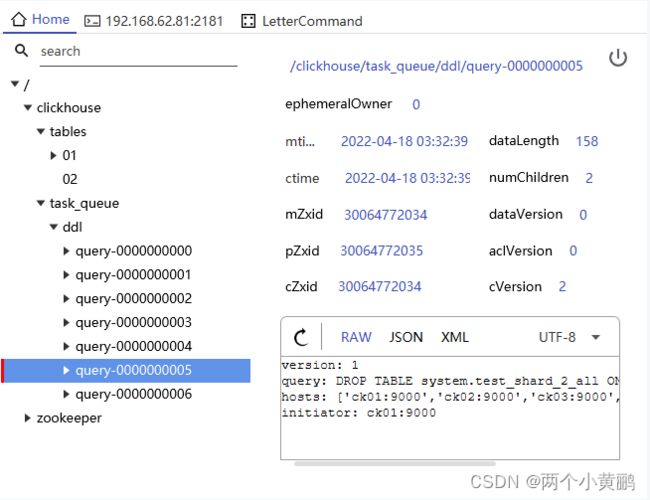

ZooKeeper

At this point, looking back at the data stored in ZooKeeper gives a rough idea of the role ZooKeeper plays.

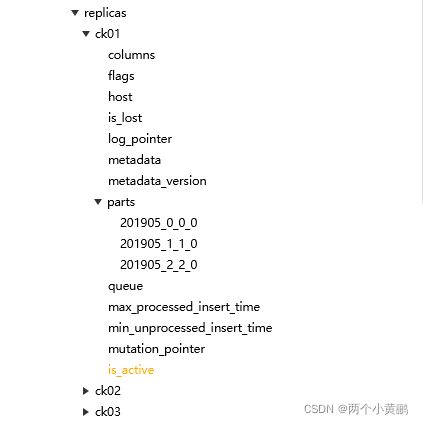

The entries under replicas correspond to these three nodes.

The data held for each node

Distributed setup

The server does not need to be restarted after this configuration.

Configure config.xml

All nodes

<remote_servers incl="remote_servers" optional="true" />

ck01

<macros>
    <shard>01</shard>
    <replica>ck01</replica>
</macros>

ck02

<macros>
    <shard>02</shard>
    <replica>ck02</replica>
</macros>

ck03

<macros>
    <shard>03</shard>
    <replica>ck03</replica>
</macros>

ck04

<macros>
    <shard>04</shard>
    <replica>ck04</replica>
</macros>

Configure metrika.xml

The username and password must be configured for every node; otherwise a password authentication error is reported.

<yandex>
    <remote_servers>
        <sharding_simple>
            <shard>
                <replica>
                    <host>ck01</host>
                    <port>9000</port>
                    <user>default</user>
                    <password>clickhouse</password>
                </replica>
                <replica>
                    <host>ck02</host>
                    <port>9000</port>
                    <user>default</user>
                    <password>clickhouse</password>
                </replica>
            </shard>
            <shard>
                <replica>
                    <host>ck03</host>
                    <port>9000</port>
                    <user>default</user>
                    <password>clickhouse</password>
                </replica>
                <replica>
                    <host>ck04</host>
                    <port>9000</port>
                    <user>default</user>
                    <password>clickhouse</password>
                </replica>
            </shard>
        </sharding_simple>
    </remote_servers>
    <zookeeper-servers>
        <node index="1">
            <host>192.168.124.81</host>
            <port>2181</port>
        </node>
        <node index="2">
            <host>192.168.124.82</host>
            <port>2181</port>
        </node>
        <node index="3">
            <host>192.168.124.83</host>
            <port>2181</port>
        </node>
    </zookeeper-servers>
</yandex>

Distributed tables

-- Create the distributed table

CREATE TABLE test_shard_2_all ON CLUSTER sharding_simple (
    id UInt64
) ENGINE = Distributed(sharding_simple, default, test_shard_2_local, rand())

-- Create the local table

CREATE TABLE test_shard_2_local ON CLUSTER sharding_simple (
    id UInt64
) ENGINE = MergeTree()
ORDER BY id
PARTITION BY id

-- Drop the distributed table

DROP TABLE test_shard_2_all ON CLUSTER sharding_simple

-- Drop the local table

DROP TABLE test_shard_2_local ON CLUSTER sharding_simple
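The last argument of Distributed(...) is the sharding key. ClickHouse takes the key value modulo the total weight of all shards and sends the row to the shard whose weight interval contains the remainder. The sketch below reproduces that routing rule for illustration only; `shard_for_key` is a hypothetical helper, and with rand() as the key the input value is simply random, so rows spread roughly evenly across shards of equal weight.

```shell
#!/usr/bin/env bash
# Hypothetical helper: given a sharding-key value followed by the shard
# weights, print the 1-based number of the shard that receives the row.
shard_for_key() {
    local key="$1"; shift
    local total=0 w
    for w in "$@"; do total=$((total + w)); done
    # Remainder of the key divided by the total weight.
    local rem=$((key % total)) upper=0 n=0
    for w in "$@"; do
        n=$((n + 1))
        upper=$((upper + w))      # end of this shard's half-open interval
        if [ "$rem" -lt "$upper" ]; then
            echo "$n"
            return 0
        fi
    done
}

# Two shards of weight 1 each, as in sharding_simple:
shard_for_key 10 1 1   # remainder 0, prints 1
shard_for_key 7 1 1    # remainder 1, prints 2
```

Replacing rand() with a column expression makes the placement deterministic, which is useful when rows with the same key should land on the same shard.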

Inserting data

insert into test_shard_2_all values (1);

insert into test_shard_2_all values (2);

insert into test_shard_2_all values (3);

insert into test_shard_2_all values (4);

Querying data

ck01

ck01 :) select * from test_shard_2_all

SELECT *

FROM test_shard_2_all

Query id: 2a64068e-b6d3-4253-8ebd-f9555fd70dbe

┌─id─┐

│ 2 │

└────┘

┌─id─┐

│ 1 │

└────┘

┌─id─┐

│ 3 │

└────┘

┌─id─┐

│ 4 │

└────┘

4 rows in set. Elapsed: 0.135 sec.

ck03

ck03 :) select * from test_shard_2_all;

SELECT *

FROM test_shard_2_all

Query id: e972df9a-ed3d-45ad-9b86-cf2639538c63

┌─id─┐

│ 3 │

└────┘

┌─id─┐

│ 1 │

└────┘

┌─id─┐

│ 4 │

└────┘

┌─id─┐

│ 2 │

└────┘

5 rows in set. Elapsed: 0.072 sec.

ck01

ck01 :) select * from test_shard_2_local;

SELECT *

FROM test_shard_2_local

Query id: 8474f384-155c-4c50-9943-c70ff7b72be5

┌─id─┐

│ 2 │

└────┘

1 rows in set. Elapsed: 0.002 sec.

ck03

ck03 :) select * from test_shard_2_local

SELECT *

FROM test_shard_2_local

Query id: 856726df-4bec-481d-b825-4dc7525946f7

┌─id─┐

│ 3 │

└────┘

┌─id─┐

│ 4 │

└────┘

┌─id─┐

│ 1 │

└────┘

3 rows in set. Elapsed: 0.010 sec.

ZooKeeper

Maintenance statements

-- View the cluster status

ck03 :) select * from system.clusters

SELECT *

FROM system.clusters

Query id: 41d03f02-3918-43a5-a324-b67e3e3f47bb

┌─cluster─────────┬─shard_num─┬─shard_weight─┬─replica_num─┬─host_name─┬─host_address──┬─port─┬─is_local─┬─user────┬─default_database─┬─errors_count─┬─slowdowns_count─┬─estimated_recovery_time─┐

│ sharding_simple │ 1 │ 1 │ 1 │ ck01 │ 192.168.62.81 │ 9000 │ 0 │ default │ │ 0 │ 0 │ 0 │

│ sharding_simple │ 1 │ 1 │ 2 │ ck02 │ 192.168.62.82 │ 9000 │ 0 │ default │ │ 0 │ 0 │ 0 │

│ sharding_simple │ 2 │ 1 │ 1 │ ck03 │ 192.168.62.83 │ 9000 │ 1 │ default │ │ 0 │ 0 │ 0 │

│ sharding_simple │ 2 │ 1 │ 2 │ ck04 │ 192.168.62.84 │ 9000 │ 0 │ default │ │ 0 │ 0 │ 0 │

└─────────────────┴───────────┴──────────────┴─────────────┴───────────┴───────────────┴──────┴──────────┴─────────┴──────────────────┴──────────────┴─────────────────┴─────────────────────────┘

4 rows in set. Elapsed: 0.002 sec.

-- View the current macros; changing macros does not require restarting the server

ck01 :) SELECT * FROM system.macros

SELECT *

FROM system.macros

Query id: 2979e9fb-9a06-47ac-8027-4b95fdc5c667

┌─macro───┬─substitution─┐

│ replica │ ck01 │

│ shard │ 01 │

└─────────┴──────────────┘

2 rows in set. Elapsed: 0.001 sec.

-- Show the current database

ck01 :) select database();

SELECT database()

Query id: a334757c-1cdd-42bf-a5f9-41849a523696

┌─DATABASE()─┐

│ default │

└────────────┘

1 rows in set. Elapsed: 0.001 sec.

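-- remote lets you access remote servers without creating a Distributed table. remoteSecure is the same as remote but uses an encrypted connection.

-- Both functions can be used in SELECT and INSERT queries.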

--remote('addresses_expr', db, table[, 'user'[, 'password'], sharding_key])

--remote('addresses_expr', db.table[, 'user'[, 'password'], sharding_key])

ck02 :) SELECT * FROM remote('ck01', 'system', 'macros', 'default','clickhouse')

SELECT *

FROM remote('ck01', 'system', 'macros', 'default', 'clickhouse')

Query id: 8d4ab4e8-cab1-4b4d-a4dc-6c9a32d1b85b

┌─macro───┬─substitution─┐

│ replica │ ck01 │

│ shard │ 01 │

└─────────┴──────────────┘

2 rows in set. Elapsed: 0.058 sec.
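When the same maintenance query has to be run on every node, a small wrapper around clickhouse-client saves retyping. The sketch below only prints the commands so they can be reviewed before being run; `ch_commands` is a hypothetical helper, and the user and password are the ones assumed in metrika.xml above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one clickhouse-client command per host that
# runs the given query. The query must not contain single quotes, because
# it is wrapped in single quotes in the printed command.
# Arguments: query, then one or more hostnames.
ch_commands() {
    local query="$1"; shift
    local host
    for host in "$@"; do
        printf "clickhouse-client --host %s --user default --password clickhouse --query '%s'\n" \
            "$host" "$query"
    done
}

# Print the commands that would show the macros of all four nodes.
ch_commands 'SELECT * FROM system.macros' ck01 ck02 ck03 ck04
```

Piping the output to sh executes the commands one after another, which gives a quick per-node comparison without going through remote().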