Dive into Deep Learning (PyTorch Edition) 4.10 Predicting House Prices on Kaggle

4.10.1 Downloading and Caching Datasets

import hashlib
import os
import tarfile
import zipfile
import requests

#@save
DATA_HUB = dict()
DATA_URL = 'http://d2l-data.s3-accelerate.amazonaws.com/'

def download(name, cache_dir=os.path.join('..', 'data')):  #@save
    """Download a file listed in DATA_HUB and return the local filename."""
    assert name in DATA_HUB, f"{name} does not exist in {DATA_HUB}"
    url, sha1_hash = DATA_HUB[name]
    os.makedirs(cache_dir, exist_ok=True)
    fname = os.path.join(cache_dir, url.split('/')[-1])
    if os.path.exists(fname):
        sha1 = hashlib.sha1()
        with open(fname, 'rb') as f:
            while True:
                data = f.read(1048576)  # read the file in 1 MiB chunks
                if not data:
                    break
                sha1.update(data)
        if sha1.hexdigest() == sha1_hash:
            return fname  # cache hit
    print(f'Downloading {fname} from {url}...')
    r = requests.get(url, stream=True, verify=True)
    with open(fname, 'wb') as f:
        f.write(r.content)
    return fname
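The cache check in download hashes the file in fixed-size chunks, so a large file never has to be held in memory all at once. A minimal, self-contained sketch of that idea is below. The helper name file_sha1 and the throwaway temporary file are hypothetical and exist only for this illustration; they are not part of the d2l code.

```python
import hashlib
import os
import tempfile


def file_sha1(path, chunk_size=1048576):
    """Return the SHA-1 hex digest of a file, reading it chunk by chunk."""
    sha1 = hashlib.sha1()
    with open(path, 'rb') as f:
        while True:
            data = f.read(chunk_size)
            if not data:
                break
            sha1.update(data)
    return sha1.hexdigest()


# Write a small throwaway file, then hash it with a deliberately tiny chunk size.
payload = b'kaggle house prices' * 1000
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(payload)
    tmp_path = tmp.name

chunked_digest = file_sha1(tmp_path, chunk_size=64)
one_shot_digest = hashlib.sha1(payload).hexdigest()
os.remove(tmp_path)

# The chunked digest should match hashing the whole payload in one call.
print(chunked_digest == one_shot_digest)
```

The chunk size only affects memory use, not the digest, which is why the cached file can be compared directly against the hash stored in DATA_HUB.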

def download_extract(name, folder=None):  #@save
    """Download and extract a zip/tar file."""
    fname = download(name)
    base_dir = os.path.dirname(fname)
    data_dir, ext = os.path.splitext(fname)
    if ext == '.zip':
        fp = zipfile.ZipFile(fname, 'r')
    elif ext in ('.tar', '.gz'):
        fp = tarfile.open(fname, 'r')
    else:
        assert False, 'Only zip/tar files can be extracted'
    fp.extractall(base_dir)
    return os.path.join(base_dir, folder) if folder else data_dir
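The branch on ext relies on how os.path.splitext splits a filename: only the last suffix is removed. The short sketch below, using made-up filenames, shows why '.gz' has to be listed next to '.tar', and why the default return value data_dir still ends in '.tar' for a '.tar.gz' archive.

```python
import os

# Hypothetical archive names, used only to show what splitext returns.
for name in ('data.zip', 'data.tar', 'data.tar.gz'):
    root, ext = os.path.splitext(os.path.join('..', 'data', name))
    print(name, '->', (root, ext))

tar_gz_root, tar_gz_ext = os.path.splitext('data.tar.gz')
```

For a '.tar.gz' archive it is therefore probably safer to pass folder explicitly rather than rely on data_dir.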

def download_all():  #@save
    """Download every file listed in DATA_HUB."""
    for name in DATA_HUB:
        download(name)

4.10.2 Kaggle

My old account had not been used in ages; did Kaggle delete it?

4.10.3 Accessing and Reading the Dataset

%matplotlib inline
import numpy as np
import pandas as pd
import torch
from torch import nn
from d2l import torch as d2l

# Download and cache the data with the helpers defined above
DATA_HUB['kaggle_house_train'] = (  #@save
    DATA_URL + 'kaggle_house_pred_train.csv',
    '585e9cc93e70b39160e7921475f9bcd7d31219ce')

DATA_HUB['kaggle_house_test'] = (  #@save
    DATA_URL + 'kaggle_house_pred_test.csv',
    'fa19780a7b011d9b009e8bff8e99922a8ee2eb90')

# Load the two datasets with pandas
train_data = pd.read_csv(download('kaggle_house_train'))
test_data = pd.read_csv(download('kaggle_house_test'))

print(train_data.shape)
print(test_data.shape)
print(train_data.iloc[0:4, [0, 1, 2, 3, -3, -2, -1]])  # first four rows; first four and last three columns

(1460, 81)
(1459, 80)
   Id  MSSubClass MSZoning  LotFrontage SaleType SaleCondition  SalePrice
0   1          60       RL         65.0       WD        Normal     208500
1   2          20       RL         80.0       WD        Normal     181500
2   3          60       RL         68.0       WD        Normal     223500
3   4          70       RL         60.0       WD       Abnorml     140000

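# Drop the Id column, which carries no predictive information, and keep the SalePrice label out of the training features
all_features = pd.concat((train_data.iloc[:, 1:-1], test_data.iloc[:, 1:]))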

4.10.4 Data Preprocessing

numeric_features = all_features.dtypes[all_features.dtypes != 'object'].index  # locate the numeric columns
all_features[numeric_features] = all_features[numeric_features].apply(
    lambda x: (x - x.mean()) / (x.std()))  # standardize to zero mean and unit variance
all_features[numeric_features] = all_features[numeric_features].fillna(0)  # the mean is now 0, so missing values are set to 0

# Handle discrete values: dummy_na=True treats "na" (a missing value) as a valid feature value and creates an indicator feature for it
all_features = pd.get_dummies(all_features, dummy_na=True)
all_features.shape

(2919, 331)
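To see what the two preprocessing steps do, here is a small sketch on a made-up DataFrame with one numeric and one categorical column, each containing a gap. The column names and values are hypothetical. Two details differ from the code above and are assumptions on my part: select_dtypes is used to find the numeric columns, and dtype=float is passed to get_dummies, because recent pandas versions return boolean indicator columns by default, which may not convert cleanly to a float tensor when mixed with float columns.

```python
import pandas as pd

# Hypothetical toy data: one numeric and one categorical column, each with a gap.
toy = pd.DataFrame({
    'area': [50.0, 100.0, None, 150.0],
    'zone': ['RL', 'RM', None, 'RL'],
})

# Standardize the numeric columns, then fill gaps with the (now zero) mean.
numeric_cols = toy.select_dtypes(include='number').columns
toy[numeric_cols] = toy[numeric_cols].apply(lambda x: (x - x.mean()) / x.std())
toy[numeric_cols] = toy[numeric_cols].fillna(0)

# One-hot encode the categorical column; dummy_na=True adds a 'zone_nan' indicator.
encoded = pd.get_dummies(toy, dummy_na=True, dtype=float)
print(encoded)
```

The single 'zone' column turns into three indicator columns here, which is the same mechanism that grows the real feature table to 331 columns.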

n_train = train_data.shape[0]  # number of training samples

# Extract the NumPy arrays from the pandas objects and convert them to tensors for training
train_features = torch.tensor(all_features[:n_train].values, dtype=torch.float32)
test_features = torch.tensor(all_features[n_train:].values, dtype=torch.float32)
train_labels = torch.tensor(
    train_data.SalePrice.values.reshape(-1, 1), dtype=torch.float32)

4.10.5 Training

# The baseline is a linear model with squared loss (commented out below);
# the network actually used here is a three-layer MLP
loss = nn.MSELoss()
in_features = train_features.shape[1]

def get_net():
    # net = nn.Sequential(nn.Linear(in_features, 1))
    net = nn.Sequential(nn.Linear(in_features, 256),
                        nn.ReLU(),
                        nn.Linear(256, 64),
                        nn.ReLU(),
                        nn.Linear(64, 1))
    return net
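Swapping the linear baseline for the MLP changes the model size considerably. The sketch below counts the parameters of both, taking the 331 input features from the shape printed earlier; linear_params is a hypothetical helper written for this note.

```python
def linear_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


in_features = 331  # from all_features.shape above

baseline = linear_params(in_features, 1)
mlp = (linear_params(in_features, 256)
       + linear_params(256, 64)
       + linear_params(64, 1))

print(baseline, mlp)
```

With only 1460 training samples, the MLP has far more parameters than examples, so overfitting is a real concern and weight decay becomes more than a formality.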

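# House price prediction cares about relative error, so errors are measured on a log scale
def log_rmse(net, features, labels):
    clipped_preds = torch.clamp(net(features), 1, float('inf'))  # clip predictions to [1, inf) so the logarithm stays stable
    rmse = torch.sqrt(loss(torch.log(clipped_preds),
                           torch.log(labels)))  # root mean squared error of the logarithms
    return rmse.item()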
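A quick way to see why the logarithm captures relative error is to compare two predictions that are each 10% too high, one for a cheap house and one for an expensive house. The sketch below uses plain Python instead of tensors; log_rmse_plain and the prices are hypothetical stand-ins for illustration.

```python
import math


def log_rmse_plain(preds, labels):
    """Log RMSE on plain Python lists, clipping predictions at 1 as above."""
    clipped = [max(p, 1.0) for p in preds]
    squared = [(math.log(p) - math.log(y)) ** 2 for p, y in zip(clipped, labels)]
    return math.sqrt(sum(squared) / len(squared))


# Both predictions are 10% too high, at very different price levels.
cheap_error = log_rmse_plain([110_000.0], [100_000.0])
expensive_error = log_rmse_plain([1_100_000.0], [1_000_000.0])

print(cheap_error, expensive_error, math.log(1.1))
```

Both errors come out as log(1.1), whereas a plain RMSE would penalize the expensive house ten times more.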

# Use the Adam optimizer, which is relatively insensitive to the learning rate
def train(net, train_features, train_labels, test_features, test_labels,
          num_epochs, learning_rate, weight_decay, batch_size):
    train_ls, test_ls = [], []
    train_iter = d2l.load_array((train_features, train_labels), batch_size)  # iterate over the training set in mini-batches
    optimizer = torch.optim.Adam(net.parameters(),
                                 lr=learning_rate,
                                 weight_decay=weight_decay)  # Adam optimization algorithm
    for epoch in range(num_epochs):
        for X, y in train_iter:
            optimizer.zero_grad()
            l = loss(net(X), y)
            l.backward()
            optimizer.step()
        train_ls.append(log_rmse(net, train_features, train_labels))
        if test_labels is not None:
            test_ls.append(log_rmse(net, test_features, test_labels))
    return train_ls, test_ls
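The train function depends on d2l.load_array, which is not shown in these notes. As far as I can tell it is a thin wrapper around TensorDataset and DataLoader; the sketch below is written under that assumption and uses made-up tensors, so treat it as an approximation of the helper rather than its actual source.

```python
import torch
from torch.utils import data


def load_array(data_arrays, batch_size, is_train=True):
    """Wrap tensors in a DataLoader (assumed equivalent of d2l.load_array)."""
    dataset = data.TensorDataset(*data_arrays)
    return data.DataLoader(dataset, batch_size, shuffle=is_train)


# Hypothetical data: 10 samples with 2 features each.
features = torch.arange(20, dtype=torch.float32).reshape(10, 2)
labels = torch.arange(10, dtype=torch.float32).reshape(-1, 1)

train_iter = load_array((features, labels), batch_size=4)
batch_sizes = [X.shape[0] for X, y in train_iter]
print(batch_sizes)
```

The last batch is smaller when the sample count is not a multiple of batch_size, which also happens in each fold of the cross-validation below.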

4.10.6 K-Fold Cross-Validation

def get_k_fold_data(k, i, X, y):
    assert k > 1
    fold_size = X.shape[0] // k  # number of samples per fold
    X_train, y_train = None, None
    for j in range(k):
        idx = slice(j * fold_size, (j + 1) * fold_size)
        X_part, y_part = X[idx, :], y[idx]  # slice out the current fold
        if j == i:
            X_valid, y_valid = X_part, y_part
        elif X_train is None:
            X_train, y_train = X_part, y_part
        else:
            X_train = torch.cat([X_train, X_part], 0)
            y_train = torch.cat([y_train, y_part], 0)
    return X_train, y_train, X_valid, y_valid
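Because fold_size uses integer division, any samples beyond k * fold_size fall outside every fold. The sketch below reproduces only the index arithmetic in plain Python to check which indices are never used; fold_slices and unused_indices are hypothetical helper names.

```python
def fold_slices(n, k):
    """Slices used for the k folds of n samples, as in get_k_fold_data."""
    fold_size = n // k
    return [slice(j * fold_size, (j + 1) * fold_size) for j in range(k)]


def unused_indices(n, k):
    """Sample indices that do not fall into any fold."""
    used = set()
    for s in fold_slices(n, k):
        used.update(range(n)[s])
    return sorted(set(range(n)) - used)


print(unused_indices(1460, 10))  # the house price training set with k = 10
print(unused_indices(10, 3))     # a toy case where n is not a multiple of k
```

For 1460 samples and k = 10 every sample is used, since 1460 divides evenly into folds of 146. For other values of k, the trailing remainder is silently dropped.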

# 完成训练后需要求误差的平均值

def k_fold(k, X_train, y_train, num_epochs, learning_rate, weight_decay,

batch_size):

train_l_sum, valid_l_sum = 0, 0

for i in range(k):

data = get_k_fold_data(k, i, X_train, y_train)

net = get_net()

train_ls, valid_ls = train(net, *data, num_epochs, learning_rate,

weight_decay, batch_size)

train_l_sum += train_ls[-1]

valid_l_sum += valid_ls[-1]

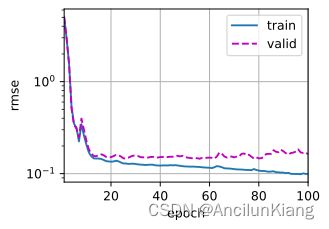

if i == 0:

d2l.plot(list(range(1, num_epochs + 1)), [train_ls, valid_ls],

xlabel='epoch', ylabel='rmse', xlim=[1, num_epochs],

legend=['train', 'valid'], yscale='log')

print(f'折{i + 1},训练log rmse{float(train_ls[-1]):f}, '

f'验证log rmse{float(valid_ls[-1]):f}')

return train_l_sum / k, valid_l_sum / k

4.10.7 Model Selection

k, num_epochs, lr, weight_decay, batch_size = 10, 100, 0.03, 0.05, 256
train_l, valid_l = k_fold(k, train_features, train_labels, num_epochs, lr,
                          weight_decay, batch_size)
print(f'{k}-fold validation: avg train log rmse: {float(train_l):f}, '
      f'avg valid log rmse: {float(valid_l):f}')

fold 1, train log rmse 0.099098, valid log rmse 0.162470
fold 2, train log rmse 0.091712, valid log rmse 0.114310
fold 3, train log rmse 0.107151, valid log rmse 0.151471
fold 4, train log rmse 0.103659, valid log rmse 0.167303
fold 5, train log rmse 0.102100, valid log rmse 0.165151
fold 6, train log rmse 0.110199, valid log rmse 0.131012
fold 7, train log rmse 0.105075, valid log rmse 0.146769
fold 8, train log rmse 0.109164, valid log rmse 0.123824
fold 9, train log rmse 0.096305, valid log rmse 0.174747
fold 10, train log rmse 0.096146, valid log rmse 0.136332
10-fold validation: avg train log rmse: 0.102061, avg valid log rmse: 0.147339
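The averages hide how much the folds differ from one another. The sketch below recomputes the two means from the per-fold numbers printed above and also reports the spread of the validation error, using only the standard library. The variable names are my own; the figures are copied from the run above.

```python
import statistics

# Per-fold results copied from the output above.
train_log_rmse = [0.099098, 0.091712, 0.107151, 0.103659, 0.102100,
                  0.110199, 0.105075, 0.109164, 0.096305, 0.096146]
valid_log_rmse = [0.162470, 0.114310, 0.151471, 0.167303, 0.165151,
                  0.131012, 0.146769, 0.123824, 0.174747, 0.136332]

mean_train = statistics.mean(train_log_rmse)
mean_valid = statistics.mean(valid_log_rmse)
spread_valid = statistics.stdev(valid_log_rmse)  # sample standard deviation

print(f'mean train {mean_train:f}, mean valid {mean_valid:f}, '
      f'valid stdev {spread_valid:f}, gap {mean_valid - mean_train:f}')
```

When comparing hyperparameter settings, a difference in average validation error that is small relative to this spread is probably not meaningful.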

4.10.8 Submitting Predictions on Kaggle

def train_and_pred(train_features, test_features, train_labels, test_data,
                   num_epochs, lr, weight_decay, batch_size):
    net = get_net()
    train_ls, _ = train(net, train_features, train_labels, None, None,
                        num_epochs, lr, weight_decay, batch_size)
    d2l.plot(np.arange(1, num_epochs + 1), [train_ls], xlabel='epoch',
             ylabel='log rmse', xlim=[1, num_epochs], yscale='log')
    print(f'train log rmse {float(train_ls[-1]):f}')
    # Apply the network to the test set
    preds = net(test_features).detach().numpy()
    # Reformat the predictions for export to Kaggle
    test_data['SalePrice'] = pd.Series(preds.reshape(1, -1)[0])
    submission = pd.concat([test_data['Id'], test_data['SalePrice']], axis=1)
    submission.to_csv('submission.csv', index=False)

train_and_pred(train_features, test_features, train_labels, test_data,
               num_epochs, lr, weight_decay, batch_size)

train log rmse 0.091832
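The export step in train_and_pred flattens the (n, 1) prediction array and pairs it with the Id column. The sketch below walks through that reshaping on a made-up three-row example and writes the CSV to an in-memory buffer instead of a file. The Id values and prices are hypothetical.

```python
import io

import numpy as np
import pandas as pd

# Hypothetical stand-ins for test_data['Id'] and the network output of shape (n, 1).
test_ids = pd.DataFrame({'Id': [1461, 1462, 1463]})
preds = np.array([[120000.0], [185000.5], [210000.0]])

# reshape(1, -1)[0] turns the (n, 1) column into a flat vector of length n.
flat = preds.reshape(1, -1)[0]
submission = pd.concat([test_ids['Id'], pd.Series(flat, name='SalePrice')],
                       axis=1)

# Write to a buffer here; the real code writes 'submission.csv'.
buffer = io.StringIO()
submission.to_csv(buffer, index=False)
csv_text = buffer.getvalue()
print(csv_text)
```

The resulting file has exactly two columns, Id and SalePrice, with no index column, which is why index=False is passed.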