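Redis Master-Replica Replication, Sentinel, and Cluster: Setup and Testing in One Article

In this article we prepare three virtual machines, install a Redis server on each, and then build master-replica replication, Sentinel, and a Redis Cluster in turn.

Below I walk through the setup in detail with screenshots and point out the pitfalls along the way. Let's begin.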

I. Installing the Redis Server

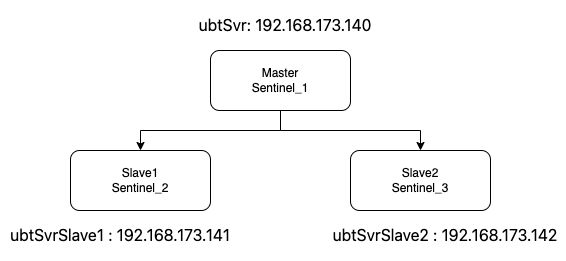

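1. Prepare three Ubuntu virtual machines:

The test environment below is Ubuntu Server 20.04; for CentOS, look up the installation steps yourself. Preparation:

Three Ubuntu Server 20.04 virtual machines (I use VMware on macOS). Architecture diagram:

2. Install Redis and Sentinel:

For convenience, install Redis and Sentinel with the apt package manager; the version is v5.0.7. Run the following command on each of the three machines: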

sudo apt install redis redis-sentinel

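After installation, check that the redis and sentinel ports are listening on all three machines, as in the red-boxed part of the screenshot below:

6379 is the default Redis port and 26379 is the default Sentinel port.

Then check on each machine that the redis and sentinel processes are running:

Once both the processes and the ports look right, the Redis services have started successfully (Redis and Sentinel are two independent services).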
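If you prefer a scripted check over reading screenshots, the port test can be sketched in a few lines of Python. This is only a minimal sketch: the helper name is_listening is my own, and it only proves that something accepts TCP connections on the port, not that it is actually Redis.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, unreachable host, and timeouts.
        return False


if __name__ == "__main__":
    # 6379 is the Redis default port, 26379 the Sentinel default port.
    for port in (6379, 26379):
        state = "listening" if is_listening("127.0.0.1", port) else "not listening"
        print(f"port {port}: {state}")
```

Run it on each of the three machines; both ports should report listening before you move on.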

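3. Edit the Redis configuration file:

Edit redis.conf on each of the three machines: sudo vim /etc/redis/redis.conf

# Change the bind addresses; the lines below bind Redis to the loopback address and the machine's own IP

# Each machine's redis.conf needs only one bind xxx xxx line (the trailing # notes here are annotations for the reader; redis.conf does not accept a comment after a directive, so leave them out of the file)

bind 127.0.0.1 192.168.173.140 # use this line on the master machine

bind 127.0.0.1 192.168.173.141 # use this line on the slave1 machine

bind 127.0.0.1 192.168.173.142 # use this line on the slave2 machine

# Keep protected mode on

protected-mode yes

# yes runs Redis as a background (daemon) process

daemonize yes

# Set to yes to enable AOF persistence; with aof-use-rdb-preamble yes (the default in 5.x), AOF rewrites use the hybrid RDB + AOF format

appendonly yes

# Require a password. Protected mode only blocks remote clients when neither a bind address nor a password is configured; because Redis is bound to a LAN address here, set a password explicitly

# Add the following line below the "# requirepass foobared" line in redis.conf

requirepass 123456

Then restart Redis on all three machines: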

sudo systemctl restart redis-server.service

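Use the method from step 2 (Install Redis and Sentinel) to confirm again that the redis process is running and its port is listening.

Note:

1. Set requirepass to the same password in all three redis.conf files so that replicas and sentinels can authenticate easily.

2. A Redis built from source has no systemd unit, so systemctl restart does not work. Stop it with kill -15 redisPID, then start it again with /path/to/redis-server /path/to/redis.conf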

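II. Configuring Redis Master-Replica Replication

1. Configure replication:

Run the following commands on each of the two slaves:

# Log in to the local Redis; by default redis-cli connects to 127.0.0.1:6379

redis-cli -a 123456

# Make the local Redis a replica of the master at this IP and port

127.0.0.1:6379> REPLICAOF 192.168.173.140 6379

OK

# The master's password, i.e. the requirepass 123456 value from redis.conf above

127.0.0.1:6379> CONFIG SET masterauth 123456

OK

Replication configured with commands is temporary and is lost when Redis restarts. To make it permanent, edit redis.conf on the two slaves: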

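# Add the following line below the "# replicaof" line in redis.conf

replicaof 192.168.173.140 6379

# Add the following line below "# masterauth"

masterauth 123456

Note:

Set masterauth 123456 in all three redis.conf files.

If the master's own configuration lacks it, then after a failover the old master cannot authenticate to the new master when it rejoins as a replica.

After editing the configuration, restart Redis on all three machines: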

sudo systemctl restart redis-server.service

2. Check the replication info:

Log in to Redis on the master and view the info:

redis-cli -a 123456

127.0.0.1:6379> INFO replication

# Replication

role:master

connected_slaves:2 # two replicas are connected; the lines below give each one's IP and port, state, current replication offset, and lag (replication delay in seconds)

slave0:ip=192.168.173.141,port=6379,state=online,offset=1386,lag=0

slave1:ip=192.168.173.142,port=6379,state=online,offset=1386,lag=1

master_replid:9233b2810b60541532a1ea8fa083da081c5b5fee # the master's replication ID

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:1386 # the master's current write offset

...

Log in to Redis on each of the two slaves and view the info:

redis-cli -a 123456

127.0.0.1:6379> INFO replication

# Replication

role:slave

master_host:192.168.173.140

master_port:6379

master_link_status:up # connected to the master

master_last_io_seconds_ago:4

master_sync_in_progress:0

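slave_repl_offset:1386 # the replica's current offset; it should match the master's master_repl_offset

slave_priority:100

slave_read_only:1 # replicas are read-only by default; only data replicated from the master is written to them

connected_slaves:0 # a replica can itself have replicas attached (cascading replication), acting as their master

master_replid:9233b2810b60541532a1ea8fa083da081c5b5fee # the master's replication ID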

master_replid2:0000000000000000000000000000000000000000

...
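Comparing offsets by eye gets tedious, so here is a hedged sketch of how the INFO replication text could be parsed to see how far each replica is behind. The function names parse_info and replica_fields are hypothetical helpers of mine, and the sample text is a trimmed copy of the master output above with the annotations removed.

```python
def parse_info(text: str) -> dict:
    """Parse 'key:value' lines of INFO output, skipping blanks and '# Section' headers."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        key, _, value = line.partition(":")
        info[key] = value
    return info


def replica_fields(value: str) -> dict:
    """Split a slaveN value such as 'ip=...,port=...,offset=...' into a dict."""
    return dict(item.split("=", 1) for item in value.split(","))


master_info = parse_info("""
# Replication
role:master
connected_slaves:2
slave0:ip=192.168.173.141,port=6379,state=online,offset=1386,lag=0
slave1:ip=192.168.173.142,port=6379,state=online,offset=1386,lag=1
master_repl_offset:1386
""")

master_offset = int(master_info["master_repl_offset"])
for name in ("slave0", "slave1"):
    fields = replica_fields(master_info[name])
    behind = master_offset - int(fields["offset"])
    print(f"{fields['ip']}: state={fields['state']} behind={behind} bytes")
```

In a real check you would feed it the output of redis-cli INFO replication instead of the hard-coded sample.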

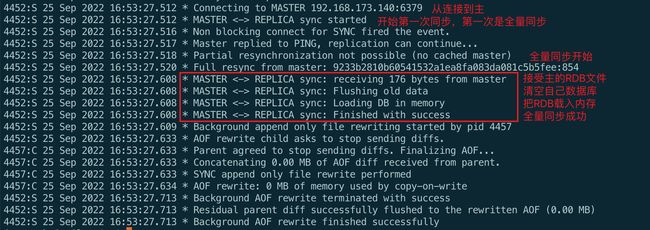

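3. Check the replication log:

The replica's log is at /var/log/redis/redis-server.log:

The internals of Redis replication will be covered in a separate article; this one focuses on hands-on practice.

4. Test data synchronization:

Add two key-value pairs on the master (name: Tom, age: 22). If both slaves can read them, replication is working:

III. Configuring Redis Sentinel

1. Edit the Sentinel configuration file:

Change the configuration of all three sentinels as follows (sudo vim /etc/redis/sentinel.conf):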

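# Comment out the following line

#bind 127.0.0.1 ::1

# Turn protected mode off; I could not find a requirepass option in the v5.0.7 sentinel.conf to set a password, so protected mode is disabled instead

protected-mode no

# Run as a background process

daemonize yes

# Core setting: change the line "sentinel monitor mymaster 127.0.0.1 6379 2" to the one below

# It tells Sentinel which node to monitor as the master. Arguments: master alias, master IP, master port, quorum

# A quorum of 2 means at least 2 of the 3 sentinels must agree that the master is unreachable before Sentinel takes the next step, such as marking the master objectively down

sentinel monitor mymaster 192.168.173.140 6379 2

# The Redis nodes have a password, so add this line below the sentinel monitor mymaster xxx line:

sentinel auth-pass mymaster 123456

Note:

If you keep a bind line instead of commenting it out, put the machine's own IP first, e.g. bind 192.168.173.140 127.0.0.1. Otherwise the sentinels may fail to find each other. Alternatively, simply comment out the bind line [Note 1].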
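The quorum in sentinel monitor is easy to confuse with the votes needed for the failover itself. The sketch below reflects my reading of the Sentinel documentation: the quorum decides when the master is flagged objectively down, while the sentinel that leads the failover needs votes from a majority of all sentinels, and at least quorum votes if the quorum is larger than the majority. The function names are hypothetical.

```python
def can_mark_odown(agreeing_sentinels: int, quorum: int) -> bool:
    """The master is objectively down once at least `quorum` sentinels see it as down."""
    return agreeing_sentinels >= quorum


def votes_needed_for_failover(total_sentinels: int, quorum: int) -> int:
    """Votes a sentinel needs to lead the failover: the larger of majority and quorum."""
    majority = total_sentinels // 2 + 1
    return max(majority, quorum)


# The setup in this article: 3 sentinels, quorum 2.
print(can_mark_odown(agreeing_sentinels=2, quorum=2))
print(votes_needed_for_failover(total_sentinels=3, quorum=2))
```

With 3 sentinels and a quorum of 2, losing one sentinel still leaves enough votes for a failover; losing two does not.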

2. Restart all three sentinels (in the order master, slave1, slave2):

sudo systemctl restart redis-sentinel.service

3. Check the Sentinel info:

Log in to Sentinel on the master machine and view its info:

redis-cli -p 26379

127.0.0.1:26379> info sentinel

# Sentinel

sentinel_masters:1

sentinel_tilt:0

sentinel_running_scripts:0

sentinel_scripts_queue_length:0

sentinel_simulate_failure_flags:0

master0:name=mymaster,status=ok,address=192.168.173.140:6379,slaves=2,sentinels=3 # sentinels=1 would mean the sentinels cannot discover each other

# Show the master's details

127.0.0.1:26379> sentinel master mymaster

...

# Show the two replicas' details

127.0.0.1:26379> sentinel slaves mymaster

...

# Show the other two sentinels' details

127.0.0.1:26379> sentinel sentinels mymaster

...

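Note:

If the three sentinels cannot discover each other, check whether the sentinel myid xxx value is identical in the three configuration files. If it is, delete the myid line from each file and restart the three sentinels one by one; each generates a new random ID and writes it to its own configuration file.

The three sentinel logs (/var/log/redis/redis-sentinel.log) also show events such as +monitor, +sentinel, and +slave, which indicate that the sentinels have discovered the master, the replicas, and the other sentinels.

4. Test failover:

Run on the master machine: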

redis-cli -a 123456 DEBUG sleep 100

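This debug command puts the server to sleep for 100 seconds, simulating the master going offline. After 30 seconds, each sentinel marks the master subjectively down (+sdown event); once enough sentinels agree, the master is marked objectively down (+odown event). The sentinels then elect a leader sentinel, which performs the failover. When the old master comes back online, it becomes a replica of the new master. See the following log:

Now log in to Sentinel on the 192.168.173.140 machine (by this point 192.168.173.140 has become a replica):

redis-cli -p 26379

127.0.0.1:26379> info sentinel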

# Sentinel

sentinel_masters:1

sentinel_tilt:0

sentinel_running_scripts:0

sentinel_scripts_queue_length:0

sentinel_simulate_failure_flags:0

master0:name=mymaster,status=ok,address=192.168.173.141:6379,slaves=2,sentinels=3 # the new master is 192.168.173.141
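To confirm a failover from a script rather than by reading the output, you could compare the address field of the master0 line before and after. The following is a small sketch under that assumption; parse_sentinel_master is a hypothetical helper, and the two input lines are copied from the info sentinel outputs in this article with the annotations removed.

```python
def parse_sentinel_master(line: str) -> dict:
    """Parse 'master0:name=...,status=...,address=ip:port,slaves=N,sentinels=N'."""
    _, _, body = line.partition(":")
    fields = dict(item.split("=", 1) for item in body.split(","))
    host, _, port = fields["address"].rpartition(":")
    return {
        "name": fields["name"],
        "status": fields["status"],
        "host": host,
        "port": int(port),
        "slaves": int(fields["slaves"]),
        "sentinels": int(fields["sentinels"]),
    }


before = parse_sentinel_master(
    "master0:name=mymaster,status=ok,address=192.168.173.140:6379,slaves=2,sentinels=3"
)
after = parse_sentinel_master(
    "master0:name=mymaster,status=ok,address=192.168.173.141:6379,slaves=2,sentinels=3"
)

failed_over = (before["host"], before["port"]) != (after["host"], after["port"])
print(f"failover happened: {failed_over}, new master: {after['host']}:{after['port']}")
```

The same parsed dict also exposes sentinels, which should stay at 3 in this setup.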

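IV. Configuring Redis Cluster

1. About Redis Cluster:

Like Sentinel, Redis Cluster is a high-availability solution. In addition, it shards data across nodes using 16384 hash slots, so reads and writes are spread over several masters and the cluster can handle higher concurrency. Each key maps to the slot CRC16(key) mod 16384. With the hash tag feature, only the content inside the first {xxx} pair of braces in a key is used for the CRC16 calculation, so a group of keys that share a hash tag is stored on the same Redis node. Without a hash tag, CRC16 is computed over the whole key.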
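The slot calculation is short enough to reproduce. Below is a minimal Python sketch, assuming the CRC16 variant Redis Cluster uses is CRC16-CCITT (XMODEM: polynomial 0x1021, initial value 0) and that an empty {} pair is ignored, which is how I understand the cluster specification. The function names are my own, not part of any Redis client.

```python
def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC16-CCITT (XMODEM): polynomial 0x1021, initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_hash_slot(key: str) -> int:
    """Map a key to one of the 16384 slots, honoring the first non-empty {hash tag}."""
    data = key.encode()
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            data = data[start + 1:end]  # hash only the tag content
    return crc16_xmodem(data) % 16384


for key in ("hello", "foo", "name.{user:1000}.foo", "age.{user:1000}.bar"):
    print(key, key_hash_slot(key))
```

The transcripts later in this article show hello going to slot 866 and foo to slot 12182, which is a handy cross-check; on a live node, CLUSTER KEYSLOT key returns the authoritative value.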

Cluster mode does not use Sentinel, so stop all the sentinel services first:

sudo systemctl stop redis-sentinel.service

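Also comment out the replicaof 192.168.173.140 6379 line in all three redis.conf files. The cluster needs 6 nodes, 3 masters and 3 replicas, with one master and one replica on each of the three machines. The architecture is as follows:

The 3 masters and 3 replicas are spread across the three machines on ports 7001 to 7006, as in the diagram above.

Note: all 6 Redis nodes that form the cluster must be empty.

2. Create the cluster:

Run on the ubtSvr machine: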

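sudo mkdir -pv /data/redis_cluster/{7001,7004} # create the cluster directories

sudo cp /etc/redis/redis.conf /data/redis_cluster/7001 # copy the configuration file

sudo cp /etc/redis/redis.conf /data/redis_cluster/7004 # copy the configuration file

# Edit each of the configuration files below

sudo vim /data/redis_cluster/7001/redis.conf

sudo vim /data/redis_cluster/7004/redis.conf

# Change the following options in each file (the trailing # notes are annotations for the reader; redis.conf does not accept a comment after a directive, so leave them out of the file)

# Changes for 7001

# bind xxx xxx # comment out this line; otherwise the cluster create command below may hang at "Waiting for the cluster to join", possibly for the same reason as Note 1 above

protected-mode no # turn protected mode off

port 7001 # change the port

cluster-enabled yes # enable cluster mode

cluster-config-file nodes-7001.conf # written automatically by the cluster node; do not edit it by hand

cluster-node-timeout 15000 # timeout in milliseconds for deciding that a cluster node is down

appendonly yes # enable AOF persistence

# Changes for 7004

# bind xxx xxx # comment out this line

protected-mode no # turn protected mode off

port 7004

cluster-enabled yes

cluster-config-file nodes-7004.conf

cluster-node-timeout 15000

appendonly yes

# Start the Redis nodes. They are started as root here to sidestep directory-permission problems; running Redis as root is not recommended in production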

sudo redis-server /data/redis_cluster/7001/redis.conf

sudo redis-server /data/redis_cluster/7004/redis.conf

Run on the ubtSvrSlave1 machine:

sudo mkdir -pv /data/redis_cluster/{7002,7005}

sudo cp /etc/redis/redis.conf /data/redis_cluster/7002

sudo cp /etc/redis/redis.conf /data/redis_cluster/7005

sudo vim /data/redis_cluster/7002/redis.conf

sudo vim /data/redis_cluster/7005/redis.conf

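# 7002

# bind xxx xxx # comment out this line

protected-mode no # turn protected mode off

port 7002 # change the port

cluster-enabled yes # enable cluster mode

cluster-config-file nodes-7002.conf # written automatically by the cluster node; do not edit it by hand

cluster-node-timeout 15000 # timeout in milliseconds for deciding that a cluster node is down

appendonly yes # enable AOF persistence

# 7005

# bind xxx xxx # comment out this line

protected-mode no # turn protected mode off

port 7005 # change the port

cluster-enabled yes # enable cluster mode

cluster-config-file nodes-7005.conf # written automatically by the cluster node; do not edit it by hand

cluster-node-timeout 15000 # timeout in milliseconds for deciding that a cluster node is down

appendonly yes # enable AOF persistence

# Start the Redis nodes. They are started as root here to sidestep directory-permission problems; running Redis as root is not recommended in production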

sudo redis-server /data/redis_cluster/7002/redis.conf

sudo redis-server /data/redis_cluster/7005/redis.conf

Run on the ubtSvrSlave2 machine:

sudo mkdir -pv /data/redis_cluster/{7003,7006}

sudo cp /etc/redis/redis.conf /data/redis_cluster/7003

sudo cp /etc/redis/redis.conf /data/redis_cluster/7006

sudo vim /data/redis_cluster/7003/redis.conf

sudo vim /data/redis_cluster/7006/redis.conf

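# 7003

# bind xxx xxx # comment out this line

protected-mode no # turn protected mode off

port 7003 # change the port

cluster-enabled yes # enable cluster mode

cluster-config-file nodes-7003.conf # written automatically by the cluster node; do not edit it by hand

cluster-node-timeout 15000 # timeout in milliseconds for deciding that a cluster node is down

appendonly yes # enable AOF persistence

# 7006

# bind xxx xxx # comment out this line

protected-mode no # turn protected mode off

port 7006 # change the port

cluster-enabled yes # enable cluster mode

cluster-config-file nodes-7006.conf # written automatically by the cluster node; do not edit it by hand

cluster-node-timeout 15000 # timeout in milliseconds for deciding that a cluster node is down

appendonly yes # enable AOF persistence

# Start the Redis nodes. They are started as root here to sidestep directory-permission problems; running Redis as root is not recommended in production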

sudo redis-server /data/redis_cluster/7003/redis.conf

sudo redis-server /data/redis_cluster/7006/redis.conf

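Note:

After the nodes above start, each one listens on two ports. Node 7001, for example, listens on 7001 and 17001: the former is the port that serves clients, and the latter (client port + 10000) is the cluster bus port, which the nodes use among themselves for failure detection, configuration updates, failover, and so on.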
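Since the six node configurations differ only in the port number, the per-node overrides can be generated instead of typed six times. This is a rough sketch: cluster_overrides and bus_port are hypothetical helper names, the option values are the ones used in this article, and it assumes the bus port is the client port plus 10000 as described above. It only prints the lines; you still have to apply them to each copied redis.conf and comment out bind by hand.

```python
def cluster_overrides(port: int) -> dict:
    """Options changed in each copied redis.conf for the cluster node on `port`."""
    return {
        "protected-mode": "no",
        "port": str(port),
        "cluster-enabled": "yes",
        "cluster-config-file": f"nodes-{port}.conf",
        "cluster-node-timeout": "15000",
        "appendonly": "yes",
    }


def bus_port(port: int) -> int:
    """Cluster bus port for a node: client port + 10000."""
    return port + 10000


for port in range(7001, 7007):
    print(f"# node {port} (cluster bus port {bus_port(port)})")
    for option, value in cluster_overrides(port).items():
        print(f"{option} {value}")
    print()
```

If a firewall sits between the machines, both the client port and the bus port printed here need to be reachable.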

The log of any node, for example 7001, shows that node's ID.

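Run the following command on the ubtSvr machine to create the cluster:

# Create the cluster. The first three nodes (7001, 7002, 7003) become masters and the last three become replicas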

redis-cli -a 123456 --cluster create \

192.168.173.140:7001 192.168.173.141:7002 192.168.173.142:7003 \

192.168.173.140:7004 192.168.173.141:7005 192.168.173.142:7006 \

--cluster-replicas 1

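Enter yes to continue:

Note:

Creating the cluster may fail with: [ERR] Node 192.168.173.140:7001 is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0. To fix it, kill the redis processes on all 3 machines and delete the persistence files and the cluster configuration files: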

sudo su

kill -15 $(ps -C redis-server -o pid=)

rm -f /var/lib/redis/{appendonly.aof,dump.rdb,nodes-*.conf}

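Press CTRL + D to leave the root shell, start the six nodes again, and then rerun the cluster create command above.

3. Test the cluster:

Run on ubtSvr:

# Connect to master node 7001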

redis-cli -a 123456 -c -p 7001

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:7001> set for bar

-> Redirected to slot [11274] located at 192.168.173.142:7003

OK

192.168.173.142:7003> set hello world

-> Redirected to slot [866] located at 192.168.173.140:7001

OK

192.168.173.140:7001> get for

-> Redirected to slot [11274] located at 192.168.173.142:7003

"bar"

192.168.173.142:7003> get hello

-> Redirected to slot [866] located at 192.168.173.140:7001

"world"

# Connect to replica node 7004; note that it is a replica of 192.168.173.142:7003

redis-cli -a 123456 -c -p 7004

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:7004> get hello

-> Redirected to slot [866] located at 192.168.173.140:7001

"world"

192.168.173.140:7001> get for

-> Redirected to slot [11274] located at 192.168.173.142:7003

"bar"

Run on ubtSvr to view the cluster information:

# View the cluster info

redis-cli -a 123456 -c -p 7001

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:7001> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:1173

cluster_stats_messages_pong_sent:1138

cluster_stats_messages_sent:2311

cluster_stats_messages_ping_received:1133

cluster_stats_messages_pong_received:1173

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:2311

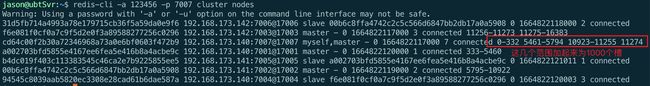

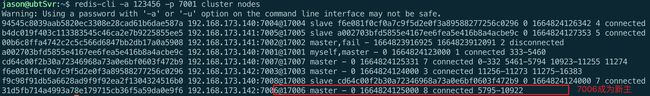

# List all nodes in the cluster

127.0.0.1:7001> cluster nodes

31d5fb714a4993a78e179715cb36f5a59da0e9f6 192.168.173.142:7006@17006 slave 00b6c8ffa4742c2c5c566d6847bb2db17a0a5908 0 1664812510000 6 connected

94545c8039aab5820ec3308e28cad61b6dae587a 192.168.173.140:7004@17004 slave f6e081f0cf0a7c9f5d2e0f3a89588277256c0296 0 1664812510117 4 connected

f6e081f0cf0a7c9f5d2e0f3a89588277256c0296 192.168.173.142:7003@17003 master - 0 1664812510000 3 connected 10923-16383

b4dc019f403c113383545c46ca2e7b9225855ee5 192.168.173.141:7005@17005 slave a002703bfd5855e4167ee6fea5e416b8a4acbe9c 0 1664812511124 5 connected

00b6c8ffa4742c2c5c566d6847bb2db17a0a5908 192.168.173.141:7002@17002 master - 0 1664812512131 2 connected 5461-10922

a002703bfd5855e4167ee6fea5e416b8a4acbe9c 192.168.173.140:7001@17001 myself,master - 0 1664812511000 1 connected 0-5460
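The cluster nodes output is dense, so a small parser helps when you want to see which master owns how many slots. This is a sketch under the assumption that each line has the layout shown above (ID, address, flags, master ID, ping, pong, epoch, link state, then slot ranges); parse_cluster_nodes is a hypothetical helper and the sample lines are the six from the output above.

```python
def parse_cluster_nodes(text: str) -> list:
    """Parse CLUSTER NODES output into a list of dicts with a slot count per node."""
    nodes = []
    for line in text.strip().splitlines():
        parts = line.split()
        if len(parts) < 8:
            continue
        node_id, addr, flags, master_id = parts[:4]
        slots = 0
        for token in parts[8:]:
            if token.startswith("["):  # skip migrating/importing markers
                continue
            start, _, end = token.partition("-")
            slots += int(end or start) - int(start) + 1
        nodes.append({
            "id": node_id,
            "addr": addr.split("@")[0],
            "role": "master" if "master" in flags.split(",") else "slave",
            "master_id": None if master_id == "-" else master_id,
            "slots": slots,
        })
    return nodes


sample = """
31d5fb714a4993a78e179715cb36f5a59da0e9f6 192.168.173.142:7006@17006 slave 00b6c8ffa4742c2c5c566d6847bb2db17a0a5908 0 1664812510000 6 connected
94545c8039aab5820ec3308e28cad61b6dae587a 192.168.173.140:7004@17004 slave f6e081f0cf0a7c9f5d2e0f3a89588277256c0296 0 1664812510117 4 connected
f6e081f0cf0a7c9f5d2e0f3a89588277256c0296 192.168.173.142:7003@17003 master - 0 1664812510000 3 connected 10923-16383
b4dc019f403c113383545c46ca2e7b9225855ee5 192.168.173.141:7005@17005 slave a002703bfd5855e4167ee6fea5e416b8a4acbe9c 0 1664812511124 5 connected
00b6c8ffa4742c2c5c566d6847bb2db17a0a5908 192.168.173.141:7002@17002 master - 0 1664812512131 2 connected 5461-10922
a002703bfd5855e4167ee6fea5e416b8a4acbe9c 192.168.173.140:7001@17001 myself,master - 0 1664812511000 1 connected 0-5460
"""

nodes = parse_cluster_nodes(sample)
for node in nodes:
    if node["role"] == "master":
        print(node["addr"], node["slots"])
print("total slots:", sum(n["slots"] for n in nodes))
```

A healthy cluster should report 16384 slots in total across its masters.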

4. Scale out the cluster:

Scaling out means adding two new nodes, one master and one replica (at least one replica). Add the master and replica on ubtSvr with the following commands:

sudo mkdir /data/redis_cluster/{7007,7008}

# Copy the configuration

sudo cp /data/redis_cluster/7001/redis.conf /data/redis_cluster/7007/

sudo cp /data/redis_cluster/7001/redis.conf /data/redis_cluster/7008/

# Change the following options for 7007 and 7008

# For 7007

port 7007

cluster-config-file nodes-7007.conf

# For 7008

port 7008

cluster-config-file nodes-7008.conf

# Start the two Redis instances

sudo redis-server /data/redis_cluster/7007/redis.conf

sudo redis-server /data/redis_cluster/7008/redis.conf

Add master node 7007 to the cluster (run on ubtSvr):

redis-cli -a 123456 --cluster add-node 192.168.173.140:7007 192.168.173.140:7001

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Adding node 192.168.173.140:7007 to cluster 192.168.173.140:7001

>>> Performing Cluster Check (using node 192.168.173.140:7001)

M: a002703bfd5855e4167ee6fea5e416b8a4acbe9c 192.168.173.140:7001

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 94545c8039aab5820ec3308e28cad61b6dae587a 192.168.173.140:7004

slots: (0 slots) slave

replicates f6e081f0cf0a7c9f5d2e0f3a89588277256c0296

S: b4dc019f403c113383545c46ca2e7b9225855ee5 192.168.173.141:7005

slots: (0 slots) slave

replicates a002703bfd5855e4167ee6fea5e416b8a4acbe9c

M: 00b6c8ffa4742c2c5c566d6847bb2db17a0a5908 192.168.173.141:7002

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: f6e081f0cf0a7c9f5d2e0f3a89588277256c0296 192.168.173.142:7003

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 31d5fb714a4993a78e179715cb36f5a59da0e9f6 192.168.173.142:7006

slots: (0 slots) slave

replicates 00b6c8ffa4742c2c5c566d6847bb2db17a0a5908

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.173.140:7007 to make it join the cluster.

[OK] New node added correctly.

Note:

If adding master node 7007 fails with [ERR] Node 192.168.173.140:7007 is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0., connect to the node and flush its data:

redis-cli -a 123456 -p 7007 flushall

Finally, rerun the "Add master node 7007 to the cluster" step above.

View the newly added master node (run on ubtSvr):

redis-cli -a 123456 -p 7007 cluster nodes

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

31d5fb714a4993a78e179715cb36f5a59da0e9f6 192.168.173.142:7006@17006 slave 00b6c8ffa4742c2c5c566d6847bb2db17a0a5908 0 1664820948226 2 connected

f6e081f0cf0a7c9f5d2e0f3a89588277256c0296 192.168.173.142:7003@17003 master - 0 1664820950241 3 connected 10923-11273 11275-16383

# The new node. Note the ID of node 7007, cd64c00f2b30a72346968a73a0e6bf0603f472b9; it is used below

cd64c00f2b30a72346968a73a0e6bf0603f472b9 192.168.173.140:7007@17007 myself,master - 0 1664820948000 7 connected 11274

a002703bfd5855e4167ee6fea5e416b8a4acbe9c 192.168.173.140:7001@17001 master - 0 1664820949000 1 connected 0-5460

b4dc019f403c113383545c46ca2e7b9225855ee5 192.168.173.141:7005@17005 slave a002703bfd5855e4167ee6fea5e416b8a4acbe9c 0 1664820946209 1 connected

00b6c8ffa4742c2c5c566d6847bb2db17a0a5908 192.168.173.141:7002@17002 master - 0 1664820949232 2 connected 5461-10922

94545c8039aab5820ec3308e28cad61b6dae587a 192.168.173.140:7004@17004 slave f6e081f0cf0a7c9f5d2e0f3a89588277256c0296 0 1664820947000 3 connected

If 7007 has accidentally ended up as a replica, for example:

cd64c00f2b30a72346968a73a0e6bf0603f472b9 192.168.173.140:7007@17007 myself,slave f6e081f0cf0a7c9f5d2e0f3a89588277256c0296 0 1664814817000 0 connected

run a manual failover to turn 7007 back into a master:

redis-cli -a 123456 -p 7007 cluster failover

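Assign slots to the new master (the command can be run from any machine that can reach the cluster; here it is run on ubtSvrSlave1):

redis-cli -a 123456 --cluster reshard 192.168.173.140:7007

Enter the number of slots to move and the ID of node 7007:

View the resulting slot allocation on 7007 (run on ubtSvr):

Add replica node 7008 (run on ubtSvr, likewise below):

redis-cli -a 123456 -p 7008 flushall # flush it first

# cd64c00f2b30a72346968a73a0e6bf0603f472b9 is the ID of 7007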

redis-cli -a 123456 --cluster add-node 192.168.173.140:7008 192.168.173.140:7007 --cluster-slave --cluster-master-id cd64c00f2b30a72346968a73a0e6bf0603f472b9

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Adding node 192.168.173.140:7008 to cluster 192.168.173.140:7007

>>> Performing Cluster Check (using node 192.168.173.140:7007)

M: cd64c00f2b30a72346968a73a0e6bf0603f472b9 192.168.173.140:7007

slots:[0-332],[5461-5794],[10923-11255],[11274] (1001 slots) master

S: 31d5fb714a4993a78e179715cb36f5a59da0e9f6 192.168.173.142:7006

slots: (0 slots) slave

replicates 00b6c8ffa4742c2c5c566d6847bb2db17a0a5908

M: f6e081f0cf0a7c9f5d2e0f3a89588277256c0296 192.168.173.142:7003

slots:[11256-11273],[11275-16383] (5127 slots) master

1 additional replica(s)

M: a002703bfd5855e4167ee6fea5e416b8a4acbe9c 192.168.173.140:7001

slots:[333-5460] (5128 slots) master

1 additional replica(s)

S: b4dc019f403c113383545c46ca2e7b9225855ee5 192.168.173.141:7005

slots: (0 slots) slave

replicates a002703bfd5855e4167ee6fea5e416b8a4acbe9c

M: 00b6c8ffa4742c2c5c566d6847bb2db17a0a5908 192.168.173.141:7002

slots:[5795-10922] (5128 slots) master

1 additional replica(s)

S: 94545c8039aab5820ec3308e28cad61b6dae587a 192.168.173.140:7004

slots: (0 slots) slave

replicates f6e081f0cf0a7c9f5d2e0f3a89588277256c0296

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.173.140:7008 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 192.168.173.140:7007.

[OK] New node added correctly.

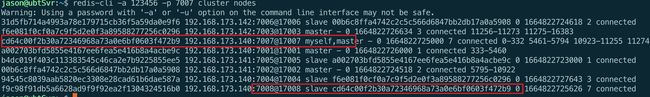

View the node info:

The cluster now has 8 nodes, 4 masters and 4 replicas. Test get/set:

# The 4 SETs below store data on the 4 masters 7001, 7002, 7003, and 7007

redis-cli -c -a 123456 -p 7001

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:7001> set for me

-> Redirected to slot [11274] located at 192.168.173.140:7007

OK

192.168.173.140:7007> set foo bar

-> Redirected to slot [12182] located at 192.168.173.142:7003

OK

192.168.173.142:7003> set hello world

-> Redirected to slot [866] located at 192.168.173.140:7001

OK

192.168.173.140:7001> set name Tom

-> Redirected to slot [5798] located at 192.168.173.141:7002

OK

# Read the 4 key-value pairs

redis-cli -c -a 123456 -p 7008

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:7008> get for

-> Redirected to slot [11274] located at 192.168.173.140:7007

"me"

192.168.173.140:7007> get foo

-> Redirected to slot [12182] located at 192.168.173.142:7003

"bar"

192.168.173.142:7003> get hello

-> Redirected to slot [866] located at 192.168.173.140:7001

"world"

192.168.173.140:7001> get name

-> Redirected to slot [5798] located at 192.168.173.141:7002

"Tom"

5. Scale in the cluster:

Try deleting node 7007:

redis-cli -a 123456 --cluster del-node 127.0.0.1:7001 cd64c00f2b30a72346968a73a0e6bf0603f472b9

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Removing node cd64c00f2b30a72346968a73a0e6bf0603f472b9 from cluster 127.0.0.1:7001

[ERR] Node 192.168.173.140:7007 is not empty! Reshard data away and try again.

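As shown above, deleting a non-empty node fails. The slots on 7007 must first be moved away (resharded to the other masters) before the node can be deleted.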
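One way to plan that move is to split 7007's slots evenly over the remaining masters and generate one reshard command per target. The sketch below only builds the command strings; it does not run them. The helper names are hypothetical, the 1001 slot count and node IDs are taken from the outputs earlier in this article, and the non-interactive flags (--cluster-from, --cluster-to, --cluster-slots, --cluster-yes) are the ones I believe redis-cli 5.x offers, so confirm them with redis-cli --cluster help before relying on this.

```python
def split_slots(total: int, targets: list) -> dict:
    """Spread `total` slots over the targets as evenly as possible."""
    base, extra = divmod(total, len(targets))
    return {t: base + (1 if i < extra else 0) for i, t in enumerate(targets)}


def reshard_commands(entry: str, source_id: str, plan: dict, password: str) -> list:
    """Build one non-interactive reshard command per target master (not executed)."""
    return [
        f"redis-cli -a {password} --cluster reshard {entry} "
        f"--cluster-from {source_id} --cluster-to {target_id} "
        f"--cluster-slots {count} --cluster-yes"
        for target_id, count in plan.items()
    ]


node_7007 = "cd64c00f2b30a72346968a73a0e6bf0603f472b9"
remaining_masters = [
    "a002703bfd5855e4167ee6fea5e416b8a4acbe9c",  # 7001
    "00b6c8ffa4742c2c5c566d6847bb2db17a0a5908",  # 7002
    "f6e081f0cf0a7c9f5d2e0f3a89588277256c0296",  # 7003
]

plan = split_slots(1001, remaining_masters)  # 7007 held 1001 slots after the reshard
for cmd in reshard_commands("192.168.173.140:7001", node_7007, plan, "123456"):
    print(cmd)
```

Once 7007 owns no slots, the del-node command shown above should succeed; its replica 7008 would need to be removed or reassigned as well.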

6. Test failover:

Suppose master node 7002 fails:

redis-cli -a 123456 -p 7002 debug segfault

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

Error: Server closed the connection

View the redis log:

View the current node info:

7. Using hash tags:

In a cluster, the part of a key wrapped in {} is its hash tag. Take the following keys:

name.{user:1000}.foo

age.{user:1000}.bar

These two keys map to the same slot: with a hash tag, only the content inside {} is hashed, so different keys with the same hash tag land in the same slot. For example:

redis-cli -c -a 123456 -p 7007

127.0.0.1:7007> set name.{user:1000}.foo Jerry

-> Redirected to slot [1649] located at 192.168.173.140:7001

OK

192.168.173.140:7001> set age.{user:1000}.bar 222

OK

# Both keys are in the same slot, 1649, so multi-key operations work

192.168.173.140:7001> mget age.{user:1000}.bar name.{user:1000}.foo

1) "222"

2) "Jerry"

# mset works too

192.168.173.140:7001> mset login_{uid:1001} abc reg_{uid:1001} 123

OK

# Without a hash tag the keys hash to different slots and the command fails

192.168.173.140:7001> mset login_1 abc reg_2 123

(error) CROSSSLOT Keys in request don't hash to the same slot

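So the benefit of hash tags is support for multi-key operations (such as mget and mset): data that shares a trait (for example, belonging to the same user) lands on the same node, which makes it easier to manage.

Note, however, that hash tags need enough variety. The {user:1000} tag above is based on the user ID, so different users map to different slots, e.g. {user:1000}, {user:1001}, {user:2000}. Do not use a constant hash tag such as {user:id}: it never changes, so a large number of keys pile up in a single slot, skewing the data and overloading one node.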
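The skew is easy to see offline by counting how many distinct slots a batch of keys occupies. The sketch below is an illustration, not a benchmark. It assumes Python's binascii.crc_hqx with an initial value of 0 computes the same CRC16 (CCITT/XMODEM) that Redis Cluster uses, and the key patterns and the key_hash_slot helper are made up for the example.

```python
import binascii
from collections import Counter


def key_hash_slot(key: str) -> int:
    """Slot for a key: CRC16 of the first non-empty {hash tag}, else of the whole key."""
    data = key.encode()
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end > start + 1:
            data = data[start + 1:end]
    return binascii.crc_hqx(data, 0) % 16384


# Tag varies per user: keys spread over many slots.
per_user = Counter(key_hash_slot(f"name.{{user:{uid}}}.foo") for uid in range(1000, 2000))

# Constant tag: every key hashes "user:id" and lands in one slot.
constant = Counter(key_hash_slot(f"name.{{user:id}}.{uid}") for uid in range(1000, 2000))

print("distinct slots with per-user tags:", len(per_user))
print("distinct slots with a constant tag:", len(constant))
```

With the constant tag, all 1000 keys share one slot and therefore one master, which is exactly the imbalance described above.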

References:

https://redis.io/docs/manual/sentinel/

https://redis.io/docs/manual/scaling/

https://blog.csdn.net/liucy007/article/details/121227295