计算机网络之传输层

文章目录

-

- 1. Introduction and Transport Services (介绍与传输层服务)

-

- 1.1 Relation Between Transport and Network Layers

- 1.2 Overview of the Transport Layer in the Internet(网络中的传输层概览)

- 2. Mutiplexing and Demultiplexing(多路复用与多路分解)

- 3. UDP(用户数据报协议):无连接传输***

- 4. Principles of Reliable Data Transfer(可靠数据传输的原理)

-

- 4.1 构造可靠数据传输协议(略)

- 4.2 Pipelined Reliable Data Transfer Protocols(流水线可靠数据传输协议)

- 4.3 Go-Back-N(回退N)

- 4.4 Selective Repeat (SR,选择重传)

- 5. TCP(传输控制协议):面向连接传输

-

- 5.1 The TCP Connection

- 5.2 TCP Segment Structure(TCP报文段结构)***

- 5.3 Round-Trip Estimation and Timeout(往返时间的估计与超时)

- 5.4 Reliable Data Transfor(可靠数据传输)***

- 5.5 Flow Control

- 5.5 TCP Connection Management(TCP连接管理):三次握手与四次挥手******

- 6. Principles of Congestion Control(拥塞控制原理)

-

- 6.1 介绍

- 6.2 The Causes and the Costs of Congestion(拥塞原因与代价)

- 6.3 Approaches to Congestion Control(拥塞控制方法)

- 6.4 其它

- 7. TCP Congestion Control(TCP拥塞控制)***

Reference:

- Computer Networking:A Top-Down Approach,7-th

- PPT of NEU(lx)

1. Introduction and Transport Services (介绍与传输层服务)

应用进程之间的通信(端到端的通信):

两个主机进行通信实际上就是两个主机的应用进程互相通信;

1.1 Relation Between Transport and Network Layers

- 在协议栈中,传输层刚好位于网络层之上:传输层协议提供了在不同主机上运行的进程之间的逻辑通信,而网络层协议提供了主机之间的逻辑通信。

- The services that a transport protocol can provide are often constrained by the service model of the underlying network-layer protocol.

- If the network-layer protocol cannot provide delay or bandwidth guarantees for transport-layer segments sent between hosts, then the transport-layer protocol cannot provide delay or bandwidth guarantees for application messages sent between processes.

1.2 Overview of the Transport Layer in the Internet(网络中的传输层概览)

- 从通信和信息处理的角度看:传输层向它上面的应用层提供通信服务,它属于面向通信部分的最高层、用户功能的最底层。

- User Datagram Protocol (UDP,用户数据报协议): provides an unreliable, connectionless service to the invoking application. (RFC: Datagram,数据报)

- Process-to-process data delivery and error checking only.

- Transmission Control Protocol (TCP,传输控制协议): provides a reliable, connection-oriented service to the invoking application. (RFC: Segment,报文段)

- Congestion control(拥塞控制)

- Flow control(流量控制)

- Connection setup(连接建立)

- The most fundamental responsibility of UDP and TCP is to extend IP’s delivery service between two end systems to a delivery service between two processes running on the end systems.

- 运输协议数据单元TPDU (Transport Protocol Data Unit): 两个对等运输实体在通信时传送的数据单位。

- TCP传送的数据单位协议是 TCP 报文段(segment);

- UDP传送的数据单位协议是 UDP 报文或用户数据报;

传输层的端口

-

运行在计算机中的进程是用进程标识符(pid)来标志的;

-

运行在应用层的各种应用进程却不应当让计算机操作系统指派它的进程标识符:

- 这是因为在因特网上使用的计算机的操作系统种类很多,而不同的操作系统又使用不同格式的进程标识符。

-

为了使运行不同操作系统的计算机的应用进程能够互相通信,就必须用统一的方法对 TCP/IP 体系的应用进程进行标志;

需要解决的问题:

由于进程的创建和撤销都是动态的,发送方几乎无法识别其他机器上的进程

有时我们会改换接收报文的进程,但并不需要通知所有发送方

我们往往需要利用目的主机提供的功能来识别终点,而不需要知道实现这个功能的进程

-

解决问题的方法就是在运输层使用协议端口号(protocol port number),或通常简称为端口(port);

- 端口用一个 16 位端口号进行标志;

- 端口号只具有本地意义,即端口号只是为了标志本计算机应用层中的各进程,在因特网中不同计算机的相同端口号是没有联系的;

-

虽然通信的终点是应用进程,但我们可以把端口想象是通信的终点,因为我们只要把要传送的报文交到目的主机的某一个合适的目的端口,剩下的工作(即最后交付目的进程)就由TCP来完成;

-

软件端口和硬件端口

- 在协议栈层间的抽象的协议端口是软件端口(应用层的各种协议进程与运输实体进行层间交互的一种地址);

- 路由器或交换机上的端口是硬件端口(不同硬件设备进行交互的接口);

-

三类端口

- 熟知端口(well-known port numbers),数值一般为0~1023;

- Reserved for use by well-known application protocols such as HTTP (which uses port number 80) and FTP (which uses port number 21);

- 登记端口号,数值为1024~49151,为没有熟知端口号的应用程序使用的:

- 使用这个范围的端口号必须在IANA(互联网数字分配机构)登记,以防止重复;

- 客户端口号或短暂端口号,数值为49152~65535,留给客户进程选择暂时使用。当服务器进程收到客户进程的报文时,就知道了客户进程所使用的动态端口号。通信结束后,这个端口号可供其他客户进程以后使用。

- 熟知端口(well-known port numbers),数值一般为0~1023;

-

TCP的连接

-

TCP把连接作为最基本的抽象;

-

每一条TCP连接有两个端点;

-

TCP连接的端点不是主机,不是主机的IP地址,不是应用进程,也不是运输层的协议端口:

- TCP连接的端点叫做套接字(socket);

-

端口号拼接到 (contatenated with) IP地址即构成了套接字;

socket = (IP Address: Port Number)

每一条 TCP 连接唯一地被通信两端的两个端点(即两个套接字)所确定;

-

2. Mutiplexing and Demultiplexing(多路复用与多路分解)

-

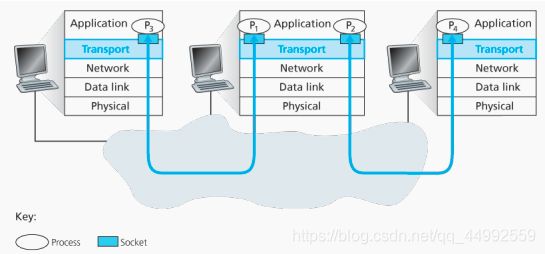

Multiplexing(多路复用):

- The job of gathering data chunks at the source host from different sockets, and then encapsulating each data chunk with header information (that will later be used in demultiplexing) to create segments, and passing the segments to the network layer is called multiplexing.

-

Demultiplexing(多路分解):

- This job of delivering the data in a transport-layer segment to the correct socket is called demultiplexing(运输层报文段的数据交付到正确的套接字的工作).

-

Transport-layer multiplexing and demultiplexing

- The transport layer in the receiving host does not actually deliver data directly to a process, but instead to an intermediary socket.

- Because at any given time there can be more than one socket in the receiving host, each socket has a unique identifier(the format of the identifier depends on whether the socket is a UDP or a TCP socket)

Connectionless Multiplexing and Demultiplexing(无连接的多路复用与多路分解)

-

When a UDP socket is created in this manner, the transport layer automatically assigns a port number to the socket.

- Typically, the client side of the application lets the transport layer automatically (and transparently) assign the port number, whereas the server side of the application assigns a specific port number.

-

When host receives UDP segement:

- Checks destination port number in segment;

- Directs UDP segment to socket with that port number;

-

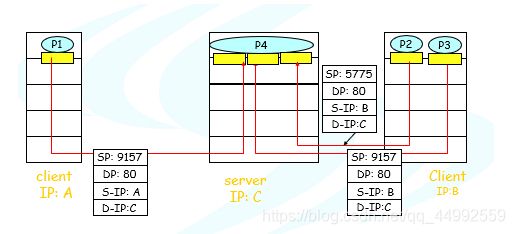

UDP socket identified by two-tuple: (dest IP address, dest port number)

- As a consequence, if two UDP segments have different source IP datagrams with different source IP addresses and/or source port numbers, but have the same destination IP address and destination port number, then the two segments will be directed to the same destination process via the same destination socket.

-

Connectionless demux:what is the purpose of the source port number?

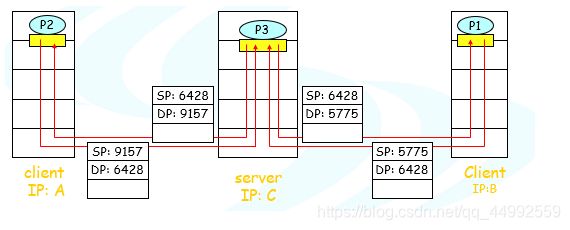

Connection-Oriented Multiplexing and Demultiplexing(面向连接的多路复用与多路分解)

-

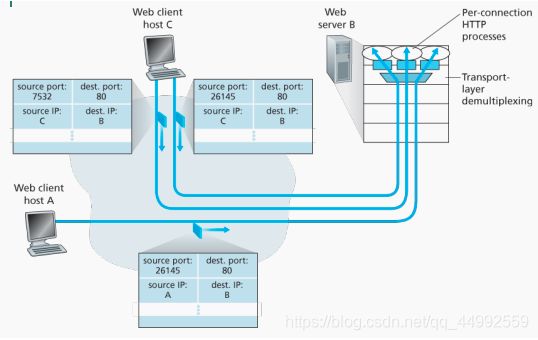

TCP socket identified by four-tuple: (source IP address, source port number,dest IP address, dest port number):

- Thus, when a TCP segment arrives from the network to a host, the host uses all four values to direct (demultiplex) the segment to the appropriate socket.

- In particular, and in contrast with UDP, two arriving TCP segments with different source IP addresses or source port numbers will (with the exception,例外 of a TCP segment carrying the original connection-establishment request) be directed to two different sockets.

-

Server host may support many simultaneous (并行的) TCP sockets:

- Each socket attached to a process;

- Each socket identified by its own 4-tuple;

-

Web servers have different sockets for each connecting client

-

Non-persistent (非持久) HTTP will have different socket for each request (http 短连接)

-

Connection-oriented demux:多进程Web服务器

- 两个客户使用相同的目的端口号 (80) 与同 Web 服务器应用通信

-

Connection-oriented demux:Multi-Threaded Web Server(多线程Web服务器)

- 连接套接字与进程之间不总是一一对应的,如多线程Web服务器

3. UDP(用户数据报协议):无连接传输***

-

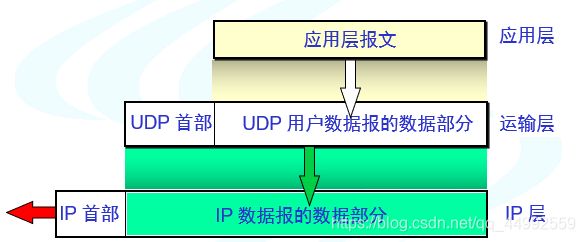

No-frills(不提供不必要服务的), bare-bones transport protocol.

- UDP: does just about as little as a transport protocol can do.

- Aside from (除…之外) the multiplexing/demultiplexing function and some light error checking, it adds nothing to IP.

- UDP: does just about as little as a transport protocol can do.

-

“Best-effort” service, UDP segments may be:

- lost;

- delivered out of order to app;

-

With UDP there is no handshaking between sending and receiving transport-layer entities before sending a segment.

-

为什么有些应用采用UDP?

- No connection establishment;

- 时延相对较低

- Simple: no connection state at sender, receiver;

- Small segment header

- 专门用于某种特定应用的服务器当应用程序运行在 UDP 之上而不是运行在 TCP 上时,一般都能支持更多的活跃客户

- No congestion control: UDP can blast(传输) away as fast as desired

- No connection establishment;

-

Often used for streaming multimedia apps: movie

- loss tolerant (容忍丢失)

- rate sensitive (速率敏感)

-

Other UDP Uses: DNS(域名服务器)\SNMP(简单网络管理协议) 使用UDP。

-

Reliable transfer over UDP(UDP上的可靠数据传输): add reliability at application layer (application-specific error recovery)

-

面向报文的UDP

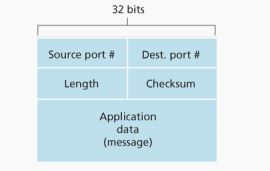

UDP Segment Structure(UDP 报文段结构)

-

The application data occupies the data field of the UDP segment.

- 可变长度

-

The port numbers allow the destination host to pass the application data to the correct process running on the destination end system(that is, to perform the demultiplexing function).

- 16bit,2bytes

-

The length field specifies the number of bytes in the UDP segment (header plus data).

- 16bits,2bytes

-

The checksum is used by the receiving host to check whether errors have been introduced into the segment.

- 16bits,2bytes

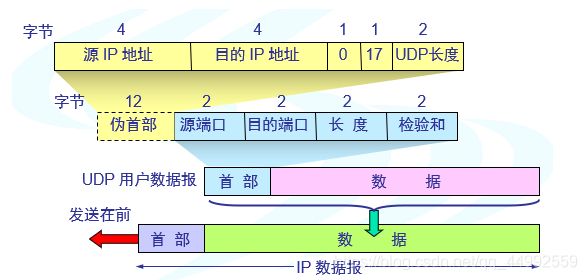

- 伪首部:用于计算校验和(只在传输层使用)

UDP校验和

- UDP 提供了差错检测

- UDP at the sender side performs the 1’s complement(1的补码,即反码) of the sum of all the 16-bit words(16比特字) in the segment, with any overflow(溢出) encountered during the sum being wrapped around.

As an example, suppose that we have the following three 16-bit words:

0110011001100000

0101010101010101

1000111100001100

The sum of first two of these 16-bit words is

0110011001100000

0101010101010101

1011101110110101

Adding the third word to the above sum gives

1011101110110101

1000111100001100

0100101011000001(溢出1,加0000000000000001)

0000000000000001

0100101011000010(加法)Note that this last addition had overflow, which was wrapped around(把溢出的最高位1和低16位做加法运算).

1’s component: 10110101 00111101

Although UDP provides error checking, it does not do anything to recover from an error.

4. Principles of Reliable Data Transfer(可靠数据传输的原理)

- The problem of implementing reliable data transfer occurs not only at the transport layer, but also at the link layer and the application layer as well(可靠数据传输的问题不仅会出现在传输层也会出现在链路层与应用层).

- Reliable Data Transfer: Service model and service implement(服务模型与服务实现)

The service abstraction provided to the upper-layer entities is that of a reliable channel through which data can be transferred.

It is the responsibility of a reliable data transfer protocol(可靠数据传输协议) to implement this service abstraction (This task is made difficult by the fact that the layer below the reliable data transfer protocol may be unreliable).

4.1 构造可靠数据传输协议(略)

- Reliable Data Transfer Protocol over a Perfectly Reliable Channel: rdt1.0

- Reliable Data Transfer over a Channel with Bit Errors: rdt2.0

- Reliable Data Transfer over a Lossy Channel with Bit Errors: rdt3.0

4.2 Pipelined Reliable Data Transfer Protocols(流水线可靠数据传输协议)

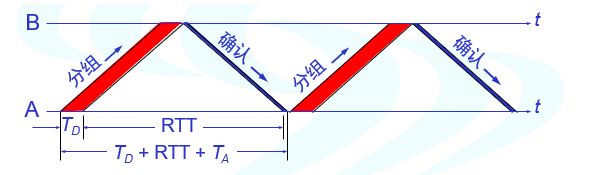

rdt 3.0 性能问题的核心在于它是一个停等协议

如何处理丢失、损坏与延时过大的分组。

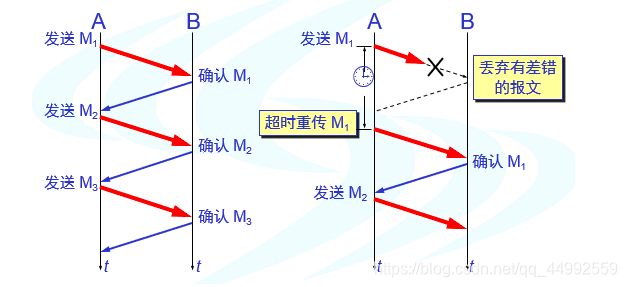

停止等待ARQ协议:

-

在发送完一个分组后,必须暂时保留已发送的分组的副本;

-

分组和确认分组都必须进行编号;

-

超时计时器的重传时间应当比数据在分组传输的平均往返时间更长一些;

-

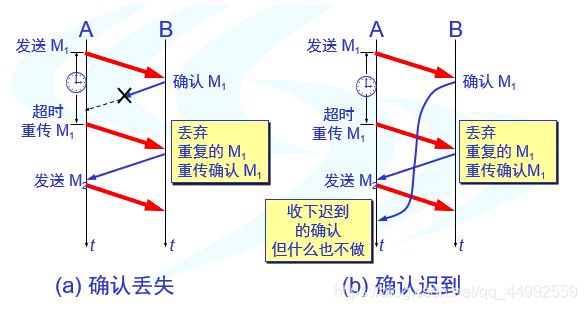

确认(ack)丢失与确认迟到:

-

可靠通信的实现:

1.使用上述的确认和重传机制,我们就可以在不可靠的传输网络上实现可靠的通信;

2.这种可靠传输协议常称为自动重传请求ARQ(Automatic Repeat re-Quest);

3.ARQ表明重传的请求是自动进行的,接收方不需要请求发送方重传某个出错的分组;

-

信道利用率:

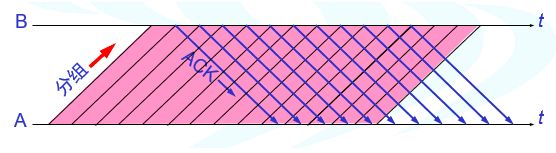

流水线协议:连续 ARQ 协议

-

发送方可连续发送多个分组,不必每发完一个分组就停顿下来等待对方的确认;

-

由于信道上一直有数据不间断地传送,这种传输方式可获得很高的信道利用率;

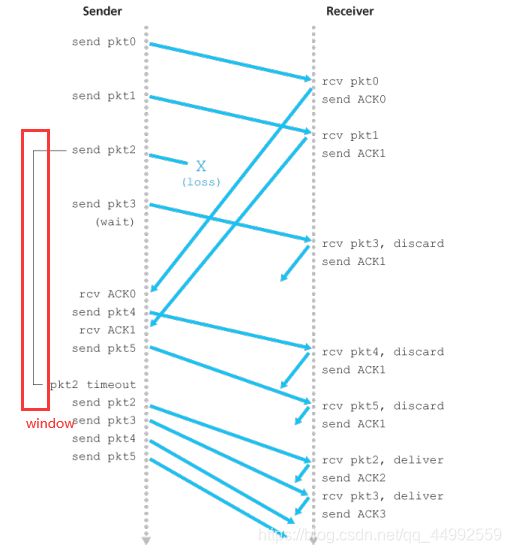

4.3 Go-Back-N(回退N)

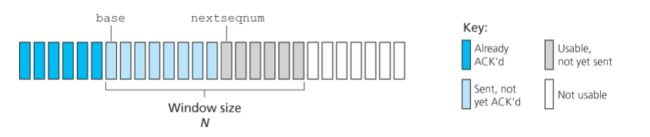

Go-Back-N(也称为滑动窗口协议,sliding-window-protocol)是解决流水线的差错恢复的方法,还有一种方法是选择重传(SR,selective repeat)。

在回退(GBN)协议中,允许发送方发送多个分组(当有多个分组可用时)而不需等待确认,但它也受限于在流水线中未确认的分组数不能超过某个最大允许数N。

- base:基序号,最早的未确认分组的序号

- nextseqnum:下一个序号,最小的可用序号

- N:窗口长度

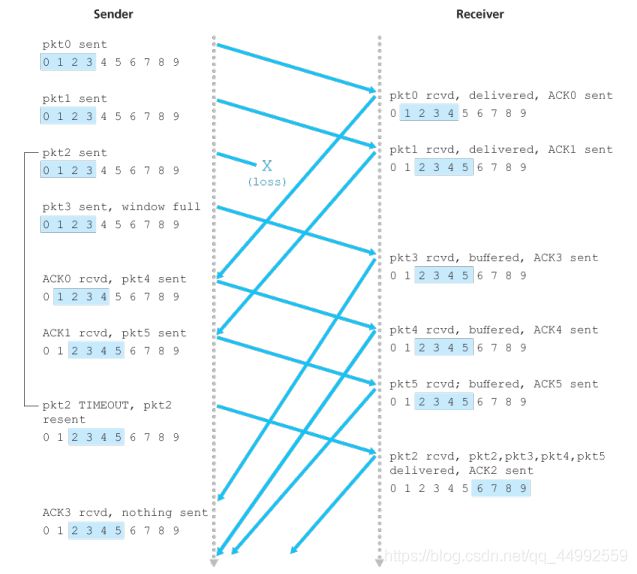

Figure shows the operation of the GBN protocol for the case of a window size of four packets.Because of this window size limitation(N=4), the sender sends packets 0 through 3 but then must wait for one or more of these packets to be acknowledged before proceeding. As each successive ACK (for example, ACK0 and ACK1 ) is received, the window slides forward and the sender can transmit one new packet (pkt4 and pkt5, respectively). On the receiver side, packet 2 is lost and thus packets 3, 4, and 5 are found to be out of order and are discarded.

- 如果发送方发送了前5个分组,而中间的第3个分组丢失了,这时接收方只能对前两个分组发出确认,发送方无法知道后面三个分组的下落,而只好把后面的三个分组都再重传一次;

- 这就叫做Go-back-N(回退N),表示需要再退回来重传已发送过的N个分组,可见当通信线路质量不好时,连续ARQ协议会带来负面的影响;

- 使用累积确认;

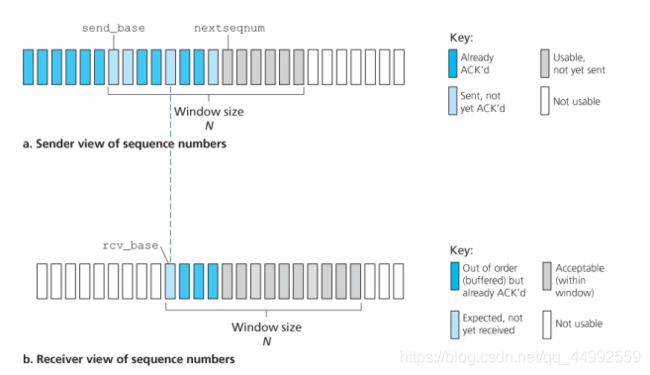

4.4 Selective Repeat (SR,选择重传)

5. TCP(传输控制协议):面向连接传输

5.1 The TCP Connection

-

TCP is said to be connection-oriented because before one application process can begin to send data to another, the two processes must first “handshake” with each other;

-

A TCP connection provides a full-duplex service;

-

A TCP connection is also always point-to-point, that is, between a single sender and a single receiver(“multicasting(多播)” isn’t possible with TCP);

-

Connection-establishment procedure: There-way handshake

- The client first sends a special TCP segment;

- The server responds with a second special TCP segment;

- And finally the client responds again with a third special segment;

-

Once a TCP connection is established, the two application processes can send data to each other:

- MSS(最大报文段长度): The maximum amount of data that can be grabbed from the send buffer(发送缓存) and placed in a segment is limited by the maximum segment size (MSS)

- Note that the MSS is the maximum amount of application-layer data in the segment, not the maximum size of the TCP segment including headers.

- MSS(最大报文段长度): The maximum amount of data that can be grabbed from the send buffer(发送缓存) and placed in a segment is limited by the maximum segment size (MSS)

-

- TCP连接是一条虚连接而不是一条真正的物理连接;

- TCP对应用进程一次把多长的报文发送到TCP的缓存中是不关心的;

- TCP根据对方给出的窗口值和当前网络拥塞的程度来决定一个报文段应包含多少个字节( UDP 发送的报文长度是应用进程给出的);

- TCP可把太长的数据块划分短一些再传送,TCP也可等待积累有足够多的字节后再构成报文段发送出去;

5.2 TCP Segment Structure(TCP报文段结构)***

-

Source and destination port numbers(源端口号和目的端口号):

- Both of them are 16-bits (2-bytes)

- Which are used for multiplexing/demultiplexing data from/to upper-layer applications.

-

Sequence number field and acknowledgment number field: 序号与确认号

-

Both of them are 32-bit (4-bytes);

-

Sequence number:

-

Acknowledgment number:

-

The acknowledgment number that Host A puts in its segment is the sequence number of the next byte Host A is expecting from Host B

Suppose that Host A has received all bytes numbered 0 through 535 from B and suppose that it is about to send a segment to Host B. Host A is waiting for byte 536 and all the subsequent bytes in Host B’s data stream. So Host A puts 536 in the acknowledgment number field of the segment it sends to B.

-

-

Used by the TCP sender and receiver in implementing a reliable data transfer service(用于实现可靠数据传输);

-

What does a host do when it receives out-of-order segments in a TCP connection? Interestingly, the TCP RFCs do not impose any rules here and leave the decision up to the programmers implementing a TCP implementation. There are basically two choices: either (1) the receiver immediately discards out-of-order segments (which, as we discussed earlier, can simplify receiver design), or (2) the receiver keeps the out-of-order bytes and waits for the missing bytes to fill in the gaps. Clearly, the latter choice is more efficient in terms of network bandwidth, and is the approach taken in practice.

-

-

Receive window(接收窗口): 16-bit

- Used for flow control(用于流量控制);

- It is used to indicate the number of bytes that a receiver is willing to accept.

-

Header length field(头部长度字段): 4-bit

- Specifies the length of the TCP header in 32-bit words (4-bytes 为计算单位).

- The TCP header can be of variable length due to the TCP options field(Typically, the options field is empty, so that the length of the typical TCP header is 20 bytes).

-

Flag field: 6 bits

-

The ACK bit is used to indicate that the value carried in the acknowledgment field is valid (That is, the segment contains an acknowledgment for a segment that has been successfully received)

-

The RST(Reset), SYN, and FIN bits are used for connection setup and teardow.

- 当 RST = 1 时,表明 TCP 连接中出现严重差错(如由于主机崩溃或其他原因),必须释放连接,然后再重新建立运输连接;

- 同步 SYN = 1 表示这是一个连接请求或连接接受报文;

- FIN = 1 表明此报文段的发送端的数据已发送完毕,并要求释放运输连接;

-

The PSH(Push) bit indicates that whether the receiver should pass the data to the upper layer immediately or not.

-

The URG bit is used to indicate that there is data in this segment that the sending-side upper-layer entity has marked as “urgent”(1: High priority)( The location of the last byte of this urgent data is indicated by the 16-bit urgent data pointer field).

-

-

The CWR and ECE bits are used in explicit congestion notification.

-

Checksum field:

- 检验和字段检验的范围包括首部和数据这两部分;

- 在计算检验和时,要在 TCP 报文段的前面加上 12 字节的伪首部;

-

Options field(选项字段): 可选与变长的

- MSS options

- Used when a sender and receiver negotiate(协商) the maximum segment size (MSS).

- 窗口扩大选项:3-bytes

- As a window scaling factor (窗口缩放因子) for use in high-speed networks;

- A time-stamping option (时间戳选项)

- 最主要的字段是时间戳值字段(4字节)和时间戳回送回答字段(4字节);

- 选择确认选项;

- MSS options

Cumulative acknowledgments (累积确认):

- 累积确认

- 接收方一般采用累积确认的方式:

- 即不必对收到的分组逐个发送确认,而是对按序到达的最后一个分组发送确认,这样就表示:到这个分组为止的所有分组都已正确收到;

- 优点:

- 容易实现,即使确认丢失也不必重传;

- 缺点:

- 不能向发送方反映出接收方已经正确收到的所有分组的信息;

- 接收方一般采用累积确认的方式:

Selective ACK

-

选择确认:

- 接收方收到了和前面的字节流不连续的两个字节块;

- 如果这些字节的序号都在接收窗口之内,那么接收方就先收下这些数据,但要把这些信息准确地告诉发送方,使发送方不要再重复发送这些已收到的数据;

-

RFC 2018 规定:

- 如果要使用选择确认,那么在建立TCP连接时,就要在TCP首部的选项中加上"允许SACK"的选项,而双方必须都事先商定好;

- 如果使用选择确认,那么原来首部中的“确认号字段”的用法仍然不变,只是以后在TCP报文段的首部中都增加了SACK选项,以便报告收到的不连续的字节块的边界;

- 由于首部选项的长度最多只有40字节,而指明一个边界(32bit)就要用掉4字节,因此在选项中最多只能指明4个字节块的边界信息;

5.3 Round-Trip Estimation and Timeout(往返时间的估计与超时)

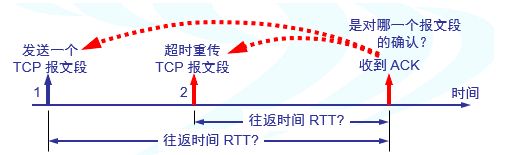

TCP uses a timeout/retransmit mechanism to recover from lost segments. Although this is conceptually simple, many subtle issues arise when we implement a timeout/retransmit mechanism in an actual protocol such as TCP. Perhaps the most obvious question is the length of the timeout intervals (超时间隔). Clearly, the timeout should be larger than the connection’s round-trip time (RTT), that is, the time from when a segment is sent until it is acknowledged. Otherwise, unnecessary retransmissions would be sent.

-

往返时延的方差很大:由于TCP的下层是一个互联网环境,IP数据报所选择的路由变化很大。因而运输层的往返时间的方差也很大;

-

Estimating the Round-Trip Time 估计往返时间

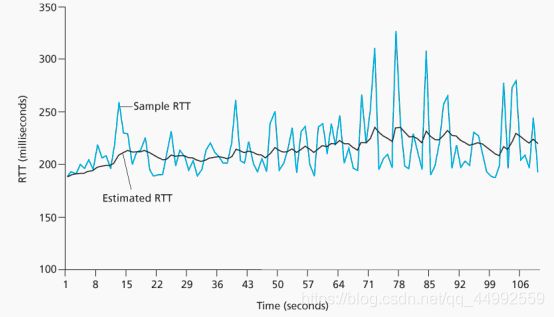

The sample RTT, denoted SampleRTT, for a segment is the amount of time between when the segment is sent (that is, passed to IP) and when an acknowledgment for the segment is received.

Obviously, the SampleRTT values will fluctuate from segment to segment due to congestion in the routers and to the varying load on the end systems. Because of this fluctuation, any given SampleRTT value may be atypical.

In order to estimate a typical RTT, it is therefore natural to take some sort of average of the SampleRTT values. TCP maintains an average, called EstimatedRTT , of theSampleRTT values.

Upon obtaining a new SampleRTT , TCP updates EstimatedRTT according to the following formula: (加权平均往返时间 or 平滑的往返时间 R T T s RTT_s RTTs)

EstimatedRTT=(1- α \alpha α)·EstimatedRTT+ α \alpha α·SampleRTT

The recommended value of α α α is $\alpha $ = 0.125 (that is, 1/8).

EstimatedRTT is a weighted average of the SampleRTT values. This weighted average puts more weight on recent samples than on old samples. as the more recent samples better reflect the current congestion in the network. In statistics, such an average is called an exponential weighted moving average (EWMA) (指数加权移动平均).

In addition to having an estimate of the RTT, it is also valuable to have a measure of the variability of the RTT. the RTT variation, DevRTT , as an estimate of how much SampleRTT typically deviates (偏离) from EstimatedRTT:RTT的偏差的加权平均值, R T T D RTT_D RTTD

DevRTT=(1−β)⋅DevRTT+β⋅|SampleRTT−EstimatedRTT|**

Note that DevRTT is an EWMA of the difference between SampleRTT and EstimatedRTT . If the SampleRTT values have little fluctuation (波动), then DevRTT will be small; on the other hand, if there is a lot of fluctuation, DevRTT will be large. The recommended value of β is 0.25.

-

Setting and Managing the Retransmission Timeout Interval

Given values of EstimatedRTT and DevRTT , what value should be used for TCP’s timeout interval?

Clearly, the interval should be greater than or equal to EstimatedRTT , or unnecessary retransmissions would be sent. But the timeout interval should not be too much larger than EstimatedRTT ; otherwise, when a segment is lost, TCP would not quickly retransmit the segment, leading to large data transfer delays.

It is therefore desirable to set the timeout equal to the EstimatedRTT plus some margin. The margin should be large when there is a lot of fluctuation in the SampleRTT values; it should be small when there is little fluctuation. The value of DevRTT should thus come into play here.

All of these considerations are taken into account in TCP’s method for determining the retransmission timeout interval: 超时重传时间(RetransmissionTime-Out, RTO)

TimeoutInterval=EstimatedRTT+4⋅DevRTT**

An initial TimeoutInterval value of 1 second is recommended.

-

Karn 算法:

- TCP never computes a SampleRTT for a segment that has been retransmitted; it only measures SampleRTT for segments that have been transmitted once.

- 修正的Karn算法

- 报文段每重传一次,就把RTO增大一些:RTO= γ × \gamma\times γ× RTO

- 系数 γ \gamma γ的典型值是2;

- 当不再发生报文段的重传时,才根据报文段的往返时延更新平均往返时延RTT和超时重传时间RTO的数值;

5.4 Reliable Data Transfor(可靠数据传输)***

-

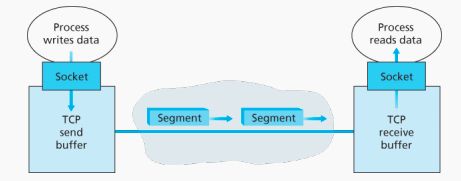

TCP可靠通信的实现

- TCP连接的每一端都必须设有两个窗口——一个发送窗口和一个接收窗口;

- TCP的可靠传输机制用字节的序号进行控制:TCP所有的确认都是基于序号而不是基于报文段;

- TCP两端的四个窗口经常处于动态变化之中;

- TCP连接的往返时间RTT也不是固定不变的,需要使用特定的算法估算较为合理的重传时间;

-

发送缓存与接收缓存

-

发送缓存与接收缓存的作用

- 发送缓存用来暂时存放:

- 发送应用程序传送给发送方TCP准备发送的数据;

- TCP已发送出但尚未收到确认的数据;

- 接收缓存用来暂时存放:

- 按序到达的、但尚未被接收应用程序读取的数据;

- 不按序到达的数据;

- 发送缓存用来暂时存放:

-

强调:

- 发送窗口并不总是和B的接收窗口一样大(因为有一定的时间滞后);

- TCP要求接收方必须有累积确认的功能,这样可以减小传输开销;

- TCP标准没有规定对不按序到达的数据应如何处理?通常是先临时存放在接收窗口中,等到字节流中所缺少的字节收到后,再按序交付上层的应用进程。

-

5.5 Flow Control

-

TCP provides a flow-control service to its applications to eliminate the possibility of the se-nder overflowing the receiver’s buffer.

-

Flow control is a speed-matching service—matching the rate at which the sender is sending against the rate at which the receiving application is reading.

-

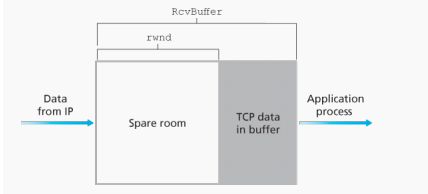

TCP provides flow control by having the sender maintain a variable called the receive window(接收窗口).

- Informally, the receive window is used to give the sender an idea of how much free buffer space is available at the receiver.

- The sender at each side of the connection maintains a distinct receive window(full-duplex)

-

Suppose that Host A is sending a large file to Host B over a TCP connection:

-

Host B allocates a receive buffer to this connection, denote its size by RcvBuffer;

-

The application process in Host B reads from the buffer, define the following variables;

- LastByteRead : the number of the last byte in the data stream read from the buffer by the application process in B;

- LastByteRcvd : the number of the last byte in the data stream that has arrived from the network and has been placed in the receive buffer at B;

-

Because TCP is not permitted to overflow the allocated buffer, we must have:

- LastByteRcvd-LastByteRead ≤ \leq ≤RcvBuffer;

-

The receive window, denoted rwnd is set to the amount of spare room in the buffer:

- rwnd=RcvBuffer-[LastByteRcvd-LastByteRead];

-

-

How does the connection use the variable rwnd to provide the flow-control service?

- Host B tells Host A how much spare room it has in the connection buffer by placing its current value of rwnd in the receive window field of every segment it sends to A.

- Initially, Host B sets rwnd = RcvBuffer

- Host A in turn keeps track of two variables, LastByteSent and LastByteAcked , which have obvious meanings. Note that the difference between these two variables, LastByteSent –LastByteAcked , is the amount of unacknowledged data that A has sent into the connection. By keeping the amount of unacknowledged data less than the value of rwnd , Host A is assured that it is not overflowing the receive buffer at Host B. (LastByteSent−LastByteAcked≤rwnd)

- Host B tells Host A how much spare room it has in the connection buffer by placing its current value of rwnd in the receive window field of every segment it sends to A.

-

持续计时器(Persistence timer)

- TCP为每一个连接设有一个持续计时器;

- 只要TCP连接的一方收到对方的零窗口通知时,就启动持续计时器;

- 若持续计时器的时间到期,就发送一个零窗口探测报文段(仅携带1字节的数据),而对方就在确认这个探测报文段时给出了现在的窗口值;

- 若窗口仍然是零,则收到这个报文段的一方就重新设置持续计时器;

- 若窗口不是零,则死锁的僵局打破;

- 利用滑动窗口实现流量控制;

必须考虑传输效率——TCP报文段的发送实际控制机制:

- 第一种机制是TCP维持一个变量,它等于最大报文段长度MSS,只要缓存中存放的数据达到MSS字节时,就组装成一个TCP报文段发送出去;

- 第二种机制是由发送方的应用进程指明要求发送报文段,即TCP支持的推送(push)操作;

- 第三种机制是发送方的一个计时器期限到了,这时就把当前已有的缓存数据装入报文段(但长度不能超过MSS)发送出去;

5.5 TCP Connection Management(TCP连接管理):三次握手与四次挥手******

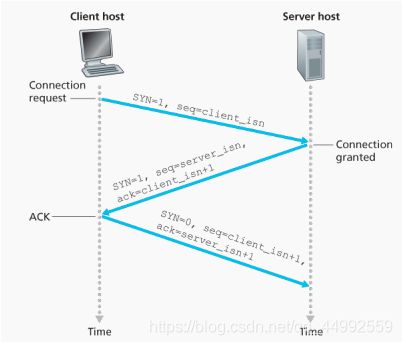

Connection: 三次握手***

-

Suppose a process running in one host (client) wants to initiate a connection with another process in another host (server).

-

The client application process first informs the client TCP that it wants to establish a con-nection to a process in the server.

-

The TCP in the client then proceeds to establish a TCP connection with the TCP in the server in the following manner (Three-way handshake,三次握手):

-

Step 1:

- The client-side TCP first sends a special TCP segment to the server-side TCP.

- This special segment contains no application-layer data. But one of the flag bits in the segment’s header, the SYN bit, is set to 1(For this reason, this special segment is referred to as a SYN segment).

- In addition, the client randomly chooses an initial sequence number (client_isn) and puts this number in the sequence number field of the initial TCP SYN segment (This segment is encapsulated within an IP datagram and sent to the server).

- There has been considerable interest in properly randomizing the choice of the client_isn in order to avoid certain security attacks.

-

Step 2:

Once the IP datagram containing the TCP SYN segment arrives at the server host, the server extracts the TCP SYN segment from the datagram, allocates the TCP buffers and variables to the connection, and sends a connection-granted segment to the client TCP. This connection-granted segment also contains no application-layer data (The connection-granted segment is referred to as a SYNACK segment).

However, it does contain three important pieces of information in the segment header:

-

First: the SYN bit is set to 1;

-

Second: the acknowledgment field of the TCP segment header is set to client_isn+1;

-

Finally: the server chooses its own initial sequence number (server_isn) and

puts this value in the sequence number field of the TCP segment header;

-

-

Step 3:

Upon receiving the SYNACK segment, the client also allocates buffers and variables to the connection. The client host then sends the server yet another segment; this last segment acknowledges the server’s connection-granted segment (the client does so by putting the value server_isn+1 in the acknowledgment field of the TCP segment header). The SYN bit is set to zero, since the connection is established. This third stage of the three-way handshake may carry client-to-server data in the segment payload(报文段负载).

-

-

Once these three steps have been completed, the client and server hosts can send segments containing data to each other (In each of these future segments, the SYN bit will be set to zero).

-

为啥要三次握手:

- 要使每一方能够确知对方的存且双方确认自己与对方的发送与接收是正常的;

- 双方协商一些参数(如最大报文段长度,最大窗口大小,服务质量等);

- 能够对运输实体资源(如缓存大小,连接表中的项目等)进行分配;

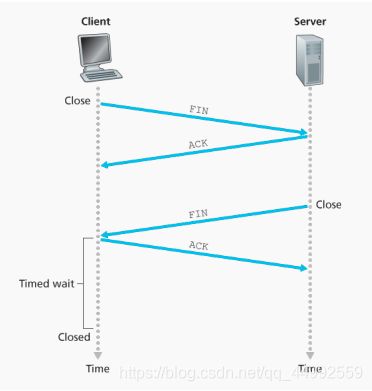

Deconnection: 四次挥手

-

When a connection ends, the “resources” (that is, the buffers and variables) in the hosts are deallocated.

-

Suppose the client decides to close the connection:

- The client application process issues a close command.

- This causes the client TCP to send a special TCP segment to the server process.

- This special segment has a flag bit in the segment’s header, the FIN bit, set to 1

- When the server receives this segment, it sends the client an acknowledgment segment in return. (Client receives the segment: 半关闭状态)

- The server then sends its own shutdown segment, which has the FIN bit set to 1.

- Finally, the client acknowledges the server’s shutdown segment.

- At this point, all the resources in the two hosts are now deallocated.

Client 必须等待2MSL(最长报文段寿命)的时间:

- 第一,为了保证其发送的最后一个 ACK 报文段能够到达 server;

- 第二,防止“已失效的连接请求报文段”出现在本连接中:A在发送完最后一个ACK报文段后,再经过时间2MSL,就可以使本连接持续的时间内所产生的所有报文段,都从网络中消失,这样就可以使下一个新的连接中不会出现这种旧的连接请求报文段;

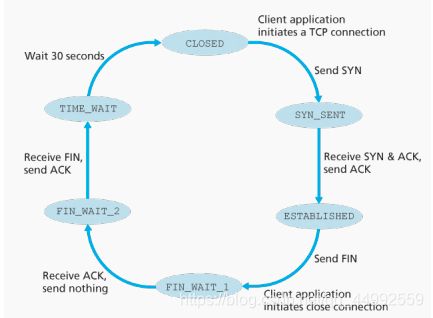

During the life of a TCP connection:

-

The TCP protocol running in each host makes transitions through various TCP states.

-

A typical swquence of TCP states visited by a client TCP:

-

A typical sequence of TCP states visited by a server-side TCP:

6. Principles of Congestion Control(拥塞控制原理)

6.1 介绍

Congestion:

- Informally: “too many sources sending too much data too fast for network to handle”;

- Different from flow control!

- Manifestations:

- lost packets (buffer overflow at routers);

- long delays (queueing in router buffers);

- A top-10 problem in networking;

拥塞控制的一般原理:

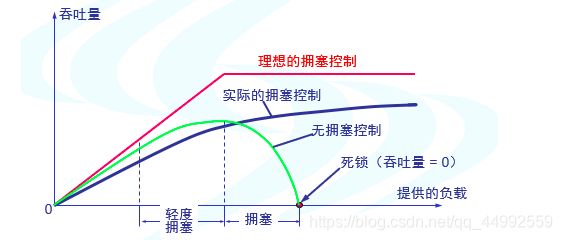

- 在某段时间,若对网络中某资源的需求超过了该资源所能提供的可用部分,网络的性能就要变坏——产生拥塞(congestion);

- 出现资源拥塞的条件:对资源需求的总和 > 可用资源;

- 若网络中有许多资源同时产生拥塞,网络的性能就要明显变坏,整个网络的吞吐量将随输入负荷的增大而下降;

- 拥塞控制是很难设计的,因为它是一个动态的(而不是静态的)问题;

- 当前网络正朝着高速化的方向发展,这很容易出现缓存不够大而造成分组的丢失,但分组的丢失是网络发生拥塞的征兆而不是原因;

- 在许多情况下,甚至正是拥塞控制本身成为引起网络性能恶化甚至发生死锁的原因,这点应特别引起重视;

6.2 The Causes and the Costs of Congestion(拥塞原因与代价)

略

- Scenario 1: Two Senders, a Router with Infinite Buffers

- Scenario 2: Two Senders and a Router with Finite Buffers

- Scenario 3: Four Senders, Routers with Finite Buffers, and Multihop Paths

6.3 Approaches to Congestion Control(拥塞控制方法)

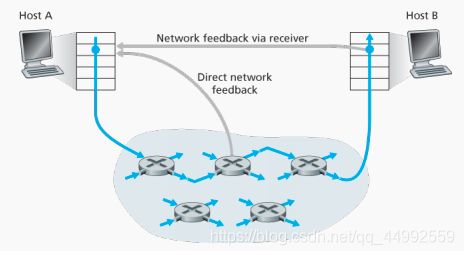

At the highest level, we can distinguish among congestion-control approaches by whether the network layer provides explicit assistance to the transport layer for congestion-control purposes:

-

End-to-end congestion control(端到端拥塞控制)

- In an end-to-end approach to congestion control, the network layer provides no explicit support to the transport layer for congestion-control purposes.

- The presence of network congestion must be inferred by the end systems based only on observed network behavior.

- TCP takes end-to-end approach toward congestion control, since the IP layer is not required to provide feedback to hosts regarding (关于) network congestion.

- Indications of network congestion:

- TCP segment loss;

- TCP decreases its window size accordingly;

-

Network-assisted congestion control

6.4 其它

-

拥塞控制与流量控制的关系

- 拥塞控制所要做的都有一个前提,就是网络能够承受现有的网络负荷;

- 拥塞控制是一个全局性的过程,涉及到所有的主机、所有的路由器,以及与降低网络传输性能有关的所有因素;

- 流量控制往往指在给定的发送端和接收端之间的点对点通信量的控制;

- 流量控制所要做的就是抑制发送端发送数据的速率,以便使接收端来得及接收;

-

开环控制和闭环控制

- 开环控制方法就是在设计网络时事先将有关发生拥塞的因素考虑周到,力求网络在工作时不产生拥塞;

- 闭环控制是基于反馈环路的概念,属于闭环控制的有以下几种措施:

- 监测网络系统以便检测到拥塞在何时、何处发生;

- 将拥塞发生的信息传送到可采取行动的地方;

- 调整网络系统的运行以解决出现的问题;

7. TCP Congestion Control(TCP拥塞控制)***

TCP Congestion Control

- 发送方维持一个叫做拥塞窗口 cwnd(congestion window) 的状态变量;拥塞窗口的大小取决于网络的拥塞程度,并且动态地在变化。

- 发送方让自己的发送窗口等于拥塞窗口;如再考虑到接收方的接收能力,则发送窗口还可能小于拥塞窗口。

- 发送方控制拥塞窗口的原则是:只要网络没有出现拥塞,拥塞窗口就再增大一些,以便把更多的分组发送出去;但只要网络出现拥塞,拥塞窗口就减小一些,以减少注入到网络中的分组数。

几种拥塞控制方法:重要

-

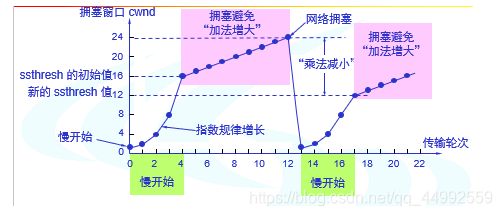

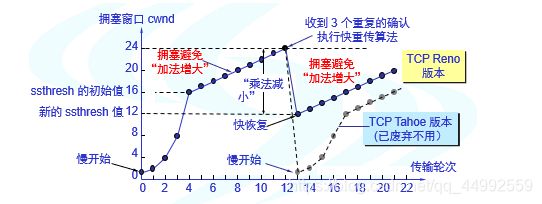

Slow Start (慢开始) and Congestion Avoidance (拥塞避免):

-

慢开始算法的原理:

-

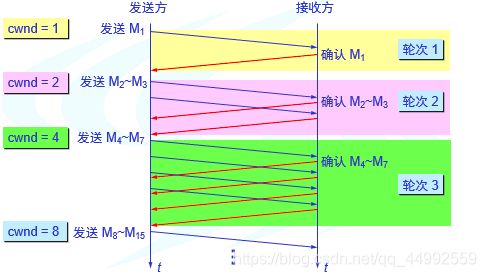

在主机刚刚开始发送报文段时可先设置拥塞窗口 cwnd = 1,即设置为一个最大报文段 MSS 的数值;

-

在每收到一个对新的报文段的确认后,将拥塞窗口加1,即增加一个MSS的数值;

- 1

- 1+1

- (1+1)+(1+1)

- ((1+1)+(1+1))+((1+1)+(1+1))

- …

-

用这样的方法逐步增大发送端的拥塞窗口 cwnd,可以使分组注入到网络的速率更加合理;

传输轮次(transmission round):

- 使用慢开始算法后,每经过一个传输轮次,拥塞窗口 cwnd 就加倍;

- 一个传输轮次所经历的时间其实就是往返时间 RTT;

- “传输轮次”更加强调:把拥塞窗口 cwnd 所允许发送的报文段都连续发送出去,并收到了对已发送的最后一个字节的确认;

- 例如,拥塞窗口 cwnd = 4,这时的往返时间 RTT 就是发送方连续发送 4 个报文段,并收到这 4 个报文段的确认,总共经历的时间;

-

-

拥塞避免算法的思路:

- 让拥塞窗口 cwnd 缓慢地增大,即每经过一个往返时间RTT就把发送方的拥塞窗口 cwnd 加 1,而不是加倍,使拥塞窗口 cwnd 按线性规律缓慢增长;

-

设置慢开始门限状态变量

- 慢开始门限 ssthresh 的用法如下:

- 当 cwnd < ssthresh 时,使用慢开始算法;

- 当 cwnd > ssthresh 时,停止使用慢开始算法而改用拥塞避免算法;

- 当 cwnd = ssthresh 时,既可使用慢开始算法,也可使用拥塞避免算法;

- 慢开始门限 ssthresh 的用法如下:

-

当网络出现拥塞时:

-

无论在慢开始阶段还是在拥塞避免阶段,只要发送方判断网络出现拥塞(其根据就是没有按时收到确认),就要把慢开始门限 ssthresh 设置为出现拥塞时的发送方窗口值的一半(但不能小于2);

-

然后把拥塞窗口 cwnd 重新设置为 1 ,执行慢开始算法;

-

这样做的目的就是要迅速减少主机发送到网络中的分组数,使得发生拥塞的路由器有足够时间把队列中积压的分组处理完毕;

假接收窗口足够大;

-

乘法减小(multiplicative decrease)

“乘法减小“是指不论在慢开始阶段还是拥塞避免阶段,只要出现一次超时(即出现一次网络拥塞),就把慢开始门限值 ssthresh 设置为当前的拥塞窗口值乘以0.5;

当网络频繁出现拥塞时,ssthresh 值就下降得很快,以大大减少注入到网络中的分组数;

-

加法增大(additive increase)

“加法增大”是指执行拥塞避免算法后,在收到对所有报文段的确认后(即经过一个往返时间),就把拥塞窗口 cwnd 增加一个 MSS 大小,使拥塞窗口缓慢增大,以防止网络过早出现拥塞;

-

-

强调:

- “拥塞避免”并非指完全能够避免了拥塞,利用以上的措施要完全避免网络拥塞还是不可能的;

- “拥塞避免”是说在拥塞避免阶段把拥塞窗口控制为按线性规律增长,使网络比较不容易出现拥塞;

-

-

-

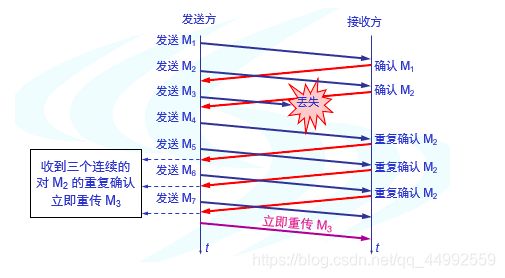

Fast Retransmit and Fast Recovery:

-

快重传:

-

快恢复:

发送窗口的上限值:

- 发送方的发送窗口的上限值应当取为接收方窗口 rwnd 和拥塞窗口 cwnd 这两个变量中较小的一个:

- 当 rwnd < cwnd 时,则是接收方的接收能力限制发送窗口的最大值;

- 当 cwnd < rwnd 时,则是网络的拥塞限制发送窗口的最大值;

-