gici-open学习日记(5):runMeasurementAddin函数引申——初始化

gici-open学习日记——初始化

- 回顾

- GNSS/INS初始化

-

- 初始化器的观测量添加

-

- `addGnssSolutionMeasurement`

- `optimize()`优化

- GNSS/INS初始化补充1:`RtkEstimator`流程

-

- `SppEstimator::addGnssMeasurementAndState`

- 视觉初始化

-

- `FeatureHandler::initializeLandmarks`

- `addImageMeasurementAndState`

- 总结

回顾

能进行到具体状态估计初始化的这步,是在具体的addMeasurement这个函数中,关于具体怎么调用到这个函数以及这个函数的其他作用,可以看我的上一篇博客runMeasurementAddin线程 ,这里看上去是分开进行了GNSS/IMU的初始化和视觉的初始化

这篇文章有大量的内容涉及到GNSS、IMU的具体解算和知识,如SPP、RTK以及预积分等,这些内容的具体原理会放在之后的博客,这里只是梳理一下流程

GNSS/INS初始化

首先关注一个变量,gnss_imu_initializer_,其涉及到了GINS初始化的具体操作和相关设置,它的定义是在RtkImuCameraRrrEstimator类的构造函数中(这里以RTK/IMU/视觉的紧组合为学习对象,所以很多变量的基础都在RtkImuCameraRrrEstimator里,同理如果选择其他的解算组合类型,对应的变量也就在对应的XXXEstimator中)

gnss_imu_initializer_.reset(new GnssImuInitializer(

init_options, gnss_loose_base_options, imu_base_options, // 这里都会用到GNSS松组合的设置

base_options, graph_, initializer_sub_estimator_));

manual手册里关于GNSS/INS的初始化有一段话的解释

The GNSS/INS initializer estimates initial poses, velocities, and biases for LC or TC estimators. Regardless of whether we use LC or TC integrations, we use the LC formulations, i.e. position and velocity, to form the initialization optimization graph for efficiency.

The pitch and roll angle is first computed by the acceleration measure. Then a batch optimization is conducted when a sufficient acceleration is detected. The optimization structure is mostly the same as GINS LC optimization. The difference is that we do not trust the position measurements during initialization because they exhibit large noise if the integer values of GNSS ambiguities are unsolved, which often leads to divergence. Instead, we use the velocity measurement to compute the increment of initial position parameters because we believe the GNSS doppler measurement (which mainly contributes to the velocity estimation) is precise and less affected by errors.

所以无论是松组合(LC)还是紧组合(TC),在初始化的时候都需要松组合的形式:即用位置、速度等形成优化框架,这也就解释了代码里为什么在构造的时候会包括gnss_loose_base_options这个变量

具体GNSS/INS初始化的调用,就直接调用了estimate函数

gnss_imu_initializer_->estimate(); // TODO GNSS/IMU初始化

但这个函数依旧只是一个接口,具体的初始化操作是通过调用optimize()实现的,剩下的都是一些日志信息

bool GnssImuInitializer::estimate()

{

optimize();

// Log information

if (base_options_.verbose_output) {

LOG(INFO) << "GNSS/IMU initialization: "

<< "Iterations: " << graph_->summary.iterations.size() << ", "

<< std::scientific << std::setprecision(3)

<< "Initial cost: " << graph_->summary.initial_cost << ", "

<< "Final cost: " << graph_->summary.final_cost;

}

finished_ = true;

return true;

}

初始化器的观测量添加

在执行estimate()之前,还有一个重要的操作,也就是向初始化器中添加观测量,可以理解为问题的构建过程

if (gnss_imu_initializer_->addMeasurement(measurement))

在addMeasurement里,先添加IMU

if (measurement.imu && measurement.imu_role == ImuRole::Major) {

addImuMeasurement(*measurement.imu);

}

如果此时有解,且解为速度或位置或速度位置的情况下,就把解算结果转成GNSS解的类型,然后把GNSS解加进去

if (measurement.solution &&

(measurement.solution_role == SolutionRole::Position ||

measurement.solution_role == SolutionRole::Velocity ||

measurement.solution_role == SolutionRole::PositionAndVelocity)) {

GnssSolution gnss_solution = convertSolutionToGnssSolution(

*measurement.solution, measurement.solution_role);

return addGnssSolutionMeasurement(gnss_solution);

}

这里说的加进去,都是加到对应的成员变量里去,IMU是加到了ImuEstimatorBase类的成员变量里面,GNSS解是加到了GnssImuInitializer类的成员变量里,后者是前者的继承类,不过前者只是观测量的添加,后者还涉及到解的添加,也加到图优化结构里,要比前者麻烦很多

如果是GNSS观测量的话,就要用到sub_gnss_estimator_变量了,这个变量就是初始化器构造时的最后一个参数,我觉得可以理解为对应于GNSS的初始解算,并且要把GNSS的观测量加到这里面去

sub_gnss_estimator_->addMeasurement(*measurement.gnss)

这里的addMeasurement,与gnss_imu_initializer_构造时最后一个参数是对应的,所以如果是RTK/IMU/视觉的解算策略,这里对应的就是

bool RtkEstimator::addMeasurement(const EstimatorDataCluster& measurement)

这个函数里面就涉及到GNSS的解算,同理,接下来调用的estimate也是RtkEstimator对应的优化函数

sub_gnss_estimator_->estimate()

把解算得到的值都赋给solution,最后同样是把GNSS解变量加进去

if (sub_gnss_estimator_ && measurement.gnss) {

if (sub_gnss_estimator_->addMeasurement(*measurement.gnss)) {

if (sub_gnss_estimator_->estimate()) {

Solution solution;

solution.coordinate = coordinate_;

solution.timestamp = sub_gnss_estimator_->getTimestamp();

solution.pose = sub_gnss_estimator_->getPoseEstimate();

solution.speed_and_bias = sub_gnss_estimator_->getSpeedAndBiasEstimate();

solution.covariance = sub_gnss_estimator_->getCovariance();

std::shared_ptr<GnssEstimatorBase> gnss_estimator =

std::dynamic_pointer_cast<GnssEstimatorBase>(sub_gnss_estimator_);

solution.status = gnss_estimator->getSolutionStatus();

solution.num_satellites = gnss_estimator->getNumberSatellite();

solution.differential_age = gnss_estimator->getDifferentialAge();

SolutionRole role;

if (gnss_estimator->hasVelocityEstimate()) {

role = SolutionRole::PositionAndVelocity;

}

else {

role = SolutionRole::Position;

}

GnssSolution gnss_solution = convertSolutionToGnssSolution(solution, role);

return addGnssSolutionMeasurement(gnss_solution);

}

}

}

addGnssSolutionMeasurement

首先调用的是slowMotionInitialization()函数,对应的是manual里的The pitch and roll angle is first computed by the acceleration measure,函数里的计算部分

if (initPoseAndBiases(imu_measurements_,

imu_base_options_.imu_parameters.g, T_WS_0_, speed_and_bias_0_)) {

zero_motion_finished_ = true;

}

initPoseAndBiases函数最核心的部分主要是最后,把T_WS进行了位姿变换

bool initPoseAndBiases(const ImuMeasurements& imu_measurements,

const double gravity,

Transformation& T_WS,

SpeedAndBias& speed_and_bias,

const double timestamp_start,

const double timestamp_end)

{

// set to zero

T_WS.setIdentity();

speed_and_bias.setZero();

if (imu_measurements.size() == 0) return false;

// Check zero motion

#if 1

int n_measurements = 0;

int n_valid_measurements = 0;

Eigen::Vector3d acc_B = Eigen::Vector3d::Zero();

Eigen::Vector3d gyro_B = Eigen::Vector3d::Zero();

for (size_t i = 0; i < imu_measurements.size(); ++i)

{

if (imu_measurements[i].timestamp < timestamp_start ||

imu_measurements[i].timestamp > timestamp_end) continue;

n_measurements++;

// zero motion check

// if (fabs(imu_measurements[i].linear_acceleration.norm() - gravity) > 0.5 ||

// fabs(imu_measurements[i].angular_velocity.norm()) > 0.05) continue;

double acc_norm = fabs(imu_measurements[i].linear_acceleration.norm() - gravity);

if (acc_norm > 0.5) continue; // 判断是不是低速运动状态

acc_B += imu_measurements[i].linear_acceleration;

gyro_B += imu_measurements[i].angular_velocity;

n_valid_measurements++;

}

if (n_measurements == 0) return false;

else if (n_valid_measurements != n_measurements) return false; // 确保用来初始化的IMU观测量都是低速运动状态

acc_B /= static_cast<double>(n_measurements); // 加速度和角速度取平均

gyro_B /= static_cast<double>(n_measurements);

/* 省略部分代码 */

Eigen::Vector3d e_acc = acc_B.normalized(); // 相当于平均加速度的单位向量

// align with ez_W:

Eigen::Vector3d ez_W(0.0, 0.0, 1.0); // world系的单位向量

Eigen::Matrix<double, 6, 1> pose_increment;

pose_increment.head<3>() = Eigen::Vector3d::Zero();

//! @todo this gives a bad result if ez_W.cross(e_acc) norm is

//! close to zero, deal with it!

pose_increment.tail<3>() = ez_W.cross(e_acc).normalized(); // cross得到的是旋转轴

double angle = std::acos(ez_W.transpose() * e_acc); // 两个向量之间的夹角

pose_increment.tail<3>() *= angle; // 相当于得到了绕着旋转轴旋转一定角度的旋转向量

T_WS = Transformation::exp(-pose_increment) * T_WS; // 位姿变换

T_WS.getRotation().normalize(); // 更新后的位姿归一化

// biases

// Eigen::Vector3d g(0.0, 0.0, gravity);

// speed_and_bias.segment<3>(3) = gyro_B;

// speed_and_bias.segment<3>(6) =

// acc_B - T_WS.getRotationMatrix().transpose() * g;

return true;

}

这里我目前理解的是把T_WS转换到e_acc,也就是当前根据IMU加速度得到的坐标系下

在初始姿态得到后,先把measurement加入到gnss_solution_measurements_之中

// Store measurements

gnss_solution_measurements_.push_back(measurement);

// Ensure that no duration overlaped with slow motion initialization

while (gnss_solution_measurements_.size() > 0 &&

gnss_solution_measurements_.front().timestamp < imu_measurements_.front().timestamp) {

gnss_solution_measurements_.pop_front();

}

加下来比较重要的一个地方是,在有速度的情况下,根据设置的car_motion对初始化进行了一个约束,所以car_motion这个设置可以理解成为针对初始化时载体运动的限制约束

- 这里后来又看了下manual,这里对应的应该是NHC

if (imu_base_options_.car_motion) { // 这里利用car_motion进行约束

const double angular_velocity_norm = getImuMeasurementNear(

gnss_solution_measurements_[i - 1].timestamp).angular_velocity.norm();

if (last_velocity.norm() <

imu_base_options_.car_motion_min_velocity ||

angular_velocity_norm >

imu_base_options_.car_motion_max_anguler_velocity) {

gnss_solution_measurements_.clear();

dynamic_window_full_ = false; break;

}

}

然后就是找到认为运动激励已经够了的加速度

initial_velocity = last_velocity;

double dt = gnss_solution_measurements_[i].timestamp -

gnss_solution_measurements_[i - 1].timestamp;

double horizontal_acc = (velocity.head<2>().norm() -

last_velocity.head<2>().norm()) / dt;

if (horizontal_acc > max_acc) max_acc = horizontal_acc;

if (horizontal_acc > options_.min_acceleration) acc_ensured = true; // 认为有足够的运动激励

没有速度的情况下就根据位置计算速度

if (!computeCoarseVelocityFromPosition(last, cur, velocity) ||

!computeCoarseVelocityFromPosition(last_last, last, last_velocity)) {

continue;

}

然后和有速度的判断方法一样。

最后,把初始的状态加到了图结构之中

double initial_yaw = 0.0;

speed_and_bias_0_.head<3>() = initial_velocity;

if (imu_base_options_.car_motion) {

// compute initial yaw from GNSS velocity

initial_yaw = -atan2(initial_velocity(0), initial_velocity(1)) * R2D; // yaw角不可观,这里根据速度获得

}

// set initial yaw and put items to graph

Eigen::Vector3d rpy = quaternionToEulerAngle(T_WS_0_.getEigenQuaternion());

rpy.z() = initial_yaw * D2R; // 恢复过的yaw

Eigen::Quaterniond q_WS = eulerAngleToQuaternion(rpy);

putMeasurementAndStateToGraph(q_WS, speed_and_bias_0_); // TODO 加到图优化框架里

optimize()优化

这里初始化直接就是用了松组合优化的结构,不过manual里给的说明是初始化阶段中不会相信位置值,而是选择相信多普勒观测量来通过速度计算位置增量,这也和之前addMeasurement是可以对应上的,这个函数里面没什么关键的内容,主要就是设置了优化求解的一些参数,然后求解完成后又更新了一下协方差

void EstimatorBase::optimize()

{

/* 不重要的代码,主要都是求解器的设置 */

// call solver

graph_->solve();

// update covariance

if (base_options_.compute_covariance && can_compute_covariance_) {

updateCovariance(latestState());

}

}

GNSS/INS初始化补充1:RtkEstimator流程

这里对应着上一节中的GNSS观测量添加,这个类在构造函数中会分别初始化一个SPP估计器和一个模糊度解算的估计器,在具体的addMeasurement函数中,先把流动站和基准站的观测都加了进去,之后又进行时间差(根据流动站找时间差得最少的基站)

meausrement_align_.add(measurement); // 这里会根据是rover还是base放到不同的成员变量里

if (meausrement_align_.get(rtk_options_.max_age, rov, ref)) { // get可以理解为把rov和ref对应起来了

return addGnssMeasurementAndState(rov, ref);

}

然后调用主要的函数addGnssMeasurementAndState,进行观测量的添加,这个是主要的函数

- 先调用

SppEstimator::addGnssMeasurementAndState函数

if (!spp_estimator_->addGnssMeasurementAndState(measurement_rov)) {

return false;

}

- 调用

estimate,进行优化

if (!spp_estimator_->estimate()) {

return false;

}

- TODO:这里完成了SPP的具体的迭代计算,不过目前还没有看具体的图优化结构,所以之后再看

- 设置相关的变量

Eigen::Vector3d position_prior = spp_estimator_->getPositionEstimate();

Eigen::Vector3d velocity_prior = spp_estimator_->getVelocityEstimate();

curState().status = GnssSolutionStatus::Single;

// Set to local measurement handle

curGnssRov() = measurement_rov;

curGnssRov().position = position_prior;

curGnssRef() = measurement_ref;

- 观测值重新排序:这里我感觉最终的目的就是每一个相位只留一个观测值

gnss_common::rearrangePhasesAndCodes(curGnssRov());

gnss_common::rearrangePhasesAndCodes(curGnssRef());

- 然后是双差类型的构建

// Form double difference pair

GnssMeasurementDDIndexPairs index_pairs = gnss_common::formPhaserangeDDPair(

curGnssRov(), curGnssRef(), gnss_base_options_.common); // 双差

- 周跳探测

// Cycle-slip detection

if (!isFirstEpoch()) {

cycleSlipDetectionSD(lastGnssRov(), lastGnssRef(),

curGnssRov(), curGnssRef(), gnss_base_options_.common);

}

- 加到图优化结构

// Add parameter blocks

double timestamp = curGnssRov().timestamp;

curState().timestamp = timestamp;

// position block

BackendId position_id = addGnssPositionParameterBlock(curGnssRov().id, position_prior);

curState().id = position_id;

curState().id_in_graph = position_id;

// ambiguity blocks

addSdAmbiguityParameterBlocks(curGnssRov(),

curGnssRef(), index_pairs, curGnssRov().id, curAmbiguityState());

if (rtk_options_.estimate_velocity) {

// velocity block

addGnssVelocityParameterBlock(curGnssRov().id, velocity_prior);

// frequency block

int num_valid_doppler_system = 0;

addFrequencyParameterBlocks(curGnssRov(), curGnssRov().id, num_valid_doppler_system);

}

// Add pseudorange residual blocks

int num_valid_satellite = 0;

addDdPseudorangeResidualBlocks(curGnssRov(),

curGnssRef(), index_pairs, curState(), num_valid_satellite);

- 查看有效卫星数够不够,不够的话就把加进去的再删了

if (!checkSufficientSatellite(num_valid_satellite, 0)) {

// erase parameters in current state

eraseGnssPositionParameterBlock(curState());

eraseAmbiguityParameterBlocks(curAmbiguityState());

if (rtk_options_.estimate_velocity) {

eraseGnssVelocityParameterBlock(curState());

eraseFrequencyParameterBlocks(curState());

}

return false;

}

- 满足的话继续加残差块

// Add phaserange residual blocks

addDdPhaserangeResidualBlocks(curGnssRov(), curGnssRef(), index_pairs, curState());

// Add doppler residual blocks

if (rtk_options_.estimate_velocity) {

addDopplerResidualBlocks(curGnssRov(), curState(), num_valid_satellite);

}

// Add relative errors

if (!isFirstEpoch()) {

if (!rtk_options_.estimate_velocity) {

// position

addRelativePositionResidualBlock(lastState(), curState());

}

else {

// position and velocity

addRelativePositionAndVelocityBlock(lastState(), curState());

// frequency

addRelativeFrequencyBlock(lastState(), curState());

}

// ambiguity

addRelativeAmbiguityResidualBlock(

lastGnssRov(), curGnssRov(), lastAmbiguityState(), curAmbiguityState());

}

- 最后更新DOP值

// Compute DOP

updateGdop(curGnssRov(), index_pairs);

SppEstimator::addGnssMeasurementAndState

先获得先验位置,如果不是第一个历元的话就用``getPositionEstimate函数去获得,是第一个历元的话就把当前的坐标转到ECEF系下作为先验坐标

Eigen::Vector3d position_prior = Eigen::Vector3d::Zero();

if (!isFirstEpoch()) {

position_prior = getPositionEstimate();

}

else if (coordinate_) {

position_prior = coordinate_->getZero(GeoType::ECEF);

}

都设置得差不多了之后就进行各种改正

- 首先是DCB改正:这里注释做了说明,说得很详细了,具体的函数在

CodeBias::getCodeBias这里

// Get code bias correction in relative with base frequency

// Apply the code bias by: P_corrected = P_raw + bias

// For the broadcast ephemeris, the base frequencies for each system are:

// GPS: L1P/L2P IF combination (L1W/L2W)

// GLONASS: L1P/L2P IF combination

// Galileo: E1/E5a IF combination (INAV)

// BDS: B3I

// For the precise ephemeris, user should specify the base frequencies, in

// defualt, we use the following configures:

// GPS: L1P/L2P IF combination (L1W/L2W)

// GLONASS: L1P/L2P IF combination

// Galileo: E1/E5a IF combination

// BDS: B1I/B3I IF combination

// Correct code bias

correctCodeBias(curGnss());

- 然后是电离层改正:看了下

IonoType,分为不改正(None)、广播星历改正(Broadcast)、双频改正(DualFrequency)或者增强(应该是指SBAS)(Augmentation)

// Compute ionosphere delays

computeIonosphereDelay(curGnss(), true);

- 把信息都加到图优化结构中:关于GNSS的图优化结构还没具体看

// position block

// TODO GNSS的图优化结构

BackendId position_id = addGnssPositionParameterBlock(curGnss().id, position_prior); // 位置先验

curState().id = position_id;

curState().id_in_graph = position_id;

// clock block

int num_valid_system = 0;

addClockParameterBlocks(curGnss(), curGnss().id, num_valid_system); // 钟差

// Add pseudorange residual blocks

int num_valid_satellite = 0;

addPseudorangeResidualBlocks(curGnss(), curState(), num_valid_satellite, true); // 伪距残差

- 判断卫星数量够不够:分为两部分,第一部分是基础的4(用载波相位)或者3(不用载波相位),第二部分是有效的系统数,两个加在一起是这里的判断阈值

if (!checkSufficientSatellite(num_valid_satellite, num_valid_system)) {

return false;

}

- 把当前历元外的信息从图优化结构中都移除

Graph::ParameterBlockCollection parameters = graph_->parameters();

for (auto parameter : parameters) {

if (BackendId(parameter.first).bundleId() == curState().id.bundleId()) continue;

graph_->removeParameterBlock(parameter.first);

}

视觉初始化

视觉初始化的调用也是在tkImuCameraRrrEstimator::addMeasurement中,涉及到的具体函数是visualInitialization,当视觉初始化的标志仍为false时,就会进入到这里执行视觉初始化的步骤。同时结合manual中的描述来看,视觉初始化这里是用到了GINS初始化的结果,根据位姿来进行三角化

The GNSS/INS/Camera initializer estimates initial poses, velocities, biases, and landmark positions for SRR or RRR estimators. The initialization is done in two steps: GNSS/INS initialization step and visual initialization step. The GNSS/INS initialization step has been introduced in subsection 3.9.3. After the GNSS/INS initialization, we triangulate the tracked features using the estimated poses.

具体的代码流程如下:

- 先把GINS对应的初始化结果储存起来,

init_solution_store_会存入不止一个结果

Solution solution; // 记录下目前最新状态的时间、位姿、速度等等

solution.timestamp = getTimestamp();

solution.pose = getPoseEstimate();

solution.speed_and_bias = getSpeedAndBiasEstimate();

init_solution_store_.push_back(solution);

- 把关键帧也储存起来,然后这里具体的设置是初始化只用两个关键帧

if (frame_bundle->isKeyframe()) {

init_keyframes_.push_back(frame_bundle); // 初始化相当于只用2个关键帧

while (init_keyframes_.size() > 2) init_keyframes_.pop_front();

}

if (init_keyframes_.size() < 2) return false;

- 接下来对yaw角的协方差进行判断,因为之前的yaw角是恢复出来的,所以这里结合设置的一个阈值进判断,要确保GINS充分收敛

double std_yaw = sqrt(computeAndGetCovariance(lastState())(5, 5));

if (std_yaw > rrr_options_.min_yaw_std_init_visual * D2R) return false;

- 然后会设置视觉的位姿,这里也会具体到IMU的预积分过程

for (auto& frame_bundle : init_keyframes_) {

bool found = false;

double timestamp = frame_bundle->getMinTimestampSeconds();

for (size_t i = 1; i < init_solution_store_.size(); i++) {

double dt1 = init_solution_store_[i].timestamp - timestamp;

double dt2 = init_solution_store_[i - 1].timestamp - timestamp;

if ((dt1 >= 0 && dt2 <= 0) || // 找到对应的时间段

(i == init_solution_store_.size() - 1) && dt1 < 0 && fabs(dt1) < 2.0) {

size_t idx = (dt1 >= 0 && dt2 <= 0) ? (i - 1) : i;

Transformation T_WS = init_solution_store_[idx].pose;

SpeedAndBias speed_and_bias = init_solution_store_[idx].speed_and_bias;

imuIntegration(init_solution_store_[idx].timestamp, // TODO IMU预积分

timestamp, T_WS, speed_and_bias);

speed_and_biases.push_back(speed_and_bias); // 预积分会更新速度和零偏

frame_bundle->set_T_W_B(T_WS);

found = true;

break;

}

}

CHECK(found);

}

- 接下来就是具体的三角化了,这里也就把相机的尺度恢复出来了,调用了

feature_handler_这个变量

feature_handler_->initializeLandmarks(init_keyframes_.back()->at(0));

feature_handler_->setGlobalScaleInitialized();

- 然后把两个关键帧都添加进去

CHECK(addImageMeasurementAndState(init_keyframes_.front(), speed_and_biases.front()));

CHECK(addImageMeasurementAndState(init_keyframes_.back(), speed_and_biases.back()));

- 最后设置一些解算的标志

visual_initialized_ = true;

do_not_remove_imu_measurements_ = false;

can_compute_covariance_ = true;

FeatureHandler::initializeLandmarks

首先得确保当前帧是关键帧,因为初始化的时候要求必须是关键帧

const FramePtr& frame = keyframe;

if (!frame->isKeyframe()) return;

然后遍历每一个特征点,如果是初始化过了或者类型是外点的特征点就跳过

// already initialized

if (isSeed(frame->type_vec_[i])) continue; // 初始化过的就跳过

// rejected by bundle adjuster

if (frame->type_vec_[i] == FeatureType::kOutlier) continue;

接下来找到参考帧,这里用了rbegin,我觉得是因为obs_是按时间顺序添加的,所以这里找出和当前帧时间离得尽可能近的参考帧,结果更精确一些

for (auto obs = landmark->obs_.rbegin(); obs != landmark->obs_.rend(); obs++) {

if (FramePtr f = obs->frame.lock()) {

if (f->isKeyframe() && f->id() < frame->id() && f->has_transform_) { // 这里理论上已经初始化过位姿了

ref_frame = f;

index_ref = obs->keypoint_index_;

}

}

}

而参考帧和当前帧之间也要满足视差要求,这样才会继续进行后面的三角化的过程

double disparity = (ref_frame->px_vec_.col(index_ref) -

frame->px_vec_.col(i)).norm();

if (disparity < options_.min_disparity_init_landmark) continue;

之后就是三角化的步骤了,这里先得到两帧之间的变换关系,以及两帧的单位向量

Transformation T_cur_ref = frame->T_f_w_ * ref_frame->T_f_w_.inverse(); // 这里得到的是ref-cur吧

BearingVector f_ref = ref_frame->f_vec_.col(index_ref);

BearingVector f_cur = frame->f_vec_.col(i);

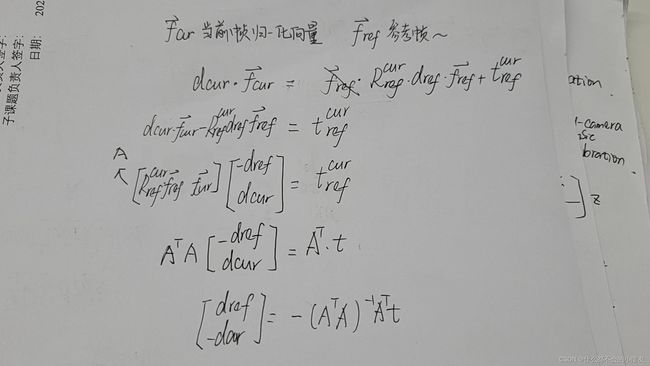

接下来调用depthFromTriangulation函数进行三角化,这里的三角化应该是和SVO中相同的办法,就是直接法,很好推,下面进行一个公式豪放地推

和代码也是可以直接对应上

FeatureMatcher::MatchResult depthFromTriangulation(

const Transformation& T_search_ref,

const Eigen::Vector3d& f_ref,

const Eigen::Vector3d& f_cur,

double* depth)

{

Eigen::Matrix<double,3,2> A; A << T_search_ref.getRotation().rotate(f_ref), f_cur;

const Eigen::Matrix2d AtA = A.transpose()*A;

if(AtA.determinant() < 0.000001)

return FeatureMatcher::MatchResult::kFailTriangulation;

const Eigen::Vector2d depth2 = - AtA.inverse()*A.transpose()*T_search_ref.getPosition();

(*depth) = std::fabs(depth2[0]);

return FeatureMatcher::MatchResult::kSuccess;

}

下面就是再把坐标恢复出来了,这里还贴心地给了注释,要注意转换时的乘法顺序

// Note that the follow equation should not be T * f_ref * depth.

// Because the operater "*" is applied in homogeneous coordinate

landmark->pos_ = ref_frame->T_world_cam() * (f_ref * depth);

接下来的这步是把特征点的FeatureType进行一下转换,也就是加上一个三角化过(或者说恢复过深度)的标志

for (auto obs : landmark->obs_) {

if (FramePtr f = obs.frame.lock()) {

changeFeatureTypeToSeed(f->type_vec_[obs.keypoint_index_]);

}

}

addImageMeasurementAndState

这个函数涉及到把当前帧观测加入的具体操作,首先根据当前帧和上一帧的时间差,对处理的频率进行判断(代码里设的是10Hz)

if (!frame_bundle->isKeyframe() && frame_bundles_.size() > 1) {

const double t_last = lastFrameBundle()->getMinTimestampSeconds();

const double t_cur = frame_bundle->getMinTimestampSeconds();

if (t_cur - t_last < 1.0 / visual_base_options_.max_frequency) return false;

}

接下来先加入IMU状态,这里其实没太看明白,函数声明的注释是

// Inserts a state inside or at ends of state window

// Retures the index of current added state in states_ buffer

// The pose block and speed and bias block will be added here, note that we do not extrinsics

// blocks here, it should be added by other sensor bases.

// If you want add a state at the front, you must specify the prior values.

if (speed_and_bias != SpeedAndBias::Zero()) {

index = insertImuState(timestamp, pose_id, // TODO 加入IMU状态

curFrame()->T_world_imu(), speed_and_bias, true);

}

else {

index = insertImuState(timestamp, pose_id);

}

接下来分别加入相机外参,进行三角化(视觉初始化阶段不会再三角化了)以及添加残差信息

// camera extrinsics

if (!camera_extrinsics_id_.valid()) {

camera_extrinsics_id_ =

addCameraExtrinsicsParameterBlock(bundle_id, curFrame()->T_imu_cam());

}

// Initialize landmarks

if (visual_initialized_ && curFrame()->isKeyframe()) { // 在最开始三角化的时候就不会进到这里

curFrame()->set_T_w_imu(getPoseEstimate(states_[index]));

feature_handler_->initializeLandmarks(curFrame()); // 三角化地图点

}

// Add Landmark parameters and minimal resiudals at keyframe

if (curFrame()->isKeyframe()) {

addLandmarkParameterBlocksWithResiduals(curFrame());

}

// Add landmark observations

addReprojectionErrorResidualBlocks(states_[index], curFrame()); // 重投影误差

总结

这里只是把GINS及视觉初始化的流程梳理了一遍,初始化这里还涉及GNSS的图优化结构、IMU预积分等等,还没有深入地去看。以及这里我感觉初始化完全就是用了松组合,先是GINS,然后再把GINS初始化的结果拿给视觉用。