Spark Fundamentals and Usage: Spark Streaming

Concepts and Principles

Basic Concepts

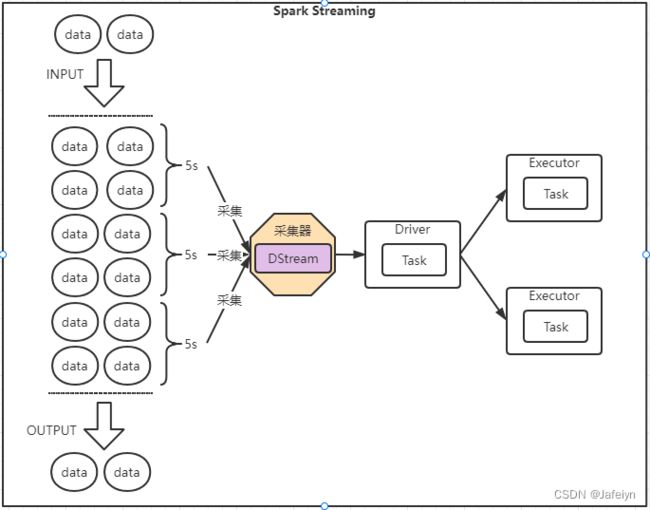

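Spark Streaming is used for stream processing. It provides scalable, high-throughput, fault-tolerant, near-real-time processing in micro-batches (one batch per time interval). It accepts many input sources, such as Kafka, Flume, Twitter, ZeroMQ, and plain TCP sockets, and writes results to many sinks, such as HDFS and databases. (In Spark 2.x and later, the Twitter and ZeroMQ connectors are maintained in Apache Bahir rather than in Spark itself, and the Flume connector was removed in Spark 3.0.)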

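Once ingested, the data can be processed with Spark operators such as map, reduce, and join, and the results can be written to sinks such as HDFS or a database.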

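DStream (discretized stream): the sequence of data received over time. Internally, the data received during each time interval (the batch interval) becomes one RDD, and the sequence of these RDDs is the discretized stream. A DStream can be thought of as a wrapper around RDDs for real-time processing.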
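To make "one RDD per batch interval" concrete, here is a Spark-free sketch that models discretization with plain Scala collections. It assumes each event carries an arrival timestamp in milliseconds and groups events into consecutive batch intervals; `DiscretizeSketch`, `Event`, and `discretize` are hypothetical names that only mimic what Spark does internally, with each inner `Seq` standing in for one RDD.

```scala
object DiscretizeSketch {
  // An event that arrived at timeMs (milliseconds since the stream started)
  final case class Event(timeMs: Long, value: String)

  // Groups events into consecutive batches of batchMs each.
  // Batch i covers [i * batchMs, (i + 1) * batchMs); each inner Seq stands in for one RDD.
  def discretize(events: Seq[Event], batchMs: Long): Vector[Seq[String]] = {
    require(batchMs > 0, "batch interval must be positive")
    if (events.isEmpty) Vector.empty
    else {
      val lastBatch = (events.map(_.timeMs).max / batchMs).toInt
      val byBatch = events.groupBy(e => (e.timeMs / batchMs).toInt)
      // Intervals with no data still yield an (empty) batch, as a DStream does
      Vector.tabulate(lastBatch + 1)(i => byBatch.getOrElse(i, Seq.empty).sortBy(_.timeMs).map(_.value))
    }
  }

  def main(args: Array[String]): Unit = {
    val events = Seq(Event(100, "a"), Event(2900, "b"), Event(3100, "c"), Event(9500, "d"))
    // With a 3-second batch interval: Vector(List(a, b), List(c), List(), List(d))
    println(discretize(events, 3000))
  }
}
```

Running it shows that an interval with no data still produces a batch, just an empty one.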

Features

- Ease of use

- Easy integration with the rest of the Spark ecosystem

- Fault tolerance

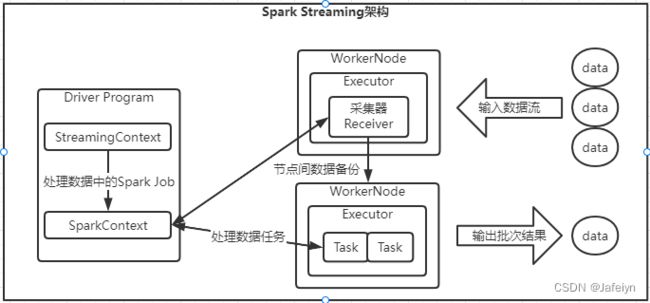

Architecture

Backpressure

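Before Spark 1.5, the receiver's ingestion rate could be capped by setting the static configuration parameter spark.streaming.receiver.maxRate. This prevents out-of-memory errors, but when the cluster can process data faster than maxRate allows, the cap leaves resources idle.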

To match the ingestion rate to the cluster's processing capacity, Spark 1.5 introduced backpressure, which adjusts the ingestion rate dynamically.

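Backpressure: the receiver's ingestion rate is adjusted dynamically based on job execution information fed back by the job scheduler. Enable it with spark.streaming.backpressure.enabled=true; the default is false (disabled).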
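A minimal configuration sketch for the parameters above. The numbers are arbitrary placeholders, and `BackpressureConf` is a hypothetical name. Two settings go beyond the text: spark.streaming.backpressure.initialRate (the receiver rate before the first feedback arrives) and spark.streaming.kafka.maxRatePerPartition (the per-partition cap used by the Kafka Direct API). Both are standard Spark settings, but they are worth checking against your Spark version's configuration page.

```scala
import org.apache.spark.SparkConf

object BackpressureConf {
  // Builds a SparkConf with backpressure on; the numbers are placeholders, not recommendations
  def build(): SparkConf = new SparkConf()
    .setMaster("local[*]")
    .setAppName("Spark-Streaming")
    // Enable dynamic rate control (default: false)
    .set("spark.streaming.backpressure.enabled", "true")
    // Static upper bound per receiver in records per second; the dynamic rate is capped by it
    .set("spark.streaming.receiver.maxRate", "1000")
    // Receiver rate used for the first batch, before any feedback is available
    .set("spark.streaming.backpressure.initialRate", "100")
    // Upper bound per Kafka partition in records per second, for the Direct API
    .set("spark.streaming.kafka.maxRatePerPartition", "1000")
}
```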

DStream

Creating a DStream from an RDD queue

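Create a DStream with ssc.queueStream(queueOfRDDs). By default (oneAtATime = true), each RDD pushed into the queue is processed as one batch of the DStream. With oneAtATime = false, as in the example below, all RDDs queued by the time a batch starts are unioned into that batch.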

package com.itjeffrey.spark.streaming.dstream.rdd

import org.apache.spark.SparkConf
import org.apache.spark.rdd.RDD
import org.apache.spark.streaming.dstream.{DStream, InputDStream}
import org.apache.spark.streaming.{Seconds, StreamingContext}

import java.util.concurrent.TimeUnit
import scala.collection.mutable

/**
 * Create a DStream from an RDD queue
 *
 * @From: Jeffrey
 * @Date: 2022/11/22
 */
object RddStream {
  def main(args: Array[String]): Unit = {
    val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark-Streaming")
    val ssc = new StreamingContext(conf, Seconds(3))

    // Create the RDD queue and build a DStream on top of it
    // oneAtATime = false: every RDD queued by batch time is unioned into that batch
    val queue = new mutable.Queue[RDD[Int]]()
    val ids: InputDStream[Int] = ssc.queueStream(queue, oneAtATime = false)
    val map: DStream[(Int, Int)] = ids.map((_, 1))
    val value: DStream[(Int, Int)] = map.reduceByKey(_ + _)
    value.print()

    ssc.start()

    // After the context starts, push one RDD into the queue every 2 s, 5 times in total.
    // queueStream reads the queue from another thread, so guard writes with synchronized
    for (_ <- 1 to 5) {
      queue.synchronized {
        queue += ssc.sparkContext.makeRDD(1 to 300, 10)
      }
      TimeUnit.SECONDS.sleep(2)
    }

    ssc.awaitTermination()
  }
}
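The second argument of queueStream (oneAtATime) decides how many queued RDDs one batch consumes. The Spark-free sketch below models that rule, with plain `Seq`s standing in for RDDs. With oneAtATime = true, a batch takes only the head of the queue. With false, it drains everything queued so far, which Spark unions into one RDD. `QueueBatchSketch` and `nextBatch` are hypothetical names, and the model ignores queueStream's optional defaultRDD argument.

```scala
import scala.collection.mutable

object QueueBatchSketch {
  // Returns the data for one batch, consuming it from the queue the way queueStream does.
  // Each inner Seq stands in for one queued RDD.
  def nextBatch[T](queue: mutable.Queue[Seq[T]], oneAtATime: Boolean): Seq[T] =
    queue.synchronized {
      if (queue.isEmpty) Seq.empty          // nothing queued: empty batch
      else if (oneAtATime) queue.dequeue()  // only the oldest queued RDD
      else {
        val all = queue.toList.flatten      // union of every queued RDD
        queue.clear()
        all
      }
    }

  def main(args: Array[String]): Unit = {
    val q = mutable.Queue(Seq(1, 2), Seq(3), Seq(4))
    println(nextBatch(q, oneAtATime = true))  // List(1, 2)
    println(nextBatch(q, oneAtATime = false)) // List(3, 4)
    println(nextBatch(q, oneAtATime = false)) // List()
  }
}
```

In the RddStream example (3 s batches, one RDD pushed every 2 s, oneAtATime = false), this means some batches may contain the data of two pushed RDDs and others only one.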

Custom receiver

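Usage: extend Receiver and implement the onStart and onStop methods. Neither method may block indefinitely, so onStart typically starts its own thread to receive data.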

package com.itjeffrey.spark.streaming.dstream.custom

import org.apache.spark.SparkConf
import org.apache.spark.storage.StorageLevel
import org.apache.spark.streaming.dstream.ReceiverInputDStream
import org.apache.spark.streaming.receiver.Receiver
import org.apache.spark.streaming.{Seconds, StreamingContext}

import java.util.Random
import java.util.concurrent.TimeUnit

/**
 * Custom receiver: generates random digits and feeds them into the stream
 *
 * @From: Jeffrey
 * @Date: 2022/11/22
 */
object CustomReceiverDemo {
  def main(args: Array[String]): Unit = {
    val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark-Streaming")
    val ssc = new StreamingContext(conf, Seconds(3))

    // Create the custom receiver, then collect and print its data
    val dStream: ReceiverInputDStream[String] = ssc.receiverStream(new CustomReceiver())
    dStream.print()

    ssc.start()
    ssc.awaitTermination()
  }

  // Custom receiver with an explicit storage level
  class CustomReceiver extends Receiver[String](StorageLevel.MEMORY_ONLY) {
    // @volatile: written by onStop, read by the receiving thread
    @volatile private var toStart = true

    // Start the receiver: onStart must return quickly, so receive on a separate thread
    override def onStart(): Unit = {
      // Reset the flag so that a receiver restarted by Spark produces data again
      toStart = true
      new Thread(new Runnable {
        override def run(): Unit = {
          val random = new Random()
          while (toStart) {
            val data: String = random.nextInt(10).toString
            // store: hands the record to Spark, which keeps it at the receiver's
            // storage level and turns the stored data into the batch RDDs
            store(data)
            TimeUnit.MILLISECONDS.sleep(500)
          }
        }
      }).start()
    }

    // Stop the receiver: only signals the receiving thread to exit its loop
    override def onStop(): Unit = {
      toStart = false
    }
  }
}
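The receiver contract above can be tried out without Spark: onStart returns immediately, the work happens on a background thread, and onStop only signals that thread. The sketch below uses a hypothetical stand-in, `ToyReceiver`, whose `store` just appends to an in-memory buffer instead of handing data to Spark. Like the corrected receiver above, it resets the stop flag in onStart, so a restarted receiver produces data again.

```scala
import java.util.concurrent.ConcurrentLinkedQueue

// Hypothetical stand-in for a Receiver: store() appends to a local buffer instead of Spark's block store
class ToyReceiver(intervalMs: Long) {
  val stored = new ConcurrentLinkedQueue[String]()
  @volatile private var running = false
  private var worker: Thread = _

  private def store(data: String): Unit = stored.add(data)

  // Must not block: start a worker thread and return at once
  def onStart(): Unit = {
    running = true
    val random = new java.util.Random()
    worker = new Thread(() => {
      while (running) {
        store(random.nextInt(10).toString)
        Thread.sleep(intervalMs)
      }
    })
    worker.start()
  }

  // Only signals the worker; its loop exits after the current iteration
  def onStop(): Unit = running = false

  // Helper for demos and tests: wait until the worker thread has finished
  def awaitWorker(): Unit = if (worker != null) worker.join()
}

object ToyReceiverDemo {
  def main(args: Array[String]): Unit = {
    val r = new ToyReceiver(intervalMs = 50)
    r.onStart()
    Thread.sleep(300)
    r.onStop()
    r.awaitWorker()
    println(s"stored ${r.stored.size} records")
  }
}
```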

Kafka data source (key topic)

Spark Streaming consumes data from a Kafka source.

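As for which API to choose: newer versions typically use the Direct API. The Executors that perform the computation consume from Kafka themselves and control the consumption rate. Earlier versions used the Receiver API, in which a dedicated receiver Executor collects the data and forwards it to other Executors for computation. If data arrives faster than it can be processed, this design can cause problems such as out-of-memory errors on the compute nodes.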
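In Direct mode, each batch's RDD has one partition per Kafka topic-partition. Each partition reads an offset range [from, until) that the driver computes before the batch runs, which is how the consumption rate stays under Spark's control. The Spark-free sketch below models that planning step. `DirectPlanSketch` and `planBatch` are hypothetical names. The optional cap is modelled on spark.streaming.kafka.maxRatePerPartition (records per second per partition, multiplied by the batch length). Spark's real logic also folds in backpressure, which this sketch leaves out.

```scala
object DirectPlanSketch {
  // Offset range [from, until) that one RDD partition reads from one Kafka partition
  final case class OffsetRange(partition: Int, from: Long, until: Long) {
    def count: Long = until - from
  }

  // current: next offset to read per partition; latest: log-end offset per partition.
  // maxRatePerPartition (records/s) times batchSeconds optionally caps each range.
  def planBatch(
      current: Map[Int, Long],
      latest: Map[Int, Long],
      maxRatePerPartition: Option[Long],
      batchSeconds: Long): Seq[OffsetRange] =
    current.toSeq.sortBy(_._1).map { case (p, from) =>
      val end = latest.getOrElse(p, from)
      val capped = maxRatePerPartition.fold(end)(rate => math.min(end, from + rate * batchSeconds))
      OffsetRange(p, from, capped)
    }

  def main(args: Array[String]): Unit = {
    val ranges = planBatch(Map(0 -> 100L, 1 -> 40L), Map(0 -> 1000L, 1 -> 50L), Some(100L), batchSeconds = 3)
    // Partition 0 is capped at 100 records/s * 3 s = 300 records; partition 1 has only 10 new records
    ranges.foreach(println)
  }
}
```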

Kafka 0-10 Direct mode

Prerequisite: Kafka must be installed and running locally, with the topic “spark_streaming_topic” created.

Add the dependencies:

<!--Kafka data source dependency-->
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-streaming-kafka-0-10_2.12</artifactId>
    <version>3.0.0</version>
</dependency>

<!--Jackson dependency. Note: its version must be compatible with spark-streaming-kafka, otherwise the program fails at startup; in my setup, Spark 3.0.0 required jackson-core 2.10.0 or later-->
<dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-core</artifactId>
    <version>2.10.0</version>
</dependency>

Consuming messages from a Kafka source

package com.itjeffrey.spark.streaming.dstream.kafka

import org.apache.kafka.clients.consumer.{ConsumerConfig, ConsumerRecord}
import org.apache.spark.SparkConf
import org.apache.spark.streaming.dstream.InputDStream
import org.apache.spark.streaming.kafka010.{ConsumerStrategies, KafkaUtils, LocationStrategies}
import org.apache.spark.streaming.{Seconds, StreamingContext}

/**
 * Kafka data source
 *
 * @From: Jeffrey
 * @Date: 2022/11/22
 */
object KafkaStream {
  def main(args: Array[String]): Unit = {
    val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark-Streaming")
    val ssc = new StreamingContext(conf, Seconds(3))

    // Kafka consumer configuration
    val kafkaParams: Map[String, Object] = Map[String, Object](
      ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG -> "127.0.0.1:9092",
      ConsumerConfig.GROUP_ID_CONFIG -> "spark_kafka_group",
      ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG -> "org.apache.kafka.common.serialization.StringDeserializer",
      ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG -> "org.apache.kafka.common.serialization.StringDeserializer"
    )

    // Create a Direct-mode Kafka DStream through KafkaUtils
    val kStream: InputDStream[ConsumerRecord[String, String]] = KafkaUtils.createDirectStream[String, String](
      ssc,
      // Location strategy: how consumers for each TopicPartition are scheduled on the executors.
      // PreferConsistent: use this in most cases; it distributes partitions evenly across all executors
      LocationStrategies.PreferConsistent,
      // Consumer strategy: the topics to subscribe to plus the Kafka consumer configuration
      ConsumerStrategies.Subscribe[String, String](Set("spark_streaming_topic"), kafkaParams))

    // Print the value of each message
    kStream.map(_.value()).print()

    ssc.start()
    ssc.awaitTermination()
  }
}

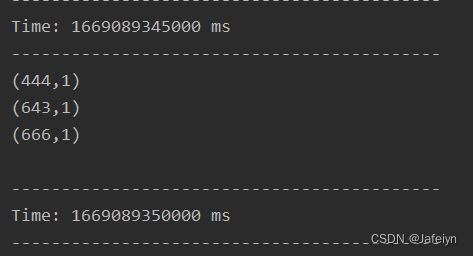

Start Kafka and the KafkaStream test program above, then send test messages to the Kafka test topic “spark_streaming_topic”.


The console output is as follows:

DStream transformations

Stateless transformations

“Stateless” refers to the data: with a stateless transformation, data from one batch is not used in the next batch.

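A stateless transformation applies an ordinary RDD transformation to each batch separately, that is, to every RDD in the DStream. (Note: in Spark versions before 1.3, the key-value DStream operations required import StreamingContext._ in Scala; since 1.3 they are available through implicit conversions without that import.)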

Stateless transformations process only the data of the current batch. They include map, flatMap, filter, repartition, reduceByKey, groupByKey, join, and so on.

Stateful transformations

“Stateful” means that data from one batch is carried over and used in later batches.

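Stateful transformations must keep state across batches. They include updateStateByKey and the window-based aggregations. (transform, by contrast, applies an arbitrary RDD function to each batch independently, so by itself it is stateless.)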
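The difference between the two kinds of transformation can be seen without a cluster. The sketch below replays a list of batches in two ways. A per-batch count models what reduceByKey does on each batch. A running count threads state from batch to batch, using the same update function as the updateStateByKey example later in this section. `StateSketch` and its methods are hypothetical names in a plain-Scala model, not Spark's implementation. The model mirrors one detail of updateStateByKey: the update function is called for every key that already has state, even when that key is absent from the current batch.

```scala
object StateSketch {
  type Counts = Map[String, Int]

  // Stateless: each batch is reduced on its own (like reduceByKey on every batch's RDD)
  def perBatchCounts(batches: Seq[Seq[String]]): Seq[Counts] =
    batches.map(_.groupBy(identity).map { case (k, vs) => k -> vs.size })

  // Same shape as the updateStateByKey function: (new values for a key, previous state) => new state
  val update: (Seq[Int], Option[Int]) => Option[Int] =
    (seq, buffer) => Option(buffer.getOrElse(0) + seq.sum)

  // Stateful: the state after each batch is the input state for the next one
  def runningCounts(batches: Seq[Seq[String]]): Seq[Counts] =
    batches.scanLeft(Map.empty[String, Int]) { (state, batch) =>
      val newValues: Map[String, Seq[Int]] =
        batch.groupBy(identity).map { case (k, vs) => k -> vs.map(_ => 1) }
      // Keys that already have state are updated too, with an empty Seq of new values
      val keys = state.keySet ++ newValues.keySet
      keys.flatMap(k => update(newValues.getOrElse(k, Seq.empty), state.get(k)).map(k -> _)).toMap
    }.tail

  def main(args: Array[String]): Unit = {
    val batches = Seq(Seq("a", "b", "a"), Seq("a"))
    println(perBatchCounts(batches)) // the second batch only counts its own "a"
    println(runningCounts(batches))  // the second batch carries "a" -> 3 and "b" -> 1 forward
  }
}
```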

DStream transformation examples

package com.itjeffrey.spark.streaming.dstream.transfer

import org.apache.spark.SparkConf

import org.apache.spark.streaming.{Seconds, StreamingContext}

import org.apache.spark.streaming.dstream.{DStream, ReceiverInputDStream}

/**

* 无状态转换操作--join

*

* @From: Jeffrey

* @Date: 2022/11/23

*/

object JoinTransfer {

def main(args: Array[String]): Unit = {

val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark-Streaming")

val ssc = new StreamingContext(conf, Seconds(5))

val lines8: ReceiverInputDStream[String] = ssc.socketTextStream("127.0.0.1", 8888)

val lines9: ReceiverInputDStream[String] = ssc.socketTextStream("127.0.0.1", 9999)

val map8: DStream[(String, Int)] = lines8.map((_, 8))

val map9: DStream[(String, Int)] = lines9.map((_, 9))

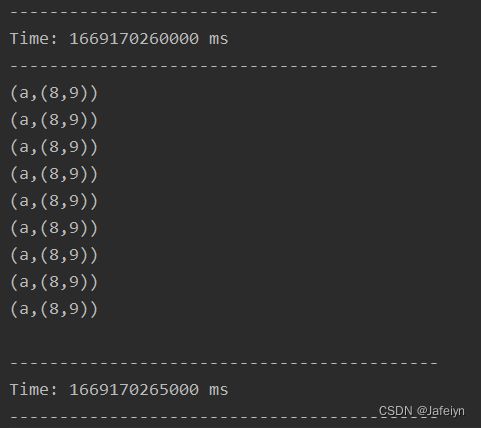

//join-将两个DStream流中的相同key数据的value组合在一起,底层其实是两个RDD的join操作

val res: DStream[(String, (Int, Int))] = map8.join(map9)

res.print()

ssc.start()

ssc.awaitTermination()

}

}
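DStream join only matches records that arrive in the same batch. A key typed into port 8888 in one 5-second batch and into port 9999 in the next batch is never joined. The plain-Scala sketch below models the per-batch inner join. `JoinSketch`, `joinBatch`, and `joinStreams` are hypothetical names. The model includes the cross product that RDD join produces when a key appears several times.

```scala
object JoinSketch {
  // Inner join of one batch from each stream: every pair of values that share a key
  def joinBatch[K, V, W](left: Seq[(K, V)], right: Seq[(K, W)]): Seq[(K, (V, W))] = {
    val rightByKey: Map[K, Seq[W]] = right.groupBy(_._1).map { case (k, kvs) => k -> kvs.map(_._2) }
    for {
      (k, v) <- left
      w <- rightByKey.getOrElse(k, Seq.empty)
    } yield (k, (v, w))
  }

  // Joins two streams batch by batch; batches are paired by position (same batch time)
  def joinStreams[K, V, W](left: Seq[Seq[(K, V)]], right: Seq[Seq[(K, W)]]): Seq[Seq[(K, (V, W))]] =
    left.zip(right).map { case (l, r) => joinBatch(l, r) }

  def main(args: Array[String]): Unit = {
    val port8888 = Seq(Seq(("spark", 8), ("kafka", 8)), Seq(("hello", 8)))
    val port9999 = Seq(Seq(("spark", 9)), Seq(("kafka", 9)))
    // "spark" meets in batch 1; "kafka" arrives in different batches and is never joined
    println(joinStreams(port8888, port9999))
  }
}
```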

package com.itjeffrey.spark.streaming.dstream.transfer

import org.apache.spark.SparkConf

import org.apache.spark.streaming.dstream.{DStream, ReceiverInputDStream}

import org.apache.spark.streaming.{Duration, Seconds, StreamingContext}

/**

* DStream转换操作

* @From: Jeffrey

* @Date: 2022/11/22

*/

object StateTransfer {

def main(args: Array[String]): Unit = {

val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark-Streaming")

val duration: Duration = Seconds(5)

val ssc = new StreamingContext(conf, duration)

//有状态转化操作时必须加上checkpoint目录用于存放状态数据

ssc.checkpoint("datas/state")

//world-count task

val words: ReceiverInputDStream[String] = ssc.socketTextStream("127.0.0.1", 9000)

val map: DStream[(String, Int)] = words.map((_, 1))

//无状态转化操作

// val value: DStream[(String, Int)] = map.reduceByKey(_ + _)

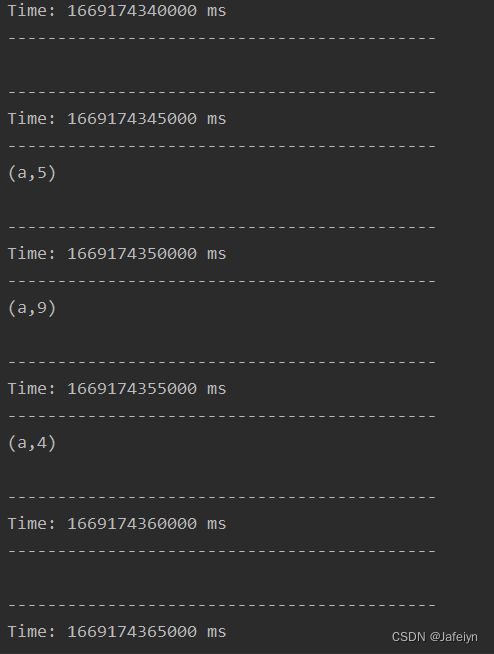

//有状态转化操作,根据key更新value状态数据

val value: DStream[(String, Int)] = map.updateStateByKey(

(seq: Seq[Int], buffer: Option[Int]) => {

//缓冲区的值加上当前采集周期相同key对应的value值之和

Option(buffer.getOrElse(0) + seq.sum)

}

)

value.print()

ssc.start()

ssc.awaitTermination()

}

}

- DStream transformation: transform

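package com.itjeffrey.spark.streaming.dstream.transfer

import org.apache.spark.SparkConf
import org.apache.spark.streaming.dstream.{DStream, ReceiverInputDStream}
import org.apache.spark.streaming.{Seconds, StreamingContext}

/**
 * transform operation
 * @From: Jeffrey
 * @Date: 2022/11/22
 */
object Transform {
  def main(args: Array[String]): Unit = {
    val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark-Streaming")
    val ssc = new StreamingContext(conf, Seconds(5))
    val words: ReceiverInputDStream[String] = ssc.socketTextStream("127.0.0.1", 9000)

    /**
     * transform: operate directly on the underlying RDD of each batch
     * Use cases:
     * 1. the DStream API lacks an operation that the RDD API provides
     * 2. some code needs to run periodically (once per batch) on the Driver
     */
    // Runs on the Driver (once, when the DStream graph is defined)
    val transformed: DStream[String] = words.transform(
      rdd => {
        // Runs on the Driver periodically: once per batch, each batch producing one RDD
        rdd.map(
          str => {
            // Runs on the Executors
            str
          })
      }
    )

    // Runs on the Driver (once)
    val mapped: DStream[String] = words.map(
      str => {
        // Runs on the Executors
        str
      }
    )

    // At least one output operation is required, otherwise ssc.start() fails
    transformed.print()
    mapped.print()

    ssc.start()
    ssc.awaitTermination()
  }
}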

- DStream transformation: window operations

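Window operations let you set a window length and a sliding interval, so that each computation covers the stream's recent state. A window operation takes two parameters: the window duration and the slide duration. Put simply, the data from several batches is treated as one window and computed together.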
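A small model shows how the window length and slide length decide which batches each computation sees. The sketch below is plain Scala, not Spark: each inner `Seq` is one batch of words, and `WindowSketch.windowCounts` is a hypothetical helper. Like Spark, it requires both durations to be multiples of the batch interval. It assumes the first windows are simply partial, covering only the batches received so far.

```scala
object WindowSketch {
  // Word counts per window; a window is emitted every slideSec seconds
  def windowCounts(batches: Seq[Seq[String]], batchSec: Int, windowSec: Int, slideSec: Int): Seq[Map[String, Int]] = {
    require(windowSec % batchSec == 0 && slideSec % batchSec == 0,
      "window and slide durations must be multiples of the batch interval")
    val batchesPerWindow = windowSec / batchSec
    val batchesPerSlide = slideSec / batchSec
    // Batch k (1-based) ends at k * batchSec; a window fires when that time is a multiple of slideSec
    (1 to batches.size).filter(_ % batchesPerSlide == 0).map { k =>
      val inWindow = batches.slice(math.max(0, k - batchesPerWindow), k).flatten
      inWindow.groupBy(identity).map { case (w, ws) => w -> ws.size }
    }
  }

  def main(args: Array[String]): Unit = {
    val batches = Seq(Seq("a"), Seq("a", "b"), Seq("b"), Seq("c"))
    // window(10 s) with the default 5 s slide: consecutive windows overlap, so most batches are counted twice
    windowCounts(batches, batchSec = 5, windowSec = 10, slideSec = 5).foreach(println)
    // window(10 s, 10 s): tumbling windows, each batch is counted once
    windowCounts(batches, batchSec = 5, windowSec = 10, slideSec = 10).foreach(println)
  }
}
```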

package com.itjeffrey.spark.streaming.dstream.transfer

import org.apache.spark.SparkConf
import org.apache.spark.streaming.dstream.{DStream, ReceiverInputDStream}
import org.apache.spark.streaming.{Seconds, StreamingContext}

/**
 * Stateful transformation: window operations
 *
 * @From: Jeffrey
 * @Date: 2022/11/23
 */
object WindowTransfer {
  def main(args: Array[String]): Unit = {
    val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark-Streaming")
    val ssc = new StreamingContext(conf, Seconds(5))
    // Required by reduceByKeyAndWindow with an inverse function
    ssc.checkpoint("datas/state")

    val lines: ReceiverInputDStream[String] = ssc.socketTextStream("127.0.0.1", 9000)
    val map: DStream[(String, Int)] = lines.map((_, 1))

    // window (stateless): treats several batches as one whole; each slide of the window triggers one computation
    // 1. The window length (and the slide length) must be a multiple of the batch interval
    // 2. By default the window slides by one batch interval, so overlapping windows count the same
    //    data more than once; set the slide length (step) to change this
    // window parameter 1: windowDuration, the window length; parameter 2: slideDuration, the slide length
    val windowDS: DStream[(String, Int)] = map.window(Seconds(10))
    // val windowDS: DStream[(String, Int)] = map.window(Seconds(10), Seconds(10))
    val res: DStream[(String, Int)] = windowDS.reduceByKey(_ + _)

    // reduceByKeyAndWindow (stateful): when the window is long and the slide is short, it adds the data
    // entering the window and subtracts the data leaving it, avoiding repeated computation
    // val res: DStream[(String, Int)] = map.reduceByKeyAndWindow(_ + _, _ - _, Seconds(10), Seconds(5))

    res.print()
    ssc.start()
    ssc.awaitTermination()
  }
}
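The inverse-function variant of reduceByKeyAndWindow rests on a simple identity: new window = previous window + batch entering - batch leaving. The plain-Scala sketch below applies that update with `+` and `-`, as in the commented reduceByKeyAndWindow line above, and checks the result against recomputing every window from scratch. It is a model with hypothetical names that slides by one batch at a time. It also reproduces one real behaviour: keys whose count drops to 0 remain in the result unless they are filtered out, which is why Spark's reduceByKeyAndWindow has an overload that takes a filterFunc.

```scala
object IncrementalWindowSketch {
  type Counts = Map[String, Int]

  def countBatch(batch: Seq[String]): Counts =
    batch.groupBy(identity).map { case (w, ws) => w -> ws.size }

  // Combines two count maps key by key with op (+ for entering data, - for leaving data)
  def combine(a: Counts, b: Counts, op: (Int, Int) => Int): Counts =
    (a.keySet ++ b.keySet).map(k => k -> op(a.getOrElse(k, 0), b.getOrElse(k, 0))).toMap

  // Slides by one batch: add the batch entering the window, subtract the one leaving it
  def incremental(batches: Seq[Seq[String]], batchesPerWindow: Int): Seq[Counts] = {
    val counts = batches.map(countBatch)
    counts.indices.scanLeft(Map.empty[String, Int]) { (window, i) =>
      val entered = combine(window, counts(i), _ + _)
      val leaving = i - batchesPerWindow
      if (leaving >= 0) combine(entered, counts(leaving), _ - _) else entered
    }.tail
  }

  // Reference: recompute every window from scratch
  def recompute(batches: Seq[Seq[String]], batchesPerWindow: Int): Seq[Counts] =
    batches.indices.map(i => countBatch(batches.slice(math.max(0, i + 1 - batchesPerWindow), i + 1).flatten))

  def main(args: Array[String]): Unit = {
    val batches = Seq(Seq("a", "b"), Seq("a"), Seq("c"))
    val inc = incremental(batches, batchesPerWindow = 2)
    println(inc) // the last window still holds "b" -> 0
    println(inc.map(_.filter(_._2 != 0)) == recompute(batches, 2))
  }
}
```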

DStream output

Output operations act on the result data produced by the transformations, for example writing it to a database or printing it to the console.

If a StreamingContext has no output operation registered, the program fails at startup.

Common DStream output operations include print, saveAsTextFiles, saveAsHadoopFiles, and foreachRDD (the general-purpose output).

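// Unlike print, foreachRDD prints no batch-time header; it exposes each batch's underlying RDD
res.foreachRDD(rdd => {
  // Any RDD operation can be used here; collect() brings the whole batch to the Driver,
  // so it only suits small results
  rdd.collect().foreach(println)
  println("---------------")
})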

Gracefully stopping a StreamingContext

package com.itjeffrey.spark.streaming.close

import org.apache.spark.SparkConf
import org.apache.spark.streaming.dstream.ReceiverInputDStream
import org.apache.spark.streaming.{Seconds, StreamingContext, StreamingContextState}

import java.util.Random

/**
 * Gracefully stop a StreamingContext
 *
 * @From: Jeffrey
 * @Date: 2022/11/23
 */
object StreamingGracefullyClose {
  def main(args: Array[String]): Unit = {
    val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark-Streaming")
    val ssc = new StreamingContext(conf, Seconds(5))
    val lines: ReceiverInputDStream[String] = ssc.socketTextStream("127.0.0.1", 9000)
    lines.print()

    // Start the receiver
    ssc.start()

    // Start a separate thread that stops the StreamingContext
    new Thread(new Runnable {
      override def run(): Unit = {
        var running = true
        while (running) {
          // TODO: the stop flag can be kept in an external system such as MySQL, Redis, ZooKeeper, HDFS, or Kafka
          // Here, reading a stop flag from that external system is simulated with a random number
          val rand: Int = new Random().nextInt(10)
          println("get toStop flag from Third-party programs: " + rand)
          val toStop: Boolean = rand == 6
          if (toStop) {
            // Check the StreamingContext state and stop it only if it is active
            val state: StreamingContextState = ssc.getState()
            if (state == StreamingContextState.ACTIVE) {
              // Graceful stop: stop receiving new data, finish processing the data already received, then stop
              ssc.stop(stopSparkContext = true, stopGracefully = true)
            }
            // Leave the polling loop, which ends this thread (System.exit would kill the whole JVM)
            running = false
          } else {
            Thread.sleep(2000)
          }
        }
      }
    }).start()

    // Block the main thread until the StreamingContext stops
    ssc.awaitTermination()
  }
}
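Besides polling an external flag, Spark can stop the StreamingContext gracefully when the JVM shuts down, for example on a SIGTERM from a plain `kill` (not `kill -9`, which skips shutdown hooks). StreamingContext registers a shutdown hook, and the spark.streaming.stopGracefullyOnShutdown setting (default false) makes that hook stop gracefully. A minimal configuration sketch follows; `GracefulShutdownConf` is a hypothetical name, and the setting is worth checking against your Spark version's configuration page.

```scala
import org.apache.spark.SparkConf

object GracefulShutdownConf {
  // With this flag, StreamingContext's JVM shutdown hook stops with stopGracefully = true,
  // so data that was already received is processed before the process exits
  def build(): SparkConf = new SparkConf()
    .setMaster("local[*]")
    .setAppName("Spark-Streaming")
    .set("spark.streaming.stopGracefullyOnShutdown", "true")
}
```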

Recovering StreamingContext data

package com.itjeffrey.spark.streaming.close

import org.apache.spark.SparkConf
import org.apache.spark.streaming.dstream.ReceiverInputDStream
import org.apache.spark.streaming.{Seconds, StreamingContext}

/**
 * Recover StreamingContext data from a checkpoint
 *
 * @From: Jeffrey
 * @Date: 2022/11/23
 */
object StreamingResumeData {
  def main(args: Array[String]): Unit = {
    // Argument 1: the checkpoint directory; earlier data is saved there and restored from it on restart
    // Argument 2: a function that creates a new StreamingContext; it is called only when there is
    // neither an active context nor checkpoint data to recover from
    val ssc: StreamingContext = StreamingContext.getActiveOrCreate("datas/cp", () => {
      val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark-Streaming")
      val ssc = new StreamingContext(conf, Seconds(5))
      // Set the checkpoint directory inside the creating function, together with the DStream definitions
      ssc.checkpoint("datas/cp")
      val lines: ReceiverInputDStream[String] = ssc.socketTextStream("127.0.0.1", 9000)
      lines.print()
      ssc
    })

    // A thread that stops the StreamingContext gracefully can also be started here
    // (GracefullyClose: a hypothetical Runnable holding the stop logic from the previous section)
    //new Thread(new GracefullyClose(ssc)).start()

    ssc.start()
    ssc.awaitTermination()
  }
}

Basic demo

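- Open port 9000 from cmd (prerequisite: netcat must be installed on Windows)

Download netcat from: https://eternallybored.org/misc/netcat/

Click “Small update: netcat 1.12”.

Unzip netcat-win32-1.12 and add the extracted directory to the system Path environment variable.

Open port 9000 from cmd and wait for text input:

#Windows
nc -lp 9000

#Linux
nc -lk 9000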
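If installing netcat is inconvenient, a few lines of Scala using java.net.ServerSocket can play the same role for these demos. `LineServer` is a hypothetical helper, not part of Spark. It waits for one client (the Spark socket receiver) on a port and writes one line at a time, much like typing into `nc -lk 9000`. Unlike `nc -lk`, it serves a single connection. The sample lines and the 1-second delay are arbitrary.

```scala
import java.io.PrintWriter
import java.net.ServerSocket

// Hypothetical stand-in for `nc -lk 9000`: serves a single client, then returns
object LineServer {
  // Waits for one client and sends each line, pausing delayMs between lines
  def serve(server: ServerSocket, lines: Seq[String], delayMs: Long): Unit = {
    val client = server.accept()
    try {
      // autoFlush = true: println flushes, so each line reaches the receiver immediately
      val out = new PrintWriter(client.getOutputStream, true)
      lines.foreach { line =>
        out.println(line)
        Thread.sleep(delayMs)
      }
    } finally client.close()
  }

  def main(args: Array[String]): Unit = {
    val server = new ServerSocket(9000)
    try serve(server, Seq("hello spark", "hello streaming", "spark"), delayMs = 1000)
    finally server.close()
  }
}
```

Start LineServer first, then the streaming program; the receiver connects to 127.0.0.1:9000 and gets one line per second.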

- Word count demo: listen on local port 9000 and process the text stream in batches collected every 5 s

package com.itjeffrey.spark.streaming.demo

import org.apache.spark.SparkConf
import org.apache.spark.streaming.dstream.{DStream, ReceiverInputDStream}
import org.apache.spark.streaming.{Duration, Seconds, StreamingContext}

/**
 * Spark Streaming: a near-real-time, micro-batch stream processing framework
 *
 * @From: Jeffrey
 * @Date: 2022/11/21
 */
object SparkStreaming_WC {
  def main(args: Array[String]): Unit = {
    // Note: master needs at least 2 threads, one for the receiver and at least one to run the tasks
    val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark-Streaming")
    // Set the batch interval (collection period)
    // val duration: Duration = Duration(5000)   // 5000 ms
    // val duration: Duration = Minutes(1)       // requires importing org.apache.spark.streaming.Minutes
    val duration: Duration = Seconds(5)
    val ssc = new StreamingContext(conf, duration)

    // word-count task
    wordCountTask(ssc)

    // In Spark Streaming the receiver is a long-running task: do not stop the StreamingContext,
    // and do not let main return
    // Start the receiver
    ssc.start()
    // Wait for the receiver to stop
    ssc.awaitTermination()
  }

  def wordCountTask(ssc: StreamingContext): Unit = {
    // Read port data: each element is one line from the socket text stream
    val lines: ReceiverInputDStream[String] = ssc.socketTextStream("127.0.0.1", 9000)
    val words: DStream[String] = lines.flatMap(_.split(" "))
    val map: DStream[(String, Int)] = words.map((_, 1))
    val value: DStream[(String, Int)] = map.reduceByKey(_ + _)
    value.print()
  }
}