13. Getting Started with PyTorch: Reproducing VGG16 and Visualizing the Convolutional Network Graph (TensorBoard)

-

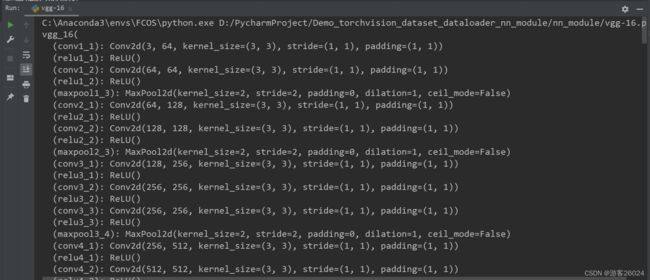

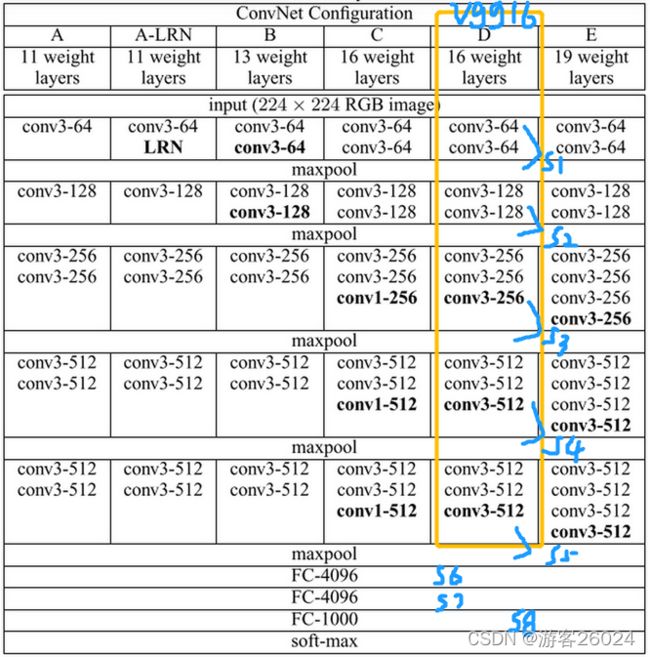

搭建VGG16网络

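The yellow box marks the VGG16 column of the VGG configuration table. The network is split here into 8 parts, s1 (stage 1), s2 (stage 2), s3 (stage 3), s4, s5, s6, s7 and s8; the term "stage" is borrowed from RetinaNet.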

- Analysis

Formula: out_size = 1+(in_size+2*padding_size-kernel_size)/stride (1), where the division is rounded down when it is not exact
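Formula (1) is easy to check numerically before using it to derive layer parameters. The helper below is a minimal sketch; the name `conv_out_size` is made up for illustration, and it uses floor division to round down when the division is not exact.

```python
def conv_out_size(in_size: int, kernel_size: int, padding: int = 0, stride: int = 1) -> int:
    """Formula (1): output size along one spatial dimension."""
    return 1 + (in_size + 2 * padding - kernel_size) // stride


# A 3*3 convolution with padding 1 and stride 1 keeps the size.
print(conv_out_size(224, kernel_size=3, padding=1, stride=1))  # 224
# A 2*2 max pooling with stride 2 halves it.
print(conv_out_size(224, kernel_size=2, padding=0, stride=2))  # 112
```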

1. input_1 224*224*3 -> 112*112*64

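It consists of 2 convolutional layers followed by 1 max-pooling layer.

224*224*3 -> 224*224*64

So input_1_1 -> (conv, relu)

Substituting into formula (1): 224 = 1+(224+2*padding_size-kernel_size)/stride

Assume stride=1 -> 224 = 1+(224+2*padding_size-kernel_size)

-> 0 = 1+2*padding_size-kernel_size

Choose padding_size = 1, kernel_size = 3 (the 3*3 kernel that VGG uses), which satisfies this equation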

nn.Conv2d(in_channels=3,out_channels=64,padding=1,kernel_size=3,stride=1)

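self.relu1_1 = nn.ReLU(inplace=False) [inplace controls whether the input tensor is overwritten with the result: False leaves the input untouched and returns the result in a new tensor; True overwrites the input]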
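The effect of `inplace` can be seen on a two-element tensor. This is a small illustrative sketch; the variable names are arbitrary.

```python
import torch
from torch import nn

x = torch.tensor([-1.0, 2.0])

# inplace=False: the result is a new tensor and x is left unchanged.
y = nn.ReLU(inplace=False)(x)
print(x.tolist())  # [-1.0, 2.0]
print(y.tolist())  # [0.0, 2.0]

# inplace=True: x itself is overwritten and the result shares its memory.
z = nn.ReLU(inplace=True)(x)
print(x.tolist())                    # [0.0, 2.0]
print(z.data_ptr() == x.data_ptr())  # True
```

Overwriting saves memory on large activations, but the original values of the input are lost afterwards.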

224*224*64 -> 224*224*64

input_1_2 -> (conv,relu)

nn.Conv2d(in_channels=64,out_channels=64,padding=1,kernel_size=3,stride=1)

self.relu1_2 = nn.ReLU(inplace=False)

224*224*64 -> 112*112*64

Next, work out the max-pooling layer, input_1_3

Substituting into formula (1): 112 = 1+(224+2*padding_size-kernel_size)/stride

Assume stride=2 -> 112 = 1+(112+padding_size-kernel_size/2)

-> 0 = 1+padding_size-kernel_size/2

Assume padding_size=0, so kernel_size/2=1

-> kernel_size=2

nn.MaxPool2d(padding=0,kernel_size=2,stride=2)
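The "assume" steps above pick one solution of an equation that has several. As a rough sketch, the search below lists every small (kernel_size, padding) pair that gives the required output size under formula (1) with floor division. The names `out_size` and `solutions` and the bound `padding <= kernel_size // 2` are choices made for this illustration.

```python
def out_size(in_size, kernel_size, padding, stride):
    """Formula (1) with floor division."""
    return 1 + (in_size + 2 * padding - kernel_size) // stride


def solutions(in_size, target, stride, max_kernel=7):
    """All (kernel_size, padding) pairs up to max_kernel that map in_size to target."""
    found = []
    for kernel_size in range(1, max_kernel + 1):
        for padding in range(kernel_size // 2 + 1):
            if out_size(in_size, kernel_size, padding, stride) == target:
                found.append((kernel_size, padding))
    return found


# Convolution, stride 1, 224 -> 224: includes (3, 1), the pair chosen above.
print(solutions(224, 224, stride=1))
# Max pooling, stride 2, 224 -> 112: includes (2, 0), the pair chosen above.
print(solutions(224, 112, stride=2))
```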

2.input_2 112*112*64 -> 56*56*128

It consists of 2 convolutional layers followed by 1 max-pooling layer.

112*112*64 -> 112*112*128

input2_1 -> (conv,relu)

nn.Conv2d(in_channels=64,out_channels=128,padding=1,kernel_size=3,stride=1)

self.relu2_1 = nn.ReLU(inplace=False)

112*112*128 -> 112*112*128

input2_2 -> (conv,relu)

nn.Conv2d(in_channels=128,out_channels=128,padding=1,kernel_size=3,stride=1)

self.relu2_2 = nn.ReLU(inplace=False)

112*112*128 -> 56*56*128

input2_3 -> maxpool

nn.MaxPool2d(padding=0,kernel_size=2,stride=2)

3.input_3 56*56*128 -> 28*28*256

It consists of 3 convolutional layers followed by 1 max-pooling layer.

56*56*128 -> 56*56*256

input3_1 -> (conv,relu)

nn.Conv2d(in_channels=128,out_channels=256,padding=1,kernel_size=3,stride=1)

self.relu3_1 = nn.ReLU(inplace=False)

56*56*256 -> 56*56*256

input3_2 -> (conv,relu)

nn.Conv2d(in_channels=256,out_channels=256,padding=1,kernel_size=3,stride=1)

self.relu3_2 = nn.ReLU(inplace=False)

56*56*256 -> 56*56*256

input3_3 -> (conv,relu)

nn.Conv2d(in_channels=256,out_channels=256,padding=1,kernel_size=3,stride=1)

self.relu3_3 = nn.ReLU(inplace=False)

56*56*256 -> 28*28*256

input_3_4 -> maxpool

nn.MaxPool2d(padding=0,kernel_size=2,stride=2)

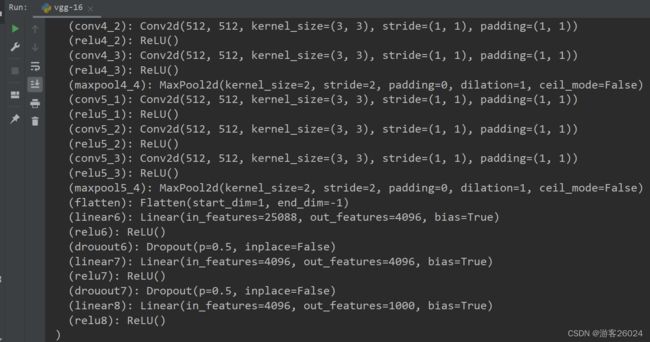

4.input_4 28*28*256 -> 14*14*512

It consists of 3 convolutional layers followed by 1 max-pooling layer.

28*28*256 -> 28*28*512

input_4_1 -> (conv,relu)

nn.Conv2d(in_channels=256,out_channels=512,padding=1,kernel_size=3,stride=1)

self.relu4_1 = nn.ReLU(inplace=False)

28*28*512 -> 28*28*512

input_4_2 -> (conv,relu)

nn.Conv2d(in_channels=512,out_channels=512,padding=1,kernel_size=3,stride=1)

self.relu4_2 = nn.ReLU(inplace=False)

28*28*512 -> 28*28*512

input_4_3 -> (conv,relu)

nn.Conv2d(in_channels=512,out_channels=512,padding=1,kernel_size=3,stride=1)

self.relu4_3 = nn.ReLU(inplace=False)

28*28*512 -> 14*14*512

input_4_4 -> maxpool

nn.MaxPool2d(padding=0,kernel_size=2,stride=2)

5.input_5 14*14*512 -> 7*7*512

It consists of 3 convolutional layers followed by 1 max-pooling layer.

14*14*512 -> 14*14*512

input5_1 -> (conv,relu)

nn.Conv2d(in_channels=512,out_channels=512,padding=1,kernel_size=3,stride=1)

self.relu5_1 = nn.ReLU(inplace=False)

14*14*512 -> 14*14*512

input5_2 -> (conv,relu)

nn.Conv2d(in_channels=512,out_channels=512,padding=1,kernel_size=3,stride=1)

self.relu5_2 = nn.ReLU(inplace=False)

14*14*512 -> 14*14*512

input5_3 -> (conv,relu)

nn.Conv2d(in_channels=512,out_channels=512,padding=1,kernel_size=3,stride=1)

self.relu5_3 = nn.ReLU(inplace=False)

14*14*512 -> 7*7*512

input_5_4 -> maxpool

nn.MaxPool2d(padding=0,kernel_size=2,stride=2)
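The shapes of the five convolutional stages above can be traced with formula (1) alone, without building the network. The sketch below does this in plain Python; `STAGES`, `out_size` and `trace_shapes` are names invented for the example.

```python
# (number of 3*3 convolutions, output channels) for stages 1 to 5
STAGES = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def out_size(in_size, kernel_size, padding, stride):
    """Formula (1) with floor division."""
    return 1 + (in_size + 2 * padding - kernel_size) // stride


def trace_shapes(size=224):
    """Return (height/width, channels) after the max pooling of each stage."""
    shapes = []
    for num_convs, out_channels in STAGES:
        for _ in range(num_convs):
            # 3*3 convolution, padding 1, stride 1: size is unchanged
            size = out_size(size, kernel_size=3, padding=1, stride=1)
        # 2*2 max pooling, stride 2: size is halved
        size = out_size(size, kernel_size=2, padding=0, stride=2)
        shapes.append((size, out_channels))
    return shapes


for stage, (size, channels) in enumerate(trace_shapes(), start=1):
    print(f"stage {stage}: {size}*{size}*{channels}")
```

The last line printed is `stage 5: 7*7*512`, which is the 7*7*512 = 25088 values that the first fully connected layer receives after flattening.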

6.input_6 7*7*512 -> 1*1*4096

Flatten: nn.Flatten()

nn.Linear(7*7*512,4096)

self.relu6 = nn.ReLU(inplace=False)

nn.Dropout(0.5)

7.input_7 4096 -> 4096

nn.Linear(4096,4096)

self.relu7 = nn.ReLU(inplace=False)

nn.Dropout(0.5)

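8.input_8 1*1*4096 -> 1*1*1000

nn.Linear(4096,1000)

self.relu8 = nn.ReLU(inplace=False) [note: the original VGG16 passes the output of this layer to softmax rather than ReLU; the ReLU is kept here to match the code that follows]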
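As a sanity check on the eight stages above, the number of learnable parameters can be computed by hand from the layer sizes. This is a plain-Python sketch; the constant and function names are invented for the example, and every layer is assumed to have a bias term (the default for `nn.Conv2d` and `nn.Linear`).

```python
# (in_channels, out_channels) of the 13 convolutions, all with 3*3 kernels
CONV_CHANNELS = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]
# (in_features, out_features) of the 3 fully connected layers
LINEAR_SIZES = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]


def conv_params(in_channels, out_channels, kernel_size=3):
    """Weights plus one bias per output channel."""
    return (kernel_size * kernel_size * in_channels + 1) * out_channels


def linear_params(in_features, out_features):
    """Weights plus one bias per output feature."""
    return (in_features + 1) * out_features


conv_total = sum(conv_params(i, o) for i, o in CONV_CHANNELS)
linear_total = sum(linear_params(i, o) for i, o in LINEAR_SIZES)

print(conv_total)                 # 14714688
print(linear_total)               # 123642856
print(conv_total + linear_total)  # 138357544
```

Most of the roughly 138 million parameters sit in the first fully connected layer, which is why the flattened size 7*7*512 matters so much.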

- Code, version 1

```python
import torch
from torch import nn


class vgg_16(nn.Module):
    def __init__(self):
        super(vgg_16, self).__init__()
        # input_1
        # input_1_1 224*224*3 -> 224*224*64
        self.conv1_1 = nn.Conv2d(in_channels=3, out_channels=64, padding=1, kernel_size=3, stride=1)
        self.relu1_1 = nn.ReLU(inplace=False)
        # input_1_2 224*224*64 -> 224*224*64
        self.conv1_2 = nn.Conv2d(in_channels=64, out_channels=64, padding=1, kernel_size=3, stride=1)
        self.relu1_2 = nn.ReLU(inplace=False)
        # input_1_3 224*224*64 -> 112*112*64
        self.maxpool1_3 = nn.MaxPool2d(padding=0, stride=2, kernel_size=2)

        # input_2
        # input_2_1 112*112*64 -> 112*112*128
        self.conv2_1 = nn.Conv2d(in_channels=64, out_channels=128, padding=1, kernel_size=3, stride=1)
        self.relu2_1 = nn.ReLU(inplace=False)
        # input_2_2 112*112*128 -> 112*112*128
        self.conv2_2 = nn.Conv2d(in_channels=128, out_channels=128, padding=1, kernel_size=3, stride=1)
        self.relu2_2 = nn.ReLU(inplace=False)
        # input_2_3 112*112*128 -> 56*56*128
        self.maxpool2_3 = nn.MaxPool2d(padding=0, stride=2, kernel_size=2)

        # input_3
        # input_3_1 56*56*128 -> 56*56*256
        self.conv3_1 = nn.Conv2d(in_channels=128, out_channels=256, padding=1, kernel_size=3, stride=1)
        self.relu3_1 = nn.ReLU(inplace=False)
        # input_3_2 56*56*256 -> 56*56*256
        self.conv3_2 = nn.Conv2d(in_channels=256, out_channels=256, padding=1, kernel_size=3, stride=1)
        self.relu3_2 = nn.ReLU(inplace=False)
        # input_3_3 56*56*256 -> 56*56*256
        self.conv3_3 = nn.Conv2d(in_channels=256, out_channels=256, padding=1, kernel_size=3, stride=1)
        self.relu3_3 = nn.ReLU(inplace=False)
        # input_3_4 56*56*256 -> 28*28*256
        self.maxpool3_4 = nn.MaxPool2d(padding=0, stride=2, kernel_size=2)

        # input_4
        # input_4_1 28*28*256 -> 28*28*512
        self.conv4_1 = nn.Conv2d(in_channels=256, out_channels=512, padding=1, kernel_size=3, stride=1)
        self.relu4_1 = nn.ReLU(inplace=False)
        # input_4_2 28*28*512 -> 28*28*512
        self.conv4_2 = nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1)
        self.relu4_2 = nn.ReLU(inplace=False)
        # input_4_3 28*28*512 -> 28*28*512
        self.conv4_3 = nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1)
        self.relu4_3 = nn.ReLU(inplace=False)
        # input_4_4 28*28*512 -> 14*14*512
        self.maxpool4_4 = nn.MaxPool2d(padding=0, stride=2, kernel_size=2)

        # input_5
        # input_5_1 14*14*512 -> 14*14*512
        self.conv5_1 = nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1)
        self.relu5_1 = nn.ReLU(inplace=False)
        # input_5_2 14*14*512 -> 14*14*512
        self.conv5_2 = nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1)
        self.relu5_2 = nn.ReLU(inplace=False)
        # input_5_3 14*14*512 -> 14*14*512
        self.conv5_3 = nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1)
        self.relu5_3 = nn.ReLU(inplace=False)
        # input_5_4 14*14*512 -> 7*7*512
        self.maxpool5_4 = nn.MaxPool2d(padding=0, kernel_size=2, stride=2)

        # input_6: flatten 7*7*512 -> 25088, then 25088 -> 4096
        self.flatten = nn.Flatten()
        self.linear6 = nn.Linear(7 * 7 * 512, 4096)
        self.relu6 = nn.ReLU(inplace=False)
        self.dropout6 = nn.Dropout(0.5)

        # input_7: 4096 -> 4096
        self.linear7 = nn.Linear(4096, 4096)
        self.relu7 = nn.ReLU(inplace=False)
        self.dropout7 = nn.Dropout(0.5)

        # input_8: 4096 -> 1000
        self.linear8 = nn.Linear(4096, 1000)
        # Kept from the analysis above; the original VGG16 uses softmax here, not ReLU
        self.relu8 = nn.ReLU(inplace=False)

    def forward(self, x):
        # stage 1
        x = self.conv1_1(x)
        x = self.relu1_1(x)
        x = self.conv1_2(x)
        x = self.relu1_2(x)
        x = self.maxpool1_3(x)

        # stage 2
        x = self.conv2_1(x)
        x = self.relu2_1(x)
        x = self.conv2_2(x)
        x = self.relu2_2(x)
        x = self.maxpool2_3(x)

        # stage 3
        x = self.conv3_1(x)
        x = self.relu3_1(x)
        x = self.conv3_2(x)
        x = self.relu3_2(x)
        x = self.conv3_3(x)
        x = self.relu3_3(x)
        x = self.maxpool3_4(x)

        # stage 4
        x = self.conv4_1(x)
        x = self.relu4_1(x)
        x = self.conv4_2(x)
        x = self.relu4_2(x)
        x = self.conv4_3(x)
        x = self.relu4_3(x)
        x = self.maxpool4_4(x)

        # stage 5
        x = self.conv5_1(x)
        x = self.relu5_1(x)
        x = self.conv5_2(x)
        x = self.relu5_2(x)
        x = self.conv5_3(x)
        x = self.relu5_3(x)
        x = self.maxpool5_4(x)

        # stage 6
        x = self.flatten(x)
        x = self.linear6(x)
        x = self.relu6(x)
        x = self.dropout6(x)

        # stage 7
        x = self.linear7(x)
        x = self.relu7(x)
        x = self.dropout7(x)

        # stage 8
        x = self.linear8(x)
        x = self.relu8(x)
        return x
```

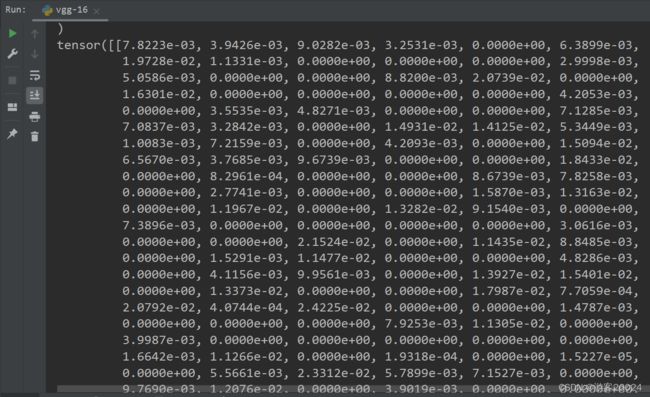

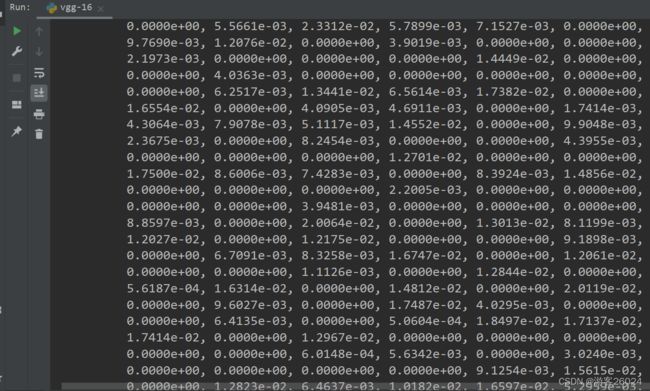

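```python
if __name__ == "__main__":
    # Use a separate name for the instance so the class vgg_16 is not shadowed
    model = vgg_16()
    print(model)

    # Dummy batch: 1 image, 3 channels, 224*224 pixels
    x = torch.ones(1, 3, 224, 224)
    output = model(x)
    print(output)
```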

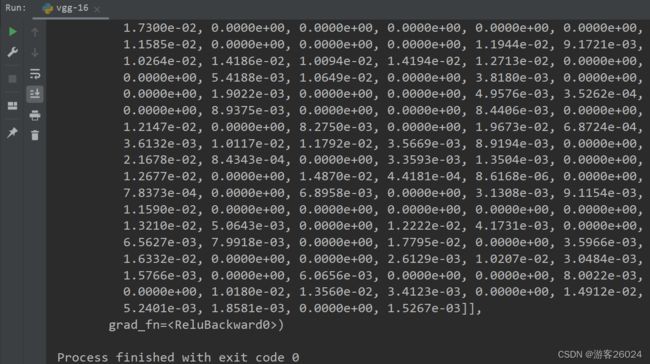

- Code, version 2

```python
import torch
from torch import nn


class vgg_16(nn.Module):
    def __init__(self):
        super(vgg_16, self).__init__()
        self.module = nn.Sequential(
            # input_1
            # input_1_1 224*224*3 -> 224*224*64
            nn.Conv2d(in_channels=3, out_channels=64, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_1_2 224*224*64 -> 224*224*64
            nn.Conv2d(in_channels=64, out_channels=64, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_1_3 224*224*64 -> 112*112*64
            nn.MaxPool2d(padding=0, stride=2, kernel_size=2),

            # input_2
            # input_2_1 112*112*64 -> 112*112*128
            nn.Conv2d(in_channels=64, out_channels=128, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_2_2 112*112*128 -> 112*112*128
            nn.Conv2d(in_channels=128, out_channels=128, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_2_3 112*112*128 -> 56*56*128
            nn.MaxPool2d(padding=0, stride=2, kernel_size=2),

            # input_3
            # input_3_1 56*56*128 -> 56*56*256
            nn.Conv2d(in_channels=128, out_channels=256, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_3_2 56*56*256 -> 56*56*256
            nn.Conv2d(in_channels=256, out_channels=256, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_3_3 56*56*256 -> 56*56*256
            nn.Conv2d(in_channels=256, out_channels=256, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_3_4 56*56*256 -> 28*28*256
            nn.MaxPool2d(padding=0, stride=2, kernel_size=2),

            # input_4
            # input_4_1 28*28*256 -> 28*28*512
            nn.Conv2d(in_channels=256, out_channels=512, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_4_2 28*28*512 -> 28*28*512
            nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_4_3 28*28*512 -> 28*28*512
            nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_4_4 28*28*512 -> 14*14*512
            nn.MaxPool2d(padding=0, stride=2, kernel_size=2),

            # input_5
            # input_5_1 14*14*512 -> 14*14*512
            nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_5_2 14*14*512 -> 14*14*512
            nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_5_3 14*14*512 -> 14*14*512
            nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_5_4 14*14*512 -> 7*7*512
            nn.MaxPool2d(padding=0, kernel_size=2, stride=2),

            # input_6: flatten, then 7*7*512 -> 4096
            nn.Flatten(),
            nn.Linear(7 * 7 * 512, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5, inplace=True),

            # input_7: 4096 -> 4096
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5, inplace=True),

            # input_8: 4096 -> 1000
            nn.Linear(4096, 1000),
            nn.ReLU(inplace=True),
        )

    def forward(self, x):
        x = self.module(x)
        return x


if __name__ == "__main__":
    # Use a separate name for the instance so the class vgg_16 is not shadowed
    model = vgg_16()
    print(model)

    # Dummy batch: 1 image, 3 channels, 224*224 pixels
    x = torch.ones(1, 3, 224, 224)
    output = model(x)
    print(output)
```
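The Conv2d / ReLU / MaxPool2d lines inside the Sequential follow a regular pattern, so the convolutional part can also be generated from a short configuration list. The sketch below illustrates that idea (torchvision builds its VGG models in a similar spirit); it is not the code used in this article, and `CFG` and `make_features` are hypothetical names.

```python
import torch
from torch import nn

# Output channels of each 3*3 convolution; "M" marks a 2*2 max-pooling layer.
CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
       512, 512, 512, "M", 512, 512, 512, "M"]


def make_features(cfg, in_channels=3):
    """Build stages 1 to 5 as one nn.Sequential from the configuration list."""
    layers = []
    for v in cfg:
        if v == "M":
            layers.append(nn.MaxPool2d(kernel_size=2, stride=2, padding=0))
        else:
            layers.append(nn.Conv2d(in_channels, v, kernel_size=3, stride=1, padding=1))
            layers.append(nn.ReLU(inplace=True))
            in_channels = v
    return nn.Sequential(*layers)


features = make_features(CFG)
with torch.no_grad():
    out = features(torch.ones(1, 3, 224, 224))
print(out.shape)  # torch.Size([1, 512, 7, 7])
```

The explicit listing is easier to read layer by layer, while the configuration list makes it harder to mistype a channel count.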

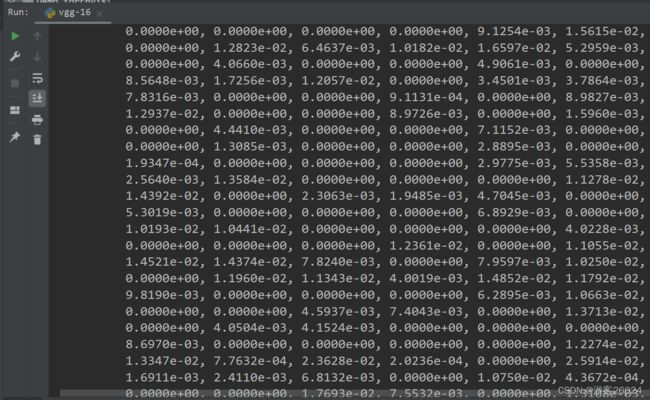

Result:

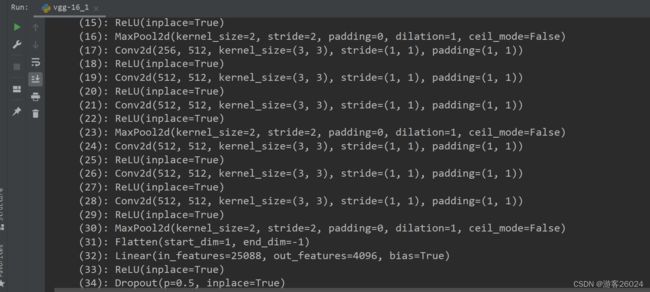

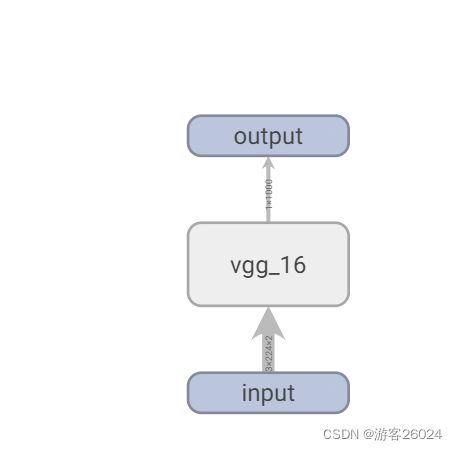

- Visualization code

```python
import torch
from torch import nn
from torch.utils.tensorboard import SummaryWriter


class vgg_16(nn.Module):
    def __init__(self):
        super(vgg_16, self).__init__()
        self.module = nn.Sequential(
            # input_1
            # input_1_1 224*224*3 -> 224*224*64
            nn.Conv2d(in_channels=3, out_channels=64, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_1_2 224*224*64 -> 224*224*64
            nn.Conv2d(in_channels=64, out_channels=64, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_1_3 224*224*64 -> 112*112*64
            nn.MaxPool2d(padding=0, stride=2, kernel_size=2),

            # input_2
            # input_2_1 112*112*64 -> 112*112*128
            nn.Conv2d(in_channels=64, out_channels=128, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_2_2 112*112*128 -> 112*112*128
            nn.Conv2d(in_channels=128, out_channels=128, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_2_3 112*112*128 -> 56*56*128
            nn.MaxPool2d(padding=0, stride=2, kernel_size=2),

            # input_3
            # input_3_1 56*56*128 -> 56*56*256
            nn.Conv2d(in_channels=128, out_channels=256, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_3_2 56*56*256 -> 56*56*256
            nn.Conv2d(in_channels=256, out_channels=256, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_3_3 56*56*256 -> 56*56*256
            nn.Conv2d(in_channels=256, out_channels=256, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_3_4 56*56*256 -> 28*28*256
            nn.MaxPool2d(padding=0, stride=2, kernel_size=2),

            # input_4
            # input_4_1 28*28*256 -> 28*28*512
            nn.Conv2d(in_channels=256, out_channels=512, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_4_2 28*28*512 -> 28*28*512
            nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_4_3 28*28*512 -> 28*28*512
            nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_4_4 28*28*512 -> 14*14*512
            nn.MaxPool2d(padding=0, stride=2, kernel_size=2),

            # input_5
            # input_5_1 14*14*512 -> 14*14*512
            nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_5_2 14*14*512 -> 14*14*512
            nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_5_3 14*14*512 -> 14*14*512
            nn.Conv2d(in_channels=512, out_channels=512, padding=1, kernel_size=3, stride=1),
            nn.ReLU(inplace=True),
            # input_5_4 14*14*512 -> 7*7*512
            nn.MaxPool2d(padding=0, kernel_size=2, stride=2),

            # input_6: flatten, then 7*7*512 -> 4096
            nn.Flatten(),
            nn.Linear(7 * 7 * 512, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5, inplace=True),

            # input_7: 4096 -> 4096
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5, inplace=True),

            # input_8: 4096 -> 1000
            nn.Linear(4096, 1000),
            nn.ReLU(inplace=True),
        )

    def forward(self, x):
        x = self.module(x)
        return x


if __name__ == "__main__":
    # Use a separate name for the instance so the class vgg_16 is not shadowed
    model = vgg_16()
    print(model)

    # Dummy batch: 1 image, 3 channels, 224*224 pixels
    x = torch.ones(1, 3, 224, 224)
    output = model(x)
    print(output)

    # Write the network graph to the "logs" directory for TensorBoard
    writer = SummaryWriter("logs")
    writer.add_graph(model, x)
    writer.close()
```
