Deploying Ceph Luminous with ceph-deploy (CentOS 7.9)

1. Deployment environment (CentOS 7.9, minimal installation)

| No. | IP addresses (public / cluster) | Hostname | Disks | Roles |
| --- | --- | --- | --- | --- |
| 1 | 192.168.3.141 / 192.168.8.141 | deploy | /dev/sda: operating system | Deployment node |
| 2 | 192.168.3.142 / 192.168.8.142 | node1 | /dev/sda: operating system; three extra disks /dev/sdb, /dev/sdc, /dev/sdd | mon, mgr, mds, osd, rgw |
| 3 | 192.168.3.143 / 192.168.8.143 | node2 | /dev/sda: operating system; three extra disks /dev/sdb, /dev/sdc, /dev/sdd | mon, mgr, mds, osd, rgw |
| 4 | 192.168.3.144 / 192.168.8.144 | node3 | /dev/sda: operating system; three extra disks /dev/sdb, /dev/sdc, /dev/sdd | mon, mgr, osd |
| 5 | 192.168.3.145 / 192.168.8.145 | node4 | /dev/sda: operating system; three extra disks /dev/sdb, /dev/sdc, /dev/sdd | Reserved for later expansion |

2. Preparation (on all nodes)

1) Disable SELinux and the firewall

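sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

setenforce 0

The sed change only takes effect after a reboot; setenforce 0 switches the running system to permissive mode right away.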
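The sed above only matches the literal value `enforcing`, so a node that was set to `permissive` is left unchanged. A slightly more robust sketch rewrites whatever value is present. The function name is hypothetical, and the file argument defaults to /etc/selinux/config.

```shell
# Force SELINUX=disabled in an SELinux config file, whatever the current
# value is (enforcing or permissive). Defaults to /etc/selinux/config.
# The SELINUXTYPE= line is untouched because the pattern requires "SELINUX=".
disable_selinux_cfg() {
    cfg="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Run it as root with no argument on each node. Passing a different file is useful for trying it out first.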

systemctl stop firewalld

systemctl disable firewalld

2) Configure /etc/hosts (add the following entries)

192.168.3.141 deploy

192.168.3.142 node1

192.168.3.143 node2

192.168.3.144 node3

192.168.3.145 node4

192.168.8.141 deploy

192.168.8.142 node1

192.168.8.143 node2

192.168.8.144 node3

192.168.8.145 node4
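The ten entries follow a fixed pattern: addresses .141 to .145 on both the 192.168.3.x and 192.168.8.x networks. Because of this, they can be generated and appended without creating duplicates on a re-run. A minimal sketch follows; `gen_hosts` and `add_hosts` are hypothetical names.

```shell
# Print the /etc/hosts entries for the layout in the table above:
# deploy, node1..node4 map to .141...145 on each of the two networks.
gen_hosts() {
    for prefix in 192.168.3 192.168.8; do
        i=141
        for name in deploy node1 node2 node3 node4; do
            echo "$prefix.$i $name"
            i=$((i + 1))
        done
    done
}

# Append only the lines that are not already present (safe to re-run).
add_hosts() {
    file="${1:-/etc/hosts}"
    gen_hosts | while read -r line; do
        grep -qxF "$line" "$file" || echo "$line" >> "$file"
    done
}
```

Run `add_hosts` as root to update /etc/hosts. Passing another file path writes to that file instead.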

3) Update the yum repositories

rm -rf /etc/yum.repos.d/*

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

yum install -y wget bash-completion

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

Ceph repository (save as a .repo file under /etc/yum.repos.d/, for example ceph.repo):

[ceph-luminous]

name=ceph-luminous

baseurl=https://mirrors.aliyun.com/ceph/rpm-luminous/el7/x86_64/

enabled=1

gpgcheck=0

priority=1

[ceph-noarch]

name=ceph-noarch

baseurl=https://mirrors.aliyun.com/ceph/rpm-luminous/el7/noarch/

enabled=1

gpgcheck=0

priority=1

[ceph-source]

name=ceph-source

baseurl=https://mirrors.aliyun.com/ceph/rpm-luminous/el7/SRPMS/

enabled=1

gpgcheck=0

priority=1
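The three sections differ only in their name and mirror subdirectory, so the repo file can also be written by a script. Below is a sketch that assumes the file name ceph.repo; any name ending in .repo works, and `write_ceph_repo` is a hypothetical name. Note that the key is spelled `gpgcheck`. The `priority=` setting only has an effect when the yum-plugin-priorities package is installed.

```shell
# Write the three Luminous repo sections (binary, noarch, source) into
# DIR/ceph.repo. DIR defaults to /etc/yum.repos.d.
write_ceph_repo() {
    dir="${1:-/etc/yum.repos.d}"
    base=https://mirrors.aliyun.com/ceph/rpm-luminous/el7
    {
        # Each pair is "section-name:mirror-subdirectory".
        for pair in ceph-luminous:x86_64 ceph-noarch:noarch ceph-source:SRPMS; do
            name=${pair%%:*}
            sub=${pair#*:}
            printf '[%s]\nname=%s\nbaseurl=%s/%s/\nenabled=1\ngpgcheck=0\npriority=1\n\n' \
                "$name" "$name" "$base" "$sub"
        done
    } > "$dir/ceph.repo"
}
```

After running it as root, `yum clean all && yum makecache` refreshes the metadata.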

4) Add a user named admin (password: password) and configure sudo

useradd admin

echo password | passwd --stdin admin

Edit /etc/sudoers (preferably with visudo, which checks the syntax before saving) and add:

admin ALL=(ALL) NOPASSWD: ALL
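Instead of editing /etc/sudoers itself, the same line can go into a drop-in file under /etc/sudoers.d. The stock CentOS 7 /etc/sudoers includes that directory through its `#includedir` line. Below is a minimal sketch with a hypothetical function name; such files are conventionally mode 0440.

```shell
# Grant passwordless sudo to USER via DIR/USER (DIR defaults to /etc/sudoers.d).
grant_nopasswd_sudo() {
    user="$1"
    dir="${2:-/etc/sudoers.d}"
    echo "$user ALL=(ALL) NOPASSWD: ALL" > "$dir/$user"
    chmod 0440 "$dir/$user"
}
```

Usage as root is `grant_nopasswd_sudo admin`. Afterwards, `visudo -cf /etc/sudoers.d/admin` checks the file's syntax.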

5) Configure time synchronization

yum install -y chrony

vi /etc/chrony.conf

Delete the existing server lines and add the following:

server ntp1.aliyun.com iburst

server ntp2.aliyun.com iburst

server ntp1.tencent.com iburst

server ntp2.tencent.com iburst
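The edit above can also be scripted so that it runs identically on every node. The sketch below uses a hypothetical function name. It removes every line that starts with `server ` and appends the given hosts with `iburst`, and it leaves other directives such as driftfile alone.

```shell
# Replace all "server" lines in a chrony config with the given NTP servers.
set_chrony_servers() {
    cfg="$1"; shift
    sed -i '/^server /d' "$cfg"
    for s in "$@"; do
        echo "server $s iburst" >> "$cfg"
    done
}
```

Usage: `set_chrony_servers /etc/chrony.conf ntp1.aliyun.com ntp2.aliyun.com ntp1.tencent.com ntp2.tencent.com`.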

systemctl enable --now chronyd

chronyc sources

6) Set up passwordless SSH login

Log in to the deploy node as the admin user and run:

ssh-keygen

ssh-copy-id node1

ssh-copy-id node2

ssh-copy-id node3

ssh-copy-id node4

3. Install Ceph (log in to the deploy node as the admin user)
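ceph-deploy needs both passwordless SSH and passwordless sudo on every target node. A quick check before installing is therefore worthwhile. In the sketch below, `ssh -o BatchMode=yes` fails instead of prompting for a password, and `sudo -n` does the same for sudo. The `run` helper and its `DRY_RUN` switch are hypothetical conveniences that print commands instead of executing them, and `check_nodes` is also a hypothetical name.

```shell
# Print the command instead of running it when DRY_RUN is set (hypothetical helper).
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Report each node; return non-zero if any node still needs a password.
check_nodes() {
    rc=0
    for h in "$@"; do
        if run ssh -o BatchMode=yes "admin@$h" sudo -n true; then
            echo "$h: ok"
        else
            echo "$h: FAILED" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: `check_nodes node1 node2 node3 node4`. Use `DRY_RUN=1 check_nodes node1` to see the exact command first.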

1) Install ceph-deploy

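sudo yum install -y vim net-tools ceph-deploy python-setuptools --nogpgcheck

mkdir ~/ceph && cd ~/ceph

Create the working directory as admin (without sudo) so that ceph-deploy can write its files into it.

ceph-deploy install node1 node2 node3 node4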

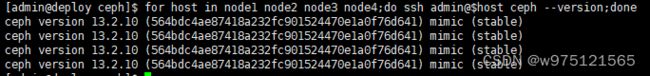
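The loop that follows prints one `ceph --version` line per node. To make a mismatch obvious, its output can be piped into a small checker. This is a sketch with a hypothetical function name, and it assumes the usual `ceph version 12.2.x (<sha1>) luminous (stable)` format, in which the third field is the version number. If a node reports a different release, ceph-deploy may have written its own repo file. ceph-deploy's `install --no-adjust-repos` option tells it to keep the repositories configured in step 3; check `ceph-deploy install --help` for your version.

```shell
# Read "ceph --version" lines on stdin; succeed only if every node reports
# the same version and it is a luminous build.
check_same_version() {
    input=$(cat)
    n=$(printf '%s\n' "$input" | awk '{print $3}' | sort -u | wc -l)
    if [ "$n" -ne 1 ]; then
        echo "version mismatch:" >&2
        printf '%s\n' "$input" >&2
        return 1
    fi
    printf '%s\n' "$input" | grep -q luminous || { echo "not luminous" >&2; return 1; }
    echo "all nodes: $(printf '%s\n' "$input" | awk 'NR==1 {print $3}')"
}
```

Usage: `for host in node1 node2 node3 node4; do ssh admin@$host ceph --version; done | check_same_version`.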

for host in node1 node2 node3 node4; do ssh admin@$host ceph --version; done

2) Create the cluster

ceph-deploy new node1

When it finishes, three new files appear in the working directory:

[admin@deploy ceph]$ ls

ceph.conf ceph-deploy-ceph.log ceph.mon.keyring

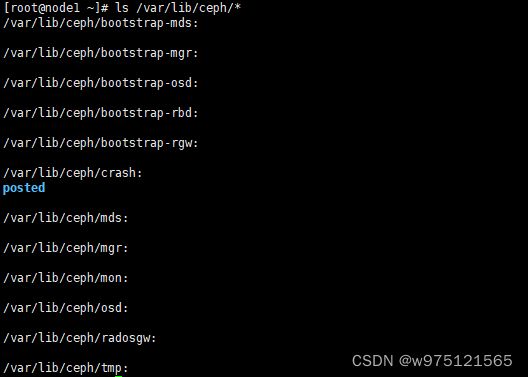
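In this walkthrough, public_network and cluster_network are only added to ceph.conf later, in step 8. They can instead be set in the freshly generated ceph.conf before `mon create-initial`, so that the first monitor and the OSDs start with both networks known. ceph-deploy new also accepts `--public-network` and `--cluster-network`, which should have the same effect. The sketch below (hypothetical function name) appends the two settings only if they are missing. It assumes that [global] is the only section, which is what ceph-deploy new writes.

```shell
# Append public/cluster network settings to ceph.conf unless already set.
add_networks() {
    conf="$1" public="$2" cluster="$3"
    grep -q '^public_network' "$conf" || echo "public_network = $public" >> "$conf"
    grep -q '^cluster_network' "$conf" || echo "cluster_network = $cluster" >> "$conf"
}
```

Usage from the working directory: `add_networks ceph.conf 192.168.3.0/24 192.168.8.0/24`.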

ssh root@node1

[root@node1 ~]# ls /var/lib/ceph/

bootstrap-mds bootstrap-mgr bootstrap-osd bootstrap-rbd bootstrap-rgw crash mds mgr mon osd radosgw tmp

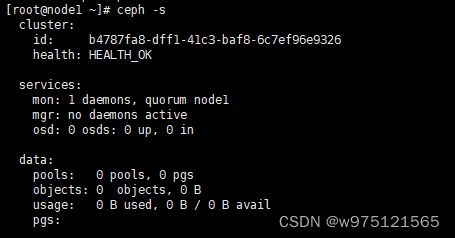

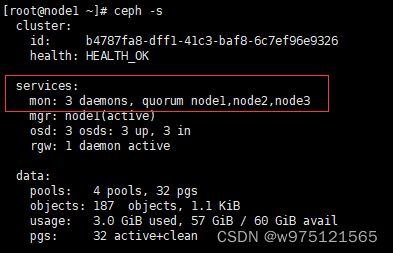

3) Create the monitor

ceph-deploy mon create-initial
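Besides creating the monitor, `mon create-initial` gathers the admin and bootstrap keyrings into the working directory, and `ceph-deploy admin` then pushes ceph.client.admin.keyring to the nodes. The small check below lists any keyring that is missing. The expected file names are an assumption based on ceph-deploy 2.x gatherkeys, and the function name is hypothetical.

```shell
# List any keyring that "mon create-initial" should have gathered into DIR.
check_keyrings() {
    dir="${1:-.}"
    missing=0
    for k in ceph.client.admin.keyring ceph.bootstrap-osd.keyring \
             ceph.bootstrap-mgr.keyring ceph.bootstrap-mds.keyring \
             ceph.bootstrap-rgw.keyring; do
        if [ ! -f "$dir/$k" ]; then
            echo "missing: $k" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Run `check_keyrings` from the working directory (~/ceph) before continuing.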

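ceph-deploy admin node1

ceph-deploy admin node2 node3

ceph-deploy admin copies ceph.conf and the client.admin keyring to the listed nodes, so that ceph commands can be run on them.

4) Create the mgr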

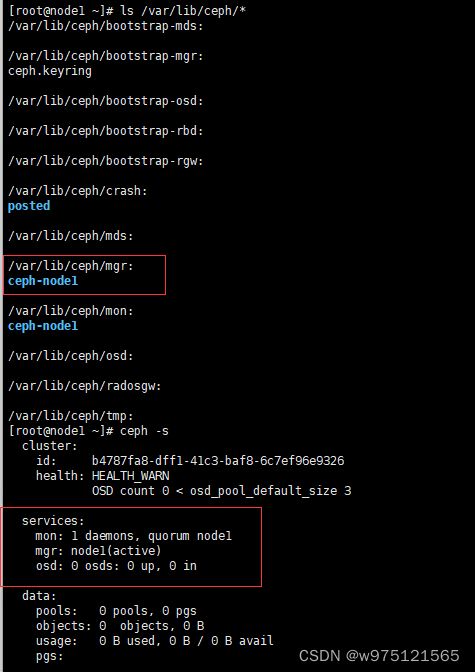

ceph-deploy mgr create node1

ssh root@node1 and check the cluster status (e.g. ceph -s)

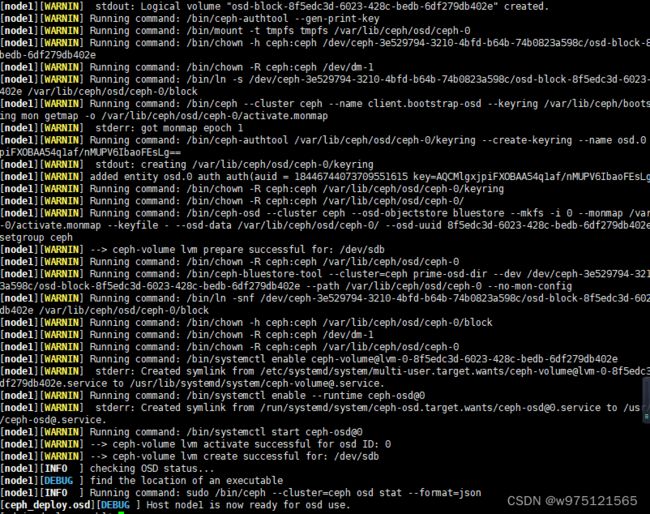

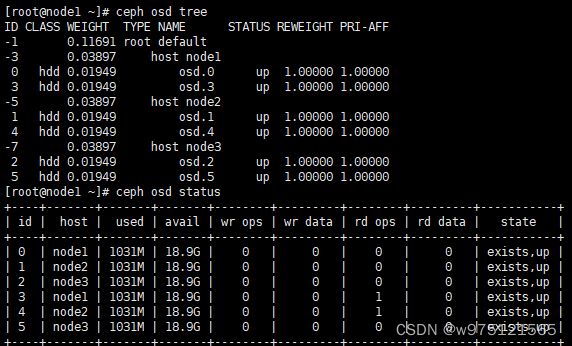

5) Create the OSDs

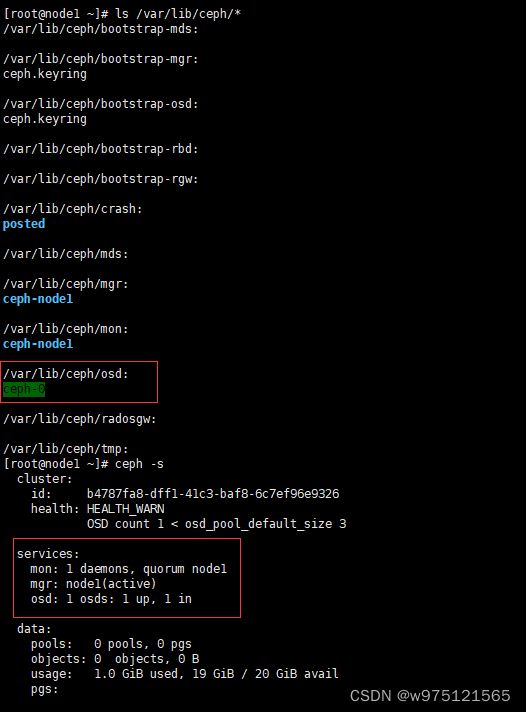

ceph-deploy osd create --data /dev/sdb node1

ssh root@node1 and check the cluster status (e.g. ceph -s)

At this point the cluster reports health: HEALTH_WARN (ceph health detail lists the reason).

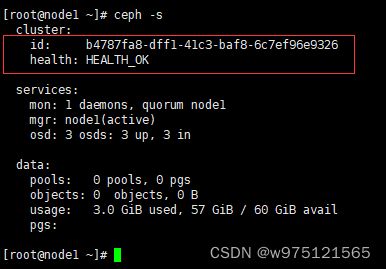

ceph-deploy osd create --data /dev/sdb node2

ceph-deploy osd create --data /dev/sdb node3

6) Create the MDS

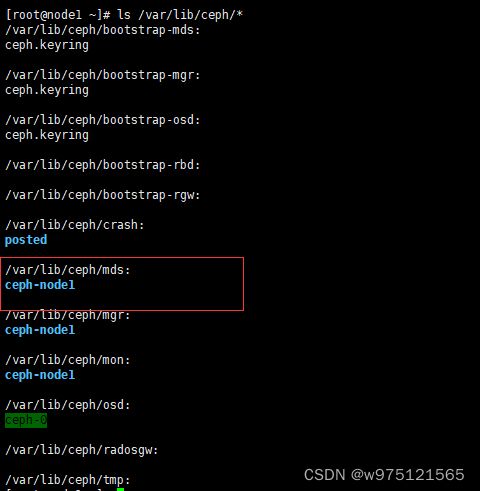

ceph-deploy mds create node1

ssh root@node1 and check the cluster status (e.g. ceph -s)

7) Create the RGW

ceph-deploy rgw create node1

8) Expand the monitors

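vim ceph.conf

Make the [global] section look like this. The fsid is specific to each cluster, so keep the value that ceph-deploy new generated for yours: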

[global]

fsid = b4787fa8-dff1-41c3-baf8-6c7ef96e9326

mon_initial_members = node1 node2 node3

mon_host = 192.168.3.142 192.168.3.143 192.168.3.144

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public_network = 192.168.3.0/24

cluster_network = 192.168.8.0/24

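ceph-deploy --overwrite-conf mon create

With no host arguments, mon create deploys monitors on the hosts listed in mon_initial_members.

ssh root@node1 and check the cluster status (e.g. ceph -s)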

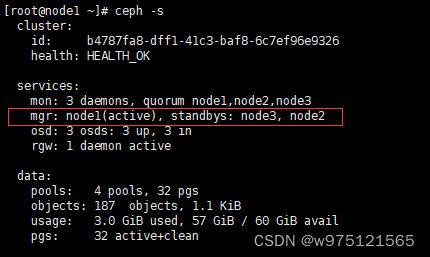
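cluster_network was added after the first three OSDs existed, and an OSD picks up its cluster address only when it starts. The updated ceph.conf therefore has to reach every node, and the existing OSDs have to be restarted. Below is a sketch with hypothetical `push_conf`, `run` and `DRY_RUN` names. It uses ceph-deploy's `config push` and the standard `ceph-osd.target` systemd unit. The restarts run one node after another but do not wait for the cluster to settle, so on a cluster holding data you would check `ceph -s` between nodes.

```shell
# Print the command instead of running it when DRY_RUN is set (hypothetical helper).
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Push ceph.conf from the working directory, then restart OSDs on each host
# so they bind to the cluster network.
push_conf() {
    run ceph-deploy --overwrite-conf config push "$@"
    for h in "$@"; do
        run ssh "admin@$h" sudo systemctl restart ceph-osd.target
    done
}
```

Usage from ~/ceph: `push_conf node1 node2 node3 node4`. Prefix it with `DRY_RUN=1` to preview the commands.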

9) Expand the mgrs

ceph-deploy mgr create node2 node3

ssh root@node1 and check the cluster status (e.g. ceph -s)

10) Expand the MDS

ceph-deploy mds create node2

ssh root@node1 and check the cluster status (e.g. ceph -s)

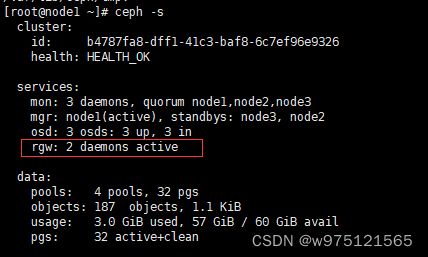

11) Expand the RGW

ceph-deploy rgw create node2

12) Expand the OSDs

ceph-deploy osd create --data /dev/sdc node3

ssh root@node1 and check the cluster status (e.g. ceph -s)
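The table lists three data disks per node, but so far only /dev/sdb on node1 to node3 and /dev/sdc on node3 are in use. The remaining disks can be added with a loop around the same `ceph-deploy osd create --data DEVICE HOST` call used above. Below is a sketch in which `create_osds`, `run` and `DRY_RUN` are hypothetical names. Disks that still carry old partitions or LVM metadata may first need `ceph-deploy disk zap HOST DEVICE` (ceph-deploy 2.x syntax).

```shell
# Print the command instead of running it when DRY_RUN is set (hypothetical helper).
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Create one OSD per disk on every host. Both arguments are space-separated
# lists and are intentionally left unquoted in the loops to split them.
create_osds() {
    hosts="$1" disks="$2"
    for h in $hosts; do
        for d in $disks; do
            run ceph-deploy osd create --data "$d" "$h" || return 1
        done
    done
}
```

With the disks used so far, the remaining ones would be `create_osds "node1 node2" "/dev/sdc /dev/sdd"` followed by `create_osds node3 /dev/sdd`. node4, reserved for expansion, would take `create_osds node4 "/dev/sdb /dev/sdc /dev/sdd"` once Ceph is installed on it.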