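Hadoop Fully Distributed Setup (Separating the NameNode and SecondaryNameNode)

This article documents the setup of a fully distributed Hadoop cluster. The cluster uses five hosts, and the NameNode and SecondaryNameNode are deployed on different machines.

I. Address and Role Planning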

| Hostname | IP address | Service role | Host role |
|---|---|---|---|
| node01 | 192.168.74.201 | NameNode | master |
| node02 | 192.168.74.202 | SecondaryNameNode | N/A |
| node03 | 192.168.74.203 | DataNode | worker |
| node04 | 192.168.74.204 | DataNode | worker |
| node05 | 192.168.74.205 | DataNode | worker |

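The network details for these addresses are as follows:

The addresses are in 192.168.74.0/24 (a single class C network), with gateway 192.168.74.2 and DNS server 192.168.74.2. The hosts are interconnected through the virtual machines' NAT-mode network adapter. Each host connects to the VMnet8 virtual adapter on the physical machine, whose address is 192.168.74.1.

Note: VMnet1 is the virtual adapter on the physical machine that corresponds to Host Only mode.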

II. Basic Environment Setup

1. Configure passwordless SSH login

See here for reference.
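As a rough sketch of what passwordless login involves, the script below prints the commands that would generate a key pair and push the public key to every node. The bee account and the node01 to node05 names come from this article; the `DRY_RUN` switch and the `run` and `push_keys` helpers are illustrative assumptions, not part of the original setup.

```shell
#!/bin/bash
# Sketch only: with DRY_RUN=1 (the default) the commands are printed, not executed.
DRY_RUN=${DRY_RUN:-1}
SSH_USER=${SSH_USER:-bee}      # hadoop user assumed in this article
HOSTS_NO=${HOSTS_NO:-5}        # node01 .. node05

# run: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# push_keys: create a key pair if none exists, then copy the public key to each node.
push_keys() {
    if [ ! -f "$HOME/.ssh/id_rsa" ]; then
        run ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"
    fi
    for i in $(seq "$HOSTS_NO"); do
        node=$(printf "node%02d" "$i")
        run ssh-copy-id "$SSH_USER@$node"
    done
}

push_keys
```

Running it with `DRY_RUN=0` as the bee user would execute the commands for real; `ssh-copy-id` then asks for each node's password once.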

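2. Create the hadoop user

Assume a user named bee is created to serve as the hadoop user.

3. Configure /etc/hosts

# Optionally synchronize the clock first

ntpdate ntp.api.bz

# Then configure as follows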

[root@node01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.74.201 node01

192.168.74.202 node02

192.168.74.203 node03

192.168.74.204 node04

192.168.74.205 node05

Configure /etc/hosts as shown above on node01 through node05.
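Because the same five entries are needed on every node, they can also be generated instead of typed. The following is a minimal sketch; `print_hosts_entries` is a hypothetical helper, and it assumes the addresses are consecutive as in the table at the top of this article.

```shell
#!/bin/bash
# print_hosts_entries PREFIX FIRST COUNT
# Prints "IP hostname" lines for node01..nodeNN with consecutive last octets.
print_hosts_entries() {
    prefix=$1      # network prefix, e.g. 192.168.74
    first=$2       # last octet of node01, e.g. 201
    count=$3       # number of nodes
    for i in $(seq "$count"); do
        printf "%s.%d node%02d\n" "$prefix" $((first + i - 1)) "$i"
    done
}

print_hosts_entries 192.168.74 201 5
# To apply on a node (as root): print_hosts_entries 192.168.74 201 5 >> /etc/hosts
```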

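4. Install Java

Create three directories under the bee user's home directory:

- modules (holds the extracted files)

- software (holds the software packages)

- tools (holds tools)

# Extract the JDK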

cd ~/software

tar -zxvf jdk-8u191-linux-x64.tar.gz -C ../modules

cd ~/modules

mv jdk1.8.0_191/ jdk

# Configure environment variables

vim ~/.bashrc # append the following two lines to the end of this file

--------------------------------------

export JAVA_HOME=$HOME/modules/jdk

export PATH=$PATH:$JAVA_HOME/bin

--------------------------------------

source ~/.bash_profile

[bee@node01 ~]$ java -version

java version "1.8.0_191"

Java(TM) SE Runtime Environment (build 1.8.0_191-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.191-b12, mixed mode)

Do this on node01 through node05.

5. Install Hadoop

cd ~/software

tar xzvf hadoop-2.9.2.tar.gz -C ../modules/

cd ~/modules

mv hadoop-2.9.2/ hadoop

# Configure environment variables

vim ~/.bashrc # append the following two lines to the end of this file

-----------------------------------

export HADOOP_HOME=$HOME/modules/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

-----------------------------------

source ~/.bash_profile

Do this on node01 through node05.
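Since the same export lines have to be appended on five machines, and possibly more than once while experimenting, an idempotent append can help avoid duplicates. This is a small sketch; `append_once` is a hypothetical helper, and the demonstration writes to a scratch file rather than to the real ~/.bashrc.

```shell
#!/bin/bash
# append_once FILE LINE: append LINE to FILE unless an identical line is already there.
append_once() {
    file=$1
    line=$2
    touch "$file"
    if ! grep -qxF -- "$line" "$file"; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Demonstration on a scratch file; pass "$HOME/.bashrc" instead to edit the real one.
rc=$(mktemp)
append_once "$rc" 'export JAVA_HOME=$HOME/modules/jdk'
append_once "$rc" 'export PATH=$PATH:$JAVA_HOME/bin'
append_once "$rc" 'export HADOOP_HOME=$HOME/modules/hadoop'
append_once "$rc" 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin'
# Repeated on purpose: the line already exists, so nothing is written.
append_once "$rc" 'export JAVA_HOME=$HOME/modules/jdk'
cat "$rc"
rm -f "$rc"
```

The single quotes keep `$HOME` and `$PATH` unexpanded, so the file receives the same text that the article appends by hand.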

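6. Configure Hadoop

The configuration files are in ~/modules/hadoop/etc/hadoop.

You can also create a separate copy of the configuration directory; later commands in this article select it with --config.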

cp -r /home/bee/modules/hadoop/etc/hadoop /home/bee/modules/hadoop/etc/cluster

- Configure core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://node01</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/bee/tmp</value>
  </property>
</configuration>
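Here `fs.defaultFS` names the default file system (the NameNode on node01) and `hadoop.tmp.dir` is the base directory under which Hadoop keeps its local data. If the file is to be produced by a script rather than edited by hand, a here-document is one option. The sketch below assumes a hypothetical `write_core_site` helper and only prints the XML.

```shell
#!/bin/bash
# write_core_site NAMENODE_HOST TMP_DIR
# Prints a core-site.xml containing the two properties used in this article.
write_core_site() {
    cat <<EOF
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://$1</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>$2</value>
  </property>
</configuration>
EOF
}

write_core_site node01 /home/bee/tmp
# e.g. write_core_site node01 /home/bee/tmp > ~/modules/hadoop/etc/cluster/core-site.xml
```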

- Configure hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
</configuration>

- Configure yarn-site.xml

<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>node01</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>

- Configure mapred-site.xml

cp mapred-site.xml.template mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

- Configure the slaves file

One host per line; every entry is the hostname of a DataNode.

node03

node04

node05
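Every name in the slaves file has to resolve on the node that starts the cluster, so a quick cross-check against /etc/hosts can catch typos early. The sketch below uses a hypothetical `unresolved_slaves` helper and scratch files, so it runs without touching the real configuration.

```shell
#!/bin/bash
# unresolved_slaves SLAVES_FILE HOSTS_FILE
# Prints each slaves entry that has no matching hostname in HOSTS_FILE.
unresolved_slaves() {
    while IFS= read -r name; do
        [ -z "$name" ] && continue
        # A hosts line is "IP name [alias...]"; look for the name after the first column.
        if ! awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$2"; then
            echo "$name"
        fi
    done < "$1"
}

# Demonstration with scratch copies; on a node, pass the real slaves file and /etc/hosts.
slaves=$(mktemp)
hosts=$(mktemp)
printf 'node03\nnode04\nnode05\n' > "$slaves"
printf '192.168.74.203 node03\n192.168.74.204 node04\n' > "$hosts"
unresolved_slaves "$slaves" "$hosts"    # node05 is missing from the scratch hosts file
rm -f "$slaves" "$hosts"
```

An empty result means every DataNode listed in slaves has an entry in the hosts file.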

- Configure hadoop-env.sh (set the Java environment variable)

export JAVA_HOME=$HOME/modules/jdk/

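- Distribute the configuration files above from the NameNode host to the other hosts

The following shell scripts can be used for distribution (add the scripts' directory to the PATH environment variable in ~/.bashrc so that they can be called by name).

(1)xcp.sh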

#!/bin/bash
# Copy a file or directory to the same path on every other node.
if [ $# -lt 1 ]; then
    echo "Usage: $0 <file-or-dir>"
    exit 1
fi

myfile=$1
user=$(whoami)
dir=$(dirname "$myfile")
if [ "$dir" = "." ]; then
    dir=$(pwd)
fi

host=$(hostname)
hosts_no=5   # number of nodes
width=2      # digits in the node suffix: node01, node02, ...

for i in $(seq "$hosts_no"); do
    str=$(printf "node%0${width}d" "$i")
    # Skip the local host
    if [ "$str" = "$host" ]; then
        continue
    fi
    echo "---------- scp $myfile to $str ----------"
    if [ -d "$myfile" ]; then
        scp -r "$myfile" "$user@$str:$dir"
    else
        scp "$myfile" "$user@$str:$dir"
    fi
    echo
done

(2)xrm.sh

#!/bin/bash
# Remove a file or directory locally and at the same path on every other node.
if [ $# -lt 1 ]; then
    echo "Usage: $0 <file-or-dir>"
    exit 1
fi

myfile=$1
filename=$(basename "$myfile")
dir=$(dirname "$myfile")
if [ "$dir" = "." ]; then
    dir=$(pwd)
fi

echo "---------- remove $myfile on localhost ----------"
rm -rf "$myfile"
echo

host=$(hostname)
hosts_no=5   # number of nodes
width=2      # digits in the node suffix

for i in $(seq "$hosts_no"); do
    str=$(printf "node%0${width}d" "$i")
    # Skip the local host
    if [ "$str" = "$host" ]; then
        continue
    fi
    echo "------ remove $myfile on $str ------"
    ssh "$str" rm -rf "$dir/$filename"
    echo
done

(3)xls.sh

#!/bin/bash
# List a file or directory locally and at the same path on every other node.
if [ $# -lt 1 ]; then
    echo "Usage: $0 <file-or-dir>"
    exit 1
fi

myfile=$1
filename=$(basename "$myfile")
dir=$(dirname "$myfile")
if [ "$dir" = "." ]; then
    dir=$(pwd)
fi

echo "---------- list $myfile on localhost ----------"
ls "$dir/$filename"
echo

host=$(hostname)
hosts_no=5   # number of nodes
width=2      # digits in the node suffix

for i in $(seq "$hosts_no"); do
    str=$(printf "node%0${width}d" "$i")
    # Skip the local host
    if [ "$str" = "$host" ]; then
        continue
    fi
    echo "---------- list $myfile on $str ----------"
    ssh "$str" ls "$dir/$filename" | xargs
    echo
done

(4)xcall.sh

#!/bin/bash
# Run a command locally and then on every other node over ssh.
if [ $# -lt 1 ]; then
    echo "Usage: $0 <command> [args...]"
    exit 1
fi

echo "---------- run \"$*\" on localhost ----------"
"$@"
echo

host=$(hostname)
hosts_no=5   # number of nodes
width=2      # digits in the node suffix

for i in $(seq "$hosts_no"); do
    str=$(printf "node%0${width}d" "$i")
    # Skip the local host
    if [ "$str" = "$host" ]; then
        continue
    fi
    echo "---------- run \"$*\" on $str ----------"
    ssh "$str" "$@"
    echo
done
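All four scripts repeat the same loop that builds node01 to node05 and skips the local host. One possible refactoring, sketched here with a hypothetical `other_nodes` function, moves that loop into a single place so the node count only has to be changed once.

```shell
#!/bin/bash
# other_nodes SELF COUNT WIDTH
# Prints every nodeNN name except SELF, one per line.
other_nodes() {
    self=$1
    count=$2
    width=$3
    for i in $(seq "$count"); do
        name=$(printf "node%0${width}d" "$i")
        if [ "$name" != "$self" ]; then
            echo "$name"
        fi
    done
}

# Example: the hosts that a script running on node01 would loop over.
other_nodes node01 5 2
# In a real script: for h in $(other_nodes "$(hostname)" 5 2); do ssh "$h" jps; done
```

This relies on `hostname` returning the short name (node01, not a fully qualified name), which is also what the original scripts assume.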

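7. Format the HDFS file system

Run this on the NameNode only. Formatting initializes the NameNode's local metadata directory; it is not executed on the other nodes.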

hdfs --config ~/modules/hadoop/etc/cluster/ namenode -format

Points to note after formatting HDFS:

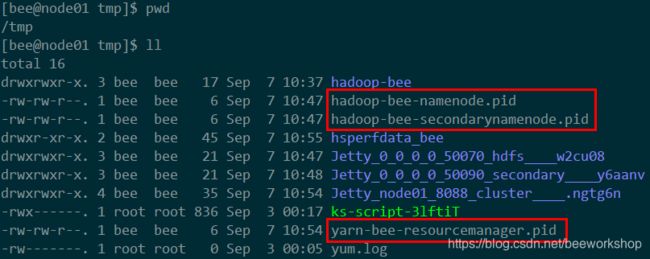
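Formatting an already formatted NameNode discards the existing metadata, so it can be worth checking first. The sketch below assumes the default layout, where the NameNode metadata lives under `${hadoop.tmp.dir}/dfs/name` (that is, `dfs.namenode.name.dir` is not overridden). `is_formatted` and `safe_format` are hypothetical helpers, and `safe_format` only prints the command instead of running it.

```shell
#!/bin/bash
# is_formatted TMP_DIR: succeed if a NameNode VERSION file exists under TMP_DIR.
# Assumes the default layout ${hadoop.tmp.dir}/dfs/name.
is_formatted() {
    [ -f "$1/dfs/name/current/VERSION" ]
}

# safe_format TMP_DIR: print the format command only when no metadata is found.
safe_format() {
    if is_formatted "$1"; then
        echo "already formatted: $1/dfs/name (skipping)"
    else
        echo "hdfs --config \$HOME/modules/hadoop/etc/cluster namenode -format"
    fi
}

# /home/bee/tmp is the hadoop.tmp.dir value from core-site.xml in this article.
safe_format /home/bee/tmp
```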

8. Start the Hadoop daemons

Run these on the NameNode only; the start scripts launch the daemons on the other nodes over ssh.

start-dfs.sh --config ~/modules/hadoop/etc/cluster/

start-yarn.sh --config ~/modules/hadoop/etc/cluster/

Points to note after starting the Hadoop processes:

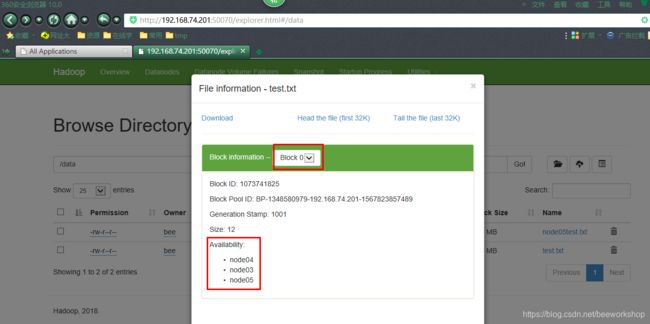
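After starting, `jps` on each node shows which daemons are actually running. As a rough aid, the sketch below compares `jps` output against a list of expected daemon names. `missing_daemons` is a hypothetical helper, and the sample output with its PIDs is made up so that the example runs without a cluster.

```shell
#!/bin/bash
# missing_daemons "EXPECTED..."
# Reads jps output on stdin and prints each expected daemon that is absent.
missing_daemons() {
    jps_out=$(cat)
    for d in $1; do
        # jps prints "<pid> <class name>"; compare the second column exactly.
        if ! printf '%s\n' "$jps_out" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$d"
        fi
    done
}

# Sample jps output as it might look on node01 (the PIDs are made up).
sample="2101 NameNode
2350 SecondaryNameNode
2603 Jps"
printf '%s\n' "$sample" | missing_daemons "NameNode ResourceManager"
# prints: ResourceManager
```

On a live node the call would be `jps | missing_daemons "NameNode ResourceManager"` on node01, or `jps | missing_daemons "DataNode NodeManager"` on node03 through node05.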

9. Put files into the HDFS file system

hdfs --config ~/modules/hadoop/etc/cluster dfs -mkdir /data

hdfs --config ~/modules/hadoop/etc/cluster dfs -put test.txt /data

hdfs --config ~/modules/hadoop/etc/cluster dfs -cat /data/test.txt

hdfs --config ~/modules/hadoop/etc/cluster dfs -ls -R /

Note: the commands above can be run on any node of the Hadoop cluster.

A screenshot of the results:

10. Run the Hadoop daemons with shell scripts

The following scripts only need to be run on the NameNode.

(1)xstarthadoop.sh

#!/bin/bash

echo "---------- formatting hdfs ----------"

hdfs --config $HOME/modules/hadoop/etc/cluster namenode -format

echo

echo "---------- starting hdfs ----------"

start-dfs.sh --config $HOME/modules/hadoop/etc/cluster

echo

echo "---------- starting yarn ----------"

start-yarn.sh --config $HOME/modules/hadoop/etc/cluster

echo

echo "------ finished ------"

Check that the processes have started:

xcall.sh jps

(2)xstophadoop.sh

#!/bin/bash

echo "---------- stop yarn ----------"

stop-yarn.sh --config $HOME/modules/hadoop/etc/cluster

echo

echo "---------- stop hdfs ----------"

stop-dfs.sh --config $HOME/modules/hadoop/etc/cluster

echo

echo "---------- remove tmp dir ----------"

echo

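xrm.sh /tmp/hadoop-bee   # default hadoop.tmp.dir location; if core-site.xml sets hadoop.tmp.dir to /home/bee/tmp as above, remove that directory instead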

echo

echo "---------- remove logs dir ----------"

echo

xrm.sh $HOME/modules/hadoop/logs

echo

echo "---------- finished ----------"

Check that the processes have stopped:

xcall.sh jps

11. Move the SecondaryNameNode to a separate host

With the configuration so far, the SecondaryNameNode has not yet been moved to node02.

11.1 Method 1: start the Hadoop processes on each host individually

- The following commands start the Hadoop processes one by one so that the SecondaryNameNode runs on node02 alone:

# Format HDFS on node01

hdfs --config ~/modules/hadoop/etc/cluster namenode -format

# On node01

hadoop-daemon.sh --config ~/modules/hadoop/etc/cluster start namenode

# On node02

hadoop-daemon.sh --config ~/modules/hadoop/etc/cluster start secondarynamenode

# On each of node03 through node05

hadoop-daemon.sh --config ~/modules/hadoop/etc/cluster start datanode

# Start YARN on node01 (starts all YARN daemons)

start-yarn.sh --config ~/modules/hadoop/etc/cluster

# Or

# Start only the ResourceManager on node01

yarn-daemon.sh --config ~/modules/hadoop/etc/cluster start resourcemanager

# Start a NodeManager on each of node03 through node05

yarn-daemon.sh --config ~/modules/hadoop/etc/cluster start nodemanager

To run the commands above remotely, prefix them as follows:

ssh node0[2-5] commands args ...
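Putting the role table and the ssh prefix together, the per-node start commands can be written out as a plan. The sketch below only prints the commands; `start_plan` and the `CONF` variable are illustrative names, and running the printed lines assumes the Hadoop sbin directory is on the PATH of non-interactive ssh sessions.

```shell
#!/bin/bash
# Print one ssh command per daemon, following the role table in this article.
CONF='~/modules/hadoop/etc/cluster'   # kept literal; ~ is expanded when the command is run

start_plan() {
    echo "ssh node01 hadoop-daemon.sh --config $CONF start namenode"
    echo "ssh node02 hadoop-daemon.sh --config $CONF start secondarynamenode"
    for n in node03 node04 node05; do
        echo "ssh $n hadoop-daemon.sh --config $CONF start datanode"
    done
    echo "ssh node01 yarn-daemon.sh --config $CONF start resourcemanager"
    for n in node03 node04 node05; do
        echo "ssh $n yarn-daemon.sh --config $CONF start nodemanager"
    done
}

start_plan
```

After reviewing the output, the plan could be executed with `start_plan | sh`.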

- The individually started Hadoop processes can be stopped with the following commands:

hadoop-daemon.sh --config ~/modules/hadoop/etc/cluster stop namenode

hadoop-daemon.sh --config ~/modules/hadoop/etc/cluster stop secondarynamenode

hadoop-daemon.sh --config ~/modules/hadoop/etc/cluster stop datanode

- The following commands show the configured NameNode and SecondaryNameNode hosts:

hdfs getconf -namenodes

hdfs getconf -secondarynamenodes

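11.2 Method 2: modify the hdfs-site.xml configuration file

- Modify hdfs-site.xml

Add the following property:

<configuration>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>node02:50090</value>
  </property>
</configuration>

Use xcp.sh to distribute the modified hdfs-site.xml from node01 to node02 through node05. The next run of start-dfs.sh then starts the SecondaryNameNode on node02.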
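To confirm that the new property is picked up, the host list reported by `hdfs getconf -secondarynamenodes` can be checked for node02. The sketch below uses a hypothetical `expect_host` helper and feeds it a stand-in string, so it runs without a cluster; the exact output format of the real command is an assumption here (a whitespace-separated list of hostnames).

```shell
#!/bin/bash
# expect_host HOST
# Reads a space- or newline-separated host list on stdin; succeeds only if HOST is in it.
expect_host() {
    for h in $(cat); do
        if [ "$h" = "$1" ]; then
            return 0
        fi
    done
    return 1
}

# Stand-in for the real command output, so the sketch runs without a cluster.
if echo "node02" | expect_host node02; then
    echo "SecondaryNameNode is configured on node02"
fi
# On the cluster:
#   hdfs --config ~/modules/hadoop/etc/cluster getconf -secondarynamenodes | expect_host node02
```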

12. Where the environment variables are set

- ~/.bashrc

# .bashrc

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

# Uncomment the following line if you don't like systemctl's auto-paging feature:

# export SYSTEMD_PAGER=

# User specific aliases and functions

export JAVA_HOME=$HOME/modules/jdk

export PATH=$PATH:$JAVA_HOME/bin

export HADOOP_HOME=$HOME/modules/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export PATH=$PATH:$HOME/tools

- ~/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc # the dot here is equivalent to source

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/.local/bin:$HOME/bin

export PATH