Improving Generalization with Domain Convex Game

Table of Contents

- Abstract

- Introduction

- Contributions

- Related Work

- Domain Generalization

- Convex Game

- Meta Learning

- Domain Convex Game

Improving domain generalization with a domain convex game

Abstract

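Domain generalization (DG) tends to alleviate the poor generalization capability of deep neural networks by learning a model with multiple source domains.

Domain generalization aims to mitigate the weak generalization of neural networks by training a model on multiple source domains.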

A classical solution to DG is domain augmentation, the common belief of which is that diversifying source domains will be conducive to the out-of-distribution generalization.

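The usual approach to DG is domain augmentation, which assumes that diversifying the source domains benefits out-of-distribution generalization.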

However, these claims are understood intuitively, rather than mathematically.

Key point: these claims rest on intuition only, not on mathematical proof.

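Our explorations empirically reveal that the correlation between model generalization and the diversity of domains may not be strictly positive, which limits the effectiveness of domain augmentation.

This work shows that model generalization and domain diversity may not be strictly positively correlated, which limits the effectiveness of domain augmentation.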

Introduction

A common belief is that generalizable models would become easier to learn when the training distributions become more diverse, which has been also emphasized by a recent work [47].

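It is commonly believed that generalizable models become easier to learn when the training distributions are more diverse, a point also emphasized (though not proven) in recent work.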

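Notwithstanding the promising results shown by this strand of approaches, the claims above are vague and lack theoretical justification; formal analyses of the relation between domain diversity and model generalization are sparse.

Although these methods show promising results, the claims are vague and lack theoretical justification, and formal analyses of the relation between domain diversity and model generalization are scarce.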

Further, the transfer of knowledge may even hurt the performance on target domains in some cases, which is referred to as negative transfer [33, 41].

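Moreover, knowledge transfer may even hurt performance on the target domains in some cases, which is known as negative transfer.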

Thus the relation of domain diversity and model generalization remains unclear. In light of these points, we begin by considering the question: The stronger the domain diversity, will it certainly help to improve the model generalization capability?

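So the relation between domain diversity and model generalization remains unclear.

The starting question: does stronger domain diversity necessarily improve model generalization?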

The results presented in Fig 1 show that with the increase of domain diversity, the model generalization (measured by the accuracy on unseen target domain) may not necessarily increase, but sometimes decreases instead, as the solid lines show.

Fig. 1 shows that as domain diversity increases, model generalization may fall rather than rise.

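On the one hand, this may be because the model does not best utilize the rich information of diversified domains; on the other hand, it may be due to the existence of low-quality samples which contain redundant or noisy information that is unprofitable to generalization [18].

On the one hand, the model may not be making the best use of the rich information in the diversified domains.

On the other hand, there may be low-quality samples carrying redundant or noisy information that does not help generalization.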

This discovery indicates that there is still room for improvement of the effectiveness of domain augmentation if we enable each domain to be certainly conducive to model generalization as the dashed lines in Fig 1.

If every domain can be made to benefit model generalization, as the dashed lines in Fig. 1 show, domain augmentation still has room to improve.

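In this work, we therefore aim to ensure the strictly positive correlation between model generalization and domain diversity to guarantee and further enhance the effectiveness of domain augmentation.

This work aims to ensure a strictly positive correlation between model generalization and domain diversity, and thereby to guarantee and further improve the effectiveness of domain augmentation.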

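To do this, we take inspiration from the literature of convex game that requires each player to bring profit to the coalition [4, 13, 40], which is consistent with our key insight, i.e., make each domain bring benefit to model generalization.

The inspiration comes from convex games, where every player must bring profit to the coalition; here, that means making every domain benefit model generalization.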

This regularization encourages each diversified domain to contribute to improving model generalization, thus enables the model to better exploit the diverse information.

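This regularization pushes each diversified domain to contribute to model generalization, so the model can exploit the diverse information more effectively.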

In the meanwhile, considering that there may exist samples with unprofitable or even harmful information to generalization, we further construct a sample filter based on the proposed regularization to get rid of the low-quality samples such as noisy or redundant ones, so that their deterioration to model generalization can be avoided.

Meanwhile, since some samples may be useless or even harmful to generalization, a sample filter is built on top of the proposed regularization to discard low-quality samples such as noisy or redundant ones, so that they do not degrade model generalization.

Thus, the limit of our regularization optimization is actually to achieve a constant marginal contribution, rather than an impracticable increasing marginal contribution.

The limit of the regularization optimization is a constant marginal contribution, not an impractical increasing marginal contribution.
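The "marginal contribution" wording can be made concrete with the standard characterization of convex games. The notation here is an assumption for illustration and is not taken from these notes: $v$ is the value function of the game (for DG, some measure of model generalization), $N$ is the set of domains, and $i$ is a single domain. A game is convex exactly when the marginal contribution of a player never shrinks as the coalition it joins grows:

$$
v(S \cup \{i\}) - v(S) \;\le\; v(T \cup \{i\}) - v(T), \qquad \forall\, S \subseteq T \subseteq N \setminus \{i\}.
$$

Equality for all $S$ and $T$ is the constant-marginal-contribution case that the passage calls the realistic limit; strict inequality would be an increasing marginal contribution.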

Contributions

(i) Exploring the relation of model generalization and source domain diversity, which reveals the limit of previous domain augmentation strand;

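Explore the relation between model generalization and source domain diversity, revealing the limits of the earlier domain augmentation line of work.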

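(ii) Introducing convex game into DG to guarantee and further enhance the validity of domain augmentation. The proposed framework encourages each domain to be conducive to generalization while avoiding the negative impact of low-quality samples, enabling the model to better utilize the information within diversified domains;

Introduce convex games into DG to guarantee and further improve the effectiveness of domain augmentation. The proposed framework encourages each domain to benefit generalization while avoiding the negative impact of low-quality samples, so the model makes better use of the information in the diversified domains.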

(iii) Providing heuristic analysis and intuitive explanations about the rationality. The effectiveness and superiority are verified empirically across extensive real-world datasets.

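Provide heuristic analysis and intuitive explanations of the rationale, and verify the effectiveness and superiority of the method on a wide range of real-world datasets.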

Related Work

Domain Generalization

Domain Generalization researches out-of-distribution generalization with knowledge only extracted from multiple source domains.

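Domain generalization studies out-of-distribution generalization using only knowledge extracted from multiple source domains.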

L2AOT [54] creates pseudo-novel domains from source data by maximizing an optimal transport-based divergence measure.

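L2AOT generates pseudo-novel domains from the source data by maximizing an optimal-transport-based divergence measure.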

CrossGrad [39] generates samples from fictitious domains via gradient-based domain perturbation while AdvAug [46] achieves so via adversarially perturbing images.

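CrossGrad generates samples from fictitious domains via gradient-based domain perturbation.

AdvAug achieves the same by adversarially perturbing images.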

MixStyle [56] and FACT [48] mix style information of different instances to synthesize novel domains.

MixStyle and FACT mix the style information of different instances to synthesize new domains.

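Instead of enriching domain diversity, another popular solution, learning domain-invariant representations by distribution alignment via kernel-based optimization [8, 30], adversarial learning [22, 29], or uncertainty modeling [24], demonstrates effectiveness for model generalization.

Rather than enriching domain diversity, another approach learns domain-invariant representations through distribution alignment (via kernel-based optimization, adversarial learning, or uncertainty modeling) and has also proven effective for model generalization.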

Convex Game

A game is called convex when it satisfies the condition that the profit obtained by the cooperation of two coalitions plus the profit obtained by their intersection will not be less than the sum of profit obtained by the two respectively (a.k.a. supermodularity) [4, 13, 40].

In short: 1 + 1 ≥ 2 (a merged coalition is worth at least as much as its parts taken separately).
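The supermodularity condition can be checked by brute force on a small game. The sketch below is only illustrative: the `is_convex_game` helper, the toy value functions, and the player names (borrowed from typical DG domain names) are assumptions made for this example and are not defined in the paper.

```python
from itertools import combinations


def all_coalitions(players):
    """Yield every subset of `players` (including the empty set) as a frozenset."""
    players = list(players)
    for size in range(len(players) + 1):
        for combo in combinations(players, size):
            yield frozenset(combo)


def is_convex_game(players, value, tol=1e-12):
    """Check v(S | T) + v(S & T) >= v(S) + v(T) for every pair of coalitions."""
    coalitions = list(all_coalitions(players))
    for s in coalitions:
        for t in coalitions:
            if value(s | t) + value(s & t) < value(s) + value(t) - tol:
                return False
    return True


# Hypothetical value functions that depend only on coalition size.
def synergy(coalition):
    return len(coalition) ** 2      # marginal contribution grows with coalition size


def saturating(coalition):
    return len(coalition) ** 0.5    # marginal contribution shrinks with coalition size


def additive(coalition):
    return 2.0 * len(coalition)     # constant marginal contribution (boundary case)


players = ["photo", "art", "cartoon"]
print(is_convex_game(players, synergy))     # True
print(is_convex_game(players, saturating))  # False
print(is_convex_game(players, additive))    # True
```

The additive game satisfies the condition with equality everywhere, which is the boundary case of a convex game. The check enumerates all pairs of subsets, so it is only practical for a handful of players.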

Co-Mixup [15] formulates the optimal construction of mixup augmentation data while encouraging diversity among them by introducing supermodularity.

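Co-Mixup introduces supermodularity to formulate the optimal construction of mixup-augmented data while encouraging diversity among the mixed samples.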

To the best of our knowledge, this work is the first to introduce convex game into DG to enhance generalization capability.

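To the authors' knowledge, this paper is the first to introduce convex games into DG to improve generalization capability.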

Meta Learning

pass

Domain Convex Game

Domain Convex Game (DCG)

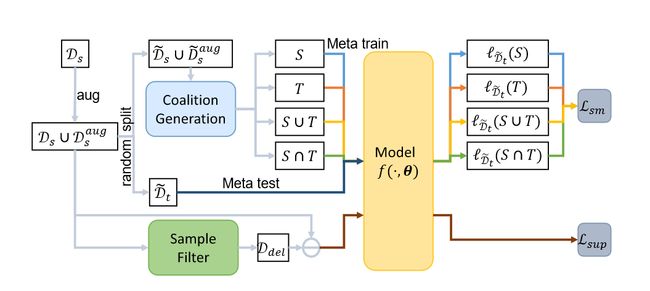

First, we cast DG as a convex game between domains and design a novel regularization term employing the supermodularity, which encourages each domain to benefit model generalization.

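First, DG is cast as a convex game between domains, and a new regularization term is designed using supermodularity, which encourages each domain to benefit model generalization.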

Further, we construct a sample filter based on the regularization to exclude bad samples that may cause negative effect on generalization.

Next, a sample filter is built on the regularization to exclude bad samples that may harm generalization.
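The filtering step can be pictured as score-based pruning. These notes do not say how the paper scores samples or how many it discards, so everything in the sketch below is an assumption: the per-sample `scores` stand in for some measure of how much each sample helps satisfy the regularization, and `drop_ratio` and the `filter_low_quality` helper are hypothetical.

```python
def filter_low_quality(samples, scores, drop_ratio=0.2):
    """Drop the `drop_ratio` fraction of samples with the lowest scores.

    `scores` is a hypothetical per-sample quality measure (higher is better);
    the original order of the kept samples is preserved.
    """
    if len(samples) != len(scores):
        raise ValueError("samples and scores must have the same length")
    if not 0.0 <= drop_ratio < 1.0:
        raise ValueError("drop_ratio must be in [0, 1)")

    n_drop = int(len(samples) * drop_ratio)
    # Indices sorted from lowest to highest score; the first n_drop are discarded.
    order = sorted(range(len(samples)), key=lambda i: scores[i])
    dropped = set(order[:n_drop])
    return [s for i, s in enumerate(samples) if i not in dropped]


batch = ["a", "b", "c", "d", "e"]
scores = [0.9, -0.4, 0.3, 0.1, 0.7]  # made-up values for illustration
print(filter_low_quality(batch, scores, drop_ratio=0.2))  # ['a', 'c', 'd', 'e']
```

Ranking within a batch keeps the sketch free of an absolute threshold, which would depend on the scale of whatever score is actually used.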