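[Getting Started with Flink (3)] Flink's Stream Processing API

[Date] 2022.04.29, Friday

[Title] [Getting Started with Flink (3)] Flink's Stream Processing API

This series contains notes and mind maps for the 尚硅谷 (Atguigu) Flink course.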

Contents

Introduction

I. Environment

II. Source

Custom Source example

III. Transform

1) Basic transformation operators

map vs flatMap

Example

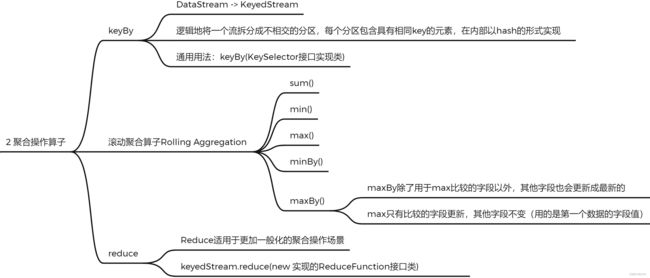

2) Aggregation operators

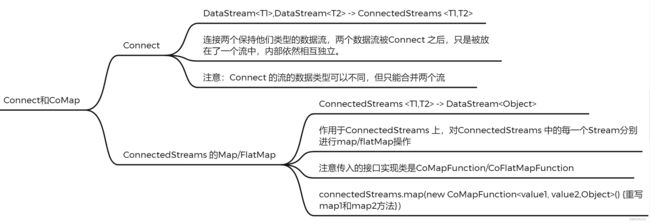

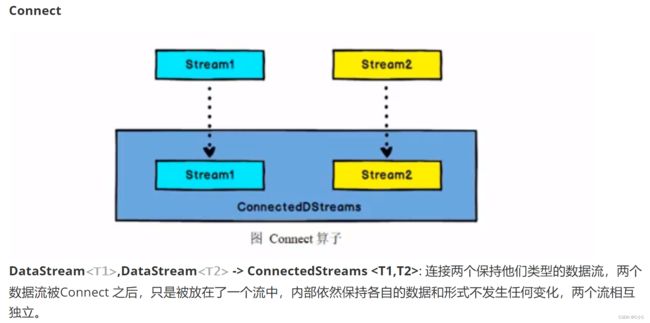

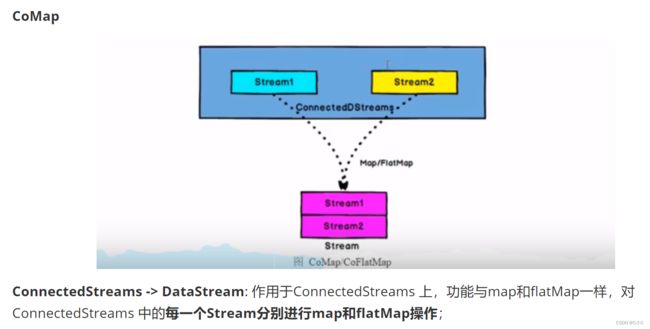

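3) Multi-stream transformation operators

split and select

connect and CoMap

Union

IV. Supported data types

V. Implementing UDF functions: finer-grained control over the stream

VI. Data repartitioning

VII. Sink

Overall mind map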

Introduction

Flink's stream processing API has four main parts: Environment, Source, Transform, and Sink.

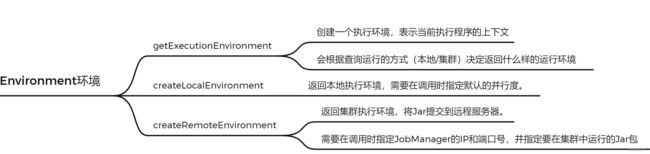

I. Environment

II. Source

Custom Source example

package com.atguigu.apitest.source;

import com.atguigu.apitest.beans.SensorReading;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.source.SourceFunction;

import java.util.HashMap;
import java.util.Random;

public class SourceTest4_UDF {
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // Read data from the custom source
        DataStream<SensorReading> dataStream = env.addSource(new MySensorSource());

        // Print the output
        dataStream.print();

        env.execute();
    }

    // Custom SourceFunction implementation
    public static class MySensorSource implements SourceFunction<SensorReading> {
        // Flag that controls data generation; volatile because cancel() is called from another thread
        private volatile boolean running = true;

        // Random number generator
        private final Random random = new Random();

        // Current temperature of each sensor, keyed by sensor id
        private final HashMap<String, Double> sensorTempMap = new HashMap<>();

        {
            // Set the initial temperature of 10 sensors
            for (int i = 0; i < 10; i++) {
                sensorTempMap.put("sensor_" + (i + 1), 60 + random.nextGaussian() * 20);
            }
        }

        @Override
        public void run(SourceContext<SensorReading> ctx) throws Exception {
            while (running) {
                for (String sensorId : sensorTempMap.keySet()) {
                    // Let the temperature fluctuate randomly around its current value
                    Double newtemp = sensorTempMap.get(sensorId) + random.nextGaussian();
                    sensorTempMap.put(sensorId, newtemp);
                    ctx.collect(new SensorReading(sensorId, System.currentTimeMillis(), newtemp));
                }
                // Control the output rate
                Thread.sleep(10000L);
            }
        }

        @Override
        public void cancel() {
            running = false;
        }
    }
}
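The run/cancel contract used by the source above can be tried out without a Flink runtime. The sketch below is plain Java: `LoopingSource`, its `Consumer`-based `run` method, and the fixed step of 1.0 are assumptions made for illustration and are not Flink APIs. It only shows how a flag flipped by `cancel()` ends the emit loop.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical stand-in for a SourceFunction; not a Flink class.
class LoopingSource {
    // volatile so that a cancel() from another thread is seen by the loop in run()
    private volatile boolean running = true;
    private double temperature = 60.0;

    // Emits readings until cancel() is called.
    void run(Consumer<Double> collector) {
        while (running) {
            temperature += 1.0; // fixed step instead of random noise, to keep the demo deterministic
            collector.accept(temperature);
        }
    }

    void cancel() {
        running = false;
    }

    // Runs a source and cancels it once `limit` readings have been collected.
    static List<Double> collectFirst(int limit) {
        LoopingSource source = new LoopingSource();
        List<Double> out = new ArrayList<>();
        source.run(value -> {
            out.add(value);
            if (out.size() >= limit) {
                source.cancel();
            }
        });
        return out;
    }

    public static void main(String[] args) {
        System.out.println(collectFirst(3)); // prints [61.0, 62.0, 63.0]
    }
}
```

In this sketch the collector itself triggers the cancel so that the run stays single-threaded and repeatable; in a real job the framework calls `cancel()` from a different thread, which is why the flag is declared `volatile`.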

III. Transform

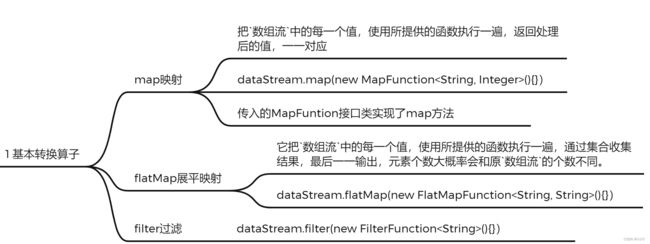

1) Basic transformation operators

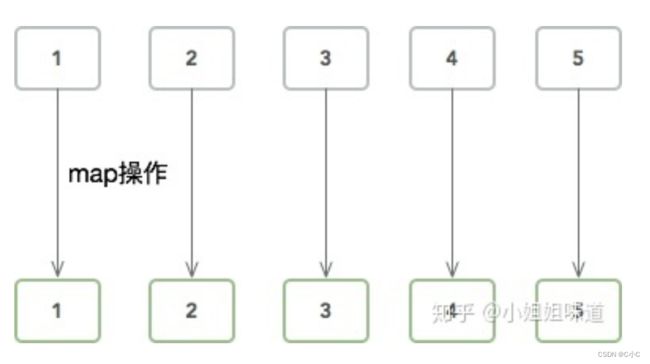

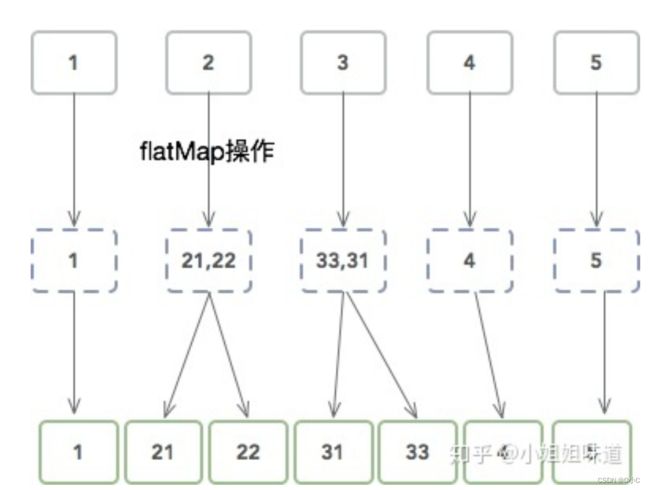

map vs flatMap

Example

package com.atguigu.apitest.transform;

import org.apache.flink.api.common.functions.FilterFunction;
import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

public class TransformTest1_Base {
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // Read data from a file
        DataStream<String> inputStream = env.readTextFile("src\\main\\resources\\sensor.txt");

        // 1. map: convert each String to its length
        DataStream<Integer> mapStream = inputStream.map(new MapFunction<String, Integer>() {
            @Override
            public Integer map(String value) throws Exception {
                return value.length();
            }
        });

        // 2. flatMap: split each line into fields at the commas
        DataStream<String> flatMapStream = inputStream.flatMap(new FlatMapFunction<String, String>() {
            @Override
            public void flatMap(String value, Collector<String> out) throws Exception {
                String[] fields = value.split(",");
                for (String field : fields) {
                    out.collect(field);
                }
            }
        });

        // 3. filter: keep only records whose id starts with sensor_1
        DataStream<String> filterStream = inputStream.filter(new FilterFunction<String>() {
            @Override
            public boolean filter(String value) throws Exception {
                return value.startsWith("sensor_1");
            }
        });

        // Print the output
        mapStream.print("map");
        flatMapStream.print("flatMap");
        filterStream.print("filter");

        env.execute();
    }
}
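To see the difference between map, flatMap and filter on concrete values without starting a Flink job, the same three functions can be applied with `java.util.stream`. This is only an analogy, assuming input lines of the form `sensor_1,1547718199,35.8`; the class name `BasicOperatorsSketch` and the two sample lines are made up for illustration, and Flink's DataStream API is not used here.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class BasicOperatorsSketch {
    // map: exactly one output per input (line -> its length)
    static List<Integer> lengths(List<String> lines) {
        return lines.stream().map(String::length).collect(Collectors.toList());
    }

    // flatMap: zero or more outputs per input (line -> its comma-separated fields)
    static List<String> fields(List<String> lines) {
        return lines.stream()
                .flatMap(line -> Arrays.stream(line.split(",")))
                .collect(Collectors.toList());
    }

    // filter: zero or one output per input (keep lines starting with sensor_1)
    static List<String> sensor1Only(List<String> lines) {
        return lines.stream()
                .filter(line -> line.startsWith("sensor_1"))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "sensor_1,1547718199,35.8",
                "sensor_6,1547718201,15.4");
        System.out.println(lengths(lines));     // [24, 24]
        System.out.println(fields(lines));      // [sensor_1, 1547718199, 35.8, sensor_6, 1547718201, 15.4]
        System.out.println(sensor1Only(lines)); // [sensor_1,1547718199,35.8]
    }
}
```

Note that a prefix test such as `startsWith("sensor_1")` also matches `sensor_10`, which is worth keeping in mind when reading the filter output.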

2) Aggregation operators

- Ways to write keyBy

// Group by key
KeyedStream<SensorReading, Tuple> keyedStream = dataStream.keyBy("id"); // deprecated in newer versions

KeyedStream<SensorReading, String> keyedStream1 = dataStream.keyBy(SensorReading::getId); // method reference

KeyedStream<SensorReading, String> keyedStream2 = dataStream.keyBy(
        new KeySelector<SensorReading, String>() {
            @Override
            public String getKey(SensorReading sensorReading) throws Exception {
                return sensorReading.getId();
            }
        }); // anonymous class implementing the KeySelector interface

KeyedStream<SensorReading, String> keyedStream3 = dataStream.keyBy(data -> data.getId()); // lambda expression

- reduce example

// reduce: keep the maximum temperature together with the most recent timestamp
SingleOutputStreamOperator<SensorReading> resultStream = keyedStream.reduce(new ReduceFunction<SensorReading>() {
    @Override
    public SensorReading reduce(SensorReading value1, SensorReading value2) throws Exception {
        return new SensorReading(value1.getId(), value2.getTimestamp(), Math.max(value1.getTemperature(), value2.getTemperature()));
    }
});
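A keyed reduce is a rolling aggregation: for each key one accumulated value is kept, and an updated result is emitted for every incoming element. The plain-Java sketch below models that behaviour for the same max-temperature and latest-timestamp logic. `Reading` and `RollingReduceSketch` are hypothetical stand-ins (not the course's `SensorReading` or any Flink class), and the `HashMap` only imitates keyed state.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class RollingReduceSketch {
    // Hypothetical stand-in for SensorReading.
    static final class Reading {
        final String id;
        final long timestamp;
        final double temperature;

        Reading(String id, long timestamp, double temperature) {
            this.id = id;
            this.timestamp = timestamp;
            this.temperature = temperature;
        }

        @Override
        public String toString() {
            return id + "@" + timestamp + "=" + temperature;
        }
    }

    // Same logic as the ReduceFunction: maximum temperature so far, newest timestamp.
    static Reading reduce(Reading current, Reading incoming) {
        return new Reading(current.id, incoming.timestamp,
                Math.max(current.temperature, incoming.temperature));
    }

    // Emits one rolling result per input element, with separate state per key.
    static List<Reading> rollingReduce(List<Reading> input) {
        Map<String, Reading> stateByKey = new HashMap<>();
        List<Reading> output = new ArrayList<>();
        for (Reading r : input) {
            // merge stores r for a new key, otherwise stores reduce(oldValue, r)
            Reading updated = stateByKey.merge(r.id, r, RollingReduceSketch::reduce);
            output.add(updated);
        }
        return output;
    }

    public static void main(String[] args) {
        List<Reading> input = new ArrayList<>();
        input.add(new Reading("sensor_1", 1547718199L, 35.8));
        input.add(new Reading("sensor_6", 1547718201L, 15.4));
        input.add(new Reading("sensor_1", 1547718207L, 36.3));
        input.add(new Reading("sensor_1", 1547718209L, 32.8));
        for (Reading r : rollingReduce(input)) {
            System.out.println(r);
        }
    }
}
```

For the last input the emitted result keeps the temperature 36.3 seen earlier but carries the newer timestamp 1547718209, and the `sensor_6` reading never affects the `sensor_1` state.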

// Lambda expression version:
// SingleOutputStreamOperator<SensorReading> resultStream =
//         keyedStream.reduce((curState, newData) -> {
//             return new SensorReading(curState.getId(), newData.getTimestamp(), Math.max(curState.getTemperature(), newData.getTemperature()));
//         });

3) Multi-stream transformation operators

split and select

- Newer Flink versions have removed the split operator; logical stream splitting can instead be implemented with process plus OutputTag side outputs.
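The idea behind side outputs is that one processing function looks at each element once and routes it either to the main output or to a named side output. The following plain-Java sketch imitates that routing for a 30-degree threshold. `SideOutputSketch`, the string tag `"low"`, and the map standing in for side outputs are assumptions for illustration; in a real job the routing would live inside a Flink `ProcessFunction` that emits to an `OutputTag`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class SideOutputSketch {
    // Plays the role of the main stream.
    final List<Double> mainOutput = new ArrayList<>();
    // Tag name -> elements routed to that side output.
    final Map<String, List<Double>> sideOutputs = new HashMap<>();

    // Called once per element, in the spirit of processElement.
    void process(double temperature) {
        if (temperature > 30.0) {
            mainOutput.add(temperature); // above the threshold: stays on the main output
        } else {
            sideOutputs.computeIfAbsent("low", tag -> new ArrayList<>()).add(temperature);
        }
    }

    // Returns the elements routed to the given tag (empty if none).
    List<Double> getSideOutput(String tag) {
        return sideOutputs.getOrDefault(tag, new ArrayList<>());
    }

    public static void main(String[] args) {
        SideOutputSketch splitter = new SideOutputSketch();
        for (double t : new double[] {35.8, 15.4, 6.7, 38.1}) {
            splitter.process(t);
        }
        System.out.println("main: " + splitter.mainOutput);                // main: [35.8, 38.1]
        System.out.println("side(low): " + splitter.getSideOutput("low")); // side(low): [15.4, 6.7]
    }
}
```

Each element is inspected exactly once and lands in exactly one output, which is the "logical split" that replaces the removed split/select pair.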

// 1. Split: divide the data into two streams at a temperature of 30 degrees
OutputTag<SensorReading> highStream = new OutputTag<SensorReading>("high"){};

OutputTag<SensorReading> lowStream = new OutputTag<SensorReading>("low"){};

SingleOutputStreamOperator ...

low> SensorReading{id='sensor_1', timestamp=1547718199, temperature=35.8}
high> SensorReading{id='sensor_6', timestamp=1547718201, temperature=15.4}
high> SensorReading{id='sensor_7', timestamp=1547718202, temperature=6.7}
low> SensorReading{id='sensor_10', timestamp=1547718205, temperature=38.1}
low> SensorReading{id='sensor_1', timestamp=1547718207, temperature=36.3}
low> SensorReading{id='sensor_1', timestamp=1547718209, temperature=32.8}
low> SensorReading{id='sensor_1', timestamp=1547718212, temperature=37.1}

connect and CoMap

- Example:

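// 2. Connect: convert the high-temperature stream to a 2-tuple, connect it with the low-temperature stream, and output status information
DataStream<Tuple2<String, Double>> warningStream = highTempStream.map(new MapFunction<SensorReading, Tuple2<String, Double>>() {
    @Override
    public Tuple2<String, Double> map(SensorReading value) throws Exception {
        return new Tuple2<>(value.getId(), value.getTemperature());
    }
});

ConnectedStreams<Tuple2<String, Double>, SensorReading> connectedStreams = warningStream.connect(lowTempStream);

DataStream<Object> resultStream = connectedStreams.map(new CoMapFunction<Tuple2<String, Double>, SensorReading, Object>() {
    // Applied to elements of the first (warning) stream
    @Override
    public Object map1(Tuple2<String, Double> value) throws Exception {
        return new Tuple3<>(value.f0, value.f1, "high temp warning");
    }

    // Applied to elements of the second (low-temperature) stream
    @Override
    public Object map2(SensorReading value) throws Exception {
        return new Tuple2<>(value.getId(), "normal");
    }
});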

resultStream.print("connect");

connect> (sensor_6,15.4,high temp warning)
connect> (sensor_1,normal)
connect> (sensor_7,6.7,high temp warning)
connect> (sensor_10,normal)
connect> (sensor_1,normal)
connect> (sensor_1,normal)
connect> (sensor_1,normal)

Union
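Compared with connect, union is the simpler operation: it merges streams that share one element type, whereas connect pairs two streams whose types may differ and handles each side with its own method (map1, map2). A plain-Java sketch of that contrast follows. `CoMapSketch`, its `CoMap` interface and the list-based "streams" are hypothetical stand-ins, not Flink types, and lists cannot model the arrival-order interleaving of real streams.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class CoMapSketch {
    // Hypothetical counterpart of a co-map function with two inputs and one output type.
    interface CoMap<A, B, R> {
        R map1(A value); // applied to elements of the first input
        R map2(B value); // applied to elements of the second input
    }

    // union: both inputs share one element type, and the output keeps that type
    static <T> List<T> union(List<T> first, List<T> second) {
        List<T> out = new ArrayList<>(first);
        out.addAll(second);
        return out;
    }

    // connect + map: inputs may differ in type, each side has its own mapping
    static <A, B, R> List<R> connectAndMap(List<A> first, List<B> second, CoMap<A, B, R> fn) {
        List<R> out = new ArrayList<>();
        for (A a : first) {
            out.add(fn.map1(a));
        }
        for (B b : second) {
            out.add(fn.map2(b));
        }
        return out;
    }

    public static void main(String[] args) {
        List<Double> temperatures = Arrays.asList(38.1, 36.3); // first input: numbers
        List<String> ids = Arrays.asList("sensor_6");          // second input: strings
        List<String> result = connectAndMap(temperatures, ids, new CoMap<Double, String, String>() {
            @Override
            public String map1(Double t) {
                return t + " warning";
            }

            @Override
            public String map2(String id) {
                return id + " normal";
            }
        });
        System.out.println(result);                                // [38.1 warning, 36.3 warning, sensor_6 normal]
        System.out.println(union(ids, Arrays.asList("sensor_7"))); // [sensor_6, sensor_7]
    }
}
```

The type parameters carry the point: `union` has a single `T`, while `connectAndMap` needs separate `A` and `B` and relies on the two mapping methods to bring both sides to a common result type.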

IV. Supported data types

V. Implementing UDF functions: finer-grained control over the stream

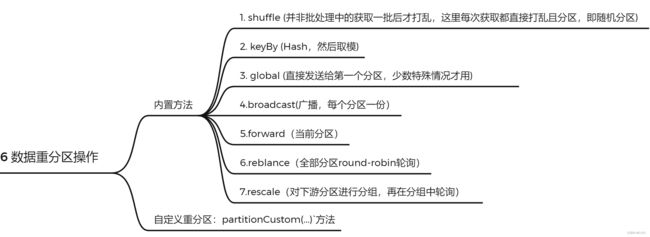

VI. Data repartitioning

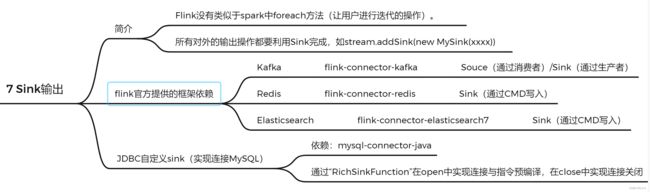

VII. Sink

Overall mind map