Kafka Getting-Started Example

- Installing Kafka

- Project layout

- The pom file

- Producer

- Consumer

- Monitoring page

Installing Kafka

For the Kafka installation steps, see "Setting up Kafka on Windows" (Windows系统下搭建Kafka).

Project layout

The pom file

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>cesi.kafka</groupId>

<artifactId>kafkaDemo</artifactId>

<version>1.0-SNAPSHOT</version>

<name>kafkaDemo</name>

<!-- FIXME change it to the project's website -->

<url>http://www.example.com</url>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.7</maven.compiler.source>

<maven.compiler.target>1.7</maven.compiler.target>

</properties>

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka_2.10</artifactId>

<version>0.8.0</version>

</dependency>

</dependencies>

<build>

<pluginManagement><!-- lock down plugins versions to avoid using Maven defaults (may be moved to parent pom) -->

<plugins>

<!-- clean lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#clean_Lifecycle -->

<plugin>

<artifactId>maven-clean-plugin</artifactId>

<version>3.1.0</version>

</plugin>

<!-- default lifecycle, jar packaging: see https://maven.apache.org/ref/current/maven-core/default-bindings.html#Plugin_bindings_for_jar_packaging -->

<plugin>

<artifactId>maven-resources-plugin</artifactId>

<version>3.0.2</version>

</plugin>

<plugin>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.8.0</version>

</plugin>

<plugin>

<artifactId>maven-surefire-plugin</artifactId>

<version>2.22.1</version>

</plugin>

<plugin>

<artifactId>maven-jar-plugin</artifactId>

<version>3.0.2</version>

</plugin>

<plugin>

<artifactId>maven-install-plugin</artifactId>

<version>2.5.2</version>

</plugin>

<plugin>

<artifactId>maven-deploy-plugin</artifactId>

<version>2.8.2</version>

</plugin>

<!-- site lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#site_Lifecycle -->

<plugin>

<artifactId>maven-site-plugin</artifactId>

<version>3.7.1</version>

</plugin>

<plugin>

<artifactId>maven-project-info-reports-plugin</artifactId>

<version>3.0.0</version>

</plugin>

</plugins>

</pluginManagement>

</build>

</project>

Producer

package cesi.kafka.producer;

import kafka.javaapi.producer.Producer;

import kafka.producer.KeyedMessage;

import kafka.producer.ProducerConfig;

import java.util.Properties;

public class KafkaProducer {

private final Producer<String, String> producer;

public final static String TOPIC = "mumu";

private KafkaProducer() {

Properties props = new Properties();

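// Kafka broker address (host:port)

props.put("metadata.broker.list", "127.0.0.1:9092");

// Legacy 0.7-style ZooKeeper setting; the 0.8 producer finds brokers through metadata.broker.list

props.put("zk.connect", "127.0.0.1:2181");

// Serializer class for message values

props.put("serializer.class", "kafka.serializer.StringEncoder");

// Serializer class for message keys

props.put("key.serializer.class", "kafka.serializer.StringEncoder");

// -1: wait for all in-sync replicas to acknowledge each message

props.put("request.required.acks", "-1");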

producer = new Producer<String, String>(new ProducerConfig(props));

}

void produce() {

int messageNo = 1000;

final int COUNT = 10000;

while (messageNo < COUNT) {

String key = String.valueOf(messageNo);

String data = "kafka producer message " + key;

producer.send(new KeyedMessage<String, String>(TOPIC, key, data));

System.out.println(data);

messageNo++;

}

// Release the producer's network resources once all messages are sent

producer.close();

}

public static void main(String[] args) {

new KafkaProducer().produce();

}

}

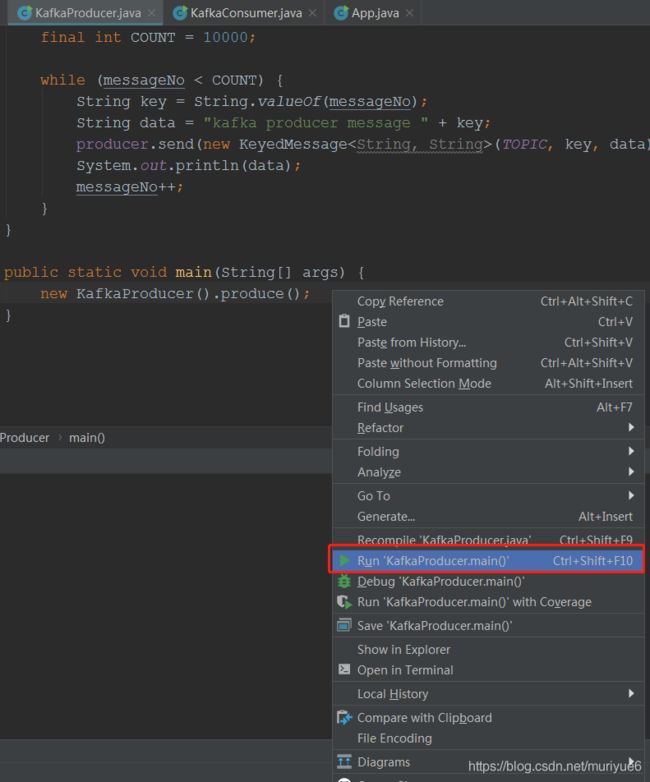

Right-click and choose: Run 'KafkaProducer.main()'
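The loop in `produce()` starts at 1000 and stops before 10000, so a single run sends 9,000 messages with keys "1000" through "9999". The sketch below rebuilds those payloads without a broker so the count can be checked on its own. It also shows, purely as an illustration, how a key-hash partitioner could map each key to a partition. The class `ProducerLoopSketch`, the helpers `buildMessages` and `partitionFor`, the hash formula, and the partition count of 3 are all assumptions made for this example; none of them come from the project above.

```java
import java.util.ArrayList;
import java.util.List;

public class ProducerLoopSketch {

    /** Builds the same payloads as produce(), without sending them anywhere. */
    static List<String> buildMessages(int start, int endExclusive) {
        List<String> messages = new ArrayList<String>();
        for (int messageNo = start; messageNo < endExclusive; messageNo++) {
            messages.add("kafka producer message " + messageNo);
        }
        return messages;
    }

    /**
     * Illustrative key-hash partitioning (an assumption, not Kafka's own code):
     * clear the sign bit of the hash, then take it modulo the partition count.
     */
    static int partitionFor(String key, int numPartitions) {
        return (key.hashCode() & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        List<String> messages = buildMessages(1000, 10000);
        System.out.println("messages per run: " + messages.size());
        System.out.println("first: " + messages.get(0));
        System.out.println("last: " + messages.get(messages.size() - 1));
        // Hypothetical topic with 3 partitions
        System.out.println("partition for key 1000: " + partitionFor("1000", 3));
    }
}
```

With a topic that has a single partition, which is a common default for a local setup, every key lands on partition 0 and the hashing makes no practical difference.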

Consumer

package cesi.kafka.consumer;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.Properties;

import cesi.kafka.producer.KafkaProducer;

import kafka.consumer.ConsumerConfig;

import kafka.consumer.ConsumerIterator;

import kafka.consumer.KafkaStream;

import kafka.javaapi.consumer.ConsumerConnector;

import kafka.serializer.StringDecoder;

import kafka.utils.VerifiableProperties;

public class KafkaConsumer {

private final ConsumerConnector consumer;

private KafkaConsumer() {

Properties props = new Properties();

// ZooKeeper connection string

props.put("zookeeper.connect", "127.0.0.1:2181");

// Consumer group that this consumer belongs to

props.put("group.id", "muGroup");

// ZooKeeper session timeout

props.put("zookeeper.session.timeout.ms", "4000");

props.put("zookeeper.sync.time.ms", "200");

props.put("rebalance.max.retries", "5");

props.put("rebalance.backoff.ms", "1200");

props.put("auto.commit.interval.ms", "1000");

// Start from the earliest available offset when the group has no committed offset

props.put("auto.offset.reset", "smallest");

// No serializer setting is needed here: decoding is handled by the StringDecoder instances passed to createMessageStreams

ConsumerConfig config = new ConsumerConfig(props);

consumer = kafka.consumer.Consumer.createJavaConsumerConnector(config);

}

void consume() {

Map<String, Integer> topicCountMap = new HashMap<String, Integer>();

// Request one stream (one consuming thread) for the topic

topicCountMap.put(KafkaProducer.TOPIC, 1);

StringDecoder keyDecoder = new StringDecoder(new VerifiableProperties());

StringDecoder valueDecoder = new StringDecoder(new VerifiableProperties());

Map<String, List<KafkaStream<String, String>>> consumerMap = consumer.createMessageStreams(topicCountMap, keyDecoder, valueDecoder);

KafkaStream<String, String> stream = consumerMap.get(KafkaProducer.TOPIC).get(0);

ConsumerIterator<String, String> it = stream.iterator();

// hasNext() blocks until a message arrives, so this loop runs until the process is stopped

while (it.hasNext()) {

System.out.println("kafka consumer message " + "<<<" + it.next().message() + ">>>");

}

}

public static void main(String[] args) {

new KafkaConsumer().consume();

}

}
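The consumer sets `auto.offset.reset` to `smallest`, which matters the first time the group `muGroup` reads the topic: with no committed offset to resume from, it starts at the oldest message still available. The sketch below is a simplified model of that decision, not Kafka's implementation. The class `OffsetResetSketch`, the method `startingOffset`, and the sample offsets are hypothetical and exist only for illustration.

```java
public class OffsetResetSketch {

    /**
     * Simplified model of where a consumer group starts reading.
     * committed == null means the group has no stored offset yet.
     */
    static long startingOffset(Long committed, long smallest, long largest, String resetPolicy) {
        boolean usable = committed != null && committed >= smallest && committed <= largest;
        if (usable) {
            // A valid committed offset always wins over the reset policy
            return committed;
        }
        if ("smallest".equals(resetPolicy)) {
            return smallest;
        }
        if ("largest".equals(resetPolicy)) {
            return largest;
        }
        throw new IllegalStateException("no valid offset and no usable reset policy: " + resetPolicy);
    }

    public static void main(String[] args) {
        // New group, "smallest": read everything from the beginning
        System.out.println(startingOffset(null, 0L, 9000L, "smallest"));  // prints 0
        // New group, "largest": only messages produced from now on
        System.out.println(startingOffset(null, 0L, 9000L, "largest"));   // prints 9000
        // Group that already committed offset 4200: resume there
        System.out.println(startingOffset(4200L, 0L, 9000L, "smallest")); // prints 4200
    }
}
```

This is why running the consumer after the producer has finished still prints all of the earlier messages on the first run, while later runs of the same group continue from the committed position.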

Monitoring page

For the monitoring page, see "Kafka web monitoring with KafkaOffsetMonitor" (Kafka web监控KafkaOffsetMonitor).