Window Functions in Flink

Contents

1. Incremental aggregation functions

(1) ReduceFunction

(2) AggregateFunction

2. Full window functions

(1) WindowFunction

(2) ProcessWindowFunction

3. Combining incremental aggregation and full window functions

4. Window lifecycle

1. Window creation

2. Triggering window computation

3. Window destruction

4. Summary of Window API calls

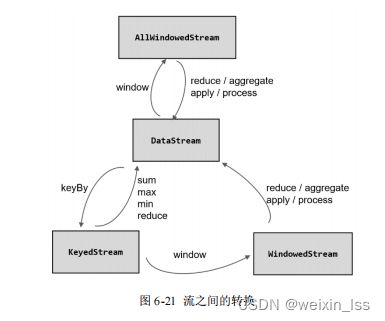

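After the window assigner has processed the stream, each record is assigned to its window(s), and the transformed stream has type WindowedStream. A WindowedStream is not a DataStream, so no other transformation can be applied to it directly: we must call a window function to process the collected data, and only then do we get a DataStream back, as shown in Figure 6-21.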

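A window function defines the computation performed on the data collected in a window. Depending on how it processes the data, it falls into one of two categories: incremental aggregation functions and full window functions. We discuss each in turn below.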

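1. Incremental aggregation functions

To improve timeliness, we can once again apply the stream-processing approach. As with simple aggregations on a DataStream, each record is processed as soon as it arrives, and only a simple aggregation state has to be kept. The only difference is that the result is not emitted immediately but held until the window's end time. When the window reaches its end time and must emit a result, we simply take the aggregated state and output it. This greatly improves both the efficiency and the timeliness of the program.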

There are two typical incremental aggregation functions: ReduceFunction and AggregateFunction.

(1) ReduceFunction

The most basic form of aggregation is reduction (reduce).

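Window functions also support ReduceFunction: call .reduce() on a WindowedStream and pass a ReduceFunction as the argument, and the data in the window is aggregated by repeatedly reducing two elements into one. This ReduceFunction is the same interface used for simple aggregations on a KeyedStream, so it is used in exactly the same way.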

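A ReduceFunction overrides a single reduce method whose two parameters are the two input elements being combined, and the output type of the reduction must be the same as the input type. In other words, both the intermediate aggregation state and the output have the same type as the input data.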
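The type constraint can be seen in a small plain-Java sketch with no Flink dependency. It mimics how a reduce-style window aggregation folds elements two at a time into a single running value; the `Reducer` interface and the `Count` class are hypothetical stand-ins for Flink's `ReduceFunction` and `Tuple2<String, Long>`. Note that input, intermediate state and output all share one type, `Count`.

```java
import java.util.Arrays;
import java.util.List;

class ReduceSketch {
    // Hypothetical stand-in for Flink's ReduceFunction<T>: two T values in, one T out
    interface Reducer<T> {
        T reduce(T value1, T value2);
    }

    // Hypothetical stand-in for Tuple2<String, Long>
    static final class Count {
        final String user;
        final long count;

        Count(String user, long count) {
            this.user = user;
            this.count = count;
        }
    }

    // Folds the elements one by one, keeping only the latest reduced value as state
    static <T> T reduceIncrementally(List<T> elements, Reducer<T> reducer) {
        T state = null;
        for (T element : elements) {
            // The first element becomes the initial state; later ones are combined into it
            state = (state == null) ? element : reducer.reduce(state, element);
        }
        return state;
    }

    public static void main(String[] args) {
        List<Count> window = Arrays.asList(
                new Count("Alice", 1L), new Count("Alice", 1L), new Count("Alice", 1L));
        Count result = reduceIncrementally(window, (a, b) -> new Count(a.user, a.count + b.count));
        System.out.println(result.user + " -> " + result.count); // Alice -> 3
    }
}
```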

The following example uses a ReduceFunction for incremental aggregation:

```java
import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.common.functions.ReduceFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;

import java.time.Duration;

// Event and ClickSource are defined elsewhere in the tutorial and not shown here
public class WindowReduceExample {
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // Read from the custom source, extract timestamps and generate watermarks
        SingleOutputStreamOperator<Event> stream = env.addSource(new ClickSource())
                .assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ZERO)
                        .withTimestampAssigner(new SerializableTimestampAssigner<Event>() {
                            @Override
                            public long extractTimestamp(Event element, long recordTimestamp) {
                                return element.timestamp;
                            }
                        }));

        stream.map(new MapFunction<Event, Tuple2<String, Long>>() {
                    @Override
                    public Tuple2<String, Long> map(Event value) throws Exception {
                        // Convert each event into a (user, 1L) tuple to simplify counting
                        return Tuple2.of(value.user, 1L);
                    }
                })
                .keyBy(r -> r.f0)
                // 5-second tumbling event-time window
                .window(TumblingEventTimeWindows.of(Time.seconds(5)))
                .reduce(new ReduceFunction<Tuple2<String, Long>>() {
                    @Override
                    public Tuple2<String, Long> reduce(Tuple2<String, Long> value1,
                                                       Tuple2<String, Long> value2) throws Exception {
                        // Accumulation rule; the accumulated result is sent downstream when the window closes
                        return Tuple2.of(value1.f0, value1.f1 + value2.f1);
                    }
                })
                .print();

        env.execute();
    }
}
```

In this code we compute windowed statistics on each user's clicks. As in word count, each event is first converted into a (user, count) tuple of type Tuple2<String, Long>; then, for every incoming record, its count is added to the state and the new state is saved. When the 5-second window reaches its end time, the reduced state is emitted directly.

Note that the output of this window aggregation is still of type Tuple2<String, Long>.
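To see what the job above would emit, the following plain-Java sketch replays a few made-up clicks (hypothetical data, not output from ClickSource) and counts them per user and 5-second tumbling window. It assumes millisecond timestamps, a window offset of 0 and in-order data, and it ignores watermarks, so it only illustrates the window assignment and the running count.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class TumblingCountSketch {
    static final long WINDOW_SIZE_MS = 5_000L; // matches Time.seconds(5) in the example

    // Start of the tumbling window containing the timestamp (offset 0, non-negative timestamps assumed)
    static long windowStart(long timestamp) {
        return timestamp - (timestamp % WINDOW_SIZE_MS);
    }

    // Counts clicks per (user, window), the way the reduce above accumulates them
    static Map<String, Long> countPerUserAndWindow(String[] users, long[] timestamps) {
        Map<String, Long> counts = new LinkedHashMap<>();
        for (int i = 0; i < users.length; i++) {
            long start = windowStart(timestamps[i]);
            String key = users[i] + "@[" + start + "," + (start + WINDOW_SIZE_MS) + ")";
            // Incremental update: add 1 to the running count for this user and window
            counts.merge(key, 1L, Long::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        String[] users = {"Mary", "Bob", "Mary", "Mary"};
        long[] timestamps = {1_000L, 2_000L, 4_999L, 5_000L};
        System.out.println(countPerUserAndWindow(users, timestamps));
        // {Mary@[0,5000)=2, Bob@[0,5000)=1, Mary@[5000,10000)=1}
    }
}
```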

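(2) AggregateFunction

ReduceFunction can handle most reduction-style aggregations, but the interface has one limitation: the aggregation state and the output must have the same type as the input. This forces us to convert (map) the data into the expected result type before aggregating. And in some cases the state needs further processing to produce the output, so the two types may differ; with ReduceFunction this becomes very cumbersome.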

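For example, how should we aggregate if we want the average of a set of numbers? Clearly we need two state values, the sum of the data (sum) and the number of elements (count), while the final output is their quotient (sum/count). With ReduceFunction, we would first convert each element into a tuple (sum, count), then reduce, and finally divide the two fields of the tuple to get the average. What should be a single task turns into three steps, map-reduce-map, which is clearly inefficient.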

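The natural idea, then, is to drop the same-type restriction and let the input, the intermediate state and the output all have different types, so that the whole computation can be done in one step.

The aggregate operation in Flink's Window API provides exactly this. Calling .aggregate() directly on a WindowedStream defines a more flexible window aggregation. The method takes an implementation of AggregateFunction as its argument. AggregateFunction is defined in the source code as follows: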

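```java
public interface AggregateFunction<IN, ACC, OUT> extends Function, Serializable {
    ACC createAccumulator();

    ACC add(IN value, ACC accumulator);

    OUT getResult(ACC accumulator);

    ACC merge(ACC a, ACC b);
}
```

AggregateFunction can be seen as a generalized version of ReduceFunction, with three types: the input type (IN), the accumulator type (ACC) and the output type (OUT). IN is the type of the elements in the input stream, ACC is the type of the intermediate aggregation state, and OUT is, naturally, the type of the final result.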

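The interface has four methods:

⚫ createAccumulator(): creates an accumulator, that is, the initial state of the aggregation. It is called once at the start of each aggregation (in a window operator, once per key and window).

⚫ add(): adds an input element to the accumulator, i.e. further aggregates newly arrived data on top of the current state. It takes two parameters, the newly arrived element value and the current accumulator, and returns a new accumulator value, which is the updated aggregation state. It is called for every arriving element.

⚫ getResult(): extracts the output from the accumulator. This means we can keep several pieces of state and compute a single result from them. For the average mentioned earlier, sum and count go into the accumulator, and this method divides them to get the final result. It is called only when the window emits its result.

⚫ merge(): merges two accumulators and returns the merged state as a single accumulator. It is called only when windows need to be merged; the most common merging windows are session windows (Session Windows).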
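Here is a plain-Java sketch of the four methods at work, using the average example from the text. To compile without Flink it declares a local interface `Aggregate` that copies the method signatures of `AggregateFunction`; `Average`, `runWindow` and the `long[]{sum, count}` accumulator are illustrative choices, and returning 0.0 for an empty window is an assumption of this sketch.

```java
import java.util.Arrays;
import java.util.List;

class AverageAggregateSketch {
    // Local mirror of Flink's AggregateFunction<IN, ACC, OUT> methods, not the Flink interface itself
    interface Aggregate<IN, ACC, OUT> {
        ACC createAccumulator();
        ACC add(IN value, ACC accumulator);
        OUT getResult(ACC accumulator);
        ACC merge(ACC a, ACC b);
    }

    // IN = Long, ACC = long[]{sum, count}, OUT = Double: three different types
    static final class Average implements Aggregate<Long, long[], Double> {
        public long[] createAccumulator() {
            return new long[] {0L, 0L};
        }

        public long[] add(Long value, long[] acc) {
            return new long[] {acc[0] + value, acc[1] + 1};
        }

        public Double getResult(long[] acc) {
            // Assumption of this sketch: an empty window yields 0.0
            return acc[1] == 0 ? 0.0 : (double) acc[0] / acc[1];
        }

        public long[] merge(long[] a, long[] b) {
            return new long[] {a[0] + b[0], a[1] + b[1]};
        }
    }

    // Drives an aggregate like a window would: create once, add per element, read the result at the end
    static <IN, ACC, OUT> OUT runWindow(Aggregate<IN, ACC, OUT> agg, List<IN> elements) {
        ACC acc = agg.createAccumulator();
        for (IN element : elements) {
            acc = agg.add(element, acc);
        }
        return agg.getResult(acc);
    }

    public static void main(String[] args) {
        Average avg = new Average();
        System.out.println(runWindow(avg, Arrays.asList(2L, 4L, 9L))); // 5.0
        // merge() combines partial accumulators, e.g. when two session windows join
        long[] merged = avg.merge(avg.add(2L, avg.createAccumulator()), avg.add(4L, avg.createAccumulator()));
        System.out.println(avg.getResult(merged)); // 3.0
    }
}
```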

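So AggregateFunction works like this: first, createAccumulator() initializes the state (the accumulator) for the aggregation; then add() is called once for each incoming element, aggregating it and keeping the result in the state; when the window has to output, getResult() is called to obtain the result. Clearly, like ReduceFunction, AggregateFunction performs incremental aggregation; and because the input, intermediate state and output types may differ, it is more flexible and convenient to use.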

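Let's look at a concrete example. On an e-commerce site, PV (page views) and UV (unique visitors) are two very important traffic metrics. In general, PV counts all clicks, while deduplicating by user id gives UV. We therefore sometimes use the ratio PV/UV to express "average visits per user", that is, how many pages each user views on average, which to some extent reflects user stickiness.

The code is as follows: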

```java
import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.functions.AggregateFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.windowing.assigners.SlidingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;

import java.util.HashSet;

public class WindowAggregateFunctionExample {
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        SingleOutputStreamOperator<Event> stream = env.addSource(new ClickSource())
                .assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forMonotonousTimestamps()
                        .withTimestampAssigner(new SerializableTimestampAssigner<Event>() {
                            @Override
                            public long extractTimestamp(Event element, long recordTimestamp) {
                                return element.timestamp;
                            }
                        }));

        // Give all records the same key so PV and UV are counted in one partition, then divided
        stream.keyBy(data -> true)
                .window(SlidingEventTimeWindows.of(Time.seconds(10), Time.seconds(2)))
                .aggregate(new AvgPv())
                .print();

        env.execute();
    }

    public static class AvgPv implements AggregateFunction<Event, Tuple2<HashSet<String>, Long>, Double> {
        @Override
        public Tuple2<HashSet<String>, Long> createAccumulator() {
            // Create the accumulator: (set of distinct users, page-view count)
            return Tuple2.of(new HashSet<String>(), 0L);
        }

        @Override
        public Tuple2<HashSet<String>, Long> add(Event value, Tuple2<HashSet<String>, Long> accumulator) {
            // Every record in this window updates the accumulator, which is then returned
            accumulator.f0.add(value.user);
            return Tuple2.of(accumulator.f0, accumulator.f1 + 1L);
        }

        @Override
        public Double getResult(Tuple2<HashSet<String>, Long> accumulator) {
            // When the window closes, the incremental aggregation ends and the result is sent downstream
            return (double) accumulator.f1 / accumulator.f0.size();
        }

        @Override
        public Tuple2<HashSet<String>, Long> merge(Tuple2<HashSet<String>, Long> a,
                                                   Tuple2<HashSet<String>, Long> b) {
            // Only merging windows (e.g. session windows) call this; union the users and add the counts
            a.f0.addAll(b.f0);
            return Tuple2.of(a.f0, a.f1 + b.f1);
        }
    }
}
```

The code creates a sliding event-time window that computes the 10-second "PV per user", evaluated every 2 seconds. Because the aggregated state needs further processing, the window aggregation uses the more flexible AggregateFunction. To count UV, we keep all user ids seen in a HashSet, which deduplicates them automatically; PV works like a counter that is incremented for each record. The state is therefore a tuple containing a HashSet and a count (Tuple2<HashSet<String>, Long>): for each record, the user is added to the HashSet and count is incremented by 1. The count is the PV and the number of elements (size) in the HashSet is the UV, so the window's final output is their ratio.

No session windows are involved here, so merge() is never called and could in principle be left empty.
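Even though merge() is unused here, it matters as soon as the same accumulator runs on session windows. The plain-Java sketch below (class and method names hypothetical, no Flink dependency) replays the AvgPv accumulator on made-up clicks and shows why a correct merge takes the union of the two user sets: simply adding the two UV sizes would count a user who appears in both windows twice.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class PvUvSketch {
    // Mirrors Tuple2<HashSet<String>, Long> from AvgPv: (distinct users, page views)
    static final class PvUvAcc {
        final Set<String> users = new HashSet<>();
        long pv = 0L;
    }

    static PvUvAcc add(String user, PvUvAcc acc) {
        acc.users.add(user); // UV: the set deduplicates user ids
        acc.pv += 1;         // PV: every click counts
        return acc;
    }

    // Merge must union the user sets; summing the two set sizes would double-count shared users
    static PvUvAcc merge(PvUvAcc a, PvUvAcc b) {
        PvUvAcc merged = new PvUvAcc();
        merged.users.addAll(a.users);
        merged.users.addAll(b.users);
        merged.pv = a.pv + b.pv;
        return merged;
    }

    static double pvPerUv(PvUvAcc acc) {
        return (double) acc.pv / acc.users.size();
    }

    static PvUvAcc accumulate(List<String> clicks) {
        PvUvAcc acc = new PvUvAcc();
        for (String user : clicks) {
            acc = add(user, acc);
        }
        return acc;
    }

    public static void main(String[] args) {
        PvUvAcc first = accumulate(Arrays.asList("Mary", "Bob", "Mary"));
        PvUvAcc second = accumulate(Arrays.asList("Mary", "Alice"));
        System.out.println(pvPerUv(first));                // 1.5 (3 PV / 2 UV)
        System.out.println(pvPerUv(merge(first, second))); // 5 PV over 3 distinct users
    }
}
```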

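In addition, Flink provides a set of predefined simple aggregations for windows that can be called directly on a WindowedStream, mainly .sum(), .max(), .maxBy(), .min() and .minBy(). They are very similar to the simple aggregations on a KeyedStream. Under the hood they are implemented with Flink's built-in aggregation functions (internally the AggregationFunction class, which is a kind of ReduceFunction).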

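ReduceFunction and AggregateFunction show that incremental aggregation essentially applies stream processing to a bounded data set: the core idea is to keep an aggregation state and keep updating it as data arrives. This is what Flink calls "stateful stream processing". It greatly improves efficiency, which is why it is the most common approach in practice.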

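2. Full window functions

The other major category of window operations is full window functions. Unlike incremental aggregation functions, a full window function first collects the window's data and buffers it internally, and only when the window has to emit a result does it take the data out and compute.

This is clearly the typical batch-processing approach: accumulate data, and only when the whole batch has arrived start processing it. It is undoubtedly less efficient: all of the window's computation piles up at the instant the result is due, while nothing happens during the long collection phase before that. It is like slacking off all term and cramming the night before the exam; steady daily effort works better.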

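Why, then, do we need full window functions at all? Because in some scenarios the computation is only valid over the complete data, so incremental aggregation makes no sense; in addition, the output may need to include context information (such as the window's start time), which an incremental aggregation function cannot provide. We therefore need a richer way to compute over windows, and full window functions fill that role.

Flink also offers two kinds of full window functions: WindowFunction and ProcessWindowFunction.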

(1) WindowFunction

WindowFunction literally means "window function"; it is actually the older, general-purpose window function interface. We can call .apply() on a WindowedStream and pass an implementation of WindowFunction.

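```java
stream
        .keyBy(...)     // key selector omitted
        .window(...)    // window assigner omitted
        .apply(new MyWindowFunction());
```

Inside this class we can access an Iterable containing all of the window's data, as well as information about the window (Window) itself. The WindowFunction interface is defined in the source code as follows: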

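```java
public interface WindowFunction<IN, OUT, KEY, W extends Window> extends Function, Serializable {
    void apply(KEY key, W window, Iterable<IN> input, Collector<OUT> out) throws Exception;
}
```

When the window reaches its end time and a computation is triggered, this apply method is called. We can take the collected data from input, combine it with the key and window information, and emit results through the Collector. The Collector is used here exactly as in a FlatMapFunction.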

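As we can see, however, WindowFunction provides little context information and no advanced features. In fact, everything it does can be done with ProcessWindowFunction, so it may gradually be deprecated. In practice, simply use ProcessWindowFunction.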

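(2) ProcessWindowFunction

ProcessWindowFunction is the lowest-level general-purpose window function interface in the Window API. It is called "lowest-level" because, in addition to all of the window's data, ProcessWindowFunction also receives a context object (Context). This context object is very powerful: it exposes not only the window information but also the current time and state. The time includes both processing time and the event-time watermark. This makes ProcessWindowFunction more flexible and more feature-rich. In fact, ProcessWindowFunction belongs to Flink's low-level API, the process functions, which we cover in a later chapter.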

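Of course, these benefits come at the cost of performance and resources. As a full window function, ProcessWindowFunction also has to buffer all the data until the window fires. It is essentially an enhanced WindowFunction.

Its usage is very similar to WindowFunction: call .process() on a WindowedStream and pass an implementation of ProcessWindowFunction.

Below is an example that counts an e-commerce site's UV per window (hourly in a real deployment; the code uses 10-second windows so that results appear quickly):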

```java
import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.windowing.ProcessWindowFunction;
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.streaming.api.windowing.windows.TimeWindow;
import org.apache.flink.util.Collector;

import java.sql.Timestamp;
import java.time.Duration;
import java.util.HashSet;

public class UvCountByWindowExample {
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        SingleOutputStreamOperator<Event> stream = env.addSource(new ClickSource())
                .assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ZERO)
                        .withTimestampAssigner(new SerializableTimestampAssigner<Event>() {
                            @Override
                            public long extractTimestamp(Event element, long recordTimestamp) {
                                return element.timestamp;
                            }
                        }));

        // Send all data to the same partition and count UV per window
        stream.keyBy(data -> true)
                .window(TumblingEventTimeWindows.of(Time.seconds(10)))
                .process(new UvCountByWindow())
                .print();

        env.execute();
    }

    // Custom process window function
    public static class UvCountByWindow extends ProcessWindowFunction<Event, String, Boolean, TimeWindow> {
        @Override
        public void process(Boolean aBoolean, Context context, Iterable<Event> elements,
                            Collector<String> out) throws Exception {
            HashSet<String> userSet = new HashSet<>();
            // Iterate over all buffered data and deduplicate users with a Set
            for (Event event : elements) {
                userSet.add(event.user);
            }
            // Combine with the window information to build the output
            Long start = context.window().getStart();
            Long end = context.window().getEnd();
            out.collect("Window " + new Timestamp(start) + " ~ " + new Timestamp(end)
                    + " unique visitors: " + userSet.size());
        }
    }
}
```

Here we use event-time semantics. After defining a 10-second tumbling event-time window, we use a ProcessWindowFunction to define the processing logic. We create a HashSet and add the user id of every record in the window to it for deduplication; the final number of elements in the HashSet is the UV.

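Of course, apart from the window itself (which a WindowFunction could also provide), this example uses no other information from the context, so ProcessWindowFunction is not strictly necessary here. Because full window functions are less efficient, they are rarely used on their own; they are usually combined with an incremental aggregation function to implement the window computation together.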

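3. Combining incremental aggregation and full window functions

We have now seen how to use both kinds of window functions in the Window API, so let's briefly summarize.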

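Incremental aggregation functions compute more efficiently. Take the simplest example, summing a set of numbers. As a large amount of data keeps arriving, a full window function merely collects and buffers it without processing it; only when the window is about to close and emit does it iterate over all the data and add it up. With incremental aggregation, we keep only the current sum as state, perform one addition per arriving element to update it, and when the result is due simply take out the current state. Incremental aggregation effectively spreads the computation over the period in which the window collects data, so it is naturally more efficient and produces results with lower latency than full-window aggregation.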
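The difference in where the work happens can be shown with two plain-Java classes that compute the same sum, one incrementally and one by buffering like a full window function. Both classes are hypothetical illustrations, not Flink classes; the point is that the results agree while the buffered version holds every element until the window ends.

```java
import java.util.ArrayList;
import java.util.List;

class IncrementalVsFullWindowSketch {
    // Incremental style: one running value is the whole state; work happens as each element arrives
    static final class IncrementalSum {
        private long sum = 0L;

        void onElement(long value) {
            sum += value;
        }

        long onWindowEnd() {
            return sum;
        }
    }

    // Full-window style: every element is buffered; all the work happens when the window fires
    static final class FullWindowSum {
        private final List<Long> buffer = new ArrayList<>();

        void onElement(long value) {
            buffer.add(value);
        }

        long onWindowEnd() {
            long sum = 0L;
            for (long v : buffer) {
                sum += v;
            }
            return sum;
        }

        int bufferedElements() {
            return buffer.size();
        }
    }

    public static void main(String[] args) {
        IncrementalSum incremental = new IncrementalSum();
        FullWindowSum full = new FullWindowSum();
        for (long v = 1; v <= 1_000; v++) {
            incremental.onElement(v);
            full.onElement(v);
        }
        // Same answer, but the full-window version kept all 1000 elements in memory
        System.out.println(incremental.onWindowEnd() + " " + full.onWindowEnd() + " " + full.bufferedElements());
        // 500500 500500 1000
    }
}
```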

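The advantage of full window functions, on the other hand, is that they provide more information; they can be seen as the more "general" window operation. A full window function only collects the data and provides context information, preparing all the raw material, and what we do with it is entirely up to us. This makes window computation more flexible and more powerful.

In practice, therefore, we usually want the advantages of both and use them together. Flink's Window API supports exactly this.

Previously, when calling .reduce() and .aggregate() on a WindowedStream, we simply passed a ReduceFunction or an AggregateFunction for incremental aggregation. In fact, a second argument can also be passed: a full window function, either a WindowFunction or a ProcessWindowFunction.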

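```java
// ReduceFunction combined with WindowFunction
public <R> SingleOutputStreamOperator<R> reduce(
        ReduceFunction<T> reduceFunction, WindowFunction<T, R, K, W> function)

// ReduceFunction combined with ProcessWindowFunction
public <R> SingleOutputStreamOperator<R> reduce(
        ReduceFunction<T> reduceFunction, ProcessWindowFunction<T, R, K, W> function)

// AggregateFunction combined with WindowFunction
public <ACC, V, R> SingleOutputStreamOperator<R> aggregate(
        AggregateFunction<T, ACC, V> aggFunction, WindowFunction<V, R, K, W> windowFunction)

// AggregateFunction combined with ProcessWindowFunction
public <ACC, V, R> SingleOutputStreamOperator<R> aggregate(
        AggregateFunction<T, ACC, V> aggFunction,
        ProcessWindowFunction<V, R, K, W> windowFunction)
```

With such a call, the first argument (the incremental aggregation function) processes the window data, aggregating each element as it arrives; when the window fires, the processing logic of the second argument (the full window function) is invoked to output the result. Note that the full window function no longer buffers all the data: it takes the result of the incremental aggregation directly as its Iterable input. Normally that Iterable then contains only a single element.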
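A plain-Java sketch of that hand-off, with no Flink dependency: `aggregate` plays the role of the incremental aggregation function and `process` the role of the full window function, which receives a one-element Iterable. All names are hypothetical, and the window bounds are passed in by hand instead of coming from a Context.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

class CombinedWindowSketch {
    // Stage 1, incremental aggregation: the only state is a running count,
    // updated once per arriving click (createAccumulator / add / getResult)
    static long aggregate(List<String> clicks) {
        long accumulator = 0L;
        for (String click : clicks) {
            accumulator = accumulator + 1;
        }
        return accumulator;
    }

    // Stage 2, window function: its Iterable holds only the pre-aggregated result
    static String process(String url, long windowStart, long windowEnd, Iterable<Long> elements) {
        long count = elements.iterator().next();
        return url + " [" + windowStart + ", " + windowEnd + "): " + count;
    }

    // When the window fires, the aggregate's result is handed over as a one-element Iterable
    static String fire(String url, long windowStart, long windowEnd, List<String> clicks) {
        return process(url, windowStart, windowEnd, Collections.singletonList(aggregate(clicks)));
    }

    public static void main(String[] args) {
        System.out.println(fire("./home", 0L, 10_000L, Arrays.asList("Mary", "Bob", "Mary")));
        // ./home [0, 10000): 3
    }
}
```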

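Let's illustrate with a concrete example. Among the various website metrics, an important one is popular links; to find the popular URLs, we first need each link's "popularity". Usually a URL's page views (clicks) serve as its popularity. Here we count URL views over 10 seconds, updated every 5 seconds; for clarity, the window's start and end times should be output as well. We can define a sliding window and combine an incremental aggregation function with a full window function to obtain the result.

The implementation is as follows: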

```java
import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.functions.AggregateFunction;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.windowing.ProcessWindowFunction;
import org.apache.flink.streaming.api.windowing.assigners.SlidingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.streaming.api.windowing.windows.TimeWindow;
import org.apache.flink.util.Collector;

public class UrlViewCountExample {
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        SingleOutputStreamOperator<Event> stream = env.addSource(new ClickSource())
                .assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forMonotonousTimestamps()
                        .withTimestampAssigner(new SerializableTimestampAssigner<Event>() {
                            @Override
                            public long extractTimestamp(Event element, long recordTimestamp) {
                                return element.timestamp;
                            }
                        }));

        // Group by url and count in sliding windows
        stream.keyBy(data -> data.url)
                .window(SlidingEventTimeWindows.of(Time.seconds(10), Time.seconds(5)))
                // Pass both an incremental aggregate function and a full window function
                .aggregate(new UrlViewCountAgg(), new UrlViewCountResult())
                .print();

        env.execute();
    }

    // Custom incremental aggregate function: add one per record
    public static class UrlViewCountAgg implements AggregateFunction<Event, Long, Long> {
        @Override
        public Long createAccumulator() {
            return 0L;
        }

        @Override
        public Long add(Event value, Long accumulator) {
            return accumulator + 1;
        }

        @Override
        public Long getResult(Long accumulator) {
            return accumulator;
        }

        @Override
        public Long merge(Long a, Long b) {
            // Only merging windows call this; sliding windows never do, but a correct sum is safer than null
            return a + b;
        }
    }

    // Custom process window function: only wraps the window information
    public static class UrlViewCountResult extends ProcessWindowFunction<Long, UrlViewCount, String, TimeWindow> {
        @Override
        public void process(String url, Context context, Iterable<Long> elements,
                            Collector<UrlViewCount> out) throws Exception {
            // Combine with the window information to build the output
            Long start = context.window().getStart();
            Long end = context.window().getEnd();
            // The iterable holds exactly one element: the result of the incremental aggregate
            out.collect(new UrlViewCount(url, elements.iterator().next(), start, end));
        }
    }
}
```

For convenience, we define a separate POJO class, UrlViewCount, as the output type of the aggregation; it contains the url, the view count, and the window's start and end times.

```java
import java.sql.Timestamp;

public class UrlViewCount {
    public String url;
    public Long count;
    public Long windowStart;
    public Long windowEnd;

    public UrlViewCount() {
    }

    public UrlViewCount(String url, Long count, Long windowStart, Long windowEnd) {
        this.url = url;
        this.count = count;
        this.windowStart = windowStart;
        this.windowEnd = windowEnd;
    }

    @Override
    public String toString() {
        return "UrlViewCount{" +
                "url='" + url + '\'' +
                ", count=" + count +
                ", windowStart=" + new Timestamp(windowStart) +
                ", windowEnd=" + new Timestamp(windowEnd) +
                '}';
    }
}
```

The code uses an AggregateFunction for incremental aggregation, adding one to the count for each record; the result is handed to a ProcessWindowFunction, which wraps it with the window information into the UrlViewCount we want and outputs it as the final statistic.

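Note: ProcessWindowFunction is one of the process functions, which we cover in detail later. Here it is only used to wrap the incremental aggregation result with window information.

The main body of the window processing is still incremental aggregation, while the full window function provides extra information to enrich the output. This combination has the advantages of both kinds of window functions: it keeps processing efficient and timely while supporting richer use cases.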
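In the URL example, every click falls into two overlapping windows, because the 10-second size is twice the 5-second slide. The sketch below computes those windows in plain Java. It follows the same idea as Flink's sliding event-time assigner (start from the latest window that contains the timestamp and step back by the slide), simplified to a zero offset and non-negative millisecond timestamps; the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class SlidingWindowAssignSketch {
    // Returns the [start, end) windows that contain the timestamp, latest window first
    static List<long[]> assign(long timestamp, long size, long slide) {
        List<long[]> windows = new ArrayList<>();
        // Start of the latest window containing the timestamp (offset 0, non-negative timestamps)
        long lastStart = timestamp - (timestamp % slide);
        for (long start = lastStart; start > timestamp - size; start -= slide) {
            windows.add(new long[] {start, start + size});
        }
        return windows;
    }

    public static void main(String[] args) {
        // 10-second windows sliding every 5 seconds, as in UrlViewCountExample
        for (long[] w : assign(12_000L, 10_000L, 5_000L)) {
            System.out.println("[" + w[0] + ", " + w[1] + ")");
        }
        // [10000, 20000)
        // [5000, 15000)
    }
}
```

Each element lands in size / slide windows, which is why sliding windows multiply the aggregation work compared with tumbling windows of the same size.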

4. Window lifecycle

1. Window creation

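A window's type and basic information are specified by the window assigner, but windows are not created in advance; they are created on demand, driven by the data. The corresponding window is created when the first element that belongs to it arrives.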
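Data-driven creation can be sketched in plain Java: window state is created only when the first element for that window arrives, so a time range with no data never gets a window at all. The class is a hypothetical illustration assuming 10-second tumbling windows, not Flink's WindowOperator.

```java
import java.util.Map;
import java.util.TreeMap;

class LazyWindowCreationSketch {
    static final long SIZE_MS = 10_000L; // assumed 10-second tumbling windows

    // Window start -> element count; a window has no entry until its first element arrives
    private final Map<Long, Long> windows = new TreeMap<>();
    private int created = 0;

    void onElement(long timestamp) {
        long start = timestamp - (timestamp % SIZE_MS);
        if (!windows.containsKey(start)) {
            created++;              // first element for this window: create its state now
            windows.put(start, 0L);
        }
        windows.put(start, windows.get(start) + 1);
    }

    int createdWindows() {
        return created;
    }

    Map<Long, Long> openWindows() {
        return windows;
    }

    public static void main(String[] args) {
        LazyWindowCreationSketch operator = new LazyWindowCreationSketch();
        System.out.println(operator.createdWindows()); // 0: no data, no windows
        operator.onElement(1_000L);
        operator.onElement(3_000L);
        operator.onElement(25_000L); // no data for [10000, 20000), so that window never exists
        System.out.println(operator.openWindows());    // {0=2, 20000=1}
        System.out.println(operator.createdWindows()); // 2
    }
}
```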

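2. Triggering window computation

Besides the window assigner, each window also has its own window function and trigger. The window function, either an incremental aggregation function or a full window function, defines the computation performed on the window; the trigger specifies the condition under which the window function is invoked.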

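Different window types fire under different conditions. For example, a tumbling event-time window should fire when the watermark reaches the window's end time, like a bus that leaves on schedule; a count window fires when the number of elements in it reaches the defined size, like a bus that leaves once it is full. For this reason, each of Flink's predefined window types comes with a corresponding built-in trigger.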
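The two firing rules can be written as simple predicates. This is a plain-Java sketch with hypothetical names; the event-time rule compares the watermark with end - 1 because Flink's built-in event-time trigger uses the window's maxTimestamp(), which is the end timestamp minus one (the end itself is exclusive).

```java
class TriggerConditionSketch {
    // Event-time window: fire once the watermark reaches the window's last timestamp (end - 1)
    static boolean eventTimeShouldFire(long watermark, long windowEnd) {
        return watermark >= windowEnd - 1;
    }

    // Count window: fire as soon as the number of elements reaches the window size
    static boolean countShouldFire(long elementCount, long windowSize) {
        return elementCount >= windowSize;
    }

    public static void main(String[] args) {
        long windowEnd = 10_000L; // window [0, 10000)
        System.out.println(eventTimeShouldFire(9_998L, windowEnd)); // false
        System.out.println(eventTimeShouldFire(9_999L, windowEnd)); // true
        System.out.println(countShouldFire(2, 3));                 // false
        System.out.println(countShouldFire(3, 3));                 // true
    }
}
```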

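For event-time windows there is one more case besides firing on schedule at the end time. If an allowed lateness is set, then late data that arrives after the watermark has passed the window's end time, but before the maximum allowed lateness is reached, also triggers the window computation. It is like someone who missed the bus catching up with it: the bus stops and opens its doors again, and the new data is included in the result.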

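3. Window destruction

Normally, when time reaches the window's end, the computation is triggered, the result is emitted, and the state is then cleared and the window destroyed, so destruction can be regarded as happening at the same moment as firing. Note that in Flink only time windows (TimeWindow) have this destruction mechanism. Count windows (CountWindow) are implemented on top of the global window (GlobalWindow), which has no end time and therefore is never cleaned up this way, so count windows are never destroyed.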

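In special scenarios, destruction and firing happen at different times. Under event-time semantics with an allowed lateness set, the window is not destroyed when the watermark reaches the window's end time; it is only removed completely at the window's end time plus the user-specified allowed lateness.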
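The lifecycle described above, with allowed lateness, can be traced with a small plain-Java state machine. It is a hypothetical simplification of one event-time window [0, 10000) with an assumed allowed lateness of 2 seconds: the window fires when the watermark reaches end - 1, fires again for each late element, and is cleaned up once the watermark reaches end - 1 plus the allowed lateness, after which further elements are dropped.

```java
import java.util.ArrayList;
import java.util.List;

class AllowedLatenessSketch {
    static final long WINDOW_END = 10_000L; // event-time window [0, 10000)
    static final long LATENESS = 2_000L;    // assumed allowed lateness of 2 seconds

    private long count = 0L;
    private boolean fired = false;
    private boolean destroyed = false;
    final List<String> log = new ArrayList<>();

    // An element belonging to this window arrives
    void onElement() {
        if (destroyed) {
            log.add("dropped");            // state already cleaned up: the element is discarded
            return;
        }
        count++;
        if (fired) {
            log.add("late fire " + count); // late but within the allowed lateness: fire again
        }
    }

    // The watermark advances
    void onWatermark(long watermark) {
        long maxTimestamp = WINDOW_END - 1; // last timestamp that belongs to the window
        if (!fired && watermark >= maxTimestamp) {
            fired = true;
            log.add("fire " + count);       // regular, on-time firing
        }
        if (!destroyed && watermark >= maxTimestamp + LATENESS) {
            destroyed = true;
            count = 0L;                     // window state is released
            log.add("cleanup");
        }
    }

    public static void main(String[] args) {
        AllowedLatenessSketch window = new AllowedLatenessSketch();
        window.onElement();
        window.onElement();
        window.onWatermark(9_999L);  // watermark reaches end - 1
        window.onElement();          // late element within the allowed lateness
        window.onWatermark(11_999L); // end - 1 + lateness reached
        window.onElement();          // too late
        System.out.println(window.log); // [fire 2, late fire 3, cleanup, dropped]
    }
}
```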

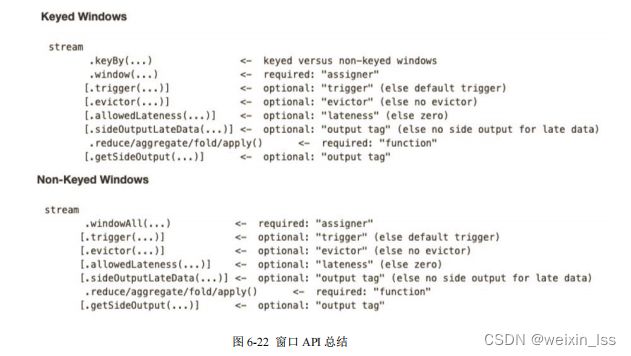

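4. Summary of Window API calls

By now we have a thorough understanding of windows in Flink and of how to call the Window API. Let's summarize everything in one diagram, as shown in Figure 6-22.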

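The Window API is first divided into two categories according to whether the stream is keyed. After keyBy, a KeyedStream can call .window() to declare keyed windows (Keyed Windows); without keyBy, a DataStream can call .windowAll() directly to declare non-keyed windows. All subsequent calls are exactly the same.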

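Next, the .window()/.windowAll() method defines the window assigner and yields a WindowedStream (an AllWindowedStream in the .windowAll() case); then one of the transformation methods (reduce/aggregate/apply/process) supplies the window function (ReduceFunction/AggregateFunction/WindowFunction/ProcessWindowFunction) that defines the concrete computation, and the transformation produces a DataStream again. These two parts are indispensable and are the most important components of the window operator (WindowOperator).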

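In addition, between these two steps we can call .trigger() on the WindowedStream to define a custom trigger, .evictor() to define an evictor, .allowedLateness() to specify the allowed lateness, and .sideOutputLateData() to write late data to a side output. These APIs are all optional and usually not needed. If a side output has been defined, it can be retrieved by calling .getSideOutput() on the DataStream produced by the window aggregation.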