教女朋友学时间序列

学习笔记

时间序列

一、创建时间序列

1.1 date_range

- 可以指定开始时间与周期

- H:小时

- D:天

- M:月

import pandas as pd

import numpy as np

从2016-07-01开始,周期为10,间隔为3天,生成的时间序列为下:

rng = pd.date_range('2016-07-01', periods = 10, freq = '3D')

rng

DatetimeIndex(['2016-07-01', '2016-07-04', '2016-07-07', '2016-07-10',

'2016-07-13', '2016-07-16', '2016-07-19', '2016-07-22',

'2016-07-25', '2016-07-28'],

dtype='datetime64[ns]', freq='3D')

其中,起始日期也可以写成’2016 Jul 1’、‘7/1/2016’、‘1/7/2016’、‘2016-07-01’、'2016/07/01’中的任何一种形式:

# TIMES #2016 Jul 1 7/1/2016 1/7/2016 2016-07-01 2016/07/01

rng = pd.date_range('2016 Jul 1', periods = 10, freq = '3D')

rng

DatetimeIndex(['2016-07-01', '2016-07-04', '2016-07-07', '2016-07-10',

'2016-07-13', '2016-07-16', '2016-07-19', '2016-07-22',

'2016-07-25', '2016-07-28'],

dtype='datetime64[ns]', freq='3D')

在Series中,指定index,将时间作为索引,产生随机序列:

time=pd.Series(np.random.randn(20),

index=pd.date_range(dt.datetime(2016,1,1),periods=20))

print(time)

2016-01-01 -0.129379

2016-01-02 0.164480

2016-01-03 -0.639117

2016-01-04 -0.427224

2016-01-05 2.055133

2016-01-06 1.116075

2016-01-07 0.357426

2016-01-08 0.274249

2016-01-09 0.834405

2016-01-10 -0.005444

2016-01-11 -0.134409

2016-01-12 0.249318

2016-01-13 -0.297842

2016-01-14 -0.128514

2016-01-15 0.063690

2016-01-16 -2.246031

2016-01-17 0.359552

2016-01-18 0.383030

2016-01-19 0.402717

2016-01-20 -0.694068

Freq: D, dtype: float64

1.2 truncate过滤

过滤掉2016-1-10之前的数据:

time.truncate(before='2016-1-10')

2016-01-10 -0.005444

2016-01-11 -0.134409

2016-01-12 0.249318

2016-01-13 -0.297842

2016-01-14 -0.128514

2016-01-15 0.063690

2016-01-16 -2.246031

2016-01-17 0.359552

2016-01-18 0.383030

2016-01-19 0.402717

2016-01-20 -0.694068

Freq: D, dtype: float64

过滤掉2016-1-10之后的数据:

time.truncate(after='2016-1-10')

2016-01-01 -0.129379

2016-01-02 0.164480

2016-01-03 -0.639117

2016-01-04 -0.427224

2016-01-05 2.055133

2016-01-06 1.116075

2016-01-07 0.357426

2016-01-08 0.274249

2016-01-09 0.834405

2016-01-10 -0.005444

Freq: D, dtype: float64

通过时间索引,提取数据:

print(time['2016-01-15'])

0.063690487247

通过切片,将一段时间间隔的数据提取出来:

print(time['2016-01-15':'2016-01-20'])

2016-01-15 0.063690

2016-01-16 -2.246031

2016-01-17 0.359552

2016-01-18 0.383030

2016-01-19 0.402717

2016-01-20 -0.694068

Freq: D, dtype: float64

我们也可以指定起始时间和终止时间,产生时间序列:

data=pd.date_range('2010-01-01','2011-01-01',freq='M')

print(data)

DatetimeIndex(['2010-01-31', '2010-02-28', '2010-03-31', '2010-04-30',

'2010-05-31', '2010-06-30', '2010-07-31', '2010-08-31',

'2010-09-30', '2010-10-31', '2010-11-30', '2010-12-31'],

dtype='datetime64[ns]', freq='M')

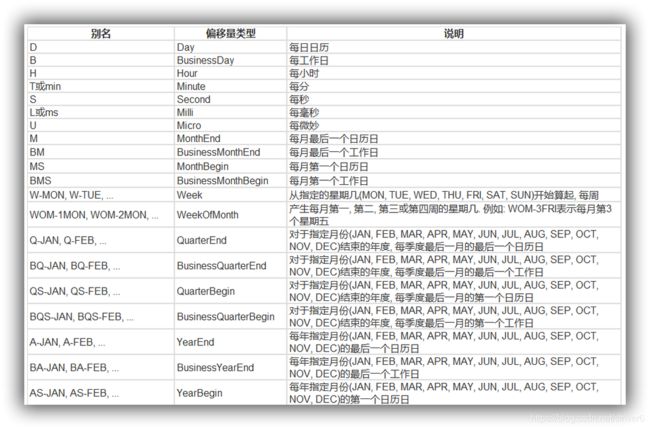

参数freq中可以选的数值:

1.3 时间戳

pd.Timestamp('2016-07-10')

Timestamp('2016-07-10 00:00:00')

可以指定更多细节

pd.Timestamp('2016-07-10 10')

Timestamp('2016-07-10 10:00:00')

pd.Timestamp('2016-07-10 10:15')

Timestamp('2016-07-10 10:15:00')

How much detail can you add?

t = pd.Timestamp('2016-07-10 10:15')

1.4 时间区间

2016年的一月份:

pd.Period('2016-01')

Period('2016-01', 'M')

2016年1月1号:

pd.Period('2016-01-01')

Period('2016-01-01', 'D')

1.5 时间加减

TIME OFFSETS

产生一个一天的时间偏移量:

pd.Timedelta('1 day')

Timedelta('1 days 00:00:00')

得到2016-01-01 10:10的后一天时刻:

pd.Period('2016-01-01 10:10') + pd.Timedelta('1 day')

Period('2016-01-02 10:10', 'T')

时间戳加减:

pd.Timestamp('2016-01-01 10:10') + pd.Timedelta('1 day')

Timestamp('2016-01-02 10:10:00')

加15 ns:

pd.Timestamp('2016-01-01 10:10') + pd.Timedelta('15 ns')

Timestamp('2016-01-01 10:10:00.000000015')

在时间间隔刹参数中,我们既可以写成25H,也可以写成1D1H这种通俗的表达:

p1 = pd.period_range('2016-01-01 10:10', freq = '25H', periods = 10)

p2 = pd.period_range('2016-01-01 10:10', freq = '1D1H', periods = 10)

p1

PeriodIndex(['2016-01-01 10:00', '2016-01-02 11:00', '2016-01-03 12:00',

'2016-01-04 13:00', '2016-01-05 14:00', '2016-01-06 15:00',

'2016-01-07 16:00', '2016-01-08 17:00', '2016-01-09 18:00',

'2016-01-10 19:00'],

dtype='period[25H]', freq='25H')

p2

PeriodIndex(['2016-01-01 10:00', '2016-01-02 11:00', '2016-01-03 12:00',

'2016-01-04 13:00', '2016-01-05 14:00', '2016-01-06 15:00',

'2016-01-07 16:00', '2016-01-08 17:00', '2016-01-09 18:00',

'2016-01-10 19:00'],

dtype='period[25H]', freq='25H')

1.6 指定索引

rng = pd.date_range('2016 Jul 1', periods = 10, freq = 'D')

rng

pd.Series(range(len(rng)), index = rng)

2016-07-01 0

2016-07-02 1

2016-07-03 2

2016-07-04 3

2016-07-05 4

2016-07-06 5

2016-07-07 6

2016-07-08 7

2016-07-09 8

2016-07-10 9

Freq: D, dtype: int32

构造任意的Series结构时间序列数据:

periods = [pd.Period('2016-01'), pd.Period('2016-02'), pd.Period('2016-03')]

ts = pd.Series(np.random.randn(len(periods)), index = periods)

ts

2016-01 -1.668569

2016-02 0.547351

2016-03 2.537183

Freq: M, dtype: float64

type(ts.index)

pandas.core.indexes.period.PeriodIndex

1.7 时间戳和时间周期可以转换

产生时间周期:

ts = pd.Series(range(10), pd.date_range('07-10-16 8:00', periods = 10, freq = 'H'))

ts

2016-07-10 08:00:00 0

2016-07-10 09:00:00 1

2016-07-10 10:00:00 2

2016-07-10 11:00:00 3

2016-07-10 12:00:00 4

2016-07-10 13:00:00 5

2016-07-10 14:00:00 6

2016-07-10 15:00:00 7

2016-07-10 16:00:00 8

2016-07-10 17:00:00 9

Freq: H, dtype: int32

将时间周期转化为时间戳:

ts_period = ts.to_period()

ts_period

2016-07-10 08:00 0

2016-07-10 09:00 1

2016-07-10 10:00 2

2016-07-10 11:00 3

2016-07-10 12:00 4

2016-07-10 13:00 5

2016-07-10 14:00 6

2016-07-10 15:00 7

2016-07-10 16:00 8

2016-07-10 17:00 9

Freq: H, dtype: int32

时间周期和时间戳区别:

对时间周期的切片操作:

ts_period['2016-07-10 08:30':'2016-07-10 11:45']

2016-07-10 08:00 0

2016-07-10 09:00 1

2016-07-10 10:00 2

2016-07-10 11:00 3

Freq: H, dtype: int32

对时间戳的切片操作结果:

ts['2016-07-10 08:30':'2016-07-10 11:45']

2016-07-10 09:00:00 1

2016-07-10 10:00:00 2

2016-07-10 11:00:00 3

Freq: H, dtype: int32

二、数据重采样

- 时间数据由一个频率转换到另一个频率

- 降采样:例如将365天数据变为12个月数据

- 升采样:相反

import pandas as pd

import numpy as np

从1/1/2011开始,时间间隔为1天,产生90个时间数据:

rng = pd.date_range('1/1/2011', periods=90, freq='D')

ts = pd.Series(np.random.randn(len(rng)), index=rng)

ts.head()

2011-01-01 -1.547635

2011-01-02 0.726423

2011-01-03 0.098872

2011-01-04 -0.513126

2011-01-05 0.308996

Freq: D, dtype: float64

2.1 降采样

将以上数据降采样为月数据,观察每个月数据之和:

ts.resample('M').sum()

2011-01-31 1.218451

2011-02-28 -8.133711

2011-03-31 -1.648535

Freq: M, dtype: float64

降采样为3天,并求和:

ts.resample('3D').sum()

2011-01-01 0.045643

2011-01-04 -2.255206

2011-01-07 0.571142

2011-01-10 0.835032

2011-01-13 -0.396766

2011-01-16 -1.156253

2011-01-19 -1.286884

2011-01-22 2.883952

2011-01-25 1.566908

2011-01-28 1.435563

2011-01-31 0.311565

2011-02-03 -2.541235

2011-02-06 0.317075

2011-02-09 1.598877

2011-02-12 -1.950509

2011-02-15 2.928312

2011-02-18 -0.733715

2011-02-21 1.674817

2011-02-24 -2.078872

2011-02-27 2.172320

2011-03-02 -2.022104

2011-03-05 -0.070356

2011-03-08 1.276671

2011-03-11 -2.835132

2011-03-14 -1.384113

2011-03-17 1.517565

2011-03-20 -0.550406

2011-03-23 0.773430

2011-03-26 2.244319

2011-03-29 2.951082

Freq: 3D, dtype: float64

计算降采样后数据均值:

day3Ts = ts.resample('3D').mean()

day3Ts

2011-01-01 -0.240780

2011-01-04 0.140980

2011-01-07 -0.041360

2011-01-10 -0.175434

2011-01-13 -0.348187

2011-01-16 -0.098252

2011-01-19 0.675025

2011-01-22 0.368577

2011-01-25 0.081462

2011-01-28 0.284014

2011-01-31 -0.217979

2011-02-03 -0.413876

2011-02-06 -0.801936

2011-02-09 -0.030326

2011-02-12 -0.139332

2011-02-15 -0.288397

2011-02-18 -0.842207

2011-02-21 0.689252

2011-02-24 -0.915056

2011-02-27 -0.164817

2011-03-02 0.273717

2011-03-05 -0.123553

2011-03-08 -0.402591

2011-03-11 0.115541

2011-03-14 -0.401329

2011-03-17 0.687958

2011-03-20 0.674243

2011-03-23 -1.724097

2011-03-26 0.313001

2011-03-29 0.211141

Freq: 3D, dtype: float64

2.2 升采样

直接升采样是有问题的,因为有数据缺失:

print(day3Ts.resample('D').asfreq())

2011-01-01 -0.240780

2011-01-02 NaN

2011-01-03 NaN

2011-01-04 0.140980

2011-01-05 NaN

2011-01-06 NaN

2011-01-07 -0.041360

2011-01-08 NaN

2011-01-09 NaN

2011-01-10 -0.175434

2011-01-11 NaN

2011-01-12 NaN

2011-01-13 -0.348187

2011-01-14 NaN

2011-01-15 NaN

2011-01-16 -0.098252

2011-01-17 NaN

2011-01-18 NaN

2011-01-19 0.675025

2011-01-20 NaN

2011-01-21 NaN

2011-01-22 0.368577

2011-01-23 NaN

2011-01-24 NaN

2011-01-25 0.081462

2011-01-26 NaN

2011-01-27 NaN

2011-01-28 0.284014

2011-01-29 NaN

2011-01-30 NaN

...

2011-02-28 NaN

2011-03-01 NaN

2011-03-02 0.273717

2011-03-03 NaN

2011-03-04 NaN

2011-03-05 -0.123553

2011-03-06 NaN

2011-03-07 NaN

2011-03-08 -0.402591

2011-03-09 NaN

2011-03-10 NaN

2011-03-11 0.115541

2011-03-12 NaN

2011-03-13 NaN

2011-03-14 -0.401329

2011-03-15 NaN

2011-03-16 NaN

2011-03-17 0.687958

2011-03-18 NaN

2011-03-19 NaN

2011-03-20 0.674243

2011-03-21 NaN

2011-03-22 NaN

2011-03-23 -1.724097

2011-03-24 NaN

2011-03-25 NaN

2011-03-26 0.313001

2011-03-27 NaN

2011-03-28 NaN

2011-03-29 0.211141

Freq: D, Length: 88, dtype: float64

这时,我们就要用到下面所讲的插值方法:

2.3 插值方法

- ffill 空值取前面的值

- bfill 空值取后面的值

- interpolate 线性取值

使用ffill插值:

day3Ts.resample('D').ffill(1)

2011-01-01 -0.240780

2011-01-02 -0.240780

2011-01-03 NaN

2011-01-04 0.140980

2011-01-05 0.140980

2011-01-06 NaN

2011-01-07 -0.041360

2011-01-08 -0.041360

2011-01-09 NaN

2011-01-10 -0.175434

2011-01-11 -0.175434

2011-01-12 NaN

2011-01-13 -0.348187

2011-01-14 -0.348187

2011-01-15 NaN

2011-01-16 -0.098252

2011-01-17 -0.098252

2011-01-18 NaN

2011-01-19 0.675025

2011-01-20 0.675025

2011-01-21 NaN

2011-01-22 0.368577

2011-01-23 0.368577

2011-01-24 NaN

2011-01-25 0.081462

2011-01-26 0.081462

2011-01-27 NaN

2011-01-28 0.284014

2011-01-29 0.284014

2011-01-30 NaN

...

2011-02-28 -0.164817

2011-03-01 NaN

2011-03-02 0.273717

2011-03-03 0.273717

2011-03-04 NaN

2011-03-05 -0.123553

2011-03-06 -0.123553

2011-03-07 NaN

2011-03-08 -0.402591

2011-03-09 -0.402591

2011-03-10 NaN

2011-03-11 0.115541

2011-03-12 0.115541

2011-03-13 NaN

2011-03-14 -0.401329

2011-03-15 -0.401329

2011-03-16 NaN

2011-03-17 0.687958

2011-03-18 0.687958

2011-03-19 NaN

2011-03-20 0.674243

2011-03-21 0.674243

2011-03-22 NaN

2011-03-23 -1.724097

2011-03-24 -1.724097

2011-03-25 NaN

2011-03-26 0.313001

2011-03-27 0.313001

2011-03-28 NaN

2011-03-29 0.211141

Freq: D, Length: 88, dtype: float64

使用bfill插值:

day3Ts.resample('D').bfill(1)

2011-01-01 0.015214

2011-01-02 NaN

2011-01-03 -0.751735

2011-01-04 -0.751735

2011-01-05 NaN

2011-01-06 0.190381

2011-01-07 0.190381

2011-01-08 NaN

2011-01-09 0.278344

2011-01-10 0.278344

2011-01-11 NaN

2011-01-12 -0.132255

2011-01-13 -0.132255

2011-01-14 NaN

2011-01-15 -0.385418

2011-01-16 -0.385418

2011-01-17 NaN

2011-01-18 -0.428961

2011-01-19 -0.428961

2011-01-20 NaN

2011-01-21 0.961317

2011-01-22 0.961317

2011-01-23 NaN

2011-01-24 0.522303

2011-01-25 0.522303

2011-01-26 NaN

2011-01-27 0.478521

2011-01-28 0.478521

2011-01-29 NaN

2011-01-30 0.103855

...

2011-02-28 NaN

2011-03-01 -0.674035

2011-03-02 -0.674035

2011-03-03 NaN

2011-03-04 -0.023452

2011-03-05 -0.023452

2011-03-06 NaN

2011-03-07 0.425557

2011-03-08 0.425557

2011-03-09 NaN

2011-03-10 -0.945044

2011-03-11 -0.945044

2011-03-12 NaN

2011-03-13 -0.461371

2011-03-14 -0.461371

2011-03-15 NaN

2011-03-16 0.505855

2011-03-17 0.505855

2011-03-18 NaN

2011-03-19 -0.183469

2011-03-20 -0.183469

2011-03-21 NaN

2011-03-22 0.257810

2011-03-23 0.257810

2011-03-24 NaN

2011-03-25 0.748106

2011-03-26 0.748106

2011-03-27 NaN

2011-03-28 0.983694

2011-03-29 0.983694

Freq: D, Length: 88, dtype: float64

使用interpolate线性取值:

day3Ts.resample('D').interpolate('linear')

2011-01-01 0.015214

2011-01-02 -0.240435

2011-01-03 -0.496085

2011-01-04 -0.751735

2011-01-05 -0.437697

2011-01-06 -0.123658

2011-01-07 0.190381

2011-01-08 0.219702

2011-01-09 0.249023

2011-01-10 0.278344

2011-01-11 0.141478

2011-01-12 0.004611

2011-01-13 -0.132255

2011-01-14 -0.216643

2011-01-15 -0.301030

2011-01-16 -0.385418

2011-01-17 -0.399932

2011-01-18 -0.414447

2011-01-19 -0.428961

2011-01-20 0.034465

2011-01-21 0.497891

2011-01-22 0.961317

2011-01-23 0.814979

2011-01-24 0.668641

2011-01-25 0.522303

2011-01-26 0.507709

2011-01-27 0.493115

2011-01-28 0.478521

2011-01-29 0.353632

2011-01-30 0.228744

...

2011-02-28 0.258060

2011-03-01 -0.207988

2011-03-02 -0.674035

2011-03-03 -0.457174

2011-03-04 -0.240313

2011-03-05 -0.023452

2011-03-06 0.126218

2011-03-07 0.275887

2011-03-08 0.425557

2011-03-09 -0.031310

2011-03-10 -0.488177

2011-03-11 -0.945044

2011-03-12 -0.783820

2011-03-13 -0.622595

2011-03-14 -0.461371

2011-03-15 -0.138962

2011-03-16 0.183446

2011-03-17 0.505855

2011-03-18 0.276080

2011-03-19 0.046306

2011-03-20 -0.183469

2011-03-21 -0.036376

2011-03-22 0.110717

2011-03-23 0.257810

2011-03-24 0.421242

2011-03-25 0.584674

2011-03-26 0.748106

2011-03-27 0.826636

2011-03-28 0.905165

2011-03-29 0.983694

Freq: D, Length: 88, dtype: float64

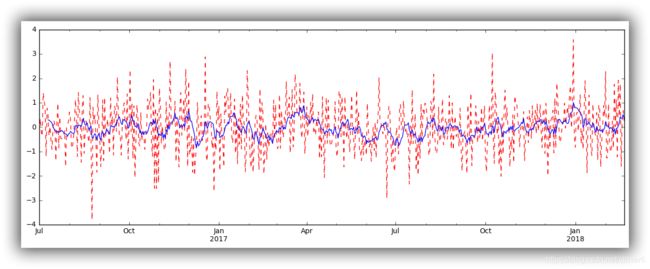

三、Pandas滑动窗口

为了提升数据的准确性,将某个点的取值扩大到包含这个点的一段区间,用区间来进行判断,这个区间就是窗口。例如想使用2011年1月1日的一个数据,单取这个时间点的数据当然是可行的,但是太过绝对,有没有更好的办法呢?可以选取2010年12月16日到2011年1月15日,通过求均值来评估1月1日这个点的值,2010-12-16到2011-1-15就是一个窗口,窗口的长度window=30.

移动窗口就是窗口向一端滑行,默认是从右往左,每次滑行并不是区间整块的滑行,而是一个单位一个单位的滑行。例如窗口2010-12-16到2011-1-15,下一个窗口并不是2011-1-15到2011-2-15,而是2010-12-17到2011-1-16(假设数据的截取是以天为单位),整体向右移动一个单位,而不是一个窗口。这样统计的每个值始终都是30单位的均值。

也就是我们在统计学中的移动平均法。

%matplotlib inline

import matplotlib.pylab

import numpy as np

import pandas as pd

指定六百个数据的序列:

df = pd.Series(np.random.randn(600), index = pd.date_range('7/1/2016', freq = 'D', periods = 600))

df.head()

2016-07-01 0.490170

2016-07-02 -0.381746

2016-07-03 0.765849

2016-07-04 -0.513293

2016-07-05 -2.284776

Freq: D, dtype: float64

指定该序列一个单位长度为10的滑块

r = df.rolling(window = 10)

r

Rolling [window=10,center=False,axis=0]

输出滑块内的平均值,窗口中的值从覆盖整个窗口的位置开始产生,在此之前即为NaN,举例如下:窗口大小为10,前9个都不足够为一个一个窗口的长度,因此都无法取值。

#r.max, r.median, r.std, r.skew, r.sum, r.var

print(r.mean())

2016-07-01 NaN

2016-07-02 NaN

2016-07-03 NaN

2016-07-04 NaN

2016-07-05 NaN

2016-07-06 NaN

2016-07-07 NaN

2016-07-08 NaN

2016-07-09 NaN

2016-07-10 -0.731681

2016-07-11 -0.741944

2016-07-12 -0.841750

2016-07-13 -0.824005

2016-07-14 -0.760116

2016-07-15 -0.607035

2016-07-16 -0.669249

2016-07-17 -0.440359

2016-07-18 -0.291586

2016-07-19 -0.226081

2016-07-20 0.099771

2016-07-21 -0.201909

2016-07-22 -0.136984

2016-07-23 -0.219586

2016-07-24 -0.175016

2016-07-25 -0.107554

2016-07-26 -0.065601

2016-07-27 -0.220129

2016-07-28 -0.085098

2016-07-29 -0.114384

2016-07-30 -0.363240

...

2018-01-22 0.076906

2018-01-23 0.133465

2018-01-24 0.301593

2018-01-25 0.147387

2018-01-26 0.046669

2018-01-27 0.211237

2018-01-28 0.305431

2018-01-29 0.263660

2018-01-30 0.050792

2018-01-31 0.035849

2018-02-01 0.106649

2018-02-02 0.231164

2018-02-03 -0.015120

2018-02-04 0.133317

2018-02-05 0.304489

2018-02-06 0.123427

2018-02-07 -0.133892

2018-02-08 -0.184399

2018-02-09 -0.080139

2018-02-10 -0.211622

2018-02-11 -0.177756

2018-02-12 -0.027888

2018-02-13 0.244256

2018-02-14 0.329209

2018-02-15 0.167602

2018-02-16 0.167141

2018-02-17 0.369997

2018-02-18 0.276210

2018-02-19 0.297868

2018-02-20 0.479243

Freq: D, Length: 600, dtype: float64

通过画图库来看原始序列与滑动窗口产生序列的关系图,原始数据用红色表示,移动平均后数据用蓝色点表示:

import matplotlib.pyplot as plt

%matplotlib inline

plt.figure(figsize=(15, 5))

df.plot(style='r--')

df.rolling(window=10).mean().plot(style='b')

可以看到,原始值浮动差异较大,而移动平均后数值较为平稳。

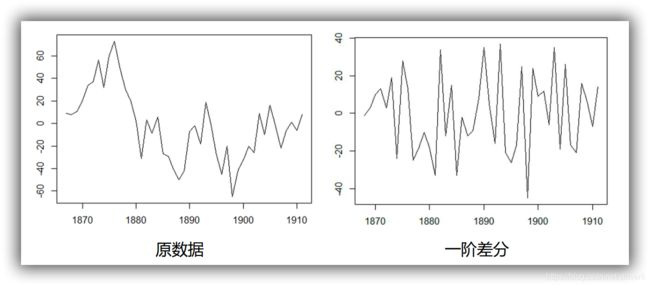

四、数据平稳性与差分法

平稳性:

- 平稳性就是要求经由样本时间序列所得到的拟合曲线在未来的一段期间内仍能顺着现有的形态“惯性”地延续下去

- 平稳性要求序列的均值和方差不发生明显变化

严平稳与弱平稳:

- 严平稳:严平稳表示的分布不随时间的改变而改变。

如:白噪声(正态),无论怎么取,都是期望为0,方差为1 - 弱平稳:期望与相关系数(依赖性)不变

未来某时刻的t的值Xt就要依赖于它的过去信息,所以需要依赖性

差分法:时间序列在t与t-1时刻的差值:

导入包,设置绘图风格:

%load_ext autoreload

%autoreload 2

%matplotlib inline

%config InlineBackend.figure_format='retina'

from __future__ import absolute_import, division, print_function

# http://www.lfd.uci.edu/~gohlke/pythonlibs/#xgboost

import sys

import os

import pandas as pd

import numpy as np

# # Remote Data Access

# import pandas_datareader.data as web

# import datetime

# # reference: https://pandas-datareader.readthedocs.io/en/latest/remote_data.html

# TSA from Statsmodels

import statsmodels.api as sm

import statsmodels.formula.api as smf

import statsmodels.tsa.api as smt

# Display and Plotting

import matplotlib.pylab as plt

import seaborn as sns

pd.set_option('display.float_format', lambda x: '%.5f' % x) # pandas

np.set_printoptions(precision=5, suppress=True) # numpy

pd.set_option('display.max_columns', 100)

pd.set_option('display.max_rows', 100)

# seaborn plotting style

sns.set(style='ticks', context='poster')

The autoreload extension is already loaded. To reload it, use:

%reload_ext autoreload

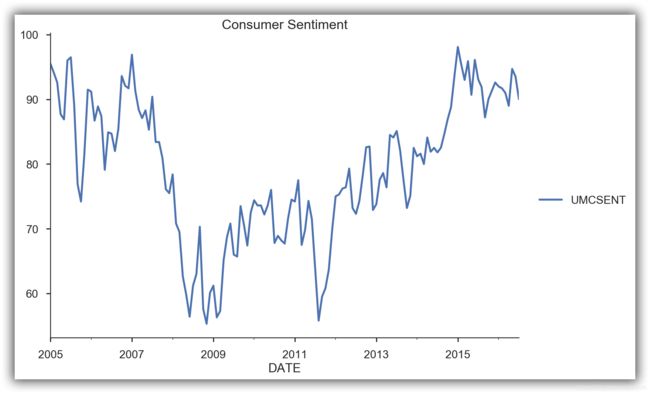

Read the data:美国消费者信心指数

Sentiment = 'sentiment.csv'

Sentiment = pd.read_csv(Sentiment, index_col=0, parse_dates=[0])

Sentiment.head()

.dataframe thead th {

text-align: left;

}

.dataframe tbody tr th {

vertical-align: top;

}

| UMCSENT | |

|---|---|

| DATE | |

| 2000-01-01 | 112.00000 |

| 2000-02-01 | 111.30000 |

| 2000-03-01 | 107.10000 |

| 2000-04-01 | 109.20000 |

| 2000-05-01 | 110.70000 |

Select the series from 2005 - 2016:

sentiment_short = Sentiment.loc['2005':'2016']

绘制消费者信心指数随着时间的变化情况:

sentiment_short.plot(figsize=(12,8))

plt.legend(bbox_to_anchor=(1.25, 0.5))

plt.title("Consumer Sentiment")

sns.despine()

可见数据变化较不稳定,我们来做一阶差分和二阶差分:

sentiment_short['diff_1'] = sentiment_short['UMCSENT'].diff(1)

sentiment_short['diff_2'] = sentiment_short['diff_1'].diff(1)

sentiment_short.plot(subplots=True, figsize=(18, 12))

D:\Anaconda\lib\site-packages\ipykernel_launcher.py:1: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: http://pandas.pydata.org/pandas-docs/stable/indexing.html#indexing-view-versus-copy

"""Entry point for launching an IPython kernel.

D:\Anaconda\lib\site-packages\ipykernel_launcher.py:3: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: http://pandas.pydata.org/pandas-docs/stable/indexing.html#indexing-view-versus-copy

This is separate from the ipykernel package so we can avoid doing imports until

array([,

,

], dtype=object)

五、ARIMA模型

5.1 AR模型

自回归模型(AR):

- 描述当前值与历史值之间的关系,用变量自身的历史时间数据对自身进行预测

- 自回归模型必须满足平稳性的要求

- p阶自回归过程的公式定义:

是当前值 是常数项 P 是阶数 是自相关系数 是误差

自回归模型的限制:

- 自回归模型是用自身的数据来进行预测

- 必须具有平稳性

- 必须具有自相关性,如果自相关系数(φi)小于0.5,则不宜采用

- 自回归只适用于预测与自身前期相关的现象

5.2 MA模型

移动平均模型(MA)

- 移动平均模型关注的是自回归模型中的误差项的累加

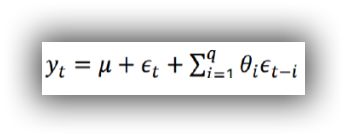

- q阶自回归过程的公式定义:

- 移动平均法能有效地消除预测中的随机波动

5.3 ARMA模型

自回归移动平均模型(ARMA)

- 自回归与移动平均的结合

- 公式定义:

5.4 ARIMA模型

ARIMA(p,d,q)模型全称为差分自回归移动平均模型

(Autoregressive Integrated Moving Average Model,简记ARIMA)

- AR是自回归, p为自回归项; MA为移动平均q为移动平均项数,d为时间序列成为平稳时所做的差分次数,一般做一阶差分就够了,很少有做二阶差分的

- 原理:将非平稳时间序列转化为平稳时间序列然后将因变量仅对它的滞后值以及随机误差项的现值和滞后值进行回归所建立的模型

5.5 相关函数评估(选择p、q值)方法

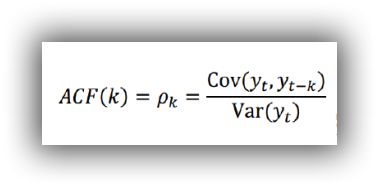

1.自相关函数ACF(autocorrelation function)

- 有序的随机变量序列与其自身相比较自相关函数反映了同一序列在不同时序的取值之间的相关性

- 公式:

- Pk的取值范围为[-1,1]

2.偏自相关函数(PACF)(partial autocorrelation function)

- 对于一个平稳AR§模型,求出滞后k自相关系数p(k)时实际上得到并不是x(t)与x(t-k)之间单纯的相关关系

- x(t)同时还会受到中间k-1个随机变量x(t-1)、x(t-2)、……、x(t-k+1)的影响而这k-1个随机变量又都和x(t-k)具有相关关系

所以自相关系数p(k)里实际掺杂了其他变量对x(t)与x(t-k)的影响 - 剔除了中间k-1个随机变量x(t-1)、x(t-2)、……、x(t-k+1)的干扰之后x(t-k)对x(t)影响的相关程度。

- ACF还包含了其他变量的影响而偏自相关系数PACF是严格这两个变量之间的相关性

3.ARIMA(p,d,q)阶数确定:

- 截尾:落在置信区间内(95%的点都符合该规则)

ARIMA(p,d,q)阶数确定:

- AR§ 看PACF

- MA(q) 看ACF

4.利用AIC与BIC准则: 选择参数p、q

- AIC:赤池信息准则(Akaike Information Criterion,AIC)

??? = 2? − 2ln(?) - BIC:贝叶斯信息准则(Bayesian Information Criterion,BIC)

??? = ??? ? − 2ln(?) - k为模型参数个数,n为样本数量,L为似然函数

5.模型残差检验:

- ARIMA模型的残差是否是平均值为0且方差为常数的正态分布

- QQ图:线性即正态分布

5.5 ARIMA建模流程:

- 将序列平稳(差分法确定d)

- p和q阶数确定:ACF与PACF

- ARIMA(p,d,q)

六、实战分析

6.1 数据’sentiment.csv’ARIMA模型

接上面数据定义:

del sentiment_short['diff_2']

del sentiment_short['diff_1']

sentiment_short.head()

print (type(sentiment_short))

绘制ACF图、PACF图确定p、q值,其中阴影部分代表p、q的置信区间:

fig = plt.figure(figsize=(12,8))

ax1 = fig.add_subplot(211)

fig = sm.graphics.tsa.plot_acf(sentiment_short, lags=20,ax=ax1)

ax1.xaxis.set_ticks_position('bottom')

fig.tight_layout();

ax2 = fig.add_subplot(212)

fig = sm.graphics.tsa.plot_pacf(sentiment_short, lags=20, ax=ax2)

ax2.xaxis.set_ticks_position('bottom')

fig.tight_layout();

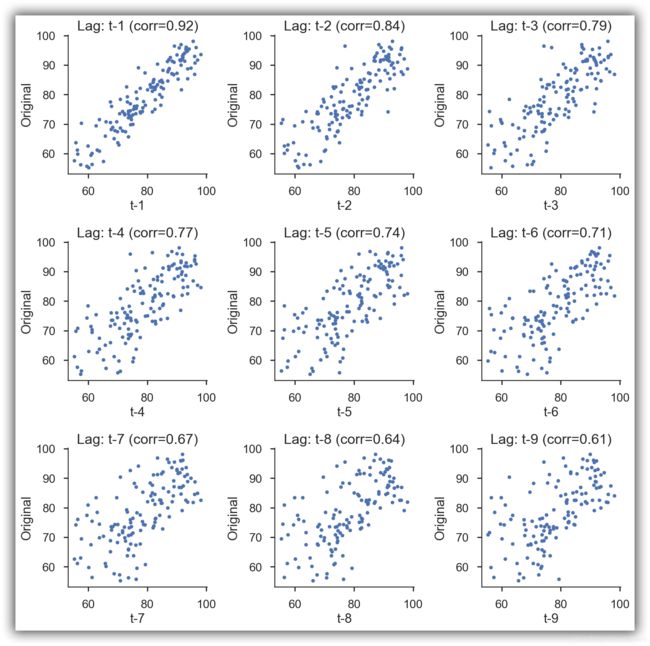

使用散点图绘制原始数据和k阶差分数据之间的关系,并求出相关系数:

lags=9

ncols=3

nrows=int(np.ceil(lags/ncols))

fig, axes = plt.subplots(ncols=ncols, nrows=nrows, figsize=(4*ncols, 4*nrows))

for ax, lag in zip(axes.flat, np.arange(1,lags+1, 1)):

lag_str = 't-{}'.format(lag)

X = (pd.concat([sentiment_short, sentiment_short.shift(-lag)], axis=1,

keys=['y'] + [lag_str]).dropna())

X.plot(ax=ax, kind='scatter', y='y', x=lag_str);

corr = X.corr().as_matrix()[0][1]

ax.set_ylabel('Original')

ax.set_title('Lag: {} (corr={:.2f})'.format(lag_str, corr));

ax.set_aspect('equal');

sns.despine();

fig.tight_layout();

在下图,分别绘制原始数据的残差图、直方图、ACF图和PACF图:

def tsplot(y, lags=None, title='', figsize=(14, 8)):

fig = plt.figure(figsize=figsize)

layout = (2, 2)

ts_ax = plt.subplot2grid(layout, (0, 0))

hist_ax = plt.subplot2grid(layout, (0, 1))

acf_ax = plt.subplot2grid(layout, (1, 0))

pacf_ax = plt.subplot2grid(layout, (1, 1))

y.plot(ax=ts_ax)

ts_ax.set_title(title)

y.plot(ax=hist_ax, kind='hist', bins=25)

hist_ax.set_title('Histogram')

smt.graphics.plot_acf(y, lags=lags, ax=acf_ax)

smt.graphics.plot_pacf(y, lags=lags, ax=pacf_ax)

[ax.set_xlim(0) for ax in [acf_ax, pacf_ax]]

sns.despine()

plt.tight_layout()

return ts_ax, acf_ax, pacf_ax

tsplot(sentiment_short, title='Consumer Sentiment', lags=36);

6.2 数据“series1.csv”ARIMA模型

导入包,载入新数据文件:

%load_ext autoreload

%autoreload 2

%matplotlib inline

%config InlineBackend.figure_format='retina'

from __future__ import absolute_import, division, print_function

import sys

import os

import pandas as pd

import numpy as np

# TSA from Statsmodels

import statsmodels.api as sm

import statsmodels.formula.api as smf

import statsmodels.tsa.api as smt

# Display and Plotting

import matplotlib.pylab as plt

import seaborn as sns

pd.set_option('display.float_format', lambda x: '%.5f' % x) # pandas

np.set_printoptions(precision=5, suppress=True) # numpy

pd.set_option('display.max_columns', 100)

pd.set_option('display.max_rows', 100)

# seaborn plotting style

sns.set(style='ticks', context='poster')

D:\Anaconda\lib\site-packages\statsmodels\compat\pandas.py:56: FutureWarning: The pandas.core.datetools module is deprecated and will be removed in a future version. Please use the pandas.tseries module instead.

from pandas.core import datetools

filename_ts = 'series1.csv'

ts_df = pd.read_csv(filename_ts, index_col=0, parse_dates=[0])

n_sample = ts_df.shape[0]

查看数据:

print(ts_df.shape)

print(ts_df.head())

(120, 1)

value

2006-06-01 0.21507

2006-07-01 1.14225

2006-08-01 0.08077

2006-09-01 -0.73952

2006-10-01 0.53552

Create a training sample and testing sample before analyzing the series

n_train=int(0.95*n_sample)+1

n_forecast=n_sample-n_train

#ts_df

ts_train = ts_df.iloc[:n_train]['value']

ts_test = ts_df.iloc[n_train:]['value']

print(ts_train.shape)

print(ts_test.shape)

print("Training Series:", "\n", ts_train.tail(), "\n")

print("Testing Series:", "\n", ts_test.head())

(115,)

(5,)

Training Series:

2015-08-01 0.60371

2015-09-01 -1.27372

2015-10-01 -0.93284

2015-11-01 0.08552

2015-12-01 1.20534

Name: value, dtype: float64

Testing Series:

2016-01-01 2.16411

2016-02-01 0.95226

2016-03-01 0.36485

2016-04-01 -2.26487

2016-05-01 -2.38168

Name: value, dtype: float64

分别绘制原始数据的残差图、直方图、ACF图和PACF图:

def tsplot(y, lags=None, title='', figsize=(14, 8)):

fig = plt.figure(figsize=figsize)

layout = (2, 2)

ts_ax = plt.subplot2grid(layout, (0, 0))

hist_ax = plt.subplot2grid(layout, (0, 1))

acf_ax = plt.subplot2grid(layout, (1, 0))

pacf_ax = plt.subplot2grid(layout, (1, 1))

y.plot(ax=ts_ax)

ts_ax.set_title(title)

y.plot(ax=hist_ax, kind='hist', bins=25)

hist_ax.set_title('Histogram')

smt.graphics.plot_acf(y, lags=lags, ax=acf_ax)

smt.graphics.plot_pacf(y, lags=lags, ax=pacf_ax)

[ax.set_xlim(0) for ax in [acf_ax, pacf_ax]]

sns.despine()

fig.tight_layout()

return ts_ax, acf_ax, pacf_ax

tsplot(ts_train, title='A Given Training Series', lags=20);

Model Estimation

Fit the model

arima200 = sm.tsa.SARIMAX(ts_train, order=(2,0,0))

model_results = arima200.fit()

计算AIC、BIC值:

import itertools

p_min = 0

d_min = 0

q_min = 0

p_max = 4

d_max = 0

q_max = 4

# Initialize a DataFrame to store the results

results_bic = pd.DataFrame(index=['AR{}'.format(i) for i in range(p_min,p_max+1)],

columns=['MA{}'.format(i) for i in range(q_min,q_max+1)])

for p,d,q in itertools.product(range(p_min,p_max+1),

range(d_min,d_max+1),

range(q_min,q_max+1)):

if p==0 and d==0 and q==0:

results_bic.loc['AR{}'.format(p), 'MA{}'.format(q)] = np.nan

continue

try:

model = sm.tsa.SARIMAX(ts_train, order=(p, d, q),

#enforce_stationarity=False,

#enforce_invertibility=False,

)

results = model.fit()

results_bic.loc['AR{}'.format(p), 'MA{}'.format(q)] = results.bic

except:

continue

results_bic = results_bic[results_bic.columns].astype(float)

D:\Anaconda\lib\site-packages\statsmodels\tsa\statespace\tools.py:405: RuntimeWarning: invalid value encountered in sqrt

x = r / ((1 - r**2)**0.5)

D:\Anaconda\lib\site-packages\statsmodels\base\model.py:496: ConvergenceWarning: Maximum Likelihood optimization failed to converge. Check mle_retvals

"Check mle_retvals", ConvergenceWarning)

D:\Anaconda\lib\site-packages\statsmodels\tools\numdiff.py:96: RuntimeWarning: invalid value encountered in maximum

h = EPS**(1. / s) * np.maximum(np.abs(x), 0.1)

D:\Anaconda\lib\site-packages\statsmodels\base\model.py:496: ConvergenceWarning: Maximum Likelihood optimization failed to converge. Check mle_retvals

"Check mle_retvals", ConvergenceWarning)

D:\Anaconda\lib\site-packages\statsmodels\base\model.py:496: ConvergenceWarning: Maximum Likelihood optimization failed to converge. Check mle_retvals

"Check mle_retvals", ConvergenceWarning)

D:\Anaconda\lib\site-packages\statsmodels\base\model.py:496: ConvergenceWarning: Maximum Likelihood optimization failed to converge. Check mle_retvals

"Check mle_retvals", ConvergenceWarning)

D:\Anaconda\lib\site-packages\statsmodels\base\model.py:496: ConvergenceWarning: Maximum Likelihood optimization failed to converge. Check mle_retvals

"Check mle_retvals", ConvergenceWarning)

D:\Anaconda\lib\site-packages\statsmodels\base\model.py:496: ConvergenceWarning: Maximum Likelihood optimization failed to converge. Check mle_retvals

"Check mle_retvals", ConvergenceWarning)

D:\Anaconda\lib\site-packages\statsmodels\base\model.py:496: ConvergenceWarning: Maximum Likelihood optimization failed to converge. Check mle_retvals

"Check mle_retvals", ConvergenceWarning)

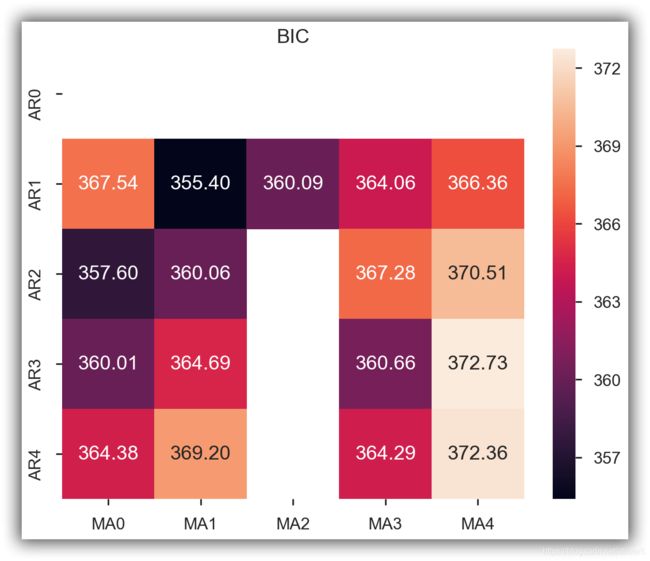

绘制AIC、BIC值热图:

fig, ax = plt.subplots(figsize=(10, 8))

ax = sns.heatmap(results_bic,

mask=results_bic.isnull(),

ax=ax,

annot=True,

fmt='.2f',

);

ax.set_title('BIC');

Alternative model selection method, limited to only searching AR and MA parameters

train_results = sm.tsa.arma_order_select_ic(ts_train, ic=['aic', 'bic'], trend='nc', max_ar=4, max_ma=4)

print('AIC', train_results.aic_min_order)

print('BIC', train_results.bic_min_order)

D:\Anaconda\lib\site-packages\statsmodels\base\model.py:473: HessianInversionWarning: Inverting hessian failed, no bse or cov_params available

'available', HessianInversionWarning)

D:\Anaconda\lib\site-packages\statsmodels\base\model.py:496: ConvergenceWarning: Maximum Likelihood optimization failed to converge. Check mle_retvals

"Check mle_retvals", ConvergenceWarning)

D:\Anaconda\lib\site-packages\statsmodels\base\model.py:496: ConvergenceWarning: Maximum Likelihood optimization failed to converge. Check mle_retvals

"Check mle_retvals", ConvergenceWarning)

AIC (4, 2)

BIC (1, 1)

D:\Anaconda\lib\site-packages\statsmodels\base\model.py:496: ConvergenceWarning: Maximum Likelihood optimization failed to converge. Check mle_retvals

"Check mle_retvals", ConvergenceWarning)

残差分析 正态分布 QQ图线性

model_results.plot_diagnostics(figsize=(16, 12));

D:\Anaconda\lib\site-packages\matplotlib\axes\_axes.py:6462: UserWarning: The 'normed' kwarg is deprecated, and has been replaced by the 'density' kwarg.

warnings.warn("The 'normed' kwarg is deprecated, and has been "

七、维基百科词条EDA

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import re

%matplotlib inline

附:数据文件

提取码:ryno

读取关于维基百科点击量的数据:

train = pd.read_csv('train_1.csv').fillna(0)

train.head()

.dataframe thead th {

text-align: left;

}

.dataframe tbody tr th {

vertical-align: top;

}

| Page | 2015-07-01 | 2015-07-02 | 2015-07-03 | 2015-07-04 | 2015-07-05 | 2015-07-06 | 2015-07-07 | 2015-07-08 | 2015-07-09 | ... | 2016-12-22 | 2016-12-23 | 2016-12-24 | 2016-12-25 | 2016-12-26 | 2016-12-27 | 2016-12-28 | 2016-12-29 | 2016-12-30 | 2016-12-31 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2NE1_zh.wikipedia.org_all-access_spider | 18.0 | 11.0 | 5.0 | 13.0 | 14.0 | 9.0 | 9.0 | 22.0 | 26.0 | ... | 32.0 | 63.0 | 15.0 | 26.0 | 14.0 | 20.0 | 22.0 | 19.0 | 18.0 | 20.0 |

| 1 | 2PM_zh.wikipedia.org_all-access_spider | 11.0 | 14.0 | 15.0 | 18.0 | 11.0 | 13.0 | 22.0 | 11.0 | 10.0 | ... | 17.0 | 42.0 | 28.0 | 15.0 | 9.0 | 30.0 | 52.0 | 45.0 | 26.0 | 20.0 |

| 2 | 3C_zh.wikipedia.org_all-access_spider | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 4.0 | 0.0 | 3.0 | 4.0 | ... | 3.0 | 1.0 | 1.0 | 7.0 | 4.0 | 4.0 | 6.0 | 3.0 | 4.0 | 17.0 |

| 3 | 4minute_zh.wikipedia.org_all-access_spider | 35.0 | 13.0 | 10.0 | 94.0 | 4.0 | 26.0 | 14.0 | 9.0 | 11.0 | ... | 32.0 | 10.0 | 26.0 | 27.0 | 16.0 | 11.0 | 17.0 | 19.0 | 10.0 | 11.0 |

| 4 | 52_Hz_I_Love_You_zh.wikipedia.org_all-access_s... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 48.0 | 9.0 | 25.0 | 13.0 | 3.0 | 11.0 | 27.0 | 13.0 | 36.0 | 10.0 |

5 rows × 551 columns

其中,左边一列是词条,右边的是随着时间变化的点击率。假若我们要通过这些记录数据预测以后时间的点击量,接下来先分析数据,对数据进行一些可视化展示:

查看数据信息:

train.info()

RangeIndex: 145063 entries, 0 to 145062

Columns: 551 entries, Page to 2016-12-31

dtypes: float64(550), object(1)

memory usage: 609.8+ MB

可以看到,数据量还是很大的,一共占了609.8+ MB,但这也只是取了维基百科的一小部分。一共有145063行,551列,即145063个词条的551个时间点下的点击量。

我们看到数据都是浮点型形式保存,因为没有小数,我们没必要保存为浮点型,并且浮点型是非常占用内存的。整体相对会好很多,我们可以将其转化为整型:

for col in train.columns[1:]:

train[col] = pd.to_numeric(train[col],downcast='integer')

train.head()

.dataframe thead th {

text-align: left;

}

.dataframe tbody tr th {

vertical-align: top;

}

| Page | 2015-07-01 | 2015-07-02 | 2015-07-03 | 2015-07-04 | 2015-07-05 | 2015-07-06 | 2015-07-07 | 2015-07-08 | 2015-07-09 | ... | 2016-12-22 | 2016-12-23 | 2016-12-24 | 2016-12-25 | 2016-12-26 | 2016-12-27 | 2016-12-28 | 2016-12-29 | 2016-12-30 | 2016-12-31 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2NE1_zh.wikipedia.org_all-access_spider | 18 | 11 | 5 | 13 | 14 | 9 | 9 | 22 | 26 | ... | 32 | 63 | 15 | 26 | 14 | 20 | 22 | 19 | 18 | 20 |

| 1 | 2PM_zh.wikipedia.org_all-access_spider | 11 | 14 | 15 | 18 | 11 | 13 | 22 | 11 | 10 | ... | 17 | 42 | 28 | 15 | 9 | 30 | 52 | 45 | 26 | 20 |

| 2 | 3C_zh.wikipedia.org_all-access_spider | 1 | 0 | 1 | 1 | 0 | 4 | 0 | 3 | 4 | ... | 3 | 1 | 1 | 7 | 4 | 4 | 6 | 3 | 4 | 17 |

| 3 | 4minute_zh.wikipedia.org_all-access_spider | 35 | 13 | 10 | 94 | 4 | 26 | 14 | 9 | 11 | ... | 32 | 10 | 26 | 27 | 16 | 11 | 17 | 19 | 10 | 11 |

| 4 | 52_Hz_I_Love_You_zh.wikipedia.org_all-access_s... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 48 | 9 | 25 | 13 | 3 | 11 | 27 | 13 | 36 | 10 |

5 rows × 551 columns

查看修改为整型后的数据信息:

train.info()

RangeIndex: 145063 entries, 0 to 145062

Columns: 551 entries, Page to 2016-12-31

dtypes: int32(550), object(1)

memory usage: 305.5+ MB

效果很明显,当数据从浮点型改为整型后,占用内存从609.8+ MB变为了305.5+ MB,缩小了一半。

统计不同国家出现的词条的频数:

def get_language(page):

res = re.search('[a-z][a-z].wikipedia.org',page)

#print (res.group()[0:2])

if res:

return res.group()[0:2]

return 'na'

train['lang'] = train.Page.map(get_language)

from collections import Counter

print(Counter(train.lang))

Counter({'en': 24108, 'ja': 20431, 'de': 18547, 'na': 17855, 'fr': 17802, 'zh': 17229, 'ru': 15022, 'es': 14069})

可见英国有24108个,中国有17229个,等等。当前国家出现错误的时候,我们指定为na值。

基于国家对所有词条进行划分:

lang_sets = {}

lang_sets['en'] = train[train.lang=='en'].iloc[:,0:-1]

lang_sets['ja'] = train[train.lang=='ja'].iloc[:,0:-1]

lang_sets['de'] = train[train.lang=='de'].iloc[:,0:-1]

lang_sets['na'] = train[train.lang=='na'].iloc[:,0:-1]

lang_sets['fr'] = train[train.lang=='fr'].iloc[:,0:-1]

lang_sets['zh'] = train[train.lang=='zh'].iloc[:,0:-1]

lang_sets['ru'] = train[train.lang=='ru'].iloc[:,0:-1]

lang_sets['es'] = train[train.lang=='es'].iloc[:,0:-1]

sums = {}

for key in lang_sets:

sums[key] = lang_sets[key].iloc[:,1:].sum(axis=0) / lang_sets[key].shape[0]

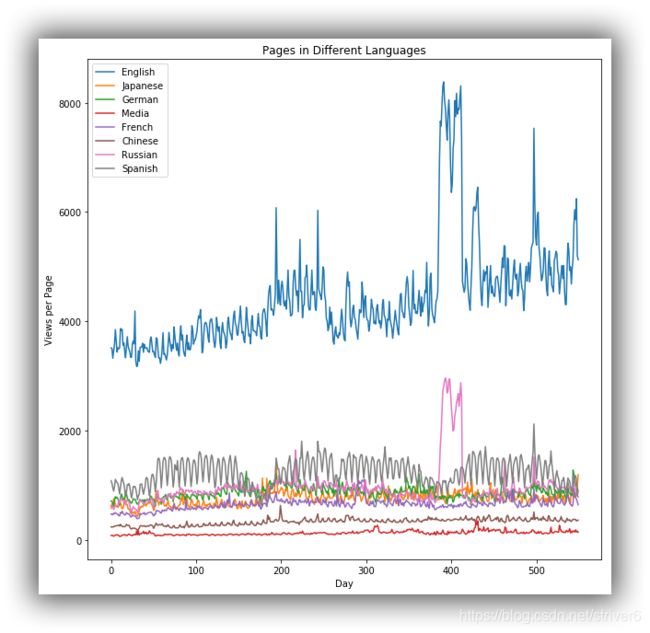

来观察不同国家点击量总数随时间变化的情况:

days = [r for r in range(sums['en'].shape[0])]

fig = plt.figure(1,figsize=[10,10])

plt.ylabel('Views per Page')

plt.xlabel('Day')

plt.title('Pages in Different Languages')

labels={'en':'English','ja':'Japanese','de':'German',

'na':'Media','fr':'French','zh':'Chinese',

'ru':'Russian','es':'Spanish'

}

for key in sums:

plt.plot(days,sums[key],label = labels[key] )

plt.legend()

plt.show()

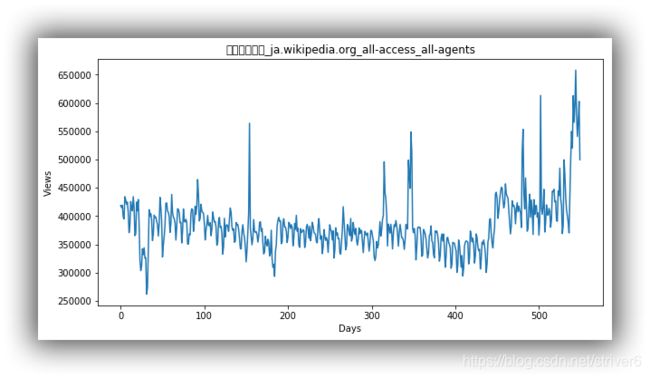

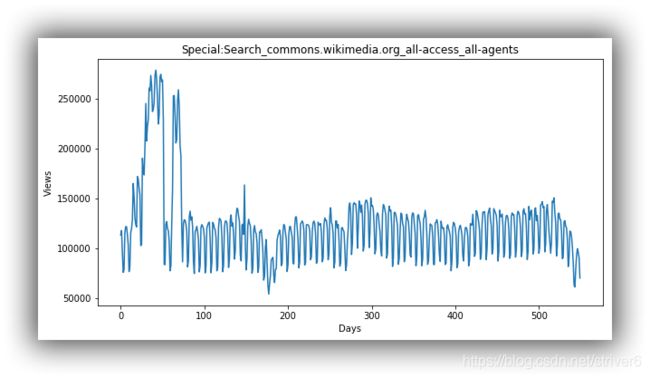

可以看出,英文要明显比其他语言要高一些。其他语言点击率曲线有时候会有奇怪,像粉色的俄罗斯,在第400天左右的时候发生了突变,阅读量开始猛增,可能是在这个时候发生了一些国民性的重大事件。

而中国整体点击量比较低,这也是合乎情理的。因为大家用的一般都是百度嘛,维基百科不常用。Goolge也被屏蔽了,百度百科处于垄断地位。

由于不同国家的词频点击量差异较大,所以我们可以分国家建模。

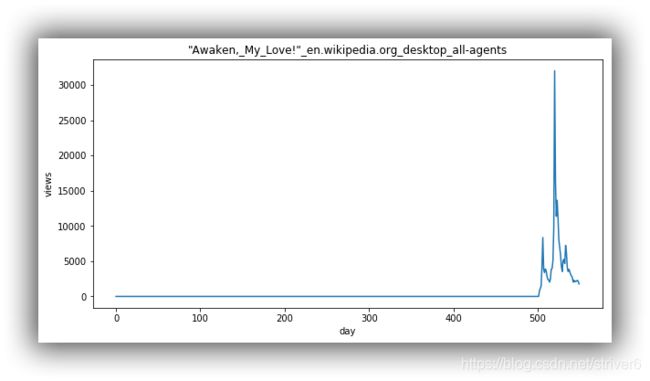

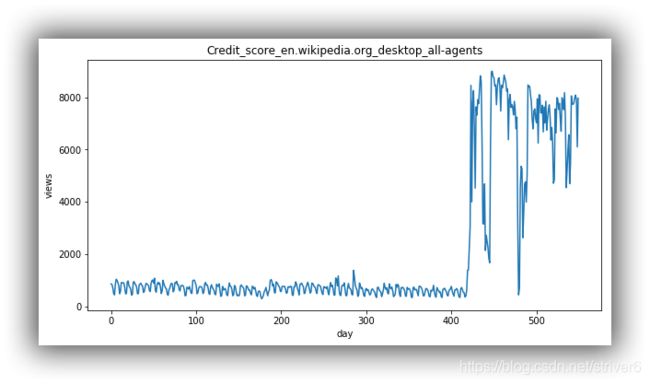

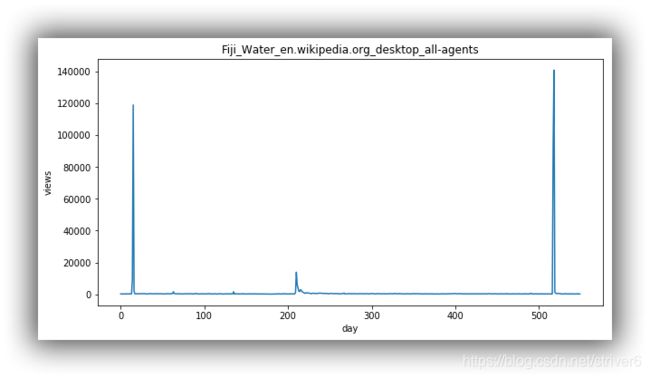

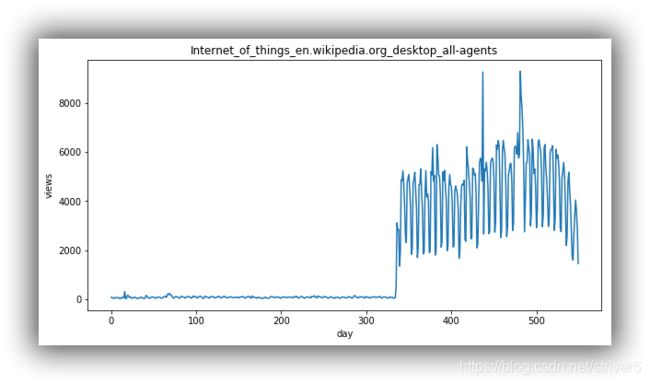

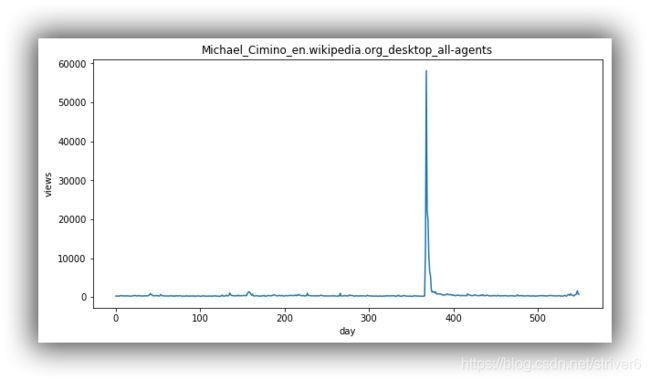

当然,我们也可以分词条进行建模,随机选取词条,观察点击量随时间变化情况:

def plot_entry(key,idx):

data = lang_sets[key].iloc[idx,1:]

fig = plt.figure(1,figsize=(10,5))

plt.plot(days,data)

plt.xlabel('day')

plt.ylabel('views')

plt.title(train.iloc[lang_sets[key].index[idx],0])

plt.show()

idx = [1, 5, 10, 50, 100, 250,500, 750,1000,1500,2000,3000,4000,5000]

for i in idx:

plot_entry('en',i)

可以看出,对于每个词条都是有着一定的时间热度的。比如说第一个,在前500天都是默默无闻的,突然在第500天爆发,且爆发量很大。针对很多词条来说,都会呈现出这样一种趋势。

我们也可以对不同国家的词条点击量进行排序,一次推断大众的关注点,即热点:

npages = 5

top_pages = {}

for key in lang_sets:

print(key)

sum_set = pd.DataFrame(lang_sets[key][['Page']])

sum_set['total'] = lang_sets[key].sum(axis=1)

sum_set = sum_set.sort_values('total',ascending=False)

print(sum_set.head(10))

top_pages[key] = sum_set.index[0]

print('\n\n')

en

Page total

38573 Main_Page_en.wikipedia.org_all-access_all-agents 12066181102

9774 Main_Page_en.wikipedia.org_desktop_all-agents 8774497458

74114 Main_Page_en.wikipedia.org_mobile-web_all-agents 3153984882

39180 Special:Search_en.wikipedia.org_all-access_all... 1304079353

10403 Special:Search_en.wikipedia.org_desktop_all-ag... 1011847748

74690 Special:Search_en.wikipedia.org_mobile-web_all... 292162839

39172 Special:Book_en.wikipedia.org_all-access_all-a... 133993144

10399 Special:Book_en.wikipedia.org_desktop_all-agents 133285908

33644 Main_Page_en.wikipedia.org_all-access_spider 129020407

34257 Special:Search_en.wikipedia.org_all-access_spider 124310206

ja

Page total

120336 メインページ_ja.wikipedia.org_all-access_all-agents 210753795

86431 メインページ_ja.wikipedia.org_desktop_all-agents 134147415

123025 特別:検索_ja.wikipedia.org_all-access_all-agents 70316929

89202 特別:検索_ja.wikipedia.org_desktop_all-agents 69215206

57309 メインページ_ja.wikipedia.org_mobile-web_all-agents 66459122

119609 特別:最近の更新_ja.wikipedia.org_all-access_all-agents 17662791

88897 特別:最近の更新_ja.wikipedia.org_desktop_all-agents 17627621

119625 真田信繁_ja.wikipedia.org_all-access_all-agents 10793039

123292 特別:外部リンク検索_ja.wikipedia.org_all-access_all-agents 10331191

89463 特別:外部リンク検索_ja.wikipedia.org_desktop_all-agents 10327917

de

Page total

139119 Wikipedia:Hauptseite_de.wikipedia.org_all-acce... 1603934248

116196 Wikipedia:Hauptseite_de.wikipedia.org_mobile-w... 1112689084

67049 Wikipedia:Hauptseite_de.wikipedia.org_desktop_... 426992426

140151 Spezial:Suche_de.wikipedia.org_all-access_all-... 223425944

66736 Spezial:Suche_de.wikipedia.org_desktop_all-agents 219636761

140147 Spezial:Anmelden_de.wikipedia.org_all-access_a... 40291806

138800 Special:Search_de.wikipedia.org_all-access_all... 39881543

68104 Spezial:Anmelden_de.wikipedia.org_desktop_all-... 35355226

68511 Special:MyPage/toolserverhelferleinconfig.js_d... 32584955

137765 Hauptseite_de.wikipedia.org_all-access_all-agents 31732458

na

Page total

45071 Special:Search_commons.wikimedia.org_all-acces... 67150638

81665 Special:Search_commons.wikimedia.org_desktop_a... 63349756

45056 Special:CreateAccount_commons.wikimedia.org_al... 53795386

45028 Main_Page_commons.wikimedia.org_all-access_all... 52732292

81644 Special:CreateAccount_commons.wikimedia.org_de... 48061029

81610 Main_Page_commons.wikimedia.org_desktop_all-ag... 39160923

46078 Special:RecentChangesLinked_commons.wikimedia.... 28306336

45078 Special:UploadWizard_commons.wikimedia.org_all... 23733805

81671 Special:UploadWizard_commons.wikimedia.org_des... 22008544

82680 Special:RecentChangesLinked_commons.wikimedia.... 21915202

fr

Page total

27330 Wikipédia:Accueil_principal_fr.wikipedia.org_a... 868480667

55104 Wikipédia:Accueil_principal_fr.wikipedia.org_m... 611302821

7344 Wikipédia:Accueil_principal_fr.wikipedia.org_d... 239589012

27825 Spécial:Recherche_fr.wikipedia.org_all-access_... 95666374

8221 Spécial:Recherche_fr.wikipedia.org_desktop_all... 88448938

26500 Sp?cial:Search_fr.wikipedia.org_all-access_all... 76194568

6978 Sp?cial:Search_fr.wikipedia.org_desktop_all-ag... 76185450

131296 Wikipédia:Accueil_principal_fr.wikipedia.org_a... 63860799

26993 Organisme_de_placement_collectif_en_valeurs_mo... 36647929

7213 Organisme_de_placement_collectif_en_valeurs_mo... 36624145

zh

Page total

28727 Wikipedia:首页_zh.wikipedia.org_all-access_all-a... 123694312

61350 Wikipedia:首页_zh.wikipedia.org_desktop_all-agents 66435641

105844 Wikipedia:首页_zh.wikipedia.org_mobile-web_all-a... 50887429

28728 Special:搜索_zh.wikipedia.org_all-access_all-agents 48678124

61351 Special:搜索_zh.wikipedia.org_desktop_all-agents 48203843

28089 Running_Man_zh.wikipedia.org_all-access_all-ag... 11485845

30960 Special:链接搜索_zh.wikipedia.org_all-access_all-a... 10320403

63510 Special:链接搜索_zh.wikipedia.org_desktop_all-agents 10320336

60711 Running_Man_zh.wikipedia.org_desktop_all-agents 7968443

30446 瑯琊榜_(電視劇)_zh.wikipedia.org_all-access_all-agents 5891589

ru

Page total

99322 Заглавная_страница_ru.wikipedia.org_all-access... 1086019452

103123 Заглавная_страница_ru.wikipedia.org_desktop_al... 742880016

17670 Заглавная_страница_ru.wikipedia.org_mobile-web... 327930433

99537 Служебная:Поиск_ru.wikipedia.org_all-access_al... 103764279

103349 Служебная:Поиск_ru.wikipedia.org_desktop_all-a... 98664171

100414 Служебная:Ссылки_сюда_ru.wikipedia.org_all-acc... 25102004

104195 Служебная:Ссылки_сюда_ru.wikipedia.org_desktop... 25058155

97670 Special:Search_ru.wikipedia.org_all-access_all... 24374572

101457 Special:Search_ru.wikipedia.org_desktop_all-ag... 21958472

98301 Служебная:Вход_ru.wikipedia.org_all-access_all... 12162587

es

Page total

92205 Wikipedia:Portada_es.wikipedia.org_all-access_... 751492304

95855 Wikipedia:Portada_es.wikipedia.org_mobile-web_... 565077372

90810 Especial:Buscar_es.wikipedia.org_all-access_al... 194491245

71199 Wikipedia:Portada_es.wikipedia.org_desktop_all... 165439354

69939 Especial:Buscar_es.wikipedia.org_desktop_all-a... 160431271

94389 Especial:Buscar_es.wikipedia.org_mobile-web_al... 34059966

90813 Especial:Entrar_es.wikipedia.org_all-access_al... 33983359

143440 Wikipedia:Portada_es.wikipedia.org_all-access_... 31615409

93094 Lali_Espósito_es.wikipedia.org_all-access_all-... 26602688

69942 Especial:Entrar_es.wikipedia.org_desktop_all-a... 25747141

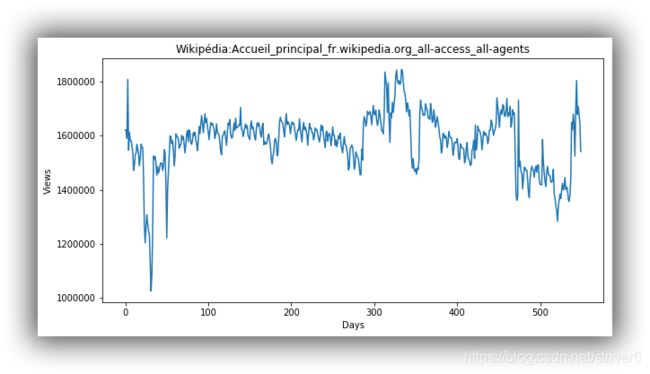

绘图观察这些热点随时间变化情况:

for key in top_pages:

fig = plt.figure(1,figsize=(10,5))

cols = train.columns

cols = cols[1:-1]

data = train.loc[top_pages[key],cols]

plt.plot(days,data)

plt.xlabel('Days')

plt.ylabel('Views')

plt.title(train.loc[top_pages[key],'Page'])

plt.show()