【第3次实验】卷积神经网络

卷积神经网络

文章目录

- 卷积神经网络

-

- 1.MNIST 数据集分类

- 2.CIFAR10数据分类

- 3.使用 VGG16 对 CIFAR10 分类

1.MNIST 数据集分类

构建简单的CNN对 mnist 数据集进行分类。同时,还会在实验中学习池化与卷积操作的基本作用。

深度卷积神经网络中,有如下特性

- 很多层: compositionality

- 卷积: locality + stationarity of images

- 池化: Invariance of object class to translations

代码过程:

- 引入库

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torchvision import datasets, transforms

import matplotlib.pyplot as plt

import numpy

# 一个函数,用来计算模型中有多少参数

def get_n_params(model):

np=0

for p in list(model.parameters()):

np += p.nelement()

return np

# 使用GPU训练,可以在菜单 "代码执行工具" -> "更改运行时类型" 里进行设置

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

- 加载数据 (MNIST)

PyTorch里包含了 MNIST, CIFAR10 等常用数据集,调用 torchvision.datasets 即可把这些数据由远程下载到本地,下面给出MNIST的使用方法:

torchvision.datasets.MNIST(root, train=True, transform=None, target_transform=None, download=False)

- root 为数据集下载到本地后的根目录,包括 training.pt 和 test.pt 文件

- train,如果设置为True,从training.pt创建数据集,否则从test.pt创建。

- download,如果设置为True, 从互联网下载数据并放到root文件夹下

- transform, 一种函数或变换,输入PIL图片,返回变换之后的数据。

- target_transform 一种函数或变换,输入目标,进行变换。

另外值得注意的是,DataLoader是一个比较重要的类,提供的常用操作有:batch_size(每个batch的大小), shuffle(是否进行随机打乱顺序的操作), num_workers(加载数据的时候使用几个子进程)

input_size = 28*28 # MNIST上的图像尺寸是 28x28

output_size = 10 # 类别为 0 到 9 的数字,因此为十类

train_loader = torch.utils.data.DataLoader(

datasets.MNIST('./data', train=True, download=True,

transform=transforms.Compose(

[transforms.ToTensor(),

transforms.Normalize((0.1307,), (0.3081,))])),

batch_size=64, shuffle=True)

test_loader = torch.utils.data.DataLoader(

datasets.MNIST('./data', train=False, transform=transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.1307,), (0.3081,))])),

batch_size=1000, shuffle=True)

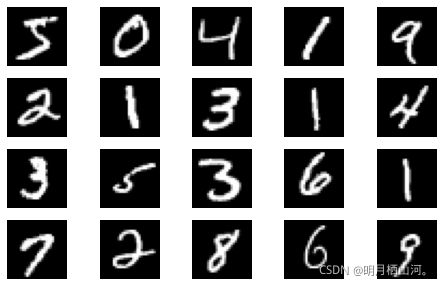

plt.figure(figsize=(8, 5))

for i in range(20):

plt.subplot(4, 5, i + 1)

image, _ = train_loader.dataset.__getitem__(i)

plt.imshow(image.squeeze().numpy(),'gray')

plt.axis('off');

Downloading http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz

Downloading http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz to ./data/MNIST/raw/train-images-idx3-ubyte.gz

9913344/? [00:00<00:00, 47589970.48it/s]

Extracting ./data/MNIST/raw/train-images-idx3-ubyte.gz to ./data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz

Downloading http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz to ./data/MNIST/raw/train-labels-idx1-ubyte.gz

29696/? [00:00<00:00, 658364.75it/s]

Extracting ./data/MNIST/raw/train-labels-idx1-ubyte.gz to ./data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz

Downloading http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz to ./data/MNIST/raw/t10k-images-idx3-ubyte.gz

1649664/? [00:00<00:00, 8994310.62it/s]

Extracting ./data/MNIST/raw/t10k-images-idx3-ubyte.gz to ./data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz

Downloading http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz to ./data/MNIST/raw/t10k-labels-idx1-ubyte.gz

5120/? [00:00<00:00, 116450.95it/s]

Extracting ./data/MNIST/raw/t10k-labels-idx1-ubyte.gz to ./data/MNIST/raw

/usr/local/lib/python3.7/dist-packages/torchvision/datasets/mnist.py:498: UserWarning: The given NumPy array is not writeable, and PyTorch does not support non-writeable tensors. This means you can write to the underlying (supposedly non-writeable) NumPy array using the tensor. You may want to copy the array to protect its data or make it writeable before converting it to a tensor. This type of warning will be suppressed for the rest of this program. (Triggered internally at /pytorch/torch/csrc/utils/tensor_numpy.cpp:180.)

return torch.from_numpy(parsed.astype(m[2], copy=False)).view(*s)

- 创建网络

定义网络时,需要继承nn.Module,并实现它的forward方法,把网络中具有可学习参数的层放在构造函数init中。

只要在nn.Module的子类中定义了forward函数,backward函数就会自动被实现(利用autograd)。

class FC2Layer(nn.Module):

def __init__(self, input_size, n_hidden, output_size):

# nn.Module子类的函数必须在构造函数中执行父类的构造函数

# 下式等价于nn.Module.__init__(self)

super(FC2Layer, self).__init__()

self.input_size = input_size

# 这里直接用 Sequential 就定义了网络,注意要和下面 CNN 的代码区分开

self.network = nn.Sequential(

nn.Linear(input_size, n_hidden),

nn.ReLU(),

nn.Linear(n_hidden, n_hidden),

nn.ReLU(),

nn.Linear(n_hidden, output_size),

nn.LogSoftmax(dim=1)

)

def forward(self, x):

# view一般出现在model类的forward函数中,用于改变输入或输出的形状

# x.view(-1, self.input_size) 的意思是多维的数据展成二维

# 代码指定二维数据的列数为 input_size=784,行数 -1 表示我们不想算,电脑会自己计算对应的数字

# 在 DataLoader 部分,我们可以看到 batch_size 是64,所以得到 x 的行数是64

# 大家可以加一行代码:print(x.cpu().numpy().shape)

# 训练过程中,就会看到 (64, 784) 的输出,和我们的预期是一致的

# forward 函数的作用是,指定网络的运行过程,这个全连接网络可能看不啥意义,

# 下面的CNN网络可以看出 forward 的作用。

x = x.view(-1, self.input_size)

return self.network(x)

class CNN(nn.Module):

def __init__(self, input_size, n_feature, output_size):

# 执行父类的构造函数,所有的网络都要这么写

super(CNN, self).__init__()

# 下面是网络里典型结构的一些定义,一般就是卷积和全连接

# 池化、ReLU一类的不用在这里定义

self.n_feature = n_feature

self.conv1 = nn.Conv2d(in_channels=1, out_channels=n_feature, kernel_size=5)

self.conv2 = nn.Conv2d(n_feature, n_feature, kernel_size=5)

self.fc1 = nn.Linear(n_feature*4*4, 50)

self.fc2 = nn.Linear(50, 10)

# 下面的 forward 函数,定义了网络的结构,按照一定顺序,把上面构建的一些结构组织起来

# 意思就是,conv1, conv2 等等的,可以多次重用

def forward(self, x, verbose=False):

x = self.conv1(x)

x = F.relu(x)

x = F.max_pool2d(x, kernel_size=2)

x = self.conv2(x)

x = F.relu(x)

x = F.max_pool2d(x, kernel_size=2)

x = x.view(-1, self.n_feature*4*4)

x = self.fc1(x)

x = F.relu(x)

x = self.fc2(x)

x = F.log_softmax(x, dim=1)

return x

定义训练和测试函数

# 训练函数

def train(model):

model.train()

# 主里从train_loader里,64个样本一个batch为单位提取样本进行训练

for batch_idx, (data, target) in enumerate(train_loader):

# 把数据送到GPU中

data, target = data.to(device), target.to(device)

optimizer.zero_grad()

output = model(data)

loss = F.nll_loss(output, target)

loss.backward()

optimizer.step()

if batch_idx % 100 == 0:

print('Train: [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(

batch_idx * len(data), len(train_loader.dataset),

100. * batch_idx / len(train_loader), loss.item()))

def test(model):

model.eval()

test_loss = 0

correct = 0

for data, target in test_loader:

# 把数据送到GPU中

data, target = data.to(device), target.to(device)

# 把数据送入模型,得到预测结果

output = model(data)

# 计算本次batch的损失,并加到 test_loss 中

test_loss += F.nll_loss(output, target, reduction='sum').item()

# get the index of the max log-probability,最后一层输出10个数,

# 值最大的那个即对应着分类结果,然后把分类结果保存在 pred 里

pred = output.data.max(1, keepdim=True)[1]

# 将 pred 与 target 相比,得到正确预测结果的数量,并加到 correct 中

# 这里需要注意一下 view_as ,意思是把 target 变成维度和 pred 一样的意思

correct += pred.eq(target.data.view_as(pred)).cpu().sum().item()

test_loss /= len(test_loader.dataset)

accuracy = 100. * correct / len(test_loader.dataset)

print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(

test_loss, correct, len(test_loader.dataset),

accuracy))

- 在小型全连接网络上训练(Fully-connected network)

n_hidden = 8 # number of hidden units

model_fnn = FC2Layer(input_size, n_hidden, output_size)

model_fnn.to(device)

optimizer = optim.SGD(model_fnn.parameters(), lr=0.01, momentum=0.5)

print('Number of parameters: {}'.format(get_n_params(model_fnn)))

train(model_fnn)

test(model_fnn)

运行结果

Number of parameters: 6442

Train: [0/60000 (0%)] Loss: 2.293912

Train: [6400/60000 (11%)] Loss: 1.654353

Train: [12800/60000 (21%)] Loss: 1.149511

Train: [19200/60000 (32%)] Loss: 0.747604

Train: [25600/60000 (43%)] Loss: 0.874187

Train: [32000/60000 (53%)] Loss: 0.601633

Train: [38400/60000 (64%)] Loss: 0.479874

Train: [44800/60000 (75%)] Loss: 0.188910

Train: [51200/60000 (85%)] Loss: 0.542274

Train: [57600/60000 (96%)] Loss: 0.245706

Test set: Average loss: 0.4099, Accuracy: 8776/10000 (88%)

- 在卷积神经网络上训练

需要注意的是,上在定义的CNN和全连接网络,拥有相同数量的模型参数

# Training settings

n_features = 6 # number of feature maps

model_cnn = CNN(input_size, n_features, output_size)

model_cnn.to(device)

optimizer = optim.SGD(model_cnn.parameters(), lr=0.01, momentum=0.5)

print('Number of parameters: {}'.format(get_n_params(model_cnn)))

train(model_cnn)

test(model_cnn)

运行结果

Number of parameters: 6422

Train: [0/60000 (0%)] Loss: 2.299771

/usr/local/lib/python3.7/dist-packages/torch/nn/functional.py:718: UserWarning: Named tensors and all their associated APIs are an experimental feature and subject to change. Please do not use them for anything important until they are released as stable. (Triggered internally at /pytorch/c10/core/TensorImpl.h:1156.)

return torch.max_pool2d(input, kernel_size, stride, padding, dilation, ceil_mode)

Train: [6400/60000 (11%)] Loss: 2.205741

Train: [12800/60000 (21%)] Loss: 0.529737

Train: [19200/60000 (32%)] Loss: 0.419136

Train: [25600/60000 (43%)] Loss: 0.410910

Train: [32000/60000 (53%)] Loss: 0.269722

Train: [38400/60000 (64%)] Loss: 0.297657

Train: [44800/60000 (75%)] Loss: 0.202161

Train: [51200/60000 (85%)] Loss: 0.224796

Train: [57600/60000 (96%)] Loss: 0.132175

Test set: Average loss: 0.1583, Accuracy: 9517/10000 (95%)

通过上面的测试结果,可以发现,含有相同参数的 CNN 效果要明显优于 简单的全连接网络,是因为 CNN 能够更好的挖掘图像中的信息,主要通过两个手段:

- 卷积:Locality and stationarity in images

- 池化:Builds in some translation invariance

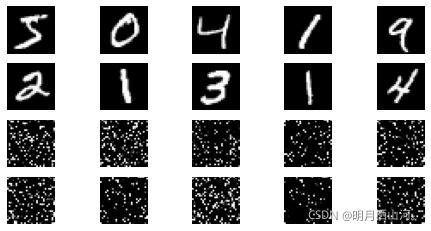

- 打乱像素顺序再次在两个网络上训练与测试

考虑到CNN在卷积与池化上的优良特性,如果我们把图像中的像素打乱顺序,这样 卷积 和 池化 就难以发挥作用了,为了验证这个想法,我们把图像中的像素打乱顺序再试试。

首先下面代码展示随机打乱像素顺序后,图像的形态:

# 这里解释一下 torch.randperm 函数,给定参数n,返回一个从0到n-1的随机整数排列

perm = torch.randperm(784)

plt.figure(figsize=(8, 4))

for i in range(10):

image, _ = train_loader.dataset.__getitem__(i)

# permute pixels

image_perm = image.view(-1, 28*28).clone()

image_perm = image_perm[:, perm]

image_perm = image_perm.view(-1, 1, 28, 28)

plt.subplot(4, 5, i + 1)

plt.imshow(image.squeeze().numpy(), 'gray')

plt.axis('off')

plt.subplot(4, 5, i + 11)

plt.imshow(image_perm.squeeze().numpy(), 'gray')

plt.axis('off')

重新定义训练与测试函数,我们写了两个函数 train_perm 和 test_perm,分别对应着加入像素打乱顺序的训练函数与测试函数。

与之前的训练与测试函数基本上完全相同,只是对 data 加入了打乱顺序操作。

在全连接网络上测试

perm = torch.randperm(784)

n_hidden = 8 # number of hidden units

model_fnn = FC2Layer(input_size, n_hidden, output_size)

model_fnn.to(device)

optimizer = optim.SGD(model_fnn.parameters(), lr=0.01, momentum=0.5)

print('Number of parameters: {}'.format(get_n_params(model_fnn)))

train_perm(model_fnn, perm)

test_perm(model_fnn, perm)

运行结果

Number of parameters: 6442

Train: [0/60000 (0%)] Loss: 2.326663

Train: [6400/60000 (11%)] Loss: 1.994924

Train: [12800/60000 (21%)] Loss: 1.676664

Train: [19200/60000 (32%)] Loss: 1.136867

Train: [25600/60000 (43%)] Loss: 0.700869

Train: [32000/60000 (53%)] Loss: 0.803411

Train: [38400/60000 (64%)] Loss: 0.510214

Train: [44800/60000 (75%)] Loss: 0.608723

Train: [51200/60000 (85%)] Loss: 0.668663

Train: [57600/60000 (96%)] Loss: 0.398532

Test set: Average loss: 0.5395, Accuracy: 8386/10000 (84%)

在卷积神经网络上测试

perm = torch.randperm(784)

n_features = 6 # number of feature maps

model_cnn = CNN(input_size, n_features, output_size)

model_cnn.to(device)

optimizer = optim.SGD(model_cnn.parameters(), lr=0.01, momentum=0.5)

print('Number of parameters: {}'.format(get_n_params(model_cnn)))

train_perm(model_cnn, perm)

test_perm(model_cnn, perm)

运行结果

Number of parameters: 6422

Train: [0/60000 (0%)] Loss: 2.299754

Train: [6400/60000 (11%)] Loss: 2.264622

Train: [12800/60000 (21%)] Loss: 2.161319

Train: [19200/60000 (32%)] Loss: 1.903410

Train: [25600/60000 (43%)] Loss: 1.121634

Train: [32000/60000 (53%)] Loss: 1.064046

Train: [38400/60000 (64%)] Loss: 0.838334

Train: [44800/60000 (75%)] Loss: 0.854761

Train: [51200/60000 (85%)] Loss: 0.487476

Train: [57600/60000 (96%)] Loss: 0.737010

Test set: Average loss: 0.5694, Accuracy: 8131/10000 (81%)

从打乱像素顺序的实验结果来看,全连接网络的性能基本上没有发生变化,但是 卷积神经网络的性能明显下降。

这是因为对于卷积神经网络,会利用像素的局部关系,但是打乱顺序以后,这些像素间的关系将无法得到利用。

2.CIFAR10数据分类

对于视觉数据,PyTorch 创建了一个叫做 totchvision 的包,该包含有支持加载类似Imagenet,CIFAR10,MNIST 等公共数据集的数据加载模块 torchvision.datasets 和支持加载图像数据数据转换模块 torch.utils.data.DataLoader。

下面将使用CIFAR10数据集,它包含十个类别:‘airplane’, ‘automobile’, ‘bird’, ‘cat’, ‘deer’, ‘dog’, ‘frog’, ‘horse’, ‘ship’, ‘truck’。CIFAR-10 中的图像尺寸为3x32x32,也就是RGB的3层颜色通道,每层通道内的尺寸为32*32。

测试源码

import torch

import torchvision

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

import numpy as np

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

# 使用GPU训练,可以在菜单 "代码执行工具" -> "更改运行时类型" 里进行设置

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

transform = transforms.Compose(

[transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

# 注意下面代码中:训练的 shuffle 是 True,测试的 shuffle 是 false

# 训练时可以打乱顺序增加多样性,测试是没有必要

trainset = torchvision.datasets.CIFAR10(root='./data', train=True,

download=True, transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=64,

shuffle=True, num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False,

download=True, transform=transform)

testloader = torch.utils.data.DataLoader(testset, batch_size=8,

shuffle=False, num_workers=2)

classes = ('plane', 'car', 'bird', 'cat',

'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

def imshow(img):

plt.figure(figsize=(8,8))

img = img / 2 + 0.5 # 转换到 [0,1] 之间

npimg = img.numpy()

plt.imshow(np.transpose(npimg, (1, 2, 0)))

plt.show()

# 得到一组图像

images, labels = iter(trainloader).next()

# 展示图像

imshow(torchvision.utils.make_grid(images))

# 展示第一行图像的标签

for j in range(8):

print(classes[labels[j]])

运行结果

Downloading https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz to ./data/cifar-10-python.tar.gz

170499072/? [00:03<00:00, 53391061.16it/s]

Extracting ./data/cifar-10-python.tar.gz to ./data

Files already downloaded and verified

truck

deer

frog

car

truck

dog

ship

dog

接下来定义网络,损失函数和优化器:

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(3, 6, 5)

self.pool = nn.MaxPool2d(2, 2)

self.conv2 = nn.Conv2d(6, 16, 5)

self.fc1 = nn.Linear(16 * 5 * 5, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

def forward(self, x):

x = self.pool(F.relu(self.conv1(x)))

x = self.pool(F.relu(self.conv2(x)))

x = x.view(-1, 16 * 5 * 5)

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

# 网络放到GPU上

net = Net().to(device)

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(net.parameters(), lr=0.001)

for epoch in range(10): # 重复多轮训练

for i, (inputs, labels) in enumerate(trainloader):

inputs = inputs.to(device)

labels = labels.to(device)

# 优化器梯度归零

optimizer.zero_grad()

# 正向传播 + 反向传播 + 优化

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

# 输出统计信息

if i % 100 == 0:

print('Epoch: %d Minibatch: %5d loss: %.3f' %(epoch + 1, i + 1, loss.item()))

print('Finished Training')

运行结果

Epoch: 1 Minibatch: 1 loss: 2.298

Epoch: 1 Minibatch: 101 loss: 1.910

Epoch: 1 Minibatch: 201 loss: 1.837

Epoch: 1 Minibatch: 301 loss: 1.939

Epoch: 1 Minibatch: 401 loss: 1.450

Epoch: 1 Minibatch: 501 loss: 1.532

Epoch: 1 Minibatch: 601 loss: 1.667

Epoch: 1 Minibatch: 701 loss: 1.557

Epoch: 2 Minibatch: 1 loss: 1.556

Epoch: 2 Minibatch: 101 loss: 1.428

Epoch: 2 Minibatch: 201 loss: 1.471

Epoch: 2 Minibatch: 301 loss: 1.387

Epoch: 2 Minibatch: 401 loss: 1.466

Epoch: 2 Minibatch: 501 loss: 1.244

Epoch: 2 Minibatch: 601 loss: 1.135

Epoch: 2 Minibatch: 701 loss: 1.375

Epoch: 3 Minibatch: 1 loss: 1.147

Epoch: 3 Minibatch: 101 loss: 1.235

Epoch: 3 Minibatch: 201 loss: 1.405

Epoch: 3 Minibatch: 301 loss: 1.214

Epoch: 3 Minibatch: 401 loss: 1.104

Epoch: 3 Minibatch: 501 loss: 1.124

Epoch: 3 Minibatch: 601 loss: 1.158

Epoch: 3 Minibatch: 701 loss: 1.286

Epoch: 4 Minibatch: 1 loss: 0.993

Epoch: 4 Minibatch: 101 loss: 1.037

Epoch: 4 Minibatch: 201 loss: 1.076

Epoch: 4 Minibatch: 301 loss: 1.505

Epoch: 4 Minibatch: 401 loss: 1.124

Epoch: 4 Minibatch: 501 loss: 1.367

Epoch: 4 Minibatch: 601 loss: 1.028

Epoch: 4 Minibatch: 701 loss: 1.038

Epoch: 5 Minibatch: 1 loss: 1.137

Epoch: 5 Minibatch: 101 loss: 1.229

Epoch: 5 Minibatch: 201 loss: 1.093

Epoch: 5 Minibatch: 301 loss: 1.135

Epoch: 5 Minibatch: 401 loss: 1.085

Epoch: 5 Minibatch: 501 loss: 0.970

Epoch: 5 Minibatch: 601 loss: 1.162

Epoch: 5 Minibatch: 701 loss: 1.061

Epoch: 6 Minibatch: 1 loss: 0.965

Epoch: 6 Minibatch: 101 loss: 1.003

Epoch: 6 Minibatch: 201 loss: 1.009

Epoch: 6 Minibatch: 301 loss: 1.013

Epoch: 6 Minibatch: 401 loss: 0.912

Epoch: 6 Minibatch: 501 loss: 0.948

Epoch: 6 Minibatch: 601 loss: 0.858

Epoch: 6 Minibatch: 701 loss: 0.919

Epoch: 7 Minibatch: 1 loss: 1.154

Epoch: 7 Minibatch: 101 loss: 0.921

Epoch: 7 Minibatch: 201 loss: 1.006

Epoch: 7 Minibatch: 301 loss: 0.992

Epoch: 7 Minibatch: 401 loss: 1.190

Epoch: 7 Minibatch: 501 loss: 0.856

Epoch: 7 Minibatch: 601 loss: 0.805

Epoch: 7 Minibatch: 701 loss: 1.037

Epoch: 8 Minibatch: 1 loss: 0.619

Epoch: 8 Minibatch: 101 loss: 0.805

Epoch: 8 Minibatch: 201 loss: 1.089

Epoch: 8 Minibatch: 301 loss: 1.126

Epoch: 8 Minibatch: 401 loss: 1.033

Epoch: 8 Minibatch: 501 loss: 1.072

Epoch: 8 Minibatch: 601 loss: 0.877

Epoch: 8 Minibatch: 701 loss: 0.975

Epoch: 9 Minibatch: 1 loss: 0.882

Epoch: 9 Minibatch: 101 loss: 0.610

Epoch: 9 Minibatch: 201 loss: 1.207

Epoch: 9 Minibatch: 301 loss: 0.768

Epoch: 9 Minibatch: 401 loss: 0.902

Epoch: 9 Minibatch: 501 loss: 1.019

Epoch: 9 Minibatch: 601 loss: 0.985

Epoch: 9 Minibatch: 701 loss: 0.997

Epoch: 10 Minibatch: 1 loss: 1.035

Epoch: 10 Minibatch: 101 loss: 0.847

Epoch: 10 Minibatch: 201 loss: 0.819

Epoch: 10 Minibatch: 301 loss: 0.854

Epoch: 10 Minibatch: 401 loss: 0.715

Epoch: 10 Minibatch: 501 loss: 0.708

Epoch: 10 Minibatch: 601 loss: 0.684

Epoch: 10 Minibatch: 701 loss: 0.789

Finished Training

从测试集中取出8张图片并输入模型,测试CNN把这些图片识别成什么:

# 得到一组图像

images, labels = iter(testloader).next()

# 展示图像

imshow(torchvision.utils.make_grid(images))

# 展示图像的标签

for j in range(8):

print(classes[labels[j]])

outputs = net(images.to(device))

_, predicted = torch.max(outputs, 1)

# 展示预测的结果

for j in range(8):

print(classes[predicted[j]])

原始:

cat

ship

ship

plane

frog

frog

car

frog

测试后:

cat

ship

plane

ship

frog

frog

car

deer

检测在整个网络集上的表现:

correct = 0

total = 0

for data in testloader:

images, labels = data

images, labels = images.to(device), labels.to(device)

outputs = net(images)

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: %d %%' % (

100 * correct / total))

运行结果

Accuracy of the network on the 10000 test images: 63 %

准确率还可以,通过改进网络结构,性能还可以进一步提升。

3.使用 VGG16 对 CIFAR10 分类

VGG是由Simonyan 和Zisserman在文献《Very Deep Convolutional Networks for Large Scale Image Recognition》中提出卷积神经网络模型,其名称来源于作者所在的牛津大学视觉几何组(Visual Geometry Group)的缩写。

该模型参加2014年的 ImageNet图像分类与定位挑战赛,取得了优异成绩:在分类任务上排名第二,在定位任务上排名第一。

- 定义 dataloader

这里的 transform,dataloader 和之前定义的有所不同。

代码

import torch

import torchvision

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

import numpy as np

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

# 使用GPU训练,可以在菜单 "代码执行工具" -> "更改运行时类型" 里进行设置

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

transform_train = transforms.Compose([

transforms.RandomCrop(32, padding=4),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))])

transform_test = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))])

trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform_train)

testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform_test)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=128, shuffle=True, num_workers=2)

testloader = torch.utils.data.DataLoader(testset, batch_size=128, shuffle=False, num_workers=2)

classes = ('plane', 'car', 'bird', 'cat',

'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

- VGG网络定义

模型实现代码:

class VGG(nn.Module):

def __init__(self):

super(VGG, self).__init__()

self.cfg = [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M']

self.features = self._make_layers(cfg)

self.classifier = nn.Linear(2048, 10)

def forward(self, x):

out = self.features(x)

out = out.view(out.size(0), -1)

out = self.classifier(out)

return out

def _make_layers(self, cfg):

layers = []

in_channels = 3

for x in cfg:

if x == 'M':

layers += [nn.MaxPool2d(kernel_size=2, stride=2)]

else:

layers += [nn.Conv2d(in_channels, x, kernel_size=3, padding=1),

nn.BatchNorm2d(x),

nn.ReLU(inplace=True)]

in_channels = x

layers += [nn.AvgPool2d(kernel_size=1, stride=1)]

return nn.Sequential(*layers)

# 初始化网络,根据实际需要,修改分类层。因为 tiny-imagenet 是对200类图像分类,这里把输出修改为200。

# 网络放到GPU上

net = VGG().to(device)

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(net.parameters(), lr=0.001)

- 网络训练

for epoch in range(10): # 重复多轮训练

for i, (inputs, labels) in enumerate(trainloader):

inputs = inputs.to(device)

labels = labels.to(device)

# 优化器梯度归零

optimizer.zero_grad()

# 正向传播 + 反向传播 + 优化

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

# 输出统计信息

if i % 100 == 0:

print('Epoch: %d Minibatch: %5d loss: %.3f' %(epoch + 1, i + 1, loss.item()))

print('Finished Training')

问题:

NameError Traceback (most recent call last)

<ipython-input-2-f1f643d5b514> in <module>()

58

59 # 网络放到GPU上

---> 60 net = VGG().to(device)

61 criterion = nn.CrossEntropyLoss()

62 optimizer = optim.Adam(net.parameters(), lr=0.001)

<ipython-input-2-f1f643d5b514> in __init__(self)

34 super(VGG, self).__init__()

35 self.cfg = [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M']

---> 36 self.features = self._make_layers(cfg)

37 self.classifier = nn.Linear(2048, 10)

38

NameError: name 'cfg' is not defined

将self.features = self._make_layers(cfg)改为self.features = self._make_layers(self.cfg)后,出现新报错:

CUDA error: CUBLAS_STATUS_INVALID_VALUE when calling `cublasSgemm( handle, opa, opb, m, n, k, &alpha, a, lda, b, ldb, &beta, c, ldc)`

网上查阅后得知,应该是self.classifier = nn.Linear(2048, 10)出现问题,应该为self.classifier = nn.Linear(512, 10).参考博客:

https://blog.csdn.net/xiaoxiaowantong/article/details/109689146

更改后可执行,运行结果如下

Files already downloaded and verified

Files already downloaded and verified

Epoch: 1 Minibatch: 1 loss: 2.647

Epoch: 1 Minibatch: 101 loss: 1.494

Epoch: 1 Minibatch: 201 loss: 1.205

Epoch: 1 Minibatch: 301 loss: 1.144

Epoch: 2 Minibatch: 1 loss: 0.847

Epoch: 2 Minibatch: 101 loss: 0.890

Epoch: 2 Minibatch: 201 loss: 0.920

Epoch: 2 Minibatch: 301 loss: 0.832

Epoch: 3 Minibatch: 1 loss: 0.839

Epoch: 3 Minibatch: 101 loss: 0.877

Epoch: 3 Minibatch: 201 loss: 0.727

Epoch: 3 Minibatch: 301 loss: 0.588

Epoch: 4 Minibatch: 1 loss: 0.744

Epoch: 4 Minibatch: 101 loss: 0.582

Epoch: 4 Minibatch: 201 loss: 0.563

Epoch: 4 Minibatch: 301 loss: 0.576

Epoch: 5 Minibatch: 1 loss: 0.622

Epoch: 5 Minibatch: 101 loss: 0.545

Epoch: 5 Minibatch: 201 loss: 0.413

Epoch: 5 Minibatch: 301 loss: 0.525

Epoch: 6 Minibatch: 1 loss: 0.493

Epoch: 6 Minibatch: 101 loss: 0.555

Epoch: 6 Minibatch: 201 loss: 0.548

Epoch: 6 Minibatch: 301 loss: 0.559

Epoch: 7 Minibatch: 1 loss: 0.414

Epoch: 7 Minibatch: 101 loss: 0.324

Epoch: 7 Minibatch: 201 loss: 0.653

Epoch: 7 Minibatch: 301 loss: 0.494

Epoch: 8 Minibatch: 1 loss: 0.338

Epoch: 8 Minibatch: 101 loss: 0.487

Epoch: 8 Minibatch: 201 loss: 0.526

Epoch: 8 Minibatch: 301 loss: 0.425

Epoch: 9 Minibatch: 1 loss: 0.343

Epoch: 9 Minibatch: 101 loss: 0.485

Epoch: 9 Minibatch: 201 loss: 0.367

Epoch: 9 Minibatch: 301 loss: 0.414

Epoch: 10 Minibatch: 1 loss: 0.507

Epoch: 10 Minibatch: 101 loss: 0.465

Epoch: 10 Minibatch: 201 loss: 0.414

Epoch: 10 Minibatch: 301 loss: 0.334

Finished Training

- 测试验证准确率:

correct = 0

total = 0

for data in testloader:

images, labels = data

images, labels = images.to(device), labels.to(device)

outputs = net(images)

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: %.2f %%' % (

100 * correct / total))

执行结果

Accuracy of the network on the 10000 test images: 84.16 %

易得,使用一个简化版的VGG网络,准确率都能从63%提升至84.16%