KMeans Clustering with PySpark

01. Import the modules and create a SparkSession

from pyspark.sql import SparkSession
from pyspark.ml.feature import VectorAssembler
from pyspark.ml.clustering import KMeans

# Local session; spark.driver.host sets the driver's host address,
# and the console progress bar is disabled to keep the output clean
spark = SparkSession.builder.config("spark.driver.host", "192.168.1.4") \
    .config("spark.ui.showConsoleProgress", "false") \
    .appName("seed").master("local[*]").getOrCreate()

02. Load the data and inspect its contents and schema

data = spark.read.csv("/mnt/e/win_ubuntu/Code/DataSet/MLdataset/seeds_dataset.csv",header=True,inferSchema=True)

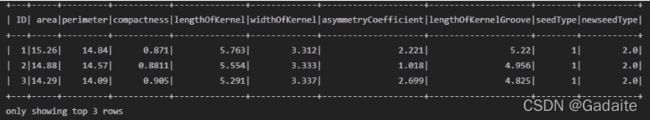

data.show(3)

data.printSchema()

Output:

root
 |-- ID: integer (nullable = true)
 |-- area: double (nullable = true)
 |-- perimeter: double (nullable = true)
 |-- compactness: double (nullable = true)
 |-- lengthOfKernel: double (nullable = true)
 |-- widthOfKernel: double (nullable = true)
 |-- asymmetryCoefficient: double (nullable = true)
 |-- lengthOfKernelGroove: double (nullable = true)
 |-- seedType: integer (nullable = true)

03. List the column names

data.columns

Output:

['ID', 'area', 'perimeter', 'compactness', 'lengthOfKernel', 'widthOfKernel', 'asymmetryCoefficient', 'lengthOfKernelGroove', 'seedType']

04. Convert the class label into a numeric index and check the result

from pyspark.ml.feature import StringIndexer

# StringIndexer maps each distinct seedType value to a double index (0.0, 1.0, ...)
stringIndexer = StringIndexer(inputCol="seedType", outputCol="newseedType")
model = stringIndexer.fit(data)
data = model.transform(data)
data.show(3)
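StringIndexer does not keep the original values: it ranks the distinct values and numbers them. The plain-Python sketch below (no Spark needed) mimics the default `frequencyDesc` ordering, assuming ties are broken alphabetically as recent Spark documentation describes, and treating numeric input as strings because StringIndexer casts numeric columns to string. `string_index` is a hypothetical helper. Since every seedType occurs 70 times in this dataset, the indices are decided purely by tie-breaking, so the new values need not match the raw seedType numbers.

```python
from collections import Counter


def string_index(values):
    """Hypothetical sketch of StringIndexer's default label ordering.

    Assumes stringOrderType="frequencyDesc" with ties broken alphabetically on
    the string form; numeric values are compared as strings, as StringIndexer
    casts numeric input columns to string.
    """
    counts = Counter(str(v) for v in values)
    # Most frequent label first; equal counts fall back to alphabetical order
    ordered = sorted(counts, key=lambda s: (-counts[s], s))
    mapping = {label: float(i) for i, label in enumerate(ordered)}
    return [mapping[str(v)] for v in values], mapping


indexed, mapping = string_index([3, 1, 1, 2, 3, 1])
print(mapping)  # {'1': 0.0, '3': 1.0, '2': 2.0}
print(indexed)  # [1.0, 0.0, 0.0, 2.0, 1.0, 0.0]
```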

Output:

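05. Keep only the feature columns and the indexed label (dropping ID and the original seedType), then rename newseedType back to seedType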

data = data.select('area', 'perimeter', 'compactness', 'lengthOfKernel', 'widthOfKernel',
                   'asymmetryCoefficient', 'lengthOfKernelGroove', 'newseedType') \
    .withColumnRenamed("newseedType", "seedType")

06. Assemble the feature columns into a single vector, keep only the features and the label, and show the first three rows

# Combine the seven numeric measurements into one vector column named "features"
vectorAssembler = VectorAssembler(inputCols=['area', 'perimeter', 'compactness',
                                             'lengthOfKernel', 'widthOfKernel', 'asymmetryCoefficient',
                                             'lengthOfKernelGroove'], outputCol="features")
tansdata = vectorAssembler.transform(data).select("features", "seedType")
tansdata.show(3)

Output:

+--------------------+--------+
|            features|seedType|
+--------------------+--------+
|[15.26,14.84,0.87...|     2.0|
|[14.88,14.57,0.88...|     2.0|
|[14.29,14.09,0.90...|     2.0|
+--------------------+--------+
only showing top 3 rows
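VectorAssembler concatenates the listed columns of each row, in the order given, into one vector; the label column is left out. A minimal plain-Python sketch, using the hypothetical helper `assemble` and the first row's values as printed in step 07:

```python
FEATURE_COLS = ["area", "perimeter", "compactness", "lengthOfKernel",
                "widthOfKernel", "asymmetryCoefficient", "lengthOfKernelGroove"]


def assemble(row, input_cols):
    """Hypothetical helper: concatenate the named columns of one row, in order."""
    return [float(row[c]) for c in input_cols]


# First row of the seeds data as shown in step 07; seedType is the label, not a feature
row = {"area": 15.26, "perimeter": 14.84, "compactness": 0.871,
       "lengthOfKernel": 5.763, "widthOfKernel": 3.312,
       "asymmetryCoefficient": 2.221, "lengthOfKernelGroove": 5.22,
       "seedType": 2.0}
features = assemble(row, FEATURE_COLS)
print(features)  # [15.26, 14.84, 0.871, 5.763, 3.312, 2.221, 5.22]
```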

07. Inspect the full contents of those three rows

tansdata.head(3)

Output:

[Row(features=DenseVector([15.26, 14.84, 0.871, 5.763, 3.312, 2.221, 5.22]), seedType=2.0),
 Row(features=DenseVector([14.88, 14.57, 0.8811, 5.554, 3.333, 1.018, 4.956]), seedType=2.0),
 Row(features=DenseVector([14.29, 14.09, 0.905, 5.291, 3.337, 2.699, 4.825]), seedType=2.0)]

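08. Standardize the features and show the first three rows

With its default settings (withStd=True, withMean=False), StandardScaler divides each feature by its standard deviation without subtracting the mean, so this is scaling to unit standard deviation rather than min-max normalization.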

from pyspark.ml.feature import StandardScaler

standardScaler = StandardScaler(inputCol="features", outputCol="scaledFeatures")
model_one = standardScaler.fit(tansdata)
stddata = model_one.transform(tansdata)
stddata.show(3)
stddata.head(3)

Output:

+--------------------+--------+--------------------+
|            features|seedType|      scaledFeatures|
+--------------------+--------+--------------------+
|[15.26,14.84,0.87...|     2.0|[5.24452795332028...|
|[14.88,14.57,0.88...|     2.0|[5.11393027165175...|
|[14.29,14.09,0.90...|     2.0|[4.91116018695588...|
+--------------------+--------+--------------------+
only showing top 3 rows

[Row(features=DenseVector([15.26, 14.84, 0.871, 5.763, 3.312, 2.221, 5.22]), seedType=2.0, scaledFeatures=DenseVector([5.2445, 11.3633, 36.8608, 13.0072, 8.7685, 1.4772, 10.621])),
 Row(features=DenseVector([14.88, 14.57, 0.8811, 5.554, 3.333, 1.018, 4.956]), seedType=2.0, scaledFeatures=DenseVector([5.1139, 11.1566, 37.2883, 12.5354, 8.8241, 0.6771, 10.0838])),
 Row(features=DenseVector([14.29, 14.09, 0.905, 5.291, 3.337, 2.699, 4.825]), seedType=2.0, scaledFeatures=DenseVector([4.9112, 10.789, 38.2997, 11.9419, 8.8347, 1.7951, 9.8173]))]
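To see what `scaledFeatures` holds, here is a plain-Python sketch of the default StandardScaler transform for one column, assuming Spark's documented defaults (withMean=False, withStd=True) and its use of the corrected (n - 1) sample standard deviation. `standard_scale` is a hypothetical helper. Applied to only the three area values shown above, the divisor differs from the full-column one, so it will not reproduce 5.2445; the point is the shape of the computation.

```python
import statistics


def standard_scale(column, with_mean=False, with_std=True):
    """Hypothetical sketch of StandardScaler applied to a single feature column.

    Assumes Spark's defaults: no centering (with_mean=False) and division by the
    corrected (n - 1) sample standard deviation (with_std=True). The column is
    assumed to be non-constant.
    """
    shift = statistics.mean(column) if with_mean else 0.0
    scale = statistics.stdev(column) if with_std else 1.0
    return [(x - shift) / scale for x in column]


# The three "area" values shown above (the full column has 210 values)
areas = [15.26, 14.88, 14.29]
scaled = standard_scale(areas)
print(scaled)
```

Ratios between values are preserved and nothing is centered, which is why the scaled values stay far from zero. Working backwards from the output above, 15.26 / 5.2445 ≈ 2.91 is the standard deviation of area over the whole dataset.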

09. Split the data into a training set and a test set and count the rows in each

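# randomSplit samples rows at random, so the exact counts differ between runs unless a seed is passed
traindata, testdata = stddata.randomSplit([0.7, 0.3])
print(traindata.count(), testdata.count())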

Output: 144 66
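randomSplit assigns each row independently at random, so the weights [0.7, 0.3] give roughly, not exactly, a 70/30 split (144/66 here rather than 147/63 for 210 rows), and the counts change from run to run. The plain-Python sketch below imitates that per-row behavior with the hypothetical helper `random_split`; it is not Spark's implementation, which samples partition by partition. In PySpark, passing a seed such as `stddata.randomSplit([0.7, 0.3], seed=42)` makes the split repeatable.

```python
import random


def random_split(rows, weights, seed=None):
    """Hypothetical sketch: send each row to one split with probability proportional to its weight."""
    total = sum(weights)
    bounds, acc = [], 0.0
    for w in weights:
        acc += w / total
        bounds.append(acc)  # cumulative upper bounds, e.g. [0.7, 1.0]
    rng = random.Random(seed)
    splits = [[] for _ in weights]
    for row in rows:
        u = rng.random()
        for i, bound in enumerate(bounds):
            # The last split also catches any floating-point shortfall in bounds[-1]
            if u < bound or i == len(bounds) - 1:
                splits[i].append(row)
                break
    return splits


train, test = random_split(range(210), [0.7, 0.3], seed=42)
print(len(train), len(test))  # close to 147 / 63, but rarely exactly
```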

10. Check how many classes the original data has (this determines k for clustering) and how many rows belong to each class

data.groupBy("seedType").count().show()

Output:

+--------+-----+
|seedType|count|
+--------+-----+
|     0.0|   70|
|     1.0|   70|
|     2.0|   70|
+--------+-----+

11. Cluster with KMeans and obtain the fitted model

# k=3 because the data contains three seed types; cluster on the standardized features
kmeans = KMeans(featuresCol="scaledFeatures", k=3)
model_two = kmeans.fit(traindata)
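KMeans.fit alternates two steps until the centers stop moving: assign every point to its nearest center, then move each center to the mean of its points (Lloyd's algorithm). A minimal plain-Python sketch follows; unlike Spark, whose default initialization is k-means||, it simply starts from k randomly chosen data points, and `squared_distance` and `kmeans` are hypothetical helpers.

```python
import random


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, max_iter=20, seed=1):
    """Hypothetical sketch of Lloyd's algorithm.

    Starts from k randomly chosen data points; Spark's KMeans instead defaults to
    the k-means|| initialization.
    """
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    for _ in range(max_iter):
        # Assignment step: put every point in the cluster of its nearest center
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: squared_distance(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its points (keep it if empty)
        new_centers = [[sum(dim) / len(c) for dim in zip(*c)] if c else centers[i]
                       for i, c in enumerate(clusters)]
        if new_centers == centers:  # converged: no center moved
            break
        centers = new_centers
    return centers


pts = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
print(sorted(kmeans(pts, 2)))  # two centers near (0.33, 0.33) and (10.33, 10.33)
```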

12. Compute the within-set sum of squared errors (WSSSE) to measure how well the data is clustered

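By computing WSSSE for different values of K you can build the K-WSSSE curve and use it to choose K. The best K is usually at the elbow of that curve, although in some cases the semantic interpretability of K also has to be weighed, rather than following the WSSSE curve dogmatically.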
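WSSSE is the sum, over all points, of the squared distance to the nearest cluster center. The plain-Python sketch below computes it and picks an elbow with one simple heuristic (the k with the largest second difference of the cost curve); both helpers and the cost values passed to `elbow_k` are hypothetical illustrations, not results from this dataset. In PySpark the curve would come from fitting `KMeans(k=...)` for several k and reading each model's `summary.trainingCost`.

```python
def wssse(points, centers):
    """Within-set sum of squared errors: squared distance from each point to its nearest center."""
    return sum(
        min(sum((x - c) ** 2 for x, c in zip(p, center)) for center in centers)
        for p in points
    )


def elbow_k(costs):
    """Hypothetical heuristic: the k whose WSSSE curve bends most (largest second difference).

    `costs` maps consecutive k values to their WSSSE.
    """
    ks = sorted(costs)
    best_k, best_bend = None, float("-inf")
    for prev, k, nxt in zip(ks, ks[1:], ks[2:]):
        bend = (costs[prev] - costs[k]) - (costs[k] - costs[nxt])
        if bend > best_bend:
            best_k, best_bend = k, bend
    return best_k


pts = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0), (10.0, 11.0)]
print(wssse(pts, [(0.0, 0.5), (10.0, 10.5)]))  # 1.0
# Made-up WSSSE values for k = 1..5, for illustration only
print(elbow_k({1: 1000.0, 2: 600.0, 3: 300.0, 4: 260.0, 5: 240.0}))  # 3
```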

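# Spark 2.x, as in the original run (deprecated in 2.4, removed in 3.0):
# model_two.computeCost(traindata)
# Spark 2.4+ equivalent, reporting the WSSSE of the training data:
model_two.summary.trainingCost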

Output: 278.93528584662363

13. Show the center of each cluster

model_two.clusterCenters()

Output:

[array([ 4.81493032, 10.80927774, 37.32735111, 12.22557524, 8.52734653, 1.77975592, 10.19931557]), array([ 6.27124632, 12.33416237, 37.34515336, 13.89858067, 9.68210196, 2.37627184, 12.26465184]), array([ 4.04007365, 10.12681315, 35.71260412, 11.82304628, 7.46653997, 3.24431969, 10.43654837])]
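`transform` labels each row with the index of the nearest cluster center by squared Euclidean distance. The plain-Python sketch below, using the hypothetical helper `nearest_center`, applies that rule to the centers printed above and to the scaledFeatures of the first row from step 08 (seedType 2.0). It lands in cluster 0, consistent with the 2.0 → 0 correspondence found in step 16.

```python
def nearest_center(point, centers):
    """Hypothetical helper: index of the center with the smallest squared Euclidean distance."""
    dists = [sum((x - c) ** 2 for x, c in zip(point, center)) for center in centers]
    return dists.index(min(dists))


# Cluster centers printed in step 13
centers = [
    [4.81493032, 10.80927774, 37.32735111, 12.22557524, 8.52734653, 1.77975592, 10.19931557],
    [6.27124632, 12.33416237, 37.34515336, 13.89858067, 9.68210196, 2.37627184, 12.26465184],
    [4.04007365, 10.12681315, 35.71260412, 11.82304628, 7.46653997, 3.24431969, 10.43654837],
]
# scaledFeatures of the first row in step 08 (seedType 2.0), as rounded in that output
first_row = [5.2445, 11.3633, 36.8608, 13.0072, 8.7685, 1.4772, 10.621]
print(nearest_center(first_row, centers))  # 0
```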

14. Apply the model to the test data and look at the predictions

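Each cluster ID in `prediction` corresponds to one of the original seedType classes, but the numbers are not necessarily equal: KMeans numbers its clusters arbitrarily.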

model_two.transform(testdata).show()

Output:

+--------------------+--------+--------------------+----------+
|            features|seedType|      scaledFeatures|prediction|
+--------------------+--------+--------------------+----------+
|[10.82,12.83,0.82...|     1.0|[3.71859714645645...|         2|
|[10.91,12.8,0.837...|     1.0|[3.74952817632531...|         2|
|[11.35,13.12,0.82...|     1.0|[3.90074654457308...|         2|
|[11.42,12.86,0.86...|     2.0|[3.92480401224886...|         0|
|[11.65,13.07,0.85...|     1.0|[4.00384997746928...|         2|
|[11.82,13.4,0.827...|     1.0|[4.06227525611046...|         2|
|[11.87,13.02,0.87...|     1.0|[4.07945916159316...|         0|
|[12.01,13.52,0.82...|     1.0|[4.12757409694473...|         2|
...

15. Use the training data to confirm that each cluster ID corresponds to one seedType class even though the values differ

model_two.transform(traindata).show()

Output:

+--------------------+--------+--------------------+----------+
|            features|seedType|      scaledFeatures|prediction|
+--------------------+--------+--------------------+----------+
|[10.59,12.41,0.86...|     1.0|[3.63955118123602...|         2|
|[10.74,12.73,0.83...|     1.0|[3.69110289768413...|         2|
|[10.79,12.93,0.81...|     1.0|[3.70828680316683...|         2|
|[10.8,12.57,0.859...|     1.0|[3.71172358426337...|         2|
|[10.83,12.96,0.80...|     1.0|[3.72203392755299...|         2|
|[10.93,12.8,0.839...|     1.0|[3.75640173851839...|         2|
...

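16. From these outputs, the original classes map to the predicted clusters as follows (this mapping belongs to this particular run; a different random split can number the clusters differently):

| Original class (seedType) | Predicted cluster (prediction) |
|---|---|
| 0.0 | 1 |
| 1.0 | 2 |
| 2.0 | 0 |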
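Reading the mapping off by eye works for three clusters, but it can also be derived by majority vote: for each cluster, take the seedType that occurs most often in it. A plain-Python sketch with the hypothetical helper `majority_mapping` follows; in PySpark the same counts could come from the prediction DataFrames built in step 18, e.g. `trainres.groupBy("prediction", "seedType").count()` or `trainres.crosstab("prediction", "seedType")`.

```python
from collections import Counter, defaultdict


def majority_mapping(pairs):
    """Hypothetical helper: map each cluster ID to the most frequent true label in it.

    `pairs` holds (true_label, cluster_id) tuples. Equal counts go to the label
    seen first, because Counter.most_common keeps first-encountered order for ties.
    """
    by_cluster = defaultdict(Counter)
    for label, cluster in pairs:
        by_cluster[cluster][label] += 1
    return {cluster: counts.most_common(1)[0][0] for cluster, counts in by_cluster.items()}


# Toy (seedType, prediction) pairs, not taken from the real run
pairs = [(0.0, 1), (0.0, 1), (1.0, 2), (1.0, 2), (0.0, 2),
         (2.0, 0), (2.0, 0), (1.0, 0)]
print(majority_mapping(pairs))  # {1: 0.0, 2: 1.0, 0: 2.0}
```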

17. Based on the mapping table above, write a UDF that converts each predicted cluster back to its seedType value

from pyspark.sql.functions import udf
from pyspark.sql.types import DoubleType

# Map each cluster ID back to the seedType value it corresponds to (table in step 16)
def func(x):
    if x == 1:
        return 0.0
    elif x == 2:
        return 1.0
    else:
        return 2.0

fudf = udf(func, DoubleType())

18. Store the model's predictions for the training data and the test data

trainres = model_two.transform(traindata)
testres = model_two.transform(testdata)

19. Convert the training predictions with the UDF and check the result

trainres = trainres.withColumn("res",fudf("prediction"))

trainres.show()

Output:

+--------------------+--------+--------------------+----------+---+
|            features|seedType|      scaledFeatures|prediction|res|
+--------------------+--------+--------------------+----------+---+
|[10.59,12.41,0.86...|     1.0|[3.63955118123602...|         2|1.0|
|[10.74,12.73,0.83...|     1.0|[3.69110289768413...|         2|1.0|
|[10.79,12.93,0.81...|     1.0|[3.70828680316683...|         2|1.0|
|[10.8,12.57,0.859...|     1.0|[3.71172358426337...|         2|1.0|
|[10.83,12.96,0.80...|     1.0|[3.72203392755299...|         2|1.0|
|[10.93,12.8,0.839...|     1.0|[3.75640173851839...|         2|1.0|
|[11.02,13.0,0.818...|     1.0|[3.78733276838725...|         2|1.0|
|[11.14,12.79,0.85...|     1.0|[3.82857414154573...|         2|1.0|
...

20. Check the schema of the training predictions before filtering on them

trainres.printSchema()

Output (res and seedType are both double, so they can be compared directly with ==):

root
 |-- features: vector (nullable = true)
 |-- seedType: double (nullable = false)
 |-- scaledFeatures: vector (nullable = true)
 |-- prediction: integer (nullable = false)
 |-- res: double (nullable = true)

21. Count the training rows whose converted prediction matches the true seedType

trainres.filter(trainres.res == trainres.seedType).count()

Output: 133

The training set has 144 rows in total (counted in step 09), so 133 of 144 rows, about 92.4%, are assigned to the correct class.
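The check in step 21 is plain accuracy: the share of rows whose mapped cluster equals the true class. A plain-Python sketch with the hypothetical helper `mapped_accuracy`, applied to the eight (seedType, prediction) pairs visible in the step 14 output and to the training counts above:

```python
def mapped_accuracy(pairs, mapping):
    """Hypothetical helper: share of (seedType, prediction) pairs whose mapped prediction equals seedType."""
    pairs = list(pairs)
    hits = sum(1 for label, pred in pairs if mapping.get(pred) == label)
    return hits / len(pairs)


# prediction -> seedType, from the table in step 16
MAPPING = {1: 0.0, 2: 1.0, 0: 2.0}
# The eight (seedType, prediction) pairs visible in the step 14 output
visible = [(1.0, 2), (1.0, 2), (1.0, 2), (2.0, 0), (1.0, 2), (1.0, 2), (1.0, 0), (1.0, 2)]
print(mapped_accuracy(visible, MAPPING))  # 0.875
print(f"{133 / 144:.2%}")  # 92.36%
```

The one miss among the visible rows is the seventh, a seedType 1.0 row placed in cluster 0, which maps to 2.0.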

22. Do the same for the test data: count the rows whose converted prediction matches the true seedType

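testres = testres.withColumn("res", fudf("prediction"))
# Test rows whose converted prediction matches seedType
# (in a notebook only the last expression is echoed, so wrap this in print() to see it)
testres.filter(testres.res == testres.seedType).count()
# Total number of test rows
testres.count()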

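Output: 66

This is the value of the last expression, testres.count(). It is not the number of matches: the step 14 output already contains a misclustered test row (seedType 1.0 with prediction 0, which maps to 2.0).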

This agrees with the 66 test rows counted in step 09.