YOLOv8 ncnn

Contents

1. YOLOv8 object detection

2. Classification

3. References

1. YOLOv8 object detection

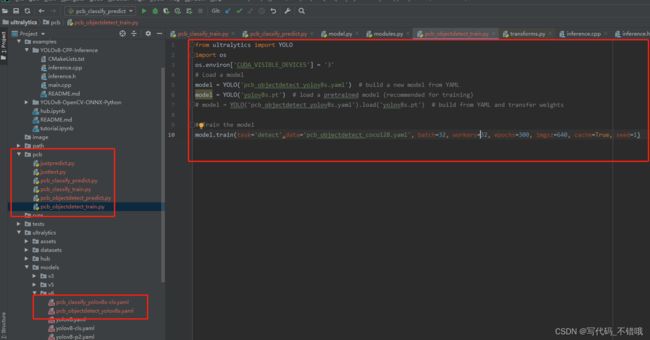

1. For object detection, train directly with the official code (version 8.0.81). Training produces a .pt file:

    from ultralytics import YOLO
    import os

    os.environ['CUDA_VISIBLE_DEVICES'] = '3'

    # Load a model
    model = YOLO('pcb_objectdetect_yolov8s.yaml')  # build a new model from YAML
    model = YOLO('yolov8s.pt')  # load a pretrained model (recommended for training); note: replaces the model built on the previous line
    # model = YOLO('pcb_objectdetect_yolov8s.yaml').load('yolov8s.pt')  # build from YAML and transfer weights

    # Train the model
    model.train(task='detect', data='pcb_objectdetect_coco128.yaml', batch=32, workers=32, epochs=300, imgsz=640, cache=True, seed=1)

First check that the trained model works. If it does, convert it with the official export.py. To do this, follow the reference: comment out lines 669-486 (the original `Detect.forward`, kept as comments below) and replace them with the version below, then export to TorchScript:

    # Original Detect.forward, commented out:
    # def forward(self, x):
    #     shape = x[0].shape  # BCHW
    #     for i in range(self.nl):
    #         x[i] = torch.cat((self.cv2[i](x[i]), self.cv3[i](x[i])), 1)
    #     if self.training:
    #         return x
    #     elif self.dynamic or self.shape != shape:
    #         self.anchors, self.strides = (x.transpose(0, 1) for x in make_anchors(x, self.stride, 0.5))
    #         self.shape = shape
    #
    #     box, cls = torch.cat([xi.view(shape[0], self.no, -1) for xi in x], 2).split((self.reg_max * 4, self.nc), 1)
    #     dbox = dist2bbox(self.dfl(box), self.anchors.unsqueeze(0), xywh=True, dim=1) * self.strides
    #     y = torch.cat((dbox, cls.sigmoid()), 1)
    #     return y if self.export else (y, x)

    # Export-only replacement: one channel-last (B, H, W, 4 * reg_max + nc) tensor per level.
    # Training and validation expect the original outputs, so use this version only for export.
    def forward(self, x):
        z = []  # inference output
        for i in range(self.nl):
            boxes = self.cv2[i](x[i]).permute(0, 2, 3, 1)  # raw DFL box logits
            scores = self.cv3[i](x[i]).sigmoid().permute(0, 2, 3, 1)  # class scores
            feat = torch.cat((boxes, scores), -1)
            z.append(feat)
        return tuple(z)

2. Next, use pnnx, the converter provided on the ncnn GitHub, to convert the TorchScript model into ncnn .param and .bin files. Follow the instructions in GitHub - pnnx/pnnx: PyTorch Neural Network eXchange.
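For a 640×640 input the three detection levels have strides 8, 16 and 32. The modified `forward` therefore returns tensors of shape (1, 80, 80, C), (1, 40, 40, C) and (1, 20, 20, C), 8400 cells in total, with C = 4·reg_max + nc. The reference C++ test code decodes these tensors cell by cell. The dependency-free sketch below shows that decoding for a single cell. It rests on these assumptions:

- reg_max = 16.
- Box channels are ordered left/top/right/bottom, as `dist2bbox` splits them in the commented-out original.
- Anchor points sit at cell centers (offset 0.5, as in `make_anchors(x, self.stride, 0.5)`).
- Class scores have already passed through sigmoid.

`DecodedCell`, `dfl_expectation` and `decode_cell` are hypothetical names, not part of ncnn or the reference code.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical result type: box corners in input-image pixels plus the best class.
struct DecodedCell {
    float x1, y1, x2, y2;
    int label;
    float score;
};

// DFL step: softmax over `bins` logits, then the expected bin index
// (the same quantity the reference code's softmax() returns).
static float dfl_expectation(const float* logits, int bins) {
    assert(bins > 0);
    const float maxv = *std::max_element(logits, logits + bins);
    float denom = 0.f, weighted = 0.f;
    for (int i = 0; i < bins; ++i) {
        const float e = std::exp(logits[i] - maxv);  // subtract the max for stability
        denom += e;
        weighted += i * e;
    }
    return weighted / denom;
}

// Decode grid cell (gx, gy) of one level stored channel-last as (H, W, C),
// C = 4 * reg_max + num_classes, matching permute(0, 2, 3, 1) in the modified forward.
static DecodedCell decode_cell(const std::vector<float>& feat, int width, int gx, int gy,
                               int stride, int reg_max, int num_classes) {
    const int channels = 4 * reg_max + num_classes;
    const float* cell = feat.data() + (static_cast<std::size_t>(gy) * width + gx) * channels;

    // Distances from the anchor to the left, top, right and bottom edges, in grid units.
    float dist[4];
    for (int k = 0; k < 4; ++k) dist[k] = dfl_expectation(cell + k * reg_max, reg_max);

    // Anchor point at the cell center (offset 0.5), then scale by the stride.
    const float ax = gx + 0.5f, ay = gy + 0.5f;
    DecodedCell out;
    out.x1 = (ax - dist[0]) * stride;
    out.y1 = (ay - dist[1]) * stride;
    out.x2 = (ax + dist[2]) * stride;
    out.y2 = (ay + dist[3]) * stride;

    // Class scores were already passed through sigmoid in the modified forward.
    const float* scores = cell + 4 * reg_max;
    const float* best = std::max_element(scores, scores + num_classes);
    out.label = static_cast<int>(best - scores);
    out.score = *best;
    return out;
}
```

A full decoder would loop over every cell of every level and keep cells whose `score` exceeds the probability threshold. It would then run NMS; those steps are left out here.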

3. Then test it with the code from the references:

    #include "layer.h"
    #include "net.h"

    #include "opencv2/opencv.hpp"

    #include <cfloat>
    #include <cmath>
    #include <vector>

    #define MAX_STRIDE 32 // if yolov8-p6 model modify to 64

    struct Object
    {
        cv::Rect_<float> rect;
        int label;
        float prob;
    };

    // Softmax over `length` DFL bins; returns the expected bin index (sum of i * p_i)
    static float softmax(
        const float* src,
        float* dst,
        int length
    )
    {
        float alpha = -FLT_MAX;
        for (int c = 0; c < length; c++)
        {
            float score = src[c];
            if (score > alpha)
            {
                alpha = score;
            }
        }

        float denominator = 0;
        float dis_sum = 0;
        for (int i = 0; i < length; ++i)
        {
            dst[i] = expf(src[i] - alpha);
            denominator += dst[i];
        }
        for (int i = 0; i < length; ++i)
        {
            dst[i] /= denominator;
            dis_sum += i * dst[i];
        }
        return dis_sum;
    }

    static void generate_proposals(
        int stride,
        const ncnn::Mat& feat_blob,
        const float prob_threshold,
        std::vector

2. Classification

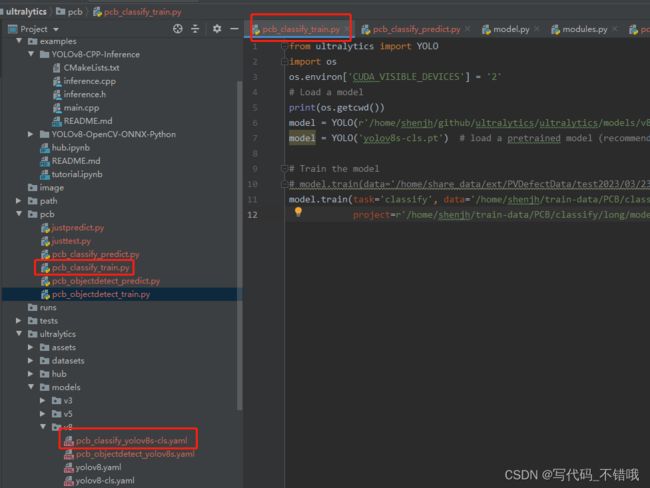

1. Training the classifier also follows the official example. I created my own PCB folder and put the classification training script in it:

    from ultralytics import YOLO
    import os

    os.environ['CUDA_VISIBLE_DEVICES'] = '2'

    # Load a model
    print(os.getcwd())
    model = YOLO(r'/home/shenjh/github/ultralytics/ultralytics/models/v8/pcb_classify_yolov8s-cls.yaml')  # build a new model from YAML
    model = YOLO('yolov8s-cls.pt')  # load a pretrained model (recommended for training); note: replaces the model built on the previous line

    # Train the model
    # model.train(data='/home/share_data/ext/PVDefectData/test2023/03/23/pcb/pcbclassify/long/', batch=32, workers=32, epochs=100, imgsz=224, cache=True, seed=2023)
    model.train(task='classify', data='/home/shenjh/train-data/PCB/classify/long/', batch=32, workers=32, epochs=100, imgsz=224, cache=True, seed=2023,
                project=r'/home/shenjh/train-data/PCB/classify/long/models/')

2. Next, validate the trained model. A quick check is to run it directly on NG and OK images and confirm that the predictions are correct:

    from ultralytics import YOLO

    model = YOLO("/home/shenjh/train-data/PCB/classify/long/models/train/weights/best.pt")
    model.predict(task='classify', source='/home/share_data/ext/PVDefectData/test2023/04/23/long/NG/', imgsz=224)

3. If the results look right, convert the model to ONNX with the official export.py, as below. I set opset=9, but I no longer remember where I saw that recommendation:

    from ultralytics import YOLO

    model = YOLO("/home/shenjh/train-data/PCB/classify/long/models/train/weights/best.pt")
    model.export(format="onnx", opset=9)

4. Then test the ONNX model the same way:

    from ultralytics import YOLO

    model = YOLO("/home/shenjh/train-data/PCB/classify/long/models/train/weights/best.onnx")
    model.predict(task='classify', source='/home/share_data/ext/PVDefectData/test2023/04/23/long/NG/', imgsz=224)

5. If that works, load and test the ONNX model from C++ with OpenCV's dnn module, as below. This follows Reference 3, with two changes:

- I commented out the Normalize block.
- I changed the original `cv::dnn::blobFromImage(crop_image, blob, 1, cv::Size(crop_image.cols, crop_image.rows), cv::Scalar(), true, false);` to `cv::dnn::blobFromImage(crop_image, blob, 1.0 / 255.0, cv::Size(crop_image.cols, crop_image.rows), cv::Scalar(), true, false);`. The only difference in that call is the scale factor, from 1 to 1.0 / 255.0.

    #include <algorithm>
    #include <ctime>
    #include <fstream>
    #include <iostream>
    #include <string>
    #include <vector>
    #include <io.h>  // _findfirst / _findnext (MSVC)
    #include <opencv2/opencv.hpp>

    // Preprocessing
    void pre_process(cv::Mat& image, cv::Mat& blob, int INPUT_WIDTH=224, int INPUT_HEIGHT=224)
    {
        // CenterCrop: take the largest centered square, then resize it
        int crop_size = std::min(image.cols, image.rows);
        int left = (image.cols - crop_size) / 2, top = (image.rows - crop_size) / 2;
        cv::Mat crop_image = image(cv::Rect(left, top, crop_size, crop_size));
        cv::resize(crop_image, crop_image, cv::Size(INPUT_WIDTH, INPUT_HEIGHT));

        // Normalize (commented out; scaling is done by blobFromImage below)
        //crop_image.convertTo(crop_image, CV_32FC3, 1. / 255.);
        //cv::subtract(crop_image, cv::Scalar(0.406, 0.456, 0.485), crop_image);
        //cv::divide(crop_image, cv::Scalar(0.225, 0.224, 0.229), crop_image);

        // Scale by 1/255, swap BGR -> RGB, produce an NCHW float blob
        cv::dnn::blobFromImage(crop_image, blob, 1.0 / 255.0, cv::Size(crop_image.cols, crop_image.rows), cv::Scalar(), true, false);
    }

    // Network inference
    void process(cv::Mat& blob, cv::dnn::Net& net, std::vector<cv::Mat>& outputs)
    {
        net.setInput(blob);
        net.forward(outputs, net.getUnconnectedOutLayersNames());
    }

    // Post-processing: return the name of the highest-scoring class
    std::string post_process(std::vector<cv::Mat>& detections, std::vector<std::string>& class_name)
    {
        std::vector<float> values;
        for (int i = 0; i < detections[0].cols; i++)
        {
            values.push_back(detections[0].at<float>(0, i));
        }
        int id = static_cast<int>(std::distance(values.begin(), std::max_element(values.begin(), values.end())));
        return class_name[id];
    }

    int main(int argc, char** argv)
    {
        // Constants
        const int INPUT_WIDTH = 224;
        const int INPUT_HEIGHT = 224;
        std::ifstream ifs("Z:/temp/longlast.txt");
        std::string imgRoot = "Z:/temp/long/NG";
        cv::dnn::Net net = cv::dnn::readNet("Z:/temp/202304231600/long.onnx");

        // One class name per line
        std::vector<std::string> class_name;
        std::string line;
        while (getline(ifs, line))
        {
            class_name.push_back(line);
        }

        _finddata64i32_t fileInfo;
        intptr_t hFile = _findfirst((imgRoot + "\\*.bmp").c_str(), &fileInfo);
        if (hFile == -1) {
            std::cout << "not find image!\n";
            return -1;
        }

        static int count = 0;
        do
        {
            std::string imgPath = imgRoot + "/" + std::string(fileInfo.name);
            cv::Mat image = cv::imread(imgPath), blob;
            clock_t start = clock();
            pre_process(image, blob, INPUT_WIDTH, INPUT_HEIGHT);
            std::vector<cv::Mat> outputs;
            process(blob, net, outputs);
            std::string ret = post_process(outputs, class_name);
            clock_t ends = clock();
            std::cout << fileInfo.name << " pcb_time: " << (double)(ends - start) / CLOCKS_PER_SEC << " ret: " << ret << std::endl;
            count++;
        } while (_findnext(hFile, &fileInfo) == 0);
        _findclose(hFile);

        return 0;
    }
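`pre_process()` first takes the largest centered square and resizes it to 224×224. It then lets `blobFromImage` scale by 1/255, swap BGR to RGB, and reorder HWC into NCHW. The dependency-free sketch below reproduces the crop arithmetic and that last conversion so the memory layout is explicit. It assumes the model expects RGB input in [0, 1] with no mean/std normalization, which is what the change to `1.0 / 255.0` implies. It also skips the resize. `CenterCrop`, `center_crop` and `bgr_hwc_to_rgb_chw` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Largest centered square, as computed in pre_process() before cv::resize.
struct CenterCrop {
    int left, top, size;
};

static CenterCrop center_crop(int cols, int rows) {
    const int size = std::min(cols, rows);
    return CenterCrop{(cols - size) / 2, (rows - size) / 2, size};
}

// Interleaved 8-bit BGR (HWC) -> planar RGB float (CHW) scaled by 1/255,
// i.e. what blobFromImage(img, blob, 1.0 / 255.0, size, cv::Scalar(), true, false)
// should produce when `size` already equals the image size (no resize, zero mean).
static std::vector<float> bgr_hwc_to_rgb_chw(const std::vector<std::uint8_t>& bgr,
                                             int width, int height) {
    const std::size_t plane = static_cast<std::size_t>(width) * height;
    assert(bgr.size() == plane * 3);
    std::vector<float> chw(plane * 3);
    for (std::size_t p = 0; p < plane; ++p) {
        // swapRB = true: source B,G,R -> destination planes R,G,B
        chw[0 * plane + p] = bgr[p * 3 + 2] / 255.0f;
        chw[1 * plane + p] = bgr[p * 3 + 1] / 255.0f;
        chw[2 * plane + p] = bgr[p * 3 + 0] / 255.0f;
    }
    return chw;
}
```

For a 640×480 image, `center_crop` gives a 480×480 square starting at x = 80. The ncnn test code that follows resizes the whole image with `from_pixels_resize` and no crop. On non-square images, the ONNX and ncnn paths may therefore not see identical inputs.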

7. Once that test passes, convert the .onnx model to ncnn format with the onnx2ncnn tool that ships with ncnn. I downloaded the ncnn-20230223-windows-vs2019 release.
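The ncnn test code passes blob names to `ex.input("images", ...)` and `ex.extract("output0", ...)`, and those names come from the converted `.param` file. The sketch below reads the plain-text `.param` layout described in the ncnn wiki:

- the magic number 7767517;
- a `layer_count blob_count` line;
- one line per layer: type, name, input count, output count, input blobs, output blobs, then `k=v` params.

It lists the blobs produced by `Input` layers and the blobs no layer consumes, which are usually the network outputs. `ParamBlobs` and `list_param_blobs` are hypothetical helpers, not ncnn API.

```cpp
#include <cassert>
#include <istream>
#include <set>
#include <sstream>
#include <string>
#include <vector>

// Blob names worth knowing before calling Extractor::input()/extract().
struct ParamBlobs {
    std::vector<std::string> inputs;   // blobs produced by "Input" layers
    std::vector<std::string> outputs;  // blobs produced but never consumed
};

// Parse the text form of an ncnn .param file.
static ParamBlobs list_param_blobs(std::istream& in) {
    ParamBlobs result;
    int magic = 0, layer_count = 0, blob_count = 0;
    in >> magic >> layer_count >> blob_count;
    assert(magic == 7767517);

    std::vector<std::string> produced;
    std::set<std::string> consumed;
    std::string line;
    std::getline(in, line);  // consume the rest of the header line
    for (int l = 0; l < layer_count && std::getline(in, line); ++l) {
        std::istringstream ls(line);
        std::string type, name, blob;
        int n_in = 0, n_out = 0;
        ls >> type >> name >> n_in >> n_out;
        for (int i = 0; i < n_in; ++i) {
            ls >> blob;
            consumed.insert(blob);
        }
        for (int i = 0; i < n_out; ++i) {
            ls >> blob;
            produced.push_back(blob);
            if (type == "Input") result.inputs.push_back(blob);
        }
        // Remaining tokens are layer-specific k=v params, ignored here.
    }
    for (const auto& b : produced)
        if (consumed.count(b) == 0) result.outputs.push_back(b);
    return result;
}
```

For example, passing an `std::ifstream` opened on `long.param` should list the names to use with `ex.input(...)` and `ex.extract(...)`. If the conversion produced names other than `images` and `output0`, the strings in the test code need to change to match.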

8. Test the ncnn model with C++:

    #include <algorithm>
    #include <cstdlib>
    #include <ctime>
    #include <fstream>
    #include <iostream>
    #include <string>
    #include <vector>
    #include <io.h>  // _findfirst / _findnext (MSVC)

    #include "opencv2/opencv.hpp"
    #include "layer.h"
    #include "net.h"

    bool _long_load_model = false;
    int _long_target_size;
    std::string _long_param_path;
    std::string _long_bin_path;

    // Load the network once and return a pointer to the shared static instance
    void* pcb_classify_get_net(std::string& param_path, std::string& bin_path, int target_size)
    {
        static ncnn::Net long_ptr;
        if (!_long_load_model)
        {
            _long_target_size = target_size;
            _long_load_model = true;
            long_ptr.opt.use_vulkan_compute = false;
            int ret_long_para = long_ptr.load_param(param_path.c_str());
            if (ret_long_para != 0)
                exit(-1);
            int ret_long_bin = long_ptr.load_model(bin_path.c_str());
            if (ret_long_bin != 0)
                exit(-1);
        }
        return &long_ptr;
    }

    int pcb_classify_run(const cv::Mat& bgr, ncnn::Net* lound_ptr)
    {
        // Resize to target_size x target_size and convert BGR -> RGB
        // (unlike the OpenCV dnn version above, there is no center crop here)
        ncnn::Mat in_pad = ncnn::Mat::from_pixels_resize(bgr.data, ncnn::Mat::PIXEL_BGR2RGB, bgr.cols, bgr.rows, _long_target_size, _long_target_size);
        // Scale pixels to [0, 1]; no mean subtraction
        const float norm_vals[3] = { 1 / 255.f, 1 / 255.f, 1 / 255.f };
        in_pad.substract_mean_normalize(0, norm_vals);

        ncnn::Extractor ex = lound_ptr->create_extractor();
        ex.input("images", in_pad);
        ncnn::Mat out;
        ex.extract("output0", out);
        //ex.extract(outBlobName.c_str(), out);

        // manually call softmax on the fc output
        // convert result into probability
        // skip if your model already has softmax operation
        // (the YOLOv8-cls head appears to apply softmax at inference already;
        //  a second softmax does not change the argmax below)
        {
            ncnn::Layer* softmax = ncnn::create_layer("Softmax");
            ncnn::ParamDict pd;
            softmax->load_param(pd);
            softmax->forward_inplace(out, lound_ptr->opt);
            delete softmax;
        }

        out = out.reshape(out.h * out.w * out.c);

        // Copy the scores out and return the index of the largest one
        std::vector<float> predicted_scores;
        //predicted_scores.clear();
        predicted_scores.resize(out.w);
        for (int j = 0; j < out.w; j++)
        {
            predicted_scores[j] = out[j];
        }
        int maxIndex = (int)(std::max_element(predicted_scores.begin(),
            predicted_scores.end()) - predicted_scores.begin());
        return maxIndex;
    }

    int main()
    {
        std::vector<std::string> class_name;
        std::ifstream ifs("Z:/temp/longlast.txt");
        std::string line;
        std::string imgRoot = "Z:/temp/long/OK";
        std::string param_path = "Z:/temp/202304231600/long.param";
        std::string bin_path = "Z:/temp/202304231600/long.bin";
        int target_size = 224;

        // One class name per line
        while (getline(ifs, line))
        {
            class_name.push_back(line);
        }

        void* ptr = pcb_classify_get_net(param_path, bin_path, target_size);

        _finddata64i32_t fileInfo;
        intptr_t hFile = _findfirst((imgRoot + "\\*.bmp").c_str(), &fileInfo);
        if (hFile == -1) {
            std::cout << "not find image!\n";
            return -1;
        }

        static int count = 0;
        do
        {
            std::string imgPath = imgRoot + "/" + std::string(fileInfo.name);
            cv::Mat image = cv::imread(imgPath), blob;
            clock_t start = clock();
            int ret = pcb_classify_run(image, (ncnn::Net*)ptr);
            clock_t ends = clock();
            std::cout << fileInfo.name << " pcb_time: " << (double)(ends - start) / CLOCKS_PER_SEC << " ret: " << class_name[ret] << std::endl;
            count++;
        } while (_findnext(hFile, &fileInfo) == 0);
        _findclose(hFile);

        return 0;
    }
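`pcb_classify_run()` applies `Softmax` and then takes the index of the largest score. Softmax is monotonic, so it never changes which class wins. An extra softmax, for example when the exported model already ends in one (which the YOLOv8-cls head appears to do at inference time), only flattens the reported probabilities. The minimal standard-library sketch below illustrates this. `softmax_probs` and `argmax_index` are hypothetical helper names, not ncnn API.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Numerically stable softmax: subtract the maximum logit before exponentiating.
static std::vector<float> softmax_probs(const std::vector<float>& logits) {
    assert(!logits.empty());
    const float maxv = *std::max_element(logits.begin(), logits.end());
    std::vector<float> probs(logits.size());
    float sum = 0.f;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        probs[i] = std::exp(logits[i] - maxv);
        sum += probs[i];
    }
    for (float& p : probs) p /= sum;
    return probs;
}

// Index of the largest score, the same reduction pcb_classify_run() ends with.
static int argmax_index(const std::vector<float>& scores) {
    return static_cast<int>(std::max_element(scores.begin(), scores.end()) - scores.begin());
}
```

In other words, dropping the manual `Softmax` block should not change `class_name[ret]`. It only matters if the probabilities themselves are used, for example as a confidence threshold.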

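3. References

1. Tutorial - deploy YOLOv5 with ncnn · Tencent/ncnn · Discussion #4541 · GitHub

2. 详细记录u版YOLOv8目标检测ncnn实现 (A detailed record of an ncnn implementation of Ultralytics YOLOv8 object detection), Zhihu

3. yolov5-v7.0分类&检测&分割C++部署 (C++ deployment of YOLOv5 v7.0 classification, detection and segmentation), 给算法爸爸上香's blog, CSDN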