Python Web Scraping Basics

1.1 Theory

In the browser, append /robots.txt to a site's root URL to see which paths crawlers may and may not fetch.

For example, visit: https://www.csdn.net/robots.txt

User-agent: *

Disallow: /scripts

Disallow: /public

Disallow: /css/

Disallow: /images/

Disallow: /content/

Disallow: /ui/

Disallow: /js/

Disallow: /scripts/

Disallow: /article_preview.html*

Disallow: /tag/

Disallow: /?

Disallow: /link/

Disallow: /tags/

Disallow: /news/

Disallow: /xuexi/

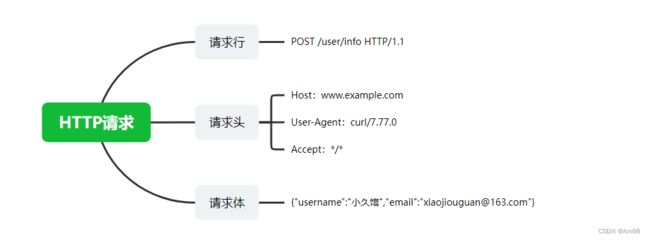
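The same check can be done in code rather than by reading the file by eye. The following is a minimal sketch using the standard library's `urllib.robotparser`; the helper name `can_crawl` and the two test URLs are made up for illustration, and the rules are a hand-copied subset of the listing above rather than a live download.

```python
from urllib.robotparser import RobotFileParser

# A few of the rules listed above, copied by hand for illustration
ROBOTS_TXT = """\
User-agent: *
Disallow: /scripts
Disallow: /css/
Disallow: /tag/
Disallow: /news/
"""


def can_crawl(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() accepts the file's lines, so no network access is needed here
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical URLs, chosen only to exercise the rules
print(can_crawl(ROBOTS_TXT, "https://www.csdn.net/news/today"))  # False
print(can_crawl(ROBOTS_TXT, "https://www.csdn.net/about"))       # True
```

Against a live site, `RobotFileParser.set_url()` followed by `read()` would download the file instead of parsing a local string.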

Use the Python Requests library to send HTTP (Hypertext Transfer Protocol) requests.

Use the Python Beautiful Soup library to parse the HTML content that comes back.

HTTP request

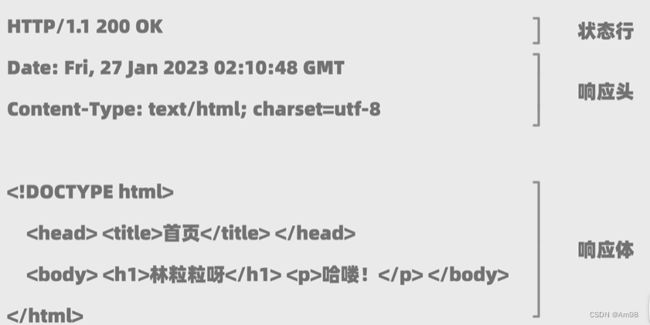

HTTP response
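To make the shape of a request and a response concrete, here is a rough sketch that builds the text of a GET request and splits a response into its parts. The function names `build_request` and `parse_response` are hypothetical, and the sample response is written by hand rather than captured from a server. In practice Requests handles all of this for you.

```python
def build_request(method: str, host: str, path: str, headers: dict) -> str:
    """Assemble an HTTP/1.1 request: request line, headers, then a blank line."""
    lines = [f"{method} {path} HTTP/1.1", f"Host: {host}"]
    lines += [f"{name}: {value}" for name, value in headers.items()]
    return "\r\n".join(lines) + "\r\n\r\n"


def parse_response(raw: str):
    """Split a raw HTTP response into status code, reason, headers, and body."""
    head, _, body = raw.partition("\r\n\r\n")
    status_line, *header_lines = head.split("\r\n")
    _version, code, reason = status_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip()] = value.strip()
    return int(code), reason, headers, body


request_text = build_request(
    "GET", "books.toscrape.com", "/",
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"},
)
print(request_text)

# Hand-written sample response, for illustration only
sample_response = (
    "HTTP/1.1 200 OK\r\n"
    "Content-Type: text/html\r\n"
    "\r\n"
    "<html><body>Hello</body></html>"
)
status, reason, headers, body = parse_response(sample_response)
print(status, reason, headers["Content-Type"])
```

The status code parsed here is the value that Requests exposes as `response.status_code`, and `response.ok` is true when that code is below 400.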

1.2 Practice code (get book prices and titles)

import requests
# Parse HTML
from bs4 import BeautifulSoup

# Make the program look like a browser request
head = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
}
# Store the result in `response` so the `requests` module is not overwritten
response = requests.get("http://books.toscrape.com/", headers=head)
# Set the encoding explicitly if needed
# response.encoding = 'gbk'
if response.ok:
    # Optionally save the page to a file for inspection
    # file = open(r'C:\Users\root\Desktop\Bug.html', 'w')
    # file.write(response.text)
    # file.close()
    content = response.text
    # "html.parser" selects Python's built-in HTML parser
    soup = BeautifulSoup(content, "html.parser")
    # Get the prices
    all_prices = soup.find_all("p", attrs={"class": "price_color"})
    for price in all_prices:
        # Drop the first two characters (the currency prefix)
        print(price.string[2:])
    # Get the titles
    all_title = soup.find_all("h3")
    for title in all_title:
        # Get the first <a> element under the h3
        print(title.find("a").string)
else:
    print(response.status_code)
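Slicing off a fixed number of characters with `[2:]` only works while the currency prefix is exactly two characters long, which depends on how the page text was decoded. As a hedged alternative, the sketch below pulls out the number with a regular expression. The name `parse_price` and the sample strings are illustrative assumptions, not values taken from the site.

```python
import re

# Matches an integer or decimal number such as 12 or 51.77
PRICE_PATTERN = re.compile(r"\d+(?:\.\d+)?")


def parse_price(text: str) -> float:
    """Return the first number found in text, ignoring any currency prefix."""
    match = PRICE_PATTERN.search(text)
    if match is None:
        raise ValueError(f"no price found in {text!r}")
    return float(match.group())


# Illustrative inputs with prefixes of different lengths
samples = ["£51.77", "Â£53.74", "$12"]
prices = [parse_price(s) for s in samples]
print(prices)  # [51.77, 53.74, 12.0]
```

Returning a float also makes the prices easy to sort or sum afterwards.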

1.3 Practice code (get the Top 250 movie titles)

import requests
# Parse HTML
from bs4 import BeautifulSoup

head = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
}
# Get the Top 250 movie titles, 25 per page
for i in range(0, 250, 25):
    response = requests.get(f"https://movie.douban.com/top250?start={i}", headers=head)
    if response.ok:
        content = response.text
        soup = BeautifulSoup(content, "html.parser")
        all_titles = soup.find_all("span", attrs={"class": "title"})
        for title in all_titles:
            # Skip empty spans and alternate titles, which contain "/"
            if title.string and "/" not in title.string:
                print(title.string)
    else:
        print(response.status_code)
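The loop above relies on two small ideas: stepping the `start` query parameter by the page size, and skipping titles that contain "/". A minimal sketch of both, separated from the network code so they can be checked on their own, is below. The helper names `page_urls` and `is_primary_title` and the sample title strings are hypothetical.

```python
from typing import List, Optional

BASE_URL = "https://movie.douban.com/top250"


def page_urls(total: int = 250, page_size: int = 25) -> List[str]:
    """Build one URL per page by stepping the start offset by page_size."""
    return [f"{BASE_URL}?start={start}" for start in range(0, total, page_size)]


def is_primary_title(title: Optional[str]) -> bool:
    """Keep a title only if it exists and is not a "/"-prefixed alternate."""
    return title is not None and "/" not in title


urls = page_urls()
print(len(urls))   # 10
print(urls[0])     # https://movie.douban.com/top250?start=0
print(urls[-1])    # https://movie.douban.com/top250?start=225

# Placeholder strings, not real page content
sample_titles = ["Main Title", " / Alternate Title", None]
print([t for t in sample_titles if is_primary_title(t)])  # ['Main Title']
```

When fetching several pages in a row, adding a short `time.sleep()` between requests is a common courtesy to the server.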

1.4 Practice code (download images)

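import os

import requests
# Parse HTML
from bs4 import BeautifulSoup

head = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
}
response = requests.get("https://www.maoyan.com/", headers=head)
if response.ok:
    # Make sure the output directory exists before writing into it
    os.makedirs("img", exist_ok=True)
    soup = BeautifulSoup(response.text, "html.parser")
    for img in soup.find_all("img", attrs={"class": "movie-poster-img"}):
        img_url = img.get('data-src')
        alt = img.get('alt')
        # Skip images that lack a URL or a name
        if not img_url or not alt:
            continue
        path = 'img/' + alt + '.jpg'
        res = requests.get(img_url, headers=head)
        # Write the file only if the image download succeeded
        if res.ok:
            with open(path, 'wb') as f:
                f.write(res.content)
else:
    print(response.status_code)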
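Building the file path directly from the image's alt text can fail when the text contains characters such as "/" or ":" that are not valid in file names, or when the attribute is missing. One possible way to guard against that is sketched below. The helper name `poster_path`, the fallback name, and the sample alt strings are assumptions made for illustration.

```python
import re
from pathlib import Path
from typing import Optional

# Characters that are unsafe in file names on common systems, plus whitespace
UNSAFE_CHARS = re.compile(r'[\\/:*?"<>|\s]+')


def poster_path(alt: Optional[str], directory: str = "img",
                fallback: str = "untitled") -> Path:
    """Turn an image's alt text into a safe .jpg path inside directory."""
    # Replace each run of unsafe characters with a single underscore
    name = UNSAFE_CHARS.sub("_", alt or "").strip("_. ")
    return Path(directory) / f"{name or fallback}.jpg"


# Hypothetical alt texts
print(poster_path("Movie: Part 2/3").as_posix())  # img/Movie_Part_2_3.jpg
print(poster_path(None).as_posix())               # img/untitled.jpg
```

The returned `Path` can be passed straight to `open(path, 'wb')`. Two different alt texts can still map to the same name, so a real script might also append a counter.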