python: pandas 、dataframe 与hdf5

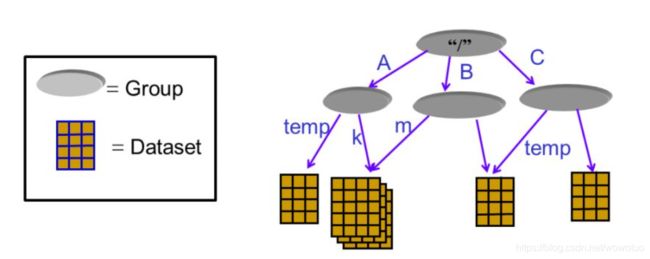

HDF(Hierarchical Data Format),翻译一下就是层次数据格式,其实就是一个高级文件夹,或一个文件系统。

一、group、dataset

h5文件中有两个核心的概念:组“group”和数据集“dataset”。 一个h5文件就是 “dataset” 和 “group” 二合一的容器。

dataset :简单来讲类似数组组织形式的数据集合,一个类似n维的矩阵。

group:包含了其它 dataset(数组) 和 其它 group ,像字典一样工作。

关于group和dataset:

path =r"C:\Users\rustr\Desktop\test.h5"

arr1 = np.array([(1,2,3),(4,5,6)])

arr2 = np.array([(2,4,6),(8,9,10)])

f = h5py.File(path,'w')

g1=f.create_group("\data1")

g2=f.create_group("\data2")

g3 =f.create_group("\data3")

g1.create_dataset("arr1",data =arr1)

g2.create_dataset("arr1",data =arr2)

print("f keys:",f.keys())

print("f keys len:", len(f.keys()))

# 如何判断 某group是否存在?

if "\data1" in f:

print("\\data1 is a existing group!" )

# 如何判断某dataset是否存在?

if "arr1" in f["\data1"]:

print("arr1 is a existing dataset!")

for p in f: # group

print("group p value:",f[p]) # get group value

print("p:",p," ,f[p] keys: ",f[p].keys()," len:",len(f[p].keys()))

for q in f[p]: # dataset

print("=>")

print("dataset:", q," value:",f[p][q][()]) # get dataset value

f.close()

输出如下:

\data1 is a existing group!

arr1 is a existing dataset!

group p value:

p: \data1 ,f[p] keys:len: 1

=>

dataset: arr1 value: [[1 2 3]

[4 5 6]]

group p value:

p: \data2 ,f[p] keys:len: 1

=>

dataset: arr1 value: [[ 2 4 6]

[ 8 9 10]]

group p value:

p: \data3 ,f[p] keys:len: 0

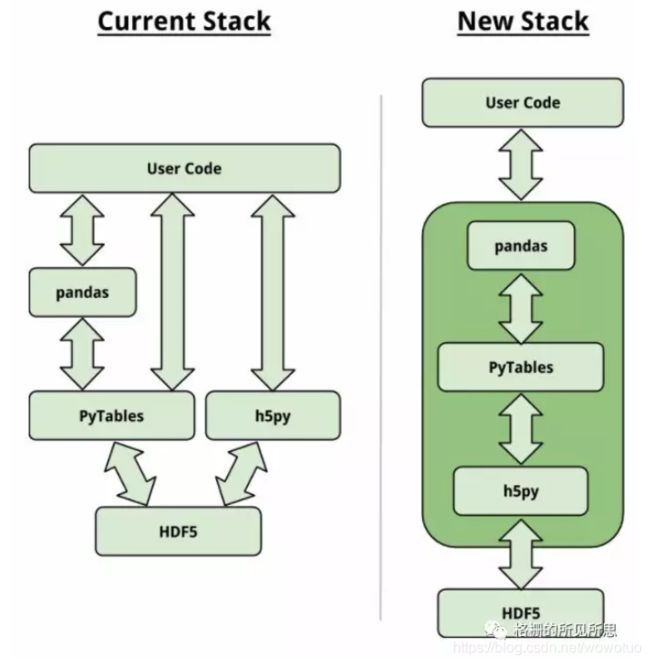

二、python 中库:PyTables和h5py

Python中的HDF5库通常有PyTables和h5py。

h5py提供了一种直接而高级的HDF5 API访问接口;

PyTables则抽象了HDF5的许多细节以提供多种灵活的数据容器、表索引、查询功能等支持。比如可以进行where索引。

pandas有一个最小化的类似于字典的HDFStore类,它通过PyTables存储pandas对象。

在h5的文件存储中,storer(fixed format)和table(more scalable random-access and query abilities)格式的存储,在读写也是存在着很大的差异。前者的速度快,但是更占空间。后者的速度要慢一些。

从方便程度来看,pandas内置的方法对df类型的数据封装较好,应用最为方便,但有人认为速度较差;从速度来看,h5py最优,但在具体数据处理上更复杂。

在2015年SciPy上,来自PyTables,h5py,HDF Group,Pandas以及社区成员的开发人员坐下来讨论如何使Python和HDF5的协作更加精简和更易于维护。

# -*- coding: utf-8 -*-

import h5py

import pandas as pd

import numpy as np

import time as t

# 把日期分解为int 数组,提高效率;

# 把代码存为int,建立解析关系

path =r'C:\Users\rustr\Desktop\000001.XSHE.csv'

path_h5 =r'C:\Users\rustr\Desktop\000001.h5'

path_h5_2 =r'C:\Users\rustr\Desktop\000001_2.h5'

def to_hdf_test():

t0= t.time()

df1 = pd.read_csv(path, encoding='gb18030')

print('csv=>df read cost time:',t.time()-t0,'s')

df2 = df1;

print('df1的格式:',type(df1))

print('df 的行',df1.shape[0],' 列:',df1.shape[1])

print("hdf5=>write")

t1 =t.time()

df1.to_hdf(path_h5,'df',mode='w', format='table')

print("to_hdf write=> cost time :",t.time()-t1,'s')

t2=t.time()

print("hdf5=>append")

df2.to_hdf(path_h5, 'data', append=True)

print("append mode: to_hdf write=> cost time :",t.time()-t2,'s')

t3 =t.time()

print("hdf5=>read")

df3 = pd.read_hdf(path_h5, 'data')

print("read hdf5 =>cost time:",t.time()-t3,'s')

print('type df3: ',type(df3))

#print(df3)

def hdf5Store_test():

# read csv

t0 =t.time()

df = pd.read_csv(path, encoding='gb18030')

print('read csv cost time:',t.time()-t0,'s')

print('df 的行',df.shape[0],' 列:',df.shape[1])

# write

# way 1

print("hdf5=>write")

store = pd.HDFStore(path_h5_2)

store['data'] = df

store.close()

# way 2 :append

print("hdf5=>write")

store = pd.HDFStore(path_h5_2)

for i in range(2):

store.append('data', df) #追加的形式,label为data,也可以取别的名

print('hdf5 append work is close')

store.close()

# read

print('hdf5 => read')

t1= t.time()

store = pd.HDFStore(path_h5_2)

data = store['data']

store.close()

print('read hdf5 cost time:',t.time()-t1,'s')

print('hdf5 data 的行',data.shape[0],' 列:',data.shape[1])

print('type data:',type(data))

#print(data)

def h5py_test():

# hdf5 象是文件夹方式

# 比如'\files\IC.csv'

# dataset是数据元素的一个多维数组以及支持元数据(metadata)

#

n = 10000 #重复次数

now_time_str = '2019-08-18 13:53:00'.encode('utf-8')*n

now_time_int = np.array([2019, 8, 18,13.,53,0])*n

path1 = r'C:\Users\rustr\Desktop\test1.h5'

path2 = r'C:\Users\rustr\Desktop\test2.h5'

# 写入HDF5

# 1、str

print('now is writing hdf5 file test')

t1 = t.time()

f1 = h5py.File(path1,'w')

#f.create_dataset('/group/time_str', data=now_time_str) # 方式1

#放在mygroup下,以dataset存放

f1['/mygroup/time_str'] = now_time_str #方式2

# 打个标识

f1['/mygroup/time_str'].attrs["string"] = 'string'

f1.close()

print('dt 写入hdf5 =>字符串花时:',t.time()-t1,'s')

# 2、int ; 不设group

t2 = t.time()

f2 = h5py.File(path2,'w')

f2.create_dataset('time_int', data=now_time_int)

#f2['time_int'] = now_time_int

f2.close()

print('dt 写入hdf5 =>int花时:',t.time()-t2,'s')

# 读取HDF5

print('now is reading hdf5 file test......')

# str

_t1 =t.time()

for i in range(100):

g1 = h5py.File(path1,'r')

time_str_dataset =g1[r'/mygroup/time_str'] # dataset

time_str = time_str_dataset[()] # 从dataset中取出元素

#time_str = g1[r'/mygroup/time_str'].value => depreciated

#print(time_str)

g1.close()

print('dt str type => read hdf5=> cost:',t.time()-_t1,'s')

#int

_t2 =t.time()

for i in range(100):

g2 = h5py.File(path2,'r')

time_int_dataset = g2['time_int'] #取出dataset

time_int = time_int_dataset [()] #取出value

#print(time_int)

g2.close()

print('dt int type => read hdf5=> cost:',t.time()-_t2,'s')

关于不同的字符串和数值型的读写比较:

to_hdf_test()

csv=>df read cost time: 0.2543447017669678 s

df1的格式:

df 的行 116638 列: 14

hdf5=>write

to_hdf write=> cost time : 0.09382462501525879 s

hdf5=>append

append mode: to_hdf write=> cost time : 0.09407901763916016 s

hdf5=>read

read hdf5 =>cost time: 0.12476396560668945 s

type df3:

pandas的read_csv的速度也让我很吃惊。

h5py_test()

now is writing hdf5 file test

dt 写入hdf5 =>字符串花时: 0.001994609832763672 s

dt 写入hdf5 =>int花时: 0.001024007797241211 s

now is reading hdf5 file test…

dt str type => read hdf5=> cost: 0.051293134689331055 s

dt int type => read hdf5=> cost: 0.03966259956359863 s

可以看出,hdf5读的速度非常快,优势非常明显。