[postgres] 4. Installation, configuration, primary/standby, archiving

文章目录

- 一、安装部署pg11.5

-

- 1.1 create db

-

- 1.1.1 编译安装建库,需包含文档及所有contrib

-

- 1.1.1.1 源码安装

- 1.1.1.2 mac brew 安装

- 1.1.1.3 yum安装

- apt源设置

- 依赖

- 提前设置环境变量

- 环境变量

- 正式安装

- initdb

- 启动

- 设置密码

- 设定层级子账号, 隔离权限

- 目录

- 设置环境变量

- 1.2 network

-

- 端口5433,监听所有IP

- authicen

- 允许任意ip的任意用户连接任意数据库,需密码

- 1.3 用 ubuntu专用的 pg_create_cluster 搭建主从流复制

- 管理

-

- parameter

-

- log

- 查日志

- 配置归档(删除7天前的归档)

- 创建一个用户user1,最大连接数为10,work_mem为1MB

- sql

-

- 创建pg_stat_statements插件并启用

- 记录最大sql数量为10000

- sql语句记录最大字节为4096

- log记录所有ddl语句

- 重置一次pg_stat_statements

- template设置

-

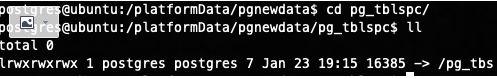

- 创建一个新的表空间pg_tbs位置/pgdata/pg_tbs

- 默认模板数据库的默认表空间为pg_tbs

- 回收所有普通用户对public的操作权限

- 所有以默认模板创建的库包含一个public.dual表,任意用户可操作

- 以template0创建一个数据库db1

- pg partition

-

- 创建range分区表t1_range, 以gmt_time为分区创建三个分区

- 创建组合分区

- 备份,主备

-

- backup

-

- 题目要求

- 操作答案

- master-slave

-

- 创建2个流复制备库,端口5434同步只读备库, 端口5435异步只读备库

- 当5434宕机, 5435可升为同步备库

- 主备切换

- 延迟应用日志

- 强化:应用管理

-

- 对象管理

- 逻辑导入

- 逻辑导出

- 物理备份

- PITR恢复

- 流复制主库(host1)配置:

- 流复制备库(host2)配置:

- 主备切换:

- 同步流复制:

- 逻辑复制,订阅发布模型配置(使用超级用户):

- 强化练习:SQL实验

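1. Installing and deploying pg11.5

PostgreSQL is a highly capable open-source relational database; its binaries take only about 20MB and installation is simple. This section covers building and installing it from source.

1.1 create db

1.1.1 Build from source and create the database, including the docs and all contrib modules

1.1.1.1 Source install

- Source download URLs

- wget https://ftp.postgresql.org/pub/source/v14beta2/postgresql-14beta2.tar.gz

https://ftp.postgresql.org/pub/source/v14.6/postgresql-14.6.tar.bz2

- Building and installing PostgreSQL 11.4 from source

If pg is deployed with docker and the host has no psql command, you can build the pg source on a matching OS (e.g. ubuntu 18.04) and point LD_LIBRARY_PATH at the resulting lib directory.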

root@ubuntu:/data/psql-14.6# ll

total 16

drwxr-xr-x 2 root root 4096 Sep 15 17:03 bin/

drwxr-xr-x 4 root root 4096 Sep 15 17:03 include/

drwxr-xr-x 4 root root 4096 Sep 15 17:03 lib/

drwxr-xr-x 3 root root 4096 Sep 15 17:03 share/

root@ubuntu:/data/psql-14.6# ls bin

clusterdb dropdb initdb pg_basebackup pg_config pg_dump pg_receivewal pg_restore pg_test_timing pg_waldump psql

createdb dropuser pg_amcheck pgbench pg_controldata pg_dumpall pg_recvlogical pg_rewind pg_upgrade postgres reindexdb

createuser ecpg pg_archivecleanup pg_checksums pg_ctl pg_isready pg_resetwal pg_test_fsync pg_verifybackup postmaster vacuumdb

root@ubuntu:/data/psql-14.6# ls lib

libecpg.a libecpg_compat.so.3 libecpg.so.6 libpgcommon_shlib.a libpgport_shlib.a libpgtypes.so.3 libpq.so pkgconfig

libecpg_compat.a libecpg_compat.so.3.14 libecpg.so.6.14 libpgfeutils.a libpgtypes.a libpgtypes.so.3.14 libpq.so.5 postgresql

libecpg_compat.so libecpg.so libpgcommon.a libpgport.a libpgtypes.so libpq.a libpq.so.5.14

root@ubuntu:/data/psql-14.6# psql -Upostgres -h 127.0.0.1

Password for user postgres:

# Set the shared-library search path and add the binaries to PATH (do this before running psql)

export LD_LIBRARY_PATH=/data/psql-14.6/lib

export PATH=$PATH:/data/psql-14.6/bin

1.1.1.2 mac brew install

brew install postgresql@14

1.1.1.3 yum install

Reference: Centos yum postgres11

yum install https://download.postgresql.org/pub/repos/yum/reporpms/EL-7-x86_64/pgdg-redhat-repo-latest.noarch.rpm

yum install postgresql11-server

yum install postgresql11-contrib # required for some essential extensions, e.g. uuid-ossp

/usr/pgsql-11/bin/postgresql-11-setup initdb # note: the $PGDATA directory must have permissions 0700 or 0750

systemctl enable postgresql-11.service # start pg automatically after a reboot

systemctl start postgresql-11.service

apt source setup

Reference

vim /etc/apt/sources.list and add the following:

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ xenial main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ xenial-updates main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ xenial-backports main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ xenial-security main restricted universe multiverse

Dependencies

apt update

apt install gcc make libreadline6 libreadline6-dev

apt install zlib1g-dev # reference: https://www.systutorials.com/how-to-install-the-zlib-library-in-ubuntu/

Set environment variables in advance

- Put them in /etc/profile so that the root, postgres, and ubuntu users all share them

- After switching to the postgres or ubuntu user, just run source /etc/profile

groupadd postgres

useradd -g postgres postgres

环境变量

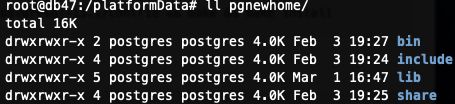

- 2.130的pg11在/usr/lib/postgres, pg13在PGNEWHOME里

- 注意PGHOME值得就是bin的上层目录, 其中有

bin include lib share四个文件夹 - 同时设置了PGDATA, PGUSER, PGCOLOR, 这样直接输入psql命令就可以很方便的连接PG, 免去输入缺省参数的烦恼

export PGNEWHOME=/platformData/pgnewhome

mkdir -p $PGNEWHOME

#chown -R postgres:postgres $PGNEWHOME

export PGNEWDATA=/platformData/pgnewdata

mkdir -p $PGNEWDATA

#chown -R postgres:postgres $PGNEWDATA

export PGNEWSOURCE=/platformData/pgnewsource

mkdir -p $PGNEWSOURCE

#chown -R postgres:postgres $PGNEWSOURCE

export PATH="$PGNEWHOME/bin:$PATH"

export LD_LIBRARY_PATH="$PGNEWHOME/lib:$LD_LIBRARY_PATH"

export PGDATA=$PGNEWDATA

export PGDATABASE=postgres

export PGUSER=postgres

export PG_COLOR=always

echo $PGUSER $PGNEWHOME $PGNEWDATA $PGNEWSOURCE

export LANG=en_US.utf8

export DATE=`date +"%Y-%m-%d %H:%M:%S"`

alias ll='ls -lh'

export PGDATA=/platformData/postgresql/11/main

export PATH="/usr/lib/postgresql/11/bin":$PATH

- When switching users, use su - postgres (which also switches to that user's login environment) rather than su postgres

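Installation

# build and install

cd $PGNEWSOURCE/postgresql-13.1 && ./configure --prefix=$PGNEWHOME && make world && make install-world

Here prefix is the install path $PGNEWHOME for the binaries (it will contain the four directories bin, include, lib, and share).

make world also builds the docs and contrib modules and takes about 4 minutes, the same as plain make; make install-world then installs everything that make world built.

- Installing extensions (only needed if you ran plain make && make install instead)

cd $PGNEWSOURCE/postgresql-13.1/contrib && make && make install

- Create users

groupadd postgres && useradd -g postgres postgres

groupadd y && useradd -g y y

- Change ownership

cd /platformData && chown -R postgres:postgres pgnew*

- Directory contents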

postgres@db130:/platformData/pgnewhome/bin$ ls

clusterdb dropdb initdb pg_archivecleanup pg_checksums pg_ctl pg_isready pg_resetwal pg_test_fsync pg_verifybackup postmaster vacuumdb

createdb dropuser oid2name pg_basebackup pg_config pg_dump pg_receivewal pg_restore pg_test_timing pg_waldump psql vacuumlo

createuser ecpg pg_amcheck pgbench pg_controldata pg_dumpall pg_recvlogical pg_rewind pg_upgrade postgres reindexdb

initdb

# Note: this can only be run as the postgres user

postgres@ubuntu:/platformData/pgnewhome/bin$ ./initdb -D $PGNEWDATA --data-checksums

Startup

./pg_ctl -D /platformData/pgnewdata start

- Convenience start/stop scripts

root@db130:/platformData/pgnewhome/bin# cat pgstop.sh

#!/bin/bash

su - postgres -c "pg_ctl stop -D $PGNEWDATA"

root@db130:/platformData/pgnewhome/bin# cat pgstart.sh

#!/bin/bash

su - postgres -c "pg_ctl start -D $PGNEWDATA"

root@db130:/platformData/pgnewhome/bin# cat pgrestart.sh

#!/bin/bash

bash pgstop.sh

bash pgstart.sh

- Start automatically at boot

Add the following to /etc/rc.local:

su postgres -c "/usr/lib/postgresql/bin/pg_ctl -D /data/postgres start"

- Passing parameters on the command line

su postgres -c "/usr/lib/postgresql/11/bin/pg_ctl start -D /data/data_gas/citus_fake_nodes/worker5433/db_data -o \"-h '*' -p 5433\""

Set the password

The password belongs to the role, so it is shared across the whole postgres instance (all databases).

ALTER USER postgres WITH PASSWORD 'postgres';

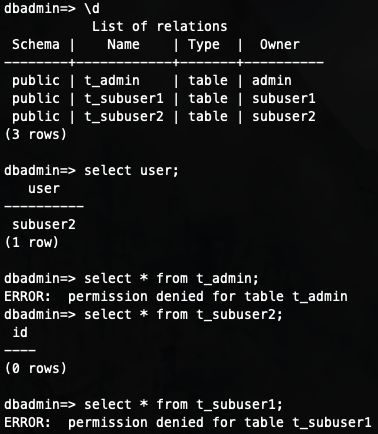

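Hierarchical sub-accounts to isolate privileges

PostgreSQL user/group privilege management

- First, as the postgres user, create the admin user;

- Then the admin user creates subuser1 and subuser2 and runs grant subuser1, subuser2 to admin;

- admin creates t_admin; subuser1 creates t_subuser1; subuser2 creates t_subuser2;

- Each subuser can then see all table names, but can only select * from its own table;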
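The hierarchy described above can be sketched as SQL fed to psql. This is a minimal sketch rather than the original author's script: the helper name role_hierarchy_sql and the id columns are assumptions for illustration, admin is given CREATEROLE so that it can create the sub-accounts, and it assumes a pg version (14 or earlier, as used in this article) where any role may create tables in the public schema.

```shell
#!/usr/bin/env bash
# Sketch: print the SQL for the admin/subuser hierarchy described above.
# role_hierarchy_sql is a hypothetical helper name; the table columns are placeholders.
role_hierarchy_sql() {
  cat <<'SQL'
-- run as the postgres superuser: admin needs CREATEROLE to create the sub-accounts
CREATE ROLE admin LOGIN CREATEROLE;
SET ROLE admin;
CREATE ROLE subuser1 LOGIN;
CREATE ROLE subuser2 LOGIN;
-- admin becomes a member of both sub-accounts and inherits their privileges
GRANT subuser1, subuser2 TO admin;
CREATE TABLE t_admin (id int);
-- each sub-account creates, and therefore owns, its own table
SET ROLE subuser1;
CREATE TABLE t_subuser1 (id int);
SET ROLE subuser2;
CREATE TABLE t_subuser2 (id int);
RESET ROLE;
SQL
}
```

To try it, pipe the output into a superuser session, for example role_hierarchy_sql | psql -U postgres -d postgres. Because no privileges are granted between the sub-accounts, subuser1 gets "permission denied" on t_subuser2 and t_admin, while admin can read all three tables.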

Directories

pgdata: /u01/app/pg11/pgdata

pghome: /u01/app/pg11/pghome

pg waldir: /pg_wal

Setting environment variables

http://www.postgres.cn/docs/11/libpq-envars.html

1.2 network

Port 5433, listen on all IPs

cd $PGNEWDATA && vim postgresql.conf

port = 5433

listen_addresses = '*'

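Authentication

- pg_hba.conf

The local postgres user logs in without a password; all other users need one

cd $PGNEWDATA && vim pg_hba.conf

local all postgres trust # local postgres user: changed from peer to trust (no password)

local all all md5 # other local users: md5 (password check)

host all all 0.0.0.0/0 md5 # other machines: md5 (password check)

- .pgpass

- Format as described in the official docs

echo 'hostname:port:database:username:password' > ~/.pgpass # stored in .pgpass in the user's home directory

chmod 0600 ~/.pgpass && chown ubuntu:ubuntu ~/.pgpass # set the permissions and ownership for the owning user

Allow any user from any IP to connect to any database, password required

Same as above: the host all all 0.0.0.0/0 md5 line.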

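1.3 Primary/standby streaming replication with the Ubuntu-specific pg_createcluster

- pg_lsclusters

- pg_createcluster

- pg_ctlcluster

- pg_dropcluster

See Creating and Managing Cluster with pg wrappers (Ubuntu only) for details.

Pain point: the disk holding the database is running out of space and you want to move to another disk on the same machine. This can be done by building a standby, switching over to it, and then removing the old primary. The steps are:

pg_createcluster -d /backup/postgresql/11/main -s /var/run/postgresql-backup -e utf8 -p 5433 --start --start-conf auto 11 backup # create a new standby cluster on port 5433

pg_lsclusters # list the clusters

/usr/bin/pg_ctlcluster 11 backup restart # restart

/usr/bin/pg_basebackup -h <primary-ip> -p 5432 -U postgres -F p -X stream -P -R -D /backup/postgresql/11/main/ -l replbackup # waits for a checkpoint on the primary, then copies the data with a progress display; if it stalls for a long time, run checkpoint manually on the primary

On the primary: select * from pg_stat_replication; # shows the replication progress

/usr/bin/pg_ctlcluster 11 main stop # stop the old primary

pg_ctlcluster 11 backup promote # promote the standby to primary

vim /etc/postgresql/11/backup/postgresql.conf # change port 5433 to 5432

pg_ctlcluster 11 backup restart # restart the backup cluster

netstat -lntup|grep postgres # check that the database port is listening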

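Administration

parameter

log

- Enable logging, format csvlog

- Log directory /u01/app/pglog

- Maximum log size 50MB

- Rotate the log daily

- Overwrite logs with the same name

cd $PGNEWDATA && vim postgresql.conf

logging_collector = on # enable the log collector, required

log_destination = 'csvlog' # csvlog format

log_directory = 'pg_log' # log directory, relative to the data directory; use the absolute path /u01/app/pglog to meet the requirement above

log_rotation_size = 50MB # maximum log size 50MB

log_rotation_age = 1d # rotate the log daily

log_truncate_on_rotation = on # overwrite logs with the same name

pg_ctl -D $PGNEWDATA restart # changing logging_collector requires a restart

Reading logs

For pg14 the csvlog column layout must follow the official documentation

Foreign table definition, following digoal

Detailed definition from alisql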

CREATE EXTENSION file_fdw;

CREATE SERVER pglog FOREIGN DATA WRAPPER file_fdw;

CREATE FOREIGN TABLE pglog (

log_time timestamp(3) with time zone,

user_name text,

database_name text,

process_id integer,

connection_from text,

session_id text,

session_line_num bigint,

command_tag text,

session_start_time timestamp with time zone,

virtual_transaction_id text,

transaction_id bigint,

error_severity text,

sql_state_code text,

message text,

detail text,

hint text,

internal_query text,

internal_query_pos integer,

context text,

query text,

query_pos integer,

location text,

application_name text,

backend_type text

) SERVER pglog

OPTIONS ( program 'find /platformData/pgnewdata/log -type f -name "*.csv" -exec cat {} \;', format 'csv' );

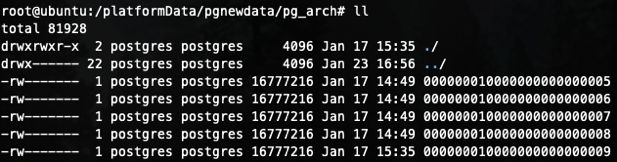

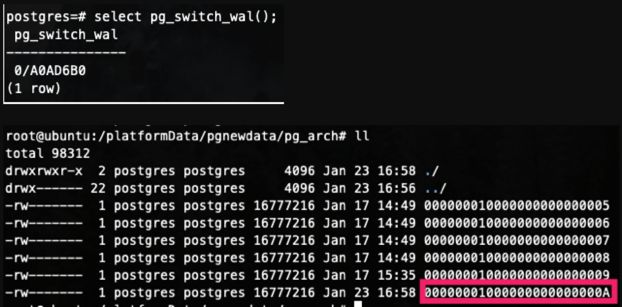

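Configure archiving (delete archives older than 7 days)

- Archive directory: /pg_arch

mkdir /platformData/pg_arch # pg does not create the directory itself; create it by hand, because the commands below only succeed when the target directory exists

# Avoid placing it under $PGNEWDATA, which would defeat the purpose of archiving; keep it in a separate location

archive_mode = on

archive_command = 'cp %p /platformData/pg_arch/%f'

# Enabling test ! -f made the pg_switch_wal() experiment fail

# Enabling deletion of files older than x days also caused problems

# 'test ! -f /platformData/pg_arch/%f && cp %p /platformData/pg_arch/%f && find /pg_arch/ -type f -mtime +7 -exec rm -f {} \;'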
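Since folding the 7-day cleanup into archive_command caused problems, one alternative is to prune the archive separately, for example from cron. A minimal sketch under stated assumptions: prune_wal_archive is a hypothetical helper name, the default directory is the archive path used above, and the -delete action requires GNU find.

```shell
#!/usr/bin/env bash
# Sketch: delete archived WAL files older than N days (default 7).
# prune_wal_archive is a hypothetical helper; pass your own archive directory.
prune_wal_archive() {
  local dir="${1:-/platformData/pg_arch}"
  local days="${2:-7}"
  # find counts whole 24-hour periods, so -mtime +7 matches files at least 8 days old
  find "$dir" -maxdepth 1 -type f -mtime +"$days" -delete
}
```

A daily cron entry could call prune_wal_archive /platformData/pg_arch 7. Pruning is only safe while a base backup newer than the oldest retained WAL file exists, otherwise the remaining archive cannot be replayed.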

Manually trigger archiving from psql, e.g. with select pg_switch_wal();

The resulting archive files look like this:

Create user user1 with a connection limit of 10 and work_mem of 1MB

ALTER ROLE (ALTER USER is an alias for it)

# connection limit of 10, and login

create user user1;

alter role user1 login;

alter role user1 CONNECTION LIMIT 10;

psql -d postgres -U user1 -p 5433

select current_user;

# change the password

alter role user1 with password '123';

PGPASSWORD=123 psql -d postgres -U user1 -p 5433

# set memory parameters (a bare number is in kB)

ALTER ROLE user1 SET maintenance_work_mem = 10000;

alter role user1 set work_mem = '1MB';

sql

Create and enable the pg_stat_statements extension

https://www.postgresql.org/docs/11/pgstatstatements.html

# in postgresql.conf

shared_preload_libraries = 'pg_stat_statements'

# takes effect after a restart

pg_ctl restart -D $PGNEWDATA

create extension pg_stat_statements;

Track at most 10000 statements

pg_stat_statements.max = 10000

pg_stat_statements.track = all

Record at most 4096 bytes per SQL statement

Log all DDL statements

log_statement = 'ddl'

Reset pg_stat_statements once

SELECT pg_stat_statements_reset();

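template settings

postgresql: creating and dropping tablespaces

Create a new tablespace pg_tbs located at /pgdata/pg_tbs

mkdir -p /pg_tbs # generally keep it outside $PGNEWDATA

postgres=# create tablespace tbs owner postgres location '/pg_tbs'; -- the location must be an absolute path; tablespace names must not start with pg_ (reserved for system tablespaces), hence tbs

create table t (id integer) tablespace tbs;

create database yy with template xx: can be used to copy database xx to yy

Common pg operations, including template-related ones

Make pg_tbs the default tablespace of the default template database

ALTER DATABASE template1 SET TABLESPACE tbs;

Revoke all ordinary users' privileges on public

http://postgres.cn/docs/11/sql-revoke.html

All users? So far I have only worked out how to revoke from a single user:

revoke all PRIVILEGES on schema public from user1;

To cover every role at once, revoke from the PUBLIC pseudo-role instead: revoke all on schema public from public;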

Every database created from the default template contains a public.dual table that any user can operate on

- Set up the template database

postgres=# \c template1

You are now connected to database "template1" as user "postgres".

template1=# \d

Did not find any relations.

template1=# create table tb_default1(id int8);

template1=# insert into tb_default1 values (1);

template1=# insert into tb_default1 values (2);

template1=# insert into tb_default1 values (3);

template1=# \d

List of relations

Schema | Name | Type | Owner

--------+-------------+-------+----------

public | tb_default1 | table | postgres

- Use the template database

postgres=# create database c;

CREATE DATABASE

postgres=# \c c

c=# select * from tb_default1 ;

id

----

1

2

3

Create database db1 from template0

c=# create database db1 template template0;

CREATE DATABASE

c=# \c db1

You are now connected to database "db1" as user "postgres".

db1=# \d

Did not find any relations.

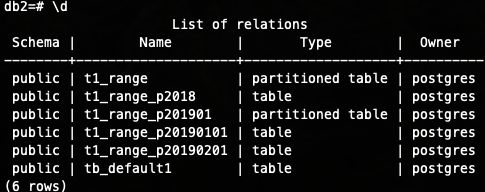

pg partition

db:postgres user:postgres schema:public

Create the range-partitioned table t1_range with three partitions on gmt_time

- t1_range_p2018: data from 2018 and earlier

- t1_range_p201901: data for January 2019

- t1_range_p20190201: data for 1 February 2019

- Note: to create second-level partitions (see pg multi-level partitioning), end the statement that creates the first-level partition with PARTITION BY RANGE (a);

create table t1_range (name text, gmt_time timestamp) partition by range (gmt_time);

-- the upper bound is exclusive, so 2019-01-01 is needed to cover all of 2018

create table t1_range_p2018 partition of t1_range for values from

('-infinity') to ('2019-01-01 00:00:00');

-- specify partition by range when creating the first-level partition

create table t1_range_p201901 partition of t1_range for values from

('2019-01-01 00:00:00') to ('2019-02-01 00:00:00') partition by range (gmt_time);

-- this is a second-level partition

create table t1_range_p20190101 partition of t1_range_p201901 for values from ('2019-01-01 00:00:00') to ('2019-01-02 00:00:00');

create table t1_range_p20190201 partition of t1_range for values from

('2019-02-01 00:00:00') to ('2019-02-02 00:00:00');

Create composite partitions

- Create a range partition t1_range_p2020 of t1_range (all data for 2020)

create table if not exists t1_range (name text, gmt_time timestamp) partition by range (gmt_time);

create table t1_range_p2020 partition of t1_range for values from

('2020-01-01 00:00:00') to ('2021-01-01 00:00:00') partition by list(name);

- Create a list partition of t1_range_p2020 (partition key name, containing 'AAA' and 'BBBB')

- pg partitioned tables

- CREATE TABLE

- Table Partitioning

create table t1_range_p2020_a partition of t1_range_p2020 for values in ('AAA', 'BBBB');

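Backup, primary/standby

backup

How pg_basebackup works (flow chart)

How pg_basebackup works (source code)

How checkpoints work in pg

full_page_writes (Chen Huajun)

full_page_write EDB

In short, pg performs recovery at startup: it reads the WAL and applies it page by page (each page records pd_lsn, the LSN of the last WAL record already applied to it).

A page can be torn, however: pg writes 8KB pages while Linux writes atomically in 4KB units, so a crash can leave a pg page only half written (4KB).

The fix: after a checkpoint, the first modification of each page writes the whole page into the WAL. Recovery can then restore the complete page from the WAL, read its pd_lsn, and apply the subsequent WAL records to it.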

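Task requirements

- Run the following script

db:postgres user:postgres schema:public

create table t1(id int);

insert into t1 (id) values (1),(2),(3);

- Normal operation

Back up the whole cluster with pg_basebackup

insert into t1 (id) values (4),(5),(6);

- Erroneous operation

insert into t1 (id) values (7),(8),(9);

drop table t1;

- Step 7.3 (the erroneous operation above) is found to be a mistake; restore the database to the point in time just before the 7.3 insert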

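Solution

- Preparation

- Before running pg_switch_wal

- Create a bak directory to hold the pg_basebackup output

- Run pg_basebackup (it must be run after su - postgres; plain su postgres does not work...)

- Write the normal data and flush the WAL to disk

- At this point the WAL for rows 1 to 6 has been flushed; the last known-good time is 2021-01-23 21:54:18.826786+08. Then insert the three rows 7, 8, 9

- Copy base.tar from the pg_basebackup output in /bak into the PGDATA directory

- Copy the archived WAL into PGDATA

  - /pg_archive is the WAL archive path. Because pg_switch_wal() was run, rows 4, 5, 6 are in /pg_archive, so run cp pg_archive/* pgnewdata/pg_wal

  - base.tar + pg_wal.tar in /bak (produced by pg_basebackup) contain rows 1, 2, 3

  - So after startup, /pg_archive is applied on top of base.tar + pg_wal.tar, yielding all six rows 1 to 6

- Copy the backed-up tablespace into PGDATA/pg_tblspc (questionable; this step seems unnecessary)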

mkdir -p /platformData/pgnewdata/pg_tblspc/16685

cd /platformData/pgnewdata/pg_tblspc/16685

cp /bak/16685.tar .

tar -xf 16685.tar
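The steps above do not show the recovery settings themselves. A minimal sketch for PG12 and later, where they live in the server configuration plus a signal file: write_pitr_settings is a hypothetical helper name, and the archive path and target time in the usage note are taken from this walkthrough as assumptions.

```shell
#!/usr/bin/env bash
# Sketch: prepare a restored data directory for point-in-time recovery (PG12+).
# write_pitr_settings is a hypothetical helper name.
write_pitr_settings() {
  local pgdata="$1" archive_dir="$2" target_time="$3"
  # restore_command fetches archived WAL; replay stops when it reaches target_time,
  # then pauses so the result can be inspected before promotion
  cat >> "$pgdata/postgresql.auto.conf" <<EOF
restore_command = 'cp $archive_dir/%f %p'
recovery_target_time = '$target_time'
recovery_target_action = 'pause'
EOF
  # recovery.signal makes the server start in targeted recovery mode
  touch "$pgdata/recovery.signal"
}
```

For this exercise the call would look like write_pitr_settings /platformData/pgnewdata /pg_archive '2021-01-23 21:54:18.826786+08', followed by pg_ctl start. The log further down was captured with standby.signal instead, which also honors the recovery target.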

root@db47:/platformData/pgnewdata# cat pg_log/postgresql-2021-03-13_211442.csv

2021-03-13 21:14:42.937 CST,,,85054,,604cbac2.14c3e,1,,2021-03-13 21:14:42 CST,,0,LOG,00000,"ending log output to stderr",,"Future log output will go to log destination ""csvlog"".",,,,,,,"","postmaster"

2021-03-13 21:14:42.937 CST,,,85054,,604cbac2.14c3e,2,,2021-03-13 21:14:42 CST,,0,LOG,00000,"starting PostgreSQL 13.1 on x86_64-pc-linux-gnu, compiled by gcc (Ubuntu 5.4.0-6ubuntu1~16.04.11) 5.4.0 20160609, 64-bit",,,,,,,,,"","postmaster"

2021-03-13 21:14:42.937 CST,,,85054,,604cbac2.14c3e,3,,2021-03-13 21:14:42 CST,,0,LOG,00000,"listening on IPv4 address ""0.0.0.0"", port 5432",,,,,,,,,"","postmaster"

2021-03-13 21:14:42.937 CST,,,85054,,604cbac2.14c3e,4,,2021-03-13 21:14:42 CST,,0,LOG,00000,"listening on IPv6 address ""::"", port 5432",,,,,,,,,"","postmaster"

2021-03-13 21:14:43.023 CST,,,85054,,604cbac2.14c3e,5,,2021-03-13 21:14:42 CST,,0,LOG,00000,"listening on Unix socket ""/tmp/.s.PGSQL.5432""",,,,,,,,,"","postmaster"

2021-03-13 21:14:43.111 CST,,,85056,,604cbac3.14c40,1,,2021-03-13 21:14:43 CST,,0,LOG,00000,"database system was interrupted; last known up at 2021-03-13 18:05:46 CST",,,,,,,,,"","startup"

2021-03-13 21:14:43.371 CST,,,85056,,604cbac3.14c40,2,,2021-03-13 21:14:43 CST,,0,LOG,00000,"entering standby mode",,,,,,,,,"","startup"

2021-03-13 21:14:43.400 CST,,,85056,,604cbac3.14c40,3,,2021-03-13 21:14:43 CST,,0,LOG,00000,"restored log file ""000000010000000000000018"" from archive",,,,,,,,,"","startup"

2021-03-13 21:14:43.714 CST,,,85056,,604cbac3.14c40,4,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"redo starts at 0/18000028",,,,,,,,,"","startup"

2021-03-13 21:14:43.745 CST,,,85056,,604cbac3.14c40,5,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"consistent recovery state reached at 0/18000100",,,,,,,,,"","startup"

2021-03-13 21:14:43.745 CST,,,85054,,604cbac2.14c3e,6,,2021-03-13 21:14:42 CST,,0,LOG,00000,"database system is ready to accept read only connections",,,,,,,,,"","postmaster"

2021-03-13 21:14:43.773 CST,,,85056,,604cbac3.14c40,6,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"restored log file ""000000010000000000000019"" from archive",,,,,,,,,"","startup"

2021-03-13 21:14:44.046 CST,,,85056,,604cbac3.14c40,7,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"restored log file ""00000001000000000000001A"" from archive",,,,,,,,,"","startup"

2021-03-13 21:14:44.291 CST,,,85056,,604cbac3.14c40,8,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"restored log file ""00000001000000000000001B"" from archive",,,,,,,,,"","startup"

2021-03-13 21:14:44.532 CST,,,85056,,604cbac3.14c40,9,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"restored log file ""00000001000000000000001C"" from archive",,,,,,,,,"","startup"

2021-03-13 21:14:44.766 CST,,,85056,,604cbac3.14c40,10,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"restored log file ""00000001000000000000001D"" from archive",,,,,,,,,"","startup"

2021-03-13 21:14:45.000 CST,,,85056,,604cbac3.14c40,11,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"restored log file ""00000001000000000000001E"" from archive",,,,,,,,,"","startup"

2021-03-13 21:14:45.214 CST,,,85056,,604cbac3.14c40,12,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"recovery stopping before commit of transaction 1545, time 2021-03-13 18:18:35.253716+08",,,,,,,,,"","startup"

2021-03-13 21:14:45.214 CST,,,85056,,604cbac3.14c40,13,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"pausing at the end of recovery",,"Execute pg_wal_replay_resume() to promote.",,,,,,,"","startup"

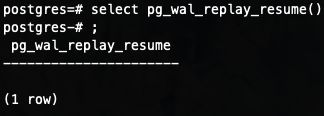

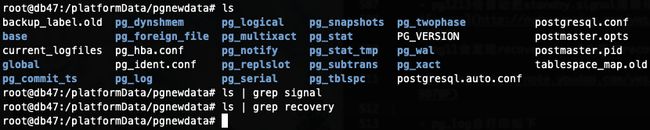

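- The data has now been recovered

- Then run select pg_wal_replay_resume() to end recovery and take pg out of read-only mode

> pg12/13 removes standby.signal automatically

> in pg11, recovery.conf is renamed to recovery.done

pg_log then shows the following: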

2021-03-13 21:24:09.801 CST,,,85056,,604cbac3.14c40,14,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"redo done at 0/1E000230",,,,,,,,,"","startup"

2021-03-13 21:24:09.801 CST,,,85056,,604cbac3.14c40,15,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"last completed transaction was at log time 2021-03-13 18:07:46.284416+08",,,,,,,,,"","startup"

2021-03-13 21:24:10.043 CST,,,85056,,604cbac3.14c40,16,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"selected new timeline ID: 2",,,,,,,,,"","startup"

2021-03-13 21:24:10.313 CST,,,85056,,604cbac3.14c40,17,,2021-03-13 21:14:43 CST,1/0,0,LOG,00000,"archive recovery complete",,,,,,,,,"","startup"

2021-03-13 21:24:10.641 CST,,,85054,,604cbac2.14c3e,7,,2021-03-13 21:14:42 CST,,0,LOG,00000,"database system is ready to accept connections",,,,,,,,,"","postmaster"

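A common way to do incremental backups in production:

Take one full pg_basebackup per day during off-peak hours into a backup directory (this mainly costs disk I/O; with a 7-day interval, replaying the increments would be too slow), then apply the archived WAL from pg_archive on top of the full backup as the increment.

master-slave

Key points: take a backup with pg_basebackup, then use pg_promote to turn the standby into the primary

pg12 streaming replication (verified to work)

Create 2 streaming-replication standbys: port 5434 as a synchronous read-only standby, port 5435 as an asynchronous read-only standby

- Primary setup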

# master

root@db47:/platformData# su - postgres

postgres@db47:/platformData$ initdb -D /platformData/db-master

/platformData/pgnewhome/bin/pg_ctl start -D /platformData/db-master

############# db-master/postgresql.conf #############

# connections

listen_addresses = '*'

port = 5432

# archiving: make sure WAL files are archived to a location the standby can reach; %f is replaced by the WAL file name and %p by the WAL file path

archive_mode = on

archive_command = 'cp %p /platformData/pg_arch/%f'

# streaming replication

wal_level = replica # default

max_wal_senders = 10 # default

wal_keep_size = 0 # default

hot_standby = on # default

# other: must be on for the pg_rewind tool to work

wal_log_hints = on

############# db-master/pg_hba.conf #############

# make sure the primary allows remote replication connections from the standby

# Allow replication connections from 127.0.0.1, by a user with the replication privilege.

# TYPE DATABASE USER ADDRESS METHOD

host replication all 127.0.0.1/32 trust

# Start the primary with the configuration above, create a superuser with the replication privilege, and create a database named clusterdb.

$ pg_ctl -D db-master start

$ createuser cary -s --replication

$ createdb clusterdb

# Insert some test data into the primary. For simplicity, insert 100 integers into test_table.

$ psql -d clusterdb -U cary -c "CREATE TABLE test_table(x integer)"

CREATE TABLE

$ psql -d clusterdb -U cary -c "INSERT INTO test_table(x) SELECT y FROM generate_series(1, 100) a(y)"

INSERT 0 100

$ psql -d clusterdb -U cary -c "SELECT count(*) from test_table"

count

-------

100

(1 row)

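- Standby setup

  - The asynchronous standby is set up as shown below

  - For a synchronous standby, additionally:

    - add to the primary's postgresql.conf

      - synchronous_commit = off/local/remote_write/remote_apply/on (on is the default)

      - synchronous_standby_names = 'node2'

    - add to primary_conninfo in the standby's postgresql.auto.conf

      - application_name=node2

pg_basebackup -h 127.0.0.1 -U cary -p 5432 -D db-slave1 -P -Xs -R

# -D is the standby's data directory; -h, -U, -p are the primary's connection parameters; -P shows progress; -Xs streams the WAL in parallel while the backup runs; -R creates standby.signal in the standby and writes the connection settings to postgresql.auto.conf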

postgres@db47:/platformData$ ls db-slave1/

backup_label pg_ident.conf pg_stat pg_xact

backup_manifest pg_logical pg_stat_tmp postgresql.auto.conf

base pg_multixact pg_subtrans postgresql.conf

global pg_notify pg_tblspc standby.signal

pg_commit_ts pg_replslot pg_twophase

pg_dynshmem pg_serial PG_VERSION

pg_hba.conf pg_snapshots pg_wal

# First check db-slave1/postgresql.auto.conf: pg_basebackup has already created primary_conninfo, which tells the standby where and how to stream from the primary. Make sure this line exists in postgresql.auto.conf.

postgres@db47:/platformData$ cat db-slave1/postgresql.auto.conf

# Do not edit this file manually!

# It will be overwritten by the ALTER SYSTEM command.

primary_conninfo = 'user=cary passfile=''/var/lib/postgresql/.pgpass'' channel_binding=disable host=127.0.0.1 port=5432 sslmode=disable sslcompression=0 ssl_min_protocol_version=TLSv1.2 gssencmode=disable krbsrvname=postgres target_session_attrs=any'

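# Next check db-slave1/postgresql.conf and update a few parameters: compared with the primary, the port differs and restore_command and archive_cleanup_command are added

# Because db-slave1/postgresql.conf was copied straight from the primary by pg_basebackup, its port must be changed to one different from the primary's (5433 in this example), since both run on the same machine. restore_command and archive_cleanup_command must be filled in so that the standby knows how to fetch archived WAL files for replay. These two parameters used to be defined in recovery.conf; PG12 moved them into postgresql.conf.

############# db-slave/postgresql.conf #############

# connections

listen_addresses = '*'

port = 5433

# archiving: make sure WAL files are archived to a location the standby can reach; %f is replaced by the WAL file name and %p by the WAL file path

archive_mode = on

archive_command = 'cp %p /platformData/pg_arch/%f'

# streaming replication

wal_level = replica # default

max_wal_senders = 10 # default

wal_keep_size = 0 # default

hot_standby = on # default

# other: must be on for the pg_rewind tool to work

wal_log_hints = on # copied from the primary by pg_basebackup

# recovery commands for the standby

restore_command = 'cp /platformData/pg_arch/%f %p'

archive_cleanup_command = 'pg_archivecleanup /platformData/pg_arch/ %r'

# Note that pg_basebackup automatically creates a new file, standby.signal, in the db-slave1 directory; it tells this cluster to run in standby mode.

# Now start the standby: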

$ pg_ctl -D db-slave1 start

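- Verify streaming replication

# Once both the primary and the standby are up and running, ps -ef should show the backend processes that implement replication, namely walsender and walreceiver.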

postgres@db47:/platformData$ ps -ef | grep wal

postgres 45300 45296 0 16:32 ? 00:00:00 postgres: walwriter

postgres 47897 47886 0 16:50 ? 00:00:00 postgres: walreceiver streaming 0/3000148

postgres 47898 45296 0 16:50 ? 00:00:00 postgres: walsender cary 127.0.0.1(22158) streaming 0/3000148

# The replication state can also be checked in detail by querying the primary

postgres@db47:/platformData$ psql -d clusterdb -U cary -c "select * from pg_stat_replication;" -x -p 5432

-[ RECORD 1 ]----+------------------------------

pid | 47898

usesysid | 16386

usename | cary

application_name | walreceiver

client_addr | 127.0.0.1

client_hostname |

client_port | 22158

backend_start | 2021-03-14 16:50:55.378784+08

backend_xmin |

state | streaming

sent_lsn | 0/3000148

write_lsn | 0/3000148

flush_lsn | 0/3000148

replay_lsn | 0/3000148

write_lag |

flush_lag |

replay_lag |

sync_priority | 0

sync_state | async

reply_time | 2021-03-14 16:52:25.548362+08

# Finally, insert more data into the primary and verify that the standby receives it as well.

postgres@db47:/platformData$ psql -d clusterdb -U cary -c "SELECT count(*) from test_table" -p 5433

count

-------

100

(1 row)

postgres@db47:/platformData$ psql -d clusterdb -U cary -c "SELECT count(*) from test_table" -p 5432

count

-------

100

(1 row)

postgres@db47:/platformData$ psql -d clusterdb -U cary -c "INSERT INTO test_table(x) SELECT y FROM generate_series(1, 100) a(y)" -p 5432

INSERT 0 100

postgres@db47:/platformData$ psql -d clusterdb -U cary -c "SELECT count(*) from test_table" -p 5433

count

-------

200

(1 row)

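- Set up a replication slot

The previous steps show how to set up streaming replication between the primary and the standby. However, a standby may stay disconnected for an extended period for some reason, and it may then be unable to resume replication if WAL files it has not yet received have been recycled or removed on the primary (retention is controlled by wal_keep_segments, renamed wal_keep_size in PG13).

Replication slots ensure that the primary retains enough WAL segments for all standbys, and that it does not remove rows that could cause recovery conflicts on the standbys.

Let's create a replication slot named slave on the primary: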

postgres@db47:/platformData$ psql -d clusterdb -U cary -c "select * from pg_create_physical_replication_slot('slave')" -p 5432

slot_name | lsn

-----------+-----

slave |

(1 row)

postgres@db47:/platformData$ psql -d clusterdb -U cary -c "select * from pg_replication_slots" -x -p 5432

-[ RECORD 1 ]-------+---------

slot_name | slave

plugin |

slot_type | physical

datoid |

database |

temporary | f

active | f

active_pid |

xmin |

catalog_xmin |

restart_lsn |

confirmed_flush_lsn |

wal_status |

safe_wal_size |

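# We have just created the replication slot slave on the primary; it is not active yet (active = f)

# Now edit the standby's postgresql.conf so that it connects to the primary's replication slot

############# db-slave/postgresql.conf #############

primary_slot_name = 'slave'

# restart the standby for this to take effect

pg_ctl restart -D db-slave1/

# If everything is fine, the replication slot on the primary should now be in the active state.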

postgres@db47:/platformData$ psql -d clusterdb -U cary -c "select * from pg_replication_slots" -x -p 5432

-[ RECORD 1 ]-------+---------

slot_name | slave

plugin |

slot_type | physical

datoid |

database |

temporary | f

active | f

active_pid |

xmin |

catalog_xmin |

restart_lsn |

confirmed_flush_lsn |

wal_status |

safe_wal_size |

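When 5434 goes down, 5435 can be promoted to synchronous standby

Multiple synchronous standby servers in pg

Example 1 of synchronous_standby_names for priority-based multiple synchronous standbys:

synchronous_standby_names = 'db5434, db5435'

In this example db5434 is the synchronous standby and db5435 is a potential synchronous standby; when db5434 becomes unavailable, db5435 takes over as the synchronous standby.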
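The bare priority list above is shorthand for the explicit FIRST 1 (...) form. A small sketch that builds the explicit setting: sync_standby_setting is a hypothetical helper name, and the standby names are assumed to match the application_name each standby sets in its primary_conninfo.

```shell
#!/usr/bin/env bash
# Sketch: build a priority-based synchronous_standby_names line.
# sync_standby_setting is a hypothetical helper: first argument is the number of
# synchronous standbys, the remaining arguments are standby names in priority order.
sync_standby_setting() {
  local num_sync="$1"; shift
  local names
  names=$(printf '%s, ' "$@")
  names="${names%, }"   # strip the trailing separator
  printf "synchronous_standby_names = 'FIRST %s (%s)'\n" "$num_sync" "$names"
}

sync_standby_setting 1 db5434 db5435
# prints: synchronous_standby_names = 'FIRST 1 (db5434, db5435)'
```

The printed line can be appended to the primary's postgresql.conf and applied with pg_ctl reload; a restart is not needed for this parameter.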

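Primary/standby switchover

- Stop the old primary to simulate a primary failure

- Promote the old standby

- On the old primary, create standby.signal and set primary_conninfo, then restart it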
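The three steps above can be sketched as follows for PG12 and later. This is an illustration under assumptions, not a tested failover procedure: demote_to_standby is a hypothetical helper name, and the data directories, port, and user in the commented usage are the ones from the streaming-replication example earlier in this article.

```shell
#!/usr/bin/env bash
# Sketch: turn a stopped former primary into a standby of the new primary (PG12+).
# demote_to_standby is a hypothetical helper name.
demote_to_standby() {
  local pgdata="$1" conninfo="$2"
  # standby.signal makes the server start in standby mode
  touch "$pgdata/standby.signal"
  # when a parameter appears more than once, the last entry wins, so appending is enough
  printf "primary_conninfo = '%s'\n" "$conninfo" >> "$pgdata/postgresql.auto.conf"
}

# Usage, following the three steps above (paths and ports are assumptions):
#   pg_ctl -D db-master stop -m fast      # 1. stop the old primary
#   pg_ctl -D db-slave1 promote           # 2. promote the old standby
#   demote_to_standby db-master 'host=127.0.0.1 port=5433 user=cary'
#   pg_ctl -D db-master start             # 3. the old primary rejoins as a standby
```

If the old primary accepted writes that never reached the promoted standby, it may first need pg_rewind before it can follow the new primary, which is why wal_log_hints = on was set earlier.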

Delayed WAL apply

pgsql physical replication (building a standby, swapping roles, promotion)

pg delayed standby (worked in my own test)

echo "recovery_min_apply_delay = 6h" >> /opt/data5556/postgresql.conf

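Practice: application management

Object management

Goal: put hot tables on SSD by creating a tablespace on the SSD

As the superuser, create the application user appuser with the createdb and login attributes; create a tablespace named exam owned by appuser, pointing to /exam (create the directory and manage its permissions yourself if it does not exist); switch to appuser and create the app database (it must live in the exam tablespace); then create the table app (id int) in the app database.

Logging in to pg, creating users and databases, and granting privileges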

postgres=# create user appuser;

CREATE ROLE

# grant role attributes

postgres=# alter role appuser createdb;

ALTER ROLE

postgres=# alter role appuser login;

ALTER ROLE

postgres=# \du

                                   List of roles
 Role name |                         Attributes                         | Member of
-----------+------------------------------------------------------------+-----------
 appuser   | Create DB                                                  | {}
 postgres  | Superuser, Create role, Create DB, Replication, Bypass RLS | {}
 repuser   | Replication                                               +| {}
           | 5 connections                                              |

# create the tablespace

root@db47:~# mkdir /exam

root@db47:~# chown postgres:postgres /exam/

postgres=# create tablespace exam location '/exam';

CREATE TABLESPACE

# grant privileges on the tablespace to the user

postgres=# grant all PRIVILEGES ON tablespace exam to appuser;

GRANT

# log in

postgres@db47:/opt$ psql -p5555 -Uappuser

psql (13.1)

Type "help" for help.

# create the database and the table

postgres=> create database app tablespace exam;

CREATE DATABASE

postgres=> \c app;

You are now connected to database "app" as user "appuser".

app=> create table app (id int);

CREATE TABLE

Logical import

Use psql to import exam.sql into the app database (note: the SQL file contains the student and exam tables).

SET statement_timeout = 0;

SET lock_timeout = 0;

SET idle_in_transaction_session_timeout = 0;

SET client_encoding = 'UTF8';

SET standard_conforming_strings = on;

SET check_function_bodies = false;

SET client_min_messages = warning;

SET row_security = off;

CREATE EXTENSION IF NOT EXISTS plpgsql WITH SCHEMA pg_catalog;

COMMENT ON EXTENSION plpgsql IS 'PL/pgSQL procedural language';

SET search_path = public, pg_catalog;

SET default_tablespace = '';

SET default_with_oids = false;

CREATE TABLE exam (

name character varying(10),

subject character varying(10),

score integer

);

ALTER TABLE exam OWNER TO appuser;

CREATE TABLE student (

id integer,

name character varying(10),

age integer

);

ALTER TABLE student OWNER TO appuser;

INSERT INTO exam VALUES ('张三', '英语', 78);

INSERT INTO exam VALUES ('张三', '语文', 68);

INSERT INTO exam VALUES ('张三', '数学', 99);

INSERT INTO exam VALUES ('李四', '语文', 89);

INSERT INTO exam VALUES ('李四', '数学', NULL);

INSERT INTO exam VALUES ('李四', '英语', 57);

INSERT INTO exam VALUES ('王五', '语文', 55);

INSERT INTO exam VALUES ('王五', '数学', 77);

INSERT INTO exam VALUES ('赵六', '语文', 79);

INSERT INTO exam VALUES ('赵六', '数学', 48);

INSERT INTO exam VALUES ('赵六', '英语', 27);

INSERT INTO student VALUES (1, '张三', 14);

INSERT INTO student VALUES (2, '李四', 14);

INSERT INTO student VALUES (3, '王五', 15);

INSERT INTO student VALUES (4, '赵六', 16);

INSERT INTO student VALUES (5, '吴七', 15);

root@db47:/platformData# psql -f exam.sql -p5555

SET

SET

SET

SET

SET

SET

SET

SET

CREATE EXTENSION

COMMENT

SET

SET

SET

CREATE TABLE

ALTER TABLE

CREATE TABLE

ALTER TABLE

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

INSERT 0 1

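Logical export

Use pg_dump to export a SQL file named answer.sql. (The -f option names the output file. A plain-text dump, -Fp, is restored with psql; the other formats are restored with pg_restore.)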

root@db47:/platformData# pg_dump -Fp -p5555 -fanswer.sql

root@db47:/platformData# tail answer.sql

5 2021-03-14 18:08:28.069683+08

6 2021-03-14 19:45:24.094873+08

7 2021-03-14 19:47:01.302179+08

\.

--

-- PostgreSQL database dump complete

--

物理备份

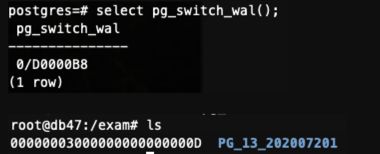

配置postgresql,开启归档所需相关参数(wal_level、archive_mode、archive_command),归档目录为/exam,重启数据库使参数生效,然后切换一次wal日志(pg_switch_wal),在/exam中查看是否归档成功。使用pg_basebackup对数据库做一次基础备份(目录为/backup,请注意目录权限,使用-Fp格式,并将exam表空间备份到/backup/exam,选项提示-D -Fp -Xs -v -h -U -T)。

mkdir -p /exam

chown -R postgres:postgres /exam

wal_level = replica

archive_mode = on

archive_command = 'cp %p /exam/%f'

postgres@db47:~$ cd /platformData/

postgres@db47:/platformData$ pg_ctl restart -D data5555/

# create the directory

root@db47:/exam# mkdir -p /backup

root@db47:/exam# chown -R postgres:postgres /backup

# run the backup

postgres@db47:/platformData$ pg_basebackup -D /backup -Fp -Xs -v -h127.0.0.1 -p5555 -Upostgres --tablespace-mapping=/exam=/backup/exam

pg_basebackup: initiating base backup, waiting for checkpoint to complete

pg_basebackup: checkpoint completed

pg_basebackup: write-ahead log start point: 0/F000028 on timeline 3

pg_basebackup: starting background WAL receiver

pg_basebackup: created temporary replication slot "pg_basebackup_109279"

pg_basebackup: write-ahead log end point: 0/F000100

pg_basebackup: waiting for background process to finish streaming ...

pg_basebackup: syncing data to disk ...

pg_basebackup: renaming backup_manifest.tmp to backup_manifest

pg_basebackup: base backup completed

# check the result

root@db47:/exam# cd /backup/

root@db47:/backup# ls

backup_label pg_dynshmem pg_serial PG_VERSION

backup_manifest pg_hba.conf pg_snapshots pg_wal

base pg_ident.conf pg_stat pg_xact

exam pg_logical pg_stat_tmp postgresql.auto.conf

global pg_multixact pg_subtrans postgresql.conf

log pg_notify pg_tblspc

pg_commit_ts pg_replslot pg_twophase

root@db47:/backup# ls exam

PG_13_202007201

PITR recovery

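As a database superuser, drop the exam table in the app database and note the current timestamp (current_timestamp). Switch the WAL once (pg_switch_wal), shut down the database, mv the data directory to data.bak, and put the base backup in its place. In the data directory (the base backup), configure recovery.conf (restore_command, recovery_target_time, recovery_target_timeline) so that the dropped table is recovered. Start the database and check whether the dropped exam table is back.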

# The previous step already ran pg_basebackup, so a base backup exists in /backup

# Now drop the table

postgres=# drop table exam;

DROP TABLE

# Switch the WAL so the current segment is closed and archived

postgres=# select pg_switch_wal();

pg_switch_wal

---------------

0/10005470

(1 row)

# Check the current time and work back to a point before the failure

postgres=# select now();

now

-------------------------------

2021-03-15 09:14:19.013383+08

(1 row)

Assuming the failure happened 5 minutes earlier, the recovery target is 2021-03-15 09:09:19.013383+08

# Check pg_wal: 000000030000000000000010 is the segment that was just closed and archived, 000000030000000000000011 is the WAL segment currently being written

postgres@db47:/platformData/data5555$ ll -rt pg_wal/

total 81M

-rw------- 1 postgres postgres 41 Mar 14 18:05 00000002.history

-rw------- 1 postgres postgres 83 Mar 14 18:07 00000003.history

-rw------- 1 postgres postgres 16M Mar 15 00:04 000000030000000000000012

-rw------- 1 postgres postgres 16M Mar 15 00:09 000000030000000000000013

-rw------- 1 postgres postgres 16M Mar 15 00:09 000000030000000000000014

-rw------- 1 postgres postgres 337 Mar 15 00:09 00000003000000000000000F.00000028.backup

-rw------- 1 postgres postgres 16M Mar 15 09:12 000000030000000000000010

drwx------ 2 postgres postgres 4.0K Mar 15 09:12 archive_status

-rw------- 1 postgres postgres 16M Mar 15 09:12 000000030000000000000011

# Check the archive: 000000030000000000000010 has indeed been archived

postgres@db47:/platformData/data5555$ grep archive_command postgresql.conf

archive_command = 'cp %p /exam/%f' # command to use to archive a logfile segment

postgres@db47:/platformData/data5555$ ll -rt /exam/

total 65M

drwxr-x--- 3 postgres postgres 4.0K Mar 14 20:12 PG_13_202007201

-rw------- 1 postgres postgres 16M Mar 15 00:04 00000003000000000000000D

-rw------- 1 postgres postgres 16M Mar 15 00:09 00000003000000000000000E

-rw------- 1 postgres postgres 16M Mar 15 00:09 00000003000000000000000F

-rw------- 1 postgres postgres 337 Mar 15 00:09 00000003000000000000000F.00000028.backup

-rw------- 1 postgres postgres 16M Mar 15 09:12 000000030000000000000010
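The segment names above follow directly from the timeline ID and the LSN. The sketch below derives the file name for a given LSN, assuming the default 16 MB segment size; `wal_file_name` is a hypothetical helper name. On a running server the built-in SQL function pg_walfile_name() gives the same answer.

```shell
# Hypothetical helper: derive the WAL segment file name from a timeline ID
# and an LSN of the form "hi/lo" (both halves hexadecimal).
# Assumes the default 16 MB segment size.
# Usage: wal_file_name <timeline-id> <lsn>
wal_file_name() {
    tli=$1
    lsn=$2
    hi=${lsn%%/*}   # part before the slash
    lo=${lsn##*/}   # part after the slash

    seg_size=$((16 * 1024 * 1024))
    segs_per_id=$((0x100000000 / seg_size))   # 256 segments per 4 GB

    # Absolute segment number since the start of the WAL stream.
    segno=$(( (0x$hi * 0x100000000 + 0x$lo) / seg_size ))

    # File name = timeline, high part, low part, each as 8 hex digits.
    printf '%08X%08X%08X\n' "$tli" $((segno / segs_per_id)) $((segno % segs_per_id))
}

# The LSN returned by pg_switch_wal() earlier, on timeline 3:
wal_file_name 3 0/10005470
```

For the LSN 0/10005470 returned by pg_switch_wal() this prints 000000030000000000000010, the segment that shows up in /exam.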

# Shut down the database

postgres@db47:/platformData$ pg_ctl stop -D data5555/

waiting for server to shut down.... done

server stopped

# Move the old data directory aside

postgres@db47:/platformData$ mv data5555/ data5555.bak

postgres@db47:/platformData$ ls

data5555.bak exam.sql pg_arch pgnewhome pgnewdata pgnewsource pgnewtablespace restart.sh start.sh

# Copy the pg_basebackup output back in as the base data

postgres@db47:/platformData$ mkdir data5555

postgres@db47:/platformData$ chmod 0750 data5555

postgres@db47:/platformData$ ls /backup

backup_label exam pg_commit_ts pg_ident.conf pg_notify pg_snapshots pg_subtrans PG_VERSION postgresql.auto.conf

backup_manifest global pg_dynshmem pg_logical pg_replslot pg_stat pg_tblspc pg_wal postgresql.conf

base log pg_hba.conf pg_multixact pg_serial pg_stat_tmp pg_twophase pg_xact

postgres@db47:/platformData$ cp -r /backup/* data5555

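# Set restore_command, recovery_target_time and recovery_target_timeline in postgresql.conf. On PostgreSQL 12 and later there is no recovery.conf; an empty recovery.signal file in the data directory is what triggers targeted recovery.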

archive_command = 'cp %p /exam/%f'

restore_command = 'cp /exam/%f %p'

recovery_target_time = '2021-03-15 09:09:19.013383+08'

recovery_target_timeline = 'latest' # 'current', 'latest', or timeline ID
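The settings above can be applied to a restored data directory in one step. The sketch below is a minimal version under stated assumptions: `prepare_pitr` is a hypothetical helper name, and it assumes PostgreSQL 12 or later, where recovery parameters live in postgresql.conf and recovery.signal requests targeted recovery.

```shell
# Hypothetical helper: append PITR settings to postgresql.conf in a restored
# data directory and create recovery.signal so the server enters targeted
# recovery on its next start (PostgreSQL 12 and later).
# Usage: prepare_pitr <data-dir> <archive-dir> <target-time>
prepare_pitr() {
    data_dir=$1
    archive_dir=$2
    target_time=$3

    cat >> "$data_dir/postgresql.conf" <<EOF
restore_command = 'cp $archive_dir/%f %p'
recovery_target_time = '$target_time'
recovery_target_timeline = 'latest'
EOF

    # An empty file is enough; the server removes it once recovery finishes.
    touch "$data_dir/recovery.signal"
}

# Example with the paths from this walkthrough:
#   prepare_pitr /platformData/data5555 /exam '2021-03-15 09:09:19.013383+08'
```

When the target is reached, recovery_target_action defaults to pause, so the server may stay read-only until pg_wal_replay_resume() is called.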

# Start the database and see whether it recovers

postgres@db47:/platformData$ pg_ctl start -D data5555

waiting for server to start....2021-03-15 09:29:55.294 CST [184823] LOG: starting PostgreSQL 13.1 on x86_64-pc-linux-gnu, compiled by gcc (Ubuntu 5.4.0-6ubuntu1~16.04.11) 5.4.0 20160609, 64-bit

2021-03-15 09:29:55.294 CST [184823] LOG: listening on IPv6 address "::1", port 5555

2021-03-15 09:29:55.294 CST [184823] LOG: listening on IPv4 address "127.0.0.1", port 5555

2021-03-15 09:29:55.373 CST [184823] LOG: listening on Unix socket "/tmp/.s.PGSQL.5555"

2021-03-15 09:29:55.440 CST [184824] LOG: database system was interrupted; last known up at 2021-03-15 00:09:53 CST

2021-03-15 09:29:55.816 CST [184824] LOG: redo starts at 0/F000028

2021-03-15 09:29:55.848 CST [184824] LOG: consistent recovery state reached at 0/F000100

2021-03-15 09:29:55.848 CST [184824] LOG: redo done at 0/F000100

.2021-03-15 09:29:56.268 CST [184823] LOG: database system is ready to accept connections

done

server started

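# The exam table is indeed back (the log above shows redo ending at 0/F000100, the end of the base backup, so in this run the table comes from the backup itself rather than from replayed archive WAL)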

root@db47:~# psql -p5555

psql (13.1)

Type "help" for help.

postgres=# \d

List of relations

Schema | Name | Type | Owner

--------+---------+-------+----------

public | exam | table | appuser

public | student | table | appuser

public | t | table | postgres

(3 rows)

postgres=# select * from exam;

name | subject | score

------+---------+-------

张三 | 英语 | 78

张三 | 语文 | 68

张三 | 数学 | 99

李四 | 语文 | 89

李四 | 数学 |

李四 | 英语 | 57

王五 | 语文 | 55

王五 | 数学 | 77

赵六 | 语文 | 79

赵六 | 数学 | 48

赵六 | 英语 | 27

(11 rows)

Streaming replication, primary (host1) configuration:

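Create a replication role rep with login and replication privileges and password 123. In pg_hba.conf, allow the rep user to make streaming replication requests from the relevant network segment. Configure postgresql.conf (listen_addresses, max_wal_senders, wal_level, wal_keep_segments) and restart the database.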

postgres=# create user rep;

CREATE ROLE

postgres=# alter user rep login;

ALTER ROLE

postgres=# alter user rep replication;

ALTER ROLE

postgres=# alter user rep with password '123';

ALTER ROLE

# pg_hba.conf entry

host replication rep 127.0.0.1/32 md5

# postgresql.conf settings (wal_keep_segments was replaced by wal_keep_size in PostgreSQL 13)

listen_addresses = '*'

max_wal_senders = 10 # already the default

wal_level = replica # already the default

wal_keep_size = 100 # in megabytes; 0 disables

# Restart the primary for the changes to take effect

postgres@db47:/platformData$ pg_ctl restart -D data5555

Streaming replication, standby (host2) configuration:

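Create a data directory. On the standby, use pg_basebackup to pull the primary's data as a base backup into the standby's data directory, configure recovery.conf in that directory (recovery_target_timeline, standby_mode, primary_conninfo), and start the standby. Check that the streaming replication processes exist, insert a row into the app table on the primary, and verify on the standby that the row is there.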

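- Lessons learned:

Avoid tablespaces here if you can; otherwise various problems show up. For example, the standby below keeps failing to find files and goes into recovery mode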

waiting for server to start....2021-03-15 10:06:37.486 CST [190236] LOG: starting PostgreSQL 13.1 on x86_64-pc-linux-gnu, compiled by gcc (Ubuntu 5.4.0-6ubuntu1~16.04.11) 5.4.0 20160609, 64-bit

2021-03-15 10:06:37.487 CST [190236] LOG: listening on IPv4 address "0.0.0.0", port 5556

2021-03-15 10:06:37.487 CST [190236] LOG: listening on IPv6 address "::", port 5556

2021-03-15 10:06:37.562 CST [190236] LOG: listening on Unix socket "/tmp/.s.PGSQL.5556"

2021-03-15 10:06:37.640 CST [190237] LOG: database system was shut down in recovery at 2021-03-15 10:06:28 CST

cp: cannot stat '/exam/00000004.history': No such file or directory

2021-03-15 10:06:37.644 CST [190237] LOG: entering standby mode

cp: cannot stat '/exam/00000003.history': No such file or directory

2021-03-15 10:06:37.676 CST [190237] LOG: restored log file "000000030000000000000015" from archive

2021-03-15 10:06:37.908 CST [190237] LOG: redo starts at 0/15000060

2021-03-15 10:06:37.938 CST [190237] LOG: restored log file "000000030000000000000016" from archive

2021-03-15 10:06:38.174 CST [190237] LOG: consistent recovery state reached at 0/16000318

2021-03-15 10:06:38.174 CST [190237] LOG: recovery stopping before commit of transaction 529, time 2021-03-15 09:55:04.807326+08

2021-03-15 10:06:38.174 CST [190237] LOG: pausing at the end of recovery

2021-03-15 10:06:38.174 CST [190237] HINT: Execute pg_wal_replay_resume() to promote.

2021-03-15 10:06:38.174 CST [190236] LOG: database system is ready to accept read only connections

- Walkthrough

# Take a base backup with pg_basebackup as the standby's data directory (-R writes standby.signal and primary_conninfo)

postgres@db47:/platformData$ pg_basebackup -Fp -h127.0.0.1 -p5555 -Urep -D data5556 -P -v -Xs -R

# The data is copied over automatically

root@db47:/platformData# ls data5556

backup_label exam pg_dynshmem pg_multixact pg_snapshots pg_tblspc pg_xact

backup_label.old global pg_hba.conf pg_notify pg_stat pg_twophase postgresql.auto.conf

backup_manifest log pg_ident.conf pg_replslot pg_stat_tmp PG_VERSION postgresql.conf

base pg_commit_ts pg_logical pg_serial pg_subtrans pg_wal standby.signal

# The configuration is written automatically

root@db47:/platformData# grep recovery_target_timeline data5556/postgresql.conf

recovery_target_timeline = 'latest' # 'current', 'latest', or timeline ID

root@db47:/platformData# grep primary_conninfo data5556/postgresql.auto.conf

primary_conninfo = 'user=rep passfile=''/var/lib/postgresql/.pgpass'' channel_binding=disable host=127.0.0.1 port=5555 sslmode=disable sslcompression=0 ssl_min_protocol_version=TLSv1.2 gssencmode=disable krbsrvname=postgres target_session_attrs=any'

# The port number has to be changed

root@db47:/platformData# grep port data5556/postgresql.conf

port = 5456 # (change requires restart)

wal_log_hints = on

# Then start the standby

postgres@db47:/platformData$ pg_ctl start -D data5556

waiting for server to start....2021-03-15 09:49:00.864 CST [187647] LOG: starting PostgreSQL 13.1 on x86_64-pc-linux-gnu, compiled by gcc (Ubuntu 5.4.0-6ubuntu1~16.04.11) 5.4.0 20160609, 64-bit

2021-03-15 09:49:00.864 CST [187647] LOG: listening on IPv4 address "0.0.0.0", port 5456

2021-03-15 09:49:00.864 CST [187647] LOG: listening on IPv6 address "::", port 5456

2021-03-15 09:49:00.946 CST [187647] LOG: listening on Unix socket "/tmp/.s.PGSQL.5456"

2021-03-15 09:49:00.985 CST [187647] LOG: could not open directory "pg_tblspc/16392/PG_13_202007201": No such file or directory

2021-03-15 09:49:01.027 CST [187648] LOG: database system was interrupted; last known up at 2021-03-15 09:45:45 CST

cp: cannot stat '/exam/00000004.history': No such file or directory

2021-03-15 09:49:01.291 CST [187648] LOG: entering standby mode

cp: cannot stat '/exam/00000003.history': No such file or directory

2021-03-15 09:49:01.323 CST [187648] LOG: restored log file "000000030000000000000014" from archive

2021-03-15 09:49:01.543 CST [187648] LOG: could not open directory "pg_tblspc/16392/PG_13_202007201": No such file or directory

2021-03-15 09:49:01.594 CST [187648] LOG: could not open directory "pg_tblspc/16392/PG_13_202007201": No such file or directory

2021-03-15 09:49:01.595 CST [187648] LOG: redo starts at 0/14000028

2021-03-15 09:49:01.627 CST [187648] LOG: consistent recovery state reached at 0/14000100

2021-03-15 09:49:01.627 CST [187647] LOG: database system is ready to accept read only connections

cp: cannot stat '/exam/000000030000000000000015': No such file or directory

done

server started

postgres@db47:/platformData$ 2021-03-15 09:49:01.674 CST [187670] LOG: started streaming WAL from primary at 0/15000000 on timeline 3

# Primary, step 1

postgres=# \d

List of relations

Schema | Name | Type | Owner

--------+---------+-------+----------

public | exam | table | appuser

public | student | table | appuser

public | t | table | postgres

postgres=# show port;

port

------

5555

(1 row)

# Standby, step 1

postgres=# \d

List of relations

Schema | Name | Type | Owner

--------+---------+-------+----------

public | exam | table | appuser

public | student | table | appuser

public | t | table | postgres

postgres=# show port;

port

------

5556

(1 row)

# Primary, step 2

postgres=# select count(*) from t;

count

-------

8

(1 row)

postgres=# insert into t values(101, now());

INSERT 0 1

# Standby, step 2

postgres=# select count(*) from t;

count

-------

8

(1 row)

# Primary, step 3

postgres=# select count(*) from t;

count

-------

8

(1 row)

postgres=# select * from t;

id | info

-----+-------------------------------

1 | 2021-03-14 17:57:43.71824+08

2 | 2021-03-14 17:57:43.918607+08

3 | 2021-03-14 18:03:23.617959+08

100 | 2021-03-14 18:06:14.945316+08

5 | 2021-03-14 18:07:59.478954+08

5 | 2021-03-14 18:08:28.069683+08

6 | 2021-03-14 19:45:24.094873+08

7 | 2021-03-14 19:47:01.302179+08

(8 rows)

# Standby, step 3

postgres=# select count(*) from t;

count

-------

8

(1 row)

Primary/standby failover:

Simulate a primary failure (shut it down), promote the standby, and turn it into a standalone read-write instance.

# Simulate a primary failure (shut it down)

postgres@db47:/platformData$ pg_ctl stop -D data5555

waiting for server to shut down.... done

server stopped

# Promote the standby so it becomes a standalone read-write instance

postgres=# show port;

port

------

5556

(1 row)

postgres=# select pg_promote();

pg_promote

------------

t

(1 row)

# The former standby's log shows that the promotion succeeded

2021-03-16 13:15:30.169 CST [190237] LOG: received promote request

2021-03-16 13:15:30.169 CST [190237] LOG: redo done at 0/16000318

cp: cannot stat '/exam/00000004.history': No such file or directory

2021-03-16 13:15:30.288 CST [190237] LOG: selected new timeline ID: 4

2021-03-16 13:15:30.537 CST [190237] LOG: archive recovery complete

cp: cannot stat '/exam/00000003.history': No such file or directory

2021-03-16 13:15:30.689 CST [190236] LOG: database system is ready to accept connections

# The former standby, now standalone, can indeed accept writes

postgres=# \d

List of relations

Schema | Name | Type | Owner

--------+---------+-------+----------

public | exam | table | appuser

public | student | table | appuser

public | t | table | postgres

(3 rows)

postgres=# select * from t;

id | info

-----+-------------------------------

1 | 2021-03-14 17:57:43.71824+08

2 | 2021-03-14 17:57:43.918607+08

3 | 2021-03-14 18:03:23.617959+08

100 | 2021-03-14 18:06:14.945316+08

5 | 2021-03-14 18:07:59.478954+08

5 | 2021-03-14 18:08:28.069683+08

6 | 2021-03-14 19:45:24.094873+08

7 | 2021-03-14 19:47:01.302179+08

(8 rows)

postgres=# insert into t values(200, now());

INSERT 0 1
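Besides the log and a test write, the promotion can be confirmed from the file system: on PostgreSQL 12 and later the server removes standby.signal from the data directory once it has been promoted. The sketch below checks for that file; `is_standby` is a hypothetical helper name. From SQL, `SELECT pg_is_in_recovery();` answers the same question on a running server.

```shell
# Hypothetical helper: report whether a data directory is still configured
# as a standby by looking for standby.signal (PostgreSQL 12 and later).
# Usage: is_standby <data-dir>
is_standby() {
    [ -f "$1/standby.signal" ]
}

# Example with the directory from this walkthrough:
#   if is_standby /platformData/data5556; then echo standby; else echo primary; fi
```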

Synchronous streaming replication:

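Set synchronous_standby_names in the configuration file in the original primary's (host1) data directory and restart the database. Configure recovery.conf on the standby (host2) and restart it. Check the streaming replication processes and insert test data. Then simulate a standby crash and insert a row into the app table in the app database on the primary to see whether the insert hangs.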

# The setup is the same as above; set synchronous_standby_names on the primary and application_name on the standby. Assume synchronous streaming replication is already running
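The two settings mentioned above have to agree on one name. The sketch below prints a matching pair; `sync_rep_settings` and the standby name `standby1` are hypothetical, and the host, port and user are taken from this walkthrough as assumptions about the setup.

```shell
# Hypothetical helper: print the two settings that pair a primary with one
# synchronous standby. The standby name is a label of your choice; it only
# has to match on both sides.
# Usage: sync_rep_settings <standby-name> <primary-host> <primary-port> <user>
sync_rep_settings() {
    name=$1
    host=$2
    port=$3
    user=$4

    echo "# primary: postgresql.conf"
    echo "synchronous_standby_names = '$name'"
    echo "# standby: postgresql.conf or postgresql.auto.conf"
    echo "primary_conninfo = 'host=$host port=$port user=$user application_name=$name'"
}

sync_rep_settings standby1 127.0.0.1 5432 rep
```

Once both sides are running, `SELECT application_name, sync_state FROM pg_stat_replication;` on the primary should report sync for that standby.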

# Simulate a standby crash (stop the standby manually)

postgres@db47:/platformData$ pg_ctl stop -D data5433

waiting for server to shut down.... done

server stopped

2021-03-16 14:37:50.822 CST [25244] LOG: received fast shutdown request

2021-03-16 14:37:50.872 CST [25244] LOG: aborting any active transactions

2021-03-16 14:37:50.873 CST [25285] FATAL: terminating connection due to administrator command

2021-03-16 14:37:50.873 CST [25249] FATAL: terminating walreceiver process due to administrator command

2021-03-16 14:37:50.874 CST [25246] LOG: shutting down

2021-03-16 14:37:51.203 CST [25244] LOG: database system is shut down

# The primary can still be queried

postgres=# show port;

port

------

5432

(1 row)

postgres=# select * from t;

id

----

1

2

3

4

5

6

7

8

9

10

(10 rows)

# Writes hang

# Pressing Ctrl+C in psql cancels only the wait for the standby; the transaction has already committed locally on the primary

postgres=# insert into t select generate_series(1,10);

^CCancel request sent

WARNING: canceling wait for synchronous replication due to user request

DETAIL: The transaction has already committed locally, but might not have been replicated to the standby.

INSERT 0 10

postgres=# delete from t;

^CCancel request sent

WARNING: canceling wait for synchronous replication due to user request

DETAIL: The transaction has already committed locally, but might not have been replicated to the standby.

DELETE 20

Logical replication, publish/subscribe configuration (as superuser):

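Turn the streaming replication pair above back into two standalone instances and set wal_level in the configuration file. On the publisher, create the test table (id int, name text) in the app database and create publication pub for it. On the subscriber, create the test table (id int, name text) in the app database, then create subscription sub (connection info: host, port, dbname). Insert a row into test on the publisher, run a refresh on the subscriber, and check that the data was replicated.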

The key setting is wal_level = logical

# Turn the streaming replication pair above back into two standalone instances

postgres@db47:/platformData$ initdb -D data5432

postgres@db47:/platformData$ initdb -D data5433

# Configuration file settings

listen_addresses = '*'

port = 5432 # 5433 on the second instance

archive_mode = on

archive_command = 'cp %p /platformData/pg_arch/%f'

wal_level = logical

max_wal_senders = 10 # already the default

wal_keep_size = 0 # already the default

hot_standby = on # already the default

wal_log_hints = on

# Start both instances

pg_ctl start -D data5432

pg_ctl start -D data5433

# On the publisher, create the test table (id int, name text) in the app database and create publication pub for it

postgres@db47:/platformData/data5432$ psql

psql (13.1)

Type "help" for help.

postgres=# show port;

port

------

5432

(1 row)

postgres=# create database app;

CREATE DATABASE

postgres=# \c app;

You are now connected to database "app" as user "postgres".

app=# create table test(id int, name text);

CREATE TABLE

app=# create publication pub for table test;

CREATE PUBLICATION

# On the subscriber, create the test table (id int, name text) in the app database, then create subscription sub (connection info: host, port, dbname)

postgres@db47:/platformData/data5433$ psql -p 5433

psql (13.1)

Type "help" for help.

postgres=# show port;

port

------

5433

(1 row)

postgres=# create database app;

CREATE DATABASE

postgres=# \c app;

You are now connected to database "app" as user "postgres".

app=# create table test(id int, name text);

CREATE TABLE

app=# CREATE SUBSCRIPTION sub CONNECTION 'host=127.0.0.1 port=5432 dbname=app user=postgres password=postgres' PUBLICATION pub;

NOTICE: created replication slot "sub" on publisher

CREATE SUBSCRIPTION

# Publisher, before replication

app=# show port;

port

------

5432

(1 row)

app=# select * from test;

id | name

----+------

(0 rows)

# Subscriber, before replication

app=# show port;

port

------

5433

(1 row)

app=# select * from test;

id | name

----+------

(0 rows)

# Publisher, after the insert

app=# show port;

port

------

5432

(1 row)

app=# insert into test select generate_series(1,5);

INSERT 0 5

app=# select * from test;

id | name

----+------

1 |

2 |

3 |

4 |

5 |

(5 rows)

# Subscriber, after replication

app=# show port;

port

------

5433

(1 row)

app=# select * from test;

id | name

----+------

1 |

2 |

3 |

4 |

5 |

(5 rows)
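The exercise also asks for a refresh on the subscriber. Row changes to a table that is already subscribed arrive without it, as the output above shows; ALTER SUBSCRIPTION ... REFRESH PUBLICATION matters when tables have been added to the publication after the subscription was created. A minimal sketch that emits the statements for piping into psql follows; `refresh_subscription_sql` is a hypothetical helper name.

```shell
# Hypothetical helper: emit the SQL for the "refresh" step on the subscriber,
# followed by a quick check that the subscription is enabled.
# Usage: refresh_subscription_sql <subscription-name> | psql -p 5433 -d app
refresh_subscription_sql() {
    sub=$1
    cat <<EOF
ALTER SUBSCRIPTION $sub REFRESH PUBLICATION;
SELECT subname, subenabled FROM pg_subscription WHERE subname = '$sub';
EOF
}

refresh_subscription_sql sub
```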

Practice: SQL exercises

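- The app database currently holds two tables, student and exam, with the data below. If they are missing, re-import them from exam.sql. The SQL exercises do not cover privileges or object ownership for now, so you can import as a superuser into any database; all that matters is that the data below is present.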

app=# \i /platformData/exam.sql

app=# \d

List of relations

Schema | Name | Type | Owner

--------+---------+-------+----------

public | exam | table | postgres

public | student | table | postgres

app=# select * from student ;

id | name | age

----+------+-----

1 | 张三 | 14

2 | 李四 | 14

3 | 王五 | 15

4 | 赵六 | 16

5 | 吴七 | 15

(5 rows)

app=# select * from exam;

name | subject | score

------+---------+-------

张三 | 英语 | 78

张三 | 语文 | 68

张三 | 数学 | 99

李四 | 语文 | 89

李四 | 数学 |

李四 | 英语 | 57

王五 | 语文 | 55

王五 | 数学 | 77

赵六 | 语文 | 79

赵六 | 数学 | 48

赵六 | 英语 | 27

(11 rows)

- Query the highest and the average score for each subject in the exam table (result columns: subject exam.subject, highest score maxscore, average score avgscore).

SELECT subject, max(score) AS maxscore, avg(score) AS avgscore

FROM exam

GROUP BY subject;

+-------+--------+-------------------+

|subject|maxscore|avgscore |

+-------+--------+-------------------+

|语文 |89 |72.75 |

|英语 |78 |54 |

|数学 |99 |74.6666666666666667|

+-------+--------+-------------------+

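- Query the students whose English (英语) score is above the English average (result columns: student id, student name, student age, English score).

Reference answer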

SELECT s.id, s.name, s.age, e.score

FROM student s

INNER JOIN exam e ON s.name = e.name

WHERE e.subject = '英语'

AND e.score > (SELECT avg(score) FROM exam WHERE subject = '英语');

+--+----+---+-----+

|id|name|age|score|

+--+----+---+-----+

|1 |张三 |14 |78 |

|2 |李四 |14 |57 |

+--+----+---+-----+

-- Both scores are above the English average of 54, so the result is correct

- Use a CASE expression: when subject is 英语 (English), abc_subject shows A英语; when subject is 语文 (Chinese), abc_subject shows B语文; when subject is 数学 (math), abc_subject shows C数学 (result columns: student name, subject label abc_subject).

SELECT name,

CASE subject

WHEN '英语' THEN 'A英语'

WHEN '语文' THEN 'B语文'

WHEN '数学' THEN 'C数学'

ELSE '' END

AS abc_subject

FROM exam;

+----+-----------+

|name|abc_subject|

+----+-----------+

|张三 |A英语 |

|张三 |B语文 |

|张三 |C数学 |

|李四 |B语文 |

|李四 |C数学 |

|李四 |A英语 |

|王五 |B语文 |

|王五 |C数学 |

|赵六 |B语文 |

|赵六 |C数学 |

|赵六 |A英语 |

+----+-----------+

- Create a view student_view on the student and exam tables; querying it returns each student's score in each exam, ordered by age descending (result columns: student name, student age, subject, score).

CREATE OR REPLACE VIEW student_view AS

SELECT s.name, s.age, e.subject, e.score

FROM student s

INNER JOIN exam e ON s.name = e.name

ORDER BY s.age DESC;

+----+---+-------+-----+

|name|age|subject|score|

+----+---+-------+-----+

|赵六 |16 |英语 |27 |

|赵六 |16 |数学 |48 |

|赵六 |16 |语文 |79 |

|王五 |15 |数学 |77 |

|王五 |15 |语文 |55 |

|张三 |14 |数学 |99 |

|李四 |14 |语文 |89 |

|张三 |14 |语文 |68 |

|张三 |14 |英语 |78 |

|李四 |14 |英语 |57 |

|李四 |14 |数学 |NULL |

+----+---+-------+-----+

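- Use the window function rank() to list each student's score per subject, partitioned by subject and ordered by score (result columns: student name, subject, score, and the rank value ranking).

Usage of the rank() function in PostgreSQL

rank() in the official PostgreSQL documentation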

SELECT s.name,

e.subject,

e.score,

rank() OVER (PARTITION BY e.subject ORDER BY e.score DESC) AS ranking

FROM student s

INNER JOIN exam e ON s.name = e.name;

-- ORDER BY ... DESC sorts NULL first by default, so the missing 数学 score ranks 1; add NULLS LAST to rank it last

+----+-------+-----+-------+

|name|subject|score|ranking|

+----+-------+-----+-------+

|李四 |数学 |NULL |1 |

|张三 |数学 |99 |2 |

|王五 |数学 |77 |3 |

|赵六 |数学 |48 |4 |

|张三 |英语 |78 |1 |

|李四 |英语 |57 |2 |

|赵六 |英语 |27 |3 |

|赵六 |语文 |100 |1 |

|李四 |语文 |89 |2 |

|赵六 |语文 |79 |3 |

|张三 |语文 |68 |4 |

|王五 |语文 |55 |5 |

+----+-------+-----+-------+

- Use sum() to compute a running total for the 数学 (math) subject in a current_score column, accumulating row by row in score order (result columns: subject, score, running total current_score).

SELECT e.subject, e.score, sum(e.score) OVER (PARTITION BY e.subject ORDER BY e.score ASC) AS current_score

FROM exam e

WHERE e.subject = '数学';

+-------+-----+-------------+

|subject|score|current_score|

+-------+-----+-------------+

|数学 |48 |48 |

|数学 |77 |125 |

|数学 |99 |224 |

|数学 |NULL |224 |

+-------+-----+-------------+

- Use ROLLUP to get the per-subject subtotal and the grand total of scores in one query (result columns: subject, total sum_score).

Usage of ROLLUP

SELECT e.subject, sum(e.score) AS sum_score

FROM exam e

GROUP BY ROLLUP (e.subject)

ORDER BY subject;

+-------+---------+

|subject|sum_score|

+-------+---------+

|数学 |224 |

|英语 |162 |

|语文 |391 |

|NULL |777 |

+-------+---------+

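- Create a materialized view exam_view on exam that returns the highest score per subject (result columns: subject, highest score max_score). Add the row "赵六 语文 100" to the exam table, then refresh the materialized view exam_view.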

CREATE MATERIALIZED VIEW IF NOT EXISTS exam_view AS

SELECT e.subject, max(e.score) AS max_score

FROM exam e

GROUP BY e.subject;

SELECT * FROM exam_view;

+-------+---------+

|subject|max_score|

+-------+---------+

|语文 |89 |

|英语 |78 |

|数学 |99 |

+-------+---------+

-- Add the row "赵六 语文 100" to exam; the materialized view still returns the old result

INSERT INTO exam

VALUES ('赵六', '语文', 100);

SELECT * FROM exam_view;

+-------+---------+

|subject|max_score|

+-------+---------+

|语文 |89 |

|英语 |78 |

|数学 |99 |

+-------+---------+

-- Then refresh the materialized view exam_view; the result now reflects the new row

REFRESH MATERIALIZED VIEW exam_view;

SELECT * FROM exam_view;

+-------+---------+

|subject|max_score|

+-------+---------+

|语文 |100 |

|英语 |78 |

|数学 |99 |

+-------+---------+

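- Create a range-partitioned table keyed on id: parent table postgres(id int, name varchar), partition a covering 0 to 100, partition b covering 100 to 500, partition c covering 500 to 1000 (upper bounds are exclusive). Insert three rows, "1 a", "200 b" and "999 c", then query the parent table and each partition.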

CREATE TABLE postgres

(

id int,

name varchar

) PARTITION BY RANGE (id);

CREATE TABLE a PARTITION OF postgres FOR VALUES FROM (0) TO (100);

CREATE TABLE b PARTITION OF postgres FOR VALUES FROM (100) TO (500);

CREATE TABLE c PARTITION OF postgres FOR VALUES FROM (500) TO (1000);

app=# \d+ postgres

Partitioned table "public.postgres"

Column | Type | Collation | Nullable | Default | Storage | Stats target | Description

--------+-------------------+-----------+----------+---------+----------+--------------+-------------

id | integer | | | | plain | |

name | character varying | | | | extended | |

Partition key: RANGE (id)

Partitions: a FOR VALUES FROM (0) TO (100),

b FOR VALUES FROM (100) TO (500),

c FOR VALUES FROM (500) TO (1000)

INSERT INTO postgres VALUES (1, 'a');

INSERT INTO postgres VALUES (200, 'b');

INSERT INTO postgres VALUES (999, 'c');

app=# select * from postgres;

id | name

-----+------

1 | a

200 | b

999 | c

(3 rows)

app=# select * from a;

id | name

----+------

1 | a

(1 row)

app=# select * from b;

id | name

-----+------

200 | b

(1 row)

app=# select * from c;

id | name

-----+------

999 | c

(1 row)