System: CentOS 7.4

I: Installing OpenVINO

Reference: https://docs.openvinotoolkit.org/latest/_docs_install_guides_installing_openvino_linux.html

II: Converting a TensorFlow model with the OpenVINO Model Optimizer (see the SSD section of the reference below)

https://www.cnblogs.com/fourmi/p/10888513.html

Working directory: /opt/intel/openvino/deployment_tools/model_optimizer

Command:

python3.6 mo_tf.py --input_model=/home/gsj/object-detection/test_models/ssd_inception_v2_coco_2018_01_28/frozen_inference_graph.pb --tensorflow_use_custom_operations_config /opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/extensions/front/tf/ssd_v2_support.json --tensorflow_object_detection_api_pipeline_config /home/gsj/object-detection/test_models/ssd_inception_v2_coco_2018_01_28/pipeline.config --reverse_input_channels --batch 32

III: Path of the rewritten sample files:

/opt/intel/openvino/inference_engine/samples/self_object_detection

Files included:

main.cpp self_object_detection_engine_head.h

CMakeLists.txt README.md self_object_detection.h

1. main.cpp

    // Copyright (C) 2018-2019 Intel Corporation
    // SPDX-License-Identifier: Apache-2.0
    //
    /*******************************************************************
     * Copyright: 2019-2030, CC
     * File Name: Main.cpp
     * Description: main function includes ObjectDetection,
     *              Initialize_Check, readInputimagesNames,
     *              load_inference_engine, readIRfiles,
     *              prepare_input_blobs, load_and_create_request,
     *              prepare_input, process
     * Author: Gao Shengjun
     * Date: 2019-07-19
     *******************************************************************/
    #include
    #include
    #include <string>
    #include
    #include
    #include
    #include

2. self_object_detection_engine_head.h

    /*******************************************************************
     * Copyright: 2019-2030, CC
     * File Name: self_object_detection_engine_head.h
     * Description: main function includes ObjectDetection,
     *              Initialize_Check, readInputimagesNames,
     *              load_inference_engine, readIRfiles,
     *              prepare_input_blobs, load_and_create_request,
     *              prepare_input, process
     * Author: Gao Shengjun
     * Date: 2019-07-19
     *******************************************************************/
    #ifndef SELF_OBJECT_DETECTION_ENGINE_HEAD_H
    #define SELF_OBJECT_DETECTION_ENGINE_HEAD_H

    #include "self_object_detection.h"
    #include
    #include
    #include <string>
    #include
    #include
    #include
    #include

3. self_object_detection.h

    // Copyright (C) 2018-2019 Intel Corporation
    // SPDX-License-Identifier: Apache-2.0
    //

    #pragma once

    #include <string>
    #include
    #include
    #include

    /* thickness of a line (in pixels) to be used for bounding boxes */
    #define BBOX_THICKNESS 2

    /// @brief message for help argument
    static const char help_message[] = "Print a usage message.";

    /// @brief message for images argument
    static const char image_message[] = "Required. Path to an .bmp image.";

    /// @brief message for model argument
    static const char model_message[] = "Required. Path to an .xml file with a trained model.";

    /// @brief message for plugin argument
    static const char plugin_message[] = "Plugin name. For example MKLDNNPlugin. If this parameter is pointed, " \
    "the sample will look for this plugin only";

    /// @brief message for assigning cnn calculation to device
    static const char target_device_message[] = "Optional. Specify the target device to infer on (the list of available devices is shown below). " \
    "Default value is CPU. Use \"-d HETERO:\" format to specify HETERO plugin. " \
    "Sample will look for a suitable plugin for device specified";

    /// @brief message for clDNN custom kernels desc
    static const char custom_cldnn_message[] = "Required for GPU custom kernels. "\
    "Absolute path to the .xml file with the kernels descriptions.";

    /// @brief message for user library argument
    static const char custom_cpu_library_message[] = "Required for CPU custom layers. " \
    "Absolute path to a shared library with the kernels implementations.";

    /// @brief message for plugin messages
    static const char plugin_err_message[] = "Optional. Enables messages from a plugin";

    /// @brief message for config argument
    static constexpr char config_message[] = "Path to the configuration file. Default value: \"config\".";

    /// \brief Define flag for showing help message
    DEFINE_bool(h, false, help_message);

    /// \brief Define parameter for set image file
    /// It is a required parameter
    DEFINE_string(i, "", image_message);

    /// \brief Define parameter for set model file
    /// It is a required parameter
    DEFINE_string(m, "", model_message);

    /// \brief device the target device to infer on
    DEFINE_string(d, "CPU", target_device_message);

    /// @brief Define parameter for clDNN custom kernels path
    /// Default is ./lib
    DEFINE_string(c, "", custom_cldnn_message);

    /// @brief Absolute path to CPU library with user layers
    /// It is a optional parameter
    DEFINE_string(l, "", custom_cpu_library_message);

    /// @brief Enable plugin messages
    DEFINE_bool(p_msg, false, plugin_err_message);

    /// @brief Define path to plugin config
    DEFINE_string(config, "", config_message);

    /**
     * \brief This function show a help message
     */
    static void showUsage() {
        std::cout << std::endl;
        std::cout << "object_detection_sample_ssd [OPTION]" << std::endl;
        std::cout << "Options:" << std::endl;
        std::cout << std::endl;
        std::cout << " -h " << help_message << std::endl;
        std::cout << " -i \"\" " << image_message << std::endl;
        std::cout << " -m \"\" " << model_message << std::endl;
        std::cout << " -l \"\" " << custom_cpu_library_message << std::endl;
        std::cout << " Or" << std::endl;
        std::cout << " -c \"\" " << custom_cldnn_message << std::endl;
        std::cout << " -d \"\" " << target_device_message << std::endl;
        std::cout << " -p_msg " << plugin_err_message << std::endl;
    }
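The help strings in this header mark `-i` and `-m` as required, while their flag defaults are empty strings. A minimal sketch of how a caller might reject a missing required option after flag parsing is shown here. The helper name `checkRequiredArgs` and its error messages are hypothetical, not taken from the sample, and it takes plain strings so that it compiles without gflags.

```cpp
#include <stdexcept>
#include <string>

// Hypothetical helper (not part of the sample sources shown in this post):
// validates the two options that the help text marks as "Required".
// imagePath would correspond to the value of -i, modelPath to the value of -m.
void checkRequiredArgs(const std::string& imagePath, const std::string& modelPath) {
    if (imagePath.empty()) {
        throw std::logic_error("Parameter -i is not set");
    }
    if (modelPath.empty()) {
        throw std::logic_error("Parameter -m is not set");
    }
}
```

With gflags, the values passed in would presumably be `FLAGS_i` and `FLAGS_m`; catching the exception in `main` and calling `showUsage()` is one way to report the problem.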

IV: Build

Working directory:

/opt/intel/openvino/inference_engine/samples

sh ./build_samples.sh

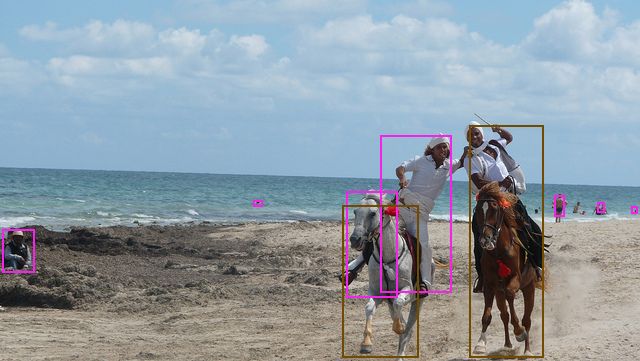

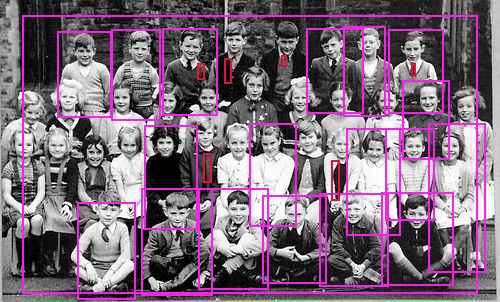

V: Test

Working directory:

/root/inference_engine_samples_build/intel64/Release

Command:

./self_object_detection -m /opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/./frozen_inference_graph.xml -d CPU -i /home/gsj/dataset/coco_val/val8

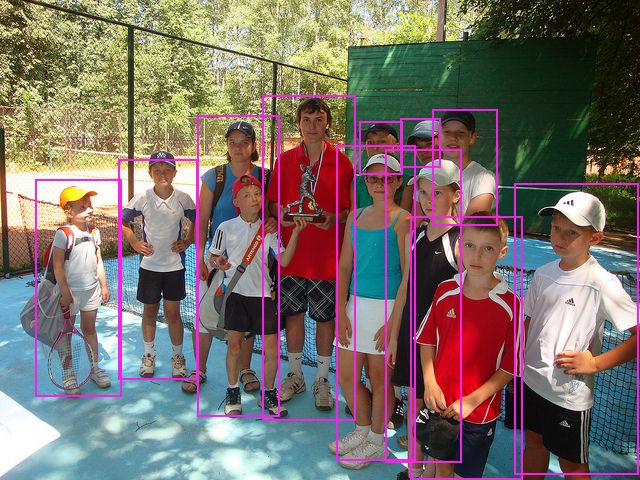

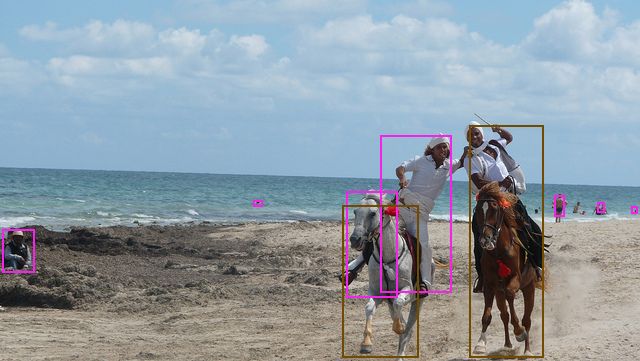

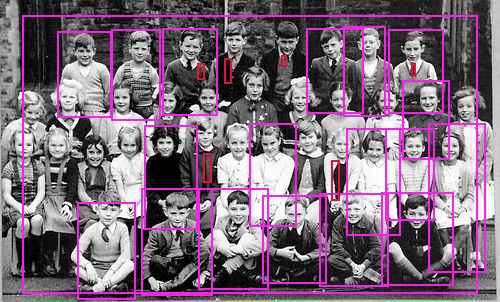

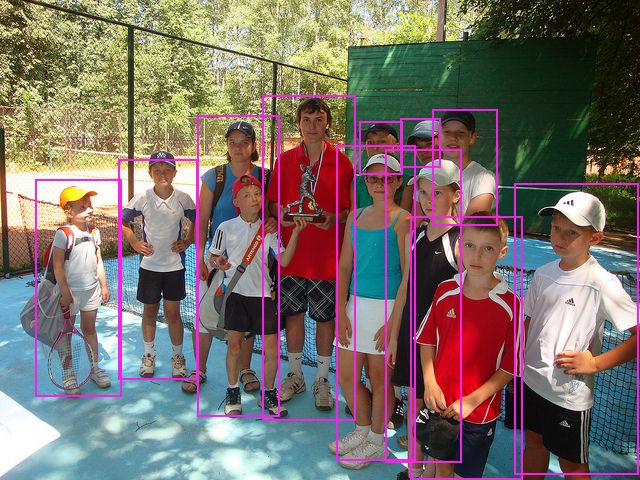

VI: Result

[ INFO ] InferenceEngine:

API version ............ 2.0

Build .................. custom_releases/2019/R2_3044732e25bc7dfbd11a54be72e34d512862b2b3

Description ....... API

Parsing input parameters

[ INFO ] Files were added: 8

[ INFO ] /home/gsj/dataset/coco_val/val8/000000000785.jpg

[ INFO ] /home/gsj/dataset/coco_val/val8/000000000139.jpg

[ INFO ] /home/gsj/dataset/coco_val/val8/000000000724.jpg

[ INFO ] /home/gsj/dataset/coco_val/val8/000000000285.jpg

[ INFO ] /home/gsj/dataset/coco_val/val8/000000000802.jpg

[ INFO ] /home/gsj/dataset/coco_val/val8/000000000632.jpg

[ INFO ] /home/gsj/dataset/coco_val/val8/000000000872.jpg

[ INFO ] /home/gsj/dataset/coco_val/val8/000000000776.jpg

[ INFO ] Loading Inference Engine

[ INFO ] Device info:

CPU

MKLDNNPlugin version ......... 2.0

Build ........... 26451

[ INFO ] Loading network files:

/opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/./frozen_inference_graph.xml

/opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/./frozen_inference_graph.bin

[ INFO ] Loading network files:

/opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/./frozen_inference_graph.xml

/opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/./frozen_inference_graph.bin

[ INFO ] Preparing input blobs

[ INFO ] Batch size is 32

[ INFO ] Preparing output blobs

[ INFO ] Loading model to the device

[ INFO ] Create infer request

[ WARNING ] Image is resized from (640, 425) to (300, 300)

[ WARNING ] Image is resized from (640, 426) to (300, 300)

[ WARNING ] Image is resized from (375, 500) to (300, 300)

[ WARNING ] Image is resized from (586, 640) to (300, 300)

[ WARNING ] Image is resized from (424, 640) to (300, 300)

[ WARNING ] Image is resized from (640, 483) to (300, 300)

[ WARNING ] Image is resized from (621, 640) to (300, 300)

[ WARNING ] Image is resized from (428, 640) to (300, 300)

[ INFO ] Batch Size is 32

[ WARNING ] Number of images 8dosen't match batch size 32

[ WARNING ] Number of images to be processed is 8

[ INFO ] Start inference

[ INFO ] Processing output blobs

640--425 0.452099

[0,1] element, prob = 0.851202 (289,42)-(485,385) batch id : 0 WILL BE PRINTED!

[1,35] element, prob = 0.495117 (205,360)-(600,397) batch id : 0 WILL BE PRINTED!

[2,1] element, prob = 0.376695 (380,177)-(398,208) batch id : 1 WILL BE PRINTED!

[3,1] element, prob = 0.337178 (398,159)-(459,292) batch id : 1 WILL BE PRINTED!

[4,62] element, prob = 0.668834 (365,214)-(428,310) batch id : 1 WILL BE PRINTED!

[5,62] element, prob = 0.558071 (294,220)-(365,321) batch id : 1 WILL BE PRINTED!

[6,62] element, prob = 0.432652 (388,205)-(440,307) batch id : 1 WILL BE PRINTED!

[7,62] element, prob = 0.313619 (218,228)-(300,319) batch id : 1 WILL BE PRINTED!

[8,64] element, prob = 0.488229 (217,178)-(269,217) batch id : 1 WILL BE PRINTED!

[9,72] element, prob = 0.885867 (9,165)-(149,263) batch id : 1 WILL BE PRINTED!

[10,86] element, prob = 0.305516 (239,196)-(255,217) batch id : 1 WILL BE PRINTED!

[11,3] element, prob = 0.332538 (117,284)-(146,309) batch id : 2 WILL BE PRINTED!

[12,13] element, prob = 0.992781 (122,73)-(254,223) batch id : 2 WILL BE PRINTED!

[13,23] element, prob = 0.988277 (4,75)-(580,632) batch id : 3 WILL BE PRINTED!

[14,79] element, prob = 0.981469 (29,292)-(161,516) batch id : 4 WILL BE PRINTED!

[15,82] element, prob = 0.94848 (244,189)-(412,525) batch id : 4 WILL BE PRINTED!

[16,62] element, prob = 0.428106 (255,233)-(348,315) batch id : 5 WILL BE PRINTED!

[17,64] element, prob = 0.978832 (336,217)-(429,350) batch id : 5 WILL BE PRINTED!

[18,64] element, prob = 0.333557 (192,148)-(235,234) batch id : 5 WILL BE PRINTED!

[19,65] element, prob = 0.985633 (-5,270)-(404,477) batch id : 5 WILL BE PRINTED!

[20,84] element, prob = 0.882272 (461,246)-(472,288) batch id : 5 WILL BE PRINTED!

[21,84] element, prob = 0.874527 (494,192)-(503,223) batch id : 5 WILL BE PRINTED!

[22,84] element, prob = 0.850498 (482,247)-(500,285) batch id : 5 WILL BE PRINTED!

[23,84] element, prob = 0.844409 (461,296)-(469,335) batch id : 5 WILL BE PRINTED!

[24,84] element, prob = 0.787552 (414,24)-(566,364) batch id : 5 WILL BE PRINTED!

[25,84] element, prob = 0.748578 (524,189)-(533,224) batch id : 5 WILL BE PRINTED!

[26,84] element, prob = 0.735457 (524,98)-(535,133) batch id : 5 WILL BE PRINTED!

[27,84] element, prob = 0.712015 (528,49)-(535,84) batch id : 5 WILL BE PRINTED!

[28,84] element, prob = 0.689215 (496,51)-(504,82) batch id : 5 WILL BE PRINTED!

[29,84] element, prob = 0.620327 (456,192)-(467,224) batch id : 5 WILL BE PRINTED!

[30,84] element, prob = 0.614535 (481,154)-(514,173) batch id : 5 WILL BE PRINTED!

[31,84] element, prob = 0.609089 (506,151)-(537,172) batch id : 5 WILL BE PRINTED!

[32,84] element, prob = 0.604894 (456,148)-(467,181) batch id : 5 WILL BE PRINTED!

[33,84] element, prob = 0.554959 (485,102)-(505,125) batch id : 5 WILL BE PRINTED!

[34,84] element, prob = 0.549844 (508,244)-(532,282) batch id : 5 WILL BE PRINTED!

[35,84] element, prob = 0.404613 (437,143)-(443,180) batch id : 5 WILL BE PRINTED!

[36,84] element, prob = 0.366167 (435,245)-(446,287) batch id : 5 WILL BE PRINTED!

[37,84] element, prob = 0.320608 (438,191)-(446,226) batch id : 5 WILL BE PRINTED!

[38,1] element, prob = 0.996094 (197,115)-(418,568) batch id : 6 WILL BE PRINTED!

[39,1] element, prob = 0.9818 (266,99)-(437,542) batch id : 6 WILL BE PRINTED!

[40,1] element, prob = 0.517957 (152,117)-(363,610) batch id : 6 WILL BE PRINTED!

[41,40] element, prob = 0.302339 (154,478)-(192,542) batch id : 6 WILL BE PRINTED!

[42,88] element, prob = 0.98227 (8,24)-(372,533) batch id : 7 WILL BE PRINTED!

[43,88] element, prob = 0.924668 (0,268)-(323,640) batch id : 7 WILL BE PRINTED!

[ INFO ] Image out_0.bmp created!

[ INFO ] Image out_1.bmp created!

[ INFO ] Image out_2.bmp created!

[ INFO ] Image out_3.bmp created!

[ INFO ] Image out_4.bmp created!

[ INFO ] Image out_5.bmp created!

[ INFO ] Image out_6.bmp created!

[ INFO ] Image out_7.bmp created!

[ INFO ] Execution successful

[ INFO ] This sample is an API example, for any performance measurements please use the dedicated benchmark_app tool
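Each detection line in the log lists a detection index, a label id, a probability, a pixel box, and a batch id. An SSD `DetectionOutput` blob stores each detection as seven floats, `[image_id, label, confidence, xmin, ymin, xmax, ymax]`, with box coordinates normalized to [0, 1] and a negative `image_id` marking the end of the valid entries. The sketch below decodes such a buffer into pixel boxes without depending on the Inference Engine. The type and function names are illustrative, and the threshold is a caller-supplied assumption; the post does not show the value the sample uses.

```cpp
#include <cstddef>
#include <vector>

// Illustrative types; these names are not taken from the sample sources.
struct ImageSize {
    int width;
    int height;
};

struct Detection {
    int imageId;       // index of the image inside the batch ("batch id" in the log)
    int label;         // class id reported by the network
    float confidence;  // "prob" in the log
    int xmin, ymin, xmax, ymax;  // box in pixels of the original image
};

// Decodes a flat buffer of 7-float records into pixel-space detections.
// Boxes are scaled by the original image size, not the 300x300 network input.
// Coordinates are not clamped, so slightly negative values can occur.
std::vector<Detection> decodeDetections(const std::vector<float>& raw,
                                        const std::vector<ImageSize>& sizes,
                                        float threshold) {
    const std::size_t kFields = 7;
    std::vector<Detection> result;
    for (std::size_t i = 0; i + kFields <= raw.size(); i += kFields) {
        const int imageId = static_cast<int>(raw[i]);
        if (imageId < 0) {
            break;  // end-of-detections marker
        }
        if (static_cast<std::size_t>(imageId) >= sizes.size()) {
            continue;  // batch slot without a real image (batch larger than input set)
        }
        const float confidence = raw[i + 2];
        if (confidence <= threshold) {
            continue;  // too weak to report
        }
        const ImageSize& size = sizes[static_cast<std::size_t>(imageId)];
        result.push_back({imageId,
                          static_cast<int>(raw[i + 1]),
                          confidence,
                          static_cast<int>(raw[i + 3] * size.width),
                          static_cast<int>(raw[i + 4] * size.height),
                          static_cast<int>(raw[i + 5] * size.width),
                          static_cast<int>(raw[i + 6] * size.height)});
    }
    return result;
}
```

Skipping records whose `image_id` has no matching input image is one way to handle the situation in the log, where the IR was generated with `--batch 32` but only 8 images were supplied.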