Hive SQL lineage parsing

- Druid can obtain all the columns directly

http://t.csdn.cn/mO4TX - lineage via the LineageLogger and Execution Hooks mechanism provided by Hive

https://blog.csdn.net/qq_44831907/article/details/123033137 - Apache Calcite

- gudusoft parsing solution (commercial)

https://blog.csdn.net/qq_31557939/article/details/126277212

6. Open-source projects on GitHub:

https://github.com/Shkin1/hathor

https://github.com/sqlparser/sqlflow_public

===========================

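I. Common problems in a data warehouse:

1. Two data reports are compared and the results differ widely. Someone has to check the dimensions behind each metric by hand: trace where the metric comes from and which processing conditions were applied, starting from the beginning. Only then can the cause be found.

2. A column in a base table has to change for some reason. Its impact on the warehouse must be assessed before a plan can be made, which is time-consuming and laborious.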

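II. Analysis:

Data travels a long way before it reaches business users, passing through many processing steps and components, so tracing it back is hard. Yet tracing metadata back is important for effective decision-making, strategy formulation, and discrepancy analysis. Both problems above are data lineage problems. The first is called data tracing. The second is called impact analysis, which is the reverse of tracing.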
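A small sketch makes the relationship between the two analyses concrete. Suppose table-level lineage is stored as edges from a source table to a target table. Data tracing is then a search along the upstream edges, and impact analysis is the same search along the downstream edges. The `LineageGraph` class and the table names are hypothetical illustrations and do not come from any tool mentioned here.

```java
import java.util.*;

// Hypothetical table-level lineage graph: each edge goes from a source table to a target table.
class LineageGraph {
    private final Map<String, Set<String>> downstream = new HashMap<>();
    private final Map<String, Set<String>> upstream = new HashMap<>();

    void addEdge(String source, String target) {
        downstream.computeIfAbsent(source, k -> new TreeSet<>()).add(target);
        upstream.computeIfAbsent(target, k -> new TreeSet<>()).add(source);
    }

    // Data tracing: every table the given table reads from, directly or transitively.
    Set<String> trace(String table) {
        return reachable(table, upstream);
    }

    // Impact analysis: every table that depends on the given table.
    // It is the same breadth-first search run over the reversed edges.
    Set<String> impact(String table) {
        return reachable(table, downstream);
    }

    private static Set<String> reachable(String start, Map<String, Set<String>> edges) {
        Set<String> seen = new TreeSet<>();
        Deque<String> queue = new ArrayDeque<>();
        queue.add(start);
        while (!queue.isEmpty()) {
            String current = queue.poll();
            for (String next : edges.getOrDefault(current, Collections.emptySet())) {
                if (seen.add(next)) {   // add() returns false for tables already visited
                    queue.add(next);
                }
            }
        }
        return seen;
    }

    public static void main(String[] args) {
        LineageGraph g = new LineageGraph();
        g.addEdge("ods.order_info", "dwd.trade_order_detail");
        g.addEdge("ods.user_info", "dim.user_zip");
        g.addEdge("dwd.trade_order_detail", "dws.trade_user_order_1d");
        g.addEdge("dim.user_zip", "dws.trade_user_order_1d");
        g.addEdge("dws.trade_user_order_1d", "ads.order_stats");
        // [dim.user_zip, dwd.trade_order_detail, dws.trade_user_order_1d, ods.order_info, ods.user_info]
        System.out.println(g.trace("ads.order_stats"));
        // [ads.order_stats, dim.user_zip, dws.trade_user_order_1d]
        System.out.println(g.impact("ods.user_info"));
    }
}
```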

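III. Solution:

I implemented a data lineage analysis tool for a Hive data warehouse. It maps out the relationships between tables and columns, which addresses both problems above.

Main goal of the tool: parse the HQL statements in the computation scripts, extract the input and output tables, the input and output columns, and the corresponding processing conditions, then analyze and present them.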

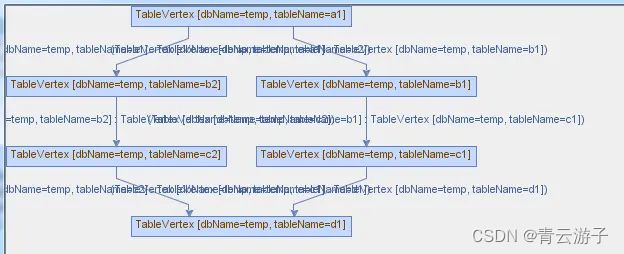

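Approach: traverse the AST depth-first.

- When a token that represents an operation is encountered, determine the current operation.
- When a clause is encountered, push the current processing state onto a stack and process the clause. Once the clause is done, pop the stack.
- While processing a clause, whenever a subquery appears, save its information and determine its relationship to the parent query. The result is eventually a tree.
- When columns or conditions appear, record the current column and condition information into a Block, recursing as needed.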
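The traversal can be sketched in Java as follows. This is a simplified illustration, not the author's tool, and it rests on a few assumptions:

- `AstNode` is a hypothetical stand-in for Hive's `ASTNode`.
- A table name is kept as a single `db.table` string in the `TOK_TABNAME` node's text.
- A subquery's alias is kept in the `TOK_SUBQUERY` node's text. In a real Hive AST, both the table name parts and the alias are child nodes.

A clause token is pushed on entry and popped on exit, so the innermost enclosing clause decides whether a table is read or written. A second stack records which subquery encloses which.

```java
import java.util.*;

// Hypothetical, simplified stand-in for Hive's ASTNode: token type, text, children.
class AstNode {
    final String type;
    final String text;
    final List<AstNode> children;

    AstNode(String type, String text, AstNode... children) {
        this.type = type;
        this.text = text;
        this.children = Arrays.asList(children);
    }
}

class TableCollector {
    final Set<String> inputs = new LinkedHashSet<>();
    final Set<String> outputs = new LinkedHashSet<>();
    final Map<String, String> subqueryParent = new LinkedHashMap<>(); // subquery alias -> enclosing alias
    private final Deque<String> clauses = new ArrayDeque<>();         // enclosing clause tokens, innermost on top
    private final Deque<String> subqueries = new ArrayDeque<>();      // enclosing subquery aliases, innermost on top

    void walk(AstNode node) {
        boolean clause = node.type.equals("TOK_FROM") || node.type.equals("TOK_DESTINATION");
        boolean subquery = node.type.equals("TOK_SUBQUERY");
        if (clause) {
            clauses.push(node.type);                     // entering a clause: save the context
        }
        if (subquery) {
            subqueryParent.put(node.text, subqueries.isEmpty() ? "<root>" : subqueries.peek());
            subqueries.push(node.text);
        }
        if (node.type.equals("TOK_TABNAME") && !clauses.isEmpty()) {
            // the innermost clause decides whether the table is read or written
            if (clauses.peek().equals("TOK_FROM")) {
                inputs.add(node.text);
            } else {
                outputs.add(node.text);
            }
        }
        for (AstNode child : node.children) {
            walk(child);                                 // depth-first
        }
        if (subquery) {
            subqueries.pop();
        }
        if (clause) {
            clauses.pop();                               // clause done: restore the outer context
        }
    }

    // insert overwrite table dw.t select ... from (select ... from (select ... from ods.a) s2) s join ods.b
    static AstNode sampleQuery() {
        AstNode s2 = new AstNode("TOK_SUBQUERY", "s2",
                new AstNode("TOK_QUERY", "",
                        new AstNode("TOK_FROM", "",
                                new AstNode("TOK_TABREF", "", new AstNode("TOK_TABNAME", "ods.a")))));
        AstNode s = new AstNode("TOK_SUBQUERY", "s",
                new AstNode("TOK_QUERY", "", new AstNode("TOK_FROM", "", s2)));
        return new AstNode("TOK_QUERY", "",
                new AstNode("TOK_FROM", "",
                        new AstNode("TOK_JOIN", "", s,
                                new AstNode("TOK_TABREF", "", new AstNode("TOK_TABNAME", "ods.b")))),
                new AstNode("TOK_INSERT", "",
                        new AstNode("TOK_DESTINATION", "",
                                new AstNode("TOK_TAB", "", new AstNode("TOK_TABNAME", "dw.t")))));
    }

    public static void main(String[] args) {
        TableCollector c = new TableCollector();
        c.walk(sampleQuery());
        // [ods.a, ods.b] -> [dw.t], subqueries {s=<root>, s2=s}
        System.out.println(c.inputs + " -> " + c.outputs + ", subqueries " + c.subqueryParent);
    }
}
```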

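Key points:

1. A TOK_TAB or TOK_TABREF node identifies the table currently being operated on.

2. Use the stack to determine whether the current clause is a join, and extract the join condition.

3. Define a data structure Block. On a where, select, or join clause, collect the columns and conditions beneath it into a Block.

4. Define a data structure ColLine. On TOK_SUBQUERY, save the current subquery's information for the parent query to use.

5. With ColLine, when a TOK_UNION ends, merge and truncate the current column information.

6. For select * or columns that are not qualified explicitly, query the metadata to support the analysis.

7. Validate the parsing results.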
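Key points 4 and 5 can be illustrated with a sketch of what a `ColLine`-like structure might hold. The fields `toName` and `fromCols` and the helper class `ColLineOps` are assumptions for illustration; the original tool's definition is not shown.

- A subquery's output columns are cached under its alias, so the parent query can resolve `alias.col` back to its source columns.
- The two branches of a UNION are merged position by position.

```java
import java.util.*;

// Hypothetical ColLine: an output column name plus the source columns ("db.table.col") it derives from.
class ColLine {
    final String toName;
    final Set<String> fromCols;

    ColLine(String toName, String... fromCols) {
        this.toName = toName;
        this.fromCols = new TreeSet<>(Arrays.asList(fromCols));
    }

    @Override
    public String toString() {
        return toName + "<-" + fromCols;
    }
}

class ColLineOps {
    // UNION: columns are matched by position, the first branch names the result,
    // and each result column inherits the sources of every branch.
    static List<ColLine> mergeUnion(List<ColLine> first, List<ColLine> second) {
        if (first.size() != second.size()) {
            throw new IllegalArgumentException("UNION branches have different column counts");
        }
        List<ColLine> merged = new ArrayList<>();
        for (int i = 0; i < first.size(); i++) {
            ColLine col = new ColLine(first.get(i).toName);
            col.fromCols.addAll(first.get(i).fromCols);
            col.fromCols.addAll(second.get(i).fromCols);
            merged.add(col);
        }
        return merged;
    }

    // Subquery: the parent query looks up alias.col among the cached ColLines of that alias.
    static Set<String> resolve(Map<String, List<ColLine>> subqueries, String alias, String col) {
        for (ColLine line : subqueries.getOrDefault(alias, Collections.emptyList())) {
            if (line.toName.equals(col)) {
                return line.fromCols;
            }
        }
        return Collections.emptySet();
    }

    public static void main(String[] args) {
        List<ColLine> app = Arrays.asList(
                new ColLine("uid", "ods.app_log.user_id"),
                new ColLine("amt", "ods.app_log.amount"));
        List<ColLine> web = Arrays.asList(
                new ColLine("id", "ods.web_log.uid"),
                new ColLine("total", "ods.web_log.price", "ods.web_log.qty"));
        Map<String, List<ColLine>> cache = new HashMap<>();
        cache.put("t", mergeUnion(app, web));           // ... from (select ... union all select ...) t
        // [ods.app_log.amount, ods.web_log.price, ods.web_log.qty]
        System.out.println(resolve(cache, "t", "amt"));
    }
}
```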

————————————————

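Copyright notice: this is an original article by CSDN blogger "thomas0yang", licensed under CC 4.0 BY-SA. When reposting, include the original link and this notice.

Original link: https://blog.csdn.net/thomas0yang/article/details/49449723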

==================================

HiveSqlBloodFigure


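Project introduction

Data warehouse construction often needs data lineage tracking. This project statically analyzes a set of HQL statements and derives their lineage graph (table lineage + column lineage).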

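Changes in this release

Removed the Maven dependencies on hive-exec and hadoop-common, making the project more lightweight.

Refactored the code and optimized parsing. Fixed a bug where table lineage could not be obtained when there was no column lineage.

Standardized the interface inputs and outputs. Lineage graphs are custom entity classes, which makes JSON serialization easy.

Added an interface layer for Spring injection. Static invocation is also supported.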
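The table-lineage bug fixed above (no table lineage when there is no column lineage) suggests deriving table edges from the table references themselves, not only from column edges. A statement such as `select count(*)` may yield no column edge at all and still read a table. The following is a minimal sketch built on a hypothetical per-statement result class, `StatementLineage`. It is not HiveSqlBloodFigure's actual code.

```java
import java.util.*;

// Hypothetical per-statement parse result; names are illustrative only.
class StatementLineage {
    final Set<String> inputTables = new TreeSet<>();              // tables read (db.table)
    String outputTable;                                           // table written (db.table), null for a plain SELECT
    final Map<String, Set<String>> columnEdges = new TreeMap<>(); // target column -> source columns (db.table.col)

    // Table edges come from the table references themselves, so they exist even when
    // no column lineage was found; column edges only add tables that appear there.
    Set<String> tableEdges() {
        Set<String> edges = new TreeSet<>();
        if (outputTable == null) {
            return edges;                                         // nothing is written
        }
        for (String in : inputTables) {
            edges.add(in + " -> " + outputTable);
        }
        for (Map.Entry<String, Set<String>> e : columnEdges.entrySet()) {
            for (String source : e.getValue()) {
                edges.add(tableOf(source) + " -> " + tableOf(e.getKey()));
            }
        }
        return edges;
    }

    // "db.table.col" -> "db.table"
    static String tableOf(String column) {
        return column.substring(0, column.lastIndexOf('.'));
    }

    public static void main(String[] args) {
        // e.g. INSERT OVERWRITE TABLE ads.order_cnt SELECT count(*) FROM ods.order_info
        StatementLineage s = new StatementLineage();
        s.inputTables.add("ods.order_info");
        s.outputTable = "ads.order_cnt";
        // true [ods.order_info -> ads.order_cnt]
        System.out.println(s.columnEdges.isEmpty() + " " + s.tableEdges());
    }
}
```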

Planned for the next release

Fetch metadata through JDBC to make the lineage graph richer. This also solves the problem of select * in SQL.
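Resolving `select *` with metadata could look roughly like the sketch below. `StarExpander` and its methods are hypothetical. The column lookup is passed in as a function, so it can be backed by a map in tests or by JDBC in practice.

`columnsViaJdbc` uses the standard `java.sql.DatabaseMetaData.getColumns` call. Whether a given Hive JDBC driver expects the database name in the schema argument or the catalog argument is an assumption to verify.

```java
import java.sql.Connection;
import java.sql.DatabaseMetaData;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.*;
import java.util.function.Function;

class StarExpander {
    // Expands "*" and "alias.*" in a select list into alias-qualified column names.
    // aliasToTable should preserve FROM-clause order (e.g. a LinkedHashMap).
    static List<String> expand(List<String> selectItems, Map<String, String> aliasToTable,
                               Function<String, List<String>> columnsOf) {
        List<String> result = new ArrayList<>();
        for (String item : selectItems) {
            if (item.equals("*")) {
                for (Map.Entry<String, String> e : aliasToTable.entrySet()) {
                    addColumns(result, e.getKey(), columns(columnsOf, e.getValue()));
                }
            } else if (item.endsWith(".*")) {
                String alias = item.substring(0, item.length() - 2);
                addColumns(result, alias, columns(columnsOf, aliasToTable.get(alias)));
            } else {
                result.add(item);                        // explicit column: kept as written
            }
        }
        return result;
    }

    private static void addColumns(List<String> out, String alias, List<String> cols) {
        for (String col : cols) {
            out.add(alias + "." + col);
        }
    }

    private static List<String> columns(Function<String, List<String>> columnsOf, String table) {
        List<String> cols = (table == null) ? null : columnsOf.apply(table);
        if (cols == null) {
            throw new IllegalArgumentException("no metadata for table: " + table);
        }
        return cols;
    }

    // One possible JDBC-backed lookup. schema and table are LIKE-style patterns,
    // so an '_' in a name also matches any single character.
    static List<String> columnsViaJdbc(Connection conn, String schema, String table) throws SQLException {
        List<String> cols = new ArrayList<>();
        DatabaseMetaData meta = conn.getMetaData();
        try (ResultSet rs = meta.getColumns(null, schema, table, "%")) {
            while (rs.next()) {
                cols.add(rs.getString("COLUMN_NAME"));   // rows are ordered by ORDINAL_POSITION within a table
            }
        }
        return cols;
    }

    public static void main(String[] args) {
        Map<String, List<String>> metadata = new HashMap<>();   // stands in for the metastore
        metadata.put("gmall.user_info", Arrays.asList("id", "name"));
        metadata.put("gmall.order_info", Arrays.asList("id", "user_id", "amount"));
        Map<String, String> aliases = new LinkedHashMap<>();
        aliases.put("u", "gmall.user_info");
        aliases.put("o", "gmall.order_info");
        // [u.id, u.name, o.amount]
        System.out.println(expand(Arrays.asList("u.*", "o.amount"), aliases, metadata::get));
    }
}
```

The expansion order follows the insertion order of `aliasToTable`. That order matters when the expanded columns are later matched by position, as in a UNION.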

Test cases

See HiveBloodEngineTest and HiveSqlBloodFactoryTest under test.

Interfaces

Interface: HiveBloodEngine; implementation: HiveBloodEngineImpl (for Spring integration).

Utility class: HiveSqlBloodFactory (static invocation).

Usage

Run:

Result:

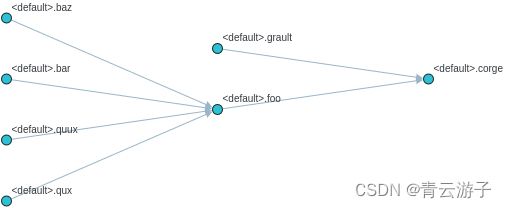

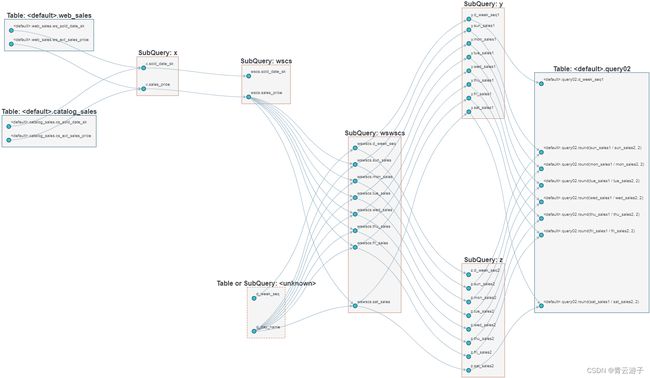

Table lineage:

Column lineage:

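1 Background

As enterprises digitize and their businesses grow, their data assets keep expanding and their data warehouses become increasingly complex. During warehouse construction, teams often find it hard to trace data back to its source, and changes to data models make business analysis difficult. These problems stem mainly from insufficient data lineage analysis. Only by strengthening lineage can an enterprise make full use of its data.

SQL lineage is a core dependency of data warehouse modeling. Sorting out and parsing the SQL statements yields the table-level and attribute-level dependencies between business layers. Visualizing them produces a hierarchical lineage diagram of tables and attributes, which shows how raw fields map onto data models. A good SQL lineage system has several benefits:

- It helps data analysts understand business scenarios.
- It greatly helps with building the warehouse's layers.
- It supports tracing data back to its origin for data quality control.
- It assists in building data pipeline diagrams and in monitoring data changes.

Several lineage parsing tools exist on the market, such as Druid, but they have not been widely adopted in enterprises. They only support parsing MySQL statements, their parsing is not fine-grained enough, and they do not support complex SQL logic. Hive's own lineage parsing has a different limitation: results are available only after the SQL has executed. If the SQL takes a long time, lineage cannot be shown quickly, and there is no way to perform metadata security and permission checks in advance. This limits its use in real enterprise applications.

Based on our own business, this article studies the Hive lineage parsing source code and optimizes it in three ways:

1. We simplify SQL statement pruning and identify and remove tables that are CTE aliases. This reduces the complexity of SQL parsing and improves lineage parsing performance.
2. We provide a metadata service module. It keeps the metadata complete and provides secure table permission checks, maintaining table operation permissions so that operations stay safe.
3. We move postExecuteHook earlier, so that SQL lineage is available before the physical optimization of the SQL. This greatly improves the efficiency of obtaining SQL lineage.

These optimization strategies are described one by one below.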

————————————————

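Copyright notice: this is an original article by CSDN blogger "搜狐技术产品小编2023" (Sohu Tech Products Editor 2023), licensed under CC 4.0 BY-SA. When reposting, include the original link and this notice.

Original link: https://blog.csdn.net/SOHU_TECH/article/details/110605919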

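2 Related technologies

2.1 Introduction to SQL lineage

In data warehouse construction, SQL lineage captures the dependencies between tables and their attributes. It maps out the model tables involved in business processes and comes in four levels: cluster-level, system-level, table-level, and column-level lineage. Lineage points to the upstream sources of the data and traces them back to their origin. Simple SQL statements are enough to show the data relationships between tables.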


Apache Atlas

Apache Calcite (usable)

Trino

ANTLR (usable)

Antlr4 (usable)

Hive ASTNode (usable)

Druid

Hive hook (requires executing the SQL)

https://github.com/webgjc/sql-parser/blob/master/src/main/java/cn/ganjiacheng/hive/HiveSqlFieldLineageParser.java

http://ganjiacheng.cn/article/2020/article_14_%E5%9F%BA%E4%BA%8Eantlr4%E5%AE%9E%E7%8E%B0HQL%E7%9A%84%E8%A7%A3%E6%9E%90-%E8%A1%A8%E8%A1%80%E7%BC%98%E5%92%8C%E5%AD%97%E6%AE%B5%E8%A1%80%E7%BC%98/

https://blog.csdn.net/thomas0yang/article/details/49449723

https://download.csdn.net/download/thomas0yang/9354943

https://download.csdn.net/download/thomas0yang/9369949

http://tech.meituan.com/hive-sql-to-mapreduce.html

http://www.cnblogs.com/drawwindows/p/4595771.html

https://cwiki.apache.org/confluence/display/Hive/LanguageManual


https://download.csdn.net/download/xl_1803/75865628?utm_medium=distribute.pc_relevant_download.none-task-download-2defaultBlogCommendFromBaiduRate-1-75865628-download-9369949.257%5Ev11%5Epc_dl_relevant_income_base1&depth_1-utm_source=distribute.pc_relevant_download.none-task-download-2defaultBlogCommendFromBaiduRate-1-75865628-download-9369949.257%5Ev11%5Epc_dl_relevant_income_base1&spm=1003.2020.3001.6616.1

https://download.csdn.net/download/weixin_42131618/16734276?utm_medium=distribute.pc_relevant_download.none-task-download-2defaultBlogCommendFromBaiduRate-12-16734276-download-9369949.257%5Ev11%5Epc_dl_relevant_income_base1&depth_1-utm_source=distribute.pc_relevant_download.none-task-download-2defaultBlogCommendFromBaiduRate-12-16734276-download-9369949.257%5Ev11%5Epc_dl_relevant_income_base1&spm=1003.2020.3001.6616.13

https://github.com/JunNan-X/HiveSqlBloodFigure

https://github.com/lihuigang/dp_dw_lineage

https://blog.csdn.net/qq_44831907/article/details/123033137

https://github.com/webgjc/sql-parser

http://ganjiacheng.cn/article/2020/article_6_%E5%9F%BA%E4%BA%8Eantlr4%E5%AE%9E%E7%8E%B0HQL%E7%9A%84%E8%A7%A3%E6%9E%90-%E5%85%83%E6%95%B0%E6%8D%AE/

http://ganjiacheng.cn/article/2020/article_14_%E5%9F%BA%E4%BA%8Eantlr4%E5%AE%9E%E7%8E%B0HQL%E7%9A%84%E8%A7%A3%E6%9E%90-%E8%A1%A8%E8%A1%80%E7%BC%98%E5%92%8C%E5%AD%97%E6%AE%B5%E8%A1%80%E7%BC%98/

http://ganjiacheng.cn/article/2020/article_12_%E5%9F%BA%E4%BA%8Eantlr4%E5%AE%9E%E7%8E%B0HQL%E7%9A%84%E8%A7%A3%E6%9E%90-%E6%A0%BC%E5%BC%8F%E5%8C%96/

https://gitee.com/Kingkazuma111/sql-parser-lineage?_from=gitee_search

https://gitee.com/hassan1314/flink-sql-lineage?_from=gitee_search

https://blog.csdn.net/SOHU_TECH/article/details/110605919

https://github.com/reata/sqllineage

https://reata.github.io/blog/sqllineage-a-sql-lineage-analysis-tool/

Cross-partition scan check
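The rule applied by the assessor below can be distilled into a small, self-contained sketch. `PartitionScanRule` and its `Condition` type are hypothetical simplifications. The rule is:

- Every partition column of a referenced table must be filtered.
- It must be filtered only by `=`. Any other operator counts as a multi-partition scan.

The sketch also implements rule 4 from the dispatcher's comments (several `=` values for the same partition column), which `checkProblem` does not check. Like the original, it ignores whether conditions are combined with AND or OR.

```java
import java.util.*;

class PartitionScanRule {
    // One filter predicate, already resolved to its origin table and column.
    static final class Condition {
        final String table;
        final String column;
        final String operator;
        final String value;   // literal compared against; may be null

        Condition(String table, String column, String operator, String value) {
            this.table = table;
            this.column = column;
            this.operator = operator;
            this.value = value;
        }
    }

    // partitionColumns: table -> its partition columns (from metadata)
    static List<String> check(Map<String, List<String>> partitionColumns, List<Condition> conditions) {
        List<String> problems = new ArrayList<>();
        for (Map.Entry<String, List<String>> entry : partitionColumns.entrySet()) {
            String table = entry.getKey();
            for (String partCol : entry.getValue()) {
                boolean filtered = false;
                boolean nonEquality = false;
                Set<String> equalValues = new HashSet<>();
                for (Condition c : conditions) {
                    if (!c.table.equals(table) || !c.column.equals(partCol)) {
                        continue;
                    }
                    filtered = true;
                    if (c.operator.equals("=")) {
                        equalValues.add(c.value);
                    } else {
                        nonEquality = true;   // >, <, >=, <=, <>, in, not in, like ...
                    }
                }
                if (!filtered) {
                    problems.add(table + "." + partCol + ": not filtered");
                } else if (nonEquality || equalValues.size() > 1) {
                    problems.add(table + "." + partCol + ": scans multiple partitions");
                }
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        Map<String, List<String>> partitions = new LinkedHashMap<>();
        partitions.put("gmall.dwd_order", Collections.singletonList("dt"));
        partitions.put("gmall.dim_user", Collections.singletonList("dt"));
        List<Condition> conditions = Arrays.asList(
                new Condition("gmall.dwd_order", "dt", ">=", "2023-06-01"),
                new Condition("gmall.dwd_order", "user_id", "=", "1"));
        // [gmall.dwd_order.dt: scans multiple partitions, gmall.dim_user.dt: not filtered]
        System.out.println(check(partitions, conditions));
    }
}
```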

```java
package com.atguigu.dga.governance.assess.assessor.calc;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONObject;
import com.atguigu.dga.governance.assess.Assessor;
import com.atguigu.dga.governance.bean.AssessParam;
import com.atguigu.dga.governance.bean.GovernanceAssessDetail;
import com.atguigu.dga.meta.bean.TableMetaInfo;
import com.atguigu.dga.util.SqlParser;
import com.google.common.collect.Sets;
import lombok.*;
import org.apache.hadoop.hive.ql.lib.Dispatcher;
import org.apache.hadoop.hive.ql.lib.Node;
import org.apache.hadoop.hive.ql.parse.ASTNode;
import org.apache.hadoop.hive.ql.parse.BaseSemanticAnalyzer;
import org.apache.hadoop.hive.ql.parse.HiveParser;
import org.apache.hadoop.hive.ql.parse.SemanticException;
import org.springframework.stereotype.Component;

import java.math.BigDecimal;
import java.util.*;

@Component("MULTI_PARTITION")
public class MultiPartitionAssessor extends Assessor {

    @Override
    protected void checkProblem(GovernanceAssessDetail governanceAssessDetail, AssessParam assessParam) throws Exception {
        // ODS tables are loaded without SQL processing; tables without a task definition have no SQL to check
        if ("ODS".equals(assessParam.getTableMetaInfo().getTableMetaInfoExtra().getDwLevel())
                || assessParam.getTDsTaskDefinition() == null) {
            return;
        }

        // Cross-partition scan: extract the SQL and analyze it
        String sql = assessParam.getTDsTaskDefinition().getSql();
        governanceAssessDetail.setAssessComment(sql);
        Map<String, TableMetaInfo> tableMetaInfoMap = assessParam.getTableMetaInfoMap();

        CheckMultiPartitionScanDispatcher dispatcher = new CheckMultiPartitionScanDispatcher();
        dispatcher.setTableMetaInfoMap(tableMetaInfoMap);
        dispatcher.setDefaultSchemaName(assessParam.getTableMetaInfo().getSchemaName());
        SqlParser.parse(dispatcher, sql);

        // Reorganize the condition list into "table -> filtered columns" maps
        Map<String, Set<String>> tableFilterFieldMap = new HashMap<>();      // columns filtered by any operator
        Map<String, Set<String>> tableRangeFilterFieldMap = new HashMap<>(); // columns filtered by an operator other than "="
        for (CheckMultiPartitionScanDispatcher.WhereCondition whereCondition : dispatcher.getWhereConditionList()) {
            for (CheckMultiPartitionScanDispatcher.OriginTableField originTableField : whereCondition.getTableFieldList()) {
                String originTable = originTableField.getOriginTable();
                tableFilterFieldMap.computeIfAbsent(originTable, k -> new HashSet<>())
                        .add(originTableField.getField());
                if (!"=".equals(whereCondition.operator)) {
                    tableRangeFilterFieldMap.computeIfAbsent(originTable, k -> new HashSet<>())
                            .add(originTableField.getField());
                }
            }
        }

        StringBuilder assessProblem = new StringBuilder();
        // All physical tables referenced by the SQL, keyed as db.table
        Map<String, List<CheckMultiPartitionScanDispatcher.CurTableField>> refTableFieldMap = dispatcher.getRefTableFieldMap();
        for (String refTableName : refTableFieldMap.keySet()) {
            // Partition columns come from the table's metadata
            TableMetaInfo tableMetaInfo = tableMetaInfoMap.get(refTableName);
            Set<String> tableFilterFieldSet = tableFilterFieldMap.get(refTableName);
            Set<String> tableFilterRangeFieldSet = tableRangeFilterFieldMap.get(refTableName);
            List<JSONObject> partitionJsonObjList = JSON.parseArray(tableMetaInfo.getPartitionColNameJson(), JSONObject.class);
            if (partitionJsonObjList == null) { // no partition metadata
                continue;
            }
            // For each partition column check: 1) is it filtered at all, 2) is it filtered by a range
            for (JSONObject partitionJsonObj : partitionJsonObjList) {
                String partitionName = partitionJsonObj.getString("name");
                if (tableFilterFieldSet == null || !tableFilterFieldSet.contains(partitionName)) {
                    assessProblem.append("Referenced table " + refTableName + ": partition column " + partitionName + " is not filtered; ");
                }
                if (tableFilterRangeFieldSet != null && tableFilterRangeFieldSet.contains(partitionName)) {
                    assessProblem.append("Referenced table " + refTableName + ": partition column " + partitionName + " scans multiple partitions; ");
                }
            }
        }

        if (assessProblem.length() > 0) {
            governanceAssessDetail.setAssessScore(BigDecimal.ZERO);
            governanceAssessDetail.setAssessProblem(assessProblem.toString());
            governanceAssessDetail.setAssessComment(JSON.toJSONString(refTableFieldMap.keySet()) + "||" + sql);
        }
    }

    // Node processor: the walker passes every node of the SQL syntax tree to dispatch()
    public class CheckMultiPartitionScanDispatcher implements Dispatcher {
        // Check strategy:
        // 1. collect the comparison conditions
        // 2. check whether the compared column is a partition column
        // 3. the operators >=, <=, <, >, <>, in mean multiple partitions
        // 4. for "=", collect the compared value; several values for the same partition column also mean
        //    multiple partitions (checkProblem above implements rules 1-3 only)

        @Setter
        Map<String, TableMetaInfo> tableMetaInfoMap = new HashMap<>();
        @Getter
        Map<String, List<CurTableField>> subqueryTableFiedlMap = new HashMap<>(); // subquery alias -> output columns, each with its origin columns
        @Getter
        Map<String, List<CurTableField>> insertTableFieldMap = new HashMap<>();   // INSERT target table -> output columns, each with its origin columns
        @Getter
        Map<String, List<CurTableField>> refTableFieldMap = new HashMap<>();      // referenced physical table (db.table) -> its columns
        @Getter
        List<WhereCondition> whereConditionList = new ArrayList<>();
        @Setter
        String defaultSchemaName = null;
        // "in" / "not in" are parsed as function calls (TOK_FUNCTION) and are handled separately
        Set<String> operators = Sets.newHashSet("=", ">", "<", ">=", "<=", "<>", "!=", "like");

        @Override
        public Object dispatch(Node nd, Stack<Node> stack, Object... nodeOutputs) throws SemanticException {
            ASTNode queryNode = (ASTNode) nd;
            // Only query nodes are analyzed
            if (queryNode.getType() == HiveParser.TOK_QUERY) {
                Map<String, List<CurTableField>> curQueryTableFieldMap = new HashMap<>(); // tables visible in this query -> their columns
                Map<String, String> aliasMap = new HashMap<>();                           // alias -> table name
                for (Node childNode : queryNode.getChildren()) {
                    ASTNode childAstNode = (ASTNode) childNode;
                    if (childAstNode.getType() == HiveParser.TOK_FROM) {
                        loadTablesFromNodeRec(childAstNode, curQueryTableFieldMap, aliasMap);
                    } else if (childAstNode.getType() == HiveParser.TOK_INSERT) {
                        for (Node insertChildNode : childAstNode.getChildren()) {
                            ASTNode insertChildAstNode = (ASTNode) insertChildNode;
                            if (insertChildAstNode.getType() == HiveParser.TOK_WHERE) {
                                loadConditionFromNodeRec(insertChildAstNode, whereConditionList, curQueryTableFieldMap, aliasMap);
                            } else if (insertChildAstNode.getType() == HiveParser.TOK_SELECT
                                    || insertChildAstNode.getType() == HiveParser.TOK_SELECTDI) {
                                // Output columns: cached for the parent query if this is a subquery,
                                // otherwise they are the final output columns
                                List<CurTableField> tableFieldOutputList = getTableFieldOutput(insertChildAstNode, curQueryTableFieldMap, aliasMap);
                                // Walk up to the enclosing subquery, if any, to find its alias
                                ASTNode subqueryNode = (ASTNode) queryNode.getAncestor(HiveParser.TOK_SUBQUERY);
                                if (subqueryNode != null) {
                                    cacheSubqueryTableFieldMap(subqueryNode, tableFieldOutputList);
                                }
                                // INSERT OVERWRITE uses TOK_DESTINATION, INSERT INTO uses TOK_INSERT_INTO
                                ASTNode destinationNode = (ASTNode) childAstNode.getFirstChildWithType(HiveParser.TOK_DESTINATION);
                                if (destinationNode == null) {
                                    destinationNode = (ASTNode) childAstNode.getFirstChildWithType(HiveParser.TOK_INSERT_INTO);
                                }
                                if (destinationNode != null && destinationNode.getChild(0).getType() == HiveParser.TOK_TAB) { // final output table of the SQL
                                    cacheInsertTableField((ASTNode) destinationNode.getChild(0), tableFieldOutputList);
                                }
                            }
                        }
                    }
                }
            }
            return null;
        }

        private void loadTableFiledByTableName(String tableName, Map<String, List<CurTableField>> curTableFieldMap, Map<String, String> aliasMap) {
            String tableWithSchema = tableName.contains(".") ? tableName : defaultSchemaName + "." + tableName;
            TableMetaInfo tableMetaInfo = tableMetaInfoMap.get(tableWithSchema);
            if (tableMetaInfo != null) { // a physical table
                List<CurTableField> curFieldsList = new ArrayList<>();
                String originTable = tableMetaInfo.getSchemaName() + "." + tableMetaInfo.getTableName();
                // Regular columns
                for (JSONObject colJsonObject : JSON.parseArray(tableMetaInfo.getColNameJson(), JSONObject.class)) {
                    CurTableField curTableField = new CurTableField();
                    OriginTableField originTableField = new OriginTableField();
                    originTableField.setField(colJsonObject.getString("name"));
                    originTableField.setPartition(false);
                    originTableField.setOriginTable(originTable);
                    if (colJsonObject.getString("type").contains("struct")) { // struct column: also record its sub-fields
                        originTableField.setSubFieldSet(getStructFieldSet(colJsonObject.getString("type")));
                    }
                    curTableField.getOriginTableFieldList().add(originTableField);
                    curTableField.setCurFieldName(colJsonObject.getString("name"));
                    curFieldsList.add(curTableField);
                }
                // Partition columns
                List<JSONObject> partitionJsonObjectList = JSON.parseArray(tableMetaInfo.getPartitionColNameJson(), JSONObject.class);
                if (partitionJsonObjectList != null) {
                    for (JSONObject partitionColJsonObject : partitionJsonObjectList) {
                        CurTableField curTableField = new CurTableField();
                        OriginTableField originTableField = new OriginTableField();
                        originTableField.setField(partitionColJsonObject.getString("name"));
                        originTableField.setPartition(true);
                        originTableField.setOriginTable(originTable);
                        curTableField.getOriginTableFieldList().add(originTableField);
                        curTableField.setCurFieldName(partitionColJsonObject.getString("name"));
                        curFieldsList.add(curTableField);
                    }
                }
                curTableFieldMap.put(tableName, curFieldsList);
                refTableFieldMap.put(tableWithSchema, curFieldsList); // keyed as db.table, like tableMetaInfoMap
                String tableWithoutSchema = tableWithSchema.substring(tableWithSchema.indexOf(".") + 1);
                aliasMap.put(tableWithoutSchema, tableWithSchema); // the table name without the schema also works as an alias
            } else { // not a physical table: look it up in the subquery cache
                List<CurTableField> subqueryFieldsList = subqueryTableFiedlMap.get(tableName);
                if (subqueryFieldsList == null) {
                    throw new RuntimeException("Unrecognized table: " + tableName);
                }
                curTableFieldMap.put(tableName, subqueryFieldsList);
            }
        }

        // Recursively find the tables referenced under a node and load their columns
        public void loadTablesFromNodeRec(ASTNode astNode, Map<String, List<CurTableField>> tableFieldMap, Map<String, String> aliasMap) {
            if (astNode.getType() == HiveParser.TOK_TABREF) {
                ASTNode tabTree = (ASTNode) astNode.getChild(0); // TOK_TABNAME
                String tableName;
                if (tabTree.getChildCount() == 1) {
                    tableName = BaseSemanticAnalyzer.getUnescapedName((ASTNode) tabTree.getChild(0));
                } else { // db.table
                    tableName = BaseSemanticAnalyzer.getUnescapedName((ASTNode) tabTree.getChild(0))
                            + "." + BaseSemanticAnalyzer.getUnescapedName((ASTNode) tabTree.getChild(1));
                }
                // Load the columns from metadata (or the subquery cache)
                loadTableFiledByTableName(tableName, tableFieldMap, aliasMap);
                if (astNode.getChildCount() == 2) { // the table has an alias
                    aliasMap.put(astNode.getChild(1).getText(), tableName);
                }
            } else if (astNode.getType() == HiveParser.TOK_SUBQUERY) {
                // The subquery was walked (post-order) before this query, so its columns are cached under its alias
                String aliasName = astNode.getFirstChildWithType(HiveParser.Identifier).getText();
                loadTableFiledByTableName(aliasName, tableFieldMap, aliasMap);
            } else if (astNode.getChildCount() > 0) {
                for (Node childNode : astNode.getChildren()) {
                    loadTablesFromNodeRec((ASTNode) childNode, tableFieldMap, aliasMap);
                }
            }
        }

        // Recursively collect the comparison conditions under a node
        public void loadConditionFromNodeRec(ASTNode node, List<WhereCondition> whereConditionList,
                                             Map<String, List<CurTableField>> queryTableFieldMap, Map<String, String> aliasMap) {
            boolean isInFunction = node.getType() == HiveParser.TOK_FUNCTION
                    && node.getChild(0).getText().equalsIgnoreCase("in");
            if (operators.contains(node.getText().toLowerCase()) || isInFunction) {
                WhereCondition whereCondition = new WhereCondition();
                if (isInFunction) {
                    boolean negated = node.getParent() != null && node.getParent().getText().equalsIgnoreCase("not");
                    whereCondition.setOperator(negated ? "nin" : "in");
                } else {
                    whereCondition.setOperator(node.getText().toLowerCase());
                }
                boolean hasField = false;
                for (Node child : node.getChildren()) {
                    ASTNode operatorChildNode = (ASTNode) child;
                    if (operatorChildNode.getType() == HiveParser.DOT) { // column qualified with a table name or alias
                        ASTNode prefixNode = (ASTNode) operatorChildNode.getChild(0).getChild(0);
                        ASTNode fieldNode = (ASTNode) operatorChildNode.getChild(1);
                        getWhereField(whereCondition, prefixNode.getText(), fieldNode.getText(), queryTableFieldMap, aliasMap);
                        hasField = true;
                    } else if (operatorChildNode.getType() == HiveParser.TOK_TABLE_OR_COL) { // unqualified column
                        getWhereField(whereCondition, null, operatorChildNode.getChild(0).getText(), queryTableFieldMap, aliasMap);
                        hasField = true;
                    }
                }
                if (hasField) {
                    whereConditionList.add(whereCondition); // added once, even when both sides are columns
                }
            } else if (node.getChildCount() > 0) {
                for (Node nd : node.getChildren()) {
                    loadConditionFromNodeRec((ASTNode) nd, whereConditionList, queryTableFieldMap, aliasMap);
                }
            }
        }

        // Target table (db.table) of the outermost INSERT; not called in the code shown here
        private String getInputTableName(Stack<Node> stack) {
            ASTNode globalQueryNode = (ASTNode) stack.firstElement();
            ASTNode insertNode = (ASTNode) globalQueryNode.getFirstChildWithType(HiveParser.TOK_INSERT);
            return qualifiedTableName((ASTNode) insertNode.getChild(0).getChild(0)); // TOK_DESTINATION / TOK_INSERT_INTO -> TOK_TAB
        }

        // TOK_TAB -> TOK_TABNAME (db, table) or (table); an optional TOK_PARTSPEC follows TOK_TABNAME
        private String qualifiedTableName(ASTNode tabNode) {
            ASTNode tabNameNode = (ASTNode) tabNode.getChild(0);
            if (tabNameNode.getChildCount() == 2) {
                return BaseSemanticAnalyzer.getUnescapedName((ASTNode) tabNameNode.getChild(0))
                        + "." + BaseSemanticAnalyzer.getUnescapedName((ASTNode) tabNameNode.getChild(1));
            }
            return defaultSchemaName + "." + BaseSemanticAnalyzer.getUnescapedName((ASTNode) tabNameNode.getChild(0));
        }

        // Output columns of a SELECT clause, each with its origin columns
        public List<CurTableField> getTableFieldOutput(ASTNode selectNode, Map<String, List<CurTableField>> curQueryTableFieldMap, Map<String, String> aliasMap) {
            List<CurTableField> outputTableFieldList = new ArrayList<>();
            for (Node selectXPRNode : selectNode.getChildren()) {
                ASTNode selectXPRAstNode = (ASTNode) selectXPRNode; // TOK_SELEXPR
                int exprType = selectXPRAstNode.getChild(0).getType();
                // Implicit column list or select *: output every column of every table in the query
                if (exprType == HiveParser.TOK_SETCOLREF || exprType == HiveParser.TOK_ALLCOLREF) {
                    for (List<CurTableField> curTableFieldList : curQueryTableFieldMap.values()) {
                        outputTableFieldList.addAll(curTableFieldList);
                    }
                    return outputTableFieldList;
                } else {
                    // Collect the columns referenced by this select expression
                    CurTableField curTableField = new CurTableField();
                    loadCurTableFieldFromNodeRec(selectXPRAstNode, curTableField, curQueryTableFieldMap, aliasMap);
                    if (selectXPRAstNode.getChildCount() == 2) { // the expression has an alias
                        curTableField.setCurFieldName(selectXPRAstNode.getChild(1).getText());
                    }
                    outputTableFieldList.add(curTableField);
                }
            }
            return outputTableFieldList;
        }

        // Cache a subquery's output columns under its alias
        private void cacheSubqueryTableFieldMap(ASTNode subqueryNode, List<CurTableField> curTableFieldList) {
            String aliasName = subqueryNode.getFirstChildWithType(HiveParser.Identifier).getText();
            List<CurTableField> existsTableFieldList = subqueryTableFiedlMap.get(aliasName);
            if (existsTableFieldList != null) {
                // The alias is already taken, typically by another branch of a UNION:
                // append each column's origin columns position by position
                for (int i = 0; i < existsTableFieldList.size(); i++) {
                    existsTableFieldList.get(i).getOriginTableFieldList()
                            .addAll(curTableFieldList.get(i).getOriginTableFieldList());
                }
            } else {
                subqueryTableFiedlMap.put(aliasName, curTableFieldList);
            }
        }

        // Record the output columns of the INSERT target table
        private void cacheInsertTableField(ASTNode outputTableNode, List<CurTableField> curTableFieldList) {
            insertTableFieldMap.put(qualifiedTableName(outputTableNode), curTableFieldList);
        }

        // Recursively collect the columns referenced under a node
        public void loadCurTableFieldFromNodeRec(ASTNode recNode, CurTableField curTableField,
                                                 Map<String, List<CurTableField>> curQueryTableFieldMap, Map<String, String> aliasMap) {
            if (recNode.getChildCount() == 0) {
                return;
            }
            for (Node subNode : recNode.getChildren()) {
                ASTNode subAstNode = (ASTNode) subNode;
                if (subAstNode.getType() == HiveParser.DOT) { // qualified column: prefix.field
                    String prefix = subAstNode.getChild(0).getChild(0).getText();
                    String fieldName = subAstNode.getChild(1).getText();
                    curTableField.getOriginTableFieldList().addAll(getOriginFieldByFieldName(prefix, fieldName, curQueryTableFieldMap, aliasMap));
                    curTableField.setCurFieldName(fieldName);
                } else if (subAstNode.getType() == HiveParser.TOK_TABLE_OR_COL) {
                    // Unqualified column: search every table visible in the query
                    String fieldName = subAstNode.getChild(0).getText();
                    curTableField.getOriginTableFieldList().addAll(getOriginTableFieldList(curQueryTableFieldMap, fieldName));
                    curTableField.setCurFieldName(fieldName);
                } else {
                    loadCurTableFieldFromNodeRec(subAstNode, curTableField, curQueryTableFieldMap, aliasMap);
                }
            }
        }

        // Resolve a WHERE column (optionally prefixed with a table or alias) to its origin columns
        public void getWhereField(WhereCondition whereCondition, String prefix, String fieldName,
                                  Map<String, List<CurTableField>> queryTableFieldMap, Map<String, String> aliasMap) {
            List<OriginTableField> originTableFieldList = (prefix == null)
                    ? getOriginTableFieldList(queryTableFieldMap, fieldName)
                    : getOriginFieldByFieldName(prefix, fieldName, queryTableFieldMap, aliasMap); // prefix treated as a table
            // Append rather than overwrite, so a condition comparing two columns keeps both
            List<OriginTableField> merged = new ArrayList<>();
            if (whereCondition.getTableFieldList() != null) {
                merged.addAll(whereCondition.getTableFieldList());
            }
            merged.addAll(originTableFieldList);
            whereCondition.setTableFieldList(merged);
        }

        // Origin columns of prefix.fieldName, where prefix is a table name or an alias
        private List<OriginTableField> getOriginFieldByFieldName(String prefix, String fieldName,
                                                                 Map<String, List<CurTableField>> queryTableFieldMap, Map<String, String> aliasMap) {
            List<CurTableField> curFieldList = queryTableFieldMap.get(prefix); // prefix as a table name
            if (curFieldList == null && aliasMap.containsKey(prefix)) {         // prefix as an alias
                curFieldList = queryTableFieldMap.get(aliasMap.get(prefix));
            }
            if (curFieldList == null) {
                // The prefix may be a struct column (struct_col.sub_field) rather than a table
                return getOriginTableFieldList(queryTableFieldMap, prefix);
            }
            return getOriginTableFieldList(fieldName, curFieldList);
        }

        // Origin columns of the column named fieldName in the given column list
        private List<OriginTableField> getOriginTableFieldList(String fieldName, List<CurTableField> curFieldList) {
            for (CurTableField tableField : curFieldList) {
                if (fieldName.equalsIgnoreCase(tableField.getCurFieldName())) {
                    return tableField.getOriginTableFieldList();
                }
            }
            return new ArrayList<>(); // usually a constant column not computed from a table, e.g. one produced by LATERAL VIEW
        }

        // Origin columns of an unqualified column, searched across all tables of the query;
        // a column found in more than one table is ambiguous
        private List<OriginTableField> getOriginTableFieldList(Map<String, List<CurTableField>> queryTableFieldMap, String fieldName) {
            List<OriginTableField> originTableFieldList = new ArrayList<>();
            for (List<CurTableField> curTableFieldList : queryTableFieldMap.values()) {
                List<OriginTableField> matched = getOriginTableFieldList(fieldName, curTableFieldList);
                if (matched.isEmpty()) {
                    continue;
                }
                if (!originTableFieldList.isEmpty()) {
                    throw new RuntimeException("Ambiguous column: " + fieldName);
                }
                originTableFieldList = matched;
            }
            return originTableFieldList;
        }

        // Splitting the sub-fields of a struct type: getStructFieldSet(...) and the nested classes
        // CurTableField, OriginTableField and WhereCondition are not included in these notes
    }
}
```

Parsing the syntax tree with Hive

```java
package com.atguigu.dga.util;

import org.apache.hadoop.hive.ql.lib.DefaultGraphWalker;
import org.apache.hadoop.hive.ql.lib.Dispatcher;
import org.apache.hadoop.hive.ql.lib.GraphWalker;
import org.apache.hadoop.hive.ql.lib.Node;
import org.apache.hadoop.hive.ql.parse.*;

import java.util.Collections;
import java.util.Stack;

public class SqlParser {

    // Steps:
    // 1. convert the SQL into a syntax tree (the Hive dependency already provides the tool)
    // 2. use the tree walker Hive provides (post-order traversal)
    // 3. define a custom node processor (Dispatcher)
    // 4. hand the processor to the walker
    // 5. let the walker traverse the syntax tree
    public static void parse(Dispatcher dispatcher, String sql) throws Exception {
        // 1. SQL -> syntax tree (ParseDriver.parse(String) returns an ASTNode in Hive 2.x/3.x)
        ParseDriver parseDriver = new ParseDriver();
        ASTNode astNode = parseDriver.parse(sql);

        // Descend to the first TOK_QUERY node and use it as the root
        while (astNode != null && astNode.getType() != HiveParser.TOK_QUERY) {
            astNode = (ASTNode) astNode.getChild(0);
        }
        if (astNode == null) {
            throw new IllegalArgumentException("No query (TOK_QUERY) found in SQL: " + sql);
        }

        // 3-4. The processor is defined by the caller for each use case and passed in here
        GraphWalker graphWalker = new DefaultGraphWalker(dispatcher);

        // 5. Walk the syntax tree
        graphWalker.startWalking(Collections.<Node>singletonList(astNode), null);
    }

    public static void main(String[] args) throws Exception {
        String sql = " select a,b,c from gmall.user_info u where u.id='123' and dt='123123' ";
        // A custom node processor that prints every node
        SqlParser.parse(new TestDispatcher(), sql);
    }

    static class TestDispatcher implements Dispatcher {
        // What to do at each node
        @Override
        public Object dispatch(Node nd, Stack<Node> stack, Object... nodeOutputs) throws SemanticException {
            ASTNode astNode = (ASTNode) nd;
            System.out.println("type: " + astNode.getType() + " || token: " + astNode.getToken().getText());
            return null;
        }
    }
}
```
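The walk order matters here. The comments above describe Hive's `DefaultGraphWalker` as a post-order walker, so the `TOK_QUERY` of a subquery is dispatched before the `TOK_QUERY` that encloses it. This lets `CheckMultiPartitionScanDispatcher` cache a subquery's columns under its alias before the parent's FROM clause looks them up. The hypothetical `MiniNode` and `PostOrderWalker` classes below mimic this behavior without the Hive dependency; they are not Hive's implementation.

```java
import java.util.*;

// Hypothetical miniature of the walker/dispatcher pair used by SqlParser.
interface MiniDispatcher {
    void dispatch(MiniNode node, Deque<MiniNode> ancestors);
}

class MiniNode {
    final String type;
    final String label;
    final List<MiniNode> children;

    MiniNode(String type, String label, MiniNode... children) {
        this.type = type;
        this.label = label;
        this.children = Arrays.asList(children);
    }
}

class PostOrderWalker {
    private final MiniDispatcher dispatcher;

    PostOrderWalker(MiniDispatcher dispatcher) {
        this.dispatcher = dispatcher;
    }

    void walk(MiniNode root) {
        walk(root, new ArrayDeque<>());
    }

    private void walk(MiniNode node, Deque<MiniNode> ancestors) {
        ancestors.push(node);
        for (MiniNode child : node.children) {
            walk(child, ancestors);
        }
        ancestors.pop();
        dispatcher.dispatch(node, ancestors);   // a node is dispatched only after all of its children
    }

    // Labels of TOK_QUERY nodes in the order the dispatcher sees them.
    static List<String> queryOrder(MiniNode root) {
        List<String> order = new ArrayList<>();
        new PostOrderWalker((node, ancestors) -> {
            if (node.type.equals("TOK_QUERY")) {
                order.add(node.label);
            }
        }).walk(root);
        return order;
    }

    public static void main(String[] args) {
        // select ... from (select ... from ods.a) s
        MiniNode inner = new MiniNode("TOK_QUERY", "inner",
                new MiniNode("TOK_FROM", "", new MiniNode("TOK_TABREF", "ods.a")));
        MiniNode outer = new MiniNode("TOK_QUERY", "outer",
                new MiniNode("TOK_FROM", "", new MiniNode("TOK_SUBQUERY", "s", inner)));
        System.out.println(queryOrder(outer));   // [inner, outer]
    }
}
```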

Parsing the SQL syntax tree with Druid

import com.alibaba.druid.DbType;
import com.alibaba.druid.sql.SQLUtils;
import com.alibaba.druid.sql.ast.*;
import com.alibaba.druid.sql.ast.expr.SQLAggregateExpr;
import com.alibaba.druid.sql.ast.expr.SQLBinaryOpExpr;
import com.alibaba.druid.sql.ast.expr.SQLMethodInvokeExpr;
import com.alibaba.druid.sql.ast.statement.SQLSelect;
import com.alibaba.druid.sql.ast.statement.SQLSelectItem;
import com.alibaba.druid.sql.ast.statement.SQLSelectStatement;
import com.alibaba.druid.sql.dialect.hive.ast.HiveInsertStatement;
import com.alibaba.druid.sql.dialect.hive.parser.HiveStatementParser;
import com.alibaba.druid.sql.dialect.hive.visitor.*;
import com.alibaba.druid.sql.dialect.mysql.visitor.MySqlSchemaStatVisitor;
import com.alibaba.druid.sql.parser.ParserException;
import com.alibaba.druid.sql.parser.SQLParserFeature;
import com.alibaba.druid.sql.parser.SQLParserUtils;
import com.alibaba.druid.sql.parser.SQLStatementParser;
import com.alibaba.druid.sql.repository.SchemaRepository;
import com.alibaba.druid.sql.visitor.SchemaStatVisitor;
import com.alibaba.druid.stat.TableStat;
import com.alibaba.fastjson.JSON;

import java.util.List;
import java.util.Map;
import java.util.Set;

/**
 * Created by 黄凯 on 2023/6/7 14:11
 *
 * @author 黄凯
 * Always believe that good things will happen.
 */

public class DruidDemo {

/*public static void main(String[] args) {

String sql = " select a,b,c from gmall.user_info u where u.id='123' and dt='123123' ";

// String sql = "with t1 as (select aa(a), b, c, dt as dd\n" +

// " from tt1,\n" +

// " tt2\n" +

// " where tt1.a = tt2.b\n" +

// " and dt = '2023-05-11')\n" +

// "insert\n" +

// "overwrite\n" +

// "table\n" +

// "tt9\n" +

// "select a, b, c\n" +

// "from t1\n" +

// "where dt = date_add('2023-06-08', -4)\n" +

// "union\n" +

// "select a, b, c\n" +

// "from t2\n" +

// "where dt = date_add('2023-06-08', -7)";

// 提取表名

// SQLSelectItem sqlSelectItem = SQLUtils.toSelectItem(sql, DbType.mysql);

// System.out.println("sqlSelectItem = " + sqlSelectItem);

//

// SQLExpr expr = sqlSelectItem.getExpr();

//

// System.out.println(expr.toString());

// String string1 = JSON.toJSONString(expr);

//

//

//

// System.out.println(expr.getAttributesDirect());

//

// SQLCommentHint hint = expr.getHint();

// Map attributes1 = expr.getAttributes();

// List children1 = expr.getChildren();

// Map attributesDirect = expr.getAttributesDirect();

// Object subQuery = expr.getAttribute("subQuery");

// List afterCommentsDirect = expr.getAfterCommentsDirect();

// SQLObject parent = expr.getParent();

//

//

// Map attributes2 = children1.get(0).getAttributes();

// 创建Parser对象

SQLStatementParser parser = SQLParserUtils.createSQLStatementParser(sql, DbType.hive);

SQLStatement statement = parser.parseStatement();

List children2 = statement.getChildren();

Object select = statement.getAttribute("select");

Object query = statement.getAttribute("query");

Object from = statement.getAttribute("from");

List headHintsDirect = statement.getHeadHintsDirect();

Map attributesDirect1 = statement.getAttributesDirect();

Map attributes3 = statement.getAttributes();

List afterCommentsDirect1 = statement.getAfterCommentsDirect();

List beforeCommentsDirect = statement.getBeforeCommentsDirect();

String string = JSON.toJSONString(children2);

for (SQLObject child : children2) {

if (child instanceof SQLSelectItem) {

SQLSelectItem selectItem = (SQLSelectItem) child;

SQLExpr expr2 = selectItem.getExpr();

SQLObject parent = expr2.getParent();

System.out.println("parent = " + parent);

// 处理selectList中的表达式

System.out.println(expr2.toString());

}

}

List children = statement.getChildren();

for (SQLObject child : children) {

System.out.println("child = " + child);

Map attributes = child.getAttributes();

System.out.println("attributes = " + attributes);

}

SQLLimit limit = SQLUtils.getLimit(statement, DbType.mysql);

System.out.println("limit = " + limit);

//强转

SQLSelectStatement statement1 = (SQLSelectStatement) statement;

// HiveInsertStatement statement1 = (HiveInsertStatement) statement;

//对的

SQLSelect select1 = statement1.getSelect();

// SQLSelect select1 = null;

SQLSelect sqlSelect = select1;

List selectItems = sqlSelect.getQueryBlock().getSelectList();

for (SQLSelectItem selectItem : selectItems) {

SQLExpr expr3 = selectItem.getExpr();

// Handle the expression in the select list

System.out.println(expr3.toString());

}

SQLExpr where = sqlSelect.getQueryBlock().getWhere();

System.out.println("where = " + where);

// Verified to work

//

// Assume sql holds the SQL statement to analyze

// String sql = "SELECT column1, column2 FROM table1";

// Parse the SQL

List statements = SQLUtils.parseStatements(sql, DbType.hive);

// Create the lineage analyzer

HiveSchemaStatVisitor visitor = new HiveSchemaStatVisitor();

for (SQLStatement statement3 : statements) {

statement3.accept(visitor);

}

System.out.println(visitor);

SchemaStatVisitor schemaStatVisitor = new SchemaStatVisitor();

HiveStatementParser parser3 = new HiveStatementParser(sql);

// Parse into an AST with the parser; the SQLStatement is the AST root

SQLStatement sqlStatement = parser3.parseStatement();

HiveSchemaStatVisitor visitor3 = new HiveSchemaStatVisitor();

sqlStatement.accept(visitor3);

Map tables = visitor3.getTables();

System.out.println(tables.keySet());

System.out.println("visitor tables: " + visitor3.getTables());

System.out.println("visitor columns: " + visitor3.getColumns());

System.out.println("visitor conditions: " + visitor3.getConditions());

System.out.println("visitor GROUP BY columns: " + visitor3.getGroupByColumns());

System.out.println("visitor ORDER BY columns: " + visitor3.getOrderByColumns());

// Get the lineage result

// Map> tableLineage = visitor.getColumnsLineage();

// Get the lineage result

// Map> tableLineage = visitor.getco

// Create the lineage analyzer

// MySqlSchemaStatVisitor visitor = new MySqlSchemaStatVisitor();

// // Get the lineage result

// Map> tableLineage = visitor.getColumnsLineage();

// Assume statement is a SQLStatement object

// if (select1 instanceof SQLSelect) {

// SQLSelect sqlSelect = (SQLSelect) statement;

// List selectItems = sqlSelect.getQueryBlock().getSelectList();

// for (SQLSelectItem selectItem : selectItems) {

// SQLExpr expr3 = selectItem.getExpr();

// // Handle the expression in the select list

// System.out.println(expr3.toString());

// }

// }

// // Get the table name

// String tableName = statement.getTableSource().toString();

//

// // Get the column names

// List selectItems = statement.getSelect().getQueryBlock().getSelectList();

// for (SQLSelectItem item : selectItems) {

// String columnName = item.getExpr().toString();

// // Handle the column name

// }

//

// // Get the WHERE condition

// SQLExpr where = statement.getSelect().getQueryBlock().getWhere();

// if (where != null) {

// // Handle the condition

// }

}*/

    /**
     * Quick test of Druid's Hive parser and HiveSchemaStatVisitor.
     *
     * @param args unused
     */
    public static void main(String[] args) {
        // Test SQL. Druid checks syntax only: it does not verify that CTE t1 exposes
        // columns a and dt (t1 renames dt to dd), and t2 is treated as a physical table.
        String sql = "with t1 as (select aa(a), b, c, dt as dd\n" +
                " from tt1,\n" +
                " tt2\n" +
                " where tt1.a = tt2.b\n" +
                " and dt = '2023-05-11')\n" +
                "insert\n" +
                "overwrite\n" +
                "table\n" +
                "tt9\n" +
                "select a, b, c\n" +
                "from t1\n" +
                "where dt = date_add('2023-06-08', -4)\n" +
                "union\n" +
                "select a, b, c\n" +
                "from t2\n" +
                "where dt = date_add('2023-06-08', -7)";

        // Parse every statement with the Hive dialect and print them back in normalized form
        List<SQLStatement> statements = SQLUtils.parseStatements(sql, DbType.hive);
        String formatted = SQLUtils.toSQLString(statements, DbType.hive);
        System.out.println("formatted SQL = " + formatted);

        // Run one schema-stat visitor over all statements
        HiveSchemaStatVisitor visitor = new HiveSchemaStatVisitor();
        for (SQLStatement statement : statements) {
            statement.accept(visitor);
        }
        // Print the collected tables rather than the visitor object itself
        System.out.println("tables (parseStatements) = " + visitor.getTables());

        // Same analysis via HiveStatementParser. parseStatement() returns only the first
        // statement; that SQLStatement is the root of the AST.
        HiveStatementParser parser = new HiveStatementParser(sql);
        SQLStatement sqlStatement = parser.parseStatement();
        HiveSchemaStatVisitor visitor3 = new HiveSchemaStatVisitor();
        sqlStatement.accept(visitor3);

        Map<TableStat.Name, TableStat> tables = visitor3.getTables();
        System.out.println(tables.keySet());
        System.out.println("visitor tables: " + tables);
        System.out.println("visitor columns: " + visitor3.getColumns());
        System.out.println("visitor conditions: " + visitor3.getConditions());
        System.out.println("visitor GROUP BY columns: " + visitor3.getGroupByColumns());
        System.out.println("visitor ORDER BY columns: " + visitor3.getOrderByColumns());

        // Other details the visitor collects while walking the AST
        List<SQLAggregateExpr> aggregateFunctions = visitor3.getAggregateFunctions();
        List<SQLMethodInvokeExpr> functions = visitor3.getFunctions();
        List<SQLName> originalTables = visitor3.getOriginalTables();
        Set<TableStat.Relationship> relationships = visitor3.getRelationships();
        System.out.println("aggregate functions: " + aggregateFunctions);
        System.out.println("functions: " + functions);
        System.out.println("original tables: " + originalTables);
        System.out.println("column relationships: " + relationships);
    }

}
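The visitor only reports which tables and columns a single statement touches; lineage comes from connecting statements. The following is a minimal sketch that assumes each statement's read tables and written table have already been extracted. For example, `TableStat` entries with a non-zero `getSelectCount()` could serve as sources, and the entry with a non-zero `getInsertCount()` could serve as the target. That mapping step is not shown. `TableLineage`, its methods, and the table `report_daily` are hypothetical and are not part of Druid. Walking the graph backwards answers data backtracking (where a table's data comes from). Walking it forwards answers impact analysis (what is affected if a table changes).

```java
import java.util.ArrayDeque;
import java.util.Collection;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/**
 * Hypothetical helper, not part of Druid: a table-level lineage graph.
 * An edge source -> target means one statement read source and wrote target.
 */
class TableLineage {
    // source table -> tables written from it
    private final Map<String, Set<String>> downstream = new HashMap<>();
    // target table -> tables it was written from
    private final Map<String, Set<String>> upstream = new HashMap<>();

    /** Records one statement that writes {@code target} from {@code sources}. */
    void addStatement(Collection<String> sources, String target) {
        for (String source : sources) {
            downstream.computeIfAbsent(source, k -> new LinkedHashSet<>()).add(target);
            upstream.computeIfAbsent(target, k -> new LinkedHashSet<>()).add(source);
        }
    }

    /** Data backtracking: every table that directly or indirectly feeds {@code table}. */
    Set<String> allUpstream(String table) {
        return reach(table, upstream);
    }

    /** Impact analysis: every table directly or indirectly derived from {@code table}. */
    Set<String> allDownstream(String table) {
        return reach(table, downstream);
    }

    // Breadth-first search; the visited set suppresses repeats and stops on cycles.
    private static Set<String> reach(String start, Map<String, Set<String>> edges) {
        Set<String> visited = new LinkedHashSet<>();
        Deque<String> queue = new ArrayDeque<>(edges.getOrDefault(start, Set.of()));
        while (!queue.isEmpty()) {
            String table = queue.poll();
            if (visited.add(table)) {
                queue.addAll(edges.getOrDefault(table, Set.of()));
            }
        }
        return visited;
    }

    public static void main(String[] args) {
        TableLineage lineage = new TableLineage();
        // Mirrors the test SQL: tt9 is loaded from tt1 and tt2 (through CTE t1) and from t2
        lineage.addStatement(List.of("tt1", "tt2", "t2"), "tt9");
        // Hypothetical downstream job that reads tt9
        lineage.addStatement(List.of("tt9"), "report_daily");
        // prints "upstream of report_daily = [tt9, tt1, tt2, t2]"
        System.out.println("upstream of report_daily = " + lineage.allUpstream("report_daily"));
        // prints "downstream of tt1 = [tt9, report_daily]"
        System.out.println("downstream of tt1 = " + lineage.allDownstream("tt1"));
    }
}
```

The sketch uses breadth-first search with a visited set. This reports a table reachable through several paths only once. It also keeps a job that reads and overwrites the same table from looping forever. Using `table.column` strings as node names would extend the same structure to column-level lineage.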