How to Use the ELK Aggregated Logging System in a Distributed Environment

Introduction to ELK

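Most readers will already be familiar with the ELK logging stack. If your system runs as a cluster with multiple instances, checking logs on each backend server is inconvenient: front-end requests are routed to the backend app instances at random, so you cannot know in advance which instance handled a given request. What you need is an aggregated log query system, and ELK is a good choice.

ELK stands for Elasticsearch + Logstash + Kibana. Logstash parses the logs and extracts the fields you want; Elasticsearch indexes the logs; Kibana displays them.

Here we also add a collector, Filebeat, which ships the logs of each app.

The system architecture is shown below: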

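ELK Configuration

ELK requires a JDK to be installed. Only a brief configuration is covered here; detailed installation guides are easy to find online. Interested readers can refer to this article:

https://blog.51cto.com/54dev/2570811?source=dra

After unpacking Logstash, edit the startup.options file: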

logstash/config/startup.options:

    LS_HOME=/home/elk/support/logstash
    LS_SETTINGS_DIR="${LS_HOME}/config"
    LS_OPTS="--path.settings ${LS_SETTINGS_DIR}"
    LS_JAVA_OPTS=""
    LS_PIDFILE=${LS_HOME}/logs/logstash.pid
    LS_USER=elk
    LS_GROUP=elk
    LS_GC_LOG_FILE=${LS_HOME}/logs/logstash-gc.log

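Next, configure the Beats input port and the Elasticsearch address in Logstash:

logstash/config/logstash-aicrm-with-filebeat.yml:

    input {
      beats {
        # Port on which Logstash listens for Filebeat connections
        port => 5044
      }
    }
    output {
      elasticsearch {
        # Elasticsearch address and the index the logs are written to
        hosts => "192.168.205.129:9200"
        index => "aicrm-app-node"
      }
    }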

For Filebeat, configure the log paths, the multiline format, and the Logstash address:

filebeat/filebeat.yml:

    filebeat:
      prospectors:
        -
          paths:
            - /home/elk/logs/xxx*.log
          type: log
          # Lines that do not start with '[' are appended to the previous line
          multiline.pattern: '^\['
          multiline.negate: true
          multiline.match: after
    output:
      logstash:
        hosts: ["192.168.205.129:5044"]
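To make the three multiline settings easier to picture, here is a minimal Python sketch of the grouping they describe. It is only an illustration of the idea, not Filebeat's actual implementation; the function name `merge_multiline` and the sample lines are made up for this example.

```python
import re


def merge_multiline(lines, pattern=r"^\[", negate=True):
    """Group raw lines into events, mimicking 'match: after'.

    With negate=True, a line that does NOT match the pattern is treated as a
    continuation and appended to the previous event. With negate=False, a
    line that DOES match is treated as the continuation instead.
    """
    regex = re.compile(pattern)
    events = []
    for line in lines:
        matched = bool(regex.search(line))
        is_continuation = (not matched) if negate else matched
        if is_continuation and events:
            # Glue the continuation line onto the event before it
            events[-1] += "\n" + line
        else:
            # Start a new event (also covers a leading continuation line)
            events.append(line)
    return events


# Hypothetical log lines that start with '[' (not taken from the article)
raw_lines = [
    "[2018-11-09 23:01:18] ERROR something failed",
    "java.lang.reflect.InvocationTargetException",
    "\tat java.lang.reflect.Method.invoke(Method.java:597)",
    "[2018-11-09 23:01:19] INFO ok",
]
events = merge_multiline(raw_lines)
print(len(events))
```

With these settings a Java stack trace stays attached to the log line that produced it, instead of being shipped to Logstash as many separate events.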

Finally, configure the Elasticsearch address in Kibana:

kibana/config/kibana.yml:

    ...
    server.host: "0.0.0.0"
    ...
    elasticsearch.url: "http://192.168.205.129:9200"

Once everything is configured, start ELK.

Start the components in this order: elasticsearch ➡ logstash ➡ filebeat ➡ kibana
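If you want to confirm that each component is actually up before starting the next one, a small port check can help. The sketch below is only a rough illustration: it uses the host and ports from the configuration in this article (9200, 5044, 5601), leaves out Filebeat because it does not listen on a port in this setup, and the function names are made up for the example.

```python
import socket

# Listening services in startup order, using the addresses from this article
SERVICES = [
    ("elasticsearch", "192.168.205.129", 9200),
    ("logstash (beats input)", "192.168.205.129", 5044),
    ("kibana", "192.168.205.129", 5601),
]


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def first_unreachable(services, check=port_open):
    """Return the name of the first service (in order) that is not reachable.

    Returns None when every service accepts connections.
    """
    for name, host, port in services:
        if not check(host, port):
            return name
    return None
```

Calling `first_unreachable(SERVICES)` returns the name of the first component that is not accepting connections yet, or `None` when all of them are reachable. An open port only shows that the process is listening, not that it is fully healthy.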

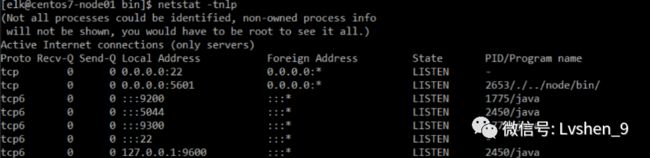

Processes after startup:

Then open Kibana in a browser:

http://192.168.205.129:5601/app/kibana

Here we need to create the ES index:

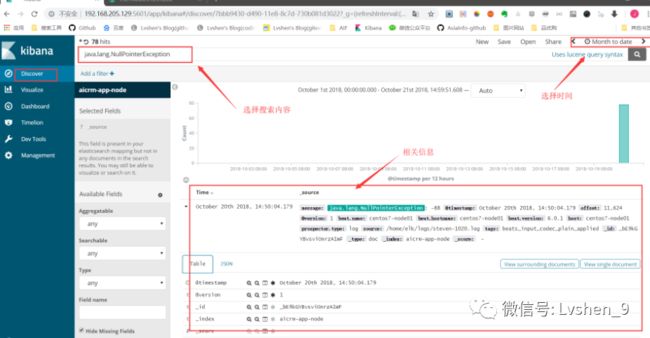

After that, we can search the logs:

About Log Parsing

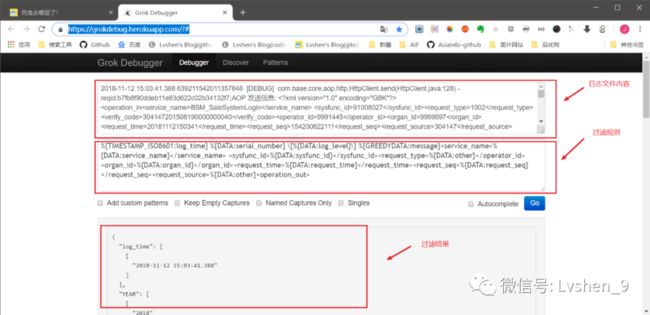

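Depending on the business, ELK may need to parse logs in several formats. In that case, configure grok rules in the Logstash configuration file to parse them. It is a good idea to test grok patterns with an online tool first.

Online Grok debugger: https://grokdebug.herokuapp.com/?#

Note that this site may only be reachable through a proxy.

Parsing example:

Online test example:

The grok expression goes into the Logstash configuration file, as shown in the figure below:

Exception log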

2018-11-09 23:01:18.766 [ERROR] com.xxx.rpc.server.handler.ServerHandler - 调用com.xxx.search.server.SearchServer.search时发生错误!

java.lang.reflect.InvocationTargetException

at sun.reflect.GeneratedMethodAccessor6.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

Grok pattern

    %{TIMESTAMP_ISO8601:log_time} \[%{DATA:log_level}\] %{GREEDYDATA:message}

Parse result

    {
      "log_time": [["2018-11-09 23:01:18.766"]],
      "YEAR": [["2018"]],
      "MONTHNUM": [["11"]],
      "MONTHDAY": [["09"]],
      "HOUR": [["23", null]],
      "MINUTE": [["01", null]],
      "SECOND": [["18.766"]],
      "ISO8601_TIMEZONE": [[null]],
      "log_level": [["ERROR"]],
      "message": [[" com.xxx.rpc.server.handler.ServerHandler - 调用com.xxx.search.server.SearchServer.search时发生错误!"]]
    }
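If you cannot reach the online debugger, you can get a feel for what this grok pattern does with an ordinary regular expression. The Python sketch below is a hand-written approximation: the timestamp part is much simpler than grok's real `TIMESTAMP_ISO8601` definition, and `parse_line` is a name made up for this example.

```python
import re

# Rough equivalent of the grok pattern above, with the literal brackets escaped:
#   TIMESTAMP_ISO8601 -> simplified "YYYY-MM-DD HH:MM:SS.mmm"
#   DATA              -> .*?   (non-greedy)
#   GREEDYDATA        -> .*    (greedy)
LOG_LINE = re.compile(
    r"(?P<log_time>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}) "
    r"\[(?P<log_level>.*?)\] "
    r"(?P<message>.*)"
)


def parse_line(line):
    """Return the extracted fields as a dict, or None if the line does not match."""
    match = LOG_LINE.match(line)
    return match.groupdict() if match else None


# First line of the exception log shown earlier
sample = (
    "2018-11-09 23:01:18.766 [ERROR] "
    "com.xxx.rpc.server.handler.ServerHandler - "
    "调用com.xxx.search.server.SearchServer.search时发生错误!"
)
fields = parse_line(sample)
print(fields["log_time"], fields["log_level"])
```

The stack trace lines that follow the first line do not match the pattern on their own, which is one reason the multiline merging on the Filebeat side matters.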

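Now let's parse the following log:

Request message fields: service_name (interface name), sysfunc_id (function ID), operator_id (operator ID), organ_id (organization number), request_seq (request serial number)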

2018-11-12 15:03:41.388 639211542011357848 [DEBUG] com.base.core.aop.http.HttpClient.send(HttpClient.java:128) -

reqid:b7fb8f90ddeb11e83d622c02b34132f7;AOP 发送信息: BSM_SaleSystemLogin

91008027 1002 304147201506190000000040 9991445

9999997 20181112150341 154200622111 304147 0100 0100 13xx6945211 871221 101704 34 0000 120.33.xxx.198, 10.46.xxx.182, 12.0.1

BSM_SaleSystemLogin 1002 91008027

15xxx0622111 20181112150342 471860579309 304147 0 0000 173616671275425657328820 132394 13xxx945211 6100004 1 1 20170623145448 30000101000000 0 20170623145448 4020205 创建手机号码与角色对应关系

|

Running this log through the Grok debugger gives the following grok expression:

    %{TIMESTAMP_ISO8601:log_time} %{DATA:serial_number} \[%{DATA:log_level}\] %{GREEDYDATA:message}%{DATA:service_name} %{DATA:sysfunc_id} %{DATA:other}%{DATA:organ_id} %{DATA:request_time} %{DATA:request_seq} %{DATA:other}

The parse result is as follows:

    {
      "log_time": [["2018-11-12 15:03:41.388"]],
      "YEAR": [["2018"]],
      "MONTHNUM": [["11"]],
      "MONTHDAY": [["12"]],
      "HOUR": [["15", null]],
      "MINUTE": [["03", null]],
      "SECOND": [["41.388"]],
      "ISO8601_TIMEZONE": [[null]],
      "serial_number": [["639211542011357848 "]],
      "log_level": [["DEBUG"]],
      "message": [[
        " com.base.core.aop.http.HttpClient.send(HttpClient.java:128) - reqid:b7fb8f90ddeb11e83d622c02b34132f7;AOP 发送信息: 304147201506190000000040 9991445",
        "3041470100 0100 13xxx945211 871221 101704 34 0000 120.33.xxx.198, 10.46.xxx.182, 12.0.1"
      ]],
      ...
    }

Next, let's parse an nginx log:

2018/11/01 23:30:39 [error] 15105#0: *397937824 connect() failed (111: Connection refused) while connecting to upstream, client: 10.48.xxx.3, server: 127.0.0.1, request: "POST /o2o_usercenter_svc/xxx/sysUserInfoService?req_sid=1612e430ddeb11e83d622c02b34132f7&syslogid=null HTTP/1.1", upstream: "http://127.0.0.1:xxx/o2o_usercenter_svc/remote/sysUserInfoService?req_sid=1612e430ddeb11e83d622c02b34132f7&syslogid=null", host: "10.46.xxx.155:xxx"

Grok pattern:

    (?<timestamp>%{YEAR}[./-]%{MONTHNUM}[./-]%{MONTHDAY}[- ]%{TIME}) \[%{LOGLEVEL:severity}\] %{POSINT:pid}#%{NUMBER}: %{GREEDYDATA:errormessage}(?:, client: (?<remote_addr>%{IP}|%{HOSTNAME}))(?:, server: %{IPORHOST:server}?)(?:, request: %{QS:request})?(?:, upstream: (?<upstream>\"%{URI}\"|%{QS}))?(?:, host: %{QS:request_host})?(?:, referrer: \"%{URI:referrer}\")?

The parse result is as follows:

    {
      "timestamp": [["2018/11/01 23:30:39"]],
      "YEAR": [["2018"]],
      "MONTHNUM": [["11"]],
      "MONTHDAY": [["01"]],
      "TIME": [["23:30:39"]],
      "HOUR": [["23"]],
      "MINUTE": [["30"]],
      "SECOND": [["39"]],
      "severity": [["error"]],
      "pid": [["15105"]],
      "NUMBER": [["0"]],
      "BASE10NUM": [["0"]],
      "errormessage": [["*397937824 connect() failed (111: Connection refused) while connecting to upstream"]],
      "remote_addr": [["10.48.xxx.3"]],
      "IP": [["10.48.xxx.3", null, null, null]],
      "IPV6": [[null, null, null, null]],
      "IPV4": [["10.48.xxx.3", null, null, null]],
      "HOSTNAME": [[null, "127.0.0.1", "127.0.0.1", null]],
      "server": [["127.0.0.1"]],
      ...
    }
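The same nginx error line can also be taken apart with a plain regular expression, which is handy for quick local checks. The Python sketch below is a simplified stand-in for the grok pattern, not a faithful translation: the sub-patterns are looser than grok's `IP`, `HOSTNAME` and `QS`, the referrer part is left out, and the names `NGINX_ERROR` and `parse_nginx_error` are made up for this example.

```python
import re

# Simplified equivalent of the nginx error-log grok pattern
NGINX_ERROR = re.compile(
    r'(?P<timestamp>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) '
    r'\[(?P<severity>\w+)\] '
    r'(?P<pid>\d+)#\d+: '
    r'(?P<errormessage>.*?)'
    r'(?:, client: (?P<remote_addr>[^,]+))'
    r'(?:, server: (?P<server>[^,]+))?'
    r'(?:, request: "(?P<request>[^"]*)")?'
    r'(?:, upstream: "(?P<upstream>[^"]*)")?'
    r'(?:, host: "(?P<request_host>[^"]*)")?$'
)


def parse_nginx_error(line):
    """Return the extracted fields as a dict, or None if the line does not match."""
    match = NGINX_ERROR.match(line)
    return match.groupdict() if match else None


# The nginx error line from this article
SAMPLE = (
    '2018/11/01 23:30:39 [error] 15105#0: *397937824 connect() failed '
    '(111: Connection refused) while connecting to upstream, '
    'client: 10.48.xxx.3, server: 127.0.0.1, '
    'request: "POST /o2o_usercenter_svc/xxx/sysUserInfoService'
    '?req_sid=1612e430ddeb11e83d622c02b34132f7&syslogid=null HTTP/1.1", '
    'upstream: "http://127.0.0.1:xxx/o2o_usercenter_svc/remote/sysUserInfoService'
    '?req_sid=1612e430ddeb11e83d622c02b34132f7&syslogid=null", '
    'host: "10.46.xxx.155:xxx"'
)
result = parse_nginx_error(SAMPLE)
print(result["severity"], result["pid"], result["remote_addr"], result["server"])
```

The optional groups mirror the `(?: ... )?` parts of the grok pattern, so lines without a request, upstream or host section still match.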

Kibana Dashboards

As the figure above shows, we can configure monitoring panels in Kibana, for example a panel that monitors exception logs.
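Behind an exception-log panel there is essentially an Elasticsearch query. As a rough sketch of what such a query might look like, the Python snippet below builds a query body for recent ERROR entries. It assumes the logs were parsed with the grok pattern shown earlier so that a `log_level` field exists, and that events carry the `@timestamp` field Logstash normally adds; the function name and the default values are made up for this example.

```python
import json


def error_log_query(minutes=15, level="ERROR", size=50):
    """Build a query body for recent log entries of the given level.

    Assumes a 'log_level' field extracted by grok and the '@timestamp'
    field that Logstash adds to events by default.
    """
    return {
        "query": {
            "bool": {
                # 'match' goes through the analyzer, so it also works when
                # log_level was indexed as an analyzed text field
                "must": [{"match": {"log_level": level}}],
                # Only look at the last N minutes
                "filter": [{"range": {"@timestamp": {"gte": f"now-{minutes}m"}}}],
            }
        },
        "sort": [{"@timestamp": {"order": "desc"}}],
        "size": size,
    }


query = error_log_query()
print(json.dumps(query, indent=2))
```

A body like this could be sent to the `_search` endpoint of the `aicrm-app-node` index configured earlier, for example to cross-check what a Kibana panel shows.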

For more on configuring ELK monitoring panels, interested readers can refer to this article:

https://blog.51cto.com/hnr520/1845900
