4️⃣Hive

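1. What is Hive?

- Hive was open-sourced by Facebook as a tool for computing statistics over massive volumes of structured log data.

- Hive is a data warehouse tool built on Hadoop. It maps structured data files to tables and provides SQL-like querying. (Hive does not store data itself; it only reads and processes data stored elsewhere.)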

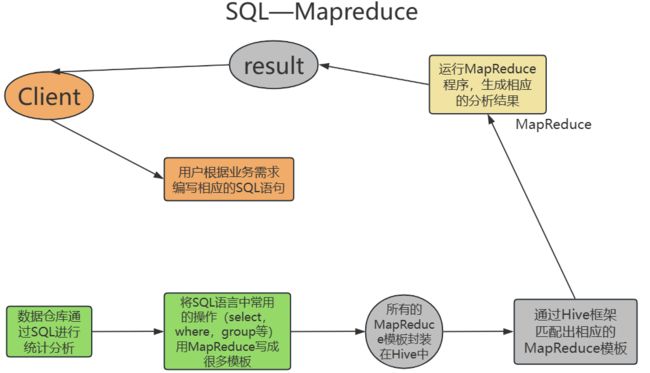

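2. The essence of Hive

- Hive translates HQL into MapReduce programs.

- The data Hive processes is stored in HDFS.

- The underlying engine for Hive's data analysis is MapReduce.

- The resulting jobs run on YARN.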
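To see this translation for a concrete query, Hive's EXPLAIN statement prints the plan a query compiles to. This is a minimal sketch that assumes the emp table created later in these notes already exists; the stages printed depend on the Hive version and execution engine, so no output is shown.

```sql
-- Show the stage plan Hive generates for a simple aggregation.
-- Assumes the emp table defined later in these notes.
EXPLAIN
SELECT deptno, count(*) AS cnt
FROM emp
GROUP BY deptno;
```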

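3. Strengths and weaknesses of Hive

3.1. Strengths

- The interface uses SQL-like syntax, which enables rapid development (simple and easy to pick up).

- There is no need to write MapReduce by hand, which lowers the learning cost for developers.

- Hive's execution latency is fairly high, so it is typically used for data analysis where real-time response is not required.

- Hive's advantage lies in processing large data sets; because of its high latency it offers no advantage on small ones.

- Hive supports user-defined functions, so users can implement functions for their own needs.

3.2. Weaknesses

- HQL has limited expressive power
  - Iterative algorithms cannot be expressed.
  - It is weak at data mining: constrained by the MapReduce processing flow, more efficient algorithms cannot be implemented.

- Hive is relatively inefficient
  - The MapReduce jobs Hive generates automatically are usually not very smart.
  - Hive is hard to tune, and tuning is coarse-grained.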

4. Hive architecture

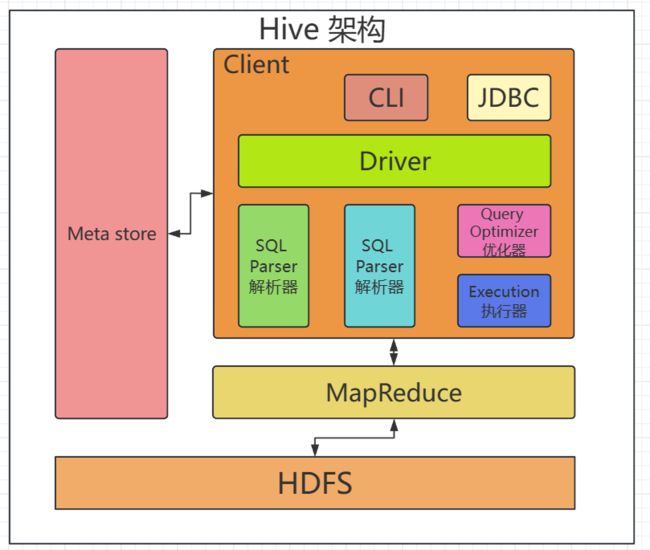

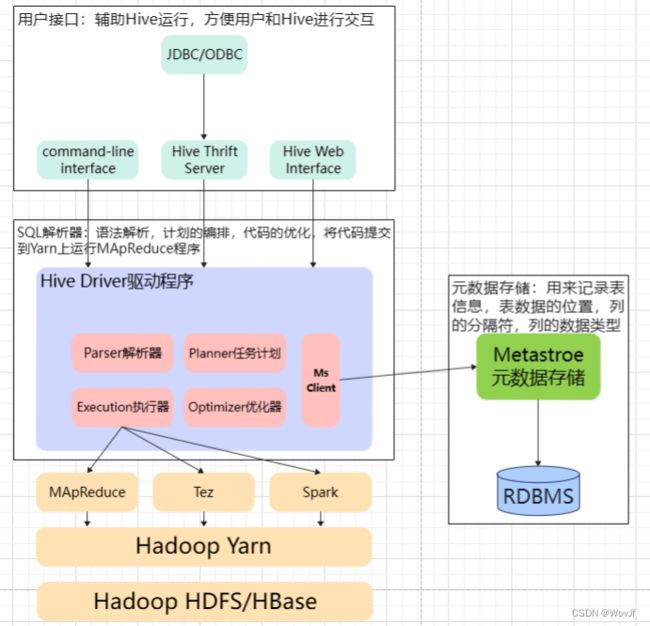

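User interfaces: Client

- CLI (command-line interface), JDBC/ODBC (access Hive over JDBC), and WEBUI (access Hive from a browser)

Metadata: Metastore

- Metadata includes the table name, the database the table belongs to (default is default), the table owner, columns and partition columns, the table type (for example whether it is an external table), the directory holding the table's data, and so on.

- By default the metadata is stored in the embedded Derby database; storing the Metastore in MySQL is recommended.

Hadoop

- HDFS is used for storage and MapReduce for computation.

Driver

- Parser (SQL Parser): converts the SQL string into an abstract syntax tree (AST), usually with a third-party library such as ANTLR, then analyzes the AST: does the table exist, do the columns exist, is the SQL semantically valid.

- Compiler (Physical Plan): compiles the AST into a logical execution plan.

- Optimizer (Query Optimizer): optimizes the logical execution plan.

- Executor (Execution): converts the logical plan into a runnable physical plan. For Hive, that means MR/Spark jobs.

Hive receives the user's instructions (SQL) through its interactive interfaces, uses its own Driver together with the metadata (Metastore) to translate them into MapReduce jobs, submits those jobs to Hadoop, and finally returns the results to the user interface.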

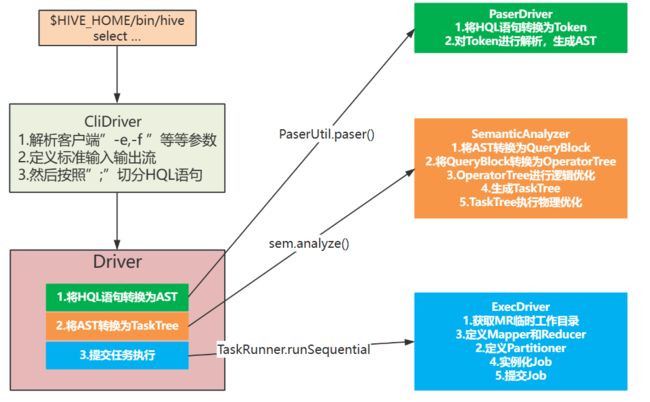

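5. How HQL is converted into MR jobs

- On entry, the HQL grammar rules defined with the ANTLR framework drive lexical and syntactic parsing, turning the HQL into an AST (abstract syntax tree).

- The AST is traversed to extract QueryBlocks, the basic building blocks of a query; a QueryBlock can be thought of as the smallest unit of query execution.

- The QueryBlocks are traversed and converted into an OperatorTree (the logical execution plan); an operator can be thought of as an indivisible logical execution unit.

- The logical optimizer optimizes the OperatorTree, for example by merging unnecessary ReduceSinkOperators to reduce the amount of shuffled data.

- The OperatorTree is traversed and converted into a TaskTree. This is the translation into MR tasks, turning the logical plan into a physical plan.

- The physical optimizer optimizes the TaskTree.

- The final execution plan is generated and the job is submitted to the Hadoop cluster.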

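5.1. Core flow of converting HQL to MR

5.2. Program entry point: CliDriver

- An HQL statement is usually run in one of the following ways:
  - Run $HIVE_HOME/bin/hive to enter the client, then execute HQL;
  - $HIVE_HOME/bin/hive -e "hql";
  - $HIVE_HOME/bin/hive -f hive.sql;
  - Start the hiveserver2 service first, then connect over JDBC and submit HQL remotely.

- So HQL is submitted mainly through the two scripts $HIVE_HOME/bin/hive and $HIVE_HOME/bin/hiveserver2. For the hive CLI script, the Java main class that is finally launched is "org.apache.hadoop.hive.cli.CliDriver", so CliDriver is the program entry point traced here.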

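6. Hive compared with databases

Because Hive uses HQL, a query language similar to SQL, it is easy to mistake Hive for a database. Structurally, apart from the similar query language, Hive and a database have little in common.

| | Hive | Database |
| --- | --- | --- |
| Query language | HQL | SQL |
| Data storage | HDFS | Block devices or local file system |
| Data updates | Modifying and adding rows not supported | Modifying and adding rows supported |
| Execution | MapReduce | Executor |
| Latency | High | Low |
| Scalability | High | Low |
| Data scale | Large | Small |
| Indexes | Bitmap indexes added after version 0.8 | Multiple index types supported |
| Transactions | Supported starting after version 0.14 | Supported |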

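7. Primitive data types

| Hive type | Java type | Size | Example |
| --- | --- | --- | --- |
| TINYINT | byte | 1-byte signed integer | 20 |
| SMALLINT | short | 2-byte signed integer | 20 |
| INT | int | 4-byte signed integer | 20 |
| BIGINT | long | 8-byte signed integer | 20 |
| BOOLEAN | boolean | Boolean, true or false | TRUE FALSE |
| FLOAT | float | Single-precision floating point | 3.14159 |
| DOUBLE | double | Double-precision floating point | 3.14159 |
| STRING | String | Character sequence. A character set can be specified. Single or double quotes may be used. | 'now is the time' "for all good men" |
| TIMESTAMP | | Time type | |
| BINARY | | Byte array | |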

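8. Collection data types

| Type | Description | Syntax example |
| --- | --- | --- |
| STRUCT | Similar to a struct in C: elements are accessed with dot notation. For example, if a column has type STRUCT{first STRING, last STRING}, the first element is referenced as column.first. | struct(), for example struct<first:string, last:string> |
| MAP | A collection of key-value pairs accessed with array notation. For example, if a column has type MAP with the pairs 'first'->'John' and 'last'->'Doe', the value stored under the key last is retrieved with column['last']. | map(), for example map<string, string> |
| ARRAY | A collection of variables of the same type and name. The variables are called elements and are numbered from 0. For example, for the array value ['John', 'Doe'], the second element is referenced as array_name[1]. | array(), for example array<string> |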
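The three collection types can be combined in one table. The sketch below is illustrative only: the table person_demo, its columns, the delimiters, and the key 'xiao song' are assumptions, not part of the original notes.

```sql
-- Hypothetical table; names and delimiters are illustrative only.
CREATE TABLE IF NOT EXISTS person_demo (
  name     STRING,
  friends  ARRAY<STRING>,
  children MAP<STRING, INT>,
  address  STRUCT<street:STRING, city:STRING>
)
ROW FORMAT DELIMITED
  FIELDS TERMINATED BY ','
  COLLECTION ITEMS TERMINATED BY '_'
  MAP KEYS TERMINATED BY ':'
  LINES TERMINATED BY '\n';

-- Element access: index for ARRAY, key for MAP, dot for STRUCT.
SELECT friends[1], children['xiao song'], address.city
FROM person_demo;
```

A matching input line would look like `songsong,bingbing_lili,xiao song:18_xiaoxiao song:19,hui long guan_beijing`, where ',' separates fields, '_' separates collection items, and ':' separates map keys from values.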

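9. DDL: data definition

9.1. Creating a database

CREATE DATABASE [IF NOT EXISTS] database_name -- database name
[COMMENT database_comment] -- description of the database
[LOCATION hdfs_path] -- HDFS directory the database maps to
[WITH DBPROPERTIES (property_name=property_value, ...)]; -- database properties

9.1.1. Create a database. The default HDFS path for a database is /user/hive/warehouse/*.db.

hive (default)> create database db_hive;

9.1.2. Add if not exists to avoid an error when the database already exists (the standard form).

hive (default)> create database if not exists db_hive;

9.1.3. Create a database and specify where it is stored on HDFS

hive (default)> create database db_hive2 location '/db_hive2.db';

9.1.4. How the metadata works

- Every database or table created in Hive has a corresponding directory in HDFS. The mapping between databases/tables and directories is kept in tables in MySQL. When creating a database or table, location can be used to specify the HDFS directory it maps to; if given, that directory is used.

- If location is not given:
  - a database maps to /user/hive/warehouse/database_name.db by default;
  - a table maps to the directory of its database followed by /table_name by default.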

9.2. Querying databases

9.2.1. Listing databases

9.2.1.1. List databases

hive> show databases;

9.2.1.2. Filter the listed databases

hive> show databases like 'db_hive*';
OK
db_hive
db_hive_1

9.2.2. Viewing database details

9.2.2.1. Show database information

- desc database database_name

hive> desc database db_hive;
OK
db_hive hdfs://hadoop102:9820/user/hive/warehouse/db_hive.db WovJ

9.2.2.2. Show detailed database information with extended

- desc database extended database_name

hive> desc database extended db_hive;
OK
db_hive hdfs://hadoop102:9820/user/hive/warehouse/db_hive.db WovJ

9.2.3. Switch the current database

hive (default)> use db_hive;

9.3. Altering a database

- ALTER DATABASE sets key-value pairs in a database's DBPROPERTIES to describe the database.

- alter database database_name set dbproperties('k'='v');

hive (default)> alter database db_hive set dbproperties('createtime'='20170830');

- View the result in Hive

hive> desc database extended db_hive;
db_name comment location owner_name owner_type parameters
db_hive hdfs://hadoop102:9820/user/hive/warehouse/db_hive.db WovJ USER {createtime=20170830}

9.4. Dropping a database

9.4.1. Drop an empty database

- drop database database_name

hive> drop database db_hive2;

9.4.2. If the database to drop may not exist, use if exists to check first

hive> drop database db_hive;
FAILED: SemanticException [Error 10072]: Database does not exist: db_hive
hive> drop database if exists db_hive2;

9.4.3. If the database is not empty, use cascade to force the drop

- drop database database_name cascade

hive> drop database db_hive cascade;

9.4.4. if not exists | if exists

- if not exists: can be added when creating a database or table. The object is created only if it does not already exist.

- if exists: can be added when dropping a database or table. The object is dropped only if it exists.

9.5. Creating tables

9.5.1. Table creation syntax

CREATE [EXTERNAL] TABLE [IF NOT EXISTS] table_name -- table name; EXTERNAL creates an external table, otherwise a managed (internal) table
[(col_name data_type [COMMENT col_comment], ...)] -- column names, types, and comments
[COMMENT table_comment] -- table description
[PARTITIONED BY (col_name data_type [COMMENT col_comment], ...)] -- partition columns: name, type, comment; partitioning splits data into directories
[CLUSTERED BY (col_name, col_name, ...) -- bucketing columns; bucketing splits the data itself
[SORTED BY (col_name [ASC|DESC], ...)] INTO num_buckets BUCKETS] -- sort columns (rarely used) and number of buckets
[ROW FORMAT delimited fields terminated by delimiter] -- delimiter between fields in a row
[collection items terminated by delimiter] -- delimiter between collection elements
[map keys terminated by delimiter] -- delimiter between a map key and its value
[lines terminated by delimiter] -- line delimiter
[STORED AS file_format] -- storage format of the table data (textfile, orc, parquet)
[LOCATION hdfs_path] -- HDFS directory of the table
[TBLPROPERTIES (property_name=property_value, ...)] -- table properties
[AS select_statement]

Clause notes:

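- CREATE TABLE: creates a table with the given name. If a table with the same name already exists, an exception is thrown; IF NOT EXISTS suppresses it.

- EXTERNAL: creates an external table. A path to the actual data (LOCATION) can be given at creation time.
  - Creating a managed table moves the data into the path the warehouse points to (the default location).
  - Creating an external table only records where the data lives and does not move it.
  - Dropping a managed table deletes both metadata and data; dropping an external table deletes only the metadata.

- COMMENT: adds a comment to the table or a column.

- PARTITIONED BY: creates a partitioned table.

- CLUSTERED BY: creates a bucketed table.

- SORTED BY: rarely used; additionally sorts one or more columns within each bucket.

- ROW FORMAT: DELIMITED [FIELDS TERMINATED BY char] [COLLECTION ITEMS TERMINATED BY char] [MAP KEYS TERMINATED BY char] [LINES TERMINATED BY char] | SERDE serde_name [WITH SERDEPROPERTIES (property_name=property_value, property_name=property_value, ...)]
  - A custom SerDe or a built-in SerDe can be chosen when creating the table. If ROW FORMAT is omitted, or ROW FORMAT DELIMITED is used, the built-in SerDe applies (LazySimpleSerDe by default, which supports only single-byte delimiters).
  - The columns are declared at creation time together with the SerDe; Hive uses the SerDe to determine the data of each column.
  - SerDe is short for Serialize/Deserialize; it handles serialization and deserialization.

- STORED AS: the storage file format. Common formats:
  - SEQUENCEFILE (binary sequence file)
  - TEXTFILE (text)
  - RCFILE (columnar storage format)
  - Use STORED AS TEXTFILE for plain-text data and STORED AS SEQUENCEFILE when the data needs compression.

- LOCATION: the table's storage location on HDFS.

- AS: followed by a query; creates the table from the query result.

- LIKE: copies the structure of an existing table without copying its data.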
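The EXTERNAL, LIKE, and AS clauses described above can be sketched as follows. The table names, columns, and the HDFS path are hypothetical and chosen only for illustration.

```sql
-- Hypothetical external table over raw logs that already sit in HDFS.
CREATE EXTERNAL TABLE IF NOT EXISTS raw_log_demo (
  id  INT,
  msg STRING
)
ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
STORED AS TEXTFILE
LOCATION '/data/raw_log_demo';

-- LIKE copies only the schema; the new table starts empty.
CREATE TABLE IF NOT EXISTS raw_log_demo_copy LIKE raw_log_demo;

-- AS select_statement (CTAS) creates and fills a table from a query.
CREATE TABLE IF NOT EXISTS raw_log_demo_ids AS
SELECT id FROM raw_log_demo;
```

Dropping raw_log_demo afterwards would remove only its metadata; the files under the LOCATION directory would stay in place.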

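9.5.2. Inspecting tables

- List all tables: show tables

- Show table details: desc table_name | desc formatted table_name

9.5.3. Altering tables

- Rename a table: alter table table_name rename to new_name

- Change an existing column: alter table table_name change column old_col new_col col_type

- Add columns: alter table table_name add columns (new_col col_type, ...)

- Replace columns: alter table table_name replace columns (new_col col_type, ...)
  - Note: the new column type must not be narrower than the type of the column it replaces.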
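As a sketch of these statements in sequence, assuming the dept table (deptno int, dname string, loc int) from the query section; the names dept_demo, note, and dept_no are made up for the example, and it is best tried on a scratch copy.

```sql
-- Illustrative only: run against a scratch copy, not a table you need.
ALTER TABLE dept RENAME TO dept_demo;

-- Append a new column at the end of the column list.
ALTER TABLE dept_demo ADD COLUMNS (note STRING);

-- Rename a column and widen its type (INT -> BIGINT is a widening).
ALTER TABLE dept_demo CHANGE COLUMN deptno dept_no BIGINT;

-- REPLACE COLUMNS redefines the whole column list in the metadata.
ALTER TABLE dept_demo REPLACE COLUMNS (dept_no BIGINT, dname STRING, loc INT);

DESC dept_demo;
```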

9.5.4. Dropping tables

- drop table if exists table_name

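10. DML: importing data

10.1. Loading data into a table (load)

- Syntax: load data [local] inpath 'path of the data to load' [overwrite] into table table_name [partition (partcol1 = val1, ...)];

- local:
  - If present, the data is loaded from the local Linux file system (the file is copied).
  - If absent, the data is loaded from HDFS (the file is moved).

- overwrite:
  - If present, the newly loaded data replaces the data already in the table.
  - If absent, the new data is added to the table's directory and existing data is kept.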
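The two switches combine as in the sketch below. The local path is the one used for dept.txt elsewhere in these notes; the HDFS path /tmp/dept.txt is a hypothetical location.

```sql
-- Copy a local file into the table, keeping existing rows.
LOAD DATA LOCAL INPATH '/opt/module/datas/dept.txt' INTO TABLE dept;

-- Move a file that is already in HDFS into the table, replacing existing rows.
-- '/tmp/dept.txt' is a hypothetical HDFS path.
LOAD DATA INPATH '/tmp/dept.txt' OVERWRITE INTO TABLE dept;
```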

10.2. insert

- insert into table table_name values (xx, xx, xx, ...): runs an MR job

- insert into | overwrite table table_name1 select * from table_name2: runs an MR job

10.3. as select

- create table table_name1 as select * from table_name2: runs an MR job

10.4. location

- create table table_name (col_name col_type, ...) row format ... location 'location of the data in HDFS'

10.5. import

- import table table_name from 'HDFS path'

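11. Managed (internal) tables

11.1. Theory

- Tables are created as managed tables by default, also called internal tables, because Hive (more or less) controls the lifecycle of their data. By default Hive stores their data in a subdirectory of the directory defined by hive.metastore.warehouse.dir (for example /user/hive/warehouse).

- Dropping a managed table also deletes its data, so managed tables are not suited to sharing data with other tools.

- Dropping the table deletes the directory it maps to in HDFS together with the data in it.

12. External tables (EXTERNAL)

12.1. Theory

- Because the table is external, Hive does not consider itself the full owner of the data. Dropping the table does not delete the data, but the metadata describing the table is deleted.

- Dropping the table removes only the metadata in MySQL; the directory the table maps to in HDFS and the data in it are kept.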

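13. When to use managed and external tables

- Website logs collected each day flow into HDFS text files on a schedule. Heavy statistical analysis is done on top of an external table (the raw log table); the intermediate and result tables are stored as managed tables, populated via SELECT + INSERT.

| | Creation | Storage location | Dropping the table | Intent |
| --- | --- | --- | --- | --- |
| Managed table | create table table_name ... | Managed by Hive; default location /user/hive/warehouse | Deletes metadata and data | Table owned by Hive, for long-term use |
| External table | create external table table_name ... location storage_path ... | Anywhere; set with the location keyword | Deletes metadata only | Temporary link to external data |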
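An existing table can also be switched between the two kinds through its table properties. A minimal sketch, assuming the dept table from the query section; the property key and its values are written in upper case here because, as far as I know, Hive treats them as case-sensitive.

```sql
-- Check the current kind: look for "Table Type" in the output.
DESC FORMATTED dept;

-- Switch a managed table to external.
ALTER TABLE dept SET TBLPROPERTIES ('EXTERNAL'='TRUE');

-- Switch it back to managed.
ALTER TABLE dept SET TBLPROPERTIES ('EXTERNAL'='FALSE');
```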

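14. DML: exporting data

14.1. insert

- insert overwrite [local] directory 'export path' row format ... select * from table_name

14.2. Exporting with Hadoop commands

- hadoop fs -get

- dfs -get (inside the Hive shell)

14.3. Exporting with the hive command

- hive -e "sql" > file

- hive -f script_file > file

14.4. export

- export table table_name to 'HDFS path'

14.5. truncate: clear table data (managed tables only)

- truncate table table_name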
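A short sketch of the export paths, assuming the dept table from the query section. All directories and the name dept_imported are hypothetical; the export target should be an empty or not-yet-existing HDFS directory.

```sql
-- Export data plus metadata to an HDFS directory (hypothetical path).
EXPORT TABLE dept TO '/tmp/export/dept';

-- Import from that directory under a new table name.
IMPORT TABLE dept_imported FROM '/tmp/export/dept';

-- Write query results to a local directory as tab-separated text.
INSERT OVERWRITE LOCAL DIRECTORY '/tmp/dept_out'
ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
SELECT * FROM dept;
```

export and import work as a pair: import expects the directory layout that export wrote, which makes them a reasonable fit for moving a table between clusters.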

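15. Queries

15.1. Query syntax

SELECT [ALL | DISTINCT] select_expr, select_expr, ... -- columns to return
FROM table_reference -- table to read from
[WHERE where_condition] -- row filter
[GROUP BY col_list] -- grouping
[ORDER BY col_list] -- global sort
[CLUSTER BY col_list -- partition and sort
| [DISTRIBUTE BY col_list] [SORT BY col_list] -- partition, then sort within each partition
]
[LIMIT number] -- limit the number of rows returned

15.2. Basic queries

15.2.1. Whole-table and specific-column queries

15.2.1.1. How to approach writing SQL:

- Where to read from? from

- What to return? select

- How to query? Whether to join and with which join type, filter conditions, grouping, limit, ...

15.2.1.2. Data preparation

15.2.1.2.1. Raw data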

dept:

10 ACCOUNTING 1700

20 RESEARCH 1800

30 SALES 1900

40 OPERATIONS 1700

emp:

7369 SMITH CLERK 7902 1980-12-17 800.00 20

7499 ALLEN SALESMAN 7698 1981-2-20 1600.00 300.00 30

7521 WARD SALESMAN 7698 1981-2-22 1250.00 500.00 30

7566 JONES MANAGER 7839 1981-4-2 2975.00 20

7654 MARTIN SALESMAN 7698 1981-9-28 1250.00 1400.00 30

7698 BLAKE MANAGER 7839 1981-5-1 2850.00 30

7782 CLARK MANAGER 7839 1981-6-9 2450.00 10

7788 SCOTT ANALYST 7566 1987-4-19 3000.00 20

7839 KING PRESIDENT 1981-11-17 5000.00 10

7844 TURNER SALESMAN 7698 1981-9-8 1500.00 0.00 30

7876 ADAMS CLERK 7788 1987-5-23 1100.00 20

7900 JAMES CLERK 7698 1981-12-3 950.00 30

7902 FORD ANALYST 7566 1981-12-3 3000.00 20

7934 MILLER CLERK 7782 1982-1-23 1300.00 10

15.2.1.2.2. Create the department table

create table if not exists dept(
deptno int,
dname string,
loc int
)
row format delimited fields terminated by '\t';

15.2.1.2.3. Create the employee table

create table if not exists emp(
empno int,
ename string,
job string,
mgr int,
hiredate string,
sal double,
comm double,
deptno int)
row format delimited fields terminated by '\t';

15.2.1.2.4. Load the data

load data local inpath '/opt/module/datas/dept.txt' into table dept;
load data local inpath '/opt/module/datas/emp.txt' into table emp;

15.2.1.3. Whole-table query

select * from emp;
select empno,ename,job,mgr,hiredate,sal,comm,deptno from emp;

15.2.1.4. Query specific columns

select empno,ename from emp;

15.2.2. Column aliases

- Rename a column

- Makes calculations easier

- The alias directly follows the column name; the keyword AS may be placed between the column name and the alias

- Example
  - Query names and departments

select ename AS name,deptno dn from emp;

15.2.3. Arithmetic operators

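| Operator | Description |
| --- | --- |
| A+B | A plus B |
| A-B | A minus B |
| A*B | A times B |
| A/B | A divided by B |
| A%B | Remainder of A divided by B |
| A&B | Bitwise AND of A and B |
| A\|B | Bitwise OR of A and B |
| A^B | Bitwise XOR of A and B |
| ~A | Bitwise NOT of A |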

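- Example:
  - Show every employee's salary plus 1.

select sal + 1 from emp;

15.2.4. Common functions

- Total row count (count)

select count(*) cnt from emp;

- Maximum salary (max)

select max(sal) max_sal from emp;

- Minimum salary (min)

select min(sal) min_sal from emp;

- Sum of salaries (sum)

select sum(sal) sum_sal from emp;

- Average salary (avg)

select avg(sal) avg_sal from emp;

15.2.5. Limit

- A typical query returns many rows; the LIMIT clause restricts how many are returned.

select * from emp limit 5; -- first five rows
select * from emp limit 2,3; -- skip the first two rows, then return three rows

15.2.6. where

- The WHERE clause filters out rows that do not satisfy the condition.

- The WHERE clause directly follows the FROM clause.

- Example:
  - Find all employees whose salary is greater than 1000

select * from emp where sal>1000;

- Note: column aliases cannot be used in the WHERE clause.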
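The alias restriction can be worked around in two common ways, sketched below on the emp table. The alias annual_sal and the threshold 30000 are made up for the example.

```sql
-- Fails: the alias is not visible when WHERE is evaluated.
-- SELECT ename, sal * 12 AS annual_sal FROM emp WHERE annual_sal > 30000;

-- Option 1: repeat the expression in WHERE.
SELECT ename, sal * 12 AS annual_sal
FROM emp
WHERE sal * 12 > 30000;

-- Option 2: define the alias in a subquery and filter outside it.
SELECT t.ename, t.annual_sal
FROM (SELECT ename, sal * 12 AS annual_sal FROM emp) t
WHERE t.annual_sal > 30000;
```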

15.2.7. Comparison operators (Between/In/Is Null)

- The table below describes the predicate operators. They can also be used in JOIN ... ON and HAVING clauses.

| Operator | Supported types | Description |
| --- | --- | --- |
| A=B | Primitive types | TRUE if A equals B, otherwise FALSE |
| A<=>B | Primitive types | TRUE if both A and B are NULL, FALSE if only one of them is NULL; otherwise the same result as the = operator |
| A<>B, A!=B | Primitive types | NULL if A or B is NULL; TRUE if A is not equal to B, otherwise FALSE |
| A<B | Primitive types | NULL if A or B is NULL; TRUE if A is less than B, otherwise FALSE |
| A<=B | Primitive types | NULL if A or B is NULL; TRUE if A is less than or equal to B, otherwise FALSE |
| A>B | Primitive types | NULL if A or B is NULL; TRUE if A is greater than B, otherwise FALSE |
| A>=B | Primitive types | NULL if A or B is NULL; TRUE if A is greater than or equal to B, otherwise FALSE |
| A [NOT] BETWEEN B AND C | Primitive types | NULL if any of A, B, or C is NULL. TRUE if A is greater than or equal to B and less than or equal to C, otherwise FALSE. NOT inverts the result. |
| A IS NULL | All types | TRUE if A is NULL, otherwise FALSE |
| A IS NOT NULL | All types | TRUE if A is not NULL, otherwise FALSE |
| IN(value1, value2) | All types | TRUE if the value appears in the list |
| A [NOT] LIKE B | STRING | B is a simple SQL pattern (wildcard pattern). TRUE if A matches it, otherwise FALSE. 'x%' means A must start with the letter 'x', '%x' means A must end with 'x', and '%x%' means A contains 'x' at the start, the end, or anywhere in between. NOT inverts the result. |
| A RLIKE B, A REGEXP B | STRING | B is a Java regular expression. TRUE if A matches it, otherwise FALSE. Matching uses the JDK regular expression implementation and follows its rules; the pattern only needs to match some substring of A, not the whole string. |

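- Examples:
  - Find all employees whose salary equals 5000

select * from emp where sal = 5000;

  - Find employees whose salary is between 500 and 1000

select * from emp where sal between 500 and 1000;

  - Find all employees whose comm is NULL

select * from emp where comm is null;

  - Find employees whose salary is 1500 or 5000

select * from emp where sal In (1500,5000);

15.2.8. Like and RLike

- Use LIKE to select similar values.

- The pattern can contain characters or digits:
  - % stands for zero or more characters (any number of characters)
  - _ stands for exactly one character

- RLIKE
  - RLIKE is a Hive extension of this feature; it lets the match condition be written as a Java regular expression, which is more powerful.

- Examples:
  - Find employees whose name starts with A

select * from emp where ename like 'A%';

  - Find employees whose name has A as the second letter

select * from emp where ename like '_A%';

  - Find employees whose name contains A

select * from emp where ename rlike '[A]';

15.2.9. Logical operators (And/Or/Not)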

| Operator | Meaning |
| --- | --- |
| AND | Logical and |
| OR | Logical or |
| NOT | Logical not |

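- Examples:
  - Salary greater than 1000 and department 30

select * from emp where sal > 1000 and deptno = 30;

  - Salary greater than 1000 or department 30

select * from emp where sal > 1000 or deptno =30;

  - Employees outside departments 20 and 30

select * from emp where deptno not in(30,20);

15.2.10. Grouping

15.2.10.1. Group By

- GROUP BY is usually used together with aggregate functions: the result is grouped by one or more columns and the aggregate is then applied to each group.

- Note: after grouping, the select list may contain only the grouping columns (the group key) and aggregate functions.

- Examples:
  - Compute the average salary of each department in emp

select t.deptno,avg(t.sal) avg_sal from emp t group by t.deptno;

  - Compute the highest salary for each job in each department of emp

select t.deptno,t.job,max(t.sal) max_sal from emp t
group by
t.deptno,t.job;

15.2.10.2. Having

- How having differs from where
  - Aggregate functions cannot be used after where, but they can be used after having.
  - having is only used in GROUP BY statements.

- Example:
  - Find the departments whose average salary is greater than 2000
  - Step 1: the average salary of each department

select deptno,avg(sal) from emp group by deptno;

  - Step 2: keep the departments whose average salary is greater than 2000

select deptno,avg(sal) avg_sal from emp group by deptno
having
avg_sal > 2000;

15.2.11. Join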

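15.2.11.1. Equi-join

Hive supports the usual SQL JOIN statements. Older Hive versions support only equi-joins; non-equi join conditions in the ON clause are accepted from Hive 2.2.0 onward.

- Examples:
  - Join the employee and department tables on equal department numbers and return the employee number, employee name, and department name

select e.empno,e.ename,d.deptno,d.dname from emp e
join dept d on e.deptno = d.deptno;

  - Inner join

select e.empno,e.ename,d.dname from emp e
inner join
dept d
on
e.deptno =d.deptno;

  - Left outer join: emp is the driving table, dept the matched table

select e.empno,e.ename,d.dname from emp e
left outer join
dept d
on
e.deptno =d.deptno;

15.2.11.2. Table aliases

15.2.11.2.1. Benefits

- Aliases simplify queries.

- Prefixing columns with the table name (or alias) can improve execution efficiency.

- Example:
  - Join the employee and department tables

select e.empno,e.ename,d.deptno from emp e
join
dept d on e.deptno = d.deptno;

15.2.11.3. Inner join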

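- Syntax: A inner join B on join_condition;

- Result set: the intersection

- The small table drives the large table

- Inner join: a row is kept only if both tables contain data matching the join condition.

select e.empno,e.ename,d.deptno from emp e
join
dept d on e.deptno =d.deptno;

15.2.11.4. Outer joins

- Left outer join syntax: A left outer join B on join_condition;

- Right outer join syntax: A right outer join B on join_condition;

- Result set:
  - First identify the driving table and the matched table: in a left outer join the left table drives and the right table is matched; in a right outer join it is the reverse.
  - The result is every row of the driving table plus the rows of the matched table that match it.

15.2.11.5. Left outer join

- Left outer join: all records from the table on the left of the JOIN operator that satisfy the WHERE clause are returned.

select e.empno,e.ename,d.deptno from emp e
left join
dept d on e.deptno = d.deptno;

15.2.11.6. Right outer join

- Right outer join: all records from the table on the right of the JOIN operator that satisfy the WHERE clause are returned.

select e.empno,e.ename,d.deptno from emp e
right join
dept d on e.deptno = d.deptno;

15.2.11.7. Full outer join

- Full outer join: all records from both tables that satisfy the WHERE clause are returned. Where one table has no matching value for a column, NULL is used instead.

select e.empno,e.ename,d.deptno from emp e
full join
dept d on e.deptno = d.deptno;

15.2.11.8. Joining multiple tables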

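- Note: joining n tables requires at least n-1 join conditions. For example, joining three tables requires at least two join conditions.

- Data preparation

1700 Beijing
1800 London
1900 Tokyo

15.2.11.8.1. Create the location table

create table if not exists location(
loc int,
loc_name string
)
row format delimited fields terminated by '\t';

15.2.11.8.2. Load the data

load data local inpath '/opt/module/datas/location.txt'
into table location;

15.2.11.8.3. Multi-table join query

- Return each employee's name, department name, and department location name

SELECT e.ename, d.dname, l.loc_name
FROM emp e
JOIN dept d
ON d.deptno = e.deptno
JOIN location l
ON d.loc = l.loc;

15.2.11.9. Cartesian product

- A Cartesian product is produced when:
  - the join condition is omitted;
  - the join condition is invalid;
  - every row of every table is joined to every other row.

- Example:

select empno,dname from emp ,dept;

15.2.12. Sorting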

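15.2.12.1. Order By and Sort By

- Order By: global sort; only one reducer (one partition) is used.

- Sort By: sorts within each partition when there are several; rarely used alone, usually combined with Distribute By.

- Distribute By: partitions the rows.

- Cluster By: partitions and sorts.

- If Distribute By and Sort By use the same column and the sort is ascending, they can be replaced with Cluster By.

- [distribute by col sort by col asc is equivalent to cluster by col]

15.2.12.2. Global sort (Order By)

- Order By: global sort with a single reducer

- Sorting with the ORDER BY clause
  - ASC (ascend): ascending (default)
  - DESC (descend): descending

- The ORDER BY clause goes at the end of the SELECT statement.

- Examples:
  - Employees sorted by salary, ascending

select * from emp order by sal;

  - Employees sorted by salary, descending

select * from emp order by sal desc;

15.2.12.3. Sorting by an alias

- Sort by twice the employee's salary

select ename,sal * 2 twosal from emp order by twosal;

15.2.12.4. Sorting by multiple columns

- Sort by department and salary, ascending

select ename,deptno,sal from emp order by deptno,sal;

15.2.12.5. Sorting within each reducer (Sort By)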

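- Sort By: for large data sets Order By is very inefficient, and in many cases a global sort is not needed; Sort By can be used instead.

- Sort By produces one sorted file per reducer. Each reducer sorts its own data, so the overall result set is not globally sorted.

- Set the number of reducers

set mapreduce.job.reduces = 3;

- Check the number of reducers

set mapreduce.job.reduces;

- View employees sorted by department number, descending

select * from emp sort by deptno desc;

- Write the query result to files (sorted by department number, descending)

insert overwrite local directory
'/opt/module/data/sortby-result'
select * from emp sort by deptno desc;

15.2.12.6. Partitioning (Distribute By)

- Distribute By: sometimes we need to control which reducer a particular row goes to, usually for a later aggregation. The DISTRIBUTE BY clause does this. It is similar to a custom partitioner in MR and is used together with Sort By.

- When testing Distribute By, assign multiple reducers, otherwise its effect cannot be seen.

- Example:
  - Partition by department number, then sort by employee number in descending order

set mapreduce.job.reduces=3;
insert overwrite local directory
'/opt/module/hive/datas/distribute-result' select * from emp distribute
by deptno sort by empno desc;

- Notes:
  - Distribute By takes the hash code of the partition column modulo the number of reducers; rows with the same remainder go to the same partition.
  - Hive requires the DISTRIBUTE BY clause to be written before the SORT BY clause.

15.2.12.7. Cluster By

- When Distribute By and Sort By use the same column, Cluster By can be used.

- Cluster By combines the functions of Distribute By and Sort By, but the sort is always ascending; ASC or DESC cannot be specified.

- The following two statements are equivalent

select * from emp cluster by deptno;
select * from emp distribute by deptno sort by deptno;

- Note: partitioning by department number does not put each value in its own partition; departments 20 and 30 may end up in the same partition.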

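15.2.13. Partitioned and bucketed tables

15.2.13.1. Partitioned tables

- A partition corresponds to a separate directory in HDFS that holds all the data files of that partition. Partitioning in Hive means splitting into directories: a large data set is divided into smaller ones according to business needs. A query selects the partitions it needs through expressions in the WHERE clause, which makes it much more efficient.

15.2.13.1.1. Basic operations

- Motivation (logs need to be managed by date; simulated here with department data)

dept_20200401.log
dept_20200402.log
dept_20200403.log

- Create a partitioned table

create table dept_partition(
deptno int, dname string, loc string
)
partitioned by (day string)
row format delimited fields terminated by '\t';

- Note: the partition column must not be a column that already exists in the table; it can be regarded as a pseudo-column of the table.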

- Load data into the partitioned table
  - Data preparation
  - dept_20200401.log

10 ACCOUNTING 1700
20 RESEARCH 1800

  - dept_20200402.log

30 SALES 1900
40 OPERATIONS 1700

  - dept_20200403.log

50 TEST 2000
60 DEV 1900

  - Load the data

load data local inpath
'/opt/module/hive/datas/dept_20200401.log' into table dept_partition
partition(day='20200401');

load data local inpath
'/opt/module/hive/datas/dept_20200402.log' into table dept_partition
partition(day='20200402');

load data local inpath
'/opt/module/hive/datas/dept_20200403.log' into table dept_partition
partition(day='20200403');

  - Note: a partition must be specified when loading data into a partitioned table.

- Query data in the partitioned table
  - Single partition

select * from dept_partition where day='20200401';

  - Multiple partitions

select * from dept_partition where day='20200401'
union
select * from dept_partition where day='20200402'
union
select * from dept_partition where day='20200403';

select * from dept_partition where day='20200401'
or day='20200402' or day='20200403';

- Add partitions
  - Add a single partition

alter table dept_partition add partition(day='20200404');

  - Add several partitions at once

alter table dept_partition add partition(day='20200405')
partition(day='20200406');

- Drop partitions
  - Drop a single partition

alter table dept_partition drop partition
(day='20200406');

  - Drop several partitions at once

alter table dept_partition drop partition
(day='20200404'), partition(day='20200405');

- List the partitions of the table

show partitions dept_partition;

- View the structure of the partitioned table

desc formatted dept_partition;

15.2.13.1.2. Two-level partitions

- Question: if one day's log data is also very large, how can it be split further?

- Create a two-level partitioned table

create table dept_partition2(
deptno int, dname string, loc string
)
partitioned by (day string, hour string)
row format delimited fields terminated by '\t';

- Load data the normal way
  - Load data into the two-level partitioned table

load data local inpath
'/opt/module/hive/datas/dept_20200401.log' into table
dept_partition2 partition(day='20200401', hour='12');

  - Query the partition

select * from dept_partition2 where day='20200401' and
hour='12';

- Three ways to associate the table with data uploaded directly into a partition directory

  - Method 1: upload the data, then repair
    - Upload the data

hive (default)> dfs -mkdir -p
/user/hive/warehouse/mydb.db/dept_partition2/day=20200401/hour=13;
hive (default)> dfs -put /opt/module/datas/dept_20200401.log
/user/hive/warehouse/mydb.db/dept_partition2/day=20200401/hour=13;

    - Query the data (the newly uploaded data is not found)

select * from dept_partition2 where day='20200401' and
hour='13';

    - Run the repair command

msck repair table dept_partition2;

    - Query the data again

select * from dept_partition2 where day='20200401' and
hour='13';

  - Method 2: upload the data, then add the partition
    - Upload the data

hive (default)> dfs -mkdir -p
/user/hive/warehouse/mydb.db/dept_partition2/day=20200401/hour=14;
hive (default)> dfs -put /opt/module/hive/datas/dept_20200401.log
/user/hive/warehouse/mydb.db/dept_partition2/day=20200401/hour=14;

    - Add the partition

alter table dept_partition2 add
partition(day='20200401',hour='14');

    - Query the data

select * from dept_partition2 where day='20200401' and
hour='14';

  - Method 3: create the directory, then load data into the partition
    - Create the directory

hive (default)> dfs -mkdir -p
/user/hive/warehouse/mydb.db/dept_partition2/day=20200401/hour=15;

    - Load the data

load data local inpath
'/opt/module/hive/datas/dept_20200401.log' into table
dept_partition2 partition(day='20200401',hour='15');

    - Query the data

select * from dept_partition2 where day='20200401' and
hour='15';

15.2.13.1.3. Dynamic partitioning

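- In a relational database, inserting into a partitioned table automatically routes each row to the right partition based on the partition column value. Hive offers a similar mechanism, dynamic partitioning (Dynamic Partition), but it requires some configuration.

15.2.13.1.3.1. Dynamic partition settings

- Enable dynamic partitioning (default true, enabled)

hive.exec.dynamic.partition=true

- Set non-strict mode (the dynamic partition mode defaults to strict, which requires at least one static partition; nonstrict allows every partition column to be dynamic)

hive.exec.dynamic.partition.mode=nonstrict

- Maximum number of dynamic partitions that can be created in total across all nodes running the MR job. Default 1000

hive.exec.max.dynamic.partitions=1000

- Maximum number of dynamic partitions that can be created on each node running the MR job. Set it according to the actual data. For example, if the source holds one year of data, so the day column has 365 distinct values, this parameter must be greater than 365; the default of 100 would cause an error.

hive.exec.max.dynamic.partitions.pernode=100

- Maximum number of HDFS files the whole MR job can create. Default 100000

hive.exec.max.created.files=100000

- Whether to throw an exception when an empty partition is generated. Usually left unset. Default false

hive.error.on.empty.partition=false

15.2.13.1.3.2. Example

- Requirement: insert the rows of the dept table into the matching partitions of the target table dept_partition_dy, partitioned by region (the loc column).
  - Create the target partitioned table

create table dept_partition_dy(id int, name string)
partitioned by (loc int) row format delimited fields terminated by '\t';

  - Set dynamic partitioning and insert

set hive.exec.dynamic.partition.mode = nonstrict;
insert into table dept_partition_dy partition(loc) select
deptno, dname, loc from dept;

  - Check the partitions of the target table

show partitions dept_partition_dy;

- Question: how does the target table match the partition column?
  - By position: columns are matched in order.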
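Matching by position means the names in the select list do not matter; only their order does. The sketch below reuses dept and dept_partition_dy from the example above, and the alias whatever_name is deliberately arbitrary to make that point.

```sql
SET hive.exec.dynamic.partition.mode = nonstrict;

-- The last select column feeds the dynamic partition column loc,
-- regardless of what it is called in the select list.
INSERT INTO TABLE dept_partition_dy PARTITION (loc)
SELECT deptno AS id,
       dname  AS name,
       loc    AS whatever_name
FROM dept;

-- One partition should appear per distinct loc value in dept.
SHOW PARTITIONS dept_partition_dy;
```

A practical consequence is that the dynamic partition columns must come last in the select list, in the same order as in the PARTITION clause.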

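15.2.14. Bucketed tables

- Partitioning is a convenient way to isolate data and optimize queries, but not every data set can be partitioned sensibly. For a table or a partition, Hive can organize the data further into buckets, a finer-grained division of the data.

- Bucketing is another technique for breaking a data set into more manageable parts.

- Partitioning applies to the storage path of the data; bucketing applies to the data files.

15.2.14.1.1. Create a bucketed table first

15.2.14.1.1.1. Data preparation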

1001 ss1

1002 ss2

1003 ss3

1004 ss4

1005 ss5

1006 ss6

1007 ss7

1008 ss8

1009 ss9

1010 ss10

1011 ss11

1012 ss12

1013 ss13

1014 ss14

1015 ss15

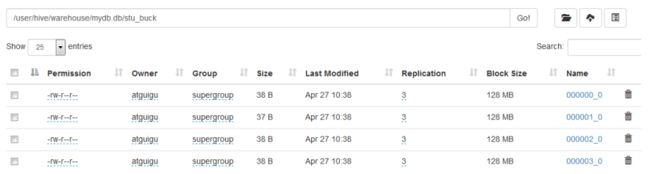

1016 ss1615.2.14.1.1.2. 创建分桶表

create table stu_buck(id int, name string)

clustered by(id)

into 4 buckets

row format delimited fields terminated by '\t';15.2.14.1.1.3. 查看表结构

desc formatted stu_buck;

Num Buckets:15.2.14.1.1.4. 导入数据到分桶表中,load 的方式

load data inpath '/student.txt' into table stu_buck;15.2.14.1.1.5. 查看创建的分桶表中是否分成 4 个桶

15.2.14.1.1.6. 查询分桶的数据

select * from stu_buck;15.2.14.1.1.7. 分桶规则:

- 根据结果可知:Hive 的分桶采用对分桶字段的值进行哈希,然后除以桶的个数求余的方 式决定该条记录存放在哪个桶当中
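The rule can be approximated in a query with the built-in hash and pmod functions. Treat this as a sketch rather than the exact placement logic: for an INT column hash(id) is, to my understanding, the integer itself, and tables that use the newer bucketing version (the default in Hive 3) hash with a different algorithm, so the files on disk may not line up with bucket_guess.

```sql
-- bucket_guess is a hypothetical alias: hash of the bucketing column
-- modulo the number of buckets (4 for stu_buck).
SELECT id,
       name,
       pmod(hash(id), 4) AS bucket_guess
FROM stu_buck
ORDER BY bucket_guess, id;
```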

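15.2.14.1.2. Notes on working with bucketed tables:

- Set the number of reducers to -1 so the job decides how many to use, or set it to a value greater than or equal to the number of buckets.

- Load data into the bucketed table from HDFS to avoid local-file-not-found problems.

- Do not use local mode.

15.2.14.1.3. Load data into the bucketed table with insert

insert into table stu_buck select * from student_insert;

15.2.14.1.4. Combining partitioning and bucketing

create table dept_partition_bucket
(deptno int, dname string,loc int)
partitioned by (day string)
clustered by (deptno) into 4 buckets
row format delimited fields terminated by '\t';

15.2.15. Sampling queries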

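- For very large data sets, users sometimes need a representative sample of the result rather than all of it. Hive supports this by sampling tables.

- Syntax: TABLESAMPLE(BUCKET x OUT OF y)
  - Query data from the table stu_buck.

select * from stu_buck tablesample(bucket 1 out of 4 on id);

- Note: x must be less than or equal to y, otherwise the query fails with

FAILED: SemanticException [Error 10061]: Numerator should not be bigger than denominator in sample clause for table stu_buck

16. Differences between partitioned and bucketed tables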

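16.1. How they differ

- Both partitioning and bucketing refine data management. Partitions are added by hand, and because Hive is schema-on-read, data placed in a partition is not validated against the schema. A bucketed table's data is a set of files produced by hashing the bucketing columns, so the data is much more accurate.

- A partitioned table is split into multiple partitions by one or more columns; a partition can be thought of as a directory.

- Bucketing is a finer-grained division than partitioning. It splits the data by the hash of a column's value. For example, to split by the name column into 3 buckets, the hash of each name value is taken modulo 3 and records are assigned by the result: records with result 0 go to one file, result 1 to another, and result 2 to a third.

16.2. Summary of the differences:

| | Partitioned table | Bucketed table |
| --- | --- | --- |
| Physical form | A directory | A file |
| Creation | Specified with partitioned by; the column is a pseudo-column and its type must be given | Specified with clustered by; the column is a real column and the number of buckets must be given |
| Count | The number of partitions can grow | Fixed once specified |
| Purpose | Avoids full-table scans; queries read only the directories selected by the partition column, which speeds them up | Keeps the data organized by the hash of the bucketing column. Sampling and JOINs on bucketed tables can make MR jobs more efficient |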

17. Functions

17.1. Built-in functions

17.1.1. List the built-in functions

show functions;

17.1.2. Show the usage of a built-in function

desc function upper;

17.1.3. Show the detailed usage of a built-in function

desc function extended upper;

17.2. Common built-in functions

17.2.1. Assigning a value to NULL fields

17.2.1.1. Function description

- NVL assigns a value to NULL data. Its form is NVL(value, default_value): if value is NULL it returns default_value, otherwise it returns value. If both arguments are NULL it returns NULL.

17.2.1.2. Data preparation: use the employee table

17.2.1.3. Query: replace a NULL comm with -1

select comm,nvl(comm, -1) from emp;

17.2.1.4. Query: replace a NULL comm with the manager id

select comm, nvl(comm,mgr) from emp;

17.2.2. CASE WHEN THEN ELSE END

17.2.2.1. Data preparation

| name | dept_id | sex |
| --- | --- | --- |
| 悟空 | A | 男 |
| 大海 | A | 男 |
| 宋宋 | B | 男 |
| 凤姐 | A | 女 |
| 婷姐 | B | 女 |
| 婷婷 | B | 女 |

17.2.2.2. Requirement

- Count the men and women in each department. Expected result:

dept_Id 男 女
A 2 1
B 1 2

17.2.2.3. Create a local emp_sex.txt and enter the data

[WovJ@hadoop102 datas]$ vi emp_sex.txt

悟空 A 男

大海 A 男

宋宋 B 男

凤姐 A 女

婷姐 B 女

婷婷 B 女

17.2.2.4. Create the Hive table and load the data

create table emp_sex(
name string,
dept_id string,
sex string)
row format delimited fields terminated by "\t";

load data local inpath '/opt/module/hive/data/emp_sex.txt' into table
emp_sex;

17.2.2.5. Query the data as required

select
dept_id,
sum(case sex when '男' then 1 else 0 end) male_count,
sum(case sex when '女' then 1 else 0 end) female_count
from emp_sex
group by dept_id;

17.2.3. Rows to columns

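17.2.3.1. Function descriptions

- CONCAT(string A/col, string B/col, ...): returns the concatenation of the input strings; any number of inputs is supported.

- CONCAT_WS(separator, str1, str2, ...): a special form of CONCAT(). The first argument is the separator placed between the remaining arguments. The separator can be a string like the other arguments. If the separator is NULL, the result is NULL. The function skips any NULL values after the separator argument.

- Note: the arguments of CONCAT_WS must be string or array<string>.

- COLLECT_SET(col): accepts only primitive types. It de-duplicates and aggregates the values of a column into an Array-typed field (turning multiple rows into one column value).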

17.2.3.2. Data preparation

| name | constellation | blood_type |
| --- | --- | --- |
| 孙悟空 | 白羊座 | A |
| 大海 | 射手座 | A |
| 宋宋 | 白羊座 | B |
| 猪八戒 | 白羊座 | A |
| 凤姐 | 射手座 | A |
| 苍老师 | 白羊座 | B |

17.2.3.3. Requirement

- Group together people with the same constellation and blood type. Expected result:

射手座,A 大海|凤姐
白羊座,A 孙悟空|猪八戒
白羊座,B 宋宋|苍老师

17.2.3.4. Create a local person_info.txt and enter the data

[WovJ@hadoop102 datas]$ vim person_info.txt

孙悟空 白羊座 A

大海 射手座 A

宋宋 白羊座 B

猪八戒 白羊座 A

凤姐 射手座 A

苍老师 白羊座 B

17.2.3.5. Create the Hive table and load the data

create table person_info(
name string,
constellation string,
blood_type string)
row format delimited fields terminated by "\t";

load data local inpath "/opt/module/hive/data/person_info.txt" into table
person_info;

17.2.3.6. Query the data as required

SELECT
t1.c_b,
CONCAT_WS("|",collect_set(t1.name))
FROM (
SELECT
NAME,
CONCAT_WS(',',constellation,blood_type) c_b
FROM person_info
)t1
GROUP BY t1.c_b;

17.2.4. Columns to rows

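17.2.4.1. Function descriptions

- EXPLODE(col): splits a complex Array or Map in one Hive column into multiple rows.

- LATERAL VIEW
  - Usage: LATERAL VIEW udtf(expression) tableAlias AS columnAlias
  - Explanation: used together with UDTFs such as explode (often combined with split). It expands one column into multiple rows, and the expanded rows can then be aggregated.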

17.2.4.2. Data preparation

| movie | category |
| --- | --- |
| 《疑犯追踪》 | 悬疑,动作,科幻,剧情 |
| 《Lie to me》 | 悬疑,警匪,动作,心理,剧情 |
| 《战狼 2》 | 战争,动作,灾难 |

17.2.4.3. Requirement

- Expand the array of categories for each movie. Expected result:

《疑犯追踪》 悬疑

《疑犯追踪》 动作

《疑犯追踪》 科幻

《疑犯追踪》 剧情

《Lie to me》 悬疑

《Lie to me》 警匪

《Lie to me》 动作

《Lie to me》 心理

《Lie to me》 剧情

《战狼 2》 战争

《战狼 2》 动作

《战狼 2》 灾难

17.2.4.4. Create a local movie_info.txt and enter the data

[WovJ@hadoop102 datas]$ vi movie_info.txt

《疑犯追踪》 悬疑,动作,科幻,剧情

《Lie to me》 悬疑,警匪,动作,心理,剧情
《战狼 2》 战争,动作,灾难

17.2.4.5. Create the Hive table and load the data

create table movie_info(
movie string,
category string)
row format delimited fields terminated by "\t";

load data local inpath "/opt/module/data/movie_info.txt" into table
movie_info;

17.2.4.6. Query the data as required

SELECT
movie,
category_name
FROM
movie_info
lateral VIEW
explode(split(category,",")) movie_info_tmp AS category_name;

17.2.5. Window functions

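17.2.5.1. Function descriptions

- OVER(): specifies the data window the analytic function works on; the window may change from row to row.

- CURRENT ROW: the current row

- n PRECEDING: the n rows before the current row

- n FOLLOWING: the n rows after the current row

- UNBOUNDED: the boundary of the window
  - UNBOUNDED PRECEDING: starting from the first row
  - UNBOUNDED FOLLOWING: ending at the last row

- LAG(col, n, default_val): the value from the n-th row before the current row

- LEAD(col, n, default_val): the value from the n-th row after the current row

- NTILE(n): distributes the rows of an ordered window into n groups numbered from 1 and returns, for each row, the number of the group it belongs to.
  - Note: n must be of type int.

17.2.5.2. Data preparation: name,orderdate,cost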

jack,2023-01-01,10

tony,2023-01-02,15

jack,2023-02-03,23

tony,2023-01-04,29

jack,2023-01-05,46

jack,2023-04-06,42

tony,2023-01-07,50

jack,2023-01-08,55

mart,2023-04-08,62

mart,2023-04-09,68

neil,2023-05-10,12

mart,2023-04-11,75

neil,2023-06-12,80

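mart,2023-04-13,94

17.2.5.3. Requirements

- Find the customers who made a purchase in April 2023 and the total number of such customers

- Show each customer's purchase details together with the monthly purchase total

- In the same scenario, accumulate each customer's cost by date

- Find each customer's previous purchase date

- Find the orders in the earliest 20% of the time range

17.2.5.4. Create a local business.txt and enter the data

[WovJ@hadoop102 datas]$ vi business.txt

17.2.5.5. Create the Hive table and load the data

create table business(
name string,
orderdate string,
cost int
) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

load data local inpath "/opt/module/data/business.txt" into table
business;

17.2.5.6. Query the data as required

- Find the customers who made a purchase in April 2023 and the total number of such customers

select name,count(*) over ()
from business
where substring(orderdate,1,7) = '2023-04'
group by name;

- Show each customer's purchase details together with the monthly purchase total

select name,orderdate,cost,sum(cost) over(partition by month(orderdate))
from business;

- Accumulate each customer's cost by date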

select name,orderdate,cost,

sum(cost) over() as sample1, -- sum over all rows

sum(cost) over(partition by name) as sample2, -- partition by name, sum within each partition

sum(cost) over(partition by name order by orderdate) as sample3, -- partition by name, running total within each partition

sum(cost) over(partition by name order by orderdate rows between UNBOUNDED PRECEDING and current row) as sample4, -- from the start of the partition to the current row; same result as sample3 when orderdate has no ties within a partition

sum(cost) over(partition by name order by orderdate rows between 1 PRECEDING and current row) as sample5, -- current row plus the previous row

sum(cost) over(partition by name order by orderdate rows between 1 PRECEDING AND 1 FOLLOWING) as sample6, -- previous row, current row and next row

sum(cost) over(partition by name order by orderdate rows between current row and UNBOUNDED FOLLOWING) as sample7 -- current row and all following rows

from business;

- The rows clause is written after the order by clause. It restricts the sorted result, using a fixed number of rows to limit how many rows of the partition fall inside the window.
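To make the row frames concrete, the following Java sketch recomputes sample4 to sample7 for jack's five rows from the sample data, sorted by orderdate (costs 10, 46, 55, 23, 42). It is an illustration only; `FrameSumSketch` and `frameSums` are hypothetical names, and `Integer.MAX_VALUE` is used here as a stand-in for UNBOUNDED.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class; it does not use any Hive API.
final class FrameSumSketch {

    // For every row i of one partition (already sorted), sum the values in
    // rows [i - preceding, i + following], clipped to the partition bounds.
    // Integer.MAX_VALUE models UNBOUNDED PRECEDING / UNBOUNDED FOLLOWING.
    static List<Integer> frameSums(int[] costs, int preceding, int following) {
        List<Integer> sums = new ArrayList<>();
        for (int i = 0; i < costs.length; i++) {
            // long arithmetic avoids overflow when an "unbounded" offset is added
            int from = (int) Math.max(0L, (long) i - preceding);
            int to = (int) Math.min(costs.length - 1L, (long) i + following);
            int sum = 0;
            for (int j = from; j <= to; j++) {
                sum += costs[j];
            }
            sums.add(sum);
        }
        return sums;
    }

    public static void main(String[] args) {
        int[] jack = {10, 46, 55, 23, 42}; // jack's costs ordered by orderdate
        int unbounded = Integer.MAX_VALUE;
        System.out.println("sample4 " + frameSums(jack, unbounded, 0));
        System.out.println("sample5 " + frameSums(jack, 1, 0));
        System.out.println("sample6 " + frameSums(jack, 1, 1));
        System.out.println("sample7 " + frameSums(jack, 0, unbounded));
    }
}
```

For jack this gives the running totals 10, 56, 111, 134, 176 for sample4, while sample7 counts down from the partition total 176 to the last cost 42.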

- Show each customer's previous purchase date

select name,orderdate,cost,

lag(orderdate,1,'1900-01-01') over(partition by name order by orderdate )

as time1, lag(orderdate,2) over (partition by name order by orderdate) as

time2

from business;

- Show the orders in the earliest 20% of the time range

select * from (

select name,orderdate,cost, ntile(5) over(order by orderdate) sorted

from business

) t

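where sorted = 1;

17.2.5.7. Window function summary:

- over(): the default window is the entire query result set

- By default a window is opened for every row, and its size is that of the whole result set

- over(partition by ...): when partition by is used, the window for each row is the partition that the row belongs to

- over(order by ...): the default window for each row runs from the first row of the data set to the current row

- over(partition by ... order by ...): combines the two, so the default window runs from the first row of the partition to the current row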

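17.2.6. Rank

17.2.6.1. Function reference

- RANK(): rows with equal values share the same rank and the following ranks are skipped, so the numbering has gaps but the total count is preserved (for example 1, 1, 3, 4)

- DENSE_RANK(): rows with equal values share the same rank and no ranks are skipped, so the highest rank can be lower than the row count (for example 1, 1, 2, 3)

- ROW_NUMBER(): numbers the rows consecutively in sort order, regardless of ties (for example 1, 2, 3, 4)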
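The difference between the three functions is easiest to see on a column with a tie. The sketch below is plain Java, not Hive code, and the class name is hypothetical. It ranks the four 英语 scores from the score data in the next section, sorted descending (84, 84, 78, 68).

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class; the input must already be sorted in ranking order.
final class RankSketch {

    // RANK: ties share a rank, the next distinct value skips ahead.
    static List<Integer> rank(int[] sorted) {
        List<Integer> out = new ArrayList<>();
        for (int i = 0; i < sorted.length; i++) {
            if (i > 0 && sorted[i] == sorted[i - 1]) {
                out.add(out.get(i - 1));
            } else {
                out.add(i + 1);
            }
        }
        return out;
    }

    // DENSE_RANK: ties share a rank, the next distinct value gets the next rank.
    static List<Integer> denseRank(int[] sorted) {
        List<Integer> out = new ArrayList<>();
        int current = 0;
        for (int i = 0; i < sorted.length; i++) {
            if (i == 0 || sorted[i] != sorted[i - 1]) {
                current++;
            }
            out.add(current);
        }
        return out;
    }

    // ROW_NUMBER: consecutive numbers, ties are not treated specially.
    static List<Integer> rowNumber(int[] sorted) {
        List<Integer> out = new ArrayList<>();
        for (int i = 0; i < sorted.length; i++) {
            out.add(i + 1);
        }
        return out;
    }

    public static void main(String[] args) {
        int[] english = {84, 84, 78, 68};
        System.out.println("rank       " + rank(english));
        System.out.println("dense_rank " + denseRank(english));
        System.out.println("row_number " + rowNumber(english));
    }
}
```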

17.2.6.2. Data preparation

| name | subject | score |
| --- | --- | --- |
| 孙悟空 | 语文 | 87 |
| 孙悟空 | 数学 | 95 |
| 孙悟空 | 英语 | 68 |
| 大海 | 语文 | 94 |
| 大海 | 数学 | 56 |
| 大海 | 英语 | 84 |
| 宋宋 | 语文 | 64 |
| 宋宋 | 数学 | 86 |
| 宋宋 | 英语 | 84 |
| 婷婷 | 语文 | 65 |
| 婷婷 | 数学 | 85 |
| 婷婷 | 英语 | 78 |

17.2.6.3. Requirement

- Rank the scores within each subject.

17.2.6.4. Create a local score.txt file and enter the data

[WovJ@hadoop102 datas]$ vi score.txt

17.2.6.5. Create the Hive table and load the data

create table score(

name string,

subject string,

score int)

row format delimited fields terminated by "\t";

load data local inpath '/opt/module/data/score.txt' into table score;

17.2.6.6. Query the data for the requirement

select name,

subject,

score,

rank() over(partition by subject order by score desc) rp,

dense_rank() over(partition by subject order by score desc) drp,

row_number() over(partition by subject order by score desc) rmp

from score;

- Extension: how would you find the top three students in each subject?

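17.2.7. Keyword summary

Table creation:

- partitioned by: specifies the partition columns of a partitioned table

- clustered by: specifies the bucketing columns of a bucketed table

Queries:

- order by: specifies the columns for a global sort

- distribute by: specifies the columns used to partition rows across reducers

- sort by: specifies the sort columns within each partition.

- cluster by: specifies a column used for both partitioning and sorting (the same column for both, ascending order only)

Window functions:

- partition by: specifies the partition columns

- order by: specifies the sort columns

- over(partition by ... order by ...) = over(distribute by ... sort by ...)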

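17.3. User-defined functions

- Hive ships with built-in functions such as max/min, but their number is limited; user-defined functions (UDFs) make it easy to extend them.

- When the built-in functions cannot meet your business needs, consider writing a user-defined function (UDF).

- User-defined functions fall into three categories:

  - UDF (User-Defined Function): one row in, one row out

  - UDAF (User-Defined Aggregation Function): aggregate function, many rows in, one row out, similar to count/max/min

  - UDTF (User-Defined Table-Generating Function): one row in, many rows out, such as explode() used with lateral view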
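The three shapes differ only in how many rows go in and come out. The following plain-Java sketch shows that difference with ordinary methods. It deliberately uses no Hive classes, so it is not how a function is registered in Hive, and all names in it are hypothetical.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical illustration of the three input/output shapes; no Hive API involved.
final class UdfKindsSketch {

    // UDF shape: one value in, one value out.
    static int strLen(String s) {
        return s == null ? 0 : s.length();
    }

    // UDAF shape: many values in, one value out (like max).
    // Throws NoSuchElementException for an empty list.
    static int max(List<Integer> values) {
        return Collections.max(values);
    }

    // UDTF shape: one value in, many rows out (like explode on a split string).
    static List<String> explode(String commaSeparated) {
        return Arrays.asList(commaSeparated.split(","));
    }

    public static void main(String[] args) {
        System.out.println(strLen("hive"));
        System.out.println(max(List.of(3, 9, 4)));
        System.out.println(explode("a,b,c"));
    }
}
```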

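18. File storage formats

- The main storage formats Hive supports are TEXTFILE, SEQUENCEFILE, ORC, and PARQUET.

18.1. Columnar and row-oriented storage

- In the figure, the logical table is on the left; the first layout on the right is row-oriented storage and the second is columnar storage.

18.1.1. Characteristics of row storage

- When a query needs a whole row that matches a condition, columnar storage has to visit each column's storage area to find the corresponding value, whereas row storage only needs to locate one value and the rest of the row sits right next to it. Row storage is therefore faster for this kind of query.

18.1.2. Characteristics of columnar storage

- Because each column's data is stored together, a query that needs only a few columns reads far less data. All values in a column share one data type, so compression algorithms can be tailored to each column.

- TEXTFILE and SEQUENCEFILE are row-oriented formats;

- ORC and PARQUET are columnar formats.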

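19. Enterprise-level tuning

19.1. Fetch task conversion

- Fetch conversion means that Hive can answer certain queries without running MapReduce. For example, for SELECT * FROM employees; Hive can simply read the files in the storage directory of employees and print the result to the console.

- In hive-default.xml.template, hive.fetch.task.conversion defaults to more (older Hive versions default to minimal). With the value more, full-table selects, column selects, and limit queries do not run MapReduce.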

hive.fetch.task.conversion

more

Expects one of [none, minimal, more].

Some select queries can be converted to single FETCH task minimizing

latency.

Currently the query should be single sourced not having any subquery

and should not have any aggregations or distincts (which incurs RS),

lateral views and joins.

0. none : disable hive.fetch.task.conversion

1. minimal : SELECT STAR, FILTER on partition columns, LIMIT only

2. more : SELECT, FILTER, LIMIT only (support TABLESAMPLE and

virtual columns)

19.1.1. Hands-on example:

- Set hive.fetch.task.conversion to none and run the queries; every one of them runs a MapReduce job.

set hive.fetch.task.conversion=none;

select * from emp;

select ename from emp;

select ename from emp limit 3;

- Set hive.fetch.task.conversion to more and run the queries; none of the following runs a MapReduce job.

set hive.fetch.task.conversion=more;

select * from emp;

select ename from emp;

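select ename from emp limit 3;

19.2. Local mode

- Most Hadoop jobs need the full scalability that Hadoop provides in order to process large data sets. Sometimes, though, the input to a Hive query is very small, and the overhead of launching the job can far exceed the actual execution time. In most of these cases Hive can run all tasks on a single machine in local mode, which noticeably shortens execution time for small data sets.

- Setting hive.exec.mode.local.auto to true lets Hive apply this optimization automatically when appropriate.

-- Enable local MR mode

set hive.exec.mode.local.auto=true;

-- Maximum input size for local MR: local mode is used when the input is smaller than this value; default 134217728 (128 MB)

set hive.exec.mode.local.auto.inputbytes.max=50000000;

-- Maximum number of input files for local MR: local mode is used when the number of input files is smaller than this value; default 4

set hive.exec.mode.local.auto.input.files.max=10;

19.2.1. Hands-on example:

- Run the query with local mode turned off (it is off by default)

select count(*) from emp group by deptno;

- Turn local mode on and run the query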

set hive.exec.mode.local.auto=true;

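select count(*) from emp group by deptno;

19.3. Table optimization

19.3.1. Joining a small table with a large table (MapJoin)

- Put the table with relatively scattered keys and a small data volume on the left side of the join. A map join can then load the small dimension table into memory first and complete the join on the map side.

- Testing shows that newer versions of Hive optimize both small-table JOIN large-table and large-table JOIN small-table, so it no longer matters whether the small table is on the left or the right.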

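19.3.2. Joining two large tables

19.3.2.1. Filtering empty keys

- A join sometimes times out because certain keys have too many rows: all rows with the same key are sent to the same reducer, which then runs out of memory. These abnormal keys deserve careful analysis. In many cases their rows are bad data and should be filtered out in the SQL statement, for example rows whose key column is null.

19.3.2.2. Converting empty keys

- Sometimes many rows have a null key but are not bad data and must appear in the join result. In that case, assign a random value to the null key column of table a so that those rows are spread randomly and evenly across different reducers.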

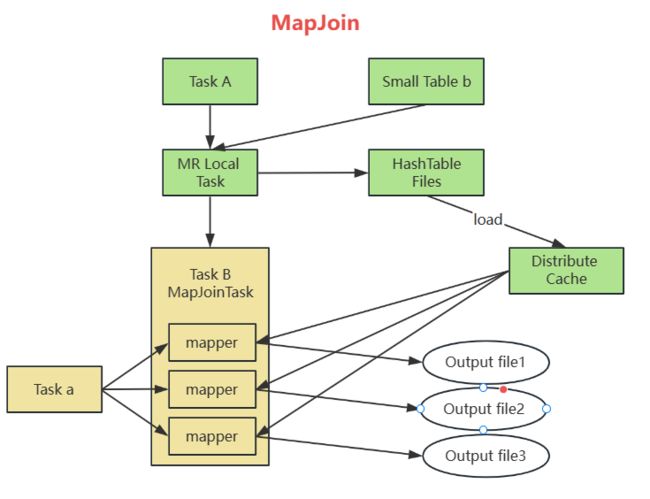
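The effect of converting empty keys can be simulated in a few lines of Java. This is a sketch under stated assumptions, not Hive's partitioner: `NullKeySaltSketch` and its methods are hypothetical, hash partitioning is approximated with `String.hashCode`, and the random placeholder carries a `null_` prefix on the assumption that no real key starts with it.

```java
import java.util.Arrays;
import java.util.Random;

// Hypothetical simulation of how null join keys are spread over reducers.
final class NullKeySaltSketch {

    // Approximates hash partitioning: the same key always goes to the same reducer.
    static int reducerFor(String key, int numReducers) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    // Replaces a null key with a random placeholder that cannot match a real key
    // (assumption: no real key starts with "null_").
    static String saltIfNull(String key, Random rnd) {
        return key != null ? key : "null_" + rnd.nextInt(1_000_000);
    }

    // Counts how many rows each reducer receives, with or without salting.
    static int[] distribute(String[] keys, int numReducers, boolean salt, long seed) {
        Random rnd = new Random(seed);
        int[] load = new int[numReducers];
        for (String key : keys) {
            // Without salting, every null key behaves like one single key.
            String effective = salt ? saltIfNull(key, rnd) : (key == null ? "" : key);
            load[reducerFor(effective, numReducers)]++;
        }
        return load;
    }

    public static void main(String[] args) {
        String[] keys = new String[1000]; // 1000 rows whose key is null
        System.out.println("unsalted " + Arrays.toString(distribute(keys, 4, false, 42L)));
        System.out.println("salted   " + Arrays.toString(distribute(keys, 4, true, 42L)));
    }
}
```

Without salting, all 1000 null-key rows land on one reducer; with salting they are spread over several. Because the placeholder never equals a real key, these rows still find no match in the other table, which is the intended join result.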

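19.3.3. MapJoin

If MapJoin is not specified, or its conditions are not met, the Hive parser converts the join into a Common Join, that is, the join is completed in the reduce phase, which is prone to data skew. With MapJoin, the whole small table is loaded into memory and the join is performed on the map side, so no reducer is involved.

19.3.3.1. Parameters for enabling MapJoin

- Enable automatic MapJoin selection (default true)

set hive.auto.convert.join = true;

- Threshold between small and large tables (by default, tables under 25 MB count as small):

set hive.mapjoin.smalltable.filesize = 25000000;

19.3.3.2. How MapJoin works

- Task A is a local task (run locally on the client). It scans the small table b, converts it into a HashTable data structure, writes it to a local file, and then loads that file into the DistributedCache.

- Task B is an MR job without a reduce phase. It launches map tasks to scan the large table a; in the map phase, each record of a is matched against table b's HashTable in the DistributedCache, and the result is output directly.

- Because MapJoin has no reduce phase, the map tasks write the result files directly: there are as many result files as there are map tasks.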
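The two tasks amount to a classic hash join: build a hash table from the small table, then stream the large table past it. A minimal in-memory sketch follows. The class and method names are hypothetical, the DistributedCache is replaced by an ordinary `HashMap`, the sample rows are made up, and join keys are assumed to be unique in the small table.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of a map-side join; no Hadoop or Hive classes involved.
final class MapJoinSketch {

    // "Task A": scan the small table once and build a hash table (join key -> value).
    // Assumes each join key appears only once in the small table.
    static Map<Integer, String> buildHashTable(int[] smallKeys, String[] smallValues) {
        Map<Integer, String> table = new HashMap<>();
        for (int i = 0; i < smallKeys.length; i++) {
            table.put(smallKeys[i], smallValues[i]);
        }
        return table;
    }

    // "Task B": a map-only pass over the large table. Each row probes the hash table
    // and matching rows are emitted directly, without shuffle or reduce.
    static List<String> probe(int[] bigKeys, String[] bigValues, Map<Integer, String> table) {
        List<String> joined = new ArrayList<>();
        for (int i = 0; i < bigKeys.length; i++) {
            String match = table.get(bigKeys[i]);
            if (match != null) {
                joined.add(bigValues[i] + "," + match);
            }
        }
        return joined;
    }

    public static void main(String[] args) {
        // Made-up sample rows: b maps a department number to a name, a lists employees.
        Map<Integer, String> b = buildHashTable(new int[] {10, 20}, new String[] {"ACCOUNTING", "RESEARCH"});
        List<String> result = probe(new int[] {20, 10, 30}, new String[] {"SMITH", "CLARK", "ALLEN"}, b);
        System.out.println(result);
    }
}
```

The row with key 30 has no partner in the small table and is dropped, as in an inner join.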

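19.3.4. Group By

- By default, all rows with the same key from the map phase are sent to one reducer, so a key with too much data causes skew.

- Not every aggregation has to be completed on the reduce side. Many aggregations can be done partially on the map side first, with the final result produced on the reduce side.

19.3.4.1. Parameters for map-side aggregation

- Whether to aggregate on the map side (default true)

set hive.map.aggr = true;

- Number of rows used for the map-side aggregation check

set hive.groupby.mapaggr.checkinterval = 100000;

- Load-balance when the data is skewed (default false)

set hive.groupby.skewindata = true;

- When this option is set to true, the generated query plan contains two MR jobs. In the first job, the map output is distributed randomly across the reducers; each reducer performs a partial aggregation and outputs its result. Rows with the same Group By key may therefore go to different reducers, which balances the load. The second job distributes the pre-aggregated results to the reducers by Group By key (this guarantees that rows with the same Group By key reach the same reducer) and completes the final aggregation.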
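The two-job plan can be imitated with two small Java methods: a first stage that scatters rows randomly and counts per key on each reducer, and a second stage that merges the partial counts by key. This is only a model of the idea. The names are hypothetical, the aggregation is fixed to count, and a seeded `Random` stands in for the random distribution.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Hypothetical model of the two-stage group by used for skewed data.
final class SkewedGroupBySketch {

    // Stage 1: rows go to a random reducer; each reducer keeps partial counts per key.
    static List<Map<String, Long>> stageOne(List<String> keys, int numReducers, long seed) {
        Random rnd = new Random(seed);
        List<Map<String, Long>> partials = new ArrayList<>();
        for (int r = 0; r < numReducers; r++) {
            partials.add(new HashMap<>());
        }
        for (String key : keys) {
            partials.get(rnd.nextInt(numReducers)).merge(key, 1L, Long::sum);
        }
        return partials;
    }

    // Stage 2: partial counts are brought together by key and summed.
    static Map<String, Long> stageTwo(List<Map<String, Long>> partials) {
        Map<String, Long> result = new HashMap<>();
        for (Map<String, Long> partial : partials) {
            partial.forEach((key, count) -> result.merge(key, count, Long::sum));
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> keys = new ArrayList<>(Collections.nCopies(1000, "hot"));
        keys.add("rare");
        List<Map<String, Long>> partials = stageOne(keys, 4, 7L);
        System.out.println("partial counts per reducer: " + partials);
        System.out.println("final counts: " + stageTwo(partials));
    }
}
```

No single first-stage reducer sees all rows of the hot key, yet the second stage still produces the same totals as a direct group by.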

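19.3.5. Count(Distinct) deduplicated counting

- With small data volumes this does not matter. With large volumes, a COUNT DISTINCT has to be completed by a single reduce task, and the amount of data that one reducer must handle can make the whole job very hard to finish. COUNT DISTINCT is therefore usually replaced by a GROUP BY followed by a COUNT, but watch out for data skew caused by the group by.

- This costs one extra job, but with large data volumes it is well worth it.

19.3.6. Cartesian product

- Avoid Cartesian products where possible. When a join has no on condition, or an invalid one, Hive can only use a single reducer to compute the Cartesian product.

19.3.7. Row and column filtering

- Columns: in SELECT, take only the columns you need; if the table is partitioned, filter on the partition wherever possible; use SELECT * sparingly.

- Rows: with partition pruning in an outer join, if the filter condition on the secondary table is written in the where clause, the full tables are joined first and filtered only afterwards.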

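19.3.8. Partitioning

Partitioning splits a data set into separate directories according to a rule.

- See section 13.2.13

19.3.9. Bucketing

When there is no rule left by which the data can be split further, use bucketing.

- See section 13.2.13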

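19.4. Setting the number of map and reduce tasks sensibly

- Normally a job generates one or more map tasks from its input directory.

  - The main deciding factors are the total number of input files, the size of the input files, and the block size configured on the cluster.

- Is a larger number of map tasks always better?

  - No. If a job has many small files (far smaller than the 128 MB block size), each small file is treated as a block and handled by its own map task. When a map task takes far longer to start and initialize than to do its actual processing, a lot of resources are wasted. The number of map tasks that can run at the same time is also limited.

- Is everything fine as long as each map task handles a block of close to 128 MB?

- Not necessarily. For example, a 127 MB file is normally handled by a single map task, but if the file has only one or two small columns and tens of millions of rows, and the map logic is complex, a single map task will certainly be slow.

- To address the two questions above, two opposite approaches are needed: reducing the number of map tasks and increasing it.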

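19.4.1. Increasing the number of map tasks for complex files

- When the input files are large, the task logic is complex, and the map tasks run very slowly, consider increasing the number of map tasks so that each one processes less data, which improves the efficiency of the job.

  - To add map tasks, use the formula computeSplitSize = Math.max(minSize, Math.min(maxSize, blockSize)), which equals blockSize (128 MB) under the default settings, and lower maxSize. Setting maxSize below blockSize increases the number of map tasks.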
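The formula can be checked with a few lines of Java. The sketch below is not Hadoop's source code: the class name and `estimateSplits` are hypothetical, and the split count is simplified to a plain ceiling division, whereas real input formats apply additional rules. The 550-byte file size is a made-up value for illustration.

```java
// Hypothetical helper for experimenting with the split size formula.
final class SplitSizeSketch {

    // The formula from the text: max(minSize, min(maxSize, blockSize)).
    static long computeSplitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    // Simplified estimate of the number of splits for one file:
    // ceil(fileLength / splitSize). Real input formats apply extra rules.
    static long estimateSplits(long fileLength, long splitSize) {
        return (fileLength + splitSize - 1) / splitSize;
    }

    public static void main(String[] args) {
        long blockSize = 128L * 1024 * 1024;
        // With a very large maxSize the split size equals the block size.
        System.out.println(computeSplitSize(blockSize, 1L, Long.MAX_VALUE));
        // With maxSize = 100 bytes the split size drops to 100 bytes.
        long small = computeSplitSize(blockSize, 1L, 100L);
        System.out.println(small);
        // A hypothetical 550-byte file then needs 6 splits instead of 1.
        System.out.println(estimateSplits(550L, small));
    }
}
```

Raising minSize above blockSize works in the opposite direction and produces fewer, larger splits.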

- Hands-on example:

- Run the query

select count(*) from emp;

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

- Set the maximum split size to 100 bytes

set mapreduce.input.fileinputformat.split.maxsize=100;

select count(*) from emp;

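Hadoop job information for Stage-1: number of mappers: 6; number of reducers: 1

19.4.2. Merging small files

- Merge small files before the map phase to reduce the number of map tasks: CombineHiveInputFormat can merge small files (it is the system default format), while HiveInputFormat cannot.

set hive.input.format=org.apache.hadoop.hive.ql.io.CombineHiveInputFormat;

- Settings for merging small files at the end of a MapReduce job:

  - Merge small files at the end of a map-only job (default true)

SET hive.merge.mapfiles = true;

  - Merge small files at the end of a map-reduce job (default false)

SET hive.merge.mapredfiles = true;

  - Size of the merged files (default 256 MB)

SET hive.merge.size.per.task = 268435456;

  - When the average size of the output files is below this value, launch a separate map-reduce job to merge the files

SET hive.merge.smallfiles.avgsize = 16777216;

19.4.3. Setting the number of reduce tasks sensibly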

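19.4.3.1. Method 1 for adjusting the number of reducers

- Amount of data handled by each reducer (default 256 MB)

hive.exec.reducers.bytes.per.reducer=256000000

- Maximum number of reducers per job (default 1009)

hive.exec.reducers.max=1009

- Formula for the number of reducers

N = min(hive.exec.reducers.max, total input size / hive.exec.reducers.bytes.per.reducer)

19.4.3.2. Method 2 for adjusting the number of reducers

- Change the value defined in Hadoop's mapred-default.xml file

- Set the number of reducers for each job

set mapreduce.job.reduces = 15;

19.4.3.3. More reducers is not always better

- Starting and initializing too many reducers also costs time and resources;

- In addition, each reducer produces one output file. If many small files are generated and they become the input of the next job, the small-file problem appears again;

- Keep two principles in mind when setting the number of reducers: use an appropriate number of reducers for large data volumes, and keep the amount of data handled by each reducer appropriate.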
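The formula from method 1 is simple enough to try out directly. In the sketch below the class and method names are hypothetical, and rounding the division up and using at least one reducer are assumptions added on top of the formula in the text.

```java
// Hypothetical helper for the reducer-count formula from method 1.
final class ReducerCountSketch {

    // N = min(maxReducers, totalInputBytes / bytesPerReducer),
    // with the division rounded up and a lower bound of 1 (both are assumptions).
    static int estimateReducers(long totalInputBytes, long bytesPerReducer, int maxReducers) {
        long needed = (totalInputBytes + bytesPerReducer - 1) / bytesPerReducer;
        return (int) Math.max(1L, Math.min((long) maxReducers, needed));
    }

    public static void main(String[] args) {
        long bytesPerReducer = 256_000_000L; // hive.exec.reducers.bytes.per.reducer
        int maxReducers = 1009;              // hive.exec.reducers.max
        System.out.println(estimateReducers(1_000_000_000L, bytesPerReducer, maxReducers));     // about 1 GB of input
        System.out.println(estimateReducers(1_000_000_000_000L, bytesPerReducer, maxReducers)); // about 1 TB of input
    }
}
```

About 1 GB of input yields 4 reducers under the defaults, while about 1 TB would need 3907 and is therefore capped at 1009.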

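19.5. Parallel execution

- Hive converts a query into one or more stages. A stage can be a MapReduce stage, a sampling stage, a merge stage, a limit stage, or some other stage that Hive needs during execution. By default Hive runs only one stage at a time. A given job may, however, contain many stages that do not fully depend on each other, so some of them can run in parallel and shorten the total execution time of the job. The more stages can run in parallel, the sooner the job may finish.

- Setting hive.exec.parallel to true enables parallel execution. On a shared cluster, keep in mind that cluster utilization rises as the number of parallel stages in a job grows.

-- Enable parallel execution of stages

set hive.exec.parallel=true;

-- Maximum degree of parallelism allowed for a single SQL statement (default 8)

set hive.exec.parallel.thread.number=16;

- Of course, this only helps when system resources are relatively idle; without free resources, the stages cannot run in parallel anyway.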

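19.6. Strict mode

- Hive has settings that block certain risky operations:

19.6.1. Querying a partitioned table without a partition filter

- With hive.strict.checks.no.partition.filter set to true, a query on a partitioned table is rejected unless its where clause contains a filter on a partition column that limits the range. In other words, users are not allowed to scan all partitions. The reason for this restriction is that partitioned tables usually hold very large, fast-growing data sets, and a query without a partition restriction could consume an unacceptable amount of resources.

19.6.2. Using order by without limit

- With hive.strict.checks.orderby.no.limit set to true, a query that uses order by must also use limit. Because order by sends all result rows to a single reducer for sorting, forcing the user to add a LIMIT keeps that reducer from running for a very long time.

19.6.3. Cartesian product

- With hive.strict.checks.cartesian.product set to true, Cartesian product queries are restricted. Users who know relational databases well may expect to write a JOIN without an ON clause and put the condition in the WHERE clause instead, relying on the database optimizer to convert the WHERE condition into an ON condition efficiently. Unfortunately Hive does not perform this optimization, so if the tables are large enough, the query can get out of control.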

19.7. JVM reuse

Omitted.

19.8. Compression

Omitted.

19.9. Execution plan (Explain)

19.9.1. Basic syntax

EXPLAIN [EXTENDED | DEPENDENCY | AUTHORIZATION] query

19.9.2. Hands-on example

- View the execution plans of the following statements

  - A query that does not generate an MR job

explain select * from emp;

Explain

STAGE DEPENDENCIES:

Stage-0 is a root stage

STAGE PLANS:

Stage: Stage-0

Fetch Operator

limit: -1

Processor Tree:

TableScan

alias: emp

Statistics: Num rows: 1 Data size: 7020 Basic stats: COMPLETE

Column stats: NONE

Select Operator

expressions: empno (type: int), ename (type: string), job

(type: string), mgr (type: int), hiredate (type: string), sal (type:

double), comm (type: double), deptno (type: int)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5,

_col6, _col7

Statistics: Num rows: 1 Data size: 7020 Basic stats: COMPLETE

Column stats: NONE

ListSink

  - A query that generates an MR job

explain select deptno, avg(sal) avg_sal from emp group by

deptno;

Explain

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-0 depends on stages: Stage-1

STAGE PLANS:

Stage: Stage-1

Map Reduce

Map Operator Tree:

TableScan

alias: emp

Statistics: Num rows: 1 Data size: 7020 Basic stats: COMPLETE

Column stats: NONE

Select Operator

expressions: sal (type: double), deptno (type: int)

outputColumnNames: sal, deptno

Statistics: Num rows: 1 Data size: 7020 Basic stats: COMPLETE

Column stats: NONE

Group By Operator

aggregations: sum(sal), count(sal)

keys: deptno (type: int)

mode: hash

outputColumnNames: _col0, _col1, _col2

Statistics: Num rows: 1 Data size: 7020 Basic stats:

COMPLETE Column stats: NONE

Reduce Output Operator

key expressions: _col0 (type: int)

sort order: +

Map-reduce partition columns: _col0 (type: int)

Statistics: Num rows: 1 Data size: 7020 Basic stats:

COMPLETE Column stats: NONE

value expressions: _col1 (type: double), _col2 (type:

bigint)

Execution mode: vectorized

Reduce Operator Tree:

Group By Operator

aggregations: sum(VALUE._col0), count(VALUE._col1)

keys: KEY._col0 (type: int)

mode: mergepartial

outputColumnNames: _col0, _col1, _col2

Statistics: Num rows: 1 Data size: 7020 Basic stats: COMPLETE

Column stats: NONE

Select Operator

expressions: _col0 (type: int), (_col1 / _col2) (type: double)

outputColumnNames: _col0, _col1

Statistics: Num rows: 1 Data size: 7020 Basic stats: COMPLETE

Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 1 Data size: 7020 Basic stats: COMPLETE

Column stats: NONE

table:

input format:

org.apache.hadoop.mapred.SequenceFileInputFormat

output format:

org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

Stage: Stage-0

Fetch Operator

limit: -1

Processor Tree:

ListSink

- View the detailed execution plan

explain extended select * from emp;

explain extended select deptno, avg(sal) avg_sal from emp

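group by deptno;

20. ETL

- Inspecting the raw data shows that a video can belong to several categories, separated by an & with a space on either side, and can also have several related videos, separated by "\t". To make fields with multiple sub-elements easier to work with during analysis, we first restructure and clean the data: all categories are separated by "&" with the surrounding spaces removed, and the ids of multiple related videos are also separated by "&".

20.1. ETL: a utility class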

public class ETLUtil {

/**

* Data cleaning method.

* Cleaning rules:

* 1. Check the record length

* 2. Remove the spaces in the video category

* 3. Join the ids of multiple related videos with &

*/

public static String etlData(String srcData){

StringBuilder resultData = new StringBuilder();

// 1. Split the record on \t

String[] datas = srcData.split("\t");

// 2. Discard records with fewer than 9 fields

if(datas.length < 9){

return null;

}

// 3. Remove the spaces in the video category

datas[3] = datas[3].replaceAll(" ", "");

// 4. Join the related video ids with &

for (int i = 0; i < datas.length; i++){

if(i < 9){

// 4.1 The first 9 fields stay tab-separated (no trailing tab when there are no related videos)

if(i == datas.length-1){

resultData.append(datas[i]);

}else{

resultData.append(datas[i]).append("\t");

}

}else{

// 4.2 Related video ids are joined with &

if(i == datas.length-1){

resultData.append(datas[i]);

}else{

resultData.append(datas[i]).append("&");

}

}

}

return resultData.toString();

}

}
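As a cross-check of the cleaning rules, the same transformation can be written more compactly with `String.join`. This is a sketch, not a replacement for the class above: `EtlSketch` and `clean` are hypothetical names, and the sample record in `main` is made up.

```java
import java.util.Arrays;

// Hypothetical compact variant of the cleaning rules, for comparison only.
final class EtlSketch {

    // Returns the cleaned record, or null when the record has fewer than 9 fields.
    static String clean(String line) {
        String[] fields = line.split("\t");
        if (fields.length < 9) {
            return null;
        }
        // Remove the spaces around & in the category field.
        fields[3] = fields[3].replace(" ", "");
        // The first 9 fields stay tab-separated.
        String head = String.join("\t", Arrays.copyOfRange(fields, 0, 9));
        if (fields.length == 9) {
            return head; // no related videos
        }
        // Related video ids are joined with &.
        String related = String.join("&", Arrays.copyOfRange(fields, 9, fields.length));
        return head + "\t" + related;
    }

    public static void main(String[] args) {
        // Made-up record: 9 regular fields followed by 3 related video ids.
        String raw = "v1\tuser\t10\tPeople & Blogs\t100\t5\t4.5\t20\t3\tr1\tr2\tr3";
        System.out.println(clean(raw));
        System.out.println(clean("too\tshort"));
    }
}
```

For the made-up record, the category becomes People&Blogs and the three related ids collapse into the single field r1&r2&r3.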