Document Similarity: Term Similarity with word2vec, and Bag-of-Words Similarity with sklearn and gensim

Example code:

import jieba
import pandas as pd
from gensim.models.word2vec import Word2Vec

# Read the novel into a single-column DataFrame, one line of text per row
raw = pd.read_table('./金庸-射雕英雄传txt精校版.txt', names=['txt'], encoding="GBK")

# Helper columns used to detect chapter headings
def m_head(tmpstr):
    return tmpstr[:1]

def m_mid(tmpstr):
    return tmpstr.find("回 ")

raw['head'] = raw.txt.apply(m_head)
raw['mid'] = raw.txt.apply(m_mid)
raw['len'] = raw.txt.apply(len)

# Assign a chapter number to every row
chapnum = 0
for i in range(len(raw)):
    # A heading starts with "第", contains "回 " and is shorter than 30 characters
    if raw['head'][i] == "第" and raw['mid'][i] > 0 and raw['len'][i] < 30:
        chapnum += 1
    # Everything from the first appendix onwards goes back to chapter 0
    if chapnum >= 40 and raw['txt'][i] == "附录一:成吉思汗家族":
        chapnum = 0
    raw.loc[i, 'chap'] = chapnum

# Drop the temporary helper columns
del raw['head']
del raw['mid']
del raw['len']

rawgrp = raw.groupby('chap')
chapter = rawgrp.agg(sum)  # with string-only columns, sum concatenates the strings
chapter = chapter[chapter.index != 0]  # chapter 0 holds the front matter and appendices
# print(chapter)

# Load the stop-word list (not applied in this example, which keeps every token).
# sep='aaa' is a separator that never occurs, so each line is read as one entry.
stop_list = list(pd.read_csv('./停用词.txt', names=['w'], sep='aaa', engine='python', encoding='utf-8').w)
# print(stop_list)

# Tokenize each chapter into a list of words (list-of-lists format)
chapter['cut'] = chapter.txt.apply(jieba.lcut)
print(chapter.head())

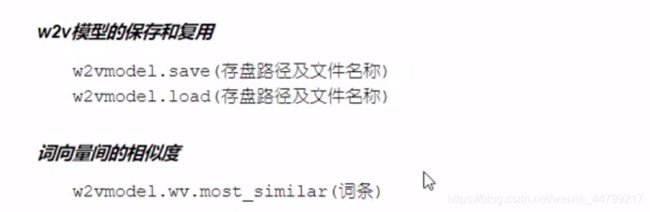
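The chapter-labelling loop above relies on a simple heading rule: a line counts as a chapter heading if it starts with "第", contains "回 " somewhere after the first character, and is shorter than 30 characters. As a minimal sketch, the rule can be isolated and tried on a few lines. The function names `is_chapter_heading` and `label_chapters` and the sample lines are made up for illustration and are not part of the original script; the appendix reset is left out for brevity.

```python
def is_chapter_heading(line: str) -> bool:
    """Heading rule from the script: starts with '第', contains '回 '
    after position 0, and is shorter than 30 characters."""
    return line[:1] == "第" and line.find("回 ") > 0 and len(line) < 30


def label_chapters(lines):
    """Return one chapter number per line; 0 means 'before the first chapter'."""
    chapnum = 0
    labels = []
    for line in lines:
        if is_chapter_heading(line):
            chapnum += 1
        labels.append(chapnum)
    return labels


# Hypothetical sample lines (not read from the novel file)
sample = [
    "前言文字",            # front matter, stays in chapter 0
    "第一回 风雪惊变",      # heading -> chapter 1
    "正文第一段",          # body text of chapter 1
    "第二回 江南七怪",      # heading -> chapter 2
    "第二天一早便出发了",   # starts with '第' but has no '回 ', so not a heading
]
labels = label_chapters(sample)
print(labels)  # [0, 1, 1, 2, 2]
```

Keeping the rule in one function makes it easier to test edge cases, such as body lines that happen to start with "第".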

# Initialize the word2vec model and its vocabulary
n_dim = 300  # vector dimensionality; 300-500 works well for large corpora
# Note: gensim >= 4.0 renames the `size` parameter to `vector_size`
w2v_model = Word2Vec(size=n_dim, min_count=10)
w2v_model.build_vocab(chapter.cut)  # build the vocabulary
print(w2v_model)

# Train on the tokenized chapters (may take a few minutes on a large corpus)
w2v_model.train(chapter.cut, total_examples=w2v_model.corpus_count, epochs=10)

# What the trained model contains: one vector per word
print(w2v_model.wv['郭靖'].shape)
print(w2v_model.wv['郭靖'])
print(w2v_model.wv.most_similar('郭靖'))
print(w2v_model.wv.most_similar('黄蓉', topn=20))
print(w2v_model.wv.most_similar('黄蓉道'))

# Look for analogies (positive words pull the query closer, negative words push it away)
print(w2v_model.wv.most_similar(['郭靖', '小红马'], ['黄药师'], topn=5))
print(w2v_model.wv.most_similar(positive=['郭靖', '黄蓉'], negative=['杨康'], topn=10))

# Similarity / relatedness between two words
print(w2v_model.wv.similarity('郭靖', '黄蓉'))
print(w2v_model.wv.similarity('郭靖', '杨康'))
print(w2v_model.wv.similarity('郭靖', '杨铁心'))

# Find the word that does not fit with the others
print(w2v_model.wv.doesnt_match('小红马 黄药师 鲁有脚'.split()))
print(w2v_model.wv.doesnt_match('杨铁心 黄药师 黄蓉 洪七公'.split()))
print(w2v_model.wv.doesnt_match('郭靖 黄药师 黄蓉 洪七公'.split()))
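The queries in the script all come down to cosine similarity between word vectors. As a rough illustration of what a pairwise similarity measures, and of the arithmetic behind a positive/negative query, here is a stdlib-only sketch on made-up 3-dimensional vectors. The names `toy_vectors`, `cosine`, `unit` and `toy_most_similar` and all the numbers are assumptions for illustration; gensim works on the trained vectors and its implementation differs in details.

```python
import math


def cosine(u, v):
    """Cosine similarity of two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def unit(v):
    """Scale a vector to length 1."""
    n = math.sqrt(sum(a * a for a in v))
    return [a / n for a in v]


# Made-up "word vectors"; a/b point one way, c/d another
toy_vectors = {
    "a": [1.0, 0.0, 0.0],
    "b": [0.9, 0.1, 0.0],
    "c": [0.0, 1.0, 0.0],
    "d": [0.0, 0.9, 0.1],
}


def toy_most_similar(positive, negative=(), topn=2):
    """Add unit vectors of positive words, subtract those of negative words,
    then rank the remaining words by cosine similarity to the combined query."""
    dim = len(next(iter(toy_vectors.values())))
    query = [0.0] * dim
    for w in positive:
        query = [q + x for q, x in zip(query, unit(toy_vectors[w]))]
    for w in negative:
        query = [q - x for q, x in zip(query, unit(toy_vectors[w]))]
    exclude = set(positive) | set(negative)
    scored = [(w, cosine(query, v)) for w, v in toy_vectors.items() if w not in exclude]
    return sorted(scored, key=lambda item: -item[1])[:topn]


sim_ab = cosine(toy_vectors["a"], toy_vectors["b"])
nearest_a = toy_most_similar(["a"])
analogy = toy_most_similar(positive=["b", "c"], negative=["a"], topn=1)
print(sim_ab)
print(nearest_a)
print(analogy)
```

A value near 1 means the two vectors point in almost the same direction, which is how the scores printed by the script should be read.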

Output of the word2vec script:

| chap | txt | cut |
|---|---|---|
| 1.0 | 第一回 风雪惊变 钱塘江浩浩江水,日日夜夜无穷无休的从两浙西路临安府牛家村边绕过,东流... | [第一回, , 风雪, 惊变, , , , , 钱塘江, 浩浩, 江水, ,, 日... |
| 2.0 | 第二回 江南七怪 颜烈跨出房门,过道中一个中年士人拖着鞋皮,踢跶踢跶的直响,一路打着哈... | [第二回, , 江南七怪, , , , , 颜烈, 跨出, 房门, ,, 过道, ... |
| 3.0 | 第三回 黄沙莽莽 寺里僧众见焦木圆寂,尽皆悲哭。有的便为伤者包扎伤处,抬入客舍。 忽... | [第三回, , 黄沙, 莽莽, , , , , 寺里, 僧众, 见, 焦木, 圆寂... |
| 4.0 | 第四回 黑风双煞 完颜洪熙笑道:“好,再打他个痛快。”蒙古兵前哨报来:“王罕亲自前来迎... | [第四回, , 黑风双, 煞, , , , , 完颜洪熙, 笑, 道, :, “,... |
| 5.0 | 第五回 弯弓射雕 一行人下得山来,走不多时,忽听前面猛兽大吼声一阵阵传来。韩宝驹一提缰... | [第五回, , 弯弓, 射雕, , , , , 一行, 人下, 得, 山来, ,,... |

Word2Vec(vocab=5459, size=300, alpha=0.025)

(300,)

[-4.25572187e-01 5.09252429e-01 -3.35490882e-01 2.78963238e-01

-1.02605844e+00 3.77141428e-03 -3.16083908e-01 -3.92541476e-03

-7.55077541e-01 -4.14136142e-01 -7.96370953e-03 -3.11409801e-01

-1.04131603e+00 1.45814225e-01 8.70647311e-01 1.02275096e-01

-3.58931541e-01 -4.92596596e-01 -4.38451134e-02 -2.07327008e-01

-5.05177259e-01 8.17781910e-02 1.39241904e-01 1.02690317e-01

-1.08738653e-02 3.38276535e-01 7.73900509e-01 1.30810887e-01

3.70290913e-02 -4.63242799e-01 -2.74094075e-01 6.19357049e-01

7.47549534e-01 -7.18842843e-04 -5.06826639e-02 1.17821284e-01

-4.82503533e-01 2.54026383e-01 -3.16515833e-01 1.89624745e-02

4.54292834e-01 -2.35798195e-01 -2.51395345e-01 -4.02360201e-01

9.39531863e-01 8.11806545e-02 4.22813594e-02 3.76699902e-02

2.98213772e-02 1.42095342e-01 1.45684227e-01 -4.01940584e-01

4.07561034e-01 -2.66518950e-01 6.67903721e-01 5.01626313e-01

-3.70534241e-01 -1.67340368e-01 1.92730218e-01 -6.58192158e-01

5.08721054e-01 7.57201239e-02 -3.05096835e-01 -3.13329577e-01

-9.28190768e-01 -6.06709778e-01 2.21651703e-01 1.30794179e-02

2.44673714e-01 5.97735286e-01 2.54324138e-01 -1.07708804e-01

-4.30957884e-01 3.88660342e-01 3.25726151e-01 3.37605029e-01

2.26746127e-01 5.75829931e-02 1.68527797e-01 2.50947684e-01

-8.53613853e-01 -3.71761650e-01 9.28249136e-02 4.80269641e-01

1.09297246e-01 -7.71963120e-01 4.78450656e-01 2.03546405e-01

-2.20786463e-02 -7.38570452e-01 -2.94516891e-01 -3.64878774e-01

-8.38900208e-01 -1.47488326e-01 5.07901274e-02 -9.72001553e-02

2.23598689e-01 -6.29560888e-01 1.00483783e-01 -4.56726253e-01

-9.47031826e-02 4.62332100e-01 -8.71771425e-02 5.56389689e-01

-9.30684283e-02 -5.85853076e-03 7.49156177e-01 1.77695587e-01

7.10729539e-01 6.42484009e-01 1.59202948e-01 -3.84662598e-01

-1.01668365e-01 -4.73941356e-01 -4.12283838e-01 5.54680347e-01

-7.88018525e-01 -9.89478603e-02 -3.38515341e-01 6.88804924e-01

-8.50284547e-02 -4.15543646e-01 7.00870872e-01 5.73243201e-01

-9.22002435e-01 -3.08895737e-01 4.53976035e-01 -6.34495974e-01

-2.33024452e-02 5.55906557e-02 8.52066219e-01 -1.21728078e-01

-1.04310322e+00 1.67843744e-01 -3.70012581e-01 5.35915256e-01

-5.99495649e-01 4.46197003e-01 6.29074156e-01 7.83082768e-02

1.03811696e-01 -2.28996769e-01 3.72441858e-02 -1.18466330e+00

7.22329080e-01 8.30452740e-01 -2.96548098e-01 -1.80357605e-01

-4.79318738e-01 4.73362468e-02 6.15784049e-01 -2.58318454e-01

-3.28815848e-01 2.52488762e-01 -3.53550434e-01 2.83297598e-01

-7.09015250e-01 1.78405985e-01 3.67755711e-01 5.31901062e-01

-3.65966074e-02 6.86934292e-01 9.35065001e-03 3.14038575e-01

-6.15793526e-01 -5.35331786e-01 1.68589428e-01 -4.13265936e-02

1.91599071e-01 6.66444898e-01 -8.04961801e-01 2.23901987e-01

-3.58554758e-02 -4.20156986e-01 -1.05377257e-01 -3.64615709e-01

5.47731698e-01 3.24334651e-01 3.80239844e-01 -3.17966312e-01

1.61627874e-01 -1.91430487e-02 -5.29973447e-01 3.45900446e-01

3.70819747e-01 -5.26902033e-03 7.79446065e-01 3.84049714e-01

5.39269149e-01 6.00706160e-01 1.54636788e+00 5.65437861e-02

1.01196229e+00 -1.68419033e-01 3.09479296e-01 -1.18783295e-01

3.38959545e-01 8.73865366e-01 7.63210416e-01 -1.31056860e-01

1.87102288e-01 4.16087627e-01 -1.82035211e-02 5.21824658e-01

5.08903153e-02 -3.79299283e-01 2.37221986e-01 1.13913290e-01

-5.76654375e-01 4.00207430e-01 -6.08394817e-02 -2.02899039e-01

1.04189709e-01 -4.22556341e-01 -9.73940492e-01 -3.64475995e-01

2.00148493e-01 -1.90512016e-01 -4.60629255e-01 -2.77194858e-01

-2.47719780e-01 6.79912746e-01 1.06271304e-01 -2.53444225e-01

-1.68864746e-02 2.92011857e-01 -4.92196888e-01 -5.58556318e-01

3.94930728e-02 -6.35132134e-01 -4.88759756e-01 7.75367677e-01

2.31892675e-01 -8.91844481e-02 -2.39149243e-01 -2.87970752e-01

-4.66604084e-02 -4.16176230e-01 3.29162002e-01 5.15133202e-01

-2.69217163e-01 -5.06954901e-02 -3.64632845e-01 2.68169373e-01

-5.10865092e-01 -2.34507605e-01 2.77217269e-01 6.38278008e-01

-2.84449011e-01 -7.82826304e-01 2.44286552e-01 1.28226519e+00

3.50646049e-01 9.34412926e-02 3.74015957e-01 5.76247238e-02

-3.65534909e-02 -5.58727264e-01 -2.02053517e-01 4.39867437e-01

3.92843515e-01 -7.64699876e-01 -2.00794842e-02 -6.12827241e-02

3.69807869e-01 2.53667206e-01 3.05663139e-01 -1.77007452e-01

-5.83643556e-01 4.10691023e-01 5.85832894e-02 -6.43341184e-01

1.48141354e-01 -2.10599437e-01 3.14153098e-02 -1.77107662e-01

-4.81100738e-01 4.63612467e-01 1.01896954e+00 -3.32962066e-01

3.23046595e-01 -5.77437393e-02 -3.56524438e-01 -5.32343686e-01

-6.75636008e-02 -1.65643185e-01 3.28297466e-01 3.37299228e-01

-6.16224051e-01 9.88392889e-01 6.98523045e-01 1.74140930e-01

9.73622724e-02 2.14205697e-01 1.13871165e-01 2.19000936e-01

2.26686820e-01 1.53621882e-01 -3.02151501e-01 3.32021177e-01]

[('黄蓉', 0.9228439331054688), ('欧阳克', 0.8506240844726562), ('欧阳锋', 0.7657182216644287), ('梅超风', 0.7550132274627686), ('裘千仞', 0.7529821395874023), ('穆念慈', 0.74937903881073), ('程瑶迦', 0.7446237206459045), ('黄药师', 0.7445610165596008), ('完颜康', 0.7358383536338806), ('周伯通', 0.7228418588638306)]

[('郭靖', 0.9228439927101135), ('欧阳克', 0.8548903465270996), ('穆念慈', 0.8016418218612671), ('周伯通', 0.797595739364624), ('完颜康', 0.7936385869979858), ('程瑶迦', 0.7841259241104126), ('陆冠英', 0.7631279826164246), ('洪七公', 0.7594529986381531), ('裘千仞', 0.7572054266929626), ('杨康', 0.7420238256454468), ('李萍', 0.7414523363113403), ('那道人', 0.7407932281494141), ('柯镇恶', 0.737557590007782), ('一灯', 0.7374000549316406), ('欧阳锋', 0.7373932003974915), ('黄药师', 0.7257256507873535), ('华筝', 0.7247843742370605), ('那公子', 0.7243456840515137), ('鲁有脚', 0.721243679523468), ('穆易', 0.721138060092926)]

[('郭靖道', 0.9680619239807129), ('杨康道', 0.9004895091056824), ('朱聪道', 0.8976290225982666), ('傻姑道', 0.8683680295944214), ('头道', 0.8035480976104736), ('马钰道', 0.7973171472549438), ('那人道', 0.7740610241889954), ('郭靖摇', 0.7600215673446655), ('怒道', 0.7592462301254272), ('欧阳克笑', 0.7311669588088989)]

[('奔', 0.8548181056976318), ('晕', 0.8207278251647949), ('退', 0.8112013936042786), ('茶', 0.8089604377746582), ('远远', 0.8086404204368591)]

[('欧阳克', 0.75826096534729), ('欧阳锋', 0.7300347089767456), ('梅超风', 0.6927435398101807), ('洪七公', 0.6642888784408569), ('她', 0.6387393474578857), ('黄药师', 0.6353062391281128), ('周伯通', 0.6277655363082886), ('那道人', 0.6228901743888855), ('当下', 0.6063475012779236), ('主人', 0.5766028165817261)]

0.922844

0.7174542

0.65131724

小红马

杨铁心

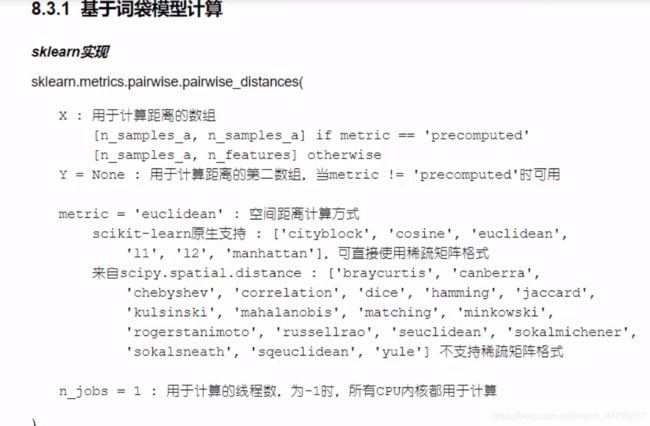

洪七公

Example code:

import jieba
import pandas as pd
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
from sklearn.metrics.pairwise import pairwise_distances

# Read the novel into a single-column DataFrame, one line of text per row
raw = pd.read_table('./金庸-射雕英雄传txt精校版.txt', names=['txt'], encoding="GBK")

# Helper columns used to detect chapter headings
def m_head(tmpstr):
    return tmpstr[:1]

def m_mid(tmpstr):
    return tmpstr.find("回 ")

raw['head'] = raw.txt.apply(m_head)
raw['mid'] = raw.txt.apply(m_mid)
raw['len'] = raw.txt.apply(len)

# Assign a chapter number to every row
chapnum = 0
for i in range(len(raw)):
    if raw['head'][i] == "第" and raw['mid'][i] > 0 and raw['len'][i] < 30:
        chapnum += 1
    if chapnum >= 40 and raw['txt'][i] == "附录一:成吉思汗家族":
        chapnum = 0
    raw.loc[i, 'chap'] = chapnum

# Drop the temporary helper columns
del raw['head']
del raw['mid']
del raw['len']

rawgrp = raw.groupby('chap')
chapter = rawgrp.agg(sum)  # with string-only columns, sum concatenates the strings
chapter = chapter[chapter.index != 0]
# print(chapter)

# Load the stop-word list used by the tokenizing function below
stop_list = list(pd.read_csv('./停用词.txt', names=['w'], sep='aaa', engine='python', encoding='utf-8').w)
# print(stop_list)

# Tokenize with jieba, dropping stop words and single-character tokens
def m_cut(intxt):
    return [w for w in jieba.cut(intxt) if w not in stop_list and len(w) > 1]

# Join tokens with spaces so CountVectorizer can split them; only the first 5 chapters are used
clean_chap = [" ".join(m_cut(w)) for w in chapter.txt.iloc[:5]]

count_vec = CountVectorizer()
resmtx = count_vec.fit_transform(clean_chap)  # sparse term-count matrix
print(resmtx)

# Compute TF-IDF weights from the term-count matrix
transformer = TfidfTransformer()
tfidf = transformer.fit_transform(resmtx)

print(pairwise_distances(resmtx))  # the default metric is Euclidean
print(pairwise_distances(resmtx, metric='cosine'))

# Distances computed on the TF-IDF matrix
print(pairwise_distances(tfidf[:5], metric='cosine'))
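To make the TF-IDF step less of a black box, the following stdlib-only sketch reproduces the weighting that `TfidfTransformer` applies with its default settings (smoothed IDF, `idf = ln((1 + n) / (1 + df)) + 1`, followed by L2 normalization of each row) and then computes cosine distance as `1 - cosine similarity`. The 3x3 count matrix and the helper names `tfidf_rows` and `cosine_distance` are made up for illustration; this is a sketch of the arithmetic, not scikit-learn's implementation.

```python
import math

# Made-up term counts: 3 documents (rows) x 3 terms (columns)
counts = [
    [3, 0, 1],
    [2, 0, 0],
    [0, 4, 1],
]


def tfidf_rows(counts):
    """Smoothed IDF weighting followed by L2 normalization of each row."""
    n_docs = len(counts)
    n_terms = len(counts[0])
    # Document frequency: number of documents containing each term
    df = [sum(1 for row in counts if row[j] > 0) for j in range(n_terms)]
    idf = [math.log((1 + n_docs) / (1 + d)) + 1 for d in df]
    rows = []
    for row in counts:
        weighted = [tf * w for tf, w in zip(row, idf)]
        norm = math.sqrt(sum(x * x for x in weighted))
        rows.append([x / norm for x in weighted])  # assumes no all-zero row
    return rows


def cosine_distance(u, v):
    """1 - cosine similarity; 0 means same direction, 1 means no shared terms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


tfidf_toy = tfidf_rows(counts)
dist_toy = [[cosine_distance(u, v) for v in tfidf_toy] for u in tfidf_toy]
for row in dist_toy:
    print([round(x, 4) for x in row])
```

Documents 0 and 1 share their dominant term, so their distance is small; documents 0 and 2 overlap only on a low-weight term, so their distance is close to 1.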

Output of the scikit-learn script:

(0, 8763) 1

(0, 11379) 3

(0, 5284) 1

(0, 10928) 1

(0, 7554) 1

(0, 7395) 1

(0, 6537) 1

(0, 6509) 1

(0, 6464) 1

(0, 7552) 2

(0, 915) 21

(0, 7847) 7

(0, 8995) 1

(0, 7531) 1

(0, 7397) 1

(0, 168) 1

(0, 6328) 1

(0, 7027) 1

(0, 2956) 1

(0, 7721) 1

(0, 9395) 1

(0, 1873) 1

(0, 3794) 1

(0, 6917) 1

(0, 10876) 1

: :

(4, 5979) 1

(4, 2341) 1

(4, 7755) 1

(4, 2318) 1

(4, 5064) 1

(4, 4125) 1

(4, 7987) 1

(4, 10077) 1

(4, 47) 1

(4, 10098) 1

(4, 10105) 1

(4, 9096) 1

(4, 10171) 1

(4, 3149) 1

(4, 8685) 1

(4, 8367) 1

(4, 10766) 1

(4, 10441) 1

(4, 10151) 1

(4, 9809) 1

(4, 5542) 1

(4, 7865) 1

(4, 7512) 1

(4, 2980) 1

(4, 7686) 1

[[ 0. 295.77356204 317.52637686 320.33576135 316.85170033]

[295.77356204 0. 266.95130642 265.77622166 277.24898557]

[317.52637686 266.95130642 0. 233.9615353 226.09290126]

[320.33576135 265.77622166 233.9615353 0. 202.57344347]

[316.85170033 277.24898557 226.09290126 202.57344347 0. ]]

[[0. 0.63250402 0.77528382 0.78540047 0.82880469]

[0.63250402 0. 0.62572437 0.61666388 0.73192845]

[0.77528382 0.62572437 0. 0.51645443 0.5299046 ]

[0.78540047 0.61666388 0.51645443 0. 0.42108002]

[0.82880469 0.73192845 0.5299046 0.42108002 0. ]]

[[0. 0.69200348 0.84643282 0.85601472 0.89124575]

[0.69200348 0. 0.7438766 0.70590455 0.81767486]

[0.84643282 0.7438766 0. 0.60106637 0.63537168]

[0.85601472 0.70590455 0.60106637 0. 0.54121177]

[0.89124575 0.81767486 0.63537168 0.54121177 0. ]]
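The three matrices above are, in order, Euclidean distances on raw counts, cosine distances on raw counts, and cosine distances on TF-IDF vectors for chapters 1 to 5. Smaller values mean more similar chapters. As a small reading aid, the sketch below takes the last matrix, with the printed values copied by hand, and picks out the closest pair; the helper name `closest_pair` is made up for illustration.

```python
# Cosine distances on TF-IDF vectors, copied from the printed output
dist = [
    [0.0, 0.69200348, 0.84643282, 0.85601472, 0.89124575],
    [0.69200348, 0.0, 0.7438766, 0.70590455, 0.81767486],
    [0.84643282, 0.7438766, 0.0, 0.60106637, 0.63537168],
    [0.85601472, 0.70590455, 0.60106637, 0.0, 0.54121177],
    [0.89124575, 0.81767486, 0.63537168, 0.54121177, 0.0],
]


def closest_pair(matrix):
    """Return (i, j, distance) for the smallest off-diagonal entry."""
    best = None
    for i in range(len(matrix)):
        for j in range(i + 1, len(matrix)):
            if best is None or matrix[i][j] < best[2]:
                best = (i, j, matrix[i][j])
    return best


i, j, d = closest_pair(dist)
# Rows are 0-based, chapters are 1-based
print(f"chapters {i + 1} and {j + 1}: distance {d:.4f}, similarity {1 - d:.4f}")
# chapters 4 and 5: distance 0.5412, similarity 0.4588
```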

Example code:

import jieba
import pandas as pd
from gensim import corpora, models, similarities
from gensim.models.ldamodel import LdaModel

# Read the novel into a single-column DataFrame, one line of text per row
raw = pd.read_table('./金庸-射雕英雄传txt精校版.txt', names=['txt'], encoding="GBK")

# Helper columns used to detect chapter headings
def m_head(tmpstr):
    return tmpstr[:1]

def m_mid(tmpstr):
    return tmpstr.find("回 ")

raw['head'] = raw.txt.apply(m_head)
raw['mid'] = raw.txt.apply(m_mid)
raw['len'] = raw.txt.apply(len)

# Assign a chapter number to every row
chapnum = 0
for i in range(len(raw)):
    if raw['head'][i] == "第" and raw['mid'][i] > 0 and raw['len'][i] < 30:
        chapnum += 1
    if chapnum >= 40 and raw['txt'][i] == "附录一:成吉思汗家族":
        chapnum = 0
    raw.loc[i, 'chap'] = chapnum

# Drop the temporary helper columns
del raw['head']
del raw['mid']
del raw['len']

rawgrp = raw.groupby('chap')
chapter = rawgrp.agg(sum)  # with string-only columns, sum concatenates the strings
chapter = chapter[chapter.index != 0]
# print(chapter)

# Load the stop-word list used by the tokenizing function below
stop_list = list(pd.read_csv('./停用词.txt', names=['w'], sep='aaa', engine='python', encoding='utf-8').w)
# print(stop_list)

# Tokenize with jieba, dropping stop words and single-character tokens
def m_cut(intxt):
    return [w for w in jieba.cut(intxt) if w not in stop_list and len(w) > 1]

# Preprocess the documents: tokenize every chapter
chap_list = [m_cut(w) for w in chapter.txt]

# Build the dictionary and the sparse bag-of-words vectors
dictionary = corpora.Dictionary(chap_list)
corpus = [dictionary.doc2bow(text) for text in chap_list]  # still a list of lists

tfidf_model = models.TfidfModel(corpus)  # fit the TF-IDF model
corpus_tfidf = tfidf_model[corpus]  # TF-IDF vectors for the documents

ldamodel = LdaModel(corpus_tfidf, id2word=dictionary, num_topics=10, passes=5)

# Retrieve the chapters most similar to chapter 1.
# The index should hold the same kind of vectors as the queries. Note that this index is
# built from the raw bag-of-words corpus while the query below is an LDA topic vector, so
# the two live in different spaces; indexing ldamodel[corpus_tfidf] would keep them consistent.
simmtx = similarities.MatrixSimilarity(corpus)
print(simmtx)
print(simmtx.index[:2])

# Query using the gensim LDA result
query = chapter.txt[1]
query_bow = dictionary.doc2bow(m_cut(query))
lda_vec = ldamodel[query_bow]  # convert to a vector under the LDA model
sims = simmtx[lda_vec]  # cosine similarity between the query and every indexed vector
sims = sorted(enumerate(sims), key=lambda item: -item[1])
print(sims)

Output of the gensim LDA script:

MatrixSimilarity<40 docs, 43955 features>

[[0.00360987 0.11551594 0.00360987 ... 0. 0. 0. ]

[0. 0.15784042 0. ... 0. 0. 0. ]]

[(0, 0.0058326684), (6, 0.0038030709), (3, 0.0035722235), (30, 0.002921846), (1, 0.0028936525), (7, 0.0028846266), (38, 0.002834554), (23, 0.0027586762), (4, 0.0027273756), (35, 0.0026802267), (8, 0.002584503), (31, 0.0024519921), (2, 0.0023968564), (29, 0.0022414625), (36, 0.0022174662), (24, 0.0020882194), (27, 0.0020224026), (21, 0.0019537606), (25, 0.0019245804), (5, 0.0018208353), (28, 0.001775686), (15, 0.0017177735), (39, 0.0016619969), (16, 0.001606744), (12, 0.0015473039), (33, 0.0015236269), (26, 0.0015073677), (10, 0.0015002472), (22, 0.0013651266), (11, 0.0013272304), (9, 0.001299706), (34, 0.0012887274), (37, 0.0011597403), (17, 0.0010317966), (14, 0.0009737435), (32, 0.00091980596), (18, 0.0008978047), (20, 0.00086604763), (19, 0.00065769325), (13, 0.0006388487)]
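Every similarity score in the list above is below 0.01, which is consistent with the query and the index living in different vector spaces. gensim sparse vectors are lists of `(id, weight)` pairs; an index built from bag-of-words vectors reads the ids of an LDA topic vector (0 to 9 here) as word ids. The stdlib-only sketch below illustrates the effect on made-up toy vectors. The function `sparse_cosine` and all the numbers are assumptions for illustration, not gensim's code.

```python
import math


def sparse_cosine(u, v):
    """Cosine similarity of two sparse vectors given as (id, weight) lists."""
    du, dv = dict(u), dict(v)
    dot = sum(w * dv.get(i, 0.0) for i, w in du.items())
    norm_u = math.sqrt(sum(w * w for w in du.values()))
    norm_v = math.sqrt(sum(w * w for w in dv.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


# Made-up bag-of-words vector: ids are word ids, weights are counts
doc_bow = [(0, 1), (1, 2), (57, 30), (203, 45), (998, 12)]

# Made-up topic vectors: ids are topic ids, weights are probabilities
doc_topics = [(0, 0.7), (1, 0.3)]
query_topics = [(0, 0.6), (1, 0.4)]

# Mismatched spaces: topic ids 0 and 1 collide with word ids 0 and 1
mixed = sparse_cosine(query_topics, doc_bow)

# Consistent spaces: both vectors are topic distributions
consistent = sparse_cosine(query_topics, doc_topics)

print(f"topic query vs bag-of-words document: {mixed:.4f}")
print(f"topic query vs topic document:        {consistent:.4f}")
```

In gensim terms, one way to keep the spaces consistent would be to build the index from the LDA-transformed corpus, for example `similarities.MatrixSimilarity(ldamodel[corpus_tfidf], num_features=10)`, so that the ranking reflects shared topics rather than id collisions.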