Kafka Source Code Analysis: Using Topics and Partitions

Contents

- Topics and partitions

- Topic management commands

- 1. Creating a topic

- 2. Viewing topics

- 3. Altering a topic

- 4. Deleting a topic

- Reading the code behind topic creation with kafka-topics.sh

Topics and partitions

Topic management commands

1. Creating a topic

The following command creates a topic named test with 10 partitions and a replication factor of 3.

bin/kafka-topics.sh --zookeeper localhost:2181/kafka --create --topic test --partitions 10 --replication-factor 3

2. Viewing topics

List all available topics:

bin/kafka-topics.sh --zookeeper localhost:2181/kafka --list

Describe a single topic:

bin/kafka-topics.sh --zookeeper localhost:2181/kafka --describe --topic test

Describe several topics at once, separating the names with commas:

bin/kafka-topics.sh --zookeeper localhost:2181/kafka --describe --topic test,test2

Find all partitions that have under-replicated (out-of-sync) replicas:

bin/kafka-topics.sh --zookeeper localhost:2181/kafka --describe --topic test --under-replicated-partitions

Find the partitions of the topic that have no leader replica:

bin/kafka-topics.sh --zookeeper localhost:2181/kafka --describe --topic test --unavailable-partitions

3. Altering a topic

Increase the number of partitions:

bin/kafka-topics.sh --zookeeper localhost:2181/kafka --alter --topic topic-config --partitions 3

Reducing the number of partitions is not supported.

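4. Deleting a topic

Delete a topic:

bin/kafka-topics.sh --zookeeper localhost:2181/kafka --delete --topic test --if-exists

To delete a topic manually, perform the following steps in order:

- Delete the ZooKeeper node /config/topics/test

- Delete the ZooKeeper node /brokers/topics/test and its child nodes

- Delete all files belonging to topic test on every broker in the cluster. Partition directories are named topic-partition, so with the default log directory this is rm -rf /tmp/kafka-logs/test-* (check that the glob does not match other topics whose names begin with test-)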

Reading the code behind topic creation with kafka-topics.sh

The kafka-topics.sh script does nothing more than invoke the TopicCommand class:

#!/bin/bash

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

exec $(dirname $0)/kafka-run-class.sh kafka.admin.TopicCommand "$@"

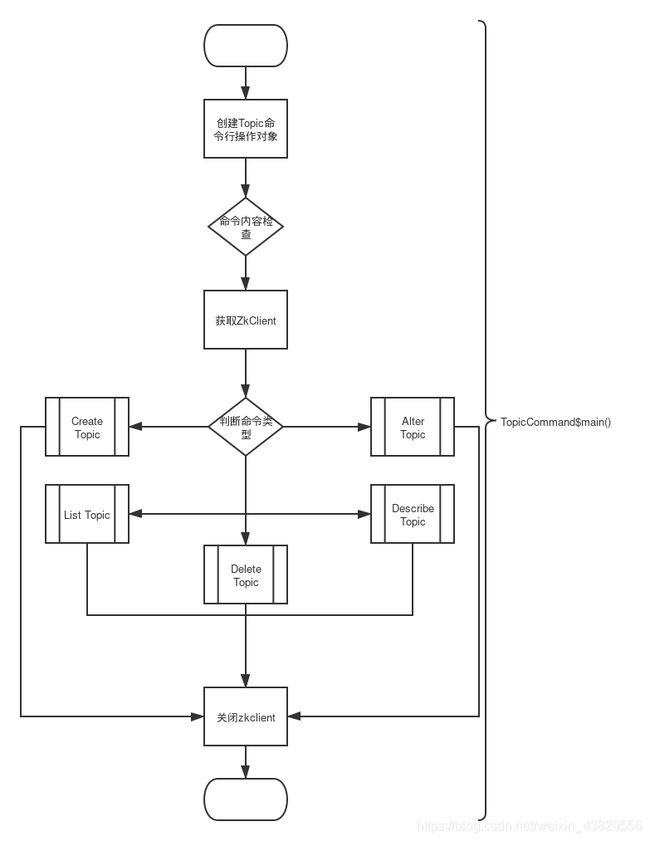

The main method of TopicCommand:

def main(args: Array[String]): Unit = {

val opts = new TopicCommandOptions(args)

if(args.length == 0)

CommandLineUtils.printUsageAndDie(opts.parser, "Create, delete, describe, or change a topic.")

// should have exactly one action

val actions = Seq(opts.createOpt, opts.listOpt, opts.alterOpt, opts.describeOpt, opts.deleteOpt).count(opts.options.has _)

if(actions != 1)

CommandLineUtils.printUsageAndDie(opts.parser, "Command must include exactly one action: --list, --describe, --create, --alter or --delete")

opts.checkArgs()

val time = Time.SYSTEM

val zkClient = KafkaZkClient(opts.options.valueOf(opts.zkConnectOpt), JaasUtils.isZkSecurityEnabled, 30000, 30000,

Int.MaxValue, time)

var exitCode = 0

try {

if(opts.options.has(opts.createOpt))

createTopic(zkClient, opts)

else if(opts.options.has(opts.alterOpt))

alterTopic(zkClient, opts)

else if(opts.options.has(opts.listOpt))

listTopics(zkClient, opts)

else if(opts.options.has(opts.describeOpt))

describeTopic(zkClient, opts)

else if(opts.options.has(opts.deleteOpt))

deleteTopic(zkClient, opts)

} catch {

case e: Throwable =>

println("Error while executing topic command : " + e.getMessage)

error(Utils.stackTrace(e))

exitCode = 1

} finally {

zkClient.close()

Exit.exit(exitCode)

}

}

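main instantiates a TopicCommandOptions object, validates the arguments, constructs a KafkaZkClient, and dispatches on the requested action. Here we follow the createTopic(zkClient, opts) branch.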

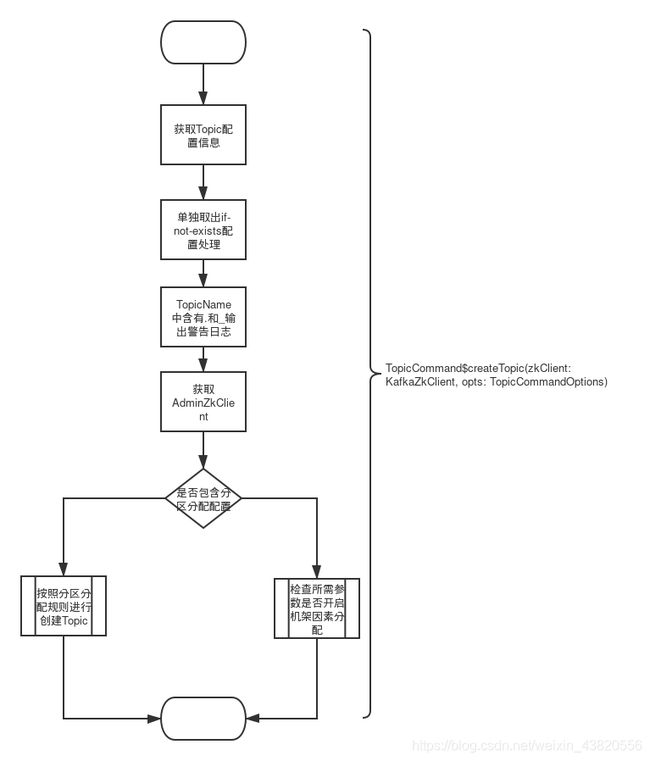

def createTopic(zkClient: KafkaZkClient, opts: TopicCommandOptions) {

val topic = opts.options.valueOf(opts.topicOpt)

val configs = parseTopicConfigsToBeAdded(opts)

val ifNotExists = opts.options.has(opts.ifNotExistsOpt)

if (Topic.hasCollisionChars(topic))

println("WARNING: Due to limitations in metric names, topics with a period ('.') or underscore ('_') could collide. To avoid issues it is best to use either, but not both.")

val adminZkClient = new AdminZkClient(zkClient)

try {

if (opts.options.has(opts.replicaAssignmentOpt)) {

val assignment = parseReplicaAssignment(opts.options.valueOf(opts.replicaAssignmentOpt))

adminZkClient.createOrUpdateTopicPartitionAssignmentPathInZK(topic, assignment, configs, update = false)

} else {

CommandLineUtils.checkRequiredArgs(opts.parser, opts.options, opts.partitionsOpt, opts.replicationFactorOpt)

val partitions = opts.options.valueOf(opts.partitionsOpt).intValue

val replicas = opts.options.valueOf(opts.replicationFactorOpt).intValue

val rackAwareMode = if (opts.options.has(opts.disableRackAware)) RackAwareMode.Disabled

else RackAwareMode.Enforced

adminZkClient.createTopic(topic, partitions, replicas, configs, rackAwareMode)

}

println("Created topic \"%s\".".format(topic))

} catch {

case e: TopicExistsException => if (!ifNotExists) throw e

}

}

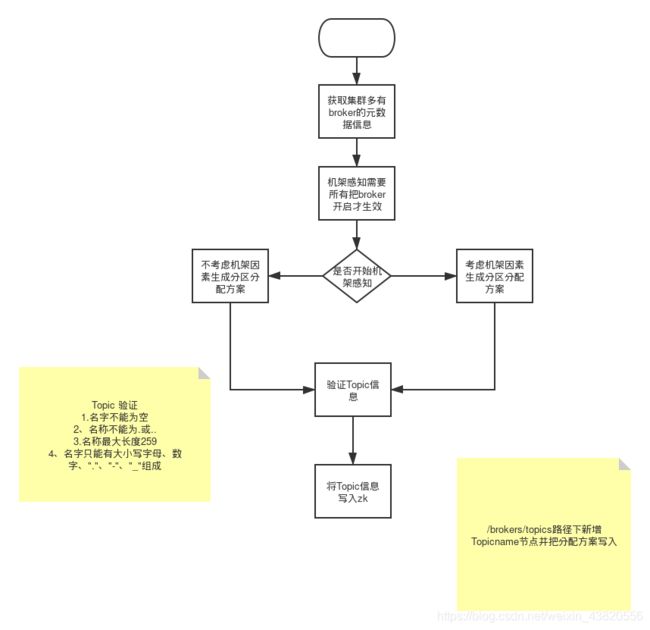

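createTopic reads the topic name, parses the topic-level configs, and records whether --if-not-exists was passed. It then checks the topic name and prints a warning when the name contains an underscore (_) or a period (.), because the two can collide in metric names. Next it instantiates an AdminZkClient and checks whether --replica-assignment supplied an explicit partition-to-broker assignment. If it did, the topic is created with exactly that assignment. Otherwise it verifies that --partitions and --replication-factor are both present, reads their values, and checks --disable-rack-aware to decide whether rack placement is taken into account when the topic is created.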
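To make the --replica-assignment branch more concrete, here is a minimal sketch of how a string such as 0:1,1:2 could be turned into a partition-to-replicas map. It assumes the format is comma-separated partitions with colon-separated broker ids, and that the position in the list is the partition id. ReplicaAssignmentSketch and parse are hypothetical names used only for illustration; this is not the TopicCommand.parseReplicaAssignment source.

```scala
object ReplicaAssignmentSketch {
  // Parses "0:1,1:2" into Map(0 -> Seq(0, 1), 1 -> Seq(1, 2)).
  // Assumption: the n-th comma-separated entry describes partition n.
  def parse(spec: String): Map[Int, Seq[Int]] = {
    val perPartition: Seq[Seq[Int]] =
      spec.split(",").toSeq.map { entry =>
        entry.split(":").toSeq.map(id => id.trim.toInt)
      }
    val expectedSize = perPartition.head.size
    perPartition.zipWithIndex.foreach { case (replicas, partition) =>
      // A broker may hold at most one replica of a given partition.
      require(replicas.distinct.size == replicas.size,
        s"Partition $partition lists a broker more than once: $replicas")
      // Every partition must have the same replication factor.
      require(replicas.size == expectedSize,
        s"Partition $partition has a different replication factor: $replicas")
    }
    perPartition.zipWithIndex.map { case (replicas, partition) =>
      partition -> replicas
    }.toMap
  }
}
```

For example, ReplicaAssignmentSketch.parse("0:1,1:2") places partition 0 on brokers 0 and 1, and partition 1 on brokers 1 and 2. The first broker in each entry is the preferred leader.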

def createTopic(topic: String,

partitions: Int,

replicationFactor: Int,

topicConfig: Properties = new Properties,

rackAwareMode: RackAwareMode = RackAwareMode.Enforced) {

val brokerMetadatas = getBrokerMetadatas(rackAwareMode)

// generate the replica assignment plan

val replicaAssignment = AdminUtils.assignReplicasToBrokers(brokerMetadatas, partitions, replicationFactor)

createOrUpdateTopicPartitionAssignmentPathInZK(topic, replicaAssignment, topicConfig)

}

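Fetching broker metadata

getBrokerMetadatas reads the ids of all brokers in the cluster from ZooKeeper (path /brokers/ids), sorted in ascending order, and then fetches the data stored in ZooKeeper for each broker.

AdminUtils.assignReplicasToBrokers then generates the replica assignment.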
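The idea behind the assignment can be sketched as a round-robin placement over the sorted broker list. The code below is a simplified, rack-unaware illustration and not the AdminUtils source. AssignmentSketch, assign, startIndex and initialShift are hypothetical names, and the start position and shift are fixed to 0 here so the result is reproducible, whereas a real cluster would typically randomize them.

```scala
object AssignmentSketch {
  // Position of the j-th follower: an offset of 1..(nBrokers - 1) from the
  // leader's position, so a follower never lands on the leader's broker.
  private def followerIndex(first: Int, shift: Int, j: Int, nBrokers: Int): Int = {
    val offset = 1 + (shift + j) % (nBrokers - 1)
    (first + offset) % nBrokers
  }

  def assign(brokers: Seq[Int],
             partitions: Int,
             replicationFactor: Int,
             startIndex: Int = 0,
             initialShift: Int = 0): Map[Int, Seq[Int]] = {
    require(partitions > 0, "partitions must be positive")
    require(replicationFactor > 0 && replicationFactor <= brokers.size,
      "replication factor must be between 1 and the number of brokers")
    val n = brokers.size
    var shift = initialShift
    (0 until partitions).map { p =>
      // After each full pass over the brokers, change the follower spacing
      // so that partitions do not all share the same replica sets.
      if (p > 0 && p % n == 0) shift += 1
      val first = (p + startIndex) % n
      val followers = (0 until replicationFactor - 1).map { j =>
        brokers(followerIndex(first, shift, j, n))
      }
      p -> (brokers(first) +: followers)
    }.toMap
  }
}
```

With brokers 0, 1, 2, four partitions, and a replication factor of 2, the sketch spreads leaders evenly (0, 1, 2, 0) and gives partition 3 a different follower than partition 0, even though both lead on broker 0.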

/**

* Creates or Updates the partition assignment for a given topic

* @param topic

* @param partitionReplicaAssignment

* @param config

* @param update

*/

def createOrUpdateTopicPartitionAssignmentPathInZK(topic: String,

partitionReplicaAssignment: Map[Int, Seq[Int]],

config: Properties = new Properties,

update: Boolean = false) {

validateCreateOrUpdateTopic(topic, partitionReplicaAssignment, config, update)

if (!update) {

// write out the config if there is any, this isn't transactional with the partition assignments

zkClient.setOrCreateEntityConfigs(ConfigType.Topic, topic, config)

}

// create the partition assignment

writeTopicPartitionAssignment(topic, partitionReplicaAssignment, update)

}

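This writes the topic and its assignment to ZooKeeper: the topic config goes under /config/topics and the partition assignment under /brokers/topics.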

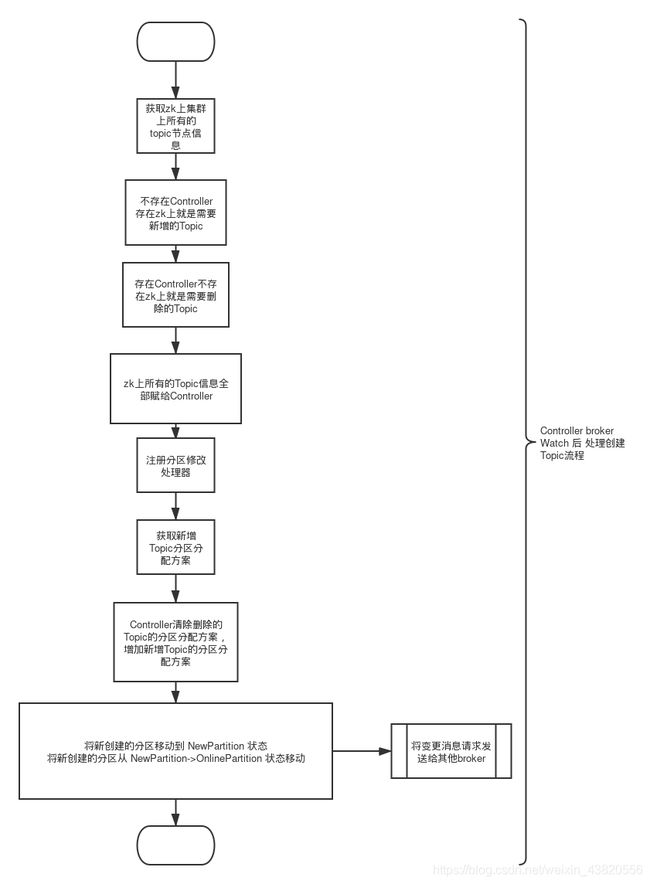

The broker acting as Controller watches the ZooKeeper path /brokers/topics. Once the write to ZooKeeper succeeds, the watch fires and the registered handler runs.

case object TopicChange extends ControllerEvent {

override def state: ControllerState = ControllerState.TopicChange

override def process(): Unit = {

if (!isActive) return

val topics = zkClient.getAllTopicsInCluster.toSet

val newTopics = topics -- controllerContext.allTopics

val deletedTopics = controllerContext.allTopics -- topics

controllerContext.allTopics = topics

registerPartitionModificationsHandlers(newTopics.toSeq)

val addedPartitionReplicaAssignment = zkClient.getReplicaAssignmentForTopics(newTopics)

controllerContext.partitionReplicaAssignment = controllerContext.partitionReplicaAssignment.filter(p =>

!deletedTopics.contains(p._1.topic))

controllerContext.partitionReplicaAssignment ++= addedPartitionReplicaAssignment

info(s"New topics: [$newTopics], deleted topics: [$deletedTopics], new partition replica assignment " +

s"[$addedPartitionReplicaAssignment]")

if (addedPartitionReplicaAssignment.nonEmpty)

onNewPartitionCreation(addedPartitionReplicaAssignment.keySet)

}

}
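The bookkeeping in process() boils down to two set differences plus a filtered merge of the assignment map. The sketch below reproduces that logic on plain collections. TopicDiffSketch, Diff, diff and refresh are hypothetical names, and a (topic, partition) tuple stands in for TopicPartition.

```scala
object TopicDiffSketch {
  final case class Diff(added: Set[String], deleted: Set[String])

  // Topics present in ZooKeeper but unknown to the controller are new;
  // topics known to the controller but missing from ZooKeeper were deleted.
  def diff(inZk: Set[String], known: Set[String]): Diff =
    Diff(added = inZk -- known, deleted = known -- inZk)

  // Drops assignments of deleted topics, then merges in the new ones.
  def refresh(current: Map[(String, Int), Seq[Int]],
              deleted: Set[String],
              added: Map[(String, Int), Seq[Int]]): Map[(String, Int), Seq[Int]] =
    current.filter { case ((topic, _), _) => !deleted.contains(topic) } ++ added
}
```

Only the partitions of newly added topics are then handed to onNewPartitionCreation, which is why the original code checks addedPartitionReplicaAssignment.nonEmpty first.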

/**

* This callback is invoked by the topic change callback with the list of failed brokers as input.

* It does the following -

* 1. Move the newly created partitions to the NewPartition state

* 2. Move the newly created partitions from NewPartition->OnlinePartition state

*/

private def onNewPartitionCreation(newPartitions: Set[TopicPartition]) {

info(s"New partition creation callback for ${newPartitions.mkString(",")}")

partitionStateMachine.handleStateChanges(newPartitions.toSeq, NewPartition)

replicaStateMachine.handleStateChanges(controllerContext.replicasForPartition(newPartitions).toSeq, NewReplica)

partitionStateMachine.handleStateChanges(newPartitions.toSeq, OnlinePartition, Option(OfflinePartitionLeaderElectionStrategy))

replicaStateMachine.handleStateChanges(controllerContext.replicasForPartition(newPartitions).toSeq, OnlineReplica)

}
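The order of the calls above matters because each target state only accepts certain previous states. The following is a minimal sketch of that rule for partitions, assuming the allowed transitions are NonExistent to New, then New, Online or Offline to Online or Offline, and Offline to NonExistent. PartitionStateSketch and its members are hypothetical names, not the PartitionStateMachine source.

```scala
object PartitionStateSketch {
  sealed trait State
  case object NonExistent extends State
  case object New extends State
  case object Online extends State
  case object Offline extends State

  // Assumed table of states from which each target state may be entered.
  def validPrevious(target: State): Set[State] = target match {
    case New         => Set(NonExistent)
    case Online      => Set(New, Online, Offline)
    case Offline     => Set(New, Online, Offline)
    case NonExistent => Set(Offline)
  }

  def transition(current: State, target: State): Either[String, State] =
    if (validPrevious(target).contains(current)) Right(target)
    else Left(s"Illegal transition $current -> $target")

  // Applies the targets in order and stops at the first illegal step.
  def replay(start: State, targets: Seq[State]): Either[String, State] =
    targets.foldLeft[Either[String, State]](Right(start)) { (acc, target) =>
      acc.flatMap(current => transition(current, target))
    }
}
```

Replaying NonExistent through New and then Online succeeds, which mirrors the two partitionStateMachine calls in onNewPartitionCreation. Jumping straight from NonExistent to Online is rejected.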

handleStateChanges in partitionStateMachine:

def handleStateChanges(partitions: Seq[TopicPartition], targetState: PartitionState,

partitionLeaderElectionStrategyOpt: Option[PartitionLeaderElectionStrategy] = None): Unit = {

if (partitions.nonEmpty) {

try {

controllerBrokerRequestBatch.newBatch()

doHandleStateChanges(partitions, targetState, partitionLeaderElectionStrategyOpt)

controllerBrokerRequestBatch.sendRequestsToBrokers(controllerContext.epoch)

} catch {

case e: Throwable => error(s"Error while moving some partitions to $targetState state", e)

}

}

}

handleStateChanges in replicaStateMachine:

def handleStateChanges(replicas: Seq[PartitionAndReplica], targetState: ReplicaState,

callbacks: Callbacks = new Callbacks()): Unit = {

if (replicas.nonEmpty) {

try {

controllerBrokerRequestBatch.newBatch()

replicas.groupBy(_.replica).map { case (replicaId, replicas) =>

val partitions = replicas.map(_.topicPartition)

doHandleStateChanges(replicaId, partitions, targetState, callbacks)

}

controllerBrokerRequestBatch.sendRequestsToBrokers(controllerContext.epoch)

} catch {

case e: Throwable => error(s"Error while moving some replicas to $targetState state", e)

}

}

}