Python Crawler Template (v3.0) and Usage Example

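I. Introduction

Once you have studied the target website, a crawler project generally runs in three steps:

data acquisition → data parsing → data saving

1. Data acquisition:

(1) In the main program crawler, this template lets you choose among three libraries: urllib, requests, and cloudscraper.

urllib is the classic standard-library option; requests is sometimes faster than urllib and can send both JSON and non-JSON form data; cloudscraper can get past some anti-bot verification, such as Cloudflare's check. The default configuration uses requests.

(2) A random User-Agent request identity can be set.

2. Data parsing:

(1) For JSON data returned by asynchronous requests, call json.loads on what crawler returns to get Python data structures, then parse them according to the structure of the data and your goal.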
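The json.loads step can be sketched as follows. The response text and its field names (total, items, name, score) are made up for illustration; a real endpoint defines its own structure.

```python
import json

# Hypothetical response text; a real endpoint defines its own structure.
responseText = '{"total": 2, "items": [{"name": "A", "score": 9.1}, {"name": "B", "score": 8.7}]}'

parsed = json.loads(responseText)  # JSON object -> dict, JSON array -> list

# Walk the structure and keep only the fields of interest, one row per item.
rows = [[item["name"], item["score"]] for item in parsed["items"]]
print(rows)
```

The resulting list of rows has the same shape as the datalist that the saving functions later in this post expect.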

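For an example, see my article:

Python爬虫获取数据实战:2023数学建模美赛春季赛帆船数据网站sailboatdata.com(状态码403forbidden→使用cloudscraper绕过cloudflare)_和谐号hexh的博客-CSDN博客

(2) For HTML data, the usage example below shows two parsing methods. The first searches and traverses the document structure with BeautifulSoup; the second converts each item to a string and extracts the fields with regular expressions (re). (I find the second easier to use.)

To learn BeautifulSoup and re, see:

Python爬虫之数据解析——BeautifulSoup亮汤模块(一):基础与遍历(接上文,2023美赛春季赛帆船数据解析sailboatdata.com)_和谐号hexh的博客-CSDN博客

Python爬虫之数据解析——BeautifulSoup亮汤模块(二):搜索(再接上文,2023美赛春季赛帆船数据解析sailboatdata.com)_和谐号hexh的博客-CSDN博客

Python正则表达式re库_和谐号hexh的博客-CSDN博客

3. Data saving

This template provides two saving methods: the first uses the xlwt library, the second uses plain file operations. The second appends to the file if it already exists.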
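A minimal sketch of the append-style saving, assuming tab-separated rows. The helper name appendRows and the temporary path are illustrative and not part of the template.

```python
import os
import tempfile


def appendRows(rows, savePath):
    # Mode "a" creates the file if it is missing and appends to it otherwise.
    with open(savePath, "a", encoding="utf-8") as f:
        for row in rows:
            # str() keeps the write from failing on numbers or None.
            f.write("\t".join(str(field) for field in row) + "\n")


savePath = os.path.join(tempfile.mkdtemp(), "demo.txt")  # throwaway location
appendRows([["A", 9.1]], savePath)
appendRows([["B", 8.7]], savePath)  # the second call adds to the same file

with open(savePath, encoding="utf-8") as f:
    lines = f.read().splitlines()
print(lines)
```

Because the file is opened in append mode, running a crawl twice against the same path accumulates both runs in one file.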

二、模板源码

1.模板架构

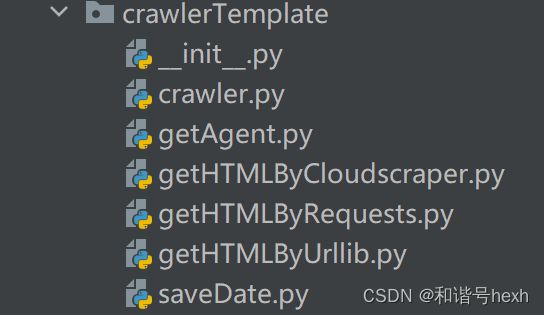

模板中共有6个py文件,我放在crawlerTemplate包下。

2.源代码

(1)getAgent模块

# -*- coding: utf-8 -*-
# @Time: 2023-08-20 20:14
# @Author: hexh
# @File: getAgent.py
# @Software: PyCharm

from random import choice


# Return a randomly chosen User-Agent string
def main():
    USER_AGENTS = [
        "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",
        "Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
        "Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",
        "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",
        "Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",
        "Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",
        "Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",
        "Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",
        "Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",
        "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52",
    ]
    return choice(USER_AGENTS)

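The User-Agent is the identity we present with every request we send.

Sending a different User-Agent each time hides that identity better and lowers the risk of being blocked.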
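As a rough sketch of how a random identity ends up in the request headers: the helper name buildHeaders is hypothetical (in the template this happens inside crawler.config), and the pool is shortened to two entries taken from the list above.

```python
import random

# Two entries taken from the getAgent list; any pool of strings works.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
    "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52",
]


def buildHeaders(baseHeaders):
    # Copy first so the caller's dict is not mutated, then set a random identity.
    headers = dict(baseHeaders)
    headers["User-Agent"] = random.choice(USER_AGENTS)
    return headers


headers = buildHeaders({"Accept": "text/html"})
print(headers["User-Agent"])
```

Each call may pick a different entry, so consecutive requests do not all carry the same identity.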

Reference:

爬虫请求网站时报错http.client.RemoteDisconnected: Remote end closed connection without response 请求网站时报错_如果我变成回忆l的博客-CSDN博客

(2) getHTMLByUrllib module

# -*- coding: utf-8 -*-
# @File: getHTMLByUrllib.py
# @Author: 和谐号
# @Software: PyCharm
# @CreationTime: 2023-08-23 3:25
# @OverviewDescription:

import gzip
# "import urllib" alone does not load the submodules, so import them explicitly
import urllib.error
import urllib.parse
import urllib.request
from io import BytesIO


def main(info, configLog, timeoutTime):
    # Fetch the HTML response using the request info extracted from the target page's txt file
    #
    # Parameters:
    #   info: request info extracted from the txt file ([url, method, data, header])
    #   configLog: configuration info
    #   timeoutTime: maximum time to wait, in seconds
    #
    # Returns:
    #   html: the response content (None on failure)

    # Build the request
    if info[1] == "POST":
        if configLog["表单数据形式"] == "字典":
            data = bytes(urllib.parse.urlencode(info[2]), encoding="utf-8")  # encode the form dict
        else:
            data = info[2].encode("utf-8")
        req = urllib.request.Request(url=info[0], headers=info[3], data=data, method="POST")
    elif info[1] == "GET":
        req = urllib.request.Request(url=info[0], headers=info[3])
    else:
        print("Invalid request method:", info[1])
        return None

    try:
        # Send the request and get the response
        if timeoutTime > 0:
            response = urllib.request.urlopen(req, timeout=timeoutTime)
        else:
            response = urllib.request.urlopen(req)

        # Decode the response into html. A gzip-compressed body starts with
        # the bytes "1f8b08"; anything else is decoded directly
        html = response.read()
        if html.hex().startswith("1f8b08"):
            buff = BytesIO(html)
            f = gzip.GzipFile(fileobj=buff)
            html = f.read().decode('utf-8')
        else:
            html = html.decode('utf-8')
        return html
    except Exception as e:  # also covers urllib.error.URLError and HTTPError
        if hasattr(e, "code"):
            print("urllib error, response status code:", e.code)
        if hasattr(e, "reason"):
            print("urllib error, reason:", e.reason)
        if not hasattr(e, "code") and not hasattr(e, "reason"):
            print("urllib error:", e)
        return None
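The gzip check in this module can be tried in isolation. Below is a minimal sketch, assuming UTF-8 content; the function name decodeBody is illustrative, and it uses gzip.decompress from the standard library instead of the GzipFile/BytesIO pair in the module.

```python
import gzip


def decodeBody(raw, encoding="utf-8"):
    # A gzip stream starts with the magic bytes 1f 8b.
    if raw[:2] == b"\x1f\x8b":
        raw = gzip.decompress(raw)
    return raw.decode(encoding)


plain = "<html>你好</html>".encode("utf-8")
packed = gzip.compress(plain)

# Both the compressed and the uncompressed body decode to the same text.
print(decodeBody(plain) == decodeBody(packed))
```

This only handles gzip. A body compressed with another scheme, such as the br encoding that appears in the Douban response headers later in this post, would need a different decoder.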

(3) getHTMLByRequests module

# -*- coding: utf-8 -*-
# @File: getHTMLByRequests.py
# @Author: 和谐号
# @Software: PyCharm
# @CreationTime: 2023-08-23 3:26
# @OverviewDescription:

import requests
from requests.exceptions import ReadTimeout, HTTPError, RequestException


def main(info, configLog, timeoutTime):
    # Fetch the HTML response using the request info extracted from the target page's txt file
    #
    # Parameters:
    #   info: request info extracted from the txt file ([url, method, data, header])
    #   configLog: configuration info
    #   timeoutTime: maximum time to wait, in seconds
    #
    # Returns:
    #   html: the response content (None on failure)

    # Keyword arguments shared by every call; timeout is only passed when one is configured
    kwargs = {"headers": info[3]}
    if timeoutTime > 0:
        kwargs["timeout"] = timeoutTime

    try:
        if info[1] == "POST":
            # json=... sends a JSON body, data=... sends a form-encoded or raw body
            if configLog["ContentType"] in ["json(自动配置)", "json(手动配置)"]:
                response = requests.post(info[0], json=info[2], **kwargs)
            else:
                response = requests.post(info[0], data=info[2], **kwargs)
        elif info[1] == "GET":
            if len(info[2]) > 0:
                kwargs["params"] = info[2]
            response = requests.get(info[0], **kwargs)
        else:
            print("Invalid request method:", info[1])
            return None

        # Decode:
        if response.status_code == 200:
            html = response.text  # if the text is garbled, consider decoding response.content yourself
            return html
        else:
            print('Request failed, status code:', response.status_code)
            print('Error response:', response.text)
            return None
    except ReadTimeout as e:
        print('Timeout', e)
    except HTTPError as e:
        print('Http error', e)
    except RequestException as e:
        print('Error', e)

(4) getHTMLByCloudscraper module

# -*- coding: utf-8 -*-
# @File: getHTMLByCloudscraper.py
# @Author: 和谐号
# @Software: PyCharm
# @CreationTime: 2023-08-23 19:24
# @OverviewDescription:

import cloudscraper


def main(info, configLog, timeoutTime):
    # Fetch the HTML response using the request info extracted from the target page's txt file
    #
    # Parameters:
    #   info: request info extracted from the txt file ([url, method, data, header])
    #   configLog: configuration info
    #   timeoutTime: maximum time to wait, in seconds
    #
    # Returns:
    #   html: the response content (None on failure)
    scraper = cloudscraper.create_scraper()

    # Keyword arguments shared by every call; timeout is only passed when one is configured
    kwargs = {"headers": info[3]}
    if timeoutTime > 0:
        kwargs["timeout"] = timeoutTime

    try:
        if info[1] == "POST":
            if configLog["ContentType"] in ["json(自动配置)", "json(手动配置)"]:
                response = scraper.post(info[0], json=info[2], **kwargs)
            else:
                response = scraper.post(info[0], data=info[2], **kwargs)
        elif info[1] == "GET":
            if len(info[2]) > 0:
                kwargs["params"] = info[2]
            response = scraper.get(info[0], **kwargs)
        else:
            print("Invalid request method:", info[1])
            return None

        # Decode:
        if response.status_code == 200:
            html = response.text  # if the text is garbled, consider decoding response.content yourself
            return html
        else:
            print('Request failed, status code:', response.status_code)
            print('Error response:', response.text)
            return None
    # cloudscraper.exceptions is a module, not an exception class, so it cannot
    # be named in an except clause; catch Exception instead
    except Exception as e:
        print('Error', e)

(5) crawler module

# -*- coding: utf-8 -*-
# @Time: 2023-08-20 22:23
# @Author: hexh
# @File: crawler.py
# @Software: PyCharm

import re

from crawlerTemplate import getAgent, getHTMLByUrllib, getHTMLByRequests, getHTMLByCloudscraper


def toDict(theList, noNeedKey):
    # Convert data or header from a list of "key: value" strings into a dict
    #
    # Parameters:
    #   theList: the list to convert
    #   noNeedKey: list of keys to leave out
    #
    # Returns:
    #   res: the resulting dict
    res = {}
    for item in theList:
        if ":" not in item:
            continue
        i = item.index(":")
        if item[0:i] in noNeedKey:
            continue
        # i + 2 skips the ": " separator; -1 drops the trailing newline, if any
        res[item[0:i]] = item[i + 2:-1] if item.endswith("\n") else item[i + 2:]
    return res

def getRequestInfoFromTxt(path, data, url, headerNoneedKey):
    # Extract the request info from the target page's txt file
    #
    # Parameters:
    #   path: path of the txt file
    #   data: manually configured form data
    #   url: manually configured url
    #   headerNoneedKey: header keys to leave out
    #
    # Returns: the list info
    #   [url, method, data, header]

    # Read
    contextList = []
    try:
        with open(path, "r", encoding='utf-8') as f:
            contextList = f.readlines()
    except Exception as e:
        print(e)

    # Parse:
    header = []
    method = "未检测出请求类型,请检查配置文件"  # "request method not detected, check the config file"
    tmp = "请求 URL:\n"  # the "Request URL:" label as copied from the browser
    try:
        if tmp in contextList:
            i = contextList.index(tmp)
            if data == "auto":
                data = contextList[0:i]  # everything before the label is form data
            if url == "auto":
                url = contextList[i + 1][0:-1]
            method = contextList[i + 3][0:-1]
            header = contextList[i + 10:]
            header = toDict(header, headerNoneedKey)
    except Exception as e:
        print("txt file is misconfigured", e)
    return [url, method, data, header]

def config(data, url, info, libraryUsed, isPrint):
    # Record and adjust the crawler configuration
    #
    # Parameters:
    #   data: form data configured in main   // mainly to check whether it is "auto"
    #   url: url configured in main          // mainly to check whether it is "auto"
    #   info: info read from the txt file
    #   libraryUsed: crawler library chosen in main
    #   isPrint: whether to print the configuration
    #
    # Returns:
    #   configLog: the configuration log
    #
    # The keys and values of configLog stay in Chinese because the other modules compare against them:
    #   表单数据获取方式 = how the form data was obtained, url获取方式 = how the url was obtained,
    #   表单数据形式 = form data type, 爬虫库 = crawler library,
    #   自动获取 / 自动配置 = automatic, 手动配置 = manual, 字典 = dict, 字符串 = string, 错误 = error
    configLog = {"表单数据获取方式": None, "url获取方式": None, "表单数据形式": None, "爬虫库": None,
                 "ContentType": None, "User-Agent": None}

    # Work out how the form data was obtained and detect its type
    if data == "auto":
        configLog["表单数据获取方式"] = "自动获取"
        configLog["表单数据形式"] = "字符串" if len(info[2]) == 1 else "字典"
    else:
        configLog["表单数据获取方式"] = "手动配置"
        if isinstance(info[2], dict):
            configLog["表单数据形式"] = "字典"
        elif isinstance(info[2], str):
            configLog["表单数据形式"] = "字符串"
        else:
            configLog["表单数据形式"] = "错误"
    configLog["url获取方式"] = ("自动获取" if url == "auto" else "手动配置")

    # Random User-Agent
    if info[3].get("User-Agent") == "True":
        info[3]["User-Agent"] = getAgent.main()
        configLog["User-Agent"] = '(随机)' + info[3]["User-Agent"]
    else:
        # default to "" so a header without User-Agent does not raise a TypeError
        configLog["User-Agent"] = '(配置)' + info[3].get("User-Agent", "")

    # Read Content-Type from the header to decide whether to send json=data
    findContentType = re.compile("content-type", re.I)
    matches = re.findall(findContentType, str(info[3]))
    # guard against headers that contain no Content-Type at all
    contentType = info[3].get(matches[0], "") if matches else ""
    configLog["ContentType"] = "json(手动配置)" if "json" in contentType else "非json(手动配置)"

    # If libraryUsed was not passed (default "auto"), configure the library and ContentType automatically
    if libraryUsed == "auto":
        if configLog["表单数据形式"] == "字典":
            configLog["爬虫库"] = "requests(自动配置)"
            configLog["ContentType"] = "json(自动配置)"
        elif configLog["表单数据形式"] == "字符串":
            configLog["爬虫库"] = "requests(自动配置)"
            configLog["ContentType"] = "非json(自动配置)"
    elif libraryUsed == "r":
        configLog["爬虫库"] = "requests(手动配置)"
    elif libraryUsed == "u":
        configLog["爬虫库"] = "urllib(手动配置)"
    elif libraryUsed == "c":
        configLog["爬虫库"] = "cloudscraper(手动配置)"
    else:
        configLog["爬虫库"] = "错误"

    if isPrint:
        for key, value in configLog.items():
            print(key + ":" + str(value))  # str() because a value may still be None
    return configLog

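def dataProcessing(info, configLog):
    # Process the form data
    #
    # Parameters:
    #   info: info read from the txt file
    #   configLog: the configuration log
    #
    # No return value; info[2] is updated in place
    if configLog["表单数据获取方式"] == "自动获取":
        if configLog["表单数据形式"] == "字典":
            info[2] = toDict(info[2], [])
        elif configLog["表单数据形式"] == "字符串":
            info[2] = info[2][0][:-1]  # the single line, without its trailing newline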

def main(filepath, libraryUsed="auto", data="auto", url="auto", isPrint=True, timeoutTime=0, headerNoneedKey=None):
    # Crawler entry point: given the target site's txt file (path), return the crawl result
    # Requirements for the txt file:
    #   In F12, switch to the "原始" (raw) view and copy the browser's request info into the txt file
    #   For a POST request, copy the form data in front of the request info
    #   For a random User-Agent, set that line in the txt to "User-Agent: True" (one space before True, none after)
    #
    # Parameters:
    #   filepath: path of the target page's txt config file   // required
    #   libraryUsed: crawler library   // default "auto": the library and ContentType are configured automatically. Options: "r" = requests, "u" = urllib, "c" = cloudscraper
    #   data: form data   // default "auto": read from the txt; otherwise the value passed in is used
    #   url: target url   // default "auto": read from the txt; otherwise the value passed in is used
    #   isPrint: whether to print the configuration   // default True
    #   timeoutTime: timeout in seconds, i.e. the longest time to wait for the server   // default 0, meaning no timeout is set
    #   headerNoneedKey: header keys to drop   // usually omitted; defaults to ["Date", "Server", "Transfer-Encoding", "Accept-Encoding"]
    #
    # Returns:
    #   html: the response content
    #
    # requests is sometimes faster than urllib, but with requests you must mind the form type (json / data)
    # Generally, if the content-type in the header contains "json", it is the json type (json=data); otherwise it is the data type (data=data)
    # If you get error 400, Error response: {"message":"Expecting object or array (near 1:1)","status":400}
    # the content-type is very likely misconfigured: turn off automatic libraryUsed configuration and set content-type by hand in the txt
    # Note: urllib currently makes no json/data distinction and always sends data; the distinction only matters with requests
    # cloudscraper is generally for sites that use verification checks against crawlers
    if headerNoneedKey is None:
        headerNoneedKey = ["Date", "Server", "Transfer-Encoding", "Accept-Encoding"]

    info = getRequestInfoFromTxt(filepath, data, url, headerNoneedKey)
    if info[1] not in ["POST", "GET"]:
        print("Invalid request method:", info[1])
        return None

    configLog = config(data, url, info, libraryUsed, isPrint)

    # Configuration check:
    if None in configLog.values() or "错误" in configLog.values():
        print("Configuration error")
        return None

    dataProcessing(info, configLog)

    if configLog["爬虫库"] in ["urllib(自动配置)", "urllib(手动配置)"]:
        return getHTMLByUrllib.main(info, configLog, timeoutTime)
    elif configLog["爬虫库"] in ["requests(自动配置)", "requests(手动配置)"]:
        return getHTMLByRequests.main(info, configLog, timeoutTime)
    elif configLog["爬虫库"] in ["cloudscraper(自动配置)", "cloudscraper(手动配置)"]:
        return getHTMLByCloudscraper.main(info, configLog, timeoutTime)
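How the copied header lines become a dict can be sketched as a standalone function. This is a simplified variant for illustration, not the template's toDict: the name parseHeaderLines is hypothetical, and it uses str.partition and strip() where toDict uses fixed slicing.

```python
def parseHeaderLines(lines, skipKeys=()):
    headers = {}
    for line in lines:
        # Split on the first colon only, so values such as URLs stay intact.
        key, sep, value = line.partition(":")
        if not sep or key in skipKeys:
            continue  # not a "Key: value" line, or a key we do not want to send
        headers[key] = value.strip()
    return headers


lines = [
    "HTTP/1.1 200 OK\n",
    "Date: Fri, 25 Aug 2023 14:42:34 GMT\n",
    "Content-Type: text/html; charset=utf-8\n",
    "Host: movie.douban.com\n",
]
headers = parseHeaderLines(lines, skipKeys=("Date",))
print(headers)
```

Status lines such as "HTTP/1.1 200 OK" contain no colon and are skipped, which is why the txt file can hold the response and request headers exactly as copied from the browser.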

(6) saveDate module

# -*- coding: utf-8 -*-
# @File: saveDateToXls.py
# @Author: 和谐号
# @Software: PyCharm
# @CreationTime: 2023-08-26 9:38
# @OverviewDescription:

import xlwt
import os
import datetime

# Timestamp taken once, when the module is imported
nowTime = datetime.datetime.now().strftime("%Y-%m-%d %H%M%S %f")[:-3]


def byXlwt(datalist, savePath, headOfDataSheet=None, sheetName="sheet1"):
    # Save the data with the xlwt library
    #
    # Parameters:
    #   datalist: the crawled data   // required, shaped as [ [item1 field1, item1 field2, ...], [item2 field1, item2 field2, ...] ]
    #   savePath: where to save the sheet   // required; if the file already exists, the time is appended to the file name
    #   headOfDataSheet: header row of the sheet   // default None
    #   sheetName: name of the sheet in the workbook   // default "sheet1"
    #
    # No return value
    if os.path.exists(savePath):
        root, ext = os.path.splitext(savePath)
        savePath = root + " " + nowTime + ext

    workbook = xlwt.Workbook(encoding="utf-8", style_compression=0)
    worksheet = workbook.add_sheet(sheetName, cell_overwrite_ok=True)

    rowIndex = 0
    if headOfDataSheet is not None:
        for j, item in enumerate(headOfDataSheet):
            worksheet.write(rowIndex, j, item)
        rowIndex += 1

    for item1 in datalist:
        for j, item2 in enumerate(item1):
            worksheet.write(rowIndex, j, item2)
        rowIndex += 1

    worksheet.write(rowIndex, 0, nowTime)  # last row records the save time
    workbook.save(savePath)

def byFile(datalist, savePath, headOfDataSheet=None):
    # Save the data with Python's built-in file operations
    #
    # Parameters:
    #   datalist: the crawled data   // required, shaped as [ [item1 field1, item1 field2, ...], [item2 field1, item2 field2, ...] ]
    #   savePath: where to save the data   // required; if the file already exists, the data is appended
    #   headOfDataSheet: header row   // default None
    #
    # No return value
    f = open(savePath, "a", encoding="utf-8")
    try:
        if headOfDataSheet is not None:
            for item in headOfDataSheet:
                f.write(str(item) + "\t")
            f.write("\n")
        for item1 in datalist:
            for item2 in item1:
                f.write(str(item2) + "\t")  # str() so that None or numbers do not raise a TypeError
            f.write("\n")
        f.write(nowTime + "\n\n")
    except Exception as e:
        print("Error while writing: ", e)
    finally:
        f.close()

3. Notes on the code

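(If you only want to use the template, you can skip this part.)

See: Python爬虫数据获取模板与使用方法(v2.0版本)_和谐号hexh的博客-CSDN博客

4. Parameters

Parameters you need to determine:

(1) In the txt file

url (target address), method (POST or GET), data (form data), header (request headers)

Three header fields matter most:

① Cookie: related to login

② User-Agent: the identity sent with the request. For a random identity, set the line to "User-Agent: True" (one space before True, none after).

③ Content-Type: the content type of the form data. If it contains "json", use the json type; otherwise use the data type. A more reliable way is to judge from the form data itself: if it contains lists [] or other special structures, the json type is recommended.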
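The difference between the two body types can be seen with the standard library alone. This sketch uses made-up form fields (page, keyword, ids) and does not send any request; it only shows what each encoding produces.

```python
import json
from urllib.parse import urlencode

form = {"page": 1, "keyword": "sailboat"}  # hypothetical flat form

formBody = urlencode(form)   # data type: key=value pairs joined by &
jsonBody = json.dumps(form)  # json type: a JSON document
print(formBody)
print(jsonBody)

# A nested value survives JSON intact, while form encoding flattens the
# list into a percent-encoded string that the server may not understand.
nested = {"ids": [1, 2]}
print(urlencode(nested))
print(json.dumps(nested))
```

This is one reason to prefer the json type when the form contains lists.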

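(2) Parameters of crawler.main

The most important one is filepath, the path of the txt file; it is required.

All the others can be left at their defaults, and the program configures them automatically.

If you get an error, try adjusting libraryUsed and Content-Type, as well as data.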

def main(filepath, libraryUsed="auto", data="auto", url="auto", isPrint=True, timeoutTime=0, headerNoneedKey=None):

Each parameter is described in the comment block under main in the crawler module listing in section 2 (5).

(3) Parameters of the two functions in saveDate

See the comments under byXlwt and byFile in the saveDate module listing in section 2 (6).

III. Usage example:

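Target website: Douban Movie Top 250 (douban.com)

(1) Prepare the txt file for the target website:

url, method, data, and header can all be found in the browser's F12 developer tools (remember to tick the "原始" (raw) option).

url corresponds to the request URL, method to the request method, and header to the response headers plus the request headers.

For a POST request, remember to add the form data as well. For details, see:

Python爬虫数据获取模板与使用方法(v2.0版本)_和谐号hexh的博客-CSDN博客

This gives the txt file below; put it in the target directory. getRequestInfoFromTxt reads the URL, the method, and the headers at fixed line offsets after the "请求 URL:" line, so the first ten lines must be consecutive, with no blank lines between them: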

请求 URL:
https://movie.douban.com/top250?start=
请求方法:
GET
状态代码:
200 OK
远程地址:
49.233.242.15:443
引用者策略:
strict-origin-when-cross-origin
HTTP/1.1 200 OK
Date: Fri, 25 Aug 2023 14:42:34 GMT
Content-Type: text/html; charset=utf-8
Transfer-Encoding: chunked
Connection: keep-alive
Keep-Alive: timeout=30
X-Xss-Protection: 1; mode=block
X-Douban-Mobileapp: 0
Expires: Sun, 1 Jan 2006 01:00:00 GMT
Pragma: no-cache
Cache-Control: must-revalidate, no-cache, private
Set-Cookie: ck="deleted"; max-age=0; domain=.douban.com; expires=Thu, 01-Jan-1970 00:00:00 GMT; path=/
Set-Cookie: dbcl2="deleted"; max-age=0; domain=.douban.com; expires=Thu, 01-Jan-1970 00:00:00 GMT; path=/
X-DAE-App: movie
X-DAE-Instance: default
Server: dae
Strict-Transport-Security: max-age=15552000
X-Content-Type-Options: nosniff
Content-Encoding: br
GET /top250?start= HTTP/1.1
Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7
Accept-Encoding: gzip, deflate, br
Accept-Language: zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6
Cache-Control: max-age=0
Connection: keep-alive
Cookie: bid=K2X8CBbKBww; douban-fav-remind=1; __utmz=223695111.1692277019.2.1.utmcsr=(direct)|utmccn=(direct)|utmcmd=(none); _pk_id.100001.4cf6=b11063a76568058a.1692284848.; ct=y; __utmz=30149280.1692880274.6.2.utmcsr=baidu|utmccn=(organic)|utmcmd=organic; _pk_ses.100001.4cf6=1; ap_v=0,6.0; __utma=30149280.867465334.1642341176.1692880274.1692974521.7; __utmb=30149280.0.10.1692974521; __utmc=30149280; __utma=223695111.258043023.1642341176.1692296145.1692974521.5; __utmb=223695111.0.10.1692974521; __utmc=223695111
Host: movie.douban.com
Sec-Fetch-Dest: document
Sec-Fetch-Mode: navigate
Sec-Fetch-Site: none
Sec-Fetch-User: ?1
Upgrade-Insecure-Requests: 1
User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36 Edg/116.0.1938.54
sec-ch-ua: "Chromium";v="116", "Not)A;Brand";v="24", "Microsoft Edge";v="116"
sec-ch-ua-mobile: ?0
sec-ch-ua-platform: "Windows"

(2) Calling the template

# -*- coding: utf-8 -*-
# @Time: 2023-08-17 21:51
# @Author: hexh
# @File: douban.py
# @Software: PyCharm

from crawlerTemplate import crawler  # data acquisition
import parse  # data parsing
from crawlerTemplate import saveDate  # data saving

if __name__ == "__main__":
    datalist = []

    # 1 Fetch the data
    baseurl = "https://movie.douban.com/top250?start="
    for i in range(10):
        url = baseurl + str(i * 25)
        html = crawler.main(r".\target\douban.txt", url=url, isPrint=False)
        if html is None:  # the request failed; skip this page instead of crashing in the parser
            continue

        # 2 Parse each html page (detail link, image, Chinese and foreign names, score, number of ratings, summary, related info)
        datalist.extend(parse.myParse(html))  # my parser: BeautifulSoup operations that follow the structure, no regex
        # datalist.extend(parse.teaParse(html))  # my teacher's approach: turn each item into a str and search it with regex

    # 3 Save the data
    savePath = r".\resultXls\movieTop250.xls"
    head = ('详情链接', '图片', '中国名', '外国名', '评分', '评价人数', '概述', '相关信息')
    saveDate.byXlwt(datalist, savePath, head)
    # saveDate.byFile(datalist, savePath, head)

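Explanation:

The for i in range(10) loop: the 250 films follow a simple URL pattern (start=0, 25, ..., 225), so crawling 10 URLs covers all of them.

The crawler.main call: pass the location of the txt file as the first argument and set url. Leave isPrint at its default True at first, then switch it to False once debugging succeeds.

savePath: the path where the xls file is saved.

head: the header row of the xls file; it can be omitted.

saveDate.byXlwt: saves with the xlwt library.

saveDate.byFile (commented out): saves with plain file operations.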
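The paging rule described above can be checked on its own; this sketch only builds the URL list and sends no requests.

```python
baseurl = "https://movie.douban.com/top250?start="

# 250 films at 25 per page: start = 0, 25, ..., 225.
urls = [baseurl + str(i * 25) for i in range(10)]

print(urls[0])
print(urls[-1])
```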

The parsing functions parse.myParse and parse.teaParse called inside the loop are:

# -*- coding: utf-8 -*-
# @File: parse.py
# @Author: 和谐号
# @Software: PyCharm
# @CreationTime: 2023-08-26 10:40
# @OverviewDescription:

from bs4 import BeautifulSoup
import re


def myParse(html):
    datalist = []
    bs = BeautifulSoup(html, "html.parser")
    for item in bs.find_all('div', class_='item'):
        link = item.a.attrs.get("href")
        pic = item.img.attrs.get("src")

        div_hd = item("div", class_="info")[0]("div", class_="hd")[0]
        chineseName = div_hd("span", class_="title")[0].string
        outName = div_hd("span", class_="other")[0].string

        div_bd = item("div", class_="info")[0]("div", class_="bd")[0]
        score = div_bd("span", class_="rating_num")[0].string
        scoredNum = div_bd("div", class_="star")[0]("span")[-1].string[:-3]  # drop the trailing "人评价"
        inq = div_bd("span", class_="inq")
        inq = "" if len(inq) == 0 else inq[0].string

        bd = div_bd.p.text
        # The pattern on this line was lost when the page was extracted; a <br/> tag is assumed here
        bd = re.sub("<br/>", "", bd)
        bd = re.sub("\n *", "", bd)

        # data holds one film's detail link, image, Chinese and foreign names, score, number of ratings, summary, related info
        data = [link, pic, chineseName, outName, score, scoredNum, inq, bd]
        datalist.append(data)
    return datalist

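def teaParse(html):
    # Note: the HTML tags inside the patterns below were stripped when this page was
    # extracted. They are reconstructed here from the tag and class names used in
    # myParse and should be treated as assumptions, not as the original patterns.
    datalist = []
    bs = BeautifulSoup(html, "html.parser")
    for item in bs.find_all('div', class_='item'):
        item = str(item)

        # You can define the regex pattern first and then search with it
        # findLink = re.compile(r'<a href="(.*?)">')
        # link = re.findall(findLink, item)[0]

        # or search without defining the pattern separately
        link = re.findall(r'<a href="(.*?)">', item)[0]
        pic = re.findall(r'src="(.*?)"', item)[0]
        chineseName = re.findall(r'<span class="title">(.*?)</span>', item)[0]
        outName = re.findall(r'<span class="other">(.*?)</span>', item)[0]
        score = re.findall(r'<span class="rating_num"[^>]*>(.*?)</span>', item)[0]
        scoredNum = re.findall(r'<span>(.*?)人评价', item)[0]
        # scoredNum = re.findall(r'(\d*)人评价', item)[0] also works
        inq = re.findall(r'<span class="inq">(.*?)</span>', item)
        inq = "" if len(inq) == 0 else inq[0]

        findBd = re.compile(r'<p class="">(.*?)</p>', re.S)
        bd = re.findall(findBd, item)[0]
        bd = re.sub(r"<br/>(\s+)?", "", bd).strip()  # (\s+)? removes whitespace after the tag; strip removes it at both ends

        # data holds one film's detail link, image, Chinese and foreign names, score, number of ratings, summary, cast and crew info
        data = [link, pic, chineseName, outName, score, scoredNum, inq, bd]
        datalist.append(data)
    return datalist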
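The regex approach can be tried without any network access. The fragment below is made up for illustration (hypothetical URLs and values, with class names borrowed from myParse); the real Douban markup contains more attributes and nesting.

```python
import re

# Hypothetical fragment; the class names follow those used in myParse.
item = '''
<div class="item">
  <a href="https://example.com/subject/1/">
    <img src="https://example.com/p1.jpg"/>
  </a>
  <span class="title">Sample Title</span>
  <span class="rating_num">9.7</span>
  <span>12345人评价</span>
</div>
'''

# Each pattern captures the text between fixed markers; .*? is non-greedy,
# so the match stops at the first closing marker.
link = re.findall(r'<a href="(.*?)">', item)[0]
pic = re.findall(r'src="(.*?)"', item)[0]
title = re.findall(r'<span class="title">(.*?)</span>', item)[0]
score = re.findall(r'<span class="rating_num">(.*?)</span>', item)[0]
scoredNum = re.findall(r'(\d+)人评价', item)[0]

print([link, pic, title, score, scoredNum])
```

Regex extraction is compact but tied to the exact markup, so a small change in the page's HTML can break a pattern that the BeautifulSoup approach would survive.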