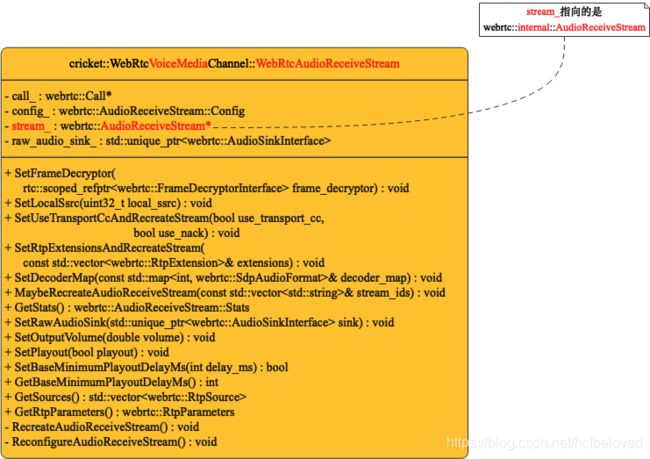

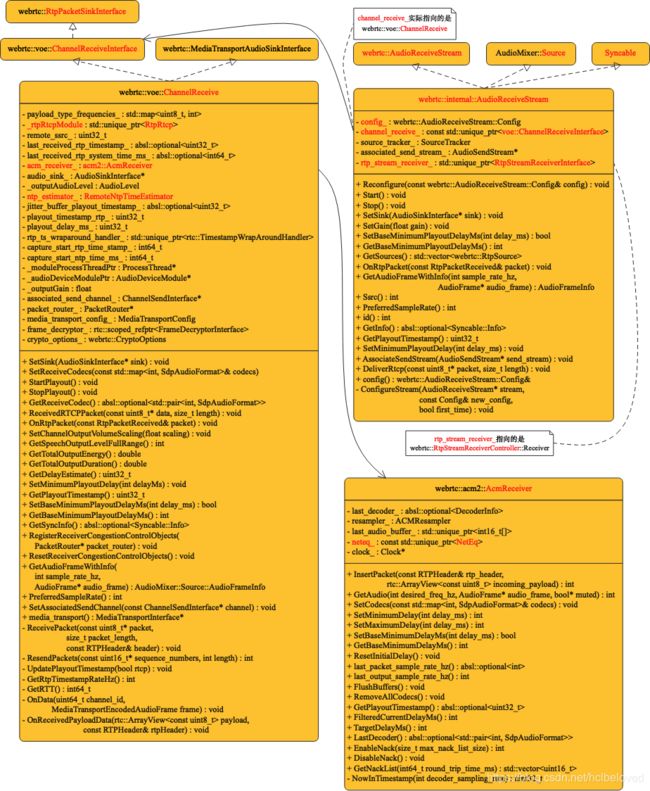

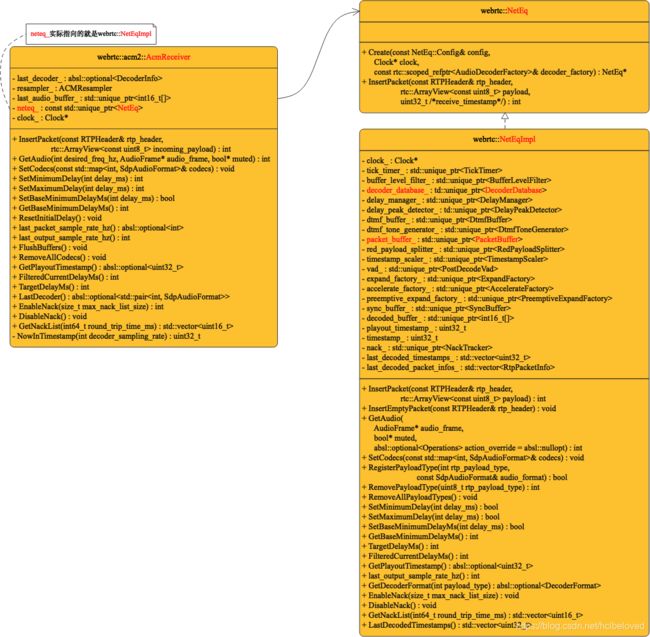

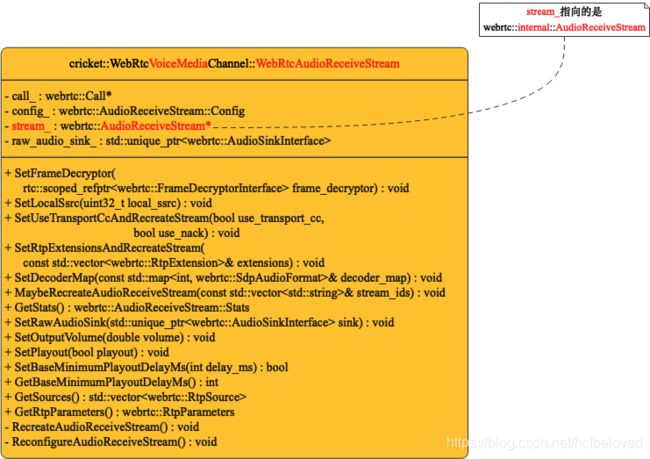

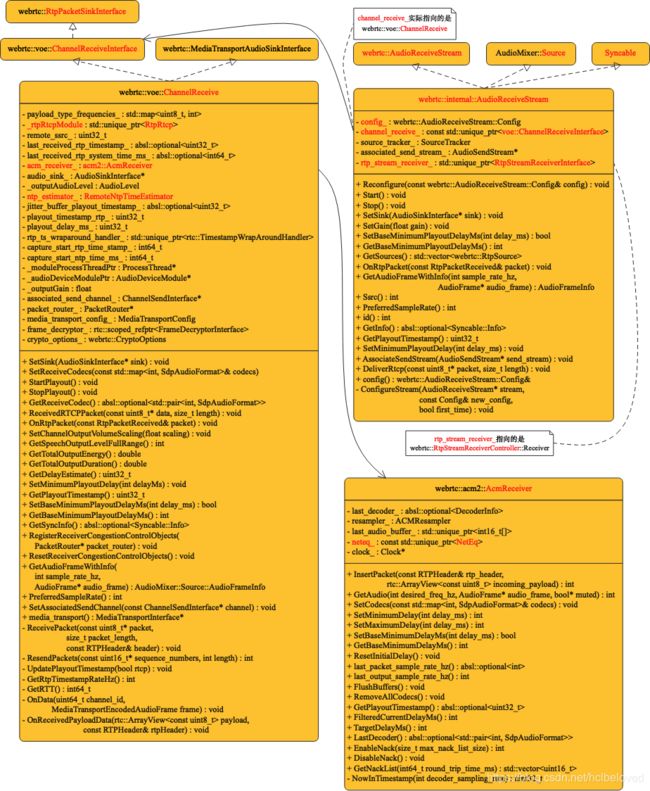

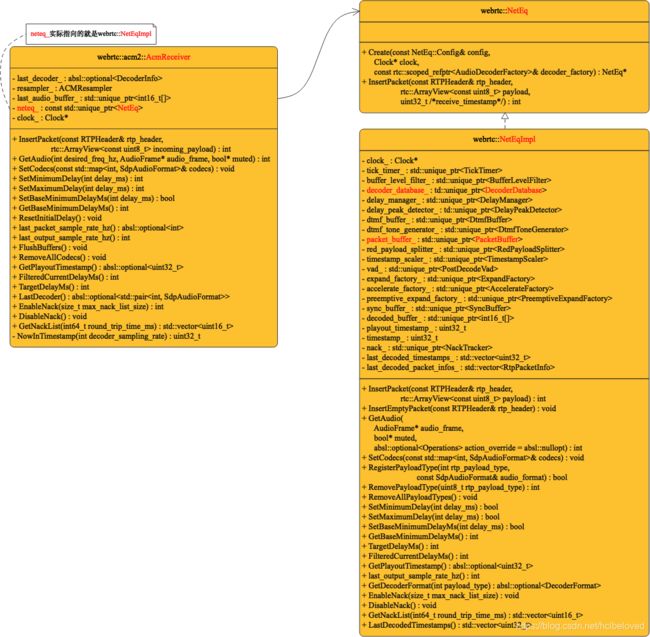

1 Related class diagrams

2 Code

void Conductor::OnSuccess(webrtc::SessionDescriptionInterface* desc) {

peer_connection_->SetLocalDescription(

DummySetSessionDescriptionObserver::Create(), desc);

std::string sdp;

desc->ToString(&sdp);

// For loopback test. To save some connecting delay.

if (loopback_) {

// Replace message type from "offer" to "answer"

std::unique_ptr<webrtc::SessionDescriptionInterface> session_description =

webrtc::CreateSessionDescription(webrtc::SdpType::kAnswer, sdp);

peer_connection_->SetRemoteDescription(  // Note this call

DummySetSessionDescriptionObserver::Create(),

session_description.release());

return;

}

Json::StyledWriter writer;

Json::Value jmessage;

jmessage[kSessionDescriptionTypeName] =

webrtc::SdpTypeToString(desc->GetType());

jmessage[kSessionDescriptionSdpName] = sdp;

SendMessage(writer.write(jmessage));

}

void PeerConnection::SetRemoteDescription(

SetSessionDescriptionObserver* observer,

SessionDescriptionInterface* desc) {

SetRemoteDescription(  // Note this call

std::unique_ptr<SessionDescriptionInterface>(desc),

rtc::scoped_refptr<SetRemoteDescriptionObserverInterface>(

new SetRemoteDescriptionObserverAdapter(this, observer)));

}
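The two functions above rely on an ownership hand-off: the loopback path re-labels the local offer's SDP as an answer, calls `release()` on the `std::unique_ptr`, and the legacy raw-pointer overload immediately re-wraps the pointer before forwarding. A minimal sketch of that pattern follows. `ToyDescription`, `ToyPeerConnection`, and `LoopbackApply` are hypothetical stand-ins invented for illustration, not WebRTC types.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for a session description (not the WebRTC type).
struct ToyDescription {
  std::string type;
  std::string sdp;
};

class ToyPeerConnection {
 public:
  // Newer-style overload: ownership is explicit in the signature.
  void SetRemoteDescription(std::unique_ptr<ToyDescription> desc) {
    remote_ = std::move(desc);
  }

  // Legacy-style overload: takes ownership of a raw pointer and forwards.
  void SetRemoteDescription(ToyDescription* desc) {
    SetRemoteDescription(std::unique_ptr<ToyDescription>(desc));
  }

  const ToyDescription* remote() const { return remote_.get(); }

 private:
  std::unique_ptr<ToyDescription> remote_;
};

// Re-label the offer's SDP text as an answer and hand it over via release(),
// mirroring the shape of the loopback branch.
inline void LoopbackApply(ToyPeerConnection& pc, const std::string& offer_sdp) {
  std::unique_ptr<ToyDescription> answer(
      new ToyDescription{"answer", offer_sdp});
  pc.SetRemoteDescription(answer.release());
}
```

Because the raw-pointer overload wraps its argument in a `std::unique_ptr` on its first line, the pointer is never left unowned, even though the call site uses `release()`.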

void PeerConnection::SetRemoteDescription(

std::unique_ptr<SessionDescriptionInterface> desc,

rtc::scoped_refptr<SetRemoteDescriptionObserverInterface> observer) {

RTC_DCHECK_RUN_ON(signaling_thread());

TRACE_EVENT0("webrtc", "PeerConnection::SetRemoteDescription");

if (!observer) {

RTC_LOG(LS_ERROR) << "SetRemoteDescription - observer is NULL.";

return;

}

if (!desc) {

observer->OnSetRemoteDescriptionComplete(RTCError(

RTCErrorType::INVALID_PARAMETER, "SessionDescription is NULL."));

return;

}

// If a session error has occurred the PeerConnection is in a possibly

// inconsistent state so fail right away.

if (session_error() != SessionError::kNone) {

std::string error_message = GetSessionErrorMsg();

RTC_LOG(LS_ERROR) << "SetRemoteDescription: " << error_message;

observer->OnSetRemoteDescriptionComplete(

RTCError(RTCErrorType::INTERNAL_ERROR, std::move(error_message)));

return;

}

if (IsUnifiedPlan()) {

if (configuration_.enable_implicit_rollback) {

if (desc->GetType() == SdpType::kOffer &&

signaling_state() == kHaveLocalOffer) {

Rollback();

}

}

// Explicit rollback.

if (desc->GetType() == SdpType::kRollback) {

observer->OnSetRemoteDescriptionComplete(Rollback());

return;

}

} else if (desc->GetType() == SdpType::kRollback) {

observer->OnSetRemoteDescriptionComplete(

RTCError(RTCErrorType::UNSUPPORTED_OPERATION,

"Rollback not supported in Plan B"));

return;

}

if (desc->GetType() == SdpType::kOffer) {

// Report to UMA the format of the received offer.

ReportSdpFormatReceived(*desc);

}

// Handle remote descriptions missing a=mid lines for interop with legacy end

// points.

FillInMissingRemoteMids(desc->description());

RTCError error = ValidateSessionDescription(desc.get(), cricket::CS_REMOTE);

if (!error.ok()) {

std::string error_message = GetSetDescriptionErrorMessage(

cricket::CS_REMOTE, desc->GetType(), error);

RTC_LOG(LS_ERROR) << error_message;

observer->OnSetRemoteDescriptionComplete(

RTCError(error.type(), std::move(error_message)));

return;

}

// Grab the description type before moving ownership to

// ApplyRemoteDescription, which may destroy it before returning.

const SdpType type = desc->GetType();

error = ApplyRemoteDescription(std::move(desc));  // Note this call

// |desc| may be destroyed at this point.

if (!error.ok()) {

// If ApplyRemoteDescription fails, the PeerConnection could be in an

// inconsistent state, so act conservatively here and set the session error

// so that future calls to SetLocalDescription/SetRemoteDescription fail.

SetSessionError(SessionError::kContent, error.message());

std::string error_message =

GetSetDescriptionErrorMessage(cricket::CS_REMOTE, type, error);

RTC_LOG(LS_ERROR) << error_message;

observer->OnSetRemoteDescriptionComplete(

RTCError(error.type(), std::move(error_message)));

return;

}

RTC_DCHECK(remote_description());

if (type == SdpType::kAnswer) {

// TODO(deadbeef): We already had to hop to the network thread for

// MaybeStartGathering...

network_thread()->Invoke<void>(

RTC_FROM_HERE, rtc::Bind(&cricket::PortAllocator::DiscardCandidatePool,

port_allocator_.get()));

// Make UMA notes about what was agreed to.

ReportNegotiatedSdpSemantics(*remote_description());

}

if (IsUnifiedPlan()) {

bool was_negotiation_needed = is_negotiation_needed_;

UpdateNegotiationNeeded();

if (signaling_state() == kStable && was_negotiation_needed &&

is_negotiation_needed_) {

Observer()->OnRenegotiationNeeded();

}

}

observer->OnSetRemoteDescriptionComplete(RTCError::OK());

NoteUsageEvent(UsageEvent::SET_REMOTE_DESCRIPTION_SUCCEEDED);

}
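The rollback branching near the top of `SetRemoteDescription` can be condensed into one decision function. The sketch below is a simplified model of that branching only. The enums `DescType`, `SigState`, `RollbackAction`, and the function `DecideRollback` are hypothetical names introduced here and do not exist in WebRTC.

```cpp
#include <cassert>

// Hypothetical, simplified enums for illustration.
enum class DescType { kOffer, kPrAnswer, kAnswer, kRollback };
enum class SigState { kStable, kHaveLocalOffer, kHaveRemoteOffer };

enum class RollbackAction {
  kNone,                  // No rollback, continue normally.
  kImplicitThenContinue,  // Roll back the local offer, then apply the remote offer.
  kExplicitAndReturn,     // Description itself is a rollback: roll back and return.
  kRejectUnsupported      // Plan B: rollback is reported as unsupported.
};

inline RollbackAction DecideRollback(bool unified_plan,
                                     bool implicit_rollback_enabled,
                                     DescType type,
                                     SigState state) {
  if (!unified_plan) {
    return type == DescType::kRollback ? RollbackAction::kRejectUnsupported
                                       : RollbackAction::kNone;
  }
  if (type == DescType::kRollback) {
    return RollbackAction::kExplicitAndReturn;
  }
  if (implicit_rollback_enabled && type == DescType::kOffer &&
      state == SigState::kHaveLocalOffer) {
    return RollbackAction::kImplicitThenContinue;
  }
  return RollbackAction::kNone;
}
```

The implicit case is the glare scenario: both sides sent an offer, and the local one is discarded so that the remote offer can be applied.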

RTCError PeerConnection::ApplyRemoteDescription(

std::unique_ptr<SessionDescriptionInterface> desc) {

RTC_DCHECK_RUN_ON(signaling_thread());

RTC_DCHECK(desc);

// Update stats here so that we have the most recent stats for tracks and

// streams that might be removed by updating the session description.

stats_->UpdateStats(kStatsOutputLevelStandard);

// Take a reference to the old remote description since it's used below to

// compare against the new remote description. When setting the new remote

// description, grab ownership of the replaced session description in case it

// is the same as |old_remote_description|, to keep it alive for the duration

// of the method.

const SessionDescriptionInterface* old_remote_description =

remote_description();

std::unique_ptr<SessionDescriptionInterface> replaced_remote_description;

SdpType type = desc->GetType();

if (type == SdpType::kAnswer) {

replaced_remote_description = pending_remote_description_

? std::move(pending_remote_description_)

: std::move(current_remote_description_);

current_remote_description_ = std::move(desc);

pending_remote_description_ = nullptr;

current_local_description_ = std::move(pending_local_description_);

} else {

replaced_remote_description = std::move(pending_remote_description_);

pending_remote_description_ = std::move(desc);

}

// The session description to apply now must be accessed by

// |remote_description()|.

RTC_DCHECK(remote_description());

// Report statistics about any use of simulcast.

ReportSimulcastApiVersion(kSimulcastVersionApplyRemoteDescription,

*remote_description()->description());

RTCError error = PushdownTransportDescription(cricket::CS_REMOTE, type);

if (!error.ok()) {

return error;

}

// Transport and Media channels will be created only when offer is set.

if (IsUnifiedPlan()) {

RTCError error = UpdateTransceiversAndDataChannels(

cricket::CS_REMOTE, *remote_description(), local_description(),

old_remote_description);

if (!error.ok()) {

return error;

}

} else {

// Media channels will be created only when offer is set. These may use new

// transports just created by PushdownTransportDescription.

if (type == SdpType::kOffer) {

// TODO(mallinath) - Handle CreateChannel failure, as new local

// description is applied. Restore back to old description.

RTCError error = CreateChannels(*remote_description()->description());

if (!error.ok()) {

return error;

}

}

// Remove unused channels if MediaContentDescription is rejected.

RemoveUnusedChannels(remote_description()->description());

}

// NOTE: Candidates allocation will be initiated only when

// SetLocalDescription is called.

error = UpdateSessionState(type, cricket::CS_REMOTE,

remote_description()->description());  // Note this call

if (!error.ok()) {

return error;

}

if (local_description() &&

!UseCandidatesInSessionDescription(remote_description())) {

LOG_AND_RETURN_ERROR(RTCErrorType::INVALID_PARAMETER, kInvalidCandidates);

}

if (old_remote_description) {

for (const cricket::ContentInfo& content :

old_remote_description->description()->contents()) {

// Check if this new SessionDescription contains new ICE ufrag and

// password that indicates the remote peer requests an ICE restart.

// TODO(deadbeef): When we start storing both the current and pending

// remote description, this should reset pending_ice_restarts and compare

// against the current description.

if (CheckForRemoteIceRestart(old_remote_description, remote_description(),

content.name)) {

if (type == SdpType::kOffer) {

pending_ice_restarts_.insert(content.name);

}

} else {

// We retain all received candidates only if ICE is not restarted.

// When ICE is restarted, all previous candidates belong to an old

// generation and should not be kept.

// TODO(deadbeef): This goes against the W3C spec which says the remote

// description should only contain candidates from the last set remote

// description plus any candidates added since then. We should remove

// this once we're sure it won't break anything.

WebRtcSessionDescriptionFactory::CopyCandidatesFromSessionDescription(

old_remote_description, content.name, mutable_remote_description());

}

}

}

if (session_error() != SessionError::kNone) {

LOG_AND_RETURN_ERROR(RTCErrorType::INTERNAL_ERROR, GetSessionErrorMsg());

}

// Set the ICE connection state to connecting since the connection may

// become writable with peer reflexive candidates before any remote candidate

// is signaled.

// TODO(pthatcher): This is a short-term solution for crbug/446908. A real fix

// is to have a new signal that indicates a change in checking state from the

// transport and expose a new checking() member from transport that can be

// read to determine the current checking state. The existing SignalConnecting

// actually means "gathering candidates", so cannot be used here.

if (remote_description()->GetType() != SdpType::kOffer &&

remote_description()->number_of_mediasections() > 0u &&

ice_connection_state() == PeerConnectionInterface::kIceConnectionNew) {

SetIceConnectionState(PeerConnectionInterface::kIceConnectionChecking);

}

// If setting the description decided our SSL role, allocate any necessary

// SCTP sids.

rtc::SSLRole role;

if (DataChannel::IsSctpLike(data_channel_type_) && GetSctpSslRole(&role)) {

AllocateSctpSids(role);

}

if (IsUnifiedPlan()) {

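std::vector<rtc::scoped_refptr<RtpTransceiverInterface>>

now_receiving_transceivers;

std::vector<rtc::scoped_refptr<RtpTransceiverInterface>> remove_list;

std::vector<rtc::scoped_refptr<MediaStreamInterface>> added_streams;

std::vector<rtc::scoped_refptr<MediaStreamInterface>> removed_streams;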

for (const auto& transceiver : transceivers_) {

const ContentInfo* content =

FindMediaSectionForTransceiver(transceiver, remote_description());

if (!content) {

continue;

}

const MediaContentDescription* media_desc = content->media_description();

RtpTransceiverDirection local_direction =

RtpTransceiverDirectionReversed(media_desc->direction());

// Roughly the same as steps 2.2.8.6 of section 4.4.1.6 "Set the

// RTCSessionDescription: Set the associated remote streams given

// transceiver.[[Receiver]], msids, addList, and removeList".

// https://w3c.github.io/webrtc-pc/#set-the-rtcsessiondescription

if (RtpTransceiverDirectionHasRecv(local_direction)) {

std::vector<std::string> stream_ids;

if (!media_desc->streams().empty()) {

// The remote description has signaled the stream IDs.

stream_ids = media_desc->streams()[0].stream_ids();

}

RTC_LOG(LS_INFO) << "Processing the MSIDs for MID=" << content->name

<< " (" << GetStreamIdsString(stream_ids) << ").";

SetAssociatedRemoteStreams(transceiver->internal()->receiver_internal(),

stream_ids, &added_streams,

&removed_streams);

// From the WebRTC specification, steps 2.2.8.5/6 of section 4.4.1.6

// "Set the RTCSessionDescription: If direction is sendrecv or recvonly,

// and transceiver's current direction is neither sendrecv nor recvonly,

// process the addition of a remote track for the media description.

if (!transceiver->fired_direction() ||

!RtpTransceiverDirectionHasRecv(*transceiver->fired_direction())) {

RTC_LOG(LS_INFO)

<< "Processing the addition of a remote track for MID="

<< content->name << ".";

now_receiving_transceivers.push_back(transceiver);

}

}

// 2.2.8.1.9: If direction is "sendonly" or "inactive", and transceiver's

// [[FiredDirection]] slot is either "sendrecv" or "recvonly", process the

// removal of a remote track for the media description, given transceiver,

// removeList, and muteTracks.

if (!RtpTransceiverDirectionHasRecv(local_direction) &&

(transceiver->fired_direction() &&

RtpTransceiverDirectionHasRecv(*transceiver->fired_direction()))) {

ProcessRemovalOfRemoteTrack(transceiver, &remove_list,

&removed_streams);

}

// 2.2.8.1.10: Set transceiver's [[FiredDirection]] slot to direction.

transceiver->internal()->set_fired_direction(local_direction);

// 2.2.8.1.11: If description is of type "answer" or "pranswer", then run

// the following steps:

if (type == SdpType::kPrAnswer || type == SdpType::kAnswer) {

// 2.2.8.1.11.1: Set transceiver's [[CurrentDirection]] slot to

// direction.

transceiver->internal()->set_current_direction(local_direction);

// 2.2.8.1.11.[3-6]: Set the transport internal slots.

if (transceiver->mid()) {

auto dtls_transport =

LookupDtlsTransportByMidInternal(*transceiver->mid());

transceiver->internal()->sender_internal()->set_transport(

dtls_transport);

transceiver->internal()->receiver_internal()->set_transport(

dtls_transport);

}

}

// 2.2.8.1.12: If the media description is rejected, and transceiver is

// not already stopped, stop the RTCRtpTransceiver transceiver.

if (content->rejected && !transceiver->stopped()) {

RTC_LOG(LS_INFO) << "Stopping transceiver for MID=" << content->name

<< " since the media section was rejected.";

transceiver->Stop();

}

if (!content->rejected &&

RtpTransceiverDirectionHasRecv(local_direction)) {

if (!media_desc->streams().empty() &&

media_desc->streams()[0].has_ssrcs()) {

uint32_t ssrc = media_desc->streams()[0].first_ssrc();

transceiver->internal()->receiver_internal()->SetupMediaChannel(ssrc);

} else {

transceiver->internal()

->receiver_internal()

->SetupUnsignaledMediaChannel();

}

}

}

// Once all processing has finished, fire off callbacks.

auto observer = Observer();

for (const auto& transceiver : now_receiving_transceivers) {

stats_->AddTrack(transceiver->receiver()->track());

observer->OnTrack(transceiver);

observer->OnAddTrack(transceiver->receiver(),

transceiver->receiver()->streams());

}

for (const auto& stream : added_streams) {

observer->OnAddStream(stream);

}

for (const auto& transceiver : remove_list) {

observer->OnRemoveTrack(transceiver->receiver());

}

for (const auto& stream : removed_streams) {

observer->OnRemoveStream(stream);

}

}

const cricket::ContentInfo* audio_content =

GetFirstAudioContent(remote_description()->description());

const cricket::ContentInfo* video_content =

GetFirstVideoContent(remote_description()->description());

const cricket::AudioContentDescription* audio_desc =

GetFirstAudioContentDescription(remote_description()->description());

const cricket::VideoContentDescription* video_desc =

GetFirstVideoContentDescription(remote_description()->description());

const cricket::RtpDataContentDescription* rtp_data_desc =

GetFirstRtpDataContentDescription(remote_description()->description());

// Check if the descriptions include streams, just in case the peer supports

// MSID, but doesn't indicate so with "a=msid-semantic".

if (remote_description()->description()->msid_supported() ||

(audio_desc && !audio_desc->streams().empty()) ||

(video_desc && !video_desc->streams().empty())) {

remote_peer_supports_msid_ = true;

}

// We wait to signal new streams until we finish processing the description,

// since only at that point will new streams have all their tracks.

rtc::scoped_refptr<StreamCollection> new_streams(StreamCollection::Create());

if (!IsUnifiedPlan()) {

// TODO(steveanton): When removing RTP senders/receivers in response to a

// rejected media section, there is some cleanup logic that expects the

// voice/ video channel to still be set. But in this method the voice/video

// channel would have been destroyed by the SetRemoteDescription caller

// above so the cleanup that relies on them fails to run. The RemoveSenders

// calls should be moved to right before the DestroyChannel calls to fix

// this.

// Find all audio rtp streams and create corresponding remote AudioTracks

// and MediaStreams.

if (audio_content) {

if (audio_content->rejected) {

RemoveSenders(cricket::MEDIA_TYPE_AUDIO);

} else {

bool default_audio_track_needed =

!remote_peer_supports_msid_ &&

RtpTransceiverDirectionHasSend(audio_desc->direction());

UpdateRemoteSendersList(GetActiveStreams(audio_desc),

default_audio_track_needed, audio_desc->type(),

new_streams);

}

}

// Find all video rtp streams and create corresponding remote VideoTracks

// and MediaStreams.

if (video_content) {

if (video_content->rejected) {

RemoveSenders(cricket::MEDIA_TYPE_VIDEO);

} else {

bool default_video_track_needed =

!remote_peer_supports_msid_ &&

RtpTransceiverDirectionHasSend(video_desc->direction());

UpdateRemoteSendersList(GetActiveStreams(video_desc),

default_video_track_needed, video_desc->type(),

new_streams);

}

}

// If this is an RTP data transport, update the DataChannels with the

// information from the remote peer.

if (rtp_data_desc) {

UpdateRemoteRtpDataChannels(GetActiveStreams(rtp_data_desc));

}

// Iterate new_streams and notify the observer about new MediaStreams.

auto observer = Observer();

for (size_t i = 0; i < new_streams->count(); ++i) {

MediaStreamInterface* new_stream = new_streams->at(i);

stats_->AddStream(new_stream);

observer->OnAddStream(

rtc::scoped_refptr<MediaStreamInterface>(new_stream));

}

UpdateEndedRemoteMediaStreams();

}

if (type == SdpType::kAnswer &&

local_ice_credentials_to_replace_->SatisfiesIceRestart(

*current_local_description_)) {

local_ice_credentials_to_replace_->ClearIceCredentials();

}

return RTCError::OK();

}
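The first part of `ApplyRemoteDescription` shuffles the pending and current description slots: an answer promotes the new description to "current" and also promotes the pending local description, while any other type only replaces the pending remote slot. The sketch below models those moves with strings in place of descriptions. `ToySlots` and its members are hypothetical, and the `remote()` accessor (pending if set, otherwise current) is an assumption about how the active description is chosen.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical model: each slot holds a string instead of a real description.
struct ToySlots {
  std::unique_ptr<std::string> current_local;
  std::unique_ptr<std::string> pending_local;
  std::unique_ptr<std::string> current_remote;
  std::unique_ptr<std::string> pending_remote;

  // Assumed accessor: the pending description wins when one is set.
  const std::string* remote() const {
    return pending_remote ? pending_remote.get() : current_remote.get();
  }

  // Returns the replaced description so the caller can keep it alive while
  // it is still being compared against the new one.
  std::unique_ptr<std::string> ApplyRemote(bool is_answer,
                                           std::unique_ptr<std::string> desc) {
    std::unique_ptr<std::string> replaced;
    if (is_answer) {
      replaced = pending_remote ? std::move(pending_remote)
                                : std::move(current_remote);
      current_remote = std::move(desc);
      pending_remote = nullptr;
      current_local = std::move(pending_local);  // Local offer becomes current.
    } else {
      replaced = std::move(pending_remote);
      pending_remote = std::move(desc);
    }
    return replaced;
  }
};
```

Returning the replaced object, rather than letting it be destroyed inside the assignment, is what lets a raw pointer to the old description stay valid for the rest of the method.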

RTCError PeerConnection::UpdateSessionState(

SdpType type,

cricket::ContentSource source,

const cricket::SessionDescription* description) {

RTC_DCHECK_RUN_ON(signaling_thread());

// If there's already a pending error then no state transition should happen.

// But all call-sites should be verifying this before calling us!

RTC_DCHECK(session_error() == SessionError::kNone);

// If this is answer-ish we're ready to let media flow.

if (type == SdpType::kPrAnswer || type == SdpType::kAnswer) {

EnableSending();

}

// Update the signaling state according to the specified state machine (see

// https://w3c.github.io/webrtc-pc/#rtcsignalingstate-enum).

if (type == SdpType::kOffer) {

ChangeSignalingState(source == cricket::CS_LOCAL

? PeerConnectionInterface::kHaveLocalOffer

: PeerConnectionInterface::kHaveRemoteOffer);

} else if (type == SdpType::kPrAnswer) {

ChangeSignalingState(source == cricket::CS_LOCAL

? PeerConnectionInterface::kHaveLocalPrAnswer

: PeerConnectionInterface::kHaveRemotePrAnswer);

} else {

RTC_DCHECK(type == SdpType::kAnswer);

ChangeSignalingState(PeerConnectionInterface::kStable);

transceiver_stable_states_by_transceivers_.clear();

}

// Update internal objects according to the session description's media

// descriptions.

RTCError error = PushdownMediaDescription(type, source);  // Note this call

if (!error.ok()) {

return error;

}

return RTCError::OK();

}
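The signaling-state update in `UpdateSessionState` depends only on the description type and on whether it is local or remote. The sketch below restates that mapping as a pure function. The enum and function names (`OfferAnswerType`, `Source`, `SignalingState`, `NextSignalingState`, `EnablesSending`) are hypothetical and chosen for illustration.

```cpp
#include <cassert>

// Hypothetical enums mirroring the shape of the state machine.
enum class OfferAnswerType { kOffer, kPrAnswer, kAnswer };
enum class Source { kLocal, kRemote };
enum class SignalingState {
  kStable,
  kHaveLocalOffer,
  kHaveRemoteOffer,
  kHaveLocalPrAnswer,
  kHaveRemotePrAnswer
};

// Answers and provisional answers are the point at which media may flow.
inline bool EnablesSending(OfferAnswerType type) {
  return type == OfferAnswerType::kPrAnswer || type == OfferAnswerType::kAnswer;
}

inline SignalingState NextSignalingState(OfferAnswerType type, Source source) {
  switch (type) {
    case OfferAnswerType::kOffer:
      return source == Source::kLocal ? SignalingState::kHaveLocalOffer
                                      : SignalingState::kHaveRemoteOffer;
    case OfferAnswerType::kPrAnswer:
      return source == Source::kLocal ? SignalingState::kHaveLocalPrAnswer
                                      : SignalingState::kHaveRemotePrAnswer;
    case OfferAnswerType::kAnswer:
      return SignalingState::kStable;  // A final answer always ends in stable.
  }
  return SignalingState::kStable;  // Unreachable; keeps compilers quiet.
}
```

In the loopback case traced in this post, the remote description is an answer, so the result is `kStable` and sending is enabled.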

RTCError PeerConnection::PushdownMediaDescription(

SdpType type,

cricket::ContentSource source) {

const SessionDescriptionInterface* sdesc =

(source == cricket::CS_LOCAL ? local_description()

: remote_description());

RTC_DCHECK(sdesc);

// Push down the new SDP media section for each audio/video transceiver.

for (const auto& transceiver : transceivers_) {

const ContentInfo* content_info =

FindMediaSectionForTransceiver(transceiver, sdesc);

cricket::ChannelInterface* channel = transceiver->internal()->channel();  // transceiver->internal() returns a pointer to the RtpTransceiver

if (!channel || !content_info || content_info->rejected) {  // For video, transceiver->internal()->channel() returns a cricket::VideoChannel*; that object wraps cricket::WebRtcVideoChannel, a subclass of cricket::VideoMediaChannel

continue;  // For audio, transceiver->internal()->channel() returns a cricket::VoiceChannel*; that object wraps cricket::WebRtcVoiceMediaChannel, a subclass of cricket::VoiceMediaChannel

}

const MediaContentDescription* content_desc =

content_info->media_description();

if (!content_desc) {

continue;

}

std::string error;

bool success = (source == cricket::CS_LOCAL)

? channel->SetLocalContent(content_desc, type, &error)

: channel->SetRemoteContent(content_desc, type, &error);  // Note this call

if (!success) {

LOG_AND_RETURN_ERROR(RTCErrorType::INVALID_PARAMETER, error);

}

}

// If using the RtpDataChannel, push down the new SDP section for it too.

if (rtp_data_channel_) {

const ContentInfo* data_content =

cricket::GetFirstDataContent(sdesc->description());

if (data_content && !data_content->rejected) {

const MediaContentDescription* data_desc =

data_content->media_description();

if (data_desc) {

std::string error;

bool success =

(source == cricket::CS_LOCAL)

? rtp_data_channel_->SetLocalContent(data_desc, type, &error)

: rtp_data_channel_->SetRemoteContent(data_desc, type, &error);

if (!success) {

LOG_AND_RETURN_ERROR(RTCErrorType::INVALID_PARAMETER, error);

}

}

}

}

// Need complete offer/answer with an SCTP m= section before starting SCTP,

// according to https://tools.ietf.org/html/draft-ietf-mmusic-sctp-sdp-19

if (sctp_mid_ && local_description() && remote_description()) {

rtc::scoped_refptr<SctpTransport> sctp_transport =

transport_controller_->GetSctpTransport(*sctp_mid_);

auto local_sctp_description = cricket::GetFirstSctpDataContentDescription(

local_description()->description());

auto remote_sctp_description = cricket::GetFirstSctpDataContentDescription(

remote_description()->description());

if (sctp_transport && local_sctp_description && remote_sctp_description) {

int max_message_size;

// A remote max message size of zero means "any size supported".

// We configure the connection with our own max message size.

if (remote_sctp_description->max_message_size() == 0) {

max_message_size = local_sctp_description->max_message_size();

} else {

max_message_size =

std::min(local_sctp_description->max_message_size(),

remote_sctp_description->max_message_size());

}

sctp_transport->Start(local_sctp_description->port(),

remote_sctp_description->port(), max_message_size);

}

}

return RTCError::OK();

}
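The last block of `PushdownMediaDescription` picks the SCTP maximum message size: a remote value of zero means "any size", so the local limit is used, otherwise the smaller of the two limits applies. A minimal sketch of that rule as a standalone function follows. `NegotiateMaxMessageSize` is a hypothetical name, and the sizes in the usage note are arbitrary example values.

```cpp
#include <algorithm>
#include <cassert>

// Returns the max message size to configure on the SCTP transport.
// A remote value of 0 is treated as "no limit advertised by the peer".
inline int NegotiateMaxMessageSize(int local_max, int remote_max) {
  if (remote_max == 0) {
    return local_max;
  }
  return std::min(local_max, remote_max);
}
```

For example, `NegotiateMaxMessageSize(262144, 0)` yields the local limit, while `NegotiateMaxMessageSize(262144, 65536)` yields the remote one.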

bool BaseChannel::SetRemoteContent(const MediaContentDescription* content,

SdpType type,

std::string* error_desc) {

TRACE_EVENT0("webrtc", "BaseChannel::SetRemoteContent");

return InvokeOnWorker<bool>(

RTC_FROM_HERE,

Bind(&BaseChannel::SetRemoteContent_w, this, content, type, error_desc));  // BaseChannel::SetRemoteContent_w is a pure virtual function, so this call is dispatched polymorphically

}
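`BaseChannel::SetRemoteContent` follows a common shape: a public, non-virtual entry point marshals the call to the worker thread, and a pure virtual `_w` method supplies the media-specific work. The sketch below shows that shape without any real threading. `ToyBaseChannel`, `ToyVoiceChannel`, and the inline `InvokeOnWorker` are hypothetical stand-ins; in particular, the stand-in runs the task on the calling thread, which the real code does not.

```cpp
#include <cassert>
#include <functional>
#include <string>

class ToyBaseChannel {
 public:
  virtual ~ToyBaseChannel() = default;

  // Public entry point: the same for every media type.
  bool SetRemoteContent(const std::string& content, std::string* error) {
    return InvokeOnWorker([&] { return SetRemoteContent_w(content, error); });
  }

 protected:
  // Media-specific step, resolved at run time through the vtable.
  virtual bool SetRemoteContent_w(const std::string& content,
                                  std::string* error) = 0;

 private:
  // Stand-in for a blocking cross-thread call; here it simply runs inline.
  bool InvokeOnWorker(const std::function<bool()>& task) { return task(); }
};

class ToyVoiceChannel : public ToyBaseChannel {
 public:
  const std::string& last_content() const { return last_content_; }

 protected:
  bool SetRemoteContent_w(const std::string& content,
                          std::string* error) override {
    if (content.empty()) {
      if (error) *error = "Can't find audio content in remote description.";
      return false;
    }
    last_content_ = content;
    return true;
  }

 private:
  std::string last_content_;
};
```

A caller holding only a `ToyBaseChannel&` reaches the voice-specific override, which is the same dispatch that takes the real call into `VoiceChannel::SetRemoteContent_w`.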

bool VoiceChannel::SetRemoteContent_w(const MediaContentDescription* content,

SdpType type,

std::string* error_desc) {

TRACE_EVENT0("webrtc", "VoiceChannel::SetRemoteContent_w");

RTC_DCHECK_RUN_ON(worker_thread());

RTC_LOG(LS_INFO) << "Setting remote voice description";

RTC_DCHECK(content);

if (!content) {

SafeSetError("Can't find audio content in remote description.", error_desc);

return false;

}

const AudioContentDescription* audio = content->as_audio();

RtpHeaderExtensions rtp_header_extensions =

GetFilteredRtpHeaderExtensions(audio->rtp_header_extensions());

AudioSendParameters send_params = last_send_params_;

RtpSendParametersFromMediaDescription(audio, rtp_header_extensions,

&send_params);

send_params.mid = content_name();

bool parameters_applied = media_channel()->SetSendParameters(send_params);

if (!parameters_applied) {

SafeSetError("Failed to set remote audio description send parameters.",

error_desc);

return false;

}

last_send_params_ = send_params;

if (!webrtc::RtpTransceiverDirectionHasSend(content->direction())) {

RTC_DLOG(LS_VERBOSE) << "SetRemoteContent_w: remote side will not send - "

"disable payload type demuxing";

ClearHandledPayloadTypes();

if (!RegisterRtpDemuxerSink()) {

RTC_LOG(LS_ERROR) << "Failed to update audio demuxing.";

return false;

}

}

// TODO(pthatcher): Move remote streams into AudioRecvParameters,

// and only give it to the media channel once we have a local

// description too (without a local description, we won't be able to

// recv them anyway).

if (!UpdateRemoteStreams_w(audio->streams(), type, error_desc)) {  // Note this call

SafeSetError("Failed to set remote audio description streams.", error_desc);

return false;

}

set_remote_content_direction(content->direction());

UpdateMediaSendRecvState_w();

return true;

}

bool BaseChannel::UpdateRemoteStreams_w(

const std::vector<StreamParams>& streams,

SdpType type,

std::string* error_desc) {

// Check for streams that have been removed.

bool ret = true;

for (const StreamParams& old_stream : remote_streams_) {

// If we no longer have an unsignaled stream, we would like to remove

// the unsignaled stream params that are cached.

if (!old_stream.has_ssrcs() && !HasStreamWithNoSsrcs(streams)) {

ResetUnsignaledRecvStream_w();

RTC_LOG(LS_INFO) << "Reset unsignaled remote stream.";

} else if (old_stream.has_ssrcs() &&

!GetStreamBySsrc(streams, old_stream.first_ssrc())) {

if (RemoveRecvStream_w(old_stream.first_ssrc())) {

RTC_LOG(LS_INFO) << "Remove remote ssrc: " << old_stream.first_ssrc();

} else {

rtc::StringBuilder desc;

desc << "Failed to remove remote stream with ssrc "

<< old_stream.first_ssrc() << ".";

SafeSetError(desc.str(), error_desc);

ret = false;

}

}

}

demuxer_criteria_.ssrcs.clear();

// Check for new streams.

for (const StreamParams& new_stream : streams) {

// We allow a StreamParams with an empty list of SSRCs, in which case the

// MediaChannel will cache the parameters and use them for any unsignaled

// stream received later.

if ((!new_stream.has_ssrcs() && !HasStreamWithNoSsrcs(remote_streams_)) ||

!GetStreamBySsrc(remote_streams_, new_stream.first_ssrc())) {

if (AddRecvStream_w(new_stream)) {  // Note this call

RTC_LOG(LS_INFO) << "Add remote ssrc: "

<< (new_stream.has_ssrcs()

? std::to_string(new_stream.first_ssrc())

: "unsignaled");

} else {

rtc::StringBuilder desc;

desc << "Failed to add remote stream ssrc: "

<< (new_stream.has_ssrcs()

? std::to_string(new_stream.first_ssrc())

: "unsignaled");

SafeSetError(desc.str(), error_desc);

ret = false;

}

}

// Update the receiving SSRCs.

demuxer_criteria_.ssrcs.insert(new_stream.ssrcs.begin(),

new_stream.ssrcs.end());

}

// Re-register the sink to update the receiving ssrcs.

RegisterRtpDemuxerSink();

remote_streams_ = streams;

return ret;

}
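`UpdateRemoteStreams_w` is essentially a set difference keyed by SSRC: streams present before but absent now are removed, streams absent before but present now are added, and the demuxer criteria are rebuilt from the new set. The sketch below shows only that core. It assumes one SSRC per stream and ignores the unsignaled-stream cases. `StreamDiff` and `DiffRemoteStreams` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <set>
#include <vector>

struct StreamDiff {
  std::vector<uint32_t> removed;   // SSRCs whose receive stream should go away.
  std::vector<uint32_t> added;     // SSRCs that need a new receive stream.
  std::set<uint32_t> demux_ssrcs;  // SSRCs the demuxer should route afterwards.
};

inline StreamDiff DiffRemoteStreams(const std::set<uint32_t>& old_ssrcs,
                                    const std::set<uint32_t>& new_ssrcs) {
  StreamDiff diff;
  // Pass 1: anything we had that the new description no longer lists.
  for (uint32_t ssrc : old_ssrcs) {
    if (new_ssrcs.count(ssrc) == 0) {
      diff.removed.push_back(ssrc);
    }
  }
  // Pass 2: anything new, plus rebuilding the demuxer criteria from scratch.
  for (uint32_t ssrc : new_ssrcs) {
    if (old_ssrcs.count(ssrc) == 0) {
      diff.added.push_back(ssrc);
    }
    diff.demux_ssrcs.insert(ssrc);
  }
  return diff;
}
```

On the first remote description the old set is empty, so every signaled SSRC lands in `added`, which is the path that leads to `AddRecvStream_w`.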

bool BaseChannel::AddRecvStream_w(const StreamParams& sp) {

RTC_DCHECK(worker_thread() == rtc::Thread::Current());

return media_channel()->AddRecvStream(sp);

}

bool WebRtcVoiceMediaChannel::AddRecvStream(const StreamParams& sp) {

TRACE_EVENT0("webrtc", "WebRtcVoiceMediaChannel::AddRecvStream");

RTC_DCHECK(worker_thread_checker_.IsCurrent());

RTC_LOG(LS_INFO) << "AddRecvStream: " << sp.ToString();

if (!sp.has_ssrcs()) {

// This is a StreamParam with unsignaled SSRCs. Store it, so it can be used

// later when we know the SSRCs on the first packet arrival.

unsignaled_stream_params_ = sp;

return true;

}

if (!ValidateStreamParams(sp)) {

return false;

}

const uint32_t ssrc = sp.first_ssrc();

// If this stream was previously received unsignaled, we promote it, possibly

// recreating the AudioReceiveStream, if stream ids have changed.

if (MaybeDeregisterUnsignaledRecvStream(ssrc)) {

recv_streams_[ssrc]->MaybeRecreateAudioReceiveStream(sp.stream_ids());

return true;

}

if (recv_streams_.find(ssrc) != recv_streams_.end()) {

RTC_LOG(LS_ERROR) << "Stream already exists with ssrc " << ssrc;

return false;

}

// Create a new channel for receiving audio data.

recv_streams_.insert(std::make_pair(

ssrc, new WebRtcAudioReceiveStream(  // Note this call

ssrc, receiver_reports_ssrc_, recv_transport_cc_enabled_,

recv_nack_enabled_, sp.stream_ids(), recv_rtp_extensions_,

call_, this, media_transport_config(),  // WebRtcVoiceMediaChannel inherits from webrtc::Transport

engine()->decoder_factory_, decoder_map_, codec_pair_id_,  // engine()->decoder_factory_ is the return value of webrtc::CreateBuiltinAudioDecoderFactory() in the webrtc::CreatePeerConnectionFactory call

engine()->audio_jitter_buffer_max_packets_,  // The return value of webrtc::CreateBuiltinAudioDecoderFactory() is

engine()->audio_jitter_buffer_fast_accelerate_,

engine()->audio_jitter_buffer_min_delay_ms_,

engine()->audio_jitter_buffer_enable_rtx_handling_,

unsignaled_frame_decryptor_, crypto_options_)));

recv_streams_[ssrc]->SetPlayout(playout_);  // Note this call: WebRtcAudioReceiveStream::SetPlayout

return true;

}
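`AddRecvStream` has two bookkeeping rules that are easy to miss in the full listing: parameters without SSRCs are cached for a later unsignaled stream instead of creating a receive stream, and a second stream with an already known SSRC is rejected. The sketch below models just those two rules and leaves out the promotion of previously unsignaled streams. `ToyStreamParams` and `ToyVoiceReceiver` are hypothetical types.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Hypothetical, reduced version of stream parameters.
struct ToyStreamParams {
  std::string id;
  std::vector<uint32_t> ssrcs;
  bool has_ssrcs() const { return !ssrcs.empty(); }
  uint32_t first_ssrc() const { return ssrcs.front(); }
};

class ToyVoiceReceiver {
 public:
  bool AddRecvStream(const ToyStreamParams& sp) {
    if (!sp.has_ssrcs()) {
      // No SSRC signaled: remember the parameters for the first packet
      // that arrives with an unknown SSRC.
      unsignaled_params_ = sp;
      has_unsignaled_params_ = true;
      return true;
    }
    const uint32_t ssrc = sp.first_ssrc();
    if (recv_streams_.find(ssrc) != recv_streams_.end()) {
      return false;  // Duplicate SSRC: a stream already exists.
    }
    recv_streams_.emplace(ssrc, sp.id);
    return true;
  }

  std::size_t stream_count() const { return recv_streams_.size(); }
  bool has_unsignaled_params() const { return has_unsignaled_params_; }

 private:
  std::map<uint32_t, std::string> recv_streams_;
  ToyStreamParams unsignaled_params_;
  bool has_unsignaled_params_ = false;
};
```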

// Audio decoder

rtc::scoped_refptr<AudioDecoderFactory> CreateBuiltinAudioDecoderFactory() {

return CreateAudioDecoderFactory<

#if WEBRTC_USE_BUILTIN_OPUS

AudioDecoderOpus, NotAdvertised<AudioDecoderMultiChannelOpus>,

#endif

AudioDecoderIsac, AudioDecoderG722,

#if WEBRTC_USE_BUILTIN_ILBC

AudioDecoderIlbc,

#endif

AudioDecoderG711, NotAdvertised<AudioDecoderL16>>();

}

template <typename... Ts>

rtc::scoped_refptr<AudioDecoderFactory> CreateAudioDecoderFactory() {

// There's no technical reason we couldn't allow zero template parameters,

// but such a factory couldn't create any decoders, and callers can do this

// by mistake by simply forgetting the <> altogether. So we forbid it in

// order to prevent caller foot-shooting.

static_assert(sizeof...(Ts) >= 1,

"Caller must give at least one template parameter");

return rtc::scoped_refptr<AudioDecoderFactory>(

new rtc::RefCountedObject<

audio_decoder_factory_template_impl::AudioDecoderFactoryT<Ts...>>());

}

template <typename... Ts>

class AudioDecoderFactoryT : public AudioDecoderFactory {

public:

std::vector<AudioCodecSpec> GetSupportedDecoders() override {

std::vector<AudioCodecSpec> specs;

Helper<Ts...>::AppendSupportedDecoders(&specs);

return specs;

}

bool IsSupportedDecoder(const SdpAudioFormat& format) override {

return Helper<Ts...>::IsSupportedDecoder(format);

}

std::unique_ptr<AudioDecoder> MakeAudioDecoder(

const SdpAudioFormat& format,

absl::optional<AudioCodecPairId> codec_pair_id) override {

return Helper<Ts...>::MakeAudioDecoder(format, codec_pair_id);

}

};
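`AudioDecoderFactoryT` forwards every call to a `Helper` that is parameterized on the whole list of decoder types. One plausible way such a helper can work is recursion over the parameter pack, with an empty-pack specialization as the base case. The sketch below illustrates that technique with toy codec traits. `ToyOpus`, `ToyG711`, `ToyHelper`, and `ToyDecoderFactory` are hypothetical, and the real helper's interface may differ.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical codec traits: each type exposes the same static interface.
struct ToyOpus {
  static void AppendSupported(std::vector<std::string>* specs) {
    specs->push_back("opus");
  }
  static bool IsSupported(const std::string& name) { return name == "opus"; }
};

struct ToyG711 {
  static void AppendSupported(std::vector<std::string>* specs) {
    specs->push_back("PCMU");
  }
  static bool IsSupported(const std::string& name) { return name == "PCMU"; }
};

template <typename... Ts>
struct ToyHelper;

// Base case: no codec types left.
template <>
struct ToyHelper<> {
  static void AppendSupported(std::vector<std::string>*) {}
  static bool IsSupported(const std::string&) { return false; }
};

// Recursive case: handle the first type, then recurse on the rest.
template <typename T, typename... Ts>
struct ToyHelper<T, Ts...> {
  static void AppendSupported(std::vector<std::string>* specs) {
    T::AppendSupported(specs);
    ToyHelper<Ts...>::AppendSupported(specs);
  }
  static bool IsSupported(const std::string& name) {
    return T::IsSupported(name) || ToyHelper<Ts...>::IsSupported(name);
  }
};

template <typename... Ts>
class ToyDecoderFactory {
 public:
  static_assert(sizeof...(Ts) >= 1,
                "Caller must give at least one template parameter");

  std::vector<std::string> GetSupported() const {
    std::vector<std::string> specs;
    ToyHelper<Ts...>::AppendSupported(&specs);
    return specs;
  }

  bool IsSupported(const std::string& name) const {
    return ToyHelper<Ts...>::IsSupported(name);
  }
};
```

The order of the template arguments determines the order of the resulting list, so the position of each codec in the type list matters to callers that treat the list as a preference order.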

class WebRtcVoiceMediaChannel::WebRtcAudioReceiveStream {

public:

WebRtcAudioReceiveStream(

uint32_t remote_ssrc,

uint32_t local_ssrc,

bool use_transport_cc,

bool use_nack,

const std::vector<std::string>& stream_ids,

const std::vector<webrtc::RtpExtension>& extensions,

webrtc::Call* call,

webrtc::Transport* rtcp_send_transport,  // rtcp_send_transport is the this pointer of WebRtcVoiceMediaChannel

const webrtc::MediaTransportConfig& media_transport_config,

const rtc::scoped_refptr<webrtc::AudioDecoderFactory>& decoder_factory,  // See CreateBuiltinAudioDecoderFactory() for details

const std::map<int, webrtc::SdpAudioFormat>& decoder_map,

absl::optional<webrtc::AudioCodecPairId> codec_pair_id,

size_t jitter_buffer_max_packets,

bool jitter_buffer_fast_accelerate,

int jitter_buffer_min_delay_ms,

bool jitter_buffer_enable_rtx_handling,

rtc::scoped_refptr<webrtc::FrameDecryptorInterface> frame_decryptor,

const webrtc::CryptoOptions& crypto_options)

: call_(call), config_() {

RTC_DCHECK(call);

config_.rtp.remote_ssrc = remote_ssrc;

config_.rtp.local_ssrc = local_ssrc;

config_.rtp.transport_cc = use_transport_cc;

config_.rtp.nack.rtp_history_ms = use_nack ? kNackRtpHistoryMs : 0;

config_.rtp.extensions = extensions;

config_.rtcp_send_transport = rtcp_send_transport;  // rtcp_send_transport is the this pointer of WebRtcVoiceMediaChannel

config_.media_transport_config = media_transport_config;

config_.jitter_buffer_max_packets = jitter_buffer_max_packets;

config_.jitter_buffer_fast_accelerate = jitter_buffer_fast_accelerate;

config_.jitter_buffer_min_delay_ms = jitter_buffer_min_delay_ms;

config_.jitter_buffer_enable_rtx_handling =

jitter_buffer_enable_rtx_handling;

if (!stream_ids.empty()) {

config_.sync_group = stream_ids[0];

}

config_.decoder_factory = decoder_factory;  // For how decoder_factory is created, see CreateBuiltinAudioDecoderFactory()

config_.decoder_map = decoder_map;

config_.codec_pair_id = codec_pair_id;

config_.frame_decryptor = frame_decryptor;

config_.crypto_options = crypto_options;

RecreateAudioReceiveStream();  // Note this call

}

// WebRtcVoiceMediaChannel::WebRtcAudioReceiveStream::RecreateAudioReceiveStream

void RecreateAudioReceiveStream() {  // Compare with WebRtcVideoChannel::WebRtcVideoReceiveStream::RecreateWebRtcVideoStream

RTC_DCHECK(worker_thread_checker_.IsCurrent());

if (stream_) {

call_->DestroyAudioReceiveStream(stream_);

}

stream_ = call_->CreateAudioReceiveStream(config_); // stream_ actually points to a webrtc::internal::AudioReceiveStream

RTC_CHECK(stream_);

stream_->SetGain(output_volume_);

SetPlayout(playout_);

stream_->SetSink(raw_audio_sink_.get());

}
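RecreateAudioReceiveStream() destroys the old stream, creates a new one from the stored config, and then reapplies the cached gain and playout state. A minimal sketch of that pattern follows. `FakeCall`, `FakeStream`, and `StreamWrapper` are hypothetical stand-ins for illustration, not WebRTC types.

```cpp
#include <cassert>

// Hypothetical stand-in for the stream object owned by the call.
struct FakeStream {
  float gain = 1.0f;
  bool playing = false;
};

// Hypothetical stand-in for webrtc::Call: it creates and destroys streams.
struct FakeCall {
  int created = 0;
  int destroyed = 0;
  FakeStream* CreateStream() {
    ++created;
    return new FakeStream();
  }
  void DestroyStream(FakeStream* stream) {
    ++destroyed;
    delete stream;
  }
};

// Caches user-visible state so it survives a stream recreation.
class StreamWrapper {
 public:
  explicit StreamWrapper(FakeCall* call) : call_(call) {
    assert(call_);
    Recreate();
  }
  ~StreamWrapper() {
    if (stream_) call_->DestroyStream(stream_);
  }
  StreamWrapper(const StreamWrapper&) = delete;
  StreamWrapper& operator=(const StreamWrapper&) = delete;

  void SetGain(float gain) {
    gain_ = gain;
    stream_->gain = gain;
  }
  void SetPlayout(bool playout) {
    playout_ = playout;
    stream_->playing = playout;
  }

  // Destroy the old stream (if any), create a new one, reapply cached state.
  void Recreate() {
    if (stream_) call_->DestroyStream(stream_);
    stream_ = call_->CreateStream();
    assert(stream_);
    stream_->gain = gain_;
    stream_->playing = playout_;
  }

  const FakeStream& stream() const { return *stream_; }

 private:
  FakeCall* call_;
  FakeStream* stream_ = nullptr;
  float gain_ = 1.0f;
  bool playout_ = false;
};
```

Because the wrapper keeps its own copy of the gain and playout flag, callers do not need to know that the underlying stream was replaced.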

webrtc::AudioReceiveStream* Call::CreateAudioReceiveStream(

const webrtc::AudioReceiveStream::Config& config) {

TRACE_EVENT0("webrtc", "Call::CreateAudioReceiveStream");

RTC_DCHECK_RUN_ON(&configuration_sequence_checker_);

RegisterRateObserver();

event_log_->Log(std::make_unique<RtcEventAudioReceiveStreamConfig>(

CreateRtcLogStreamConfig(config)));

AudioReceiveStream* receive_stream = new AudioReceiveStream( // note this call

clock_, &audio_receiver_controller_, transport_send_ptr_->packet_router(), // RtpStreamReceiverController audio_receiver_controller_;

module_process_thread_.get(), config, config_.audio_state, event_log_); // config_.audio_state

{

WriteLockScoped write_lock(*receive_crit_);

receive_rtp_config_.emplace(config.rtp.remote_ssrc,

ReceiveRtpConfig(config));

audio_receive_streams_.insert(receive_stream);

ConfigureSync(config.sync_group);

}

{

ReadLockScoped read_lock(*send_crit_);

auto it = audio_send_ssrcs_.find(config.rtp.local_ssrc);

if (it != audio_send_ssrcs_.end()) {

receive_stream->AssociateSendStream(it->second);

}

}

UpdateAggregateNetworkState();

return receive_stream;

}
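Call::CreateAudioReceiveStream records the new receive stream and, if a send stream already exists for the same local SSRC, associates the two. The sketch below shows only that bookkeeping, with locking omitted. `StreamRegistry`, `SendStream`, and `ReceiveStream` are hypothetical names, not WebRTC classes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <set>

struct SendStream {
  uint32_t ssrc;
};

struct ReceiveStream {
  uint32_t remote_ssrc;
  uint32_t local_ssrc;
  const SendStream* associated = nullptr;  // set when a matching sender exists
};

// Hypothetical registry mirroring the lookup-by-local-SSRC step.
class StreamRegistry {
 public:
  void AddSendStream(SendStream* send) {
    assert(send);
    send_by_ssrc_[send->ssrc] = send;
  }

  void AddReceiveStream(ReceiveStream* receive) {
    assert(receive);
    receive_streams_.insert(receive);
    // Pair the receive stream with the send stream using the same local SSRC.
    auto it = send_by_ssrc_.find(receive->local_ssrc);
    if (it != send_by_ssrc_.end()) {
      receive->associated = it->second;
    }
  }

  std::size_t receive_count() const { return receive_streams_.size(); }

 private:
  std::map<uint32_t, SendStream*> send_by_ssrc_;
  std::set<ReceiveStream*> receive_streams_;
};
```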

AudioReceiveStream::AudioReceiveStream(

Clock* clock,

RtpStreamReceiverControllerInterface* receiver_controller, // receiver_controller is the address of audio_receiver_controller_ in webrtc::internal::Call

PacketRouter* packet_router,

ProcessThread* module_process_thread,

const webrtc::AudioReceiveStream::Config& config,

const rtc::scoped_refptr<webrtc::AudioState>& audio_state,

webrtc::RtcEventLog* event_log)

: AudioReceiveStream(clock,

receiver_controller,

packet_router,

config,

audio_state,

event_log,

CreateChannelReceive(clock,

audio_state.get(),

module_process_thread,

config,

event_log)) {}
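The constructor above is a delegating constructor: it builds the default channel and forwards it to a second constructor that accepts an already-built channel. A minimal sketch of the same idiom is shown here. `ChannelInterface`, `RealChannel`, `MockChannel`, and `Stream` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

struct ChannelInterface {
  virtual ~ChannelInterface() = default;
  virtual std::string Name() const = 0;
};

struct RealChannel : ChannelInterface {
  std::string Name() const override { return "real"; }
};

struct MockChannel : ChannelInterface {
  std::string Name() const override { return "mock"; }
};

// Factory used by the public constructor to build the default dependency.
std::unique_ptr<ChannelInterface> CreateDefaultChannel() {
  return std::make_unique<RealChannel>();
}

class Stream {
 public:
  // Public constructor: create the dependency, then delegate.
  Stream() : Stream(CreateDefaultChannel()) {}

  // Injection constructor: takes ownership of any channel implementation.
  explicit Stream(std::unique_ptr<ChannelInterface> channel)
      : channel_(std::move(channel)) {
    assert(channel_);
  }

  std::string ChannelName() const { return channel_->Name(); }

 private:
  std::unique_ptr<ChannelInterface> channel_;
};
```

One plausible benefit of this split is testability: a test can pass a mock channel through the second constructor without touching the production path.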

// Indentation marks the internal creation details (the helpers called by the constructor above)

std::unique_ptr<voe::ChannelReceiveInterface> CreateChannelReceive(

Clock* clock,

webrtc::AudioState* audio_state,

ProcessThread* module_process_thread,

const webrtc::AudioReceiveStream::Config& config,

RtcEventLog* event_log) {

RTC_DCHECK(audio_state);

internal::AudioState* internal_audio_state =

static_cast<internal::AudioState*>(audio_state);

return voe::CreateChannelReceive( // note this call

clock, module_process_thread, internal_audio_state->audio_device_module(),

config.media_transport_config, config.rtcp_send_transport, event_log, // config.rtcp_send_transport is the this pointer of WebRtcVoiceMediaChannel; see WebRtcVoiceMediaChannel::WebRtcAudioReceiveStream

config.rtp.local_ssrc, config.rtp.remote_ssrc,

config.jitter_buffer_max_packets, config.jitter_buffer_fast_accelerate,

config.jitter_buffer_min_delay_ms,

config.jitter_buffer_enable_rtx_handling, config.decoder_factory, // config.decoder_factory is the return value of CreateBuiltinAudioDecoderFactory()

config.codec_pair_id, config.frame_decryptor, config.crypto_options);

}

std::unique_ptr<ChannelReceiveInterface> CreateChannelReceive(

Clock* clock,

ProcessThread* module_process_thread,

AudioDeviceModule* audio_device_module,

const MediaTransportConfig& media_transport_config,

Transport* rtcp_send_transport, //

RtcEventLog* rtc_event_log,

uint32_t local_ssrc,

uint32_t remote_ssrc,

size_t jitter_buffer_max_packets,

bool jitter_buffer_fast_playout,

int jitter_buffer_min_delay_ms,

bool jitter_buffer_enable_rtx_handling,

rtc::scoped_refptr<AudioDecoderFactory> decoder_factory, // decoder_factory is the return value of CreateBuiltinAudioDecoderFactory()

absl::optional<AudioCodecPairId> codec_pair_id,

rtc::scoped_refptr<FrameDecryptorInterface> frame_decryptor,

const webrtc::CryptoOptions& crypto_options) {

return std::make_unique<ChannelReceive>( // note this call

clock, module_process_thread, audio_device_module, media_transport_config,

rtcp_send_transport, rtc_event_log, local_ssrc, remote_ssrc, //

jitter_buffer_max_packets, jitter_buffer_fast_playout,

jitter_buffer_min_delay_ms, jitter_buffer_enable_rtx_handling,

decoder_factory, codec_pair_id, frame_decryptor, crypto_options); // decoder_factory is the return value of CreateBuiltinAudioDecoderFactory()

}

// webrtc::voe::ChannelReceive

// class ChannelReceiveInterface : public RtpPacketSinkInterface

// class ChannelReceive : public ChannelReceiveInterface,

//                        public MediaTransportAudioSinkInterface

// ===> ChannelReceive holds the following member:

acm2::AcmReceiver acm_receiver_; // note this member

ChannelReceive::ChannelReceive(

Clock* clock,

ProcessThread* module_process_thread,

AudioDeviceModule* audio_device_module,

const MediaTransportConfig& media_transport_config,

Transport* rtcp_send_transport, //

RtcEventLog* rtc_event_log,

uint32_t local_ssrc,

uint32_t remote_ssrc,

size_t jitter_buffer_max_packets,

bool jitter_buffer_fast_playout,

int jitter_buffer_min_delay_ms,

bool jitter_buffer_enable_rtx_handling,

rtc::scoped_refptr<AudioDecoderFactory> decoder_factory, // decoder_factory is the return value of CreateBuiltinAudioDecoderFactory()

absl::optional<AudioCodecPairId> codec_pair_id,

rtc::scoped_refptr<FrameDecryptorInterface> frame_decryptor,

const webrtc::CryptoOptions& crypto_options)

: event_log_(rtc_event_log),

rtp_receive_statistics_(ReceiveStatistics::Create(clock)),

remote_ssrc_(remote_ssrc),

acm_receiver_(AcmConfig(decoder_factory, // decoder_factory is the return value of CreateBuiltinAudioDecoderFactory()

codec_pair_id,

jitter_buffer_max_packets,

jitter_buffer_fast_playout)),

_outputAudioLevel(),

ntp_estimator_(clock),

playout_timestamp_rtp_(0),

playout_delay_ms_(0),

rtp_ts_wraparound_handler_(new rtc::TimestampWrapAroundHandler()),

capture_start_rtp_time_stamp_(-1),

capture_start_ntp_time_ms_(-1),

_moduleProcessThreadPtr(module_process_thread),

_audioDeviceModulePtr(audio_device_module),

_outputGain(1.0f),

associated_send_channel_(nullptr),

media_transport_config_(media_transport_config),

frame_decryptor_(frame_decryptor),

crypto_options_(crypto_options) {

// TODO(nisse): Use _moduleProcessThreadPtr instead?

module_process_thread_checker_.Detach();

RTC_DCHECK(module_process_thread);

RTC_DCHECK(audio_device_module);

acm_receiver_.ResetInitialDelay();

acm_receiver_.SetMinimumDelay(0);

acm_receiver_.SetMaximumDelay(0);

acm_receiver_.FlushBuffers();

_outputAudioLevel.ResetLevelFullRange();

rtp_receive_statistics_->EnableRetransmitDetection(remote_ssrc_, true);

RtpRtcp::Configuration configuration;

configuration.clock = clock;

configuration.audio = true;

configuration.receiver_only = true;

configuration.outgoing_transport = rtcp_send_transport;

configuration.receive_statistics = rtp_receive_statistics_.get();

configuration.event_log = event_log_;

configuration.local_media_ssrc = local_ssrc;

_rtpRtcpModule = RtpRtcp::Create(configuration);

_rtpRtcpModule->SetSendingMediaStatus(false);

_rtpRtcpModule->SetRemoteSSRC(remote_ssrc_);

_moduleProcessThreadPtr->RegisterModule(_rtpRtcpModule.get(), RTC_FROM_HERE);

// Ensure that RTCP is enabled for the created channel.

_rtpRtcpModule->SetRTCPStatus(RtcpMode::kCompound);

if (media_transport()) {

media_transport()->SetReceiveAudioSink(this);

}

}

AudioCodingModule::Config AcmConfig(

rtc::scoped_refptr<AudioDecoderFactory> decoder_factory, // decoder_factory is the return value of CreateBuiltinAudioDecoderFactory()

absl::optional<AudioCodecPairId> codec_pair_id,

size_t jitter_buffer_max_packets,

bool jitter_buffer_fast_playout) {

AudioCodingModule::Config acm_config;

acm_config.decoder_factory = decoder_factory; // decoder_factory is the return value of CreateBuiltinAudioDecoderFactory()

acm_config.neteq_config.codec_pair_id = codec_pair_id;

acm_config.neteq_config.max_packets_in_buffer = jitter_buffer_max_packets;

acm_config.neteq_config.enable_fast_accelerate = jitter_buffer_fast_playout;

acm_config.neteq_config.enable_muted_state = true;

return acm_config;

}

AcmReceiver::AcmReceiver(const AudioCodingModule::Config& config)

: last_audio_buffer_(new int16_t[AudioFrame::kMaxDataSizeSamples]),

neteq_(NetEq::Create(config.neteq_config,

config.clock,

config.decoder_factory)), // config.decoder_factory is the return value of CreateBuiltinAudioDecoderFactory()

clock_(config.clock),

resampled_last_output_frame_(true) {

RTC_DCHECK(clock_);

memset(last_audio_buffer_.get(), 0,

sizeof(int16_t) * AudioFrame::kMaxDataSizeSamples);

}

NetEq* NetEq::Create(

const NetEq::Config& config,

Clock* clock,

const rtc::scoped_refptr<AudioDecoderFactory>& decoder_factory) { // decoder_factory is the return value of CreateBuiltinAudioDecoderFactory()

return new NetEqImpl(config,

NetEqImpl::Dependencies(config, clock, decoder_factory));

}

NetEqImpl::Dependencies::Dependencies(

const NetEq::Config& config,

Clock* clock,

const rtc::scoped_refptr<AudioDecoderFactory>& decoder_factory)

: clock(clock),

tick_timer(new TickTimer),

stats(new StatisticsCalculator),

buffer_level_filter(new BufferLevelFilter),

decoder_database(

new DecoderDatabase(decoder_factory, config.codec_pair_id)), // note: the decoder factory ends up here

delay_peak_detector(

new DelayPeakDetector(tick_timer.get(), config.enable_rtx_handling)),

delay_manager(DelayManager::Create(config.max_packets_in_buffer,

config.min_delay_ms,

config.enable_rtx_handling,

delay_peak_detector.get(),

tick_timer.get(),

stats.get())),

dtmf_buffer(new DtmfBuffer(config.sample_rate_hz)),

dtmf_tone_generator(new DtmfToneGenerator),

packet_buffer(

new PacketBuffer(config.max_packets_in_buffer, tick_timer.get())),

red_payload_splitter(new RedPayloadSplitter),

timestamp_scaler(new TimestampScaler(*decoder_database)),

accelerate_factory(new AccelerateFactory),

expand_factory(new ExpandFactory),

preemptive_expand_factory(new PreemptiveExpandFactory) {}

AudioReceiveStream::AudioReceiveStream(

Clock* clock,

RtpStreamReceiverControllerInterface* receiver_controller,//

PacketRouter* packet_router,

const webrtc::AudioReceiveStream::Config& config,

const rtc::scoped_refptr<webrtc::AudioState>& audio_state,

webrtc::RtcEventLog* event_log,

std::unique_ptr<voe::ChannelReceiveInterface> channel_receive) // channel_receive actually points to a webrtc::voe::ChannelReceive

: audio_state_(audio_state),

channel_receive_(std::move(channel_receive)), // after the move, channel_receive_ also points to the webrtc::voe::ChannelReceive

source_tracker_(clock) {

RTC_LOG(LS_INFO) << "AudioReceiveStream: " << config.rtp.remote_ssrc;

RTC_DCHECK(config.decoder_factory);

RTC_DCHECK(config.rtcp_send_transport);

RTC_DCHECK(audio_state_);

RTC_DCHECK(channel_receive_);

module_process_thread_checker_.Detach();

if (!config.media_transport_config.media_transport) {

RTC_DCHECK(receiver_controller);

RTC_DCHECK(packet_router);

// Configure bandwidth estimation.

channel_receive_->RegisterReceiverCongestionControlObjects(packet_router); //

// Register with transport.

rtp_stream_receiver_ = receiver_controller->CreateReceiver( // note: this calls RtpStreamReceiverController::CreateReceiver

config.rtp.remote_ssrc, channel_receive_.get()); // channel_receive_ actually points to a webrtc::voe::ChannelReceive

} // rtp_stream_receiver_ points to a webrtc::RtpStreamReceiverController::Receiver

ConfigureStream(this, config, true); // webrtc::RtpStreamReceiverController::Receiver

}

// webrtc::voe::ChannelReceive

// class ChannelReceiveInterface : public RtpPacketSinkInterface

// class ChannelReceive : public ChannelReceiveInterface,

//                        public MediaTransportAudioSinkInterface

// class webrtc::RtpStreamReceiverController::Receiver : public RtpStreamReceiverInterface

std::unique_ptr<RtpStreamReceiverInterface>

RtpStreamReceiverController::CreateReceiver(uint32_t ssrc,

RtpPacketSinkInterface* sink) {

return std::make_unique<Receiver>(this, ssrc, sink); // webrtc::RtpStreamReceiverController::Receiver

}
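CreateReceiver returns an object whose lifetime represents the registration of a sink for one SSRC. The sketch below illustrates that idea under simplifying assumptions: a plain `std::map` replaces the real demuxer, and `Controller`, `Receiver`, and `PacketSink` are hypothetical names rather than the WebRTC classes.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>

// Hypothetical sink: counts the packets routed to it.
struct PacketSink {
  int packets = 0;
  void OnPacket() { ++packets; }
};

class Controller {
 public:
  // RAII handle: registers the sink on construction, unregisters on destruction.
  class Receiver {
   public:
    Receiver(Controller* controller, uint32_t ssrc, PacketSink* sink)
        : controller_(controller), ssrc_(ssrc) {
      assert(controller_ && sink);
      controller_->sinks_[ssrc_] = sink;
    }
    ~Receiver() { controller_->sinks_.erase(ssrc_); }
    Receiver(const Receiver&) = delete;
    Receiver& operator=(const Receiver&) = delete;

   private:
    Controller* controller_;
    uint32_t ssrc_;
  };

  std::unique_ptr<Receiver> CreateReceiver(uint32_t ssrc, PacketSink* sink) {
    return std::make_unique<Receiver>(this, ssrc, sink);
  }

  // Route a packet to the sink registered for its SSRC, if any.
  bool OnPacket(uint32_t ssrc) {
    auto it = sinks_.find(ssrc);
    if (it == sinks_.end()) return false;
    it->second->OnPacket();
    return true;
  }

 private:
  std::map<uint32_t, PacketSink*> sinks_;
};
```

Holding the returned handle as a member, as AudioReceiveStream does with rtp_stream_receiver_, ties the registration to the lifetime of the owning stream.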

void WebRtcAudioReceiveStream::SetPlayout(bool playout) {

RTC_DCHECK(worker_thread_checker_.IsCurrent());

RTC_DCHECK(stream_);

if (playout) { // stream_ actually points to a webrtc::internal::AudioReceiveStream

stream_->Start(); // webrtc::internal::AudioReceiveStream::Start

} else {

stream_->Stop();

}

playout_ = playout;

}

void AudioReceiveStream::Start() {

RTC_DCHECK_RUN_ON(&worker_thread_checker_);

if (playing_) {

return;

} // channel_receive_ actually points to a webrtc::voe::ChannelReceive

channel_receive_->StartPlayout(); // ChannelReceive::StartPlayout

playing_ = true;

audio_state()->AddReceivingStream(this); // webrtc::internal::AudioState::AddReceivingStream

}

// webrtc::internal::AudioState::AddReceivingStream

void AudioState::AddReceivingStream(webrtc::AudioReceiveStream* stream) {

RTC_DCHECK(thread_checker_.IsCurrent());

RTC_DCHECK_EQ(0, receiving_streams_.count(stream));

receiving_streams_.insert(stream); //

if (!config_.audio_mixer->AddSource( // the mixer source is used later, when audio is decoded

static_cast<internal::AudioReceiveStream*>(stream))) {

RTC_DLOG(LS_ERROR) << "Failed to add source to mixer.";

}

// Make sure playback is initialized; start playing if enabled.

auto* adm = config_.audio_device_module.get(); // config_.audio_device_module is set in WebRtcVoiceEngine::Init

if (!adm->Playing()) { // config_.audio_device_module points to a webrtc::AudioDeviceModuleImpl

if (adm->InitPlayout() == 0) { // webrtc::AudioDeviceModuleImpl::InitPlayout

if (playout_enabled_) {

adm->StartPlayout(); // webrtc::AudioDeviceModuleImpl::StartPlayout

}

} else {

RTC_DLOG_F(LS_ERROR) << "Failed to initialize playout.";

}

}

}
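AddReceivingStream starts the audio device lazily: playout is initialized and started only if the device is not already playing, so adding more streams does not restart it. A minimal sketch of that control flow follows. `FakeAudioDevice` and `ReceivingStreams` are hypothetical stand-ins, and streams are represented by integer ids.

```cpp
#include <cassert>
#include <cstddef>
#include <set>

// Hypothetical audio device with the three calls used above.
struct FakeAudioDevice {
  bool initialized = false;
  bool playing = false;
  int start_calls = 0;

  bool Playing() const { return playing; }
  int InitPlayout() {
    initialized = true;
    return 0;  // 0 means success, matching the convention in the code above
  }
  int StartPlayout() {
    if (!initialized) return -1;
    playing = true;
    ++start_calls;
    return 0;
  }
};

class ReceivingStreams {
 public:
  explicit ReceivingStreams(FakeAudioDevice* adm) : adm_(adm) { assert(adm_); }

  void Add(int stream_id) {
    assert(streams_.count(stream_id) == 0);
    streams_.insert(stream_id);
    // Start playout only when the device is idle.
    if (!adm_->Playing()) {
      if (adm_->InitPlayout() == 0 && playout_enabled_) {
        adm_->StartPlayout();
      }
    }
  }

  std::size_t size() const { return streams_.size(); }

 private:
  FakeAudioDevice* adm_;
  std::set<int> streams_;
  bool playout_enabled_ = true;
};
```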

int32_t AudioDeviceModuleImpl::StartPlayout() {

RTC_LOG(INFO) << __FUNCTION__;

CHECKinitialized_();

if (Playing()) {

return 0;

}

audio_device_buffer_.StartPlayout();

int32_t result = audio_device_->StartPlayout(); // per AudioDeviceModuleImpl::CreatePlatformSpecificObjects, on Windows audio_device_ is a webrtc::AudioDeviceWindowsCore

RTC_LOG(INFO) << "output: " << result;

RTC_HISTOGRAM_BOOLEAN("WebRTC.Audio.StartPlayoutSuccess",

static_cast<int>(result == 0));

return result;

}

int32_t AudioDeviceWindowsCore::StartPlayout() {

if (!_playIsInitialized) {

return -1;

}

if (_hPlayThread != NULL) {

return 0;

}

if (_playing) {

return 0;

}

{

rtc::CritScope critScoped(&_critSect);

// Create thread which will drive the rendering.

assert(_hPlayThread == NULL);

_hPlayThread = CreateThread(NULL, 0, WSAPIRenderThread, this, 0, NULL);

if (_hPlayThread == NULL) {

RTC_LOG(LS_ERROR) << "failed to create the playout thread";

return -1;

}

// Set thread priority to highest possible.

SetThreadPriority(_hPlayThread, THREAD_PRIORITY_TIME_CRITICAL);

} // critScoped

DWORD ret = WaitForSingleObject(_hRenderStartedEvent, 1000);

if (ret != WAIT_OBJECT_0) {

RTC_LOG(LS_VERBOSE) << "rendering did not start up properly";

return -1;

}

_playing = true;

RTC_LOG(LS_VERBOSE) << "rendering audio stream has now started...";

return 0;

}
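AudioDeviceWindowsCore::StartPlayout creates the render thread and then waits up to one second for the thread to signal that rendering has started. The same handshake can be sketched portably with `std::thread` and `std::condition_variable` in place of the Win32 thread and event handles. `Player` is a hypothetical class, and the body of the worker is reduced to waiting for shutdown.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <thread>

class Player {
 public:
  ~Player() { Stop(); }

  // Returns true once the worker thread has reported that it started.
  bool Start() {
    if (thread_.joinable()) return true;  // already running
    thread_ = std::thread([this] { Run(); });
    std::unique_lock<std::mutex> lock(mu_);
    // Wait for the "started" signal, giving up after one second.
    if (!cv_.wait_for(lock, std::chrono::seconds(1),
                      [this] { return started_; })) {
      return false;
    }
    playing_ = true;
    return true;
  }

  void Stop() {
    {
      std::lock_guard<std::mutex> lock(mu_);
      shutdown_ = true;
    }
    cv_.notify_all();
    if (thread_.joinable()) thread_.join();
    // Reset the flags so that Start() can be called again.
    started_ = false;
    shutdown_ = false;
    playing_ = false;
  }

  bool playing() const { return playing_; }

 private:
  void Run() {
    std::unique_lock<std::mutex> lock(mu_);
    started_ = true;   // plays the role of SetEvent(_hRenderStartedEvent)
    cv_.notify_all();
    cv_.wait(lock, [this] { return shutdown_; });  // stand-in for the render loop
  }

  std::mutex mu_;
  std::condition_variable cv_;
  std::thread thread_;
  bool started_ = false;
  bool shutdown_ = false;
  bool playing_ = false;
};
```

As in the original, the caller sets its playing flag only after the worker confirms startup, so a thread that fails early is reported as a failed start rather than a silent one.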

DWORD WINAPI AudioDeviceWindowsCore::WSAPIRenderThread(LPVOID context) {

return reinterpret_cast<AudioDeviceWindowsCore*>(context)->DoRenderThread();

}

DWORD AudioDeviceWindowsCore::DoRenderThread() {

bool keepPlaying = true;

HANDLE waitArray[2] = {_hShutdownRenderEvent, _hRenderSamplesReadyEvent};

HRESULT hr = S_OK;

HANDLE hMmTask = NULL;

// Initialize COM as MTA in this thread.

ScopedCOMInitializer comInit(ScopedCOMInitializer::kMTA);

if (!comInit.succeeded()) {

RTC_LOG(LS_ERROR) << "failed to initialize COM in render thread";

return 1;

}

rtc::SetCurrentThreadName("webrtc_core_audio_render_thread");

// Use Multimedia Class Scheduler Service (MMCSS) to boost the thread

// priority.

//

if (_winSupportAvrt) {

DWORD taskIndex(0);

hMmTask = _PAvSetMmThreadCharacteristicsA("Pro Audio", &taskIndex);

if (hMmTask) {

if (FALSE == _PAvSetMmThreadPriority(hMmTask, AVRT_PRIORITY_CRITICAL)) {

RTC_LOG(LS_WARNING) << "failed to boost play-thread using MMCSS";

}

RTC_LOG(LS_VERBOSE)

<< "render thread is now registered with MMCSS (taskIndex="

<< taskIndex << ")";

} else {

RTC_LOG(LS_WARNING) << "failed to enable MMCSS on render thread (err="

<< GetLastError() << ")";

_TraceCOMError(GetLastError());

}

}

_Lock();

IAudioClock* clock = NULL;

// Get size of rendering buffer (length is expressed as the number of audio

// frames the buffer can hold). This value is fixed during the rendering

// session.

//

UINT32 bufferLength = 0;

hr = _ptrClientOut->GetBufferSize(&bufferLength);

EXIT_ON_ERROR(hr);

RTC_LOG(LS_VERBOSE) << "[REND] size of buffer : " << bufferLength;

// Get maximum latency for the current stream (will not change for the

// lifetime of the IAudioClient object).

//

REFERENCE_TIME latency;

_ptrClientOut->GetStreamLatency(&latency);

RTC_LOG(LS_VERBOSE) << "[REND] max stream latency : " << (DWORD)latency

<< " (" << (double)(latency / 10000.0) << " ms)";

// Get the length of the periodic interval separating successive processing

// passes by the audio engine on the data in the endpoint buffer.

//

// The period between processing passes by the audio engine is fixed for a

// particular audio endpoint device and represents the smallest processing

// quantum for the audio engine. This period plus the stream latency between

// the buffer and endpoint device represents the minimum possible latency that

// an audio application can achieve. Typical value: 100000 <=> 0.01 sec =

// 10ms.

//

REFERENCE_TIME devPeriod = 0;

REFERENCE_TIME devPeriodMin = 0;

_ptrClientOut->GetDevicePeriod(&devPeriod, &devPeriodMin);

RTC_LOG(LS_VERBOSE) << "[REND] device period : " << (DWORD)devPeriod

<< " (" << (double)(devPeriod / 10000.0) << " ms)";

// Derive initial rendering delay.

// Example: 10*(960/480) + 15 = 20 + 15 = 35ms

//

int playout_delay = 10 * (bufferLength / _playBlockSize) +

(int)((latency + devPeriod) / 10000);

_sndCardPlayDelay = playout_delay;

_writtenSamples = 0;

RTC_LOG(LS_VERBOSE) << "[REND] initial delay : " << playout_delay;

double endpointBufferSizeMS =

10.0 * ((double)bufferLength / (double)_devicePlayBlockSize);

RTC_LOG(LS_VERBOSE) << "[REND] endpointBufferSizeMS : "

<< endpointBufferSizeMS;

// Before starting the stream, fill the rendering buffer with silence.

//

BYTE* pData = NULL;

hr = _ptrRenderClient->GetBuffer(bufferLength, &pData);

EXIT_ON_ERROR(hr);

hr =

_ptrRenderClient->ReleaseBuffer(bufferLength, AUDCLNT_BUFFERFLAGS_SILENT);

EXIT_ON_ERROR(hr);

_writtenSamples += bufferLength;

hr = _ptrClientOut->GetService(__uuidof(IAudioClock), (void**)&clock);

if (FAILED(hr)) {

RTC_LOG(LS_WARNING)

<< "failed to get IAudioClock interface from the IAudioClient";

}

// Start up the rendering audio stream.

hr = _ptrClientOut->Start();

EXIT_ON_ERROR(hr);

_UnLock();

// Set event which will ensure that the calling thread modifies the playing

// state to true.

//

SetEvent(_hRenderStartedEvent);

// >> ------------------ THREAD LOOP ------------------

while (keepPlaying) {

// Wait for a render notification event or a shutdown event

DWORD waitResult = WaitForMultipleObjects(2, waitArray, FALSE, 500);

switch (waitResult) {

case WAIT_OBJECT_0 + 0: // _hShutdownRenderEvent

keepPlaying = false;

break;

case WAIT_OBJECT_0 + 1: // _hRenderSamplesReadyEvent

break;

case WAIT_TIMEOUT: // timeout notification

RTC_LOG(LS_WARNING) << "render event timed out after 0.5 seconds";

goto Exit;

default: // unexpected error

RTC_LOG(LS_WARNING) << "unknown wait termination on render side";

goto Exit;

}

while (keepPlaying) {

_Lock();

// Sanity check to ensure that essential states are not modified

// during the unlocked period.

if (_ptrRenderClient == NULL || _ptrClientOut == NULL) {

_UnLock();

RTC_LOG(LS_ERROR)

<< "output state has been modified during unlocked period";

goto Exit;

}

// Get the number of frames of padding (queued up to play) in the endpoint

// buffer.

UINT32 padding = 0;

hr = _ptrClientOut->GetCurrentPadding(&padding);

EXIT_ON_ERROR(hr);

// Derive the amount of available space in the output buffer

uint32_t framesAvailable = bufferLength - padding;

// Do we have 10 ms available in the render buffer?

if (framesAvailable < _playBlockSize) {

// Not enough space in render buffer to store next render packet.

_UnLock();

break;

}

// Write n*10ms buffers to the render buffer

const uint32_t n10msBuffers = (framesAvailable / _playBlockSize);

for (uint32_t n = 0; n < n10msBuffers; n++) {

// Get pointer (i.e., grab the buffer) to next space in the shared

// render buffer.

hr = _ptrRenderClient->GetBuffer(_playBlockSize, &pData);

EXIT_ON_ERROR(hr);

if (_ptrAudioBuffer) {

// Request data to be played out (#bytes =

// _playBlockSize*_audioFrameSize)

_UnLock();

int32_t nSamples =

_ptrAudioBuffer->RequestPlayoutData(_playBlockSize);

_Lock();

if (nSamples == -1) {

_UnLock();

RTC_LOG(LS_ERROR) << "failed to read data from render client";

goto Exit;

}

// Sanity check to ensure that essential states are not modified

// during the unlocked period

if (_ptrRenderClient == NULL || _ptrClientOut == NULL) {

_UnLock();

RTC_LOG(LS_ERROR)

<< "output state has been modified during unlocked"

<< " period";

goto Exit;

}

if (nSamples != static_cast<int32_t>(_playBlockSize)) {

RTC_LOG(LS_WARNING)

<< "nSamples(" << nSamples << ") != _playBlockSize"

<< _playBlockSize << ")";

}

// Get the actual (stored) data

nSamples = _ptrAudioBuffer->GetPlayoutData((int8_t*)pData);

}

DWORD dwFlags(0);

hr = _ptrRenderClient->ReleaseBuffer(_playBlockSize, dwFlags);

// See http://msdn.microsoft.com/en-us/library/dd316605(VS.85).aspx

// for more details regarding AUDCLNT_E_DEVICE_INVALIDATED.

EXIT_ON_ERROR(hr);

_writtenSamples += _playBlockSize;

}

// Check the current delay on the playout side.

if (clock) {

UINT64 pos = 0;

UINT64 freq = 1;

clock->GetPosition(&pos, NULL);

clock->GetFrequency(&freq);

playout_delay = ROUND((double(_writtenSamples) / _devicePlaySampleRate -

double(pos) / freq) *

1000.0);

_sndCardPlayDelay = playout_delay;

}

_UnLock();

}

}

// ------------------ THREAD LOOP ------------------ <<

SleepMs(static_cast<DWORD>(endpointBufferSizeMS + 0.5));

hr = _ptrClientOut->Stop();

Exit:

SAFE_RELEASE(clock);

if (FAILED(hr)) {

_ptrClientOut->Stop();

_UnLock();

_TraceCOMError(hr);

}

if (_winSupportAvrt) {

if (NULL != hMmTask) {

_PAvRevertMmThreadCharacteristics(hMmTask);

}

}

_Lock();

if (keepPlaying) {

if (_ptrClientOut != NULL) {

hr = _ptrClientOut->Stop();

if (FAILED(hr)) {

_TraceCOMError(hr);

}

hr = _ptrClientOut->Reset();

if (FAILED(hr)) {

_TraceCOMError(hr);

}

}

RTC_LOG(LS_ERROR)

<< "Playout error: rendering thread has ended pre-maturely";

} else {

RTC_LOG(LS_VERBOSE) << "_Rendering thread is now terminated properly";

}

_UnLock();

return (DWORD)hr;

}
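DoRenderThread contains three small calculations: the initial playout delay, the number of 10 ms blocks that fit in the free part of the endpoint buffer, and the running delay derived from the audio clock. The sketch below restates them as free functions so the arithmetic can be checked in isolation. The function names are hypothetical, and `std::lround` stands in for the `ROUND` macro used in the source.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// REFERENCE_TIME values count 100-nanosecond units, so 10000 units = 1 ms.
constexpr int64_t kRefTimeUnitsPerMs = 10000;

// 10 ms per block held in the buffer, plus stream latency and device period.
int InitialPlayoutDelayMs(uint32_t buffer_frames, uint32_t block_frames,
                          int64_t latency, int64_t device_period) {
  assert(block_frames > 0);
  return 10 * static_cast<int>(buffer_frames / block_frames) +
         static_cast<int>((latency + device_period) / kRefTimeUnitsPerMs);
}

// Number of whole 10 ms blocks that fit in the unused part of the buffer.
uint32_t WritableBlocks(uint32_t buffer_frames, uint32_t padding,
                        uint32_t block_frames) {
  assert(block_frames > 0 && padding <= buffer_frames);
  return (buffer_frames - padding) / block_frames;
}

// Delay = time written to the device minus time the device has played.
int CurrentPlayoutDelayMs(uint64_t written_frames, uint32_t sample_rate_hz,
                          uint64_t clock_pos, uint64_t clock_freq) {
  assert(sample_rate_hz > 0 && clock_freq > 0);
  const double written_s = static_cast<double>(written_frames) / sample_rate_hz;
  const double played_s = static_cast<double>(clock_pos) / clock_freq;
  return static_cast<int>(std::lround((written_s - played_s) * 1000.0));
}
```

With a 960-frame buffer, 480-frame blocks, 5 ms of stream latency, and a 10 ms device period, the first function reproduces the 35 ms example given in the source comment.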