Xxljob的使用

目录

调度中心地址

使用步骤

1.登录控制台创建一个执行器

2.配置

3.定义executorHandler

4.控制台创建定时任务

阻塞处理策略

路由策略

注意事项

调度中心地址

首先我们需要两个地址

控制台:用于调度任务和任务管理

调度中心根地址:代码中的执行器注册地址,可以配置多个,相对于nacos的服务实例

使用步骤

1.登录控制台创建一个执行器

AppName即为执行器标识,名称自定义,注册方式选择自动注册即可,服务启动时会将执行器实例自动注册到对应的AppName,一个执行器可以有多个实例。

AppName即为执行器标识,名称自定义,注册方式选择自动注册即可,服务启动时会将执行器实例自动注册到对应的AppName,一个执行器可以有多个实例。

2.配置

nacos

xxl:

job:

admin:

# 调度中心部署根地址 [选填]:如调度中心集群部署存在多个地址则用逗号分隔。执行器将会使用该地址进行"执行器心跳注册"和"任务结果回调";为空则关闭自动注册;

addresses: http://xxl-job-admin.xxl-job-admin.svc.cluster.local:8080/xxl-job-admin

# 执行器通讯TOKEN [选填]:非空时启用;

accessToken: ''

executor:

# 执行器AppName [选填]:执行器心跳注册分组依据;为空则关闭自动注册

appname: scfp-dev

# 执行器注册 [选填]:优先使用该配置作为注册地址,为空时使用内嵌服务 ”IP:PORT“ 作为注册地址。从而更灵活的支持容器类型执行器动态IP和动态映射端口问题。

address:

# 执行器IP [选填]:默认为空表示自动获取IP,多网卡时可手动设置指定IP,该IP不会绑定Host仅作为通讯实用;地址信息用于 "执行器注册" 和 "调度中心请求并触发任务";

ip:

# 执行器端口号 [选填]:小于等于0则自动获取;默认端口为9999,单机部署多个执行器时,注意要配置不同执行器端口;

port: 9999

# 执行器运行日志文件存储磁盘路径 [选填] :需要对该路径拥有读写权限;为空则使用默认路径;

logpath: xxl-job/jobhandler

# # 执行器日志文件保存天数 [选填] : 过期日志自动清理, 限制值大于等于3时生效; 否则, 如-1, 关闭自动清理功能;

logretentiondays: 30maven依赖

com.xuxueli

xxl-job-core

配置类:注册执行器实例

@Configuration

@Slf4j

public class XxlJobConfig {

@Value("${xxl.job.admin.addresses}")

private String adminAddresses;

@Value("${xxl.job.accessToken}")

private String accessToken;

@Value("${xxl.job.executor.appname}")

private String appname;

@Value("${xxl.job.executor.address}")

private String address;

@Value("${xxl.job.executor.ip}")

private String ip;

@Value("${xxl.job.executor.port}")

private int port;

@Value("${xxl.job.executor.logpath}")

private String logPath;

@Value("${xxl.job.executor.logretentiondays}")

private int logRetentionDays;

@Bean

public XxlJobSpringExecutor xxlJobExecutor() {

log.info(">>>>>>>>>>> xxl-job config init.");

XxlJobSpringExecutor xxlJobSpringExecutor = new XxlJobSpringExecutor();

xxlJobSpringExecutor.setAdminAddresses(adminAddresses);

xxlJobSpringExecutor.setAppname(appname);

xxlJobSpringExecutor.setAddress(address);

xxlJobSpringExecutor.setIp(ip);

xxlJobSpringExecutor.setPort(port);

xxlJobSpringExecutor.setAccessToken(accessToken);

xxlJobSpringExecutor.setLogPath(logPath);

xxlJobSpringExecutor.setLogRetentionDays(logRetentionDays);

return xxlJobSpringExecutor;

}

}3.定义executorHandler

handler即为任务名称,一个执行器对应多个定时任务,使用@XxlJob注解配合@Component就可注册handler,所以理论上来说@Service、@Configuration、@RestController里面注册都没问题。

注意这里String param的参数必须添加,因为在xxljob 2.3.0之前传参都是直接对应到方法里的param,即使没有参数也需要预留。在2.3.0版本之后就是通过String jobParam = XxlJobHelper.getJobParam()来传参了。

@Component

@Slf4j

public class MtyJobController {

@Resource

private MtyService mtyService;

@XxlJob(value = MtyJobConstant.EXECUTE_JOB)

public ReturnT executeJob(String param) {

log.info("executeJob定时任务调度成功,{}", param);

SingleApiResult singleApiResult = null;

try {

singleApiResult = mtyService.executeJob(param);

} catch (Exception e) {

log.error("===== 调度发生的的异常为:{}", GlobalExceptionHandler.getStackTrace(e));

return ReturnT.FAIL;

}

if (singleApiResult.isSuccess()) {

log.info("xxl-job猫头鹰任务执行结束。任务id:{}", param);

return ReturnT.SUCCESS;

} else {

log.info("xxl-job猫头鹰任务执行失败。任务id:{}", param);

return ReturnT.FAIL;

}

}

@XxlJob(value = MtyJobConstant.FREEZE_RECORD_AUTOSYNEBS)

public ReturnT mtyFreezeRecordAutoSynEBS(String param){

log.info("自动同步ebs开始调度");

SingleApiResult singleApiResult = mtyService.mtyFreezeRecordAutoSynEBS();

if (singleApiResult.isSuccess()) {

log.info("自动同步ebs成功");

return ReturnT.SUCCESS;

} else {

log.info("自动同步ebs失败");

return ReturnT.FAIL;

}

}

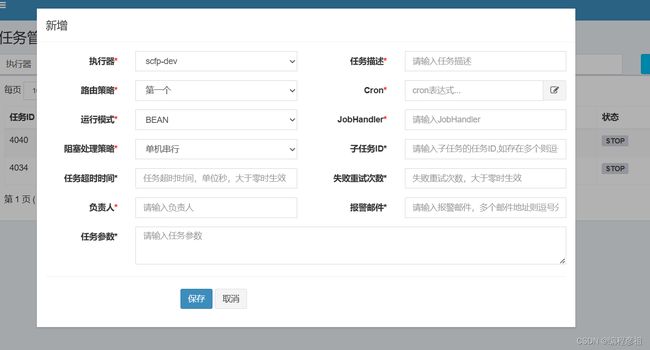

} 4.控制台创建定时任务

配置好执行器和handler之后就可以创建相应的定时任务。

选择实例注册的执行器,若要传多个参数可以用字符做分割在代码中处理,JobHandler与@XxlJob里的value值对应,运行模式因为是spring注入的所以选BEAN就行

阻塞处理策略

单机串行(默认)

调度进入单机执行器后,调度请求进入FIFO队列中并以串行方式运行

丢弃后续调度(推荐)

调度请求进入单机执行器,发现执行器存在运行的调度任务,本次请求将会被丢弃并标记为失败

覆盖之前调度(不推荐)

调度请求进入单机执行器后,发现执行器存在运行的调度任务, 将会终止运行中的调度任务并清空队列,然后运行本地调度

路由策略

FIRST(第一个):固定选择第一个机器;

LAST(最后一个):固定选择最后一个机器;

ROUND(轮询):;

RANDOM(随机):随机选择在线的机器;

CONSISTENT_HASH(一致性HASH):每个任务按照Hash算法固定选择某一台机器,且所有任务均匀散列在不同机器上。

LEAST_FREQUENTLY_USED(最不经常使用):使用频率最低的机器优先被选举;

LEAST_RECENTLY_USED(最近最久未使用):最久未使用的机器优先被选举;

FAILOVER(故障转移):按照顺序依次进行心跳检测,第一个心跳检测成功的机器选定为目标执行器并发起调度;

BUSYOVER(忙碌转移):按照顺序依次进行空闲检测,第一个空闲检测成功的机器选定为目标执行器并发起调度;

SHARDING_BROADCAST(分片广播):广播触发对应集群中所有机器执行一次任务,同时系统自动传递分片参数;可根据分片参数开发分片任务;1、FIRST:获取地址列表中的第一个

public class ExecutorRouteFirst extends ExecutorRouter {

@Override

public ReturnT route(TriggerParam triggerParam, List addressList) {

return new ReturnT(addressList.get(0));

}

} 2、LAST:获取地址列表中的最后一个

public class ExecutorRouteLast extends ExecutorRouter {

@Override

public ReturnT route(TriggerParam triggerParam, List addressList) {

return new ReturnT(addressList.get(addressList.size()-1));

}

} 3、轮询:

缓存时间是1天, 叠加次数最多为一百万,超过后进行重置,但是重置时采用随机方式,随机到一个小于100的数字,基于计数器,对地址列表取模

public class ExecutorRouteRound extends ExecutorRouter {

private static ConcurrentMap routeCountEachJob = new ConcurrentHashMap<>();

private static long CACHE_VALID_TIME = 0;

private static int count(int jobId) {

// cache clear

if (System.currentTimeMillis() > CACHE_VALID_TIME) {

routeCountEachJob.clear();

CACHE_VALID_TIME = System.currentTimeMillis() + 1000*60*60*24;

}

AtomicInteger count = routeCountEachJob.get(jobId);

if (count == null || count.get() > 1000000) {

// 初始化时主动Random一次,缓解首次压力

count = new AtomicInteger(new Random().nextInt(100));

} else {

// count++

count.addAndGet(1);

}

routeCountEachJob.put(jobId, count);

return count.get();

}

@Override

public ReturnT route(TriggerParam triggerParam, List addressList) {

String address = addressList.get(count(triggerParam.getJobId())%addressList.size());

return new ReturnT(address);

}

}4、随机,随机选择一台及其执行

public class ExecutorRouteRandom extends ExecutorRouter {

private static Random localRandom = new Random();

@Override

public ReturnT route(TriggerParam triggerParam, List addressList) {

String address = addressList.get(localRandom.nextInt(addressList.size()));

return new ReturnT(address);

}

}5、一致性哈希

分组下机器地址相同,不同JOB均匀散列在不同机器上,保证分组下机器分配JOB平均;且每个JOB固定调度其中一台机器; a、virtual node:解决不均衡问题 b、hash method replace hashCode:String的hashCode可能重复,需要进一步扩大hashCode的取值范围

public class ExecutorRouteConsistentHash extends ExecutorRouter {

private static int VIRTUAL_NODE_NUM = 100;

/**

* get hash code on 2^32 ring (md5散列的方式计算hash值)

* @param key

* @return

*/

private static long hash(String key) {

// md5 byte

MessageDigest md5;

try {

md5 = MessageDigest.getInstance("MD5");

} catch (NoSuchAlgorithmException e) {

throw new RuntimeException("MD5 not supported", e);

}

md5.reset();

byte[] keyBytes = null;

try {

keyBytes = key.getBytes("UTF-8");

} catch (UnsupportedEncodingException e) {

throw new RuntimeException("Unknown string :" + key, e);

}

md5.update(keyBytes);

byte[] digest = md5.digest();

// hash code, Truncate to 32-bits

long hashCode = ((long) (digest[3] & 0xFF) << 24)

| ((long) (digest[2] & 0xFF) << 16)

| ((long) (digest[1] & 0xFF) << 8)

| (digest[0] & 0xFF);

long truncateHashCode = hashCode & 0xffffffffL;

return truncateHashCode;

}

public String hashJob(int jobId, List addressList) {

// ------A1------A2-------A3------

// -----------J1------------------

TreeMap addressRing = new TreeMap();

for (String address: addressList) {

for (int i = 0; i < VIRTUAL_NODE_NUM; i++) {

long addressHash = hash("SHARD-" + address + "-NODE-" + i);

addressRing.put(addressHash, address);

}

}

long jobHash = hash(String.valueOf(jobId));

SortedMap lastRing = addressRing.tailMap(jobHash);

if (!lastRing.isEmpty()) {

return lastRing.get(lastRing.firstKey());

}

return addressRing.firstEntry().getValue();

}

@Override

public ReturnT route(TriggerParam triggerParam, List addressList) {

String address = hashJob(triggerParam.getJobId(), addressList);

return new ReturnT(address);

}

} 6. LEAST_FREQUENTLY_USED(最不经常使用):

缓存时间还是一天,对地址列表进行筛选,如果新加入的地址列表或者使用次数超过一百万次的话,就会随机重置为小于地址列表地址个数的值。 最后返回的就是value值最小的地址

public class ExecutorRouteLFU extends ExecutorRouter {

private static ConcurrentMap jobLfuMap = new ConcurrentHashMap();

private static long CACHE_VALID_TIME = 0;

public String route(int jobId, List addressList) {

// cache clear

if (System.currentTimeMillis() > CACHE_VALID_TIME) {

jobLfuMap.clear();

CACHE_VALID_TIME = System.currentTimeMillis() + 1000*60*60*24;

}

// lfu item init

HashMap lfuItemMap = jobLfuMap.get(jobId); // Key排序可以用TreeMap+构造入参Compare;Value排序暂时只能通过ArrayList;

if (lfuItemMap == null) {

lfuItemMap = new HashMap();

jobLfuMap.putIfAbsent(jobId, lfuItemMap); // 避免重复覆盖

}

// put new

for (String address: addressList) {

if (!lfuItemMap.containsKey(address) || lfuItemMap.get(address) >1000000 ) {

lfuItemMap.put(address, new Random().nextInt(addressList.size())); // 初始化时主动Random一次,缓解首次压力

}

}

// remove old

List delKeys = new ArrayList<>();

for (String existKey: lfuItemMap.keySet()) {

if (!addressList.contains(existKey)) {

delKeys.add(existKey);

}

}

if (delKeys.size() > 0) {

for (String delKey: delKeys) {

lfuItemMap.remove(delKey);

}

}

// load least userd count address

List lfuItemList = new ArrayList(lfuItemMap.entrySet());

Collections.sort(lfuItemList, new Comparator() {

@Override

public int compare(Map.Entry o1, Map.Entry o2) {

return o1.getValue().compareTo(o2.getValue());

}

});

Map.Entry addressItem = lfuItemList.get(0);

String minAddress = addressItem.getKey();

addressItem.setValue(addressItem.getValue() + 1);

return addressItem.getKey();

}

@Override

public ReturnT route(TriggerParam triggerParam, List addressList) {

String address = route(triggerParam.getJobId(), addressList);

return new ReturnT(address);

}

}7、 LEAST_RECENTLY_USED(最近最久未使用):

缓存时间还是一天,对地址列表进行筛选, 采用LinkedHashMap实现LRU算法

其中LinkedHashMap的构造器中有一个参数: //accessOrder 为true, 每次调用get或者put都会将该元素放置到链表最后,因而获取第一个元素就是当前没有使用过的元素

public class ExecutorRouteLRU extends ExecutorRouter {

private static ConcurrentMap jobLRUMap = new ConcurrentHashMap();

private static long CACHE_VALID_TIME = 0;

public String route(int jobId, List addressList) {

// cache clear

if (System.currentTimeMillis() > CACHE_VALID_TIME) {

jobLRUMap.clear();

CACHE_VALID_TIME = System.currentTimeMillis() + 1000*60*60*24;

}

// init lru

LinkedHashMap lruItem = jobLRUMap.get(jobId);

if (lruItem == null) {

/**

* LinkedHashMap

* a、accessOrder:true=访问顺序排序(get/put时排序);false=插入顺序排期;

* b、removeEldestEntry:新增元素时将会调用,返回true时会删除最老元素;可封装LinkedHashMap并重写该方法,比如定义最大容量,超出是返回true即可实现固定长度的LRU算法;

*/

//accessOrder 为true, 每次调用get或者put都会将该元素放置到链表最后,因而获取第一个元素就是当前没有使用过的元素

lruItem = new LinkedHashMap(16, 0.75f, true);

jobLRUMap.putIfAbsent(jobId, lruItem);

}

// put new

for (String address: addressList) {

if (!lruItem.containsKey(address)) {

lruItem.put(address, address);

}

}

// remove old

List delKeys = new ArrayList<>();

for (String existKey: lruItem.keySet()) {

if (!addressList.contains(existKey)) {

delKeys.add(existKey);

}

}

if (delKeys.size() > 0) {

for (String delKey: delKeys) {

lruItem.remove(delKey);

}

}

// load

String eldestKey = lruItem.entrySet().iterator().next().getKey();

String eldestValue = lruItem.get(eldestKey);

return eldestValue;

}

@Override

public ReturnT route(TriggerParam triggerParam, List addressList) {

String address = route(triggerParam.getJobId(), addressList);

return new ReturnT(address);

}

}8、FAILOVER

会返回第一个心跳检测ok的执行器,主要是使用xxl-job的执行器 RESTful API中的 beat,按照顺序依次进行心跳检测,第一个心跳检测成功的机器选定为目标执行器并发起调度;

public class ExecutorRouteFailover extends ExecutorRouter {

@Override

public ReturnT route(TriggerParam triggerParam, List addressList) {

StringBuffer beatResultSB = new StringBuffer();

for (String address : addressList) {

// beat

ReturnT beatResult = null;

try {

ExecutorBiz executorBiz = XxlJobScheduler.getExecutorBiz(address);

beatResult = executorBiz.beat();

} catch (Exception e) {

logger.error(e.getMessage(), e);

beatResult = new ReturnT(ReturnT.FAIL_CODE, ""+e );

}

beatResultSB.append( (beatResultSB.length()>0)?"

":"")

.append(I18nUtil.getString("jobconf_beat") + ":")

.append("

address:").append(address)

.append("

code:").append(beatResult.getCode())

.append("

msg:").append(beatResult.getMsg());

// beat success

if (beatResult.getCode() == ReturnT.SUCCESS_CODE) {

beatResult.setMsg(beatResultSB.toString());

beatResult.setContent(address);

return beatResult;

}

}

return new ReturnT(ReturnT.FAIL_CODE, beatResultSB.toString());

}

} 9、BUSYOVER(忙碌转移):

按照顺序依次进行空闲检测,第一个空闲检测成功的机器选定为目标执行器并发起调度;会返回空闲的第一个执行器的地址,主要是使用xxl-job的执行器 RESTful API中的 idleBeat

public class ExecutorRouteBusyover extends ExecutorRouter {

@Override

public ReturnT route(TriggerParam triggerParam, List addressList) {

StringBuffer idleBeatResultSB = new StringBuffer();

for (String address : addressList) {

// beat

ReturnT idleBeatResult = null;

try {

ExecutorBiz executorBiz = XxlJobScheduler.getExecutorBiz(address);

idleBeatResult = executorBiz.idleBeat(new IdleBeatParam(triggerParam.getJobId()));

} catch (Exception e) {

logger.error(e.getMessage(), e);

idleBeatResult = new ReturnT(ReturnT.FAIL_CODE, ""+e );

}

idleBeatResultSB.append( (idleBeatResultSB.length()>0)?"

":"")

.append(I18nUtil.getString("jobconf_idleBeat") + ":")

.append("

address:").append(address)

.append("

code:").append(idleBeatResult.getCode())

.append("

msg:").append(idleBeatResult.getMsg());

// beat success

if (idleBeatResult.getCode() == ReturnT.SUCCESS_CODE) {

idleBeatResult.setMsg(idleBeatResultSB.toString());

idleBeatResult.setContent(address);

return idleBeatResult;

}

}

return new ReturnT(ReturnT.FAIL_CODE, idleBeatResultSB.toString());

}

} 10、SHARDING_BROADCAST

SHARDING_BROADCAST(分片广播):广播触发对应集群中所有机器执行一次任务,同时系统自动传递分片参数;可根据分片参数开发分片任务;

注意事项

-

xxl-job的默认处理策略是单机串行,这里串行指的是具体执行器上面的同一个任务串行

-

如果配置了多个执行器(即多节点),且没有通过入参控制job获取的处理数据,则最好调整路由策略为 hash一致性,这种路由会均匀的将job分散在不同的执行器,且同一个任务永远在一台服务器上执行